Linux: an analysis of the spin lock implementation

- spinlock

- 1 spinlock data structures

- 2 spinlock interfaces

- 2.1 spin_lock_init

- 2.2 spin_lock

- 2.3 spin_unlock

- 2.4 spin_lock_irq

- 2.5 spin_unlock_irq

- 2.6 spin_lock_irqsave

- 2.7 spin_unlock_irqrestore

- 2.8 spin_lock_bh

- 2.9 spin_unlock_bh

spinlock

1 spinlock data structures

- u16 owner;

- u16 next;

    typedef struct {
        union {
            u32 slock;
            struct __raw_tickets {
    #ifdef __ARMEB__
                u16 next;
                u16 owner;
    #else
                u16 owner;
                u16 next;
    #endif
            } tickets;
        };
    } arch_spinlock_t;

    #define __ARCH_SPIN_LOCK_UNLOCKED { { 0 } }

    typedef struct {
        u32 lock;
    } arch_rwlock_t;

    typedef struct raw_spinlock {
        arch_spinlock_t raw_lock;
    #ifdef CONFIG_DEBUG_SPINLOCK
        unsigned int magic, owner_cpu;
        void *owner;
    #endif
    #ifdef CONFIG_DEBUG_LOCK_ALLOC
        struct lockdep_map dep_map;
    #endif
    } raw_spinlock_t;

    /* Non PREEMPT_RT kernels map spinlock to raw_spinlock */
    typedef struct spinlock {
        union {
            struct raw_spinlock rlock;

    #ifdef CONFIG_DEBUG_LOCK_ALLOC
    # define LOCK_PADSIZE (offsetof(struct raw_spinlock, dep_map))
            struct {
                u8 __padding[LOCK_PADSIZE];
                struct lockdep_map dep_map;
            };
    #endif
        };
    } spinlock_t;
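To make the ticket layout concrete, here is a minimal user-space sketch of the `arch_spinlock_t` union. The `demo_*` names are hypothetical, `TICKET_SHIFT` is assumed to be 16, and the byte-order test uses the compiler-predefined `__BYTE_ORDER__` macro (GCC/Clang) in place of `__ARMEB__`. It only shows how adding `1 << TICKET_SHIFT` to `slock` hands out the next ticket. It is not atomic and is not a usable lock.

```c
#include <assert.h>
#include <stdint.h>

#define TICKET_SHIFT 16 /* assumption: 'next' occupies the upper 16 bits of slock */

/* User-space copy of the layout; the field order depends on byte order. */
typedef struct {
    union {
        uint32_t slock;
        struct {
#if defined(__BYTE_ORDER__) && __BYTE_ORDER__ == __ORDER_BIG_ENDIAN__
            uint16_t next;
            uint16_t owner;
#else
            uint16_t owner; /* ticket currently being served */
            uint16_t next;  /* next ticket to hand out */
#endif
        } tickets;
    };
} demo_spinlock_t;

/* Both views of the union must cover exactly the same 32 bits. */
static_assert(sizeof(demo_spinlock_t) == sizeof(uint32_t), "unexpected padding");

/* Take a ticket by bumping the upper half of the 32-bit word (not atomic here). */
static uint16_t demo_take_ticket(demo_spinlock_t *lock)
{
    uint16_t my_ticket = lock->tickets.next;

    lock->slock += 1u << TICKET_SHIFT;
    return my_ticket;
}

/* The lock is free when every ticket handed out has been served. */
static int demo_is_unlocked(const demo_spinlock_t *lock)
{
    return lock->tickets.owner == lock->tickets.next;
}
```

Starting from `{ { 0 } }`, the same value as `__ARCH_SPIN_LOCK_UNLOCKED`, the first caller receives ticket 0 and the lock stops being "unlocked" until `owner` catches up with `next`.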

2 spinlock interfaces

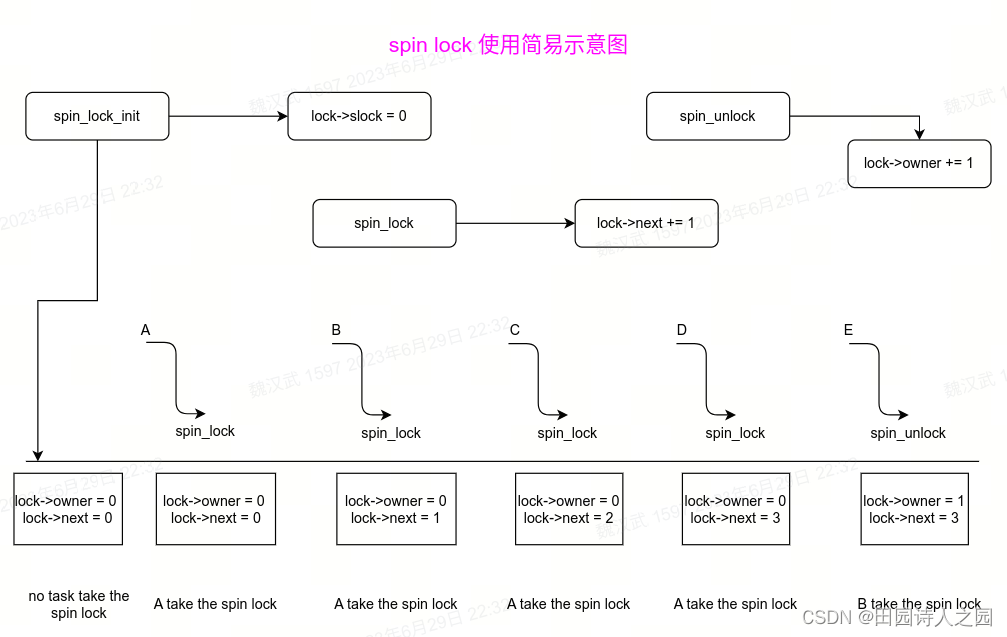

2.1 spin_lock_init

    #define __ARCH_SPIN_LOCK_UNLOCKED { { 0 } }

    #define ___SPIN_LOCK_INITIALIZER(lockname) \
        { \
        .raw_lock = __ARCH_SPIN_LOCK_UNLOCKED, \
        SPIN_DEBUG_INIT(lockname) \
        SPIN_DEP_MAP_INIT(lockname) }

    #define __SPIN_LOCK_INITIALIZER(lockname) \
        { { .rlock = ___SPIN_LOCK_INITIALIZER(lockname) } }

    #define __SPIN_LOCK_UNLOCKED(lockname) \
        (spinlock_t) __SPIN_LOCK_INITIALIZER(lockname)

    #define DEFINE_SPINLOCK(x) spinlock_t x = __SPIN_LOCK_UNLOCKED(x)

    # define spin_lock_init(_lock) \
    do { \
        spinlock_check(_lock); \
        *(_lock) = __SPIN_LOCK_UNLOCKED(_lock); \
    } while (0)

    /* Example callers */
    spin_lock_init(&lo->lo_lock);
    spin_lock_init(&lo->lo_work_lock);

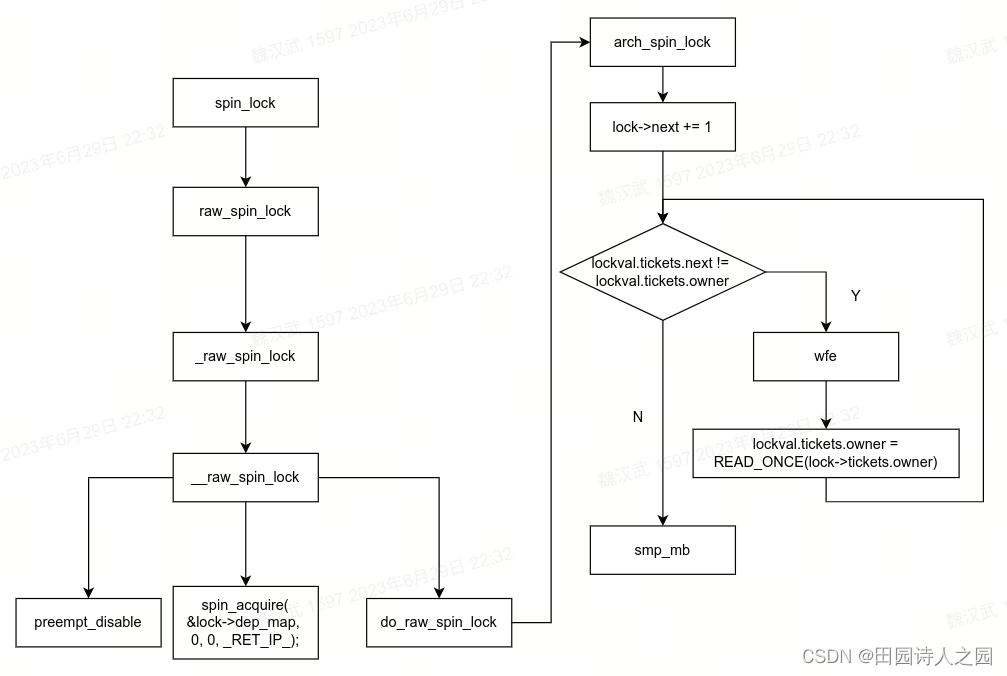
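The macros above give two ways to obtain an unlocked lock: a static definition (`DEFINE_SPINLOCK`) and a run-time assignment of a compound literal (`spin_lock_init`). The sketch below imitates that pattern in user space. Every `demo_*` / `DEMO_*` name is hypothetical, and the debug and lockdep initializers are left out.

```c
#include <assert.h>
#include <stdint.h>

typedef struct { uint32_t slock; } demo_raw_lock_t;
typedef struct { demo_raw_lock_t rlock; } demo_lock_t;

/* Compound literal holding the unlocked value, like __SPIN_LOCK_UNLOCKED. */
#define DEMO_LOCK_UNLOCKED ((demo_lock_t){ { 0 } })

/* Static definition, like DEFINE_SPINLOCK. */
#define DEMO_DEFINE_LOCK(x) demo_lock_t x = { { 0 } }

/* Run-time initialisation, like spin_lock_init: assign the literal. */
#define demo_lock_init(_lock)           \
do {                                    \
    *(_lock) = DEMO_LOCK_UNLOCKED;      \
} while (0)

/* A lock embedded in a dynamically set-up object must be initialised at run time. */
struct demo_device {
    demo_lock_t lock;
    int value;
};

static DEMO_DEFINE_LOCK(demo_global_lock);

static void demo_device_setup(struct demo_device *dev)
{
    demo_lock_init(&dev->lock);
    dev->value = 0;
    assert(dev->lock.rlock.slock == 0); /* lock starts out unlocked */
}
```

The `do { ... } while (0)` wrapper lets the macro be used as a single statement, for example in an unbraced `if` branch.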

2.2 spin_lock

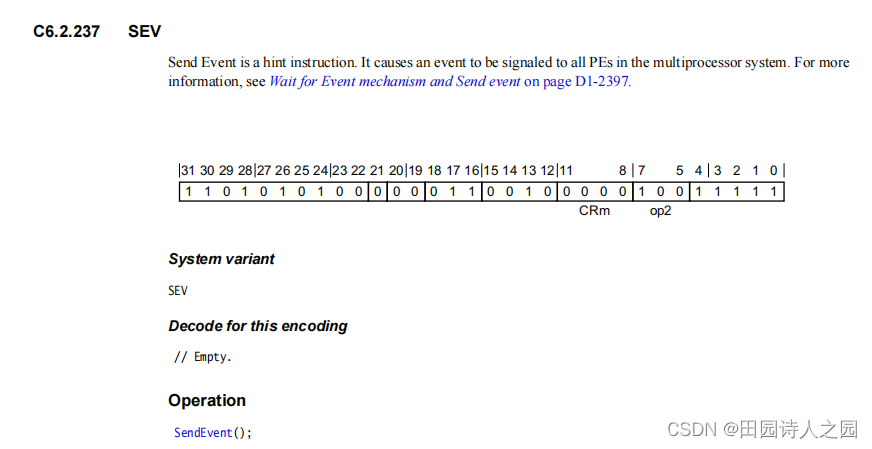

/** ARMv6 ticket-based spin-locking.** A memory barrier is required after we get a lock, and before we * release it, because V6 CPUs are assumed to have weakly ordered* memory.*/ static inline void arch_spin_lock(arch_spinlock_t *lock)

{unsigned long tmp;u32 newval;arch_spinlock_t lockval;prefetchw(&lock->slock);__asm__ __volatile__(

"1: ldrex %0, [%3]\n"

" add %1, %0, %4\n"

" strex %2, %1, [%3]\n"

" teq %2, #0\n"

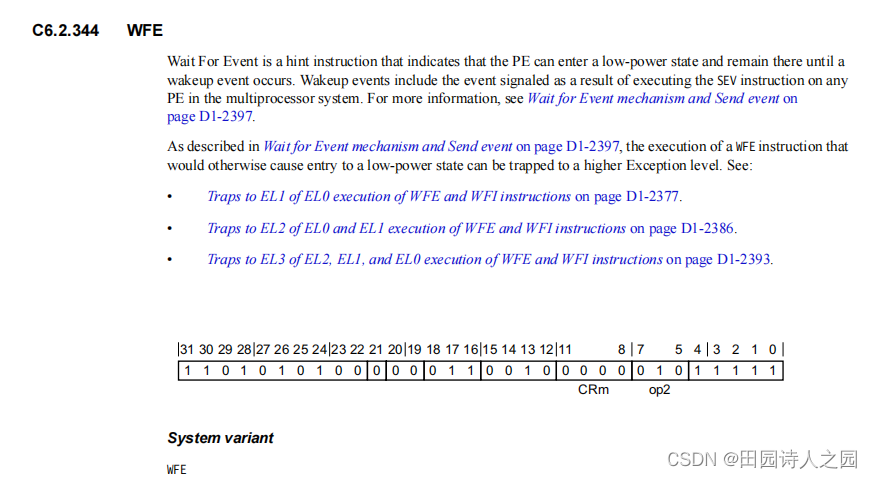

" bne 1b": "=&r" (lockval), "=&r" (newval), "=&r" (tmp) : "r" (&lock->slock), "I" (1 << TICKET_SHIFT): "cc");while (lockval.tickets.next != lockval.tickets.owner) {wfe();lockval.tickets.owner = READ_ONCE(lock->tickets.owner);}smp_mb();

}static inline void __raw_spin_lock(raw_spinlock_t *lock)

{preempt_disable();spin_acquire(&lock->dep_map, 0, 0, _RET_IP_);LOCK_CONTENDED(lock, do_raw_spin_trylock, do_raw_spin_lock);

}#define raw_spin_lock(lock) _raw_spin_lock(lock)static __always_inline void spin_lock(spinlock_t *lock)

{ raw_spin_lock(&lock->rlock);

}

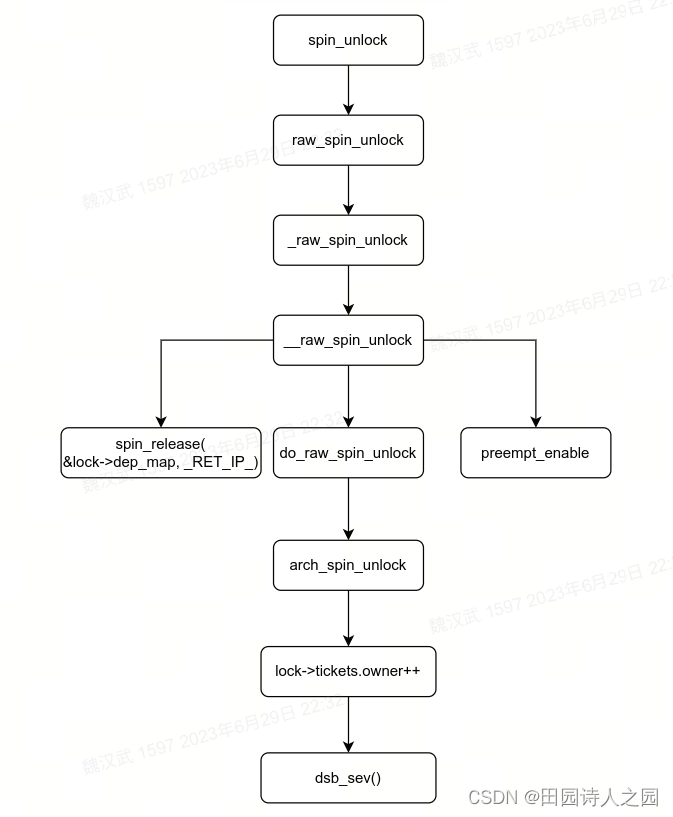
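The acquire path above takes a ticket atomically (the `ldrex`/`strex` loop) and then waits until `owner` reaches that ticket. A rough user-space analogue can be written with C11 atomics. This is a sketch under assumptions, not the kernel's code: the `ticket_*` names are hypothetical, `atomic_fetch_add` stands in for the exclusive load/store loop, a busy loop stands in for `wfe()`, and acquire/release ordering stands in for `smp_mb()`.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdint.h>

typedef struct {
    _Atomic uint16_t owner; /* ticket currently being served */
    _Atomic uint16_t next;  /* next ticket to hand out */
} ticket_lock_t;

static void ticket_lock(ticket_lock_t *lock)
{
    /* Atomically take a ticket; plays the role of the ldrex/add/strex loop. */
    uint16_t my_ticket =
        atomic_fetch_add_explicit(&lock->next, 1, memory_order_relaxed);

    /* Spin until our ticket is served (the kernel waits in wfe() instead). */
    while (atomic_load_explicit(&lock->owner, memory_order_acquire) != my_ticket)
        ;
}

static void ticket_unlock(ticket_lock_t *lock)
{
    /* Only the holder writes 'owner', so a plain load plus a release store is enough. */
    uint16_t cur = atomic_load_explicit(&lock->owner, memory_order_relaxed);

    atomic_store_explicit(&lock->owner, (uint16_t)(cur + 1), memory_order_release);
}

/* Single-threaded walk-through: three uncontended lock/unlock rounds. */
static int ticket_demo(void)
{
    ticket_lock_t lock = { 0, 0 };
    int shared = 0;

    for (int i = 0; i < 3; i++) {
        ticket_lock(&lock);
        shared++; /* critical section */
        ticket_unlock(&lock);
    }
    assert(atomic_load(&lock.owner) == atomic_load(&lock.next));
    return shared;
}
```

Because tickets are served strictly in the order they were taken, waiters acquire the lock in FIFO order, which is the fairness property that ticket locks are designed for.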

2.3 spin_unlock

    #define SEV __ALT_SMP_ASM(WASM(sev), WASM(nop))

    static inline void dsb_sev(void)
    {
        dsb(ishst);
        __asm__(SEV);
    }

    static inline void arch_spin_unlock(arch_spinlock_t *lock)
    {
        smp_mb();
        lock->tickets.owner++;
        dsb_sev();
    }

    static inline void do_raw_spin_unlock(raw_spinlock_t *lock) __releases(lock)
    {
        mmiowb_spin_unlock();
        arch_spin_unlock(&lock->raw_lock);
        __release(lock);
    }

    static inline void __raw_spin_unlock(raw_spinlock_t *lock)
    {
        spin_release(&lock->dep_map, _RET_IP_);
        do_raw_spin_unlock(lock);
        preempt_enable();
    }

    #define _raw_spin_unlock(lock) __raw_spin_unlock(lock)
    #define raw_spin_unlock(lock) _raw_spin_unlock(lock)

    static __always_inline void spin_unlock(spinlock_t *lock)
    {
        raw_spin_unlock(&lock->rlock);
    }

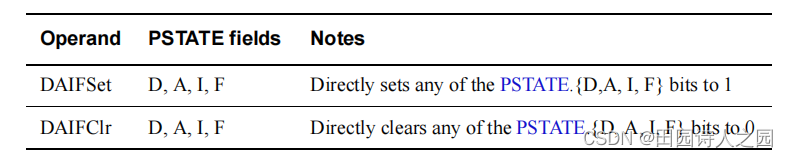

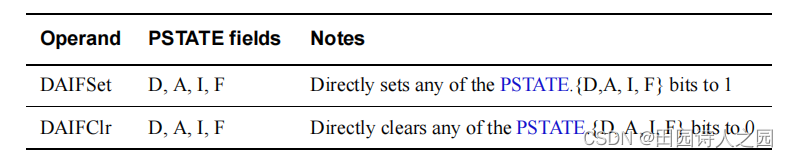
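`arch_spin_unlock` increments the 16-bit `owner` field (`lock->tickets.owner++`), not the 32-bit `slock` word. One consequence shows up at wrap-around, and the sketch below works it out with plain integer arithmetic. The helper names are hypothetical, and the layout is modelled with shifts (`owner` in the low 16 bits, `next` in the high 16 bits) so that the result does not depend on host byte order.

```c
#include <assert.h>
#include <stdint.h>

/* Model: slock = (next << 16) | owner. */
static uint16_t slock_owner(uint32_t slock) { return (uint16_t)(slock & 0xFFFFu); }
static uint16_t slock_next(uint32_t slock)  { return (uint16_t)(slock >> 16); }

/* Release by bumping only the 16-bit owner field, as arch_spin_unlock does. */
static uint32_t release_halfword(uint32_t slock)
{
    uint16_t owner = slock_owner(slock);

    owner++; /* wraps from 0xFFFF to 0 without touching 'next' */
    return (slock & 0xFFFF0000u) | owner;
}

/* Hypothetical wrong variant: bump the whole 32-bit word. */
static uint32_t release_fullword(uint32_t slock)
{
    return slock + 1; /* a carry out of 'owner' leaks into 'next' */
}

/* Self-check of the wrap-around case: owner = 0xFFFF, next = 5. */
static void release_demo(void)
{
    uint32_t slock = (5u << 16) | 0xFFFFu;

    assert(slock_next(release_halfword(slock)) == 5); /* 'next' preserved */
    assert(slock_next(release_fullword(slock)) == 6); /* 'next' corrupted */
}
```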

2.4 spin_lock_irq

    static inline void arch_local_irq_disable(void)
    {
        if (system_has_prio_mask_debugging()) {
            u32 pmr = read_sysreg_s(SYS_ICC_PMR_EL1);

            WARN_ON_ONCE(pmr != GIC_PRIO_IRQON && pmr != GIC_PRIO_IRQOFF);
        }

        asm volatile(ALTERNATIVE(
            "msr daifset, #3 // arch_local_irq_disable",
            __msr_s(SYS_ICC_PMR_EL1, "%0"),
            ARM64_HAS_IRQ_PRIO_MASKING)
            :
            : "r" ((unsigned long) GIC_PRIO_IRQOFF)
            : "memory");
    }

    static inline void __raw_spin_lock_irq(raw_spinlock_t *lock)
    {
        local_irq_disable();
        preempt_disable();
        spin_acquire(&lock->dep_map, 0, 0, _RET_IP_);
        LOCK_CONTENDED(lock, do_raw_spin_trylock, do_raw_spin_lock);
    }

2.5 spin_unlock_irq

    static inline void arch_local_irq_enable(void)
    {
        if (system_has_prio_mask_debugging()) {
            u32 pmr = read_sysreg_s(SYS_ICC_PMR_EL1);

            WARN_ON_ONCE(pmr != GIC_PRIO_IRQON && pmr != GIC_PRIO_IRQOFF);
        }

        asm volatile(ALTERNATIVE(
            "msr daifclr, #3 // arch_local_irq_enable",
            __msr_s(SYS_ICC_PMR_EL1, "%0"),
            ARM64_HAS_IRQ_PRIO_MASKING)
            :
            : "r" ((unsigned long) GIC_PRIO_IRQON)
            : "memory");

        pmr_sync();
    }

    static inline void __raw_spin_unlock_irq(raw_spinlock_t *lock)
    {
        spin_release(&lock->dep_map, _RET_IP_);
        do_raw_spin_unlock(lock);
        local_irq_enable();
        preempt_enable();
    }

2.6 spin_lock_irqsave

    /*
     * Save the current interrupt enable state.
     */
    static inline unsigned long arch_local_save_flags(void)
    {
        unsigned long flags;

        asm volatile(ALTERNATIVE(
            "mrs %0, daif",
            __mrs_s("%0", SYS_ICC_PMR_EL1),
            ARM64_HAS_IRQ_PRIO_MASKING)
            : "=&r" (flags)
            :
            : "memory");

        return flags;
    }

    static inline unsigned long __raw_spin_lock_irqsave(raw_spinlock_t *lock)
    {
        unsigned long flags;

        local_irq_save(flags);
        preempt_disable();
        spin_acquire(&lock->dep_map, 0, 0, _RET_IP_);
        /*
         * On lockdep we dont want the hand-coded irq-enable of
         * do_raw_spin_lock_flags() code, because lockdep assumes
         * that interrupts are not re-enabled during lock-acquire:
         */
    #ifdef CONFIG_LOCKDEP
        LOCK_CONTENDED(lock, do_raw_spin_trylock, do_raw_spin_lock);
    #else
        do_raw_spin_lock_flags(lock, &flags);
    #endif
        return flags;
    }

    #define spin_lock_irqsave(lock, flags) \
    do { \
        raw_spin_lock_irqsave(spinlock_check(lock), flags); \
    } while (0)

2.7 spin_unlock_irqrestore

    /*
     * restore saved IRQ state
     */
    static inline void arch_local_irq_restore(unsigned long flags)
    {
        asm volatile(ALTERNATIVE(
            "msr daif, %0",
            __msr_s(SYS_ICC_PMR_EL1, "%0"),
            ARM64_HAS_IRQ_PRIO_MASKING)
            :
            : "r" (flags)
            : "memory");

        pmr_sync();
    }

    static inline void __raw_spin_unlock_irqrestore(raw_spinlock_t *lock,
                                                    unsigned long flags)
    {
        spin_release(&lock->dep_map, _RET_IP_);
        do_raw_spin_unlock(lock);
        local_irq_restore(flags);
        preempt_enable();
    }

    static __always_inline void spin_unlock_irqrestore(spinlock_t *lock, unsigned long flags)
    {
        raw_spin_unlock_irqrestore(&lock->rlock, flags);
    }

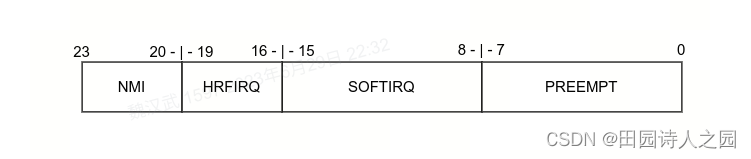
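The practical difference between the `_irq` and `_irqsave` variants is what happens on unlock: `spin_unlock_irq` enables interrupts unconditionally, while `spin_unlock_irqrestore` puts back whatever state was saved. The toy model below illustrates this with a single boolean standing in for the CPU's interrupt mask. All `sim_*` names are hypothetical, and the lock itself is left out to keep the focus on the interrupt state.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical stand-in for the CPU interrupt mask (true = IRQs enabled). */
static bool sim_irqs_enabled = true;

static void sim_lock_irq(void)   { sim_irqs_enabled = false; }
static void sim_unlock_irq(void) { sim_irqs_enabled = true; } /* unconditional enable */

static unsigned long sim_lock_irqsave(void)
{
    unsigned long flags = sim_irqs_enabled; /* remember the previous state */

    sim_irqs_enabled = false;
    return flags;
}

static void sim_unlock_irqrestore(unsigned long flags)
{
    sim_irqs_enabled = (flags != 0); /* restore, do not blindly enable */
}

/* Nested sections with the _irq variants: returns IRQ state after the inner unlock. */
static bool nested_with_irq(void)
{
    sim_irqs_enabled = true;
    sim_lock_irq();   /* outer section */
    sim_lock_irq();   /* inner section */
    sim_unlock_irq(); /* inner unlock re-enables IRQs too early */
    bool exposed = sim_irqs_enabled;
    sim_unlock_irq();
    return exposed;
}

/* Same nesting with the _irqsave variants. */
static bool nested_with_irqsave(void)
{
    sim_irqs_enabled = true;
    unsigned long outer = sim_lock_irqsave();
    unsigned long inner = sim_lock_irqsave();
    sim_unlock_irqrestore(inner); /* restores "disabled" */
    bool exposed = sim_irqs_enabled;
    sim_unlock_irqrestore(outer); /* restores "enabled" */
    assert(sim_irqs_enabled);
    return exposed;
}
```

In this model `nested_with_irq()` reports that interrupts came back on while the outer section was still running, whereas `nested_with_irqsave()` keeps them off until the outermost unlock. This is why the `_irqsave` form is the safe choice when the caller's interrupt state is not known.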

2.8 spin_lock_bh

    #define SOFTIRQ_LOCK_OFFSET (SOFTIRQ_DISABLE_OFFSET + PREEMPT_LOCK_OFFSET)

    static inline void __raw_spin_lock_bh(raw_spinlock_t *lock)
    {
        __local_bh_disable_ip(_RET_IP_, SOFTIRQ_LOCK_OFFSET);
        spin_acquire(&lock->dep_map, 0, 0, _RET_IP_);
        LOCK_CONTENDED(lock, do_raw_spin_trylock, do_raw_spin_lock);
    }

    static __always_inline void spin_lock_bh(spinlock_t *lock)
    {
        raw_spin_lock_bh(&lock->rlock);
    }

2.9 spin_unlock_bh

    #define SOFTIRQ_LOCK_OFFSET (SOFTIRQ_DISABLE_OFFSET + PREEMPT_LOCK_OFFSET)

    static inline void __raw_spin_unlock_bh(raw_spinlock_t *lock)
    {
        spin_release(&lock->dep_map, _RET_IP_);
        do_raw_spin_unlock(lock);
        __local_bh_enable_ip(_RET_IP_, SOFTIRQ_LOCK_OFFSET);
    }

    static __always_inline void spin_unlock_bh(spinlock_t *lock)
    {
        raw_spin_unlock_bh(&lock->rlock);
    }
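The `_bh` variants pass `SOFTIRQ_LOCK_OFFSET` to `__local_bh_disable_ip` / `__local_bh_enable_ip`, so one counter update both disables softirqs and accounts for the preemption disable implied by holding the lock. The sketch below models this with a plain integer. The bit layout (8 preempt bits, then 8 softirq bits) and the value of `PREEMPT_LOCK_OFFSET` (1, as with `CONFIG_PREEMPT_COUNT` enabled) are assumptions based on the usual `include/linux/preempt.h` definitions, and the `sim_*` helpers are hypothetical.

```c
#include <assert.h>

/* Assumed preempt_count layout: bits 0-7 preempt count, bits 8-15 softirq count. */
#define PREEMPT_BITS           8
#define SOFTIRQ_SHIFT          PREEMPT_BITS
#define SOFTIRQ_OFFSET         (1UL << SOFTIRQ_SHIFT)
#define SOFTIRQ_MASK           (0xffUL << SOFTIRQ_SHIFT)
#define SOFTIRQ_DISABLE_OFFSET (2 * SOFTIRQ_OFFSET)
#define PREEMPT_LOCK_OFFSET    1UL /* assumption: CONFIG_PREEMPT_COUNT is enabled */
#define SOFTIRQ_LOCK_OFFSET    (SOFTIRQ_DISABLE_OFFSET + PREEMPT_LOCK_OFFSET)

static_assert(SOFTIRQ_LOCK_OFFSET == 0x201, "one softirq-disable level plus one preempt level");

/* Hypothetical stand-in for the per-task preempt_count. */
static unsigned long sim_preempt_count;

/* Counter arithmetic only; the real helpers also handle tracing and pending softirqs. */
static void sim_bh_disable(unsigned long cnt) { sim_preempt_count += cnt; }
static void sim_bh_enable(unsigned long cnt)  { sim_preempt_count -= cnt; }

static int sim_softirqs_disabled(void)
{
    return (sim_preempt_count & SOFTIRQ_MASK) != 0;
}

static int sim_preemptible(void)
{
    return sim_preempt_count == 0;
}
```

After `sim_bh_disable(SOFTIRQ_LOCK_OFFSET)` the model reports softirqs disabled and the task non-preemptible. The matching `sim_bh_enable(SOFTIRQ_LOCK_OFFSET)` returns the counter to zero, mirroring the symmetric use of the offset in `__raw_spin_lock_bh` and `__raw_spin_unlock_bh`.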