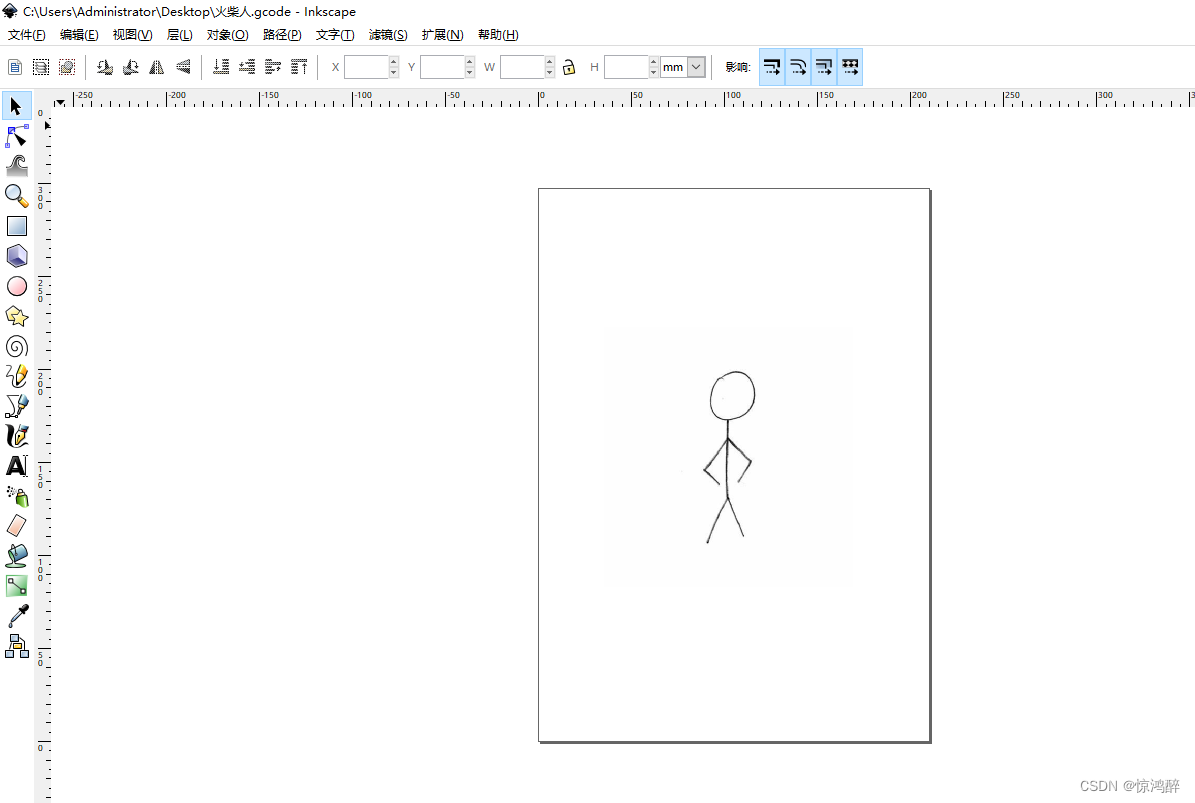

1. Find a simple image online and drag it into the software window.

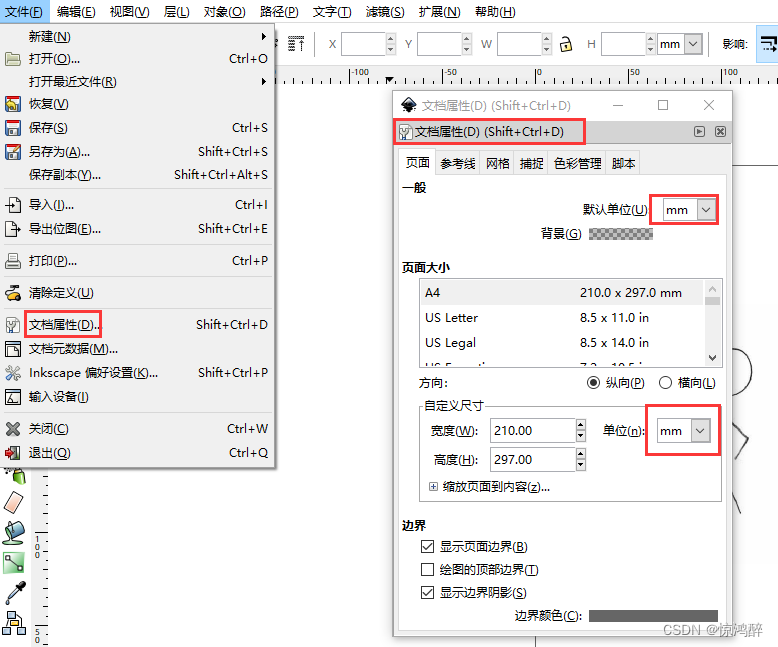

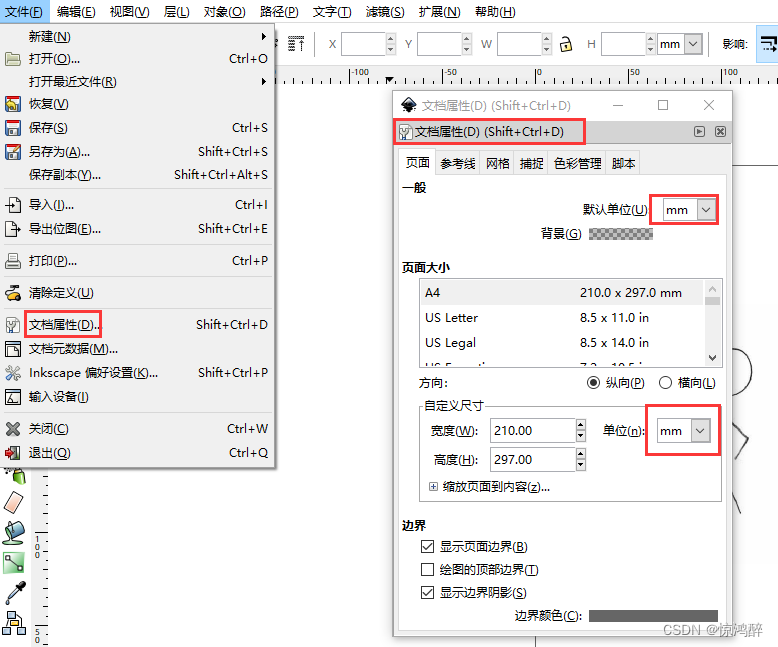

2. In Document Properties, change the units to millimeters (mm).

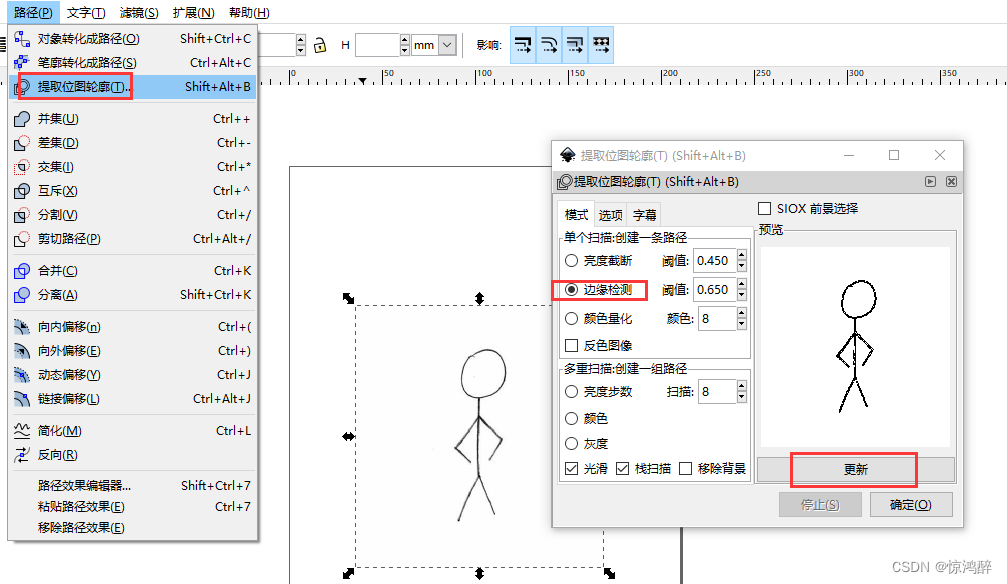

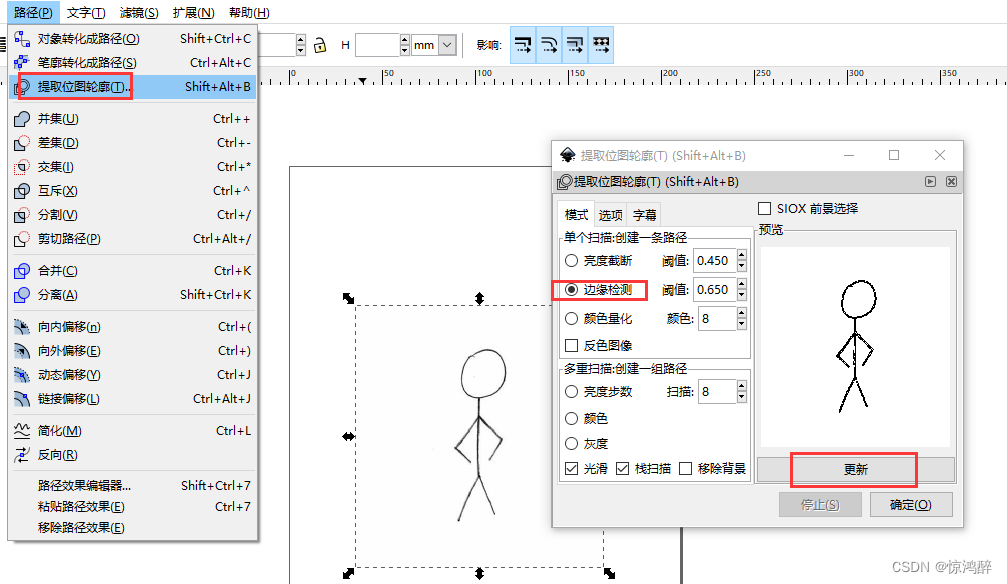
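Switching to millimeters matters because G-code coordinates are normally read as physical lengths. As a rough sketch of the conversion involved, the snippet below assumes the common SVG/CSS convention of 96 pixels per inch; the function names are hypothetical, and the software may use a different density depending on its version and settings.

```python
# Assumption: 96 px per inch (the CSS/SVG reference density).
# The software's real scale factor may differ.
PX_PER_INCH = 96.0
MM_PER_INCH = 25.4


def px_to_mm(px: float) -> float:
    """Convert a length in pixels to millimeters."""
    return px * MM_PER_INCH / PX_PER_INCH


def mm_to_px(mm: float) -> float:
    """Convert a length in millimeters to pixels."""
    return mm * PX_PER_INCH / MM_PER_INCH


if __name__ == "__main__":
    # A 300 px wide drawing, expressed in millimeters.
    print(round(px_to_mm(300), 3))
```

With the document already in millimeters, the exported coordinates need no such conversion afterwards.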

3. Choose Path --> Trace Bitmap and select the edge detection mode.

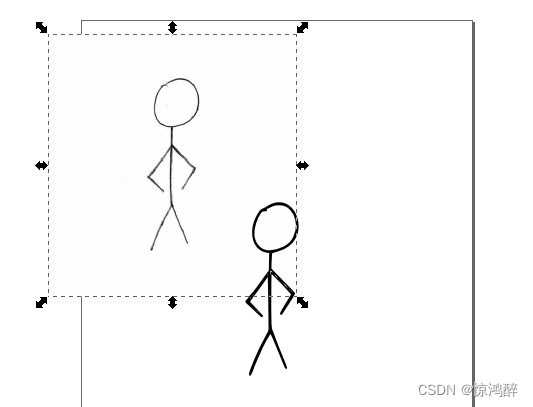

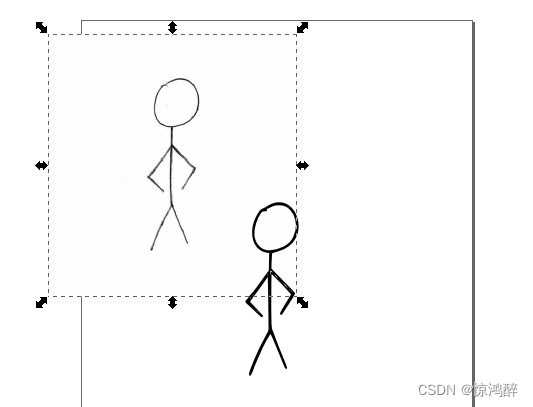
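To give a feel for what edge detection does, here is a minimal sketch that marks pixels where brightness changes sharply between neighbors. It is not the algorithm the software uses for tracing; the function name `detect_edges`, the threshold value, and the simple forward-difference gradient are all assumptions chosen for illustration.

```python
def detect_edges(gray, threshold=128):
    """Mark pixels with a strong brightness change to the right or below.

    gray: list of rows, each a list of ints in 0..255 (grayscale image).
    Returns a grid of the same size holding 1 for edge pixels, 0 otherwise.
    """
    height = len(gray)
    width = len(gray[0]) if height else 0
    edges = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Forward differences; at the last column/row the pixel is
            # compared with itself, so the difference there is 0.
            right = gray[y][min(x + 1, width - 1)]
            below = gray[min(y + 1, height - 1)][x]
            gx = right - gray[y][x]
            gy = below - gray[y][x]
            if abs(gx) + abs(gy) >= threshold:
                edges[y][x] = 1
    return edges


if __name__ == "__main__":
    # Left half black, right half white: the edge sits at the boundary.
    image = [[0, 0, 255, 255] for _ in range(4)]
    for row in detect_edges(image):
        print(row)
```

A tracing tool would then turn the marked pixels into vector outlines, which is why a simple, high-contrast image tends to work best in step 1.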

4. Delete the original image so that only the traced outline remains.

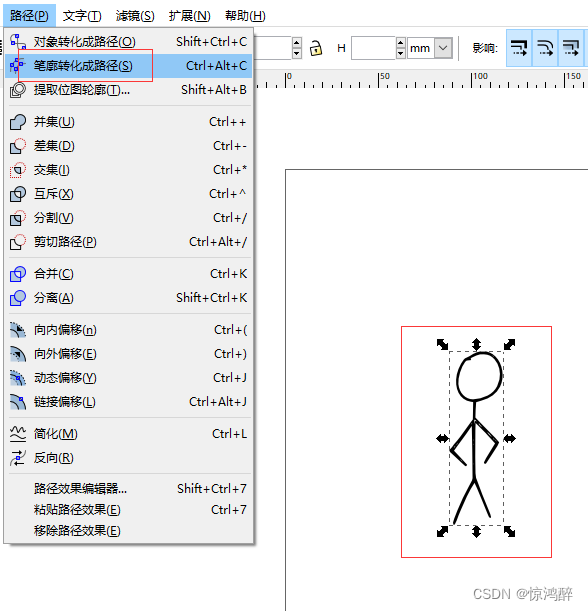

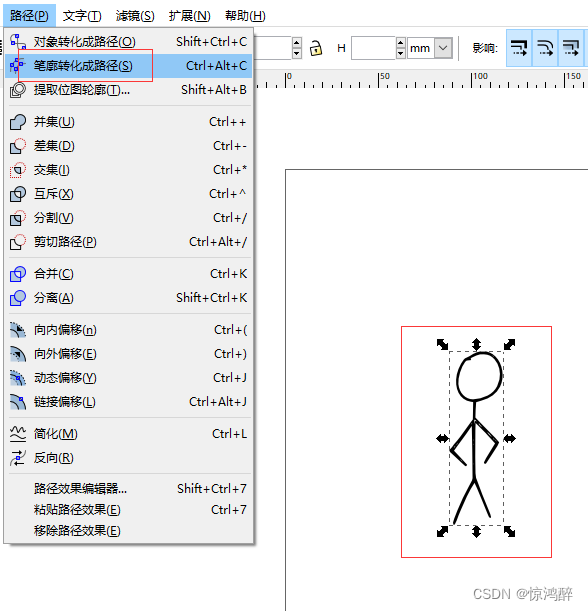

5. Choose Path --> Stroke to Path.

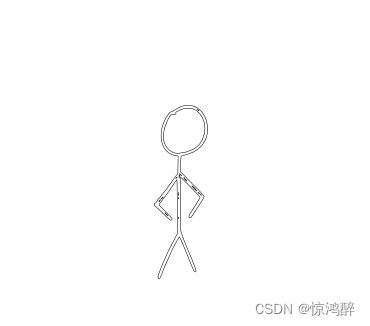

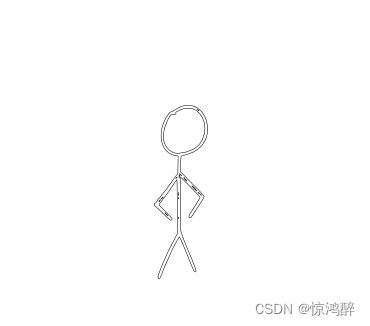

6. After the conversion, the result looks like this:

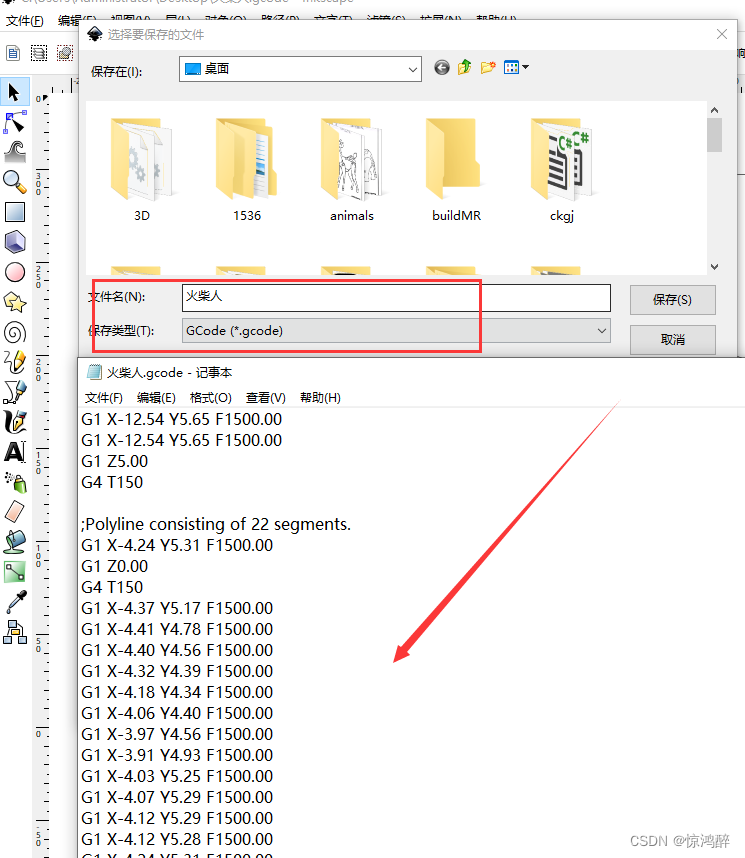

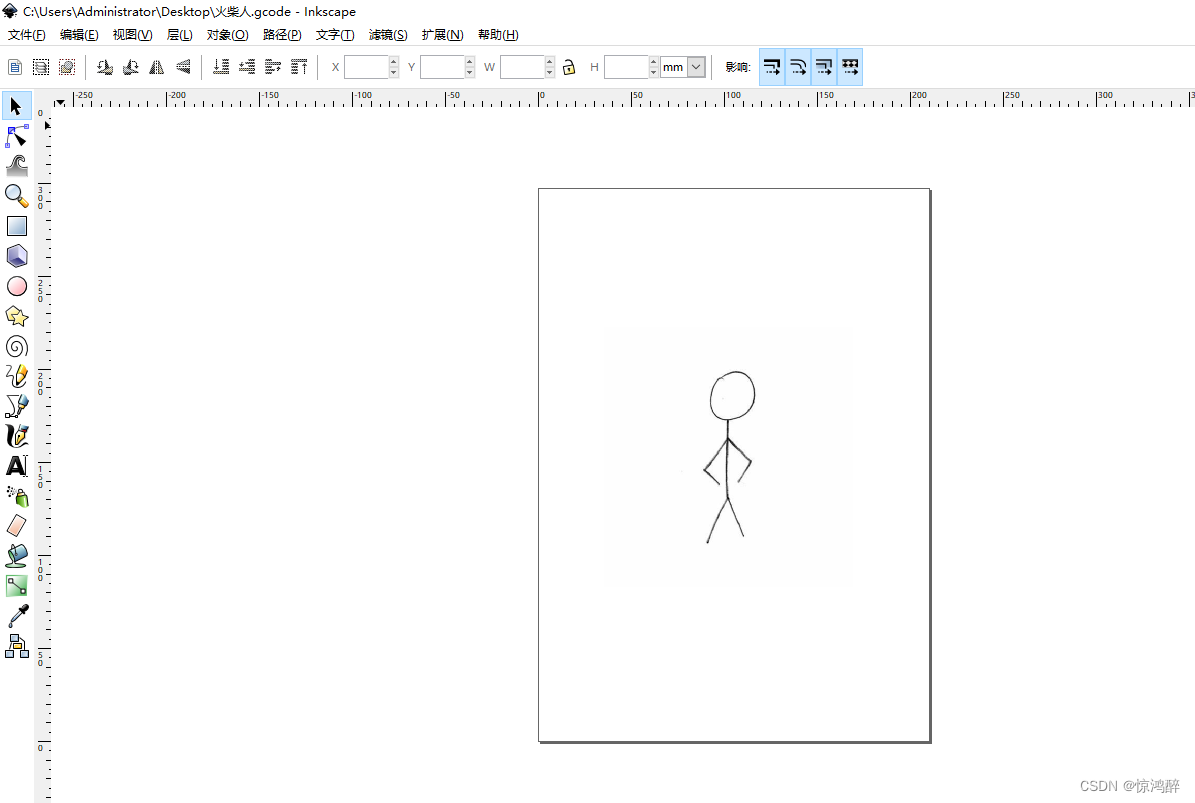

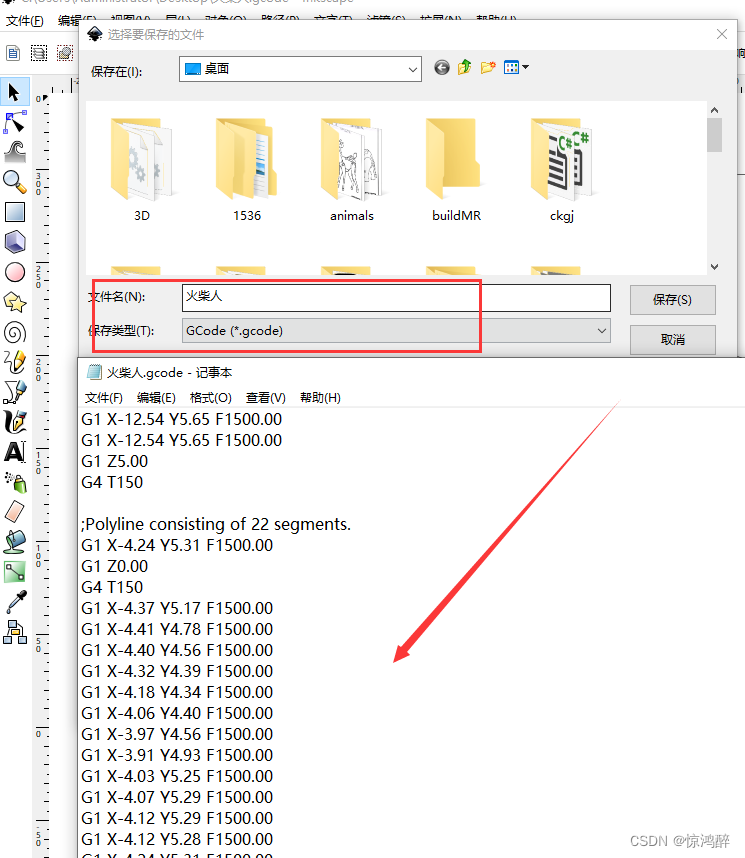

7. Choose File --> Save As, pick the gcode format, and you're done!
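For readers curious about what ends up in the saved file, the sketch below turns a list of path points (in millimeters) into a few basic G-code moves. It is only an illustration: the function `polyline_to_gcode` and its parameters are hypothetical, and the software's actual export will likely differ in header, tool commands, and formatting depending on the target machine.

```python
def polyline_to_gcode(points, feed_rate=1000.0, safe_z=5.0, cut_z=0.0):
    """Convert a polyline into a list of G-code lines.

    points: list of (x, y) tuples in millimeters.
    feed_rate, safe_z, cut_z: illustrative defaults, not machine-specific.
    """
    if not points:
        return []
    lines = [
        "G21",  # units: millimeters
        "G90",  # absolute positioning
    ]
    x0, y0 = points[0]
    lines.append(f"G0 Z{safe_z:.3f}")             # lift to a safe height
    lines.append(f"G0 X{x0:.3f} Y{y0:.3f}")       # rapid move to the start
    lines.append(f"G1 Z{cut_z:.3f} F{feed_rate:.0f}")  # lower the tool
    for x, y in points[1:]:
        # Straight-line move to each following point.
        lines.append(f"G1 X{x:.3f} Y{y:.3f} F{feed_rate:.0f}")
    lines.append(f"G0 Z{safe_z:.3f}")             # lift again when finished
    return lines


if __name__ == "__main__":
    # A closed 10 mm triangle-like outline as sample input.
    outline = [(0, 0), (10, 0), (10, 10), (0, 0)]
    print("\n".join(polyline_to_gcode(outline)))
```

Opening the exported file in a text editor and looking for lines like these is a quick way to check that the units and coordinates are what you expect.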
