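Table of Contents

- 1.K8s environment setup

- 2.Ceph cluster deployment

- 2.1 Deploying the Rook Operator

- 2.2 Preparing images

- 2.3 Configuring node roles

- 2.4 Deploying the operator

- 2.5 Deploying the Ceph cluster

- 2.6 Force-deleting the namespace

- 2.7 Verifying the cluster

- 3.Ceph dashboard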

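1.K8s environment setup

Reference: "CentOS7搭建k8s-v1.28.6集群详情" (a detailed guide to building a k8s v1.28.6 cluster on CentOS 7). Finish setting up the K8s cluster first, then build the Ceph cluster.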

2.Ceph cluster deployment

2.1 Deploying the Rook Operator

# Download the rook project source (the latest release as of 2024-02-06)

git clone --single-branch --branch v1.13.3 https://github.com/rook/rook.git

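# If your network is poor, download rook-1.13.3.tar.gz instead:

# https://gh.api.99988866.xyz/https://github.com/rook/rook/archive/refs/tags/v1.13.3.tar.gz

# Extract: tar -zxvf rook-1.13.3.tar.gz

# Note: later steps use the extracted path /root/rook-1.13.3; git clone creates /root/rook instead, so adjust the paths if you cloned

[root@ceph61 ~]# git clone --single-branch --branch v1.13.3 https://github.com/rook/rook.git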

Cloning into 'rook'...

remote: Enumerating objects: 95134, done.

remote: Counting objects: 100% (5514/5514), done.

remote: Compressing objects: 100% (348/348), done.

remote: Total 95134 (delta 5299), reused 5203 (delta 5166), pack-reused 89620

Receiving objects: 100% (95134/95134), 50.70 MiB | 10.43 MiB/s, done.

Resolving deltas: 100% (66720/66720), done.

Note: checking out '54663a4333bb72ba2114f387048775a619f2344d'.

You are in 'detached HEAD' state. You can look around, make experimental

changes and commit them, and you can discard any commits you make in this

state without impacting any branches by performing another checkout.

If you want to create a new branch to retain commits you create, you may

do so (now or later) by using -b with the checkout command again. Example:

git checkout -b new_branch_name

2.2 Preparing images

# Step 1: check which images need to be downloaded

[root@ceph61 examples]# cat images.txt

gcr.io/k8s-staging-sig-storage/objectstorage-sidecar/objectstorage-sidecar:v20230130-v0.1.0-24-gc0cf995

quay.io/ceph/ceph:v18.2.1

quay.io/ceph/cosi:v0.1.1

quay.io/cephcsi/cephcsi:v3.10.1

quay.io/csiaddons/k8s-sidecar:v0.8.0

registry.k8s.io/sig-storage/csi-attacher:v4.4.2

registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.9.1

registry.k8s.io/sig-storage/csi-provisioner:v3.6.3

registry.k8s.io/sig-storage/csi-resizer:v1.9.2

registry.k8s.io/sig-storage/csi-snapshotter:v6.3.2

rook/ceph:v1.13.3
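Instead of rewriting the list by hand, the registry substitution can be scripted. The sketch that follows is not the procedure used in this article: it assumes, as the manual list does, that only the `registry.k8s.io/sig-storage/` CSI images need the `registry.cn-hangzhou.aliyuncs.com/google_containers/` mirror and that the gcr.io objectstorage-sidecar image is skipped. It only prints the `docker pull`/`docker tag` commands so they can be reviewed before anything runs; `mirror_of` and `print_commands` are hypothetical helper names.

```shell
#!/bin/sh
# Sketch: map registry.k8s.io/sig-storage/ images to the Aliyun
# google_containers mirror and print (not run) the docker commands.
# Assumption: only the sig-storage CSI images need a mirror.
set -eu

SRC_PREFIX="registry.k8s.io/sig-storage/"
MIRROR_PREFIX="registry.cn-hangzhou.aliyuncs.com/google_containers/"

# Print the name to pull: the mirror name for sig-storage images,
# the original name for everything else.
mirror_of() {
  case "$1" in
    "$SRC_PREFIX"*) printf '%s%s\n' "$MIRROR_PREFIX" "${1#"$SRC_PREFIX"}" ;;
    *) printf '%s\n' "$1" ;;
  esac
}

# Read image names (one per line) on stdin and print a docker pull for
# each, plus a docker tag back to the original name when a mirror is used.
print_commands() {
  while IFS= read -r image || [ -n "$image" ]; do
    case "$image" in
      "" | gcr.io/*) continue ;;  # blank lines and the gcr.io sidecar are skipped
    esac
    mirror=$(mirror_of "$image")
    echo "docker pull $mirror"
    if [ "$mirror" != "$image" ]; then
      echo "docker tag $mirror $image"
    fi
  done
}

# Usage, from deploy/examples:
#   print_commands < images.txt        # review the commands
#   print_commands < images.txt | sh   # run them
```

Printing the commands instead of running them is a deliberate choice: the generated list can be compared with the manual one before anything is pulled.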

# Change to:

gcr.io/k8s-staging-sig-storage/objectstorage-sidecar/objectstorage-sidecar:v20230130-v0.1.0-24-gc0cf995

quay.io/ceph/ceph:v18.2.1

quay.io/ceph/cosi:v0.1.1

quay.io/cephcsi/cephcsi:v3.10.1

quay.io/csiaddons/k8s-sidecar:v0.8.0

registry.cn-hangzhou.aliyuncs.com/google_containers/csi-attacher:v4.4.2

registry.cn-hangzhou.aliyuncs.com/google_containers/csi-node-driver-registrar:v2.9.1

registry.cn-hangzhou.aliyuncs.com/google_containers/csi-provisioner:v3.6.3

registry.cn-hangzhou.aliyuncs.com/google_containers/csi-resizer:v1.9.2

registry.cn-hangzhou.aliyuncs.com/google_containers/csi-snapshotter:v6.3.2

rook/ceph:v1.13.3

# Step 2: log in to the Alibaba Cloud registry

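docker login --username=<your-Aliyun-account> registry.cn-beijing.aliyuncs.com

Enter your Alibaba Cloud account password

# Step 3: pull the images manually

# Not sure how to pull this one (skipping it had no impact on this deployment): gcr.io/k8s-staging-sig-storage/objectstorage-sidecar/objectstorage-sidecar:v20230130-v0.1.0-24-gc0cf995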

docker pull quay.io/ceph/ceph:v18.2.1

docker pull quay.io/ceph/cosi:v0.1.1

docker pull quay.io/cephcsi/cephcsi:v3.10.1

docker pull quay.io/csiaddons/k8s-sidecar:v0.8.0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/csi-attacher:v4.4.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/csi-node-driver-registrar:v2.9.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/csi-provisioner:v3.6.3

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/csi-resizer:v1.9.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/csi-snapshotter:v6.3.2

docker pull rook/ceph:v1.13.3

# Step 4: re-tag the images

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/csi-attacher:v4.4.2 registry.k8s.io/sig-storage/csi-attacher:v4.4.2

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/csi-node-driver-registrar:v2.9.1 registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.9.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/csi-provisioner:v3.6.3 registry.k8s.io/sig-storage/csi-provisioner:v3.6.3

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/csi-resizer:v1.9.2 registry.k8s.io/sig-storage/csi-resizer:v1.9.2

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/csi-snapshotter:v6.3.2 registry.k8s.io/sig-storage/csi-snapshotter:v6.3.2

2.3 Configuring node roles

# View node labels

kubectl get nodes --show-labels

kubectl get nodes <node-name> --show-labels

[root@ceph61 examples]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

ceph61 Ready control-plane 47h v1.28.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=ceph61,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node.kubernetes.io/exclude-from-external-load-balancers=

ceph62 Ready <none> 47h v1.28.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=ceph62,kubernetes.io/os=linux

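ceph63 Ready <none> 47h v1.28.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=ceph63,kubernetes.io/os=linux

# Label nodes ceph61, ceph62 and ceph63

# Note: Rook only uses these labels if the placement section of cluster.yaml refers to them (for example via nodeAffinity); on their own they do not change where the daemons are scheduled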

kubectl label nodes {ceph61,ceph62,ceph63} ceph-osd=enabled

kubectl label nodes {ceph61,ceph62,ceph63} ceph-mon=enabled

kubectl label nodes ceph61 ceph-mgr=enabled

[root@ceph61 examples]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

ceph61 Ready control-plane 47h v1.28.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,ceph-mgr=enabled,ceph-mon=enabled,ceph-osd=enabled,kubernetes.io/arch=amd64,kubernetes.io/hostname=ceph61,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node.kubernetes.io/exclude-from-external-load-balancers=

ceph62 Ready <none> 47h v1.28.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,ceph-mon=enabled,ceph-osd=enabled,kubernetes.io/arch=amd64,kubernetes.io/hostname=ceph62,kubernetes.io/os=linux

ceph63 Ready <none> 47h v1.28.2 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,ceph-mon=enabled,ceph-osd=enabled,kubernetes.io/arch=amd64,kubernetes.io/hostname=ceph63,kubernetes.io/os=linux

2.4 Deploying the operator

# Step 1: in vim /root/rook-1.13.3/deploy/examples/operator.yaml, uncomment and change the following lines

ROOK_CSI_CEPH_IMAGE: "quay.io/cephcsi/cephcsi:v3.10.1"

ROOK_CSI_REGISTRAR_IMAGE: "registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.9.1"

ROOK_CSI_RESIZER_IMAGE: "registry.k8s.io/sig-storage/csi-resizer:v1.9.2"

ROOK_CSI_PROVISIONER_IMAGE: "registry.k8s.io/sig-storage/csi-provisioner:v3.6.3"

ROOK_CSI_SNAPSHOTTER_IMAGE: "registry.k8s.io/sig-storage/csi-snapshotter:v6.3.2"

ROOK_CSI_ATTACHER_IMAGE: "registry.k8s.io/sig-storage/csi-attacher:v4.4.2"

# Change to:

ROOK_CSI_CEPH_IMAGE: "quay.io/cephcsi/cephcsi:v3.10.1"

ROOK_CSI_REGISTRAR_IMAGE: "registry.cn-hangzhou.aliyuncs.com/google_containers/csi-node-driver-registrar:v2.9.1"

ROOK_CSI_RESIZER_IMAGE: "registry.cn-hangzhou.aliyuncs.com/google_containers/csi-resizer:v1.9.2"

ROOK_CSI_PROVISIONER_IMAGE: "registry.cn-hangzhou.aliyuncs.com/google_containers/csi-provisioner:v3.6.3"

ROOK_CSI_SNAPSHOTTER_IMAGE: "registry.cn-hangzhou.aliyuncs.com/google_containers/csi-snapshotter:v6.3.2"

ROOK_CSI_ATTACHER_IMAGE: "registry.cn-hangzhou.aliyuncs.com/google_containers/csi-attacher:v4.4.2"

# To indicate the image pull policy to be applied to all the containers in the csi driver pods.

ROOK_CSI_IMAGE_PULL_POLICY: "IfNotPresent"

# Check crds.yaml, common.yaml and operator.yaml, then create the resources

cd /root/rook-1.13.3/deploy/examples
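The vim edits above can also be applied non-interactively before running `kubectl create`. A minimal sketch, assuming GNU sed and that each CSI image setting in operator.yaml appears commented out as `# ROOK_CSI_<NAME>_IMAGE: "..."`; it uncomments every matching line, not only the six listed, which is assumed harmless because the commented values are the defaults. `rewrite_csi_images` is a hypothetical helper name, and a `.bak` copy is kept so the change can be diffed or undone.

```shell
#!/bin/sh
# Sketch: script the manual operator.yaml edit (GNU sed assumed).
# Assumption: CSI image settings appear as  # ROOK_CSI_<NAME>_IMAGE: "..."
# Step 1 uncomments them; step 2 swaps the registry.k8s.io/sig-storage/
# prefix for the Aliyun mirror. quay.io images are left unchanged.
set -eu

rewrite_csi_images() {
  file="$1"
  cp "$file" "$file.bak"   # keep the original for diffing or rollback
  sed -E -i \
    -e 's|^([[:space:]]*)#[[:space:]]*(ROOK_CSI_[A-Z_]+_IMAGE:)|\1\2|' \
    -e '/^[[:space:]]*ROOK_CSI_[A-Z_]+_IMAGE:/s|registry\.k8s\.io/sig-storage/|registry.cn-hangzhou.aliyuncs.com/google_containers/|' \
    "$file"
}

# Usage, from deploy/examples:
#   rewrite_csi_images operator.yaml
#   diff operator.yaml.bak operator.yaml
```

Afterwards, `grep -n 'ROOK_CSI_.*_IMAGE' operator.yaml` shows the resulting settings.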

kubectl create -f crds.yaml -f common.yaml -f operator.yaml

# kubectl apply -f crds.yaml -f common.yaml -f operator.yaml

# kubectl delete -f crds.yaml -f common.yaml -f operator.yaml

[root@ceph61 examples]# kubectl create -f crds.yaml -f common.yaml -f operator.yaml

customresourcedefinition.apiextensions.k8s.io/cephblockpoolradosnamespaces.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephblockpools.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephbucketnotifications.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephbuckettopics.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephclients.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephclusters.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephcosidrivers.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephfilesystemmirrors.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephfilesystems.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephfilesystemsubvolumegroups.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephnfses.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectrealms.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectstores.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectstoreusers.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectzonegroups.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectzones.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephrbdmirrors.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/objectbucketclaims.objectbucket.io created

customresourcedefinition.apiextensions.k8s.io/objectbuckets.objectbucket.io created

namespace/rook-ceph created

clusterrole.rbac.authorization.k8s.io/cephfs-csi-nodeplugin created

clusterrole.rbac.authorization.k8s.io/cephfs-external-provisioner-runner created

clusterrole.rbac.authorization.k8s.io/objectstorage-provisioner-role created

clusterrole.rbac.authorization.k8s.io/rbd-csi-nodeplugin created

clusterrole.rbac.authorization.k8s.io/rbd-external-provisioner-runner created

clusterrole.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt created

clusterrole.rbac.authorization.k8s.io/rook-ceph-global created

clusterrole.rbac.authorization.k8s.io/rook-ceph-mgr-cluster created

clusterrole.rbac.authorization.k8s.io/rook-ceph-mgr-system created

clusterrole.rbac.authorization.k8s.io/rook-ceph-object-bucket created

clusterrole.rbac.authorization.k8s.io/rook-ceph-osd created

clusterrole.rbac.authorization.k8s.io/rook-ceph-system created

clusterrolebinding.rbac.authorization.k8s.io/cephfs-csi-nodeplugin-role created

clusterrolebinding.rbac.authorization.k8s.io/cephfs-csi-provisioner-role created

clusterrolebinding.rbac.authorization.k8s.io/objectstorage-provisioner-role-binding created

clusterrolebinding.rbac.authorization.k8s.io/rbd-csi-nodeplugin created

clusterrolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-global created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-cluster created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-object-bucket created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-osd created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-system created

role.rbac.authorization.k8s.io/cephfs-external-provisioner-cfg created

role.rbac.authorization.k8s.io/rbd-csi-nodeplugin created

role.rbac.authorization.k8s.io/rbd-external-provisioner-cfg created

role.rbac.authorization.k8s.io/rook-ceph-cmd-reporter created

role.rbac.authorization.k8s.io/rook-ceph-mgr created

role.rbac.authorization.k8s.io/rook-ceph-osd created

role.rbac.authorization.k8s.io/rook-ceph-purge-osd created

role.rbac.authorization.k8s.io/rook-ceph-rgw created

role.rbac.authorization.k8s.io/rook-ceph-system created

rolebinding.rbac.authorization.k8s.io/cephfs-csi-provisioner-role-cfg created

rolebinding.rbac.authorization.k8s.io/rbd-csi-nodeplugin-role-cfg created

rolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role-cfg created

rolebinding.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt created

rolebinding.rbac.authorization.k8s.io/rook-ceph-cmd-reporter created

rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr created

rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-system created

rolebinding.rbac.authorization.k8s.io/rook-ceph-osd created

rolebinding.rbac.authorization.k8s.io/rook-ceph-purge-osd created

rolebinding.rbac.authorization.k8s.io/rook-ceph-rgw created

rolebinding.rbac.authorization.k8s.io/rook-ceph-system created

serviceaccount/objectstorage-provisioner created

serviceaccount/rook-ceph-cmd-reporter created

serviceaccount/rook-ceph-mgr created

serviceaccount/rook-ceph-osd created

serviceaccount/rook-ceph-purge-osd created

serviceaccount/rook-ceph-rgw created

serviceaccount/rook-ceph-system created

serviceaccount/rook-csi-cephfs-plugin-sa created

serviceaccount/rook-csi-cephfs-provisioner-sa created

serviceaccount/rook-csi-rbd-plugin-sa created

serviceaccount/rook-csi-rbd-provisioner-sa created

configmap/rook-ceph-operator-config created

deployment.apps/rook-ceph-operator created

# Before continuing, verify that rook-ceph-operator is running normally; this can take a while

kubectl get deployment -n rook-ceph

kubectl get pod -n rook-ceph

[root@ceph61 examples]# kubectl get deployment -n rook-ceph

NAME READY UP-TO-DATE AVAILABLE AGE

rook-ceph-operator 1/1 1 1 4m52s

[root@ceph61 examples]# kubectl get pod -n rook-ceph

NAME READY STATUS RESTARTS AGE

rook-ceph-operator-5bfd9fd4fb-gg2g2 1/1 Running 0 4m56s

2.5 Deploying the Ceph cluster

# apply can be run repeatedly; create fails if the resources already exist

kubectl create -f cluster.yaml

# kubectl apply -f cluster.yaml

# Delete the resources

kubectl delete -f cluster.yaml

# Force-delete the namespace

kubectl delete ns rook-ceph --grace-period=0 --force

kubectl delete namespace rook-ceph --force --grace-period=0

# If the forced deletion still does not succeed, run the following commands:

yum install jq -y

NAMESPACE=rook-ceph

kubectl proxy & kubectl get namespace $NAMESPACE -o json |jq '.spec = {"finalizers":[]}' >temp.json

curl -k -H "Content-Type: application/json" -X PUT --data-binary @temp.json 127.0.0.1:8001/api/v1/namespaces/$NAMESPACE/finalize

# Run a command in a specific container of a Pod (the -- separates kubectl's own flags from the command)

kubectl exec <pod-name> -c <container-name> -- date

# View logs

kubectl logs <pod-name>

kubectl logs -f <pod-name> # follow the log in real time

kubectl logs -f <pod-name> -c <container-name>

# List all resources

kubectl get all -A

[root@ceph61 examples]# kubectl apply -f cluster.yaml

cephcluster.ceph.rook.io/rook-ceph created

# Watch the progress in real time and make sure every Pod is Running and the OSD prepare Pods are Completed; this can take a while

kubectl get pod -n rook-ceph -w
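The condition in the comment above (all Pods Running, the OSD prepare Pods Completed) can be checked with a small awk filter instead of by eye. A minimal sketch, assuming the default `kubectl get pod` columns NAME READY STATUS RESTARTS AGE; only the first three are read, so a RESTARTS value such as `1 (14m ago)` does not shift anything that matters. `pods_not_ready` is a hypothetical helper: it prints the Pods that are neither Completed nor Running with all containers ready, and returns non-zero if there are any.

```shell
#!/bin/sh
# Sketch: list Pods from `kubectl get pod` output that are not ready yet.
# Assumption: default columns NAME READY STATUS RESTARTS AGE.
set -eu

pods_not_ready() {
  awk 'NR > 1 {
    if ($3 == "Completed") next           # finished Pods such as osd-prepare
    split($2, ready, "/")                 # READY column, e.g. "2/2"
    if ($3 != "Running" || ready[1] != ready[2]) { print $1; bad = 1 }
  }
  END { exit (bad ? 1 : 0) }'
}

# Usage:
#   kubectl get pod -n rook-ceph | pods_not_ready && echo "all Pods ready"
#   until kubectl get pod -n rook-ceph | pods_not_ready >/dev/null; do sleep 10; done
```

An empty result only means the Pods that exist so far are healthy: the rook-ceph-osd-N Pods appear only after the osd-prepare Pods complete, so it is still worth confirming that they are listed.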

# Watch the cluster creation progress in real time

kubectl get cephcluster -n rook-ceph rook-ceph -w

# Detailed description

kubectl describe cephcluster -n rook-ceph rook-ceph

[root@ceph61 examples]# kubectl get pod -n rook-ceph

NAME READY STATUS RESTARTS AGE

csi-cephfsplugin-6jcsf 2/2 Running 1 (14m ago) 25m

csi-cephfsplugin-8pj67 2/2 Running 1 (24m ago) 25m

csi-cephfsplugin-lgxb8 2/2 Running 0 25m

csi-cephfsplugin-provisioner-7b94748884-6st2v 5/5 Running 1 (14m ago) 25m

csi-cephfsplugin-provisioner-7b94748884-lbb5q 5/5 Running 0 25m

csi-rbdplugin-7h4fd 2/2 Running 1 (24m ago) 25m

csi-rbdplugin-dflzl 2/2 Running 1 (14m ago) 25m

csi-rbdplugin-jz9fq 2/2 Running 0 25m

csi-rbdplugin-provisioner-5d78f964b7-h4tbd 5/5 Running 3 (13m ago) 25m

csi-rbdplugin-provisioner-5d78f964b7-xxqzp 5/5 Running 1 (14m ago) 25m

rook-ceph-crashcollector-ceph61-5cbdcd57f4-7xwgv 1/1 Running 0 2m10s

rook-ceph-crashcollector-ceph62-745dc4c977-vdctc 1/1 Running 0 2m22s

rook-ceph-crashcollector-ceph63-b8f9b54d6-j2784 1/1 Running 0 2m23s

rook-ceph-exporter-ceph61-74c796f9b4-2g8bv 1/1 Running 0 2m10s

rook-ceph-exporter-ceph62-687b86d6f5-dv7x6 1/1 Running 0 2m22s

rook-ceph-exporter-ceph63-5d86c955fb-nc2q4 1/1 Running 0 2m23s

rook-ceph-mgr-a-5d5ccb978d-ss77f 3/3 Running 0 2m23s

rook-ceph-mgr-b-5f5fcfcb5f-slzv5 3/3 Running 0 2m22s

rook-ceph-mon-a-57dd87b4f-bnm5t 2/2 Running 0 14m

rook-ceph-mon-b-6bdf4c8d65-r7ncw 2/2 Running 0 14m

rook-ceph-mon-c-6ffb957cb7-77fcb 2/2 Running 0 9m10s

rook-ceph-operator-5bfd9fd4fb-k2jr4 1/1 Running 0 30m

rook-ceph-osd-prepare-ceph61-96lgt 0/1 Completed 0 2m

rook-ceph-osd-prepare-ceph62-xbzmm 0/1 Completed 0 2m

rook-ceph-osd-prepare-ceph63-hj5m7 0/1 Completed 0 119s

2.6 Force-deleting the namespace

# Force-delete the namespace

[root@ceph61 examples]# kubectl get namespace

NAME STATUS AGE

cattle-system Active 40h

default Active 42h

kube-node-lease Active 42h

kube-public Active 42h

kube-system Active 42h

kuboard Active 41h

rook-ceph Terminating 23h

# The rook-ceph namespace is stuck in Terminating

kubectl delete ns rook-ceph --grace-period=0 --force

kubectl delete namespace rook-ceph --force --grace-period=0

# If the forced deletion still does not succeed, run the following commands:

yum install jq -y

NAMESPACE=rook-ceph

kubectl proxy & kubectl get namespace $NAMESPACE -o json |jq '.spec = {"finalizers":[]}' >temp.json

curl -k -H "Content-Type: application/json" -X PUT --data-binary @temp.json 127.0.0.1:8001/api/v1/namespaces/$NAMESPACE/finalize

[root@ceph61 ~]# NAMESPACE=rook-ceph

[root@ceph61 ~]# kubectl proxy & kubectl get namespace $NAMESPACE -o json |jq '.spec = {"finalizers":[]}' >temp.json

[1] 29753

Starting to serve on 127.0.0.1:8001

[root@ceph61 ~]# curl -k -H "Content-Type: application/json" -X PUT --data-binary @temp.json 127.0.0.1:8001/api/v1/namespaces/$NAMESPACE/finalize

{"kind": "Namespace","apiVersion": "v1","metadata": {"name": "rook-ceph","uid": "92a3a816-1464-4ecd-8d1c-c13cc1f8a345","resourceVersion": "70192","creationTimestamp": "2024-02-06T02:51:20Z","deletionTimestamp": "2024-02-06T11:03:41Z","labels": {"kubernetes.io/metadata.name": "rook-ceph"},"managedFields": [{"manager": "kubectl-create","operation": "Update","apiVersion": "v1","time": "2024-02-06T02:51:20Z","fieldsType": "FieldsV1","fieldsV1": {"f:metadata": {"f:labels": {".": {},"f:kubernetes.io/metadata.name": {}}}}},{"manager": "kube-controller-manager","operation": "Update","apiVersion": "v1","time": "2024-02-06T11:04:08Z","fieldsType": "FieldsV1","fieldsV1": {"f:status": {"f:conditions": {".": {},"k:{\"type\":\"NamespaceContentRemaining\"}": {".": {},"f:lastTransitionTime": {},"f:message": {},"f:reason": {},"f:status": {},"f:type": {}},"k:{\"type\":\"NamespaceDeletionContentFailure\"}": {".": {},"f:lastTransitionTime": {},"f:message": {},"f:reason": {},"f:status": {},"f:type": {}},"k:{\"type\":\"NamespaceDeletionDiscoveryFailure\"}": {".": {},"f:lastTransitionTime": {},"f:message": {},"f:reason": {},"f:status": {},"f:type": {}},"k:{\"type\":\"NamespaceDeletionGroupVersionParsingFailure\"}": {".": {},"f:lastTransitionTime": {},"f:message": {},"f:reason": {},"f:status": {},"f:type": {}},"k:{\"type\":\"NamespaceFinalizersRemaining\"}": {".": {},"f:lastTransitionTime": {},"f:message": {},"f:reason": {},"f:status": {},"f:type": {}}}}},"subresource": "status"}]},"spec": {},"status": {"phase": "Terminating","conditions": [{"type": "NamespaceDeletionDiscoveryFailure","status": "True","lastTransitionTime": "2024-02-06T11:03:46Z","reason": "DiscoveryFailed","message": "Discovery failed for some groups, 1 failing: unable to retrieve the complete list of server APIs: metrics.k8s.io/v1beta1: stale GroupVersion discovery: metrics.k8s.io/v1beta1"},{"type": "NamespaceDeletionGroupVersionParsingFailure","status": "False","lastTransitionTime": "2024-02-06T11:03:46Z","reason": 
"ParsedGroupVersions","message": "All legacy kube types successfully parsed"},{"type": "NamespaceDeletionContentFailure","status": "False","lastTransitionTime": "2024-02-06T11:03:46Z","reason": "ContentDeleted","message": "All content successfully deleted, may be waiting on finalization"},{"type": "NamespaceContentRemaining","status": "False","lastTransitionTime": "2024-02-06T11:04:08Z","reason": "ContentRemoved","message": "All content successfully removed"},{"type": "NamespaceFinalizersRemaining","status": "False","lastTransitionTime": "2024-02-06T11:03:46Z","reason": "ContentHasNoFinalizers","message": "All content-preserving finalizers finished"}]}

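}

# The conditions in this response show why the namespace was stuck: API discovery failed for metrics.k8s.io/v1beta1 (NamespaceDeletionDiscoveryFailure); kubectl get apiservice shows whether an APIService like this one is unavailable

# Check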

[root@ceph61 ~]# kubectl get namespace

NAME STATUS AGE

cattle-system Active 40h

default Active 42h

kube-node-lease Active 42h

kube-public Active 42h

kube-system Active 42h

kuboard Active 41h

2.7 Verifying the cluster

# To check whether the cluster is healthy, connect to the toolbox and run commands there

cd /root/rook-1.13.3/deploy/examples

kubectl create -f toolbox.yaml

[root@ceph61 examples]# kubectl create -f toolbox.yaml

deployment.apps/rook-ceph-tools created

# Check the Pods

[root@ceph61 examples]# kubectl get pod -n rook-ceph

NAME READY STATUS RESTARTS AGE

csi-cephfsplugin-6jcsf 2/2 Running 1 (21m ago) 32m

csi-cephfsplugin-8pj67 2/2 Running 1 (32m ago) 32m

csi-cephfsplugin-lgxb8 2/2 Running 0 32m

csi-cephfsplugin-provisioner-7b94748884-6st2v 5/5 Running 1 (21m ago) 32m

csi-cephfsplugin-provisioner-7b94748884-lbb5q 5/5 Running 0 32m

csi-rbdplugin-7h4fd 2/2 Running 1 (32m ago) 32m

csi-rbdplugin-dflzl 2/2 Running 1 (21m ago) 32m

csi-rbdplugin-jz9fq 2/2 Running 0 32m

csi-rbdplugin-provisioner-5d78f964b7-h4tbd 5/5 Running 3 (20m ago) 32m

csi-rbdplugin-provisioner-5d78f964b7-xxqzp 5/5 Running 1 (21m ago) 32m

rook-ceph-crashcollector-ceph61-5cbdcd57f4-7xwgv 1/1 Running 0 9m40s

rook-ceph-crashcollector-ceph62-6cbdf99cff-vrw44 1/1 Running 0 7m15s

rook-ceph-crashcollector-ceph63-855974fbd8-8w5ql 1/1 Running 0 7m16s

rook-ceph-exporter-ceph61-74c796f9b4-2g8bv 1/1 Running 0 9m40s

rook-ceph-exporter-ceph62-7f845dcd9c-fjr87 1/1 Running 0 7m11s

rook-ceph-exporter-ceph63-5dcf677d86-ct776 1/1 Running 0 7m11s

rook-ceph-mgr-a-5d5ccb978d-ss77f 3/3 Running 0 9m53s

rook-ceph-mgr-b-5f5fcfcb5f-slzv5 3/3 Running 0 9m52s

rook-ceph-mon-a-57dd87b4f-bnm5t 2/2 Running 0 22m

rook-ceph-mon-b-6bdf4c8d65-r7ncw 2/2 Running 0 21m

rook-ceph-mon-c-6ffb957cb7-77fcb 2/2 Running 0 16m

rook-ceph-operator-5bfd9fd4fb-k2jr4 1/1 Running 0 38m

rook-ceph-osd-0-7cbd59747f-5sl7v 2/2 Running 0 7m16s

rook-ceph-osd-1-f487664fb-gdwlx 2/2 Running 0 7m16s

rook-ceph-osd-2-77b849c5b-lc7p2 2/2 Running 0 7m16s

rook-ceph-osd-3-5469748766-tj256 2/2 Running 0 7m16s

rook-ceph-osd-4-77d4bd4b7c-2gqdz 2/2 Running 0 7m16s

rook-ceph-osd-5-694548dbcc-jr9bg 2/2 Running 0 7m16s

rook-ceph-osd-6-844ff54957-d84xs 2/2 Running 0 7m16s

rook-ceph-osd-7-5c7b9d4b76-fsh46 2/2 Running 0 7m16s

rook-ceph-osd-8-6dd768d7fb-9smbs 2/2 Running 0 7m16s

rook-ceph-osd-prepare-ceph61-czhcp 0/1 Completed 0 2m53s

rook-ceph-osd-prepare-ceph62-2754w 0/1 Completed 0 2m49s

rook-ceph-osd-prepare-ceph63-fhqfl 0/1 Completed 0 2m45s

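rook-ceph-tools-66b77b8df5-gj256 1/1 Running 0 3m26s

# Open a shell in the toolbox Pod (the Pod name differs on every cluster; kubectl -n rook-ceph exec -it deploy/rook-ceph-tools -- bash avoids looking it up)

kubectl exec -it rook-ceph-tools-66b77b8df5-gj256 -n rook-ceph -- bash

# Once inside, you can run various commands: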

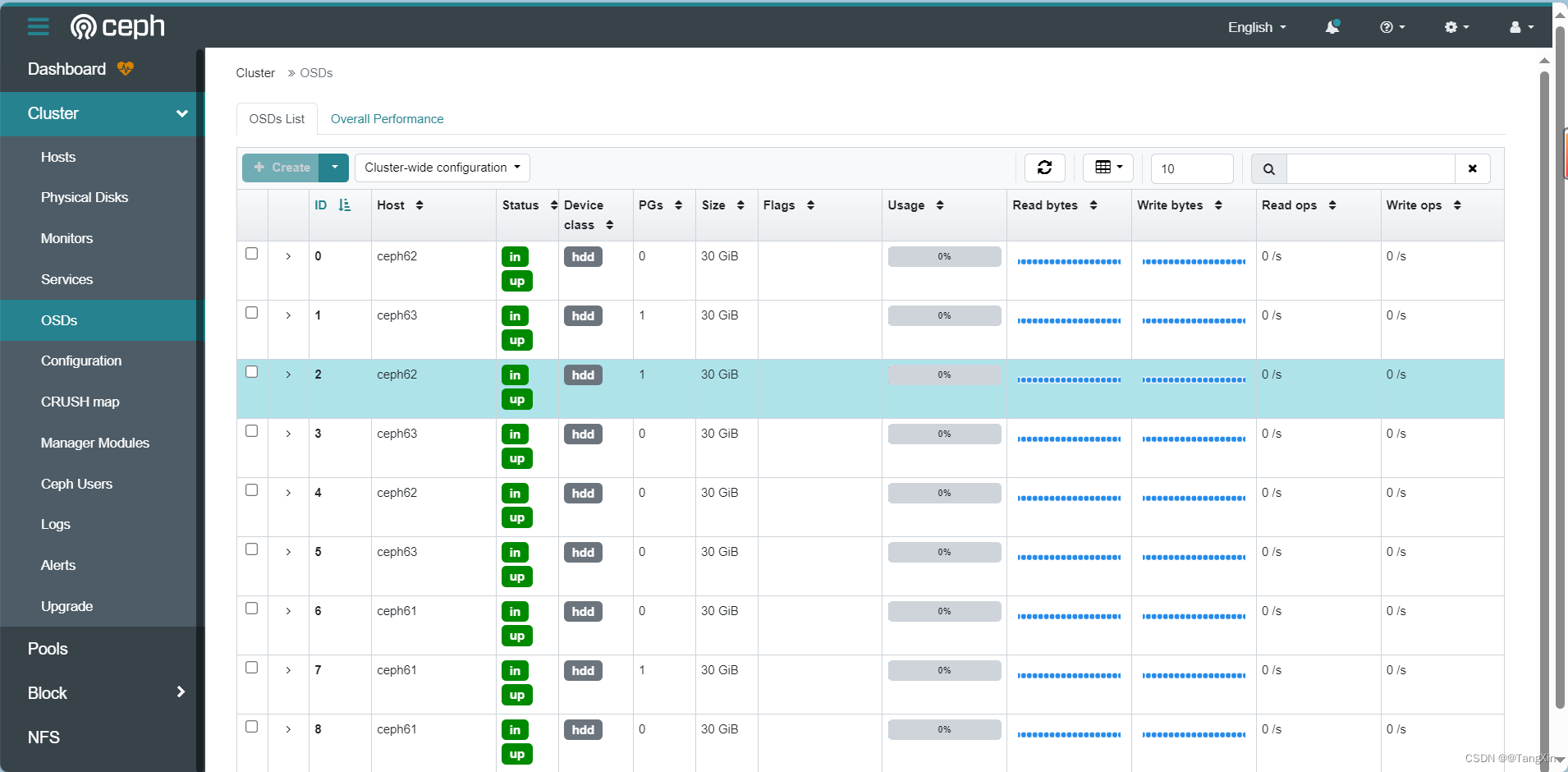

ceph status

ceph osd status

ceph df

rados df

[root@ceph61 examples]# kubectl exec -it rook-ceph-tools-66b77b8df5-gj256 -n rook-ceph -- bash

bash-4.4$ ceph -v

ceph version 18.2.1 (7fe91d5d5842e04be3b4f514d6dd990c54b29c76) reef (stable)

bash-4.4$ ceph -s

cluster:

id: 7793dc7d-fb3a-48dd-9297-8f2d37fb9901

health: HEALTH_WARN

Slow OSD heartbeats on back (longest 69426.357ms)

Slow OSD heartbeats on front (longest 65492.636ms)

services:

mon: 3 daemons, quorum a,c,b (age 18s)

mgr: b(active, since 9m), standbys: a

osd: 9 osds: 9 up (since 76s), 9 in (since 15m)

data:

pools: 1 pools, 1 pgs

objects: 2 objects, 449 KiB

usage: 240 MiB used, 270 GiB / 270 GiB avail

pgs: 1 active+clean

bash-4.4$ ceph osd status

ID HOST USED AVAIL WR OPS WR DATA RD OPS RD DATA STATE

0 ceph62 26.5M 29.9G 0 0 0 0 exists,up

1 ceph63 26.9M 29.9G 0 0 0 0 exists,up

2 ceph62 26.9M 29.9G 0 0 0 0 exists,up

3 ceph63 26.5M 29.9G 0 0 0 0 exists,up

4 ceph62 26.5M 29.9G 0 0 0 0 exists,up

5 ceph63 26.5M 29.9G 0 0 0 0 exists,up

6 ceph61 26.5M 29.9G 0 0 0 0 exists,up

7 ceph61 26.9M 29.9G 0 0 0 0 exists,up

8 ceph61 26.5M 29.9G 0 0 0 0 exists,up

bash-4.4$ ceph df

--- RAW STORAGE ---

CLASS SIZE AVAIL USED RAW USED %RAW USED

hdd 270 GiB 270 GiB 240 MiB 240 MiB 0.09

TOTAL 270 GiB 270 GiB 240 MiB 240 MiB 0.09

--- POOLS ---

POOL ID PGS STORED OBJECTS USED %USED MAX AVAIL

.mgr 1 1 449 KiB 2 1.3 MiB 0 85 GiB

bash-4.4$ rados df

POOL_NAME USED OBJECTS CLONES COPIES MISSING_ON_PRIMARY UNFOUND DEGRADED RD_OPS RD WR_OPS WR USED COMPR UNDER COMPR

.mgr 1.3 MiB 2 0 6 0 0 0 288 494 KiB 153 1.3 MiB 0 B 0 B

total_objects 2

total_used 240 MiB

total_avail 270 GiB

total_space 270 GiB

3.Ceph dashboard

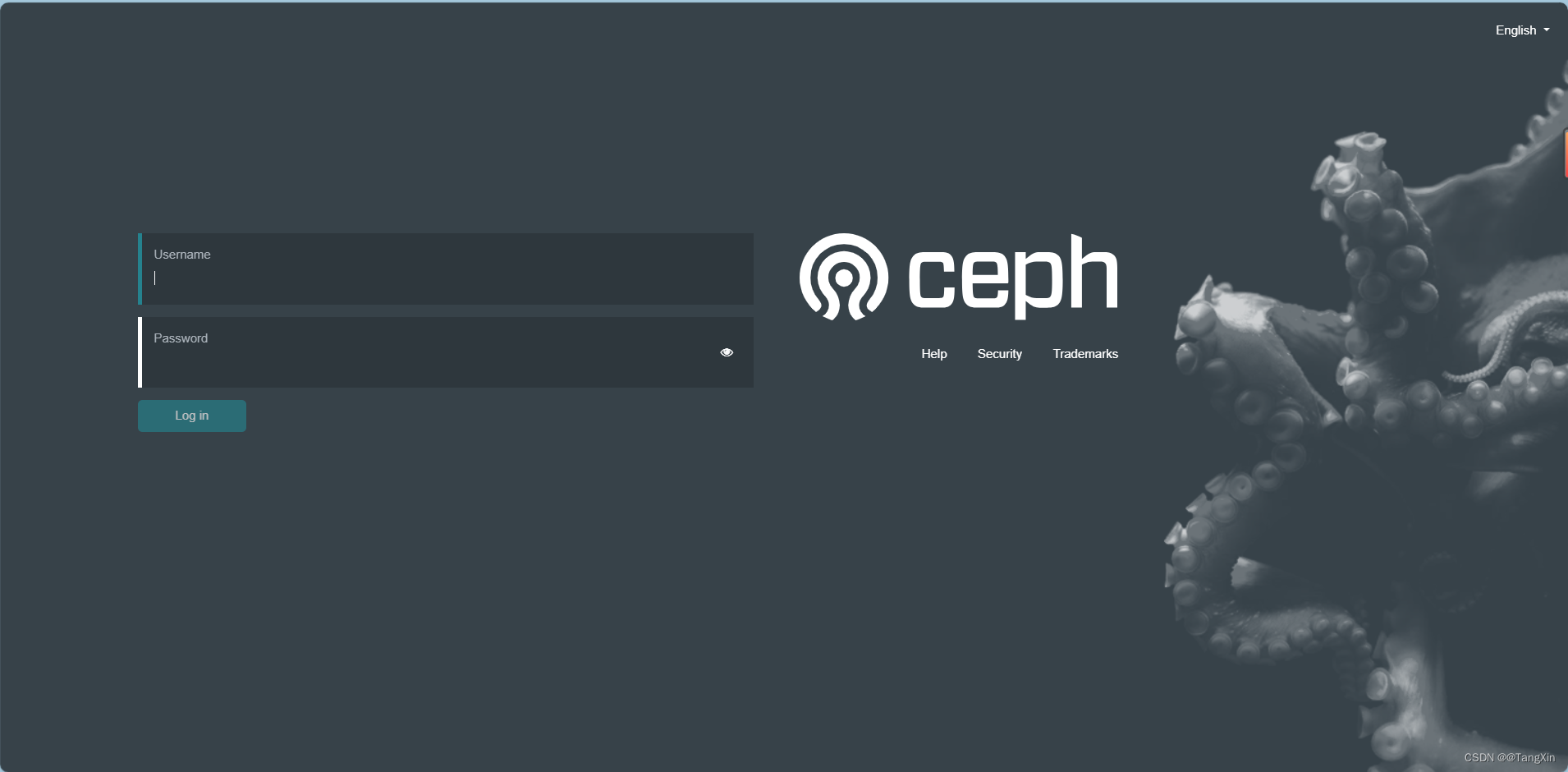

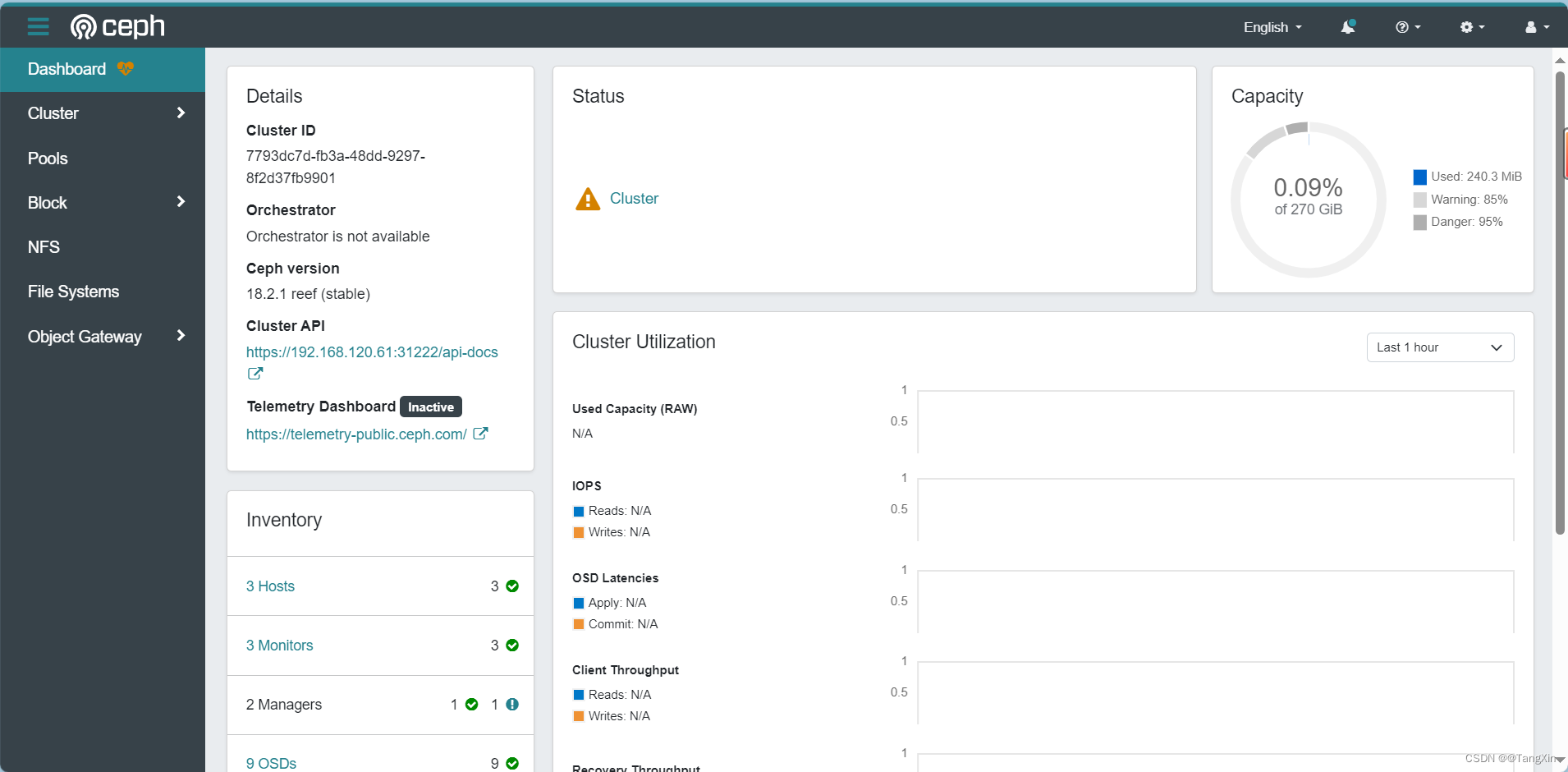

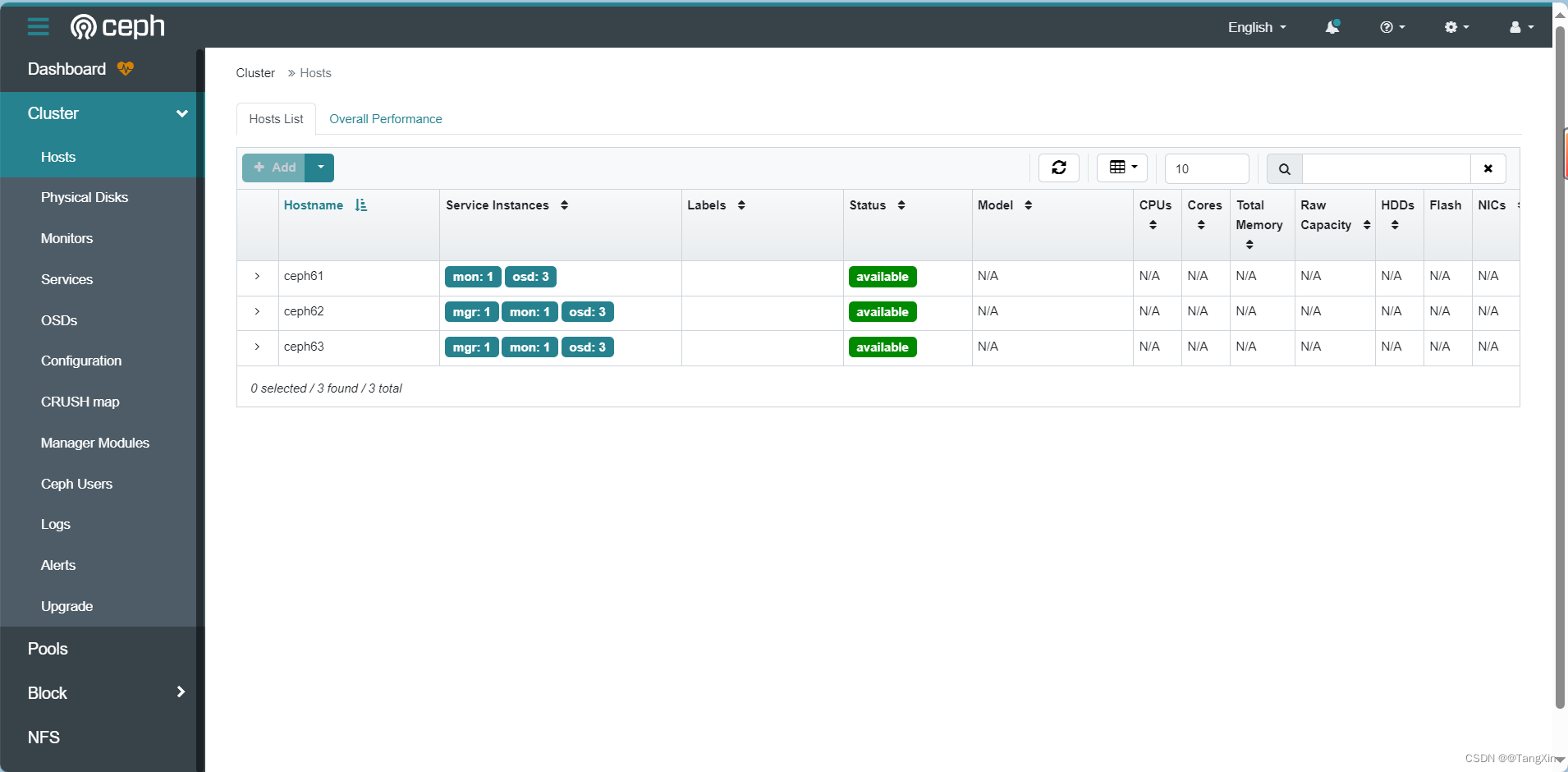

# Dashboard: check the dashboard Service; Rook enables port 8443 for HTTPS access

kubectl get svc -n rook-ceph

[root@ceph61 examples]# kubectl get svc -n rook-ceph

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

rook-ceph-exporter ClusterIP 10.104.154.115 <none> 9926/TCP 22m

rook-ceph-mgr ClusterIP 10.109.137.46 <none> 9283/TCP 22m

rook-ceph-mgr-dashboard ClusterIP 10.101.248.83 <none> 8443/TCP 22m

rook-ceph-mon-a ClusterIP 10.100.67.82 <none> 6789/TCP,3300/TCP 35m

rook-ceph-mon-b ClusterIP 10.97.62.97 <none> 6789/TCP,3300/TCP 34m

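rook-ceph-mon-c ClusterIP 10.99.101.215 <none> 6789/TCP,3300/TCP 29m

# rook-ceph-mgr: the Service for the Ceph Manager daemon, which handles cluster monitoring and status reporting and hosts modules such as the dashboard. Port 9283 is served by the mgr's Prometheus module, which exposes metrics in Prometheus format for Prometheus to scrape.

# rook-ceph-mgr-dashboard: the Service for the Ceph Dashboard, a web UI (a Ceph Manager module that Rook enables) for viewing the cluster's health, status and performance metrics.

# rook-ceph-mon-a/b/c: the Kubernetes Services for the Ceph Monitor daemons. Monitors are a core Ceph component: they maintain the cluster maps (cluster state, topology and data placement) and form the cluster's quorum.

# Get the password of the default admin account; it is used later to log in to the dashboard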

# kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o jsonpath="{['data']['password']}" | base64 --decode && echo

[root@ceph61 examples]# kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o jsonpath="{['data']['password']}" | base64 --decode && echo

2<rI.]qNXqGX#F2c-M@E

# Expose the dashboard with a NodePort Service: dashboard-external-https.yaml

[root@ceph61 examples]# cat dashboard-external-https.yaml

apiVersion: v1
kind: Service
metadata:
  name: rook-ceph-mgr-dashboard-external-https
  namespace: rook-ceph # namespace:cluster
  labels:
    app: rook-ceph-mgr
    rook_cluster: rook-ceph # namespace:cluster
spec:
  ports:
    - name: dashboard
      port: 8443
      protocol: TCP
      targetPort: 8443
  selector:
    app: rook-ceph-mgr
    mgr_role: active
    rook_cluster: rook-ceph # namespace:cluster
  sessionAffinity: None
  type: NodePort

# Create it

kubectl create -f dashboard-external-https.yaml

[root@ceph61 examples]# kubectl create -f dashboard-external-https.yaml

service/rook-ceph-mgr-dashboard-external-https created

[root@ceph61 examples]# kubectl get svc -n rook-ceph

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

rook-ceph-exporter ClusterIP 10.104.154.115 <none> 9926/TCP 28m

rook-ceph-mgr ClusterIP 10.109.137.46 <none> 9283/TCP 28m

rook-ceph-mgr-dashboard ClusterIP 10.101.248.83 <none> 8443/TCP 28m

rook-ceph-mgr-dashboard-external-https NodePort 10.97.245.134 <none> 8443:31222/TCP 20s

rook-ceph-mon-a ClusterIP 10.100.67.82 <none> 6789/TCP,3300/TCP 40m

rook-ceph-mon-b ClusterIP 10.97.62.97 <none> 6789/TCP,3300/TCP 40m

rook-ceph-mon-c ClusterIP 10.99.101.215 <none> 6789/TCP,3300/TCP 35m

# Access

https://192.168.120.61:31222/

Username: admin

Password: 2<rI.]qNXqGX#F2c-M@E
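The NodePort (31222 here) is allocated by Kubernetes when the Service is created, so it will usually differ on another cluster. A minimal sketch for building the login URL from the `kubectl get svc -n rook-ceph` output, assuming the Service name from dashboard-external-https.yaml; `dashboard_url` is a hypothetical helper and 192.168.120.61 is just this article's node IP. On a live cluster, `kubectl -n rook-ceph get svc rook-ceph-mgr-dashboard-external-https -o jsonpath='{.spec.ports[0].nodePort}'` returns the port directly.

```shell
#!/bin/sh
# Sketch: print the dashboard URL for a node IP, reading the output of
# `kubectl get svc -n rook-ceph` on stdin.
# Assumption: the Service is rook-ceph-mgr-dashboard-external-https (NodePort).
set -eu

dashboard_url() {
  awk -v ip="$1" '
    $1 == "rook-ceph-mgr-dashboard-external-https" && $2 == "NodePort" {
      split($5, p, "[/:]")              # "8443:31222/TCP" -> 8443, 31222, TCP
      printf "https://%s:%s/\n", ip, p[2]
      found = 1
    }
    END { exit (found ? 0 : 1) }'
}

# Usage:
#   kubectl get svc -n rook-ceph | dashboard_url 192.168.120.61
```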
