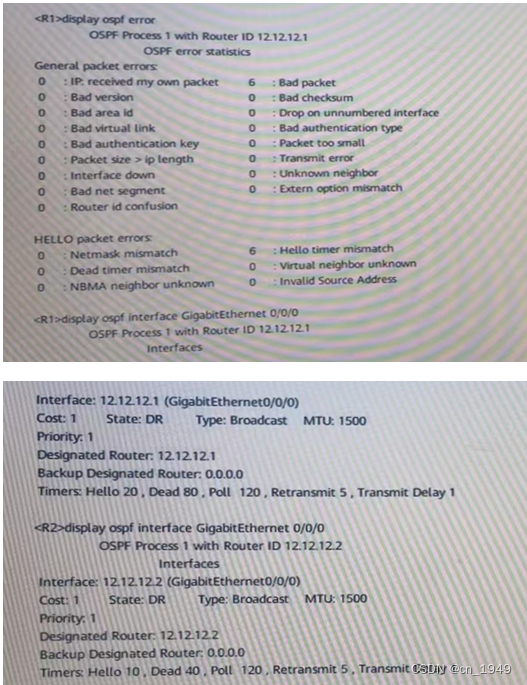

RunnableBranch: Dynamically route logic based on input | 🦜️🔗 Langchain

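Routing selects logic dynamically based on the input: the output of the previous step decides which step runs next, which makes it possible to build non-deterministic chains. Routing also keeps the chain structured and coherent across its branches.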

There are two ways to perform routing:

1. Using RunnableLambda (recommended)

2. Using RunnableBranch

from langchain_community.chat_models import ChatOpenAI
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import PromptTemplate

model = ChatOpenAI(model="gpt-3.5-turbo", temperature=0)

# Classification chain: labels the question as LangChain, OpenAI, or Other
chain = (
    PromptTemplate.from_template(
        """Given the user question below, classify it as being about `LangChain`, `OpenAI`, or `Other`. Do not respond with anything else.

<question>
{question}
</question>

Classification:
"""
    )
    | model
    | StrOutputParser()
)

response = chain.invoke({"question": "How do I call ChatOpenAI?"})

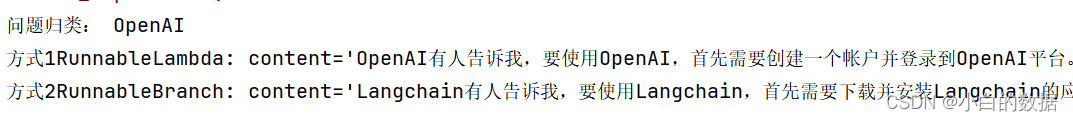

print("Question category:", response)
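The classifier returns free text, so the label can come back with different casing, surrounding backticks, or trailing punctuation. Below is a minimal sketch of mapping that text to a canonical label before routing. `normalize_topic` and `KNOWN_TOPICS` are hypothetical names used for illustration only; they are not part of LangChain.

```python
# Hypothetical label set; anything that matches neither entry becomes "other".
KNOWN_TOPICS = ("langchain", "openai")


def normalize_topic(raw: str) -> str:
    """Map free-text classifier output to a lowercase canonical label."""
    cleaned = raw.strip().lower()
    for topic in KNOWN_TOPICS:
        # Substring match tolerates backticks, quotes, and trailing punctuation.
        if topic in cleaned:
            return topic
    return "other"


print(normalize_topic(" `OpenAI`.\n"))
print(normalize_topic("LangChain"))
print(normalize_topic("Weather"))
```

This is the same idea the routing code relies on when it lowercases the topic and checks for a substring.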

# Define the LLM chains
langchain_chain = (
    PromptTemplate.from_template(
        """You are an expert in LangChain. \
Start your answer with "Someone at LangChain told me". \
Question: {question}
Answer:"""
    )
    | model
)

openai_chain = (
    PromptTemplate.from_template(
        """You are an expert in OpenAI. \
Start your answer with "Someone at OpenAI told me". \
Question: {question}
Answer:"""
    )
    | model
)

general_chain = (
    PromptTemplate.from_template(
        """Respond to the following question:

Question: {question}
Answer:"""
    )
    | model
)


# Pick the sub-chain that matches the topic returned by the classifier
def route(info):
    if "openai" in info["topic"].lower():
        return openai_chain
    elif "langchain" in info["topic"].lower():
        return langchain_chain
    else:
        return general_chain

# Method 1: RunnableLambda
from langchain_core.runnables import RunnableLambda

full_chain = {"topic": chain, "question": lambda x: x["question"]} | RunnableLambda(route)

response = full_chain.invoke({"question": "How do I use openAi?"})

print("Method 1, RunnableLambda:", response)
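To make the data flow of method 1 easier to follow, here is a plain-Python sketch that mimics it without calling any model. It assumes two LangChain behaviors: a dict placed in a chain runs each of its values on the same input and collects the results into a dict, and when the function wrapped by `RunnableLambda` returns a runnable, that runnable is then invoked with the same input. Every name below (`fake_classifier`, the `*_stub` functions, `run_parallel`, `run_routed`) is a hypothetical stand-in, not a LangChain API.

```python
from typing import Any, Callable, Dict


def fake_classifier(x: Dict[str, str]) -> str:
    """Stand-in for the classification chain (no LLM call)."""
    q = x["question"].lower()
    if "openai" in q:
        return "OpenAI"
    if "langchain" in q:
        return "LangChain"
    return "Other"


# Stand-ins for the three sub-chains; each receives {"topic": ..., "question": ...}.
def openai_stub(info: Dict[str, str]) -> str:
    return "openai_chain handled: " + info["question"]


def langchain_stub(info: Dict[str, str]) -> str:
    return "langchain_chain handled: " + info["question"]


def general_stub(info: Dict[str, str]) -> str:
    return "general_chain handled: " + info["question"]


def route(info: Dict[str, str]) -> Callable[[Dict[str, str]], str]:
    """Same decision logic as the article's route(), returning a stub."""
    topic = info["topic"].lower()
    if "openai" in topic:
        return openai_stub
    if "langchain" in topic:
        return langchain_stub
    return general_stub


def run_parallel(steps: Dict[str, Callable[[Any], Any]], x: Any) -> Dict[str, Any]:
    """Mimic the dict step: run every value on the same input."""
    return {key: step(x) for key, step in steps.items()}


def run_routed(router: Callable[[Any], Any], info: Any) -> Any:
    """Mimic the routing step: if the router returns a callable, call it with the same input."""
    result = router(info)
    return result(info) if callable(result) else result


steps = {"topic": fake_classifier, "question": lambda x: x["question"]}
info = run_parallel(steps, {"question": "How do I use openAi?"})
print(info)
print(run_routed(route, info))
```

The point of the sketch is that the router only chooses the next step; the chosen step still receives the full `{"topic", "question"}` dict.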

# Method 2: RunnableBranch
from langchain_core.runnables import RunnableBranch

# Conditions are checked in order; the last argument is the default branch
branch = RunnableBranch(
    (lambda x: "openai" in x["topic"].lower(), openai_chain),
    (lambda x: "langchain" in x["topic"].lower(), langchain_chain),
    general_chain,
)

full_chain = {"topic": chain, "question": lambda x: x["question"]} | branch

response = full_chain.invoke({"question": "How do I use lanGchaiN?"})

print("Method 2, RunnableBranch:", response)
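`RunnableBranch` takes a list of (condition, runnable) pairs plus a default. It evaluates the conditions in order, runs the runnable paired with the first condition that returns true, and falls back to the default when none match. The plain-Python sketch below illustrates that selection rule only; `run_branch` and the lambda handlers are hypothetical stand-ins, not the library's implementation.

```python
from typing import Any, Callable, Sequence, Tuple

Condition = Callable[[Any], bool]
Handler = Callable[[Any], Any]


def run_branch(pairs: Sequence[Tuple[Condition, Handler]], default: Handler, x: Any) -> Any:
    """Run the handler of the first matching condition, else the default."""
    for condition, handler in pairs:
        if condition(x):
            return handler(x)
    return default(x)


pairs = [
    (lambda x: "openai" in x["topic"].lower(), lambda x: "openai branch"),
    (lambda x: "langchain" in x["topic"].lower(), lambda x: "langchain branch"),
]
default = lambda x: "general branch"

print(run_branch(pairs, default, {"topic": "LangChain"}))
# Both conditions match here; the earlier pair wins.
print(run_branch(pairs, default, {"topic": "OpenAI and LangChain"}))
print(run_branch(pairs, default, {"topic": "Other"}))
```

Because the first match wins, the order of the pairs matters whenever a topic could satisfy more than one condition.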
