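Contents

Disclaimer

Task

File overview

Scraping Dangdang with a single pipeline

pipelines.py

items.py

settings

dang.py

Downloading images with multiple pipelines

pipelines.py

settings

Scraping multiple pages

dang.py

pipelines.py

settings

items.py

Summary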

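Disclaimer

This article is for learning purposes only and has no commercial use.

Some of the images in this article are from 尚硅谷.

Task

Scrape all product data from the Dangdang page "汽车用品_汽车用品【价格 品牌 推荐 正品折扣】-当当网" (the automotive supplies category).

File overview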

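In the Scrapy framework, pipelines and items are both tools for processing and storing scraped data.

- Items: An Item is a container for scraped data. It behaves much like a Python dictionary and can hold arbitrary fields and their values. In Scrapy you define your own Item class, create Item objects in the spider, and fill them with the scraped data. Items are then passed to the pipelines for further processing and storage.

- Pipelines: A pipeline is a component that processes and stores Item objects. Once the spider has filled an Item with data, the Item is passed through the pipelines, which can clean, deduplicate, validate, or store it. You can define several pipelines, and they run in priority order.

This project uses both.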
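To make the hand-off concrete, here is a minimal plain-Python sketch of an item passing through pipelines in priority order. It does not use Scrapy at all: the two pipeline classes, the `run_pipelines` helper, and the sample item are made up for illustration, and in a real project Scrapy does this wiring itself from the `ITEM_PIPELINES` setting.

```python
# Plain-Python simulation (no Scrapy required) of items flowing through pipelines.
# All names here are hypothetical and exist only for this illustration.


class StripWhitespacePipeline:
    """Cleans the item: trims surrounding whitespace from the name."""

    def process_item(self, item, spider):
        item["name"] = item["name"].strip()
        return item


class CollectPipeline:
    """Stores the item: keeps a copy of everything it receives."""

    def __init__(self):
        self.seen = []

    def process_item(self, item, spider):
        self.seen.append(dict(item))
        return item


def run_pipelines(item, pipelines_with_priority, spider=None):
    """Pass the item through every pipeline, lowest priority number first."""
    ordered = sorted(pipelines_with_priority.items(), key=lambda pair: pair[1])
    for pipeline, _priority in ordered:
        item = pipeline.process_item(item, spider)
    return item


collector = CollectPipeline()
# Declared out of order on purpose: 300 still runs before 301.
pipelines = {collector: 301, StripWhitespacePipeline(): 300}
result = run_pipelines({"name": "  car mat  ", "price": "99.00"}, pipelines)
print(result["name"])
print(collector.seen)
```

Because the cleaning pipeline has the lower number, the collecting pipeline only ever sees the cleaned item. Each `process_item` returns the item so that the next pipeline can receive it.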

Scraping Dangdang with a single pipeline

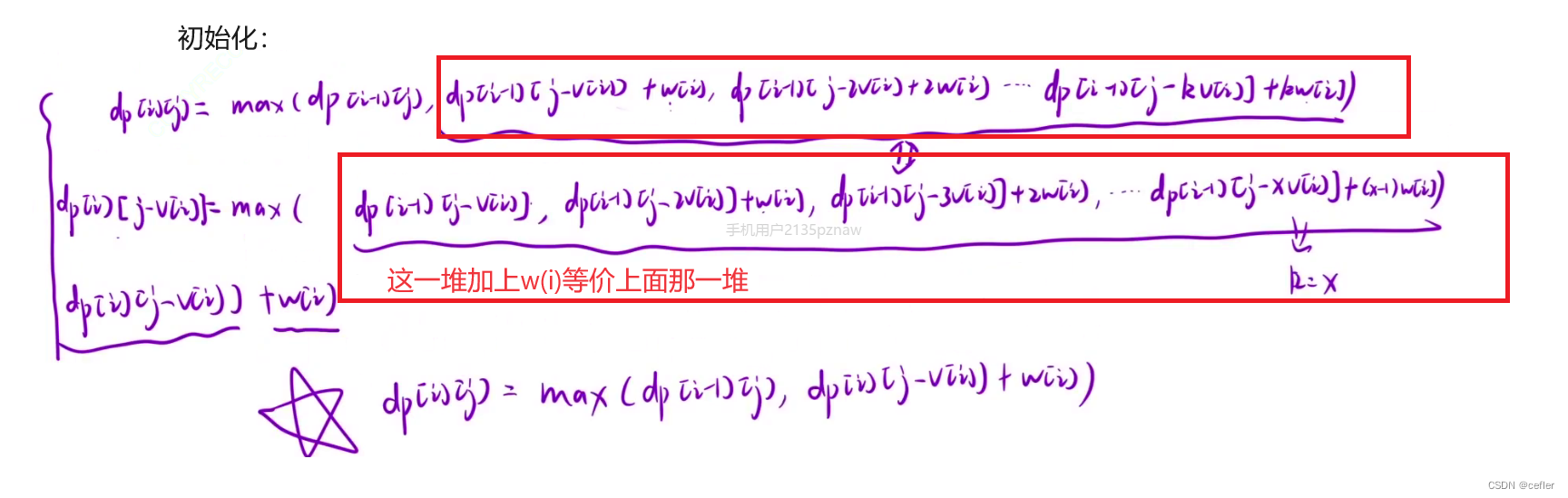

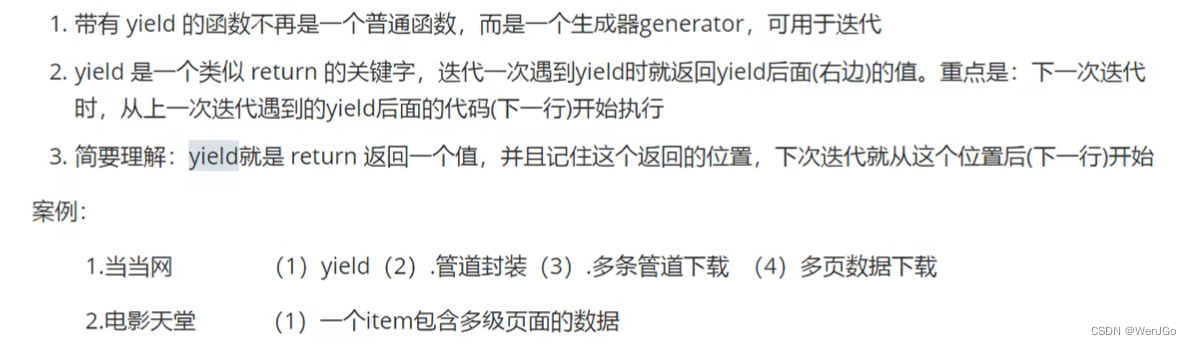

The images in this section are from 尚硅谷.

pipelines.py

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

    # useful for handling different item types with a single interface
    from itemadapter import ItemAdapter


    # A pipeline only runs if it is enabled in settings.
    class ScrapyDangdang060Pipeline:
        def open_spider(self, spider):
            """Runs once, before the spider starts."""
            print("++++++++++=========")
            self.fp = open('book.json', 'w', encoding='utf-8')

        # item is the book object yielded by the spider:
        # book = ScrapyDangdang060Item(src=src, name=name, price=price)
        def process_item(self, item, spider):
            # Not recommended: opening the file for every item that arrives
            # means far too many file operations.
            # (1) write() needs a string, not any other object.
            # (2) Mode 'w' would reopen the file for every item and overwrite
            #     the previous one, so this variant has to use 'a':
            # with open('book.json', 'a', encoding='utf-8') as fp:
            #     fp.write(str(item))

            # Writing to the handle opened in open_spider avoids reopening the file.
            self.fp.write(str(item))
            return item

        def close_spider(self, spider):
            """Runs once, after the spider finishes."""
            print("------------------==========")
            self.fp.close()

Uncomment ITEM_PIPELINES in settings to enable the pipeline:

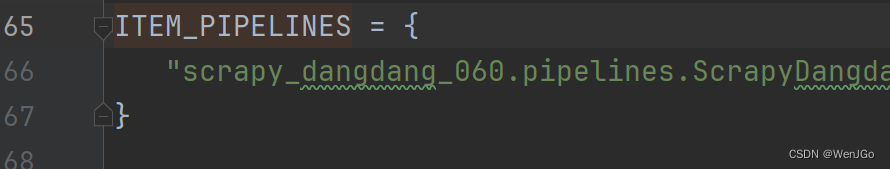

    ITEM_PIPELINES = {
        # There can be many pipelines, and each has a priority in the range 1~1000;
        # the lower the value, the higher the priority.
        "scrapy_dangdang_060.pipelines.ScrapyDangdang060Pipeline": 300,
    }

items.py

    # Define here the models for your scraped items
    #
    # See documentation in:
    # https://docs.scrapy.org/en/latest/topics/items.html

    import scrapy


    class ScrapyDangdang060Item(scrapy.Item):
        # define the fields for your item here like:
        # name = scrapy.Field()
        # Put simply: which pieces of data we want to download.
        # image
        src = scrapy.Field()
        # name
        name = scrapy.Field()
        # price
        price = scrapy.Field()

settings

    # Scrapy settings for scrapy_dangdang_060 project
    #
    # For simplicity, this file contains only settings considered important or
    # commonly used. You can find more settings consulting the documentation:
    #
    #     https://docs.scrapy.org/en/latest/topics/settings.html
    #     https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
    #     https://docs.scrapy.org/en/latest/topics/spider-middleware.html

    BOT_NAME = "scrapy_dangdang_060"

    SPIDER_MODULES = ["scrapy_dangdang_060.spiders"]
    NEWSPIDER_MODULE = "scrapy_dangdang_060.spiders"

    # Crawl responsibly by identifying yourself (and your website) on the user-agent
    #USER_AGENT = "scrapy_dangdang_060 (+http://www.yourdomain.com)"

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = True

    # Configure maximum concurrent requests performed by Scrapy (default: 16)
    #CONCURRENT_REQUESTS = 32

    # Configure a delay for requests for the same website (default: 0)
    # See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay
    # See also autothrottle settings and docs
    #DOWNLOAD_DELAY = 3
    # The download delay setting will honor only one of:
    #CONCURRENT_REQUESTS_PER_DOMAIN = 16
    #CONCURRENT_REQUESTS_PER_IP = 16

    # Disable cookies (enabled by default)
    #COOKIES_ENABLED = False

    # Disable Telnet Console (enabled by default)
    #TELNETCONSOLE_ENABLED = False

    # Override the default request headers:
    #DEFAULT_REQUEST_HEADERS = {
    #    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    #    "Accept-Language": "en",
    #}

    # Enable or disable spider middlewares
    # See https://docs.scrapy.org/en/latest/topics/spider-middleware.html
    #SPIDER_MIDDLEWARES = {
    #    "scrapy_dangdang_060.middlewares.ScrapyDangdang060SpiderMiddleware": 543,
    #}

    # Enable or disable downloader middlewares
    # See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
    #DOWNLOADER_MIDDLEWARES = {
    #    "scrapy_dangdang_060.middlewares.ScrapyDangdang060DownloaderMiddleware": 543,
    #}

    # Enable or disable extensions
    # See https://docs.scrapy.org/en/latest/topics/extensions.html
    #EXTENSIONS = {
    #    "scrapy.extensions.telnet.TelnetConsole": None,
    #}

    # Configure item pipelines
    # See https://docs.scrapy.org/en/latest/topics/item-pipeline.html
    # There can be many pipelines, and each has a priority in the range 1~1000;
    # the lower the value, the higher the priority.
    ITEM_PIPELINES = {
        "scrapy_dangdang_060.pipelines.ScrapyDangdang060Pipeline": 300,
    }

    # Enable and configure the AutoThrottle extension (disabled by default)
    # See https://docs.scrapy.org/en/latest/topics/autothrottle.html
    #AUTOTHROTTLE_ENABLED = True
    # The initial download delay
    #AUTOTHROTTLE_START_DELAY = 5
    # The maximum download delay to be set in case of high latencies
    #AUTOTHROTTLE_MAX_DELAY = 60
    # The average number of requests Scrapy should be sending in parallel to
    # each remote server
    #AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
    # Enable showing throttling stats for every response received:
    #AUTOTHROTTLE_DEBUG = False

    # Enable and configure HTTP caching (disabled by default)
    # See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
    #HTTPCACHE_ENABLED = True
    #HTTPCACHE_EXPIRATION_SECS = 0
    #HTTPCACHE_DIR = "httpcache"
    #HTTPCACHE_IGNORE_HTTP_CODES = []
    #HTTPCACHE_STORAGE = "scrapy.extensions.httpcache.FilesystemCacheStorage"

    # Set settings whose default value is deprecated to a future-proof value
    REQUEST_FINGERPRINTER_IMPLEMENTATION = "2.7"
    TWISTED_REACTOR = "twisted.internet.asyncioreactor.AsyncioSelectorReactor"
    FEED_EXPORT_ENCODING = "utf-8"

dang.py

    import scrapy

    # The IDE may flag this import as an error; that is an editor issue
    # and does not affect the code below.
    from scrapy_dangdang_060.items import ScrapyDangdang060Item


    class DangSpider(scrapy.Spider):
        name = "dang"
        allowed_domains = ["category.dangdang.com"]
        start_urls = ["https://category.dangdang.com/cid4002429.html"]

        def parse(self, response):
            print("===============success================")
            # pipelines: used to download (store) the data
            # items: define the data structure
            # src = //ul[@id="component_47"]/li//img/@src
            # alt = //ul[@id="component_47"]/li//img/@alt
            # price = //ul[@id="component_47"]/li//p/span/text()
            # Every Selector object can call xpath() again.
            li_list = response.xpath('//ul[@id="component_47"]/li')
            for li in li_list:
                # The page lazy-loads its images, so src cannot be relied on here.
                src = li.xpath('.//a//img/@data-original').extract_first()
                # The first image's tag attributes differ from the others:
                # its src is usable, while the rest keep the address in data-original.
                if not src:
                    src = li.xpath('.//a//img/@src').extract_first()
                name = li.xpath('.//img/@alt').extract_first()
                # /span/text()
                price = li.xpath('.//p[@class="price"]/span[1]/text()').extract_first()
                # print(src, name, price)
                book = ScrapyDangdang060Item(src=src, name=name, price=price)
                # Hand each book to the pipelines as soon as it is built.
                yield book

After this, running the spider produces book.json, which holds the data for every product on this Dangdang page. Note that the pipeline writes str(item), so the file contains the string form of each item rather than strictly valid JSON.
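The lazy-loading fallback in `parse` can also be written as a small standalone helper, which makes it easy to try without running the spider. This is only a sketch: `pick_image_url`, the attribute dictionaries, and the `img.example.com` host are assumptions for illustration, not part of the original project.

```python
from urllib.parse import urljoin

# Page the images were found on; used to resolve relative and "//host/..." URLs.
PAGE_URL = "https://category.dangdang.com/cid4002429.html"


def pick_image_url(img_attrs, page_url=PAGE_URL):
    """Prefer the lazy-load attribute, fall back to src, return an absolute URL."""
    raw = img_attrs.get("data-original") or img_attrs.get("src")
    if not raw:
        return None  # the <img> tag carried no usable address
    # urljoin turns a protocol-relative URL such as "//host/a.jpg"
    # into "https://host/a.jpg" by borrowing the scheme of page_url.
    return urljoin(page_url, raw)


# Hypothetical attribute sets, shaped like a lazy-loaded and an eager <img> tag.
lazy_img = {"src": "placeholder.png", "data-original": "//img.example.com/a.jpg"}
eager_img = {"src": "//img.example.com/b.jpg"}

print(pick_image_url(lazy_img))
print(pick_image_url(eager_img))
```

Inside a real spider, Scrapy's `response.urljoin(...)` resolves URLs against the response's own address in the same way, so the page URL would not need to be hard-coded.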

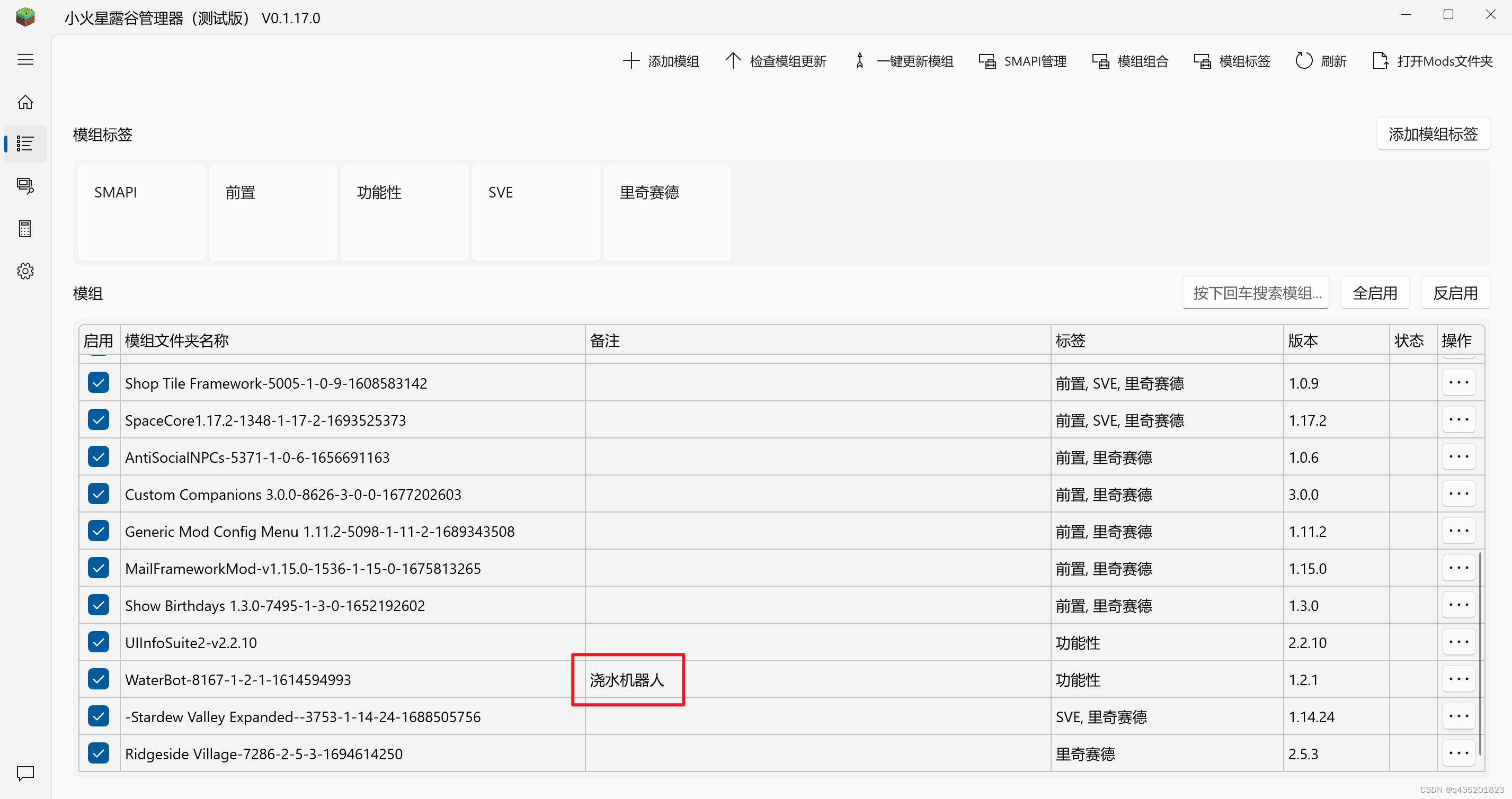
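If the output file needs to be machine-readable, one option is to write one JSON object per line (the JSON Lines convention) instead of `str(item)`. The sketch below is an assumption-laden variant of the pipeline, not the article's code: the class name `JsonLinesPipeline`, the `path` argument, and the file name `book.jsonl` are all hypothetical. It keeps the same open-once, write-many, close-once structure.

```python
import json
import os
import tempfile


class JsonLinesPipeline:
    """Hypothetical pipeline that writes each item as one JSON object per line."""

    def __init__(self, path="book.jsonl"):
        self.path = path

    def open_spider(self, spider):
        # Open the file once, when the spider starts.
        self.fp = open(self.path, "w", encoding="utf-8")

    def process_item(self, item, spider):
        # dict(item) works for plain dicts and for scrapy.Item objects alike.
        # ensure_ascii=False keeps Chinese product names readable in the file.
        self.fp.write(json.dumps(dict(item), ensure_ascii=False) + "\n")
        return item

    def close_spider(self, spider):
        # Close the file once, when the spider finishes.
        self.fp.close()


# Stand-alone demo with a made-up item; no Scrapy process is involved.
demo_path = os.path.join(tempfile.mkdtemp(), "book.jsonl")
pipeline = JsonLinesPipeline(demo_path)
pipeline.open_spider(spider=None)
pipeline.process_item({"name": "坐垫", "price": "99.00"}, spider=None)
pipeline.close_spider(spider=None)

with open(demo_path, encoding="utf-8") as fp:
    rows = [json.loads(line) for line in fp]
print(rows)
```

Each line can then be parsed independently with `json.loads`, which is convenient when the crawl yields many items.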

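Downloading images with multiple pipelines

Enabling a second pipeline takes two steps: (1) define the pipeline class; (2) enable it in settings by adding "scrapy_dangdang_060.pipelines.DangDangDownloadPipeline": 301, to ITEM_PIPELINES.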

pipelines.py

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

    import os
    import urllib.request

    # useful for handling different item types with a single interface
    from itemadapter import ItemAdapter


    # A pipeline only runs if it is enabled in settings.
    class ScrapyDangdang060Pipeline:
        def open_spider(self, spider):
            """Runs once, before the spider starts."""
            print("++++++++++=========")
            self.fp = open('book.json', 'w', encoding='utf-8')

        # item is the book object yielded by the spider:
        # book = ScrapyDangdang060Item(src=src, name=name, price=price)
        def process_item(self, item, spider):
            # Not recommended: opening the file for every item that arrives
            # means far too many file operations.
            # (1) write() needs a string, not any other object.
            # (2) Mode 'w' would reopen the file for every item and overwrite
            #     the previous one, so this variant has to use 'a':
            # with open('book.json', 'a', encoding='utf-8') as fp:
            #     fp.write(str(item))

            # Writing to the handle opened in open_spider avoids reopening the file.
            self.fp.write(str(item))
            return item

        def close_spider(self, spider):
            """Runs once, after the spider finishes."""
            print("------------------==========")
            self.fp.close()


    # Enabling multiple pipelines:
    # (1) define the pipeline class
    # (2) enable it in settings:
    #     "scrapy_dangdang_060.pipelines.DangDangDownloadPipeline": 301,
    class DangDangDownloadPipeline:
        def open_spider(self, spider):
            # urlretrieve does not create directories, so make sure ./books exists.
            os.makedirs('./books', exist_ok=True)

        def process_item(self, item, spider):
            url = 'https:' + item.get('src')
            filename = './books/' + item.get('name') + '.jpg'
            urllib.request.urlretrieve(url=url, filename=filename)
            return item

settings

    ITEM_PIPELINES = {
        "scrapy_dangdang_060.pipelines.ScrapyDangdang060Pipeline": 300,
        # DangDangDownloadPipeline
        "scrapy_dangdang_060.pipelines.DangDangDownloadPipeline": 301,
    }

Only these two files need to change; everything else stays the same.
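The download pipeline builds its URL and file name by plain string concatenation, which can fail if `src` already carries a scheme or if a product name contains characters that are not allowed in file names. The helpers below are a hedged sketch of one way to harden those two steps. `image_url`, `image_path`, the 80-character cap, and the sample inputs are assumptions for illustration, not part of the original code.

```python
import re
from pathlib import Path
from urllib.parse import urljoin


def image_url(src, base="https://category.dangdang.com/"):
    """Return an absolute URL whether src is '//host/x.jpg' or already 'https://...'."""
    return urljoin(base, src)


def image_path(name, folder="./books"):
    """Build a file path from a product name, replacing characters unsafe in file names."""
    # Collapse runs of path separators, reserved characters, and whitespace into "_".
    safe = re.sub(r'[\\/:*?"<>|\s]+', "_", name).strip("_")
    # Cap the length (an arbitrary choice) and never return an empty file name.
    safe = safe[:80] or "unnamed"
    return Path(folder) / f"{safe}.jpg"


print(image_url("//img.example.com/x.jpg"))
print(image_url("https://img.example.com/x.jpg"))
print(image_path("a/b: c", "books"))
```

In a pipeline, the result of `image_path(...)` could be passed to `urllib.request.urlretrieve` after creating the folder with `path.parent.mkdir(parents=True, exist_ok=True)`.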

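Scraping multiple pages

dang.py

Compare the URLs of the different pages to find what changes between them, then reuse the parse method to scrape each page.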
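For this category the only difference between pages is a `pgN-` prefix in the URL: page 1 is `cid4002429.html`, page 2 is `pg2-cid4002429.html`, and so on. A minimal sketch of that pattern as a function follows; the names `page_url`, `BASE`, and `CATEGORY` are hypothetical helpers, not part of the spider.

```python
BASE = "https://category.dangdang.com/"
CATEGORY = "cid4002429"


def page_url(page):
    """Return the listing URL for a 1-based page number."""
    if page < 1:
        raise ValueError("page numbers start at 1")
    # Page 1 has no prefix; later pages are prefixed with "pg<N>-".
    prefix = "" if page == 1 else f"pg{page}-"
    return f"{BASE}{prefix}{CATEGORY}.html"


for n in (1, 2, 3):
    print(page_url(n))
```

Keeping the pattern in one function makes it straightforward to check the generated URLs against the ones seen in the browser before wiring them into requests.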

    import scrapy

    # The IDE may flag this import as an error; that is an editor issue
    # and does not affect the code below.
    from scrapy_dangdang_060.items import ScrapyDangdang060Item


    class DangSpider(scrapy.Spider):
        name = "dang"
        # For multi-page crawling, allowed_domains may need to be widened;
        # usually only the domain name is listed.
        allowed_domains = ["category.dangdang.com"]
        start_urls = ["https://category.dangdang.com/cid4002429.html"]

        base_url = 'https://category.dangdang.com/pg'
        page = 1

        def parse(self, response):
            print("===============success================")
            # pipelines: used to download (store) the data
            # items: define the data structure
            # src = //ul[@id="component_47"]/li//img/@src
            # alt = //ul[@id="component_47"]/li//img/@alt
            # price = //ul[@id="component_47"]/li//p/span/text()
            # Every Selector object can call xpath() again.
            li_list = response.xpath('//ul[@id="component_47"]/li')
            for li in li_list:
                # The page lazy-loads its images, so src cannot be relied on here.
                src = li.xpath('.//a//img/@data-original').extract_first()
                # The first image's tag attributes differ from the others:
                # its src is usable, while the rest keep the address in data-original.
                if not src:
                    src = li.xpath('.//a//img/@src').extract_first()
                name = li.xpath('.//img/@alt').extract_first()
                # /span/text()
                price = li.xpath('.//p[@class="price"]/span[1]/text()').extract_first()
                # print(src, name, price)
                book = ScrapyDangdang060Item(src=src, name=name, price=price)
                # Hand each book to the pipelines as soon as it is built.
                yield book

            # Every page is scraped the same way, so we only need to request
            # the next page and let parse handle the response again.
            # Page 1: https://category.dangdang.com/cid4002429.html
            # Page 2: https://category.dangdang.com/pg2-cid4002429.html
            # Page 3: https://category.dangdang.com/pg3-cid4002429.html
            if self.page < 100:
                self.page = self.page + 1
                url = self.base_url + str(self.page) + '-cid4002429.html'
                # scrapy.Request issues a GET request.
                # Pass self.parse itself as the callback; do not add parentheses ().
                yield scrapy.Request(url=url, callback=self.parse)

pipelines.py

pipelines.py is unchanged from the multi-pipeline section above.

settings

settings is unchanged from the multi-pipeline section: the file is the same as in the single-pipeline section, with both pipelines enabled in ITEM_PIPELINES.

items.py

items.py is unchanged from the single-pipeline section above.

Summary

It's hard, but a man can't admit it's hard ┭┮﹏┭┮

ヾ( ̄▽ ̄)Bye~Bye~
