Preliminary Round: Baseline Walkthrough

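- I. Model architecture diagram

- 1. Architecture walkthrough

- 2. Evaluation metric

- II. Code walkthrough

- 1. Loading the dataset

- 2. Model definition

- 3. Model training

- 4. Prediction

- III. Final thoughts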

I. Model architecture diagram

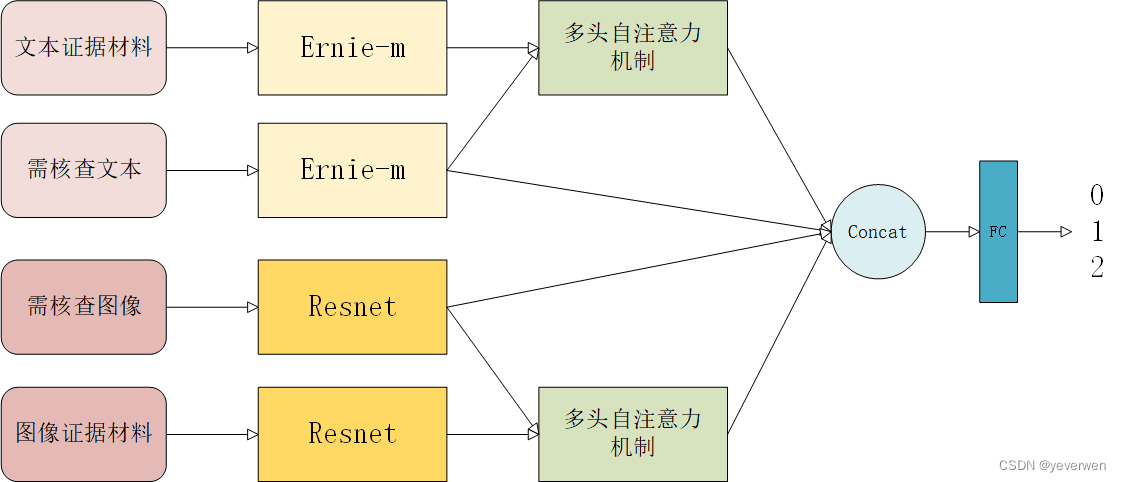

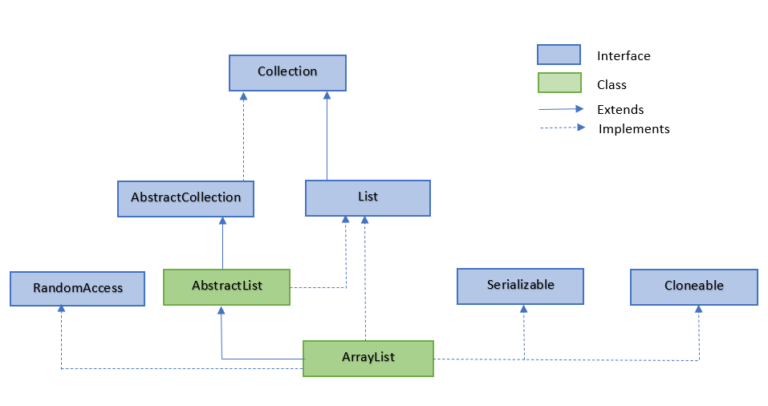

1. Architecture walkthrough

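The first column is the input. Part of it is text (the text to be verified and the textual evidence), and part of it is images (the image to be verified and the image evidence).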

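The second column holds the pre-trained models used for feature extraction: ERNIE-M (`ernie-m-base`) extracts the text features and ResNet (ResNet-101 in the code) extracts the image features.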

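The third column is multi-head attention. In the code it works as cross-attention: the features of the text and image to be verified serve as queries over the evidence features, which yields the relevant text-evidence features and the relevant image-evidence features.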
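To make the third column concrete, here is a minimal single-head NumPy sketch of what `nn.MultiHeadAttention(query, key, value)` computes in the baseline's `forward`: the claim features are the queries, the evidence features are keys and values, so the output keeps the query's shape. Assumptions: there are no learned Q/K/V/output projections and only one head (the real layer has both the projections and 16 heads), and `cross_attention` is a hypothetical helper name.

```python
import numpy as np


def softmax(x, axis=-1):
    # subtract the row max for numerical stability
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(query, key, value):
    """Scaled dot-product attention without learned projections (hypothetical helper).

    query: (b, Lq, d); key, value: (b, Lk, d) -> output: (b, Lq, d)
    """
    d = query.shape[-1]
    scores = query @ key.transpose(0, 2, 1) / np.sqrt(d)  # (b, Lq, Lk)
    weights = softmax(scores, axis=-1)                      # each query row sums to 1
    return weights @ value, weights                          # (b, Lq, d), (b, Lq, Lk)


rng = np.random.default_rng(0)
q_img = rng.standard_normal((2, 1, 2048))     # claim image feature: (b, 1, 2048)
evidence = rng.standard_normal((2, 5, 2048))  # 5 evidence images:   (b, n, 2048)
out, w = cross_attention(q_img, evidence, evidence)
print(out.shape, w.shape)  # (2, 1, 2048) (2, 1, 5)
```

Because the weights over the evidence sum to 1, the output is a weighted average of the evidence features; that is the sense in which the attended vector is the "relevant" evidence feature.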

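Finally, the four feature blocks (claim text features, claim image features, relevant text-evidence features and relevant image-evidence features) are concatenated and fed to a classifier made of fully connected layers, which produces the final prediction.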
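The feature sizes explain why the baseline's first classifier layer is `nn.Linear(2*(768+2048), 1024)`: two 768-d ERNIE-M vectors (the first-token positions of the claim text and of the attended text evidence) plus two 2048-d ResNet vectors. Below is a NumPy shape check of that fusion head; the random weights and the batch of 4 are placeholders, not trained values.

```python
import numpy as np

rng = np.random.default_rng(0)
b = 4  # batch size used by the baseline's train loader
qcap_cls = rng.standard_normal((b, 768))    # claim text, first-token position
caps_cls = rng.standard_normal((b, 768))    # attended text evidence, first-token position
qimg = rng.standard_normal((b, 2048))       # claim image (pooled ResNet-101 feature)
imgs_att = rng.standard_normal((b, 2048))   # attended image evidence

feature = np.concatenate([qcap_cls, caps_cls, qimg, imgs_att], axis=-1)
assert feature.shape[-1] == 2 * (768 + 2048)  # 5632

# two stacked linear layers mirroring classifier1 -> classifier2
# (the baseline puts no activation between them)
W1, b1 = rng.standard_normal((5632, 1024)) * 0.01, np.zeros(1024)
W2, b2 = rng.standard_normal((1024, 3)) * 0.01, np.zeros(3)
logits = (feature @ W1 + b1) @ W2 + b2
print(logits.shape)  # (4, 3): one score per class
```

With no nonlinearity between them, the two layers compose into a single linear map; inserting an activation is a common tweak, though whether it helps on this task is untested here.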

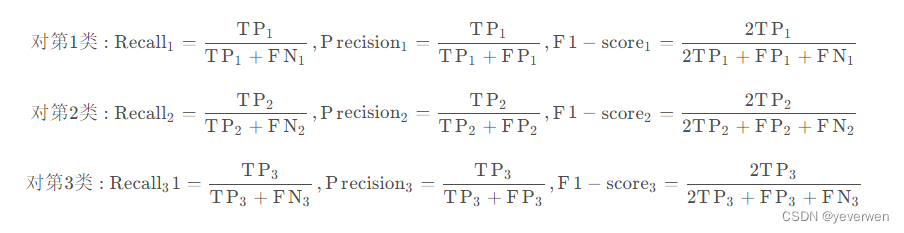

2. Evaluation metric

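Submissions are scored by macro-F1 over the three classes. F1 balances precision and recall, and it is the mainstream automatic evaluation metric in rumor detection.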

In sklearn, macro-F1 (`f1_score(..., average='macro')`) is the arithmetic mean of the per-class F1 scores, which matches the competition's definition.

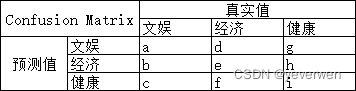

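The task has three classes: non-rumor, rumor and unverified (see the `id2name` mapping in the prediction code). F1 is computed for each class first, and then the three values are averaged.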

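Computing F1 starts from the confusion matrix (the figure above). Its entries a to i are read row by row, with rows as the true class and columns as the predicted class: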

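TP_i (True Positive of class i): a positive (class i) sample predicted as positive;

FP_i (False Positive of class i): a negative sample predicted as positive;

FN_i (False Negative of class i): a positive sample predicted as negative;

TN_i (True Negative of class i): a negative sample predicted as negative.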

For class 1: TP_1 = a; FP_1 = d + g; FN_1 = b + c; TN_1 = e + f + h + i;

For class 2: TP_2 = e; FP_2 = b + h; FN_2 = d + f; TN_2 = a + c + g + i;

For class 3: TP_3 = i; FP_3 = c + f; FN_3 = g + h; TN_3 = a + b + d + e.
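With these counts, per-class precision, recall and F1 follow the standard definitions (TN_i does not enter F1):

$$
P_i = \frac{TP_i}{TP_i + FP_i}, \qquad
R_i = \frac{TP_i}{TP_i + FN_i}, \qquad
\text{F1-score}_i = \frac{2\,P_i R_i}{P_i + R_i}
$$

For class 1, for example, $P_1 = \frac{a}{a+d+g}$ and $R_1 = \frac{a}{a+b+c}$.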

The final formula is:

$$\text{macro-F1} = \frac{\text{F1-score}_1 + \text{F1-score}_2 + \text{F1-score}_3}{3}$$

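Taking the rumor class as an example: recall is the number of correctly predicted rumors divided by the number of true rumors; precision is the number of correctly predicted rumors divided by the number of samples predicted as rumor.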
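The calculation above can be checked with a small pure-Python sketch. It assumes the layout implied by the TP/FP/FN formulas (rows are true classes, columns are predicted classes, i.e. `[[a, b, c], [d, e, f], [g, h, i]]`), which is also the convention of `sklearn.metrics.confusion_matrix`. The counts are made-up numbers for illustration, and `macro_f1` is a hypothetical helper name.

```python
def macro_f1(cm):
    """Macro-F1 from a square confusion matrix (rows = true class, cols = predicted class)."""
    k = len(cm)
    f1s = []
    for i in range(k):
        tp = cm[i][i]
        fp = sum(cm[r][i] for r in range(k)) - tp  # column i minus the diagonal (d + g for class 1)
        fn = sum(cm[i]) - tp                       # row i minus the diagonal (b + c for class 1)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        f1s.append(f1)
    return sum(f1s) / k, f1s


# made-up counts, laid out as a..i row by row
cm = [[50, 5, 5],
      [10, 30, 10],
      [0, 10, 40]]
score, per_class = macro_f1(cm)
print([round(f, 4) for f in per_class], round(score, 4))  # [0.8333, 0.6316, 0.7619] 0.7423
```

On real prediction lists, `sklearn.metrics.f1_score(y_true, y_pred, average="macro")` returns the same unweighted mean of per-class F1.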

II. Code walkthrough

1. Loading the dataset

```python
#### load Datasets ####
train_dataset = NewsContextDatasetEmbs(data_items_train, 'queries_dataset_merge', 'train')
val_dataset = NewsContextDatasetEmbs(data_items_val, 'queries_dataset_merge', 'val')
test_dataset = NewsContextDatasetEmbs(data_items_test, 'queries_dataset_merge', 'test')
```

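The training, validation and test sets are all created with the NewsContextDatasetEmbs class.

Its three arguments are the data loaded from the JSON file, the root directory of the dataset, and the split name ('train', 'val' or 'test').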

1) NewsContextDatasetEmbs

```python
import imghdr  # note: deprecated since Python 3.11 and removed in 3.13
import json
import os

import paddle
import paddle.vision.transforms as T
from paddle.io import Dataset
from PIL import Image

# process_string() is a text-cleaning helper defined elsewhere in the baseline notebook


class NewsContextDatasetEmbs(Dataset):
    def __init__(self, context_data_items_dict, queries_root_dir, split):
        self.context_data_items_dict = context_data_items_dict
        self.queries_root_dir = queries_root_dir
        self.idx_to_keys = list(context_data_items_dict.keys())
        # Preprocessing with ImageNet statistics:
        # resize the shorter side to 256, center-crop to 224x224,
        # convert to a tensor (values scaled from [0, 255] to [0, 1]),
        # then normalize with mean = [0.485, 0.456, 0.406], std = [0.229, 0.224, 0.225]
        self.transform = T.Compose([
            T.Resize(256),
            T.CenterCrop(224),
            T.ToTensor(),
            T.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
        ])
        self.split = split

    def __len__(self):
        return len(self.context_data_items_dict)

    def load_img_pil(self, image_path):
        # Read with PIL and force 3 channels: models usually accept RGB only
        # (an extra channel is typically alpha/transparency).
        # imghdr guesses the format from the file header; GIFs are read defensively.
        if imghdr.what(image_path) == 'gif':
            try:
                with open(image_path, 'rb') as f:
                    img = Image.open(f)
                    return img.convert('RGB')
            except Exception:
                return None
        with open(image_path, 'rb') as f:
            img = Image.open(f)
            return img.convert('RGB')

    def load_imgs_direct_search(self, item_folder_path, direct_dict):
        # Load the evidence images found by direct search and stack them into one tensor
        list_imgs_tensors = []
        keys_to_check = ['images_with_captions', 'images_with_no_captions', 'images_with_caption_matched_tags']
        for key1 in keys_to_check:
            if key1 in direct_dict:
                for page in direct_dict[key1]:
                    image_path = os.path.join(item_folder_path, page['image_path'].split('/')[-1])
                    pil_img = None  # reset so a failed read is skipped instead of reusing the last image
                    try:
                        pil_img = self.load_img_pil(image_path)  # 3-channel RGB image
                    except Exception as e:
                        print(e)
                        print(image_path)
                    if pil_img is None:
                        continue
                    list_imgs_tensors.append(self.transform(pil_img))  # unified 3x224x224 tensor
        # paddle.stack raises if no image of this item could be loaded
        return paddle.stack(list_imgs_tensors, axis=0)

    def load_captions(self, inv_dict):
        # Text from inverse_search: titles/captions found by matching the query image
        captions = ['']
        pages_with_captions_keys = ['all_fully_matched_captions', 'all_partially_matched_captions']
        for key1 in pages_with_captions_keys:
            if key1 in inv_dict:
                for page in inv_dict[key1]:
                    if 'title' in page:  # pages with a title
                        captions.append(process_string(page['title']))
                    if 'caption' in page:  # pages with captions
                        sub_captions_list = []
                        unfiltered_captions = []
                        for key2 in page['caption']:
                            sub_caption = page['caption'][key2]
                            # process_string strips single quotes and bold-font HTML tags.
                            # Open questions: why replace them, does it lose information,
                            # could more be filtered, or is there a better approach?
                            sub_caption_filter = process_string(sub_caption)
                            if sub_caption in unfiltered_captions:
                                continue  # skip captions that were already added
                            sub_captions_list.append(sub_caption_filter)
                            unfiltered_captions.append(sub_caption)
                        captions = captions + sub_captions_list
        # pages that only carry a title are handled separately
        pages_with_title_only_keys = ['partially_matched_no_text', 'fully_matched_no_text']
        for key1 in pages_with_title_only_keys:
            if key1 in inv_dict:
                for page in inv_dict[key1]:
                    if 'title' in page:
                        captions.append(process_string(page['title']))
        return captions

    def load_captions_weibo(self, direct_dict):
        # Text under img_html_news: titles/captions of the images found via the query text
        captions = ['']
        keys = ['images_with_captions', 'images_with_no_captions', 'images_with_caption_matched_tags']
        for key1 in keys:
            if key1 in direct_dict:
                for page in direct_dict[key1]:
                    if 'page_title' in page:
                        captions.append(process_string(page['page_title']))
                    if 'caption' in page:
                        sub_captions_list = []
                        unfiltered_captions = []
                        for key2 in page['caption']:
                            sub_caption = page['caption'][key2]
                            if sub_caption in unfiltered_captions:
                                continue
                            sub_captions_list.append(process_string(sub_caption))
                            unfiltered_captions.append(sub_caption)
                        captions = captions + sub_captions_list
        return captions

    def load_queries(self, key):
        # The claim itself (dataset_items_*.json plus the img folder): transformed image and caption
        caption = self.context_data_items_dict[key]['caption']
        image_path = os.path.join(self.queries_root_dir, self.context_data_items_dict[key]['image_path'])
        pil_img = self.load_img_pil(image_path)
        transform_img = self.transform(pil_img)
        return transform_img, caption

    def __getitem__(self, idx):
        key = self.idx_to_keys[idx]  # look up the key for this index
        item = self.context_data_items_dict.get(str(key))
        direct_path_item = os.path.join(self.queries_root_dir, item['direct_path'])
        inverse_path_item = os.path.join(self.queries_root_dir, item['inv_path'])
        with open(os.path.join(inverse_path_item, 'inverse_annotation.json'), 'r', encoding='UTF8') as f:
            inv_ann_dict = json.load(f)
        with open(os.path.join(direct_path_item, 'direct_annotation.json'), 'r', encoding='UTF8') as f:
            direct_dict = json.load(f)
        captions = self.load_captions(inv_ann_dict)
        captions += self.load_captions_weibo(direct_dict)
        imgs = self.load_imgs_direct_search(direct_path_item, direct_dict)
        qImg, qCap = self.load_queries(key)
        sample = {'caption': captions, 'imgs': imgs, 'qImg': qImg, 'qCap': qCap}
        # only train and val have a label; the test split has none
        if self.split in ('train', 'val'):
            sample['label'] = paddle.to_tensor(int(item['label']))
        # sample (evidence texts, evidence images, query image, query text), number of captions, number of images
        return sample, len(captions), imgs.shape[0]
```
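The comments in `__init__` describe the image preprocessing. As a rough NumPy sketch of the per-pixel arithmetic that `T.ToTensor()` followed by `T.Normalize(mean, std)` applies (resize and crop omitted; `to_tensor_and_normalize` is a hypothetical helper): values are scaled from [0, 255] to [0, 1], then standardized per channel with the ImageNet statistics.

```python
import numpy as np

MEAN = np.array([0.485, 0.456, 0.406])  # ImageNet per-channel mean (R, G, B)
STD = np.array([0.229, 0.224, 0.225])   # ImageNet per-channel std


def to_tensor_and_normalize(img_hwc_uint8):
    """HWC uint8 image -> CHW float: scale to [0, 1], then standardize per channel."""
    x = img_hwc_uint8.astype(np.float32) / 255.0  # ToTensor: [0, 255] -> [0, 1]
    x = (x - MEAN) / STD                          # Normalize: (x - mean) / std, broadcast over H and W
    return x.transpose(2, 0, 1)                   # HWC -> CHW


black = np.zeros((224, 224, 3), dtype=np.uint8)
white = np.full((224, 224, 3), 255, dtype=np.uint8)
print(to_tensor_and_normalize(black)[:, 0, 0])  # approx [-2.118 -2.036 -1.804]
print(to_tensor_and_normalize(white)[:, 0, 0])  # approx [ 2.249  2.429  2.640]
```

So the network sees values roughly in [-2.1, 2.6] rather than [0, 1], matching the input statistics of the ImageNet-pretrained ResNet.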

2) DataLoader

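The datasets are passed to DataLoader, which reads the data in batches.

dataset: the dataset to read from

batch_size: the number of samples in each batch

shuffle: whether to reshuffle the data at the start of every epoch

collate_fn: how to assemble a list of samples into a mini-batch. It must be a callable that implements the batching logic and returns the data of each batch; here it is collate_context_bert_train (train and val) or collate_context_bert_test (test).

return_list: whether to return the data as a list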

```python
# load DataLoader
from paddle.io import DataLoader

train_dataloader = DataLoader(train_dataset, batch_size=4, shuffle=True, collate_fn=collate_context_bert_train, return_list=True)
val_dataloader = DataLoader(val_dataset, batch_size=4, shuffle=False, collate_fn=collate_context_bert_train, return_list=True)
test_dataloader = DataLoader(test_dataset, batch_size=2, shuffle=False, collate_fn=collate_context_bert_test, return_list=True)
```

There are two mini-batch (collate) functions, implemented as follows:

```python
import paddle

# The number of captions and evidence images differs between samples, so a custom
# collate function pads them to a common size before stacking them into a batch.


def _pad_samples(batch):
    # Shared by both collate functions: pad captions with "" and evidence images
    # with all-zero images up to the largest counts in the batch
    samples = [item[0] for item in batch]
    max_captions_len = max(item[1] for item in batch)
    max_images_len = max(item[2] for item in batch)
    qCap_batch, qImg_batch, img_batch, cap_batch = [], [], [], []
    for sample in samples:
        captions = sample['caption']
        captions.extend([""] * (max_captions_len - len(captions)))
        imgs = sample['imgs']
        # works for image stacks (n, 3, 224, 224) as well as feature stacks (n, dim)
        padding_size = [max_images_len - imgs.shape[0]] + list(imgs.shape[1:])
        img_batch.append(paddle.concat((imgs, paddle.zeros(padding_size)), axis=0))  # padded evidence images
        cap_batch.append(captions)
        qImg_batch.append(sample['qImg'])  # [3, 224, 224]
        qCap_batch.append(sample['qCap'])
    img_batch = paddle.stack(img_batch, axis=0)
    qImg_batch = paddle.stack(qImg_batch, axis=0)
    return samples, cap_batch, img_batch, qCap_batch, qImg_batch


def collate_context_bert_train(batch):
    samples, cap_batch, img_batch, qCap_batch, qImg_batch = _pad_samples(batch)
    labels = paddle.stack([sample['label'] for sample in samples], axis=0)
    return labels, cap_batch, img_batch, qCap_batch, qImg_batch


def collate_context_bert_test(batch):
    _, cap_batch, img_batch, qCap_batch, qImg_batch = _pad_samples(batch)
    return cap_batch, img_batch, qCap_batch, qImg_batch
```
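The core idea of both collate functions (pad each sample's caption list with empty strings and its evidence-image stack with all-zero images up to the longest sample in the batch) can be sketched framework-free in NumPy. `pad_batch` is a hypothetical helper, and small 8×8 arrays stand in for the real 3×224×224 image tensors.

```python
import numpy as np


def pad_batch(caption_lists, image_stacks):
    """Pad captions with "" and image stacks with zero images up to the batch maximum."""
    max_caps = max(len(c) for c in caption_lists)
    max_imgs = max(s.shape[0] for s in image_stacks)
    padded_caps = [c + [""] * (max_caps - len(c)) for c in caption_lists]
    padded_imgs = []
    for s in image_stacks:
        pad = np.zeros((max_imgs - s.shape[0],) + s.shape[1:], dtype=s.dtype)
        padded_imgs.append(np.concatenate([s, pad], axis=0))
    return padded_caps, np.stack(padded_imgs, axis=0)  # (b, max_imgs, C, H, W)


caps = [["", "caption A"], ["", "caption B", "caption C", "caption D"]]
imgs = [np.ones((3, 3, 8, 8), dtype=np.float32), np.ones((1, 3, 8, 8), dtype=np.float32)]
padded_caps, img_batch = pad_batch(caps, imgs)
print([len(c) for c in padded_caps], img_batch.shape)  # [4, 4] (2, 3, 3, 8, 8)
```

In the baseline, the padded empty captions and zero images are then encoded like real evidence and no attention mask is passed, so padding can somewhat dilute the averaged caption features and the attended image features.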

2. Model definition

The main part is NetWork. ErnieMModel is a pre-trained model loaded from PaddleNLP, so we do not write its forward ourselves; we only call it.

```python
import paddle
import paddle.nn as nn
import paddle.vision.models as models
from paddlenlp.transformers import ErnieMModel, ErnieMTokenizer


class EncoderCNN(nn.Layer):
    # ResNet-101 without its classification head, used as an image feature extractor
    def __init__(self, resnet_arch='resnet101'):
        super(EncoderCNN, self).__init__()
        if resnet_arch != 'resnet101':
            raise ValueError('only resnet101 is supported')
        resnet = models.resnet101(pretrained=True)
        modules = list(resnet.children())[:-2]  # drop the final avgpool and fc layers
        self.resnet = nn.Sequential(*modules)
        self.adaptive_pool = nn.AdaptiveAvgPool2D((1, 1))

    def forward(self, images, features='pool'):
        out = self.resnet(images)  # (n, 2048, 7, 7) for 224x224 inputs
        if features == 'pool':
            out = self.adaptive_pool(out)  # (n, 2048, 1, 1)
            out = paddle.reshape(out, (out.shape[0], out.shape[1]))  # (n, 2048)
        return out


class NetWork(nn.Layer):
    def __init__(self, mode):
        super(NetWork, self).__init__()
        self.mode = mode
        self.ernie = ErnieMModel.from_pretrained('ernie-m-base')
        self.tokenizer = ErnieMTokenizer.from_pretrained('ernie-m-base')
        self.resnet = EncoderCNN()
        # fused feature: 768 (claim text) + 768 (text evidence) + 2048 (claim image) + 2048 (image evidence)
        self.classifier1 = nn.Linear(2 * (768 + 2048), 1024)
        self.classifier2 = nn.Linear(1024, 3)
        # the claim features are the queries, the evidence features the keys/values
        self.attention_text = nn.MultiHeadAttention(768, 16)
        self.attention_image = nn.MultiHeadAttention(2048, 16)
        if self.mode == 'text':
            self.classifier = nn.Linear(768, 3)
        self.resnet.eval()

    def forward(self, qCap, qImg, caps, imgs):
        # keep ResNet's BatchNorm in inference mode (running stats are not updated);
        # its weights still receive gradients
        self.resnet.eval()
        encode_dict_qcap = self.tokenizer(text=qCap, max_length=128, truncation=True, padding='max_length')
        input_ids_qcap = paddle.to_tensor(encode_dict_qcap['input_ids'])
        qcap_feature, pooled_output = self.ernie(input_ids_qcap)  # (b, length, dim)
        if self.mode == 'text':
            # text-only variant: classify from the first-token position of the claim text
            return self.classifier(qcap_feature[:, 0, :])

        caps_feature = []
        for caption in caps:  # one padded caption list per sample
            encode_dict_cap = self.tokenizer(text=caption, max_length=128, truncation=True, padding='max_length')
            input_ids_caps = paddle.to_tensor(encode_dict_cap['input_ids'])
            cap_feature, pooled_output = self.ernie(input_ids_caps)  # (num, length, dim)
            caps_feature.append(cap_feature)
        caps_feature = paddle.stack(caps_feature, axis=0)  # (b, num, length, dim)
        caps_feature = caps_feature.mean(axis=1)  # average over captions: (b, length, dim)
        caps_feature = self.attention_text(qcap_feature, caps_feature, caps_feature)  # (b, length, dim)

        imgs_features = []
        for img in imgs:  # (num_imgs, 3, 224, 224) for one sample
            imgs_features.append(self.resnet(img))  # (num_imgs, 2048)
        imgs_features = paddle.stack(imgs_features, axis=0)  # (b, num_imgs, 2048)

        qImg_features = []
        for qImage in qImg:
            qImg_features.append(self.resnet(qImage.unsqueeze(axis=0)))  # (1, 2048)
        qImg_feature = paddle.stack(qImg_features, axis=0)  # (b, 1, 2048)
        imgs_features = self.attention_image(qImg_feature, imgs_features, imgs_features)  # (b, 1, 2048)

        # shapes: (b, 768), (b, 768), (b, 2048), (b, 2048)
        feature = paddle.concat(
            x=[qcap_feature[:, 0, :], caps_feature[:, 0, :], qImg_feature.squeeze(1), imgs_features.squeeze(1)],
            axis=-1)
        logits = self.classifier1(feature)
        logits = self.classifier2(logits)  # no activation between the two layers in the baseline
        return logits


model = NetWork("image")
```

3. Model training

Training setup: number of epochs, learning-rate schedule, optimizer, loss function and evaluation metric.

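```python
from paddlenlp.transformers import LinearDecayWithWarmup

# train_setting
epochs = 2  # 2 epochs; one set of model parameters is saved per epoch
num_training_steps = len(train_dataloader) * epochs
warmup_steps = int(num_training_steps * 0.1)  # warm up over the first 10% of steps
print(num_training_steps, warmup_steps)  # 5592 559

# learning-rate scheduler: adjusts lr during training (peak lr 1e-6)
lr_scheduler = LinearDecayWithWarmup(1e-6, num_training_steps, warmup_steps)
```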
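`LinearDecayWithWarmup` raises the learning rate linearly from 0 to the peak over the first `warmup_steps` steps, then decays it linearly back to 0 at `num_training_steps`. Below is a pure-Python sketch of that shape using the step counts printed above (5592 total, 559 warmup) and the 1e-6 peak. It mirrors the schedule's documented behavior but is not PaddleNLP's code; `linear_decay_with_warmup` is a hypothetical name.

```python
def linear_decay_with_warmup(step, peak_lr, total_steps, warmup_steps):
    """Learning rate at `step`: linear warmup to peak_lr, then linear decay to 0."""
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    return peak_lr * max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


total, warmup, peak = 5592, 559, 1e-6
for s in (0, 279, 559, 3000, 5592):
    print(s, linear_decay_with_warmup(s, peak, total, warmup))
```

The 1e-6 peak applies to every parameter, including the freshly initialized attention and classifier layers, which makes it a natural candidate for the parameter tuning discussed at the end of this post.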

```python
import os

import paddle

# where model parameters are stored during and after training
save_dir = "checkpoint/"  # parameters saved at the end of each epoch
best_dir = "best_model"   # best parameters so far; these are used for the final prediction
os.makedirs(save_dir, exist_ok=True)
os.makedirs(best_dir, exist_ok=True)

# apply weight decay to every parameter except biases and normalization layers
decay_params = {
    p.name for n, p in model.named_parameters()
    if not any(nd in n for nd in ["bias", "norm"])
}

# optimizer
optimizer = paddle.optimizer.AdamW(
    learning_rate=lr_scheduler,
    parameters=model.parameters(),
    weight_decay=1.2e-4,
    apply_decay_param_fun=lambda x: x in decay_params)

# loss function: cross-entropy
criterion = paddle.nn.loss.CrossEntropyLoss()

# offline evaluation uses accuracy; note that the online leaderboard uses macro-F1
metric = paddle.metric.Accuracy()
```

```python
import numpy as np
import paddle


@paddle.no_grad()
def evaluate(model, criterion, metric, data_loader):
    model.eval()
    metric.reset()
    losses = []
    for batch in data_loader:
        labels, cap_batch, img_batch, qCap_batch, qImg_batch = batch
        logits = model(qCap=qCap_batch, qImg=qImg_batch, caps=cap_batch, imgs=img_batch)
        loss = criterion(logits, labels)
        losses.append(loss.numpy())
        correct = metric.compute(logits, labels)
        metric.update(correct)
    accu = metric.accumulate()
    print("eval loss: %.5f, accu: %.5f" % (np.mean(losses), accu))
    model.train()
    metric.reset()
    return np.mean(losses), accu
```

The training function takes five inputs: the model, the loss function, the evaluation metric, the validation dataloader and the training dataloader.

```python
import os
import time

import paddle


def do_train(model, criterion, metric, val_dataloader, train_dataloader):
    print("train run start")
    global_step = 0
    tic_train = time.time()
    best_accuracy = 0.0
    for epoch in range(1, epochs + 1):
        for step, batch in enumerate(train_dataloader, start=1):
            labels, cap_batch, img_batch, qCap_batch, qImg_batch = batch
            # raw logits; CrossEntropyLoss applies softmax internally
            logits = model(qCap=qCap_batch, qImg=qImg_batch, caps=cap_batch, imgs=img_batch)
            loss = criterion(logits, labels)
            correct = metric.compute(logits, labels)
            metric.update(correct)
            acc = metric.accumulate()
            global_step += 1
            # log training metrics every 100 steps (speed = 100 steps / elapsed time)
            if global_step % 100 == 0:
                print("global step %d, epoch: %d, batch: %d, loss: %.5f, accu: %.5f, speed: %.2f step/s"
                      % (global_step, epoch, step, float(loss), acc, 100 / (time.time() - tic_train)))
                tic_train = time.time()
            loss.backward()
            optimizer.step()
            lr_scheduler.step()
            optimizer.clear_grad()
            # evaluate on the validation set at the end of each epoch
            if global_step % len(train_dataloader) == 0:
                eval_loss, eval_accu = evaluate(model, criterion, metric, val_dataloader)
                epoch_dir = save_dir + str(epoch)  # e.g. "checkpoint/1"
                os.makedirs(epoch_dir, exist_ok=True)
                paddle.save(model.state_dict(), os.path.join(epoch_dir, 'model_state.pdparams'))
                if best_accuracy < eval_accu:
                    best_accuracy = eval_accu
                    # keep the best model so far
                    paddle.save(model.state_dict(), os.path.join(best_dir, 'model_best.pdparams'))


do_train(model, criterion, metric, val_dataloader, train_dataloader)
```

4. Prediction

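Before predicting, restart the kernel to free memory (the image data takes up a lot of memory).

Then rerun the first and second code blocks, and run the following code: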

```python
import os

import paddle
import paddle.nn.functional as F
from tqdm import tqdm

params_path = 'best_model/model_best.pdparams'  # trained parameters to load
if params_path and os.path.isfile(params_path):
    state_dict = paddle.load(params_path)
    model.set_dict(state_dict)
    print("Loaded parameters from %s" % params_path)

results = []
id2name = {0: "non-rumor", 1: "rumor", 2: "unverified"}
# switch to evaluation mode, disabling randomness such as dropout
model.eval()
bar = tqdm(test_dataloader, total=len(test_dataloader))
with paddle.no_grad():  # no gradients are needed for inference, which saves memory
    for batch in bar:
        cap_batch, img_batch, qCap_batch, qImg_batch = batch
        logits = model(qCap=qCap_batch, qImg=qImg_batch, caps=cap_batch, imgs=img_batch)
        # predicted class
        probs = F.softmax(logits, axis=-1)
        label = paddle.argmax(probs, axis=1).numpy()
        results += label.tolist()
print(results[:5])
print(len(results))
results = [id2name[i] for i in results]
```
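The prediction loop applies `F.softmax` before `paddle.argmax`. Softmax is strictly increasing in each logit within a row, so it never changes which class wins; the argmax of the raw logits gives the same labels, and the probabilities matter only if you want confidence scores (for example, for ensembling). A NumPy check with made-up logits, reusing the `id2name` mapping:

```python
import numpy as np

id2name = {0: "non-rumor", 1: "rumor", 2: "unverified"}

logits = np.array([[2.0, -1.0, 0.5],
                   [0.1, 0.3, 0.2],
                   [-3.0, -2.0, 1.0]])
probs = np.exp(logits - logits.max(axis=1, keepdims=True))
probs /= probs.sum(axis=1, keepdims=True)  # row-wise softmax

pred_from_probs = probs.argmax(axis=1)
pred_from_logits = logits.argmax(axis=1)
print([id2name[i] for i in pred_from_probs.tolist()])  # ['non-rumor', 'rumor', 'unverified']
```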

Write the results

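```python
import pandas as pd

# columns id/label: the dict keys become the CSV column names
# ids are assigned as 0..N-1 in test-loader order (shuffle=False), which must match the test set's ids
id_list = range(len(results))
print(id_list)
frame = pd.DataFrame({'id': id_list, 'label': results})
frame.to_csv("result.csv", index=False, sep=',')

# package the file as required (notebook shell command)
!zip test.zip result.csv
```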

III. Final thoughts

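Now for the most valuable part: where to start if you want to improve the model. Here is my take:

1. Data source: the data is not clean and there is a large amount of image data. Is there room to work on it?

2. Features: do the extracted features lose information, and can we add features that capture patterns found through data exploration?

3. Model choice: the baseline is a fairly robust approach, but other models are also worth trying.

4. Hyperparameter tuning: leave this for last where possible, although good hyperparameters can also make the model work better.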

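This write-up is mainly a learning record: I translated and organized the baseline in my spare time after work. It maps out the fastest path to getting started and aims to lower the barrier to entry.

If you found it useful, remember to leave a like. Don't just freeload~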
