A Step-by-Step Guide to Fine-Tuning (SFT) ChatGLM3-6B with LLaMA-Factory

Preparation

1. Downloads

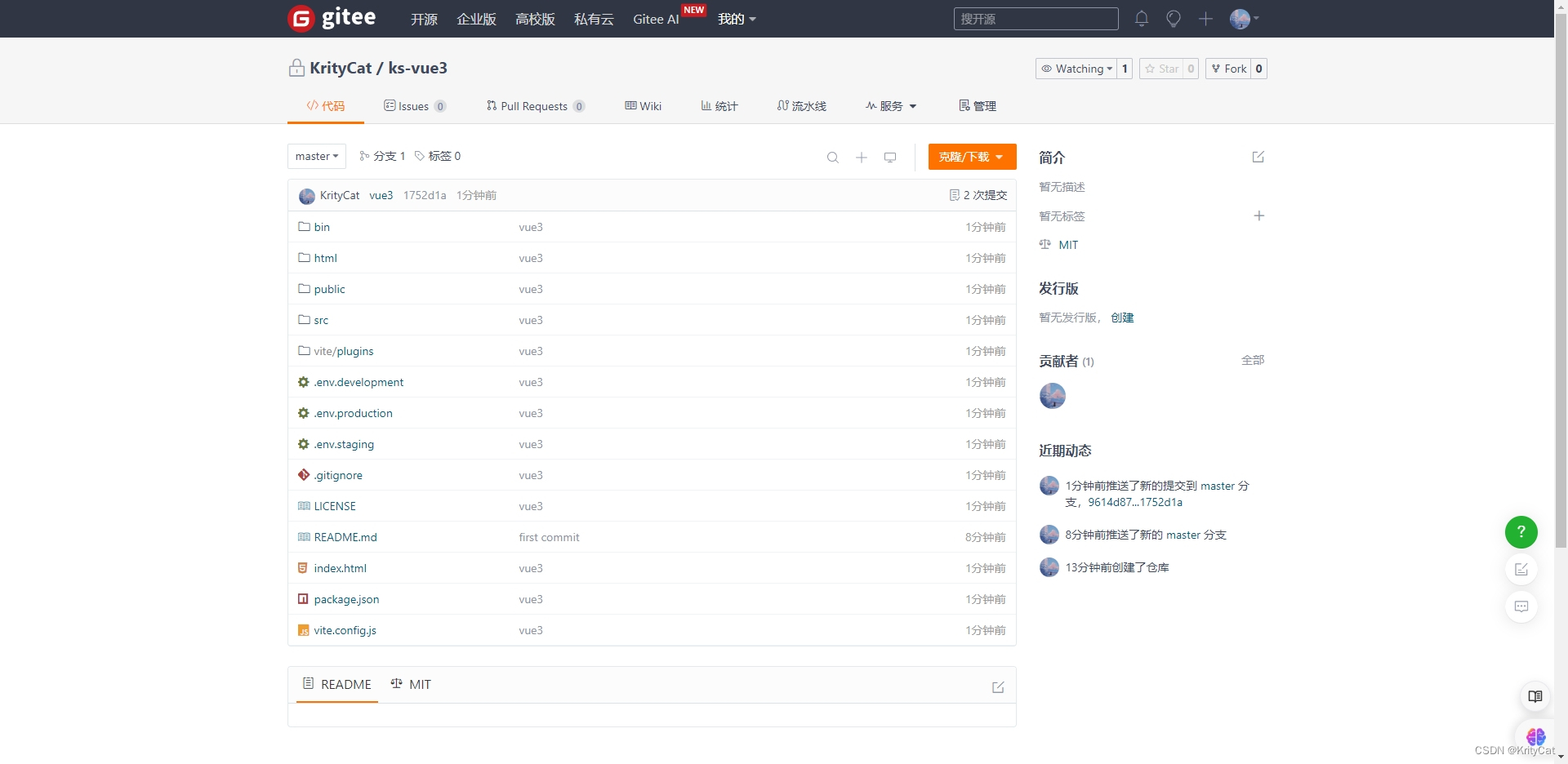

- Download LLaMA-Factory

- Download ChatGLM3-6B (the model weights)

- Download ChatGLM3 (the code repository)

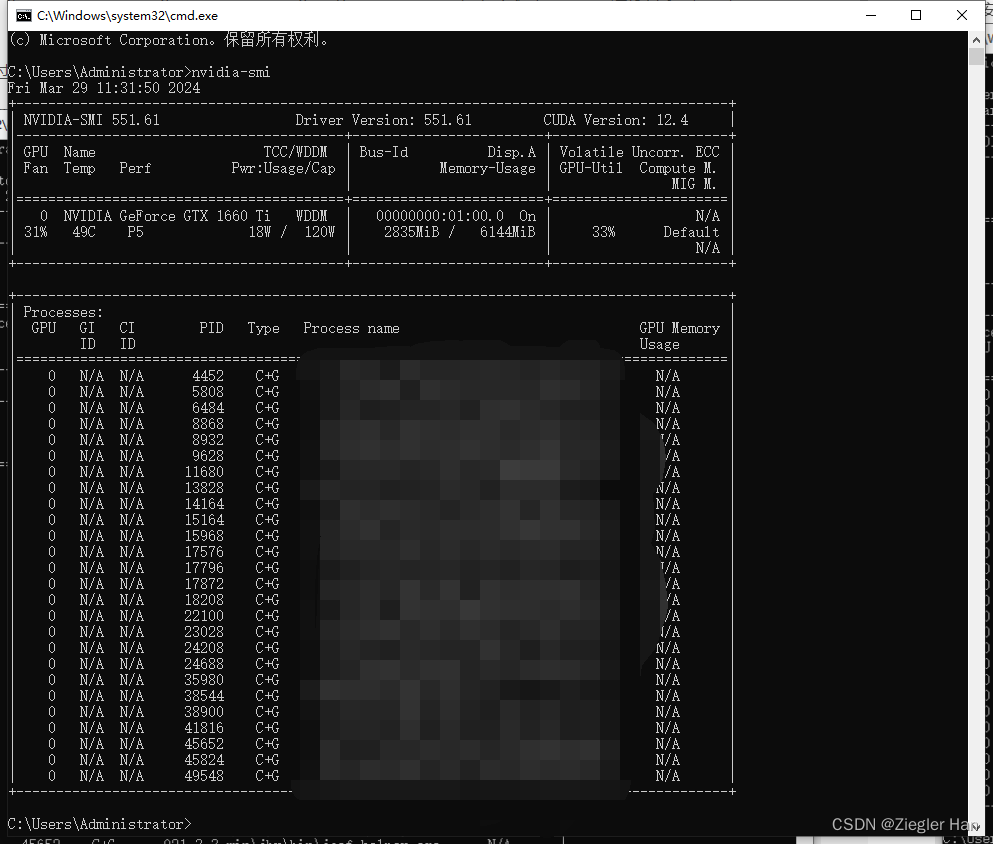

- On Windows, download CUDA Toolkit 12.1 (I trained on Windows with a GTX 1660 Ti GPU)

Once CUDA is installed, check it with the nvidia-smi command:

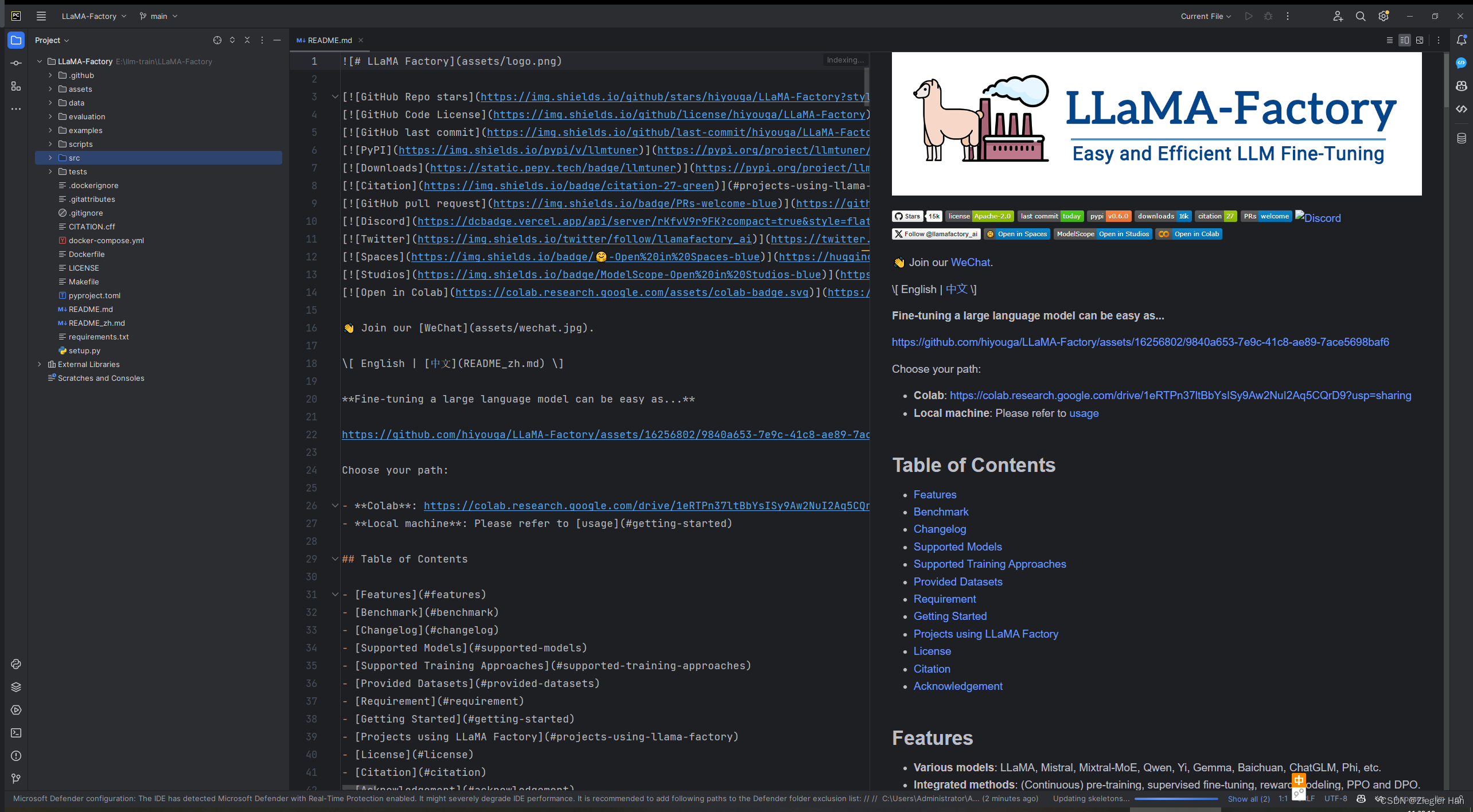

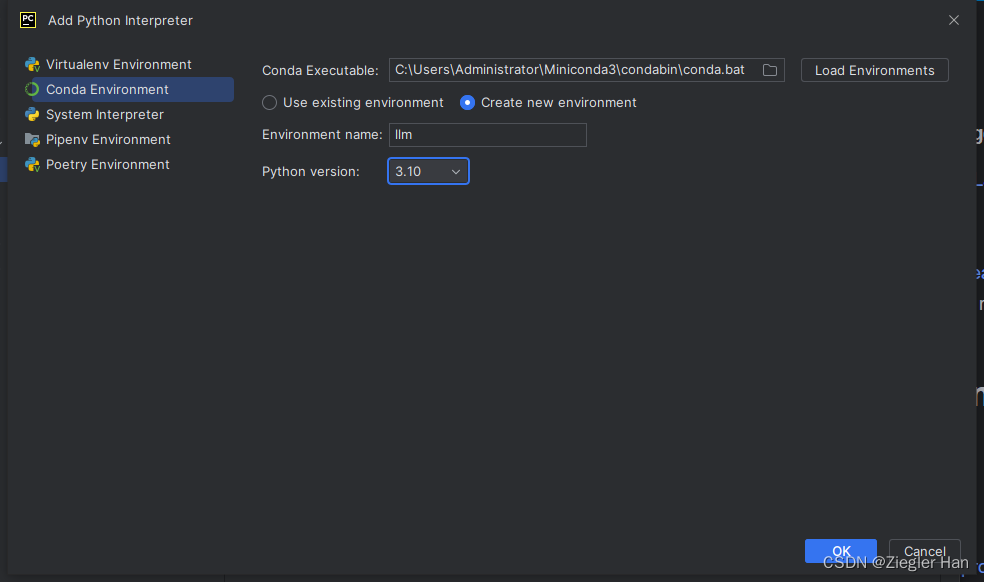
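The same check can be scripted. The sketch below is a minimal illustration and not part of the original walkthrough: it pulls the driver and CUDA versions out of the header that nvidia-smi prints. The helper names and the sample header line are assumptions made for this example, the version numbers in the sample are placeholders, and the header layout may differ between driver releases. Keep in mind that the CUDA version shown by nvidia-smi is the highest version the installed driver supports.

```python
import re
import shutil
import subprocess
from typing import Optional


def parse_nvidia_smi_header(text: str) -> Optional[dict]:
    """Extract driver and CUDA versions from nvidia-smi output, if present."""
    driver = re.search(r"Driver Version:\s*([\d.]+)", text)
    cuda = re.search(r"CUDA Version:\s*([\d.]+)", text)
    if not (driver and cuda):
        return None
    return {"driver": driver.group(1), "cuda": cuda.group(1)}


def query_nvidia_smi() -> Optional[dict]:
    """Run nvidia-smi if it is on PATH; return None when it is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(
        ["nvidia-smi"], capture_output=True, text=True, check=False
    )
    return parse_nvidia_smi_header(result.stdout)


# Hypothetical header line, used only to illustrate the parser.
SAMPLE_HEADER = "| NVIDIA-SMI 531.14    Driver Version: 531.14    CUDA Version: 12.1 |"

if __name__ == "__main__":
    print(parse_nvidia_smi_header(SAMPLE_HEADER))
    print(query_nvidia_smi())
```

If the reported CUDA version is lower than 12.1, the cu121 torch build installed later in this guide may not be usable with that driver.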

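2. Open the LLaMA-Factory project in PyCharm

1. Select the download directory, E:\llm-train\LLaMA-Factory, and open it.

2. Create a new Python environment. Here I use conda to create an empty environment with Python 3.10.

3. Install the dependencies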

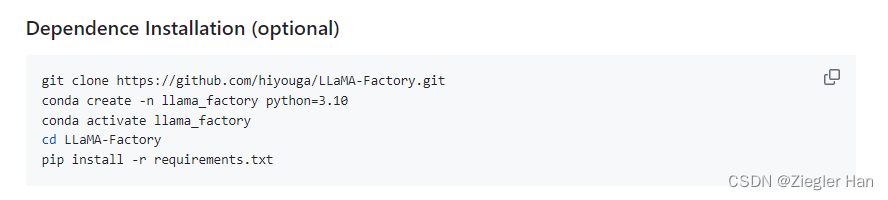

Follow the dependency installation steps described by LLaMA-Factory.

Install the LLaMA-Factory dependencies:

(llm) PS E:\llm-train\LLaMA-Factory> pwd

Path

----

E:\llm-train\LLaMA-Factory

(llm) PS E:\llm-train\LLaMA-Factory> pip install -r requirements.txt

Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple

Collecting torch>=1.13.1 (from -r requirements.txt (line 1))

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/3a/81/684d99e536b20e869a7c1222cf1dd233311fb05d3628e9570992bfb65760/torch-2.2.2-cp310-cp310-win_amd64.whl (198.6 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 198.6/198.6 MB 5.0 MB/s eta 0:00:00

Collecting transformers>=4.37.2 (from -r requirements.txt (line 2))

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/e2/52/02271ef16713abea41bab736dfc2dbee75e5e3512cf7441e233976211ba5/transformers-4.39.2-py3-none-any.whl (8.8 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 8.8/8.8 MB 4.0 MB/s eta 0:00:00

Collecting datasets>=2.14.3 (from -r requirements.txt (line 3))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/95/fc/661a7f06e8b7d48fcbd3f55423b7ff1ac3ce59526f146fda87a1e1788ee4/datasets-2.18.0-py3-none-any.whl (510 kB)

Collecting accelerate>=0.27.2 (from -r requirements.txt (line 4))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/a0/11/9bfcf765e71a2c84bbf715719ba520aeacb2ad84113f14803ff1947ddf69/accelerate-0.28.0-py3-none-any.whl (290 kB)

Collecting peft>=0.10.0 (from -r requirements.txt (line 5))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/b4/5d/758c00ba637bc850f35fff7fad442c470ac3d606fe586d881b0bed7ef5a5/peft-0.10.0-py3-none-any.whl (199 kB)

Collecting trl>=0.8.1 (from -r requirements.txt (line 6))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/89/12/bdad48d9eb5d2f3e71fdfaa3d634737a79375be7caa927c6e95aa05f9d4a/trl-0.8.1-py3-none-any.whl (225 kB)

Collecting gradio<4.0.0,>=3.38.0 (from -r requirements.txt (line 7))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/bd/ea/ca6506e4da9b5338da3bfdd6115dc1c90ffd58c1ec50ca2792b84a7b4bdb/gradio-3.50.2-py3-none-any.whl (20.3 MB)

Collecting scipy (from -r requirements.txt (line 8))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/fd/a7/5f829b100d208c85163aecba93faf01d088d944fc91585338751d812f1e4/scipy-1.12.0-cp310-cp310-win_amd64.whl (46.2 MB)

Collecting einops (from -r requirements.txt (line 9))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/29/0b/2d1c0ebfd092e25935b86509a9a817159212d82aa43d7fb07eca4eeff2c2/einops-0.7.0-py3-none-any.whl (44 kB)

Collecting sentencepiece (from -r requirements.txt (line 10))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/85/f4/4ef1a6e0e9dbd8a60780a91df8b7452ada14cfaa0e17b3b8dfa42cecae18/sentencepiece-0.2.0-cp310-cp310-win_amd64.whl (991 kB)

Collecting protobuf (from -r requirements.txt (line 11))

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/8d/83/d70cb6fedb1f38318af01f0035f2103732630af0ca323c0198122b49323b/protobuf-5.26.1-cp310-abi3-win_amd64.whl (420 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 420.9/420.9 kB 3.7 MB/s eta 0:00:00

Collecting uvicorn (from -r requirements.txt (line 12))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/73/f5/cbb16fcbe277c1e0b8b3ddd188f2df0e0947f545c49119b589643632d156/uvicorn-0.29.0-py3-none-any.whl (60 kB)

Collecting pydantic (from -r requirements.txt (line 13))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/e5/f3/8296f550276194a58c5500d55b19a27ae0a5a3a51ffef66710c58544b32d/pydantic-2.6.4-py3-none-any.whl (394 kB)

Collecting fastapi (from -r requirements.txt (line 14))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/f0/f7/ea860cb8aa18e326f411e32ab537424690a53db20de6bad73d70da611fae/fastapi-0.110.0-py3-none-any.whl (92 kB)

Collecting sse-starlette (from -r requirements.txt (line 15))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/50/04/b1569f95ce91fbfe35e5e6b3b11e8373ffe4fa81e998818c8c995a873896/sse_starlette-2.0.0-py3-none-any.whl (9.0 kB)

Collecting matplotlib (from -r requirements.txt (line 16))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/e4/43/fd3cd5989d6b592af1c2e4f37bf887f74b790f10b568b2497fe874a67fc7/matplotlib-3.8.3-cp310-cp310-win_amd64.whl (7.6 MB)

Collecting fire (from -r requirements.txt (line 17))Using cached fire-0.6.0-py2.py3-none-any.whl

Collecting galore-torch (from -r requirements.txt (line 18))Downloading https://pypi.tuna.tsinghua.edu.cn/packages/2b/b9/e9e88f989c62edefaa45df07198ce280ac0d373f5e2842686c6ece2ddb1e/galore_torch-1.0-py3-none-any.whl (13 kB)

Collecting filelock (from torch>=1.13.1->-r requirements.txt (line 1))Downloading https://pypi.tuna.tsinghua.edu.cn/packages/8b/69/acdf492db27dea7be5c63053230130e0574fd8a376de3555d5f8bbc3d3ad/filelock-3.13.3-py3-none-any.whl (11 kB)

Collecting typing-extensions>=4.8.0 (from torch>=1.13.1->-r requirements.txt (line 1))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/f9/de/dc04a3ea60b22624b51c703a84bbe0184abcd1d0b9bc8074b5d6b7ab90bb/typing_extensions-4.10.0-py3-none-any.whl (33 kB)

Collecting sympy (from torch>=1.13.1->-r requirements.txt (line 1))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/d2/05/e6600db80270777c4a64238a98d442f0fd07cc8915be2a1c16da7f2b9e74/sympy-1.12-py3-none-any.whl (5.7 MB)

Collecting networkx (from torch>=1.13.1->-r requirements.txt (line 1))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/d5/f0/8fbc882ca80cf077f1b246c0e3c3465f7f415439bdea6b899f6b19f61f70/networkx-3.2.1-py3-none-any.whl (1.6 MB)

Collecting jinja2 (from torch>=1.13.1->-r requirements.txt (line 1))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/30/6d/6de6be2d02603ab56e72997708809e8a5b0fbfee080735109b40a3564843/Jinja2-3.1.3-py3-none-any.whl (133 kB)

Collecting fsspec (from torch>=1.13.1->-r requirements.txt (line 1))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/93/6d/66d48b03460768f523da62a57a7e14e5e95fdf339d79e996ce3cecda2cdb/fsspec-2024.3.1-py3-none-any.whl (171 kB)

Collecting huggingface-hub<1.0,>=0.19.3 (from transformers>=4.37.2->-r requirements.txt (line 2))

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/b6/51/d418bb7bb9e32845d3cb4d526012ddf1fea6bb3d55b6a1880698e4b7f19f/huggingface_hub-0.22.1-py3-none-any.whl (388 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 388.6/388.6 kB 967.7 kB/s eta 0:00:00

Collecting numpy>=1.17 (from transformers>=4.37.2->-r requirements.txt (line 2))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/19/77/538f202862b9183f54108557bfda67e17603fc560c384559e769321c9d92/numpy-1.26.4-cp310-cp310-win_amd64.whl (15.8 MB)

Collecting packaging>=20.0 (from transformers>=4.37.2->-r requirements.txt (line 2))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/49/df/1fceb2f8900f8639e278b056416d49134fb8d84c5942ffaa01ad34782422/packaging-24.0-py3-none-any.whl (53 kB)

Collecting pyyaml>=5.1 (from transformers>=4.37.2->-r requirements.txt (line 2))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/24/97/9b59b43431f98d01806b288532da38099cc6f2fea0f3d712e21e269c0279/PyYAML-6.0.1-cp310-cp310-win_amd64.whl (145 kB)

Collecting regex!=2019.12.17 (from transformers>=4.37.2->-r requirements.txt (line 2))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/83/eb/144d2db5cf2ac3989d0ea4273040218d68bd67422133548da47043423594/regex-2023.12.25-cp310-cp310-win_amd64.whl (269 kB)

Collecting requests (from transformers>=4.37.2->-r requirements.txt (line 2))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/70/8e/0e2d847013cb52cd35b38c009bb167a1a26b2ce6cd6965bf26b47bc0bf44/requests-2.31.0-py3-none-any.whl (62 kB)

Collecting tokenizers<0.19,>=0.14 (from transformers>=4.37.2->-r requirements.txt (line 2))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/c9/87/0bf37626c5f1ea2462e0398be88c287f3d40c696c255ba478bf525bdc852/tokenizers-0.15.2-cp310-none-win_amd64.whl (2.2 MB)

Collecting safetensors>=0.4.1 (from transformers>=4.37.2->-r requirements.txt (line 2))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/58/48/8d228afbcb4a48d5a9f40eca65ab250703de7fd802f5b5148e5fb1edfe6e/safetensors-0.4.2-cp310-none-win_amd64.whl (269 kB)

Collecting tqdm>=4.27 (from transformers>=4.37.2->-r requirements.txt (line 2))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/2a/14/e75e52d521442e2fcc9f1df3c5e456aead034203d4797867980de558ab34/tqdm-4.66.2-py3-none-any.whl (78 kB)

Collecting pyarrow>=12.0.0 (from datasets>=2.14.3->-r requirements.txt (line 3))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/ec/85/abca962d99950aad803bd755baf020a8183ca3be1319bb205f52bbbcce16/pyarrow-15.0.2-cp310-cp310-win_amd64.whl (24.8 MB)

Collecting pyarrow-hotfix (from datasets>=2.14.3->-r requirements.txt (line 3))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/e4/f4/9ec2222f5f5f8ea04f66f184caafd991a39c8782e31f5b0266f101cb68ca/pyarrow_hotfix-0.6-py3-none-any.whl (7.9 kB)

Collecting dill<0.3.9,>=0.3.0 (from datasets>=2.14.3->-r requirements.txt (line 3))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/c9/7a/cef76fd8438a42f96db64ddaa85280485a9c395e7df3db8158cfec1eee34/dill-0.3.8-py3-none-any.whl (116 kB)

Collecting pandas (from datasets>=2.14.3->-r requirements.txt (line 3))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/93/26/2a695303a4a3194014dca7cb5d5ce08f0d2c6baa344fb5f562c642e77b2b/pandas-2.2.1-cp310-cp310-win_amd64.whl (11.6 MB)

Collecting xxhash (from datasets>=2.14.3->-r requirements.txt (line 3))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/a5/b0/2950f76c07e467586d01d9a6cdd4ac668c13d20de5fde49af5364f513e54/xxhash-3.4.1-cp310-cp310-win_amd64.whl (29 kB)

Collecting multiprocess (from datasets>=2.14.3->-r requirements.txt (line 3))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/bc/f7/7ec7fddc92e50714ea3745631f79bd9c96424cb2702632521028e57d3a36/multiprocess-0.70.16-py310-none-any.whl (134 kB)

Collecting fsspec (from torch>=1.13.1->-r requirements.txt (line 1))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/ad/30/2281c062222dc39328843bd1ddd30ff3005ef8e30b2fd09c4d2792766061/fsspec-2024.2.0-py3-none-any.whl (170 kB)

Collecting aiohttp (from datasets>=2.14.3->-r requirements.txt (line 3))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/54/2d/746a93808c2d9d247b554155a1576bf7ed0e0029e8cfb667a9007e2d53b0/aiohttp-3.9.3-cp310-cp310-win_amd64.whl (365 kB)

Collecting psutil (from accelerate>=0.27.2->-r requirements.txt (line 4))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/93/52/3e39d26feae7df0aa0fd510b14012c3678b36ed068f7d78b8d8784d61f0e/psutil-5.9.8-cp37-abi3-win_amd64.whl (255 kB)

Collecting tyro>=0.5.11 (from trl>=0.8.1->-r requirements.txt (line 6))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/e3/0e/c421c916bc8a48a861197bda6554588f8281db3e3533083f0d4d49b80ddb/tyro-0.7.3-py3-none-any.whl (79 kB)

Collecting aiofiles<24.0,>=22.0 (from gradio<4.0.0,>=3.38.0->-r requirements.txt (line 7))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/c5/19/5af6804c4cc0fed83f47bff6e413a98a36618e7d40185cd36e69737f3b0e/aiofiles-23.2.1-py3-none-any.whl (15 kB)

Collecting altair<6.0,>=4.2.0 (from gradio<4.0.0,>=3.38.0->-r requirements.txt (line 7))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/c5/e4/7fcceef127badbb0d644d730d992410e4f3799b295c9964a172f92a469c7/altair-5.2.0-py3-none-any.whl (996 kB)

Collecting ffmpy (from gradio<4.0.0,>=3.38.0->-r requirements.txt (line 7))Using cached ffmpy-0.3.2-py3-none-any.whl

Collecting gradio-client==0.6.1 (from gradio<4.0.0,>=3.38.0->-r requirements.txt (line 7))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/7d/04/e1654ee28fb2686514ca8ae31af5e489403964d48764788f9a168e069c0f/gradio_client-0.6.1-py3-none-any.whl (299 kB)

Collecting httpx (from gradio<4.0.0,>=3.38.0->-r requirements.txt (line 7))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/41/7b/ddacf6dcebb42466abd03f368782142baa82e08fc0c1f8eaa05b4bae87d5/httpx-0.27.0-py3-none-any.whl (75 kB)

Collecting importlib-resources<7.0,>=1.3 (from gradio<4.0.0,>=3.38.0->-r requirements.txt (line 7))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/75/06/4df55e1b7b112d183f65db9503bff189e97179b256e1ea450a3c365241e0/importlib_resources-6.4.0-py3-none-any.whl (38 kB)

Collecting markupsafe~=2.0 (from gradio<4.0.0,>=3.38.0->-r requirements.txt (line 7))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/69/48/acbf292615c65f0604a0c6fc402ce6d8c991276e16c80c46a8f758fbd30c/MarkupSafe-2.1.5-cp310-cp310-win_amd64.whl (17 kB)

Collecting orjson~=3.0 (from gradio<4.0.0,>=3.38.0->-r requirements.txt (line 7))

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/ed/71/9b653e2ac43769385e89a30153c5374e07788c6d8fc44bb616de427a8aa2/orjson-3.10.0-cp310-none-win_amd64.whl (139 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 139.2/139.2 kB 2.1 MB/s eta 0:00:00

Collecting pillow<11.0,>=8.0 (from gradio<4.0.0,>=3.38.0->-r requirements.txt (line 7))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/ef/d8/f97270d25a003435e408e6d1e38d8eddc9b3e2c7b646719f4b3a5293685d/pillow-10.2.0-cp310-cp310-win_amd64.whl (2.6 MB)

Collecting pydub (from gradio<4.0.0,>=3.38.0->-r requirements.txt (line 7))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/a6/53/d78dc063216e62fc55f6b2eebb447f6a4b0a59f55c8406376f76bf959b08/pydub-0.25.1-py2.py3-none-any.whl (32 kB)

Collecting python-multipart (from gradio<4.0.0,>=3.38.0->-r requirements.txt (line 7))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/3d/47/444768600d9e0ebc82f8e347775d24aef8f6348cf00e9fa0e81910814e6d/python_multipart-0.0.9-py3-none-any.whl (22 kB)

Collecting semantic-version~=2.0 (from gradio<4.0.0,>=3.38.0->-r requirements.txt (line 7))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/6a/23/8146aad7d88f4fcb3a6218f41a60f6c2d4e3a72de72da1825dc7c8f7877c/semantic_version-2.10.0-py2.py3-none-any.whl (15 kB)

Collecting websockets<12.0,>=10.0 (from gradio<4.0.0,>=3.38.0->-r requirements.txt (line 7))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/98/a7/0ed69892981351e5acf88fac0ff4c801fabca2c3bdef9fca4c7d3fde8c53/websockets-11.0.3-cp310-cp310-win_amd64.whl (124 kB)

Collecting click>=7.0 (from uvicorn->-r requirements.txt (line 12))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/00/2e/d53fa4befbf2cfa713304affc7ca780ce4fc1fd8710527771b58311a3229/click-8.1.7-py3-none-any.whl (97 kB)

Collecting h11>=0.8 (from uvicorn->-r requirements.txt (line 12))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/95/04/ff642e65ad6b90db43e668d70ffb6736436c7ce41fcc549f4e9472234127/h11-0.14.0-py3-none-any.whl (58 kB)

Collecting annotated-types>=0.4.0 (from pydantic->-r requirements.txt (line 13))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/28/78/d31230046e58c207284c6b2c4e8d96e6d3cb4e52354721b944d3e1ee4aa5/annotated_types-0.6.0-py3-none-any.whl (12 kB)

Collecting pydantic-core==2.16.3 (from pydantic->-r requirements.txt (line 13))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/ec/e8/49d65816802781451af7e758bdf9ff9d976a6b3959e1aab843da9931e89f/pydantic_core-2.16.3-cp310-none-win_amd64.whl (1.9 MB)

Collecting starlette<0.37.0,>=0.36.3 (from fastapi->-r requirements.txt (line 14))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/eb/f7/372e3953b6e6fbfe0b70a1bb52612eae16e943f4288516480860fcd4ac41/starlette-0.36.3-py3-none-any.whl (71 kB)

Collecting anyio (from sse-starlette->-r requirements.txt (line 15))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/14/fd/2f20c40b45e4fb4324834aea24bd4afdf1143390242c0b33774da0e2e34f/anyio-4.3.0-py3-none-any.whl (85 kB)

Collecting contourpy>=1.0.1 (from matplotlib->-r requirements.txt (line 16))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/fd/7c/168f8343f33d861305e18c56901ef1bb675d3c7f977f435ec72751a71a54/contourpy-1.2.0-cp310-cp310-win_amd64.whl (186 kB)

Collecting cycler>=0.10 (from matplotlib->-r requirements.txt (line 16))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/e7/05/c19819d5e3d95294a6f5947fb9b9629efb316b96de511b418c53d245aae6/cycler-0.12.1-py3-none-any.whl (8.3 kB)

Collecting fonttools>=4.22.0 (from matplotlib->-r requirements.txt (line 16))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/e9/59/b95df4ad8c5657dddec2a682d105ed3d3bd8734b3b158f63340f4f76d983/fonttools-4.50.0-cp310-cp310-win_amd64.whl (2.2 MB)

Collecting kiwisolver>=1.3.1 (from matplotlib->-r requirements.txt (line 16))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/4a/a1/8a9c9be45c642fa12954855d8b3a02d9fd8551165a558835a19508fec2e6/kiwisolver-1.4.5-cp310-cp310-win_amd64.whl (56 kB)

Collecting pyparsing>=2.3.1 (from matplotlib->-r requirements.txt (line 16))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/9d/ea/6d76df31432a0e6fdf81681a895f009a4bb47b3c39036db3e1b528191d52/pyparsing-3.1.2-py3-none-any.whl (103 kB)

Collecting python-dateutil>=2.7 (from matplotlib->-r requirements.txt (line 16))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/ec/57/56b9bcc3c9c6a792fcbaf139543cee77261f3651ca9da0c93f5c1221264b/python_dateutil-2.9.0.post0-py2.py3-none-any.whl (229 kB)

Collecting six (from fire->-r requirements.txt (line 17))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/d9/5a/e7c31adbe875f2abbb91bd84cf2dc52d792b5a01506781dbcf25c91daf11/six-1.16.0-py2.py3-none-any.whl (11 kB)

Collecting termcolor (from fire->-r requirements.txt (line 17))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/d9/5f/8c716e47b3a50cbd7c146f45881e11d9414def768b7cd9c5e6650ec2a80a/termcolor-2.4.0-py3-none-any.whl (7.7 kB)

Collecting bitsandbytes (from galore-torch->-r requirements.txt (line 18))

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/db/5b/ce65d93d7bb60fb07e5253f8f4c2da79d0329386188374a25b0ace74aae4/bitsandbytes-0.43.0-py3-none-win_amd64.whl (101.6 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 101.6/101.6 MB 4.4 MB/s eta 0:00:00

Collecting jsonschema>=3.0 (from altair<6.0,>=4.2.0->gradio<4.0.0,>=3.38.0->-r requirements.txt (line 7))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/39/9d/b035d024c62c85f2e2d4806a59ca7b8520307f34e0932fbc8cc75fe7b2d9/jsonschema-4.21.1-py3-none-any.whl (85 kB)

Collecting toolz (from altair<6.0,>=4.2.0->gradio<4.0.0,>=3.38.0->-r requirements.txt (line 7))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/b7/8a/d82202c9f89eab30f9fc05380daae87d617e2ad11571ab23d7c13a29bb54/toolz-0.12.1-py3-none-any.whl (56 kB)

Requirement already satisfied: colorama in c:\users\administrator\appdata\roaming\python\python310\site-packages (from click>=7.0->uvicorn->-r requirements.txt (line 12)) (0.4.6)

Collecting aiosignal>=1.1.2 (from aiohttp->datasets>=2.14.3->-r requirements.txt (line 3))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/76/ac/a7305707cb852b7e16ff80eaf5692309bde30e2b1100a1fcacdc8f731d97/aiosignal-1.3.1-py3-none-any.whl (7.6 kB)

Collecting attrs>=17.3.0 (from aiohttp->datasets>=2.14.3->-r requirements.txt (line 3))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/e0/44/827b2a91a5816512fcaf3cc4ebc465ccd5d598c45cefa6703fcf4a79018f/attrs-23.2.0-py3-none-any.whl (60 kB)

Collecting frozenlist>=1.1.1 (from aiohttp->datasets>=2.14.3->-r requirements.txt (line 3))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/61/15/2b5d644d81282f00b61e54f7b00a96f9c40224107282efe4cd9d2bf1433a/frozenlist-1.4.1-cp310-cp310-win_amd64.whl (50 kB)

Collecting multidict<7.0,>=4.5 (from aiohttp->datasets>=2.14.3->-r requirements.txt (line 3))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/ef/3d/ba0dc18e96c5d83731c54129819d5892389e180f54ebb045c6124b2e8b87/multidict-6.0.5-cp310-cp310-win_amd64.whl (28 kB)

Collecting yarl<2.0,>=1.0 (from aiohttp->datasets>=2.14.3->-r requirements.txt (line 3))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/31/d4/2085272a5ccf87af74d4e02787c242c5d60367840a4637b2835565264302/yarl-1.9.4-cp310-cp310-win_amd64.whl (76 kB)

Collecting async-timeout<5.0,>=4.0 (from aiohttp->datasets>=2.14.3->-r requirements.txt (line 3))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/a7/fa/e01228c2938de91d47b307831c62ab9e4001e747789d0b05baf779a6488c/async_timeout-4.0.3-py3-none-any.whl (5.7 kB)

Collecting pytz>=2020.1 (from pandas->datasets>=2.14.3->-r requirements.txt (line 3))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/9c/3d/a121f284241f08268b21359bd425f7d4825cffc5ac5cd0e1b3d82ffd2b10/pytz-2024.1-py2.py3-none-any.whl (505 kB)

Collecting tzdata>=2022.7 (from pandas->datasets>=2.14.3->-r requirements.txt (line 3))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/65/58/f9c9e6be752e9fcb8b6a0ee9fb87e6e7a1f6bcab2cdc73f02bb7ba91ada0/tzdata-2024.1-py2.py3-none-any.whl (345 kB)

Collecting charset-normalizer<4,>=2 (from requests->transformers>=4.37.2->-r requirements.txt (line 2))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/a2/a0/4af29e22cb5942488cf45630cbdd7cefd908768e69bdd90280842e4e8529/charset_normalizer-3.3.2-cp310-cp310-win_amd64.whl (100 kB)

Collecting idna<4,>=2.5 (from requests->transformers>=4.37.2->-r requirements.txt (line 2))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/c2/e7/a82b05cf63a603df6e68d59ae6a68bf5064484a0718ea5033660af4b54a9/idna-3.6-py3-none-any.whl (61 kB)

Collecting urllib3<3,>=1.21.1 (from requests->transformers>=4.37.2->-r requirements.txt (line 2))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/a2/73/a68704750a7679d0b6d3ad7aa8d4da8e14e151ae82e6fee774e6e0d05ec8/urllib3-2.2.1-py3-none-any.whl (121 kB)

Collecting certifi>=2017.4.17 (from requests->transformers>=4.37.2->-r requirements.txt (line 2))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/ba/06/a07f096c664aeb9f01624f858c3add0a4e913d6c96257acb4fce61e7de14/certifi-2024.2.2-py3-none-any.whl (163 kB)

Collecting sniffio>=1.1 (from anyio->sse-starlette->-r requirements.txt (line 15))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/e9/44/75a9c9421471a6c4805dbf2356f7c181a29c1879239abab1ea2cc8f38b40/sniffio-1.3.1-py3-none-any.whl (10 kB)

Collecting exceptiongroup>=1.0.2 (from anyio->sse-starlette->-r requirements.txt (line 15))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/b8/9a/5028fd52db10e600f1c4674441b968cf2ea4959085bfb5b99fb1250e5f68/exceptiongroup-1.2.0-py3-none-any.whl (16 kB)

Collecting docstring-parser>=0.14.1 (from tyro>=0.5.11->trl>=0.8.1->-r requirements.txt (line 6))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/d5/7c/e9fcff7623954d86bdc17782036cbf715ecab1bec4847c008557affe1ca8/docstring_parser-0.16-py3-none-any.whl (36 kB)

Collecting rich>=11.1.0 (from tyro>=0.5.11->trl>=0.8.1->-r requirements.txt (line 6))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/87/67/a37f6214d0e9fe57f6ae54b2956d550ca8365857f42a1ce0392bb21d9410/rich-13.7.1-py3-none-any.whl (240 kB)

Collecting shtab>=1.5.6 (from tyro>=0.5.11->trl>=0.8.1->-r requirements.txt (line 6))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/e2/d1/a1d3189e7873408b9dc396aef0d7926c198b0df2aa3ddb5b539d3e89a70f/shtab-1.7.1-py3-none-any.whl (14 kB)

Collecting httpcore==1.* (from httpx->gradio<4.0.0,>=3.38.0->-r requirements.txt (line 7))

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/78/d4/e5d7e4f2174f8a4d63c8897d79eb8fe2503f7ecc03282fee1fa2719c2704/httpcore-1.0.5-py3-none-any.whl (77 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 77.9/77.9 kB 240.9 kB/s eta 0:00:00

Collecting mpmath>=0.19 (from sympy->torch>=1.13.1->-r requirements.txt (line 1))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/43/e3/7d92a15f894aa0c9c4b49b8ee9ac9850d6e63b03c9c32c0367a13ae62209/mpmath-1.3.0-py3-none-any.whl (536 kB)

Collecting jsonschema-specifications>=2023.03.6 (from jsonschema>=3.0->altair<6.0,>=4.2.0->gradio<4.0.0,>=3.38.0->-r requirements.txt (line 7))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/ee/07/44bd408781594c4d0a027666ef27fab1e441b109dc3b76b4f836f8fd04fe/jsonschema_specifications-2023.12.1-py3-none-any.whl (18 kB)

Collecting referencing>=0.28.4 (from jsonschema>=3.0->altair<6.0,>=4.2.0->gradio<4.0.0,>=3.38.0->-r requirements.txt (line 7))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/42/8e/ae1de7b12223986e949bdb886c004de7c304b6fa94de5b87c926c1099656/referencing-0.34.0-py3-none-any.whl (26 kB)

Collecting rpds-py>=0.7.1 (from jsonschema>=3.0->altair<6.0,>=4.2.0->gradio<4.0.0,>=3.38.0->-r requirements.txt (line 7))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/3f/17/abab0fc0ec544b89aa3de13fd9267721154a76a783279104c358e58341e2/rpds_py-0.18.0-cp310-none-win_amd64.whl (206 kB)

Collecting markdown-it-py>=2.2.0 (from rich>=11.1.0->tyro>=0.5.11->trl>=0.8.1->-r requirements.txt (line 6))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/42/d7/1ec15b46af6af88f19b8e5ffea08fa375d433c998b8a7639e76935c14f1f/markdown_it_py-3.0.0-py3-none-any.whl (87 kB)

Collecting pygments<3.0.0,>=2.13.0 (from rich>=11.1.0->tyro>=0.5.11->trl>=0.8.1->-r requirements.txt (line 6))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/97/9c/372fef8377a6e340b1704768d20daaded98bf13282b5327beb2e2fe2c7ef/pygments-2.17.2-py3-none-any.whl (1.2 MB)

Collecting mdurl~=0.1 (from markdown-it-py>=2.2.0->rich>=11.1.0->tyro>=0.5.11->trl>=0.8.1->-r requirements.txt (line 6))Using cached https://pypi.tuna.tsinghua.edu.cn/packages/b3/38/89ba8ad64ae25be8de66a6d463314cf1eb366222074cfda9ee839c56a4b4/mdurl-0.1.2-py3-none-any.whl (10.0 kB)

Installing collected packages: sentencepiece, pytz, pydub, mpmath, ffmpy, xxhash, websockets, urllib3, tzdata, typing-extensions, tqdm, toolz, termcolor, sympy, sniffio, six, shtab, semantic-version, safetensors, rpds-py, regex, pyyaml, python-multipart, pyparsing, pygments, pyarrow-hotfix, psutil, protobuf, pillow, packaging, orjson, numpy, networkx, multidict, mdurl, markupsafe, kiwisolver, importlib-resources, idna, h11, fsspec, frozenlist, fonttools, filelock, exceptiongroup, einops, docstring-parser, dill, cycler, click, charset-normalizer, certifi, attrs, async-timeout, annotated-types, aiofiles, yarl, uvicorn, scipy, requests, referencing, python-dateutil, pydantic-core, pyarrow, multiprocess, markdown-it-py, jinja2, httpcore, fire, contourpy, anyio, aiosignal, torch, starlette, rich, pydantic, pandas, matplotlib, jsonschema-specifications, huggingface-hub, httpx, aiohttp, tyro, tokenizers, sse-starlette, jsonschema, gradio-client, fastapi, bitsandbytes, accelerate, transformers, datasets, altair, trl, peft, gradio, galore-torch

ERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.

pylint 2.17.1 requires tomlkit>=0.10.1, which is not installed.

Successfully installed accelerate-0.28.0 aiofiles-23.2.1 aiohttp-3.9.3 aiosignal-1.3.1 altair-5.2.0 annotated-types-0.6.0 anyio-4.3.0 async-timeout-4.0.3 attrs-23.2.0 bitsandbytes-0.43.0 certifi-2024.2.2 charset-normalizer-3.3.2 click-8.1.7 contourpy-1.2.0 cycler-0.12.1 datasets-2.18.0 dill-0.3.8 docstring-parser-0.16 einops-0.7.0 exceptiongroup-1.2.0 fastapi-0.110.0 ffmpy-0.3.2 filelock-3.13.3 fire-0.6.0 fonttools-4.50.0 frozenlist-1.4.1 fsspec-2024.2.0 galore-torch-1.0 gradio-3.50.2 gradio-client-0.6.1 h11-0.14.0 httpcore-1.0.5 httpx-0.27.0 huggingface-hub-0.22.1 idna-3.6 importlib-resources-6.4.0 jinja2-3.1.3 jsonschema-4.21.1 jsonschema-specifications-2023.12.1 kiwisolver-1.4.5 markdown-it-py-3.0.0 markupsafe-2.1.5 matplotlib-3.8.3 mdurl-0.1.2 mpmath-1.3.0 multidict-6.0.5 multiprocess-0.70.16 networkx-3.2.1 numpy-1.26.4 orjson-3.10.0 packaging-24.0 pandas-2.2.1 peft-0.10.0 pillow-10.2.0 protobuf-5.26.1 psutil-5.9.8 pyarrow-15.0.2 pyarrow-hotfix-0.6 pydantic-2.6.4 pydantic-core-2.16.3 pydub-0.25.1 pygments-2.17.2 pyparsing-3.1.2 python-dateutil-2.9.0.post0 python-multipart-0.0.9 pytz-2024.1 pyyaml-6.0.1 referencing-0.34.0 regex-2023.12.25 requests-2.31.0 rich-13.7.1 rpds-py-0.18.0 safetensors-0.4.2 scipy-1.12.0 semantic-version-2.10.0 sentencepiece-0.2.0 shtab-1.7.1 six-1.16.0 sniffio-1.3.1 sse-starlette-2.0.0 starlette-0.36.3 sympy-1.12 termcolor-2.4.0 tokenizers-0.15.2 toolz-0.12.1 torch-2.2.2 tqdm-4.66.2 transformers-4.39.2 trl-0.8.1 typing-extensions-4.10.0 tyro-0.7.3 tzdata-2024.1 urllib3-2.2.1 uvicorn-0.29.0 websockets-11.0.3 xxhash-3.4.1 yarl-1.9.4
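One way to confirm that the install met the minimum versions shown in the log is to query the installed package metadata. The following is a rough sketch and not LLaMA-Factory's own tooling: the MINIMUMS table copies the lower bounds visible in the pip output (torch>=1.13.1, transformers>=4.37.2, datasets>=2.14.3, accelerate>=0.27.2, peft>=0.10.0, trl>=0.8.1), while the helper names and the simplified version parsing are assumptions made for illustration.

```python
import re
from importlib import metadata
from typing import Callable, Dict, Tuple

# Lower bounds as they appear in the pip log.
MINIMUMS: Dict[str, str] = {
    "torch": "1.13.1",
    "transformers": "4.37.2",
    "datasets": "2.14.3",
    "accelerate": "0.27.2",
    "peft": "0.10.0",
    "trl": "0.8.1",
}


def version_tuple(version: str) -> Tuple[int, ...]:
    """Simplified parser: keep only the leading numeric components.

    Drops local tags such as '+cu121' and stops at suffixes such as 'post0'.
    """
    public = version.split("+", 1)[0]
    parts = []
    for piece in public.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def check_minimums(
    minimums: Dict[str, str],
    get_version: Callable[[str], str] = metadata.version,
) -> Dict[str, str]:
    """Return 'ok', 'too old' or 'missing' for each package name."""
    report = {}
    for name, minimum in minimums.items():
        try:
            installed = get_version(name)
        except metadata.PackageNotFoundError:
            report[name] = "missing"
            continue
        ok = version_tuple(installed) >= version_tuple(minimum)
        report[name] = "ok" if ok else "too old"
    return report


if __name__ == "__main__":
    for package, status in check_minimums(MINIMUMS).items():
        print(f"{package}: {status}")
```

Run it inside the llm conda environment so that it inspects the same site-packages directory pip installed into.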

(llm) PS E:\llm-train\LLaMA-Factory>

To accelerate training and inference with CUDA (GPU), install the CUDA build of torch; without it, torch runs on the CPU. Install it with: pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121

(llm) PS E:\llm-train\LLaMA-Factory> pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121

Looking in indexes: https://download.pytorch.org/whl/cu121

Requirement already satisfied: torch in c:\users\administrator\miniconda3\envs\llm\lib\site-packages (2.2.2)

Collecting torchvision

Using cached https://download.pytorch.org/whl/cu121/torchvision-0.17.2%2Bcu121-cp310-cp310-win_amd64.whl (5.7 MB)

Collecting torchaudio

Using cached https://download.pytorch.org/whl/cu121/torchaudio-2.2.2%2Bcu121-cp310-cp310-win_amd64.whl (4.1 MB)

Requirement already satisfied: filelock in c:\users\administrator\miniconda3\envs\llm\lib\site-packages (from torch) (3.13.3)

Requirement already satisfied: typing-extensions>=4.8.0 in c:\users\administrator\miniconda3\envs\llm\lib\site-packages (from torch) (4.10.0)

Requirement already satisfied: sympy in c:\users\administrator\miniconda3\envs\llm\lib\site-packages (from torch) (1.12)

Requirement already satisfied: networkx in c:\users\administrator\miniconda3\envs\llm\lib\site-packages (from torch) (3.2.1)

Requirement already satisfied: jinja2 in c:\users\administrator\miniconda3\envs\llm\lib\site-packages (from torch) (3.1.3)

Requirement already satisfied: fsspec in c:\users\administrator\miniconda3\envs\llm\lib\site-packages (from torch) (2024.2.0)

Requirement already satisfied: numpy in c:\users\administrator\miniconda3\envs\llm\lib\site-packages (from torchvision) (1.26.4)

Collecting torch

Using cached https://download.pytorch.org/whl/cu121/torch-2.2.2%2Bcu121-cp310-cp310-win_amd64.whl (2454.8 MB)

Requirement already satisfied: pillow!=8.3.*,>=5.3.0 in c:\users\administrator\miniconda3\envs\llm\lib\site-packages (from torchvision) (10.2.0)

Requirement already satisfied: MarkupSafe>=2.0 in c:\users\administrator\miniconda3\envs\llm\lib\site-packages (from jinja2->torch) (2.1.5)

Requirement already satisfied: mpmath>=0.19 in c:\users\administrator\miniconda3\envs\llm\lib\site-packages (from sympy->torch) (1.3.0)

Installing collected packages: torch, torchvision, torchaudio

Attempting uninstall: torch

Found existing installation: torch 2.2.2

Uninstalling torch-2.2.2:

Successfully uninstalled torch-2.2.2

Successfully installed torch-2.2.2+cu121 torchaudio-2.2.2+cu121 torchvision-0.17.2+cu121
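After swapping in the CUDA wheel, it is worth confirming that torch actually sees the GPU. The log shows why: the first pip run installed torch-2.2.2 without a CUDA tag, and only the second run replaced it with torch-2.2.2+cu121. Below is a minimal sketch, assuming the environment set up in this guide. The helper names cuda_tag and describe_torch are hypothetical, while torch.__version__, torch.version.cuda, torch.cuda.is_available() and torch.cuda.get_device_name() are standard PyTorch attributes.

```python
from typing import Optional


def cuda_tag(torch_version: str) -> Optional[str]:
    """Return the CUDA tag of a torch version string, e.g. 'cu121', else None."""
    _, _, local = torch_version.partition("+")
    return local if local.startswith("cu") else None


def describe_torch() -> dict:
    """Collect basic facts about the installed torch build."""
    try:
        import torch
    except ImportError:
        return {"installed": False}
    info = {
        "installed": True,
        "version": torch.__version__,
        "cuda_tag": cuda_tag(torch.__version__),
        "built_with_cuda": torch.version.cuda,  # None on CPU-only builds
        "cuda_available": torch.cuda.is_available(),
    }
    if info["cuda_available"]:
        info["device"] = torch.cuda.get_device_name(0)
    return info


if __name__ == "__main__":
    for key, value in describe_torch().items():
        print(f"{key}: {value}")
```

If cuda_available comes back False even though the version carries a +cu121 tag, re-checking the nvidia-smi output from the preparation step is a reasonable next move.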

(llm) PS E:\llm-train\LLaMA-Factory>

Prepare the dataset

1. Create the file LLaMA-Factory\data\chatglm3_zh.json and copy in the content below. It serves as the training and test data.

[{"instruction": "","input": "安妮","output": "女仆。 精灵族\n声音温柔娇媚,嗲音。\n年龄:26岁"},{"instruction": "","input": "奥利维亚","output": "元气少女,中气十足。\n活泼可爱,心直口快。\n年龄:16岁 矮人族"},{"instruction": "","input": "维京人甲-\n维京战士","output": "野蛮无礼的维京人。\n市井气息,有些无赖。\n年龄:38岁。"},{"instruction": "","input": "维京人乙-维京射手","output": "龙套角色。\n女性\n维京人。"},{"instruction": "","input": "英灵-维京战神","output": "维京人首领。\n王者风范,霸气十足。\n声如洪钟,气势如虹。\n年龄:55岁"},{"instruction": "","input": "魔武·凛冬刃","output": "魔武。\n物品NPC"},{"instruction": "","input": "战斗教官","output": "人类。\n粗犷的女性战士,中性范。\n年龄:35岁"},{"instruction": "","input": "雅妮拉","output": "青年女性,声音甜美。\n骄横,喜欢冷嘲热讽。\n\n主角团之一。"},{"instruction": "","input": "公墓守卫队长","output": "龙套角色。\n男性,骑士团战士。"},{"instruction": "","input": "邪恶魔法师/黑巫师","output": "人类。\n丧失理智的人类法师。\n癫狂,变态。\n\n年龄:33岁"},{"instruction": "","input": "魔物-赤炎妖","output": "魔物。\n嗜血恐怖的妖魔。"},{"instruction": "","input": "英灵-裁决骑士","output": "逝去的英雄之魂。\n女性。\n言辞铿锵有力,正义之士。"},{"instruction": "","input": "布鲁克","output": "人类贵族公子,商人。\n20岁。\n天真傲慢,蛮横自大,骄傲易怒。但实际上非常弱鸡,未涉世事。\n毫无社会常识的公子哥。\n"},{"instruction": "","input": "地精仆从","output": "布鲁克的仆人。\n地精。\n胆小怕事,说话结巴。"},{"instruction": "","input": "嚣张佣兵","output": "雷根佣兵团的佣兵,狗仗人势。"},{"instruction": "","input": "神秘女神","output": "女神,端庄温柔,持稳宁静。\n不紧不慢,慢条斯理。\n年龄:20岁"},{"instruction": "","input": "托托","output": "酒馆老板,人类\n八面玲珑,诙谐幽默。\n言谈举止进退有度,侃侃而谈。\n年龄:45岁"},{"instruction": "","input": "酒馆女仆-露娜","output": "温柔可人的女仆。"},{"instruction": "","input": "狡诈魔魂","output": "人类。-灵体\n阴险狡诈的腹黑女性。\n得势时飞扬跋扈,弱势时楚楚可怜。\n年龄:29岁"},{"instruction": "","input": "火元素","output": "元素生命。\n魔物。\n龙套角色。"},{"instruction": "","input": "商会管事/商队运输理事","output": "龙套角色,均为年轻女性。"},{"instruction": "","input": "安吉尔","output": "女骑士,中性声线。\n热诚坦率,刚正不阿。"},{"instruction": "","input": "托克","output": "龙套角色。\n地精商贩 地精种族\n年龄:30岁\n青年商贩,有一点怪物腔调。"},{"instruction": "","input": "王国守卫/铁卫禁军","output": "龙套角色,普通男性战士。"},{"instruction": "","input": "爱德华","output": "人类,狮心骑士团中的精英骑士\n青壮男性,中气十足,正义之士。\n年龄:35岁"},{"instruction": "","input": "骑士团长","output": 
"血族\n大家风范,中性风。\n雷厉风行,刚正不阿。\n年龄:38岁"},{"instruction": "","input": "主教","output": "人类\n圣光教会主教。\n传令官,声音清晰中正,官方强调。\n年龄:30岁"},{"instruction": "","input": "女王","output": "人类。\n年轻而阴郁的贵族少女。\n郁郁寡欢。\n\n年龄:15岁"},{"instruction": "","input": "艾伦","output": "人类佣兵。——剧情主角之一。\n20岁。\n青年才俊,隐藏身份-皇室王子。\n绝顶聪明、攻于算计、略显市侩、巧于辞令。\n言语之中,充满了少年英气,但偶尔会有些轻浮。\n\n人物参考:《雪中悍刀行》徐凤年\n"},{"instruction": "","input": "雅各布","output": "半神。\n狼人始祖。(中年男子)\n傲慢无礼,嚣张跋扈,却又不失大家风范。\n\n\n人物参考:网易阴阳师里的八岐大蛇。\n"},{"instruction": "","input": "佣兵首领威尔曼","output": "人类佣兵。\n35岁。\n中年男子,沉稳持重,波澜不惊。\n"},{"instruction": "","input": "食人魔","output": "食人怪物,半兽人腔调,残暴贪婪。\n年龄:150岁"},{"instruction": "","input": "兽人首领","output": "兽人首领。\n年龄未知。(相当于成年男性)\n蛮横残暴,对人类等弱小种族表示轻蔑和无视。"},{"instruction": "","input": "霍华德","output": "半神。\n血族始祖。(神魔大陆之中非常重要的种族)\n沉默寡言,沉稳持重,有一种成熟大叔的依赖感。\n\n例子:《秦时明月》中的盖聂。"},{"instruction": "","input": "凯尼","output": "骄横佣兵,满不在乎,阴险狡诈。"},{"instruction": "","input": "西蒙斯","output": "佣兵战士,中年男性,外强中干。"},{"instruction": "","input": "迪比斯","output": "魔法师佣兵,龙套角色。"},{"instruction": "","input": "兰希亚","output": "衰弱的女神,有气无力。\n气若游丝,声音迟缓。\n年龄:45岁"},{"instruction": "","input": "树灵龙","output": "古龙级别。\n\n可类比《霍比特人》里的恶龙史矛革"},{"instruction": "","input": "世界之母","output": "世界之母,声音沉稳而空灵,声音可带回音。\n\n可参考FF14中的水晶之母,海德林。"},{"instruction": "","input": "树灵精灵","output": "较弱可爱,声线可低幼一些,软萌音。\n楚楚可怜。"},{"instruction": "","input": "狮心骑士维达","output": "外表为中老年骑士。\n老年人特有的亲和力。\n深沉慈祥,智者形象。"},{"instruction": "","input": "亚杰拉","output": "龙套角色。\n初级佣兵。\n少女。\n战斗力低下。"},{"instruction": "","input": "查克·肖特奇","output": "龙套角色。\n初级佣兵。\n青年男性。\n战斗力低下。"},{"instruction": "","input": "布里克","output": "人族,中年男性。\n狮心高阶骑士。"},{"instruction": "","input": "雷切尔","output": "人族,中年女性。\n狮心高阶骑士。"},{"instruction": "","input": "戴安娜","output": "人族,年轻女性。\n狮心高阶骑士。"},{"instruction": "","input": "巴伦德","output": "矮人,中年男性。\n狮心高阶骑士。"},{"instruction": "","input": "龙十八","output": "禅国青年才俊。\n好风雅,外在散漫放浪。\n实则极有"},{"instruction": "","input": "禅国商贩","output": "龙套角色。\n外表看上去老实巴交,实则内心满是算计的中年男子。\n"},{"instruction": 
"","input": "琳达·安吉","output": "龙套角色。\n禅国年轻女子。"},{"instruction": "","input": "芙娜德","output": "龙套角色。\n禅国年轻女子。"},{"instruction": "","input": "闹事者","output": "龙套角色。\n禅国年轻男子,脾气暴躁,对商会有很深的怨念。"},{"instruction": "","input": "商会守卫","output": "龙套角色。\n中年男性\n禅国商会的守卫。"},{"instruction": "","input": "穷苦孩童","output": "龙套角色。\n少年男孩。\n生活潦倒,贫穷可怜。"},{"instruction": "","input": "龙神傀儡/龙神","output": "禅国幕后的统治者。\n实际上本身是利用死者捏造的傀儡,替真正的龙神-古龙青办事。\n为人腹黑阴险,却又气度非凡。"},{"instruction": "","input": "佛陀八","output": "寿命不可知。\n龙十八以及数代会长的老师。\n暗中与龙神勾结,获得不死之身,震慑禅国的朝纲。\n常年居住于观星阁。"},{"instruction": "","input": "古龙青","output": "龙神真身,在漫长的岁月当中,生命力之间衰弱,依靠着吞噬活人续命。\n从曾经禅国的守护者,成了禅国逐渐毁灭的黑洞。"},{"instruction": "","input": "祭天坛护院/祭天坛守卫","output": "龙套角色。\n禅国祭天坛的守护者。"}

]
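A hand-edited JSON file of this size is easy to break with a missing comma or quote, so it can be worth checking before training. The following is a minimal sketch, not part of LLaMA-Factory: the helper names `validate_records` and `validate_file` are hypothetical, and the check assumes every record should carry the three string fields used in the file above (`instruction`, `input`, `output`).

```python
import json
from pathlib import Path

# Fields used by every record in chatglm3_zh.json (assumption: all three are expected).
REQUIRED_KEYS = ("instruction", "input", "output")


def validate_records(records):
    """Return a list of human-readable problems; an empty list means no problem was found."""
    if not isinstance(records, list):
        return ["top-level JSON value must be a list of records"]
    problems = []
    for i, rec in enumerate(records):
        if not isinstance(rec, dict):
            problems.append(f"record {i}: not an object")
            continue
        for key in REQUIRED_KEYS:
            if key not in rec:
                problems.append(f"record {i}: missing key {key!r}")
            elif not isinstance(rec[key], str):
                problems.append(f"record {i}: {key!r} is not a string")
        # An empty target gives the model nothing to learn from.
        if isinstance(rec.get("output"), str) and not rec["output"].strip():
            problems.append(f"record {i}: empty output")
    return problems


def validate_file(path):
    """Load a dataset file as UTF-8 JSON and validate its records."""
    records = json.loads(Path(path).read_text(encoding="utf-8"))
    return validate_records(records)
```

Calling `validate_file(r"E:\llm-train\LLaMA-Factory\data\chatglm3_zh.json")` should return an empty list if the file parses and every record has the expected fields.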

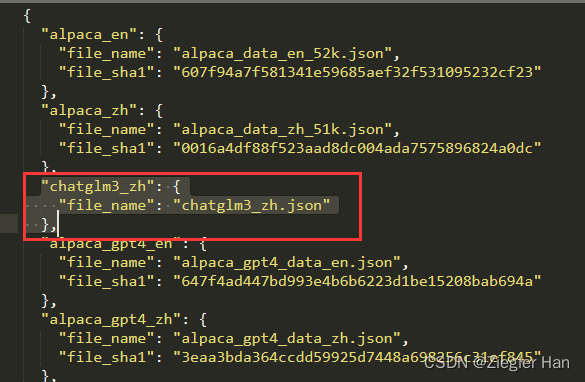

2. Edit LLaMA-Factory\data\dataset_info.json and register the test dataset in this configuration file.

..."chatglm3_zh": {"file_name": "chatglm3_zh.json"},...
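The same entry can also be added with a short script instead of editing the file by hand. This is a sketch under the assumption that dataset_info.json is a single JSON object keyed by dataset name, as the snippet above suggests; `register_dataset` is a hypothetical helper, not a LLaMA-Factory function.

```python
import json
from pathlib import Path


def register_dataset(info_path, name, file_name):
    """Add {name: {"file_name": file_name}} to dataset_info.json, keeping existing entries."""
    info_path = Path(info_path)
    info = json.loads(info_path.read_text(encoding="utf-8"))
    info[name] = {"file_name": file_name}
    # ensure_ascii=False keeps any non-ASCII text in the file readable.
    info_path.write_text(
        json.dumps(info, ensure_ascii=False, indent=2), encoding="utf-8"
    )
    return info
```

For this tutorial the call would be `register_dataset(r"E:\llm-train\LLaMA-Factory\data\dataset_info.json", "chatglm3_zh", "chatglm3_zh.json")`. Note that rewriting the file this way normalizes its indentation.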

Fine-tuning

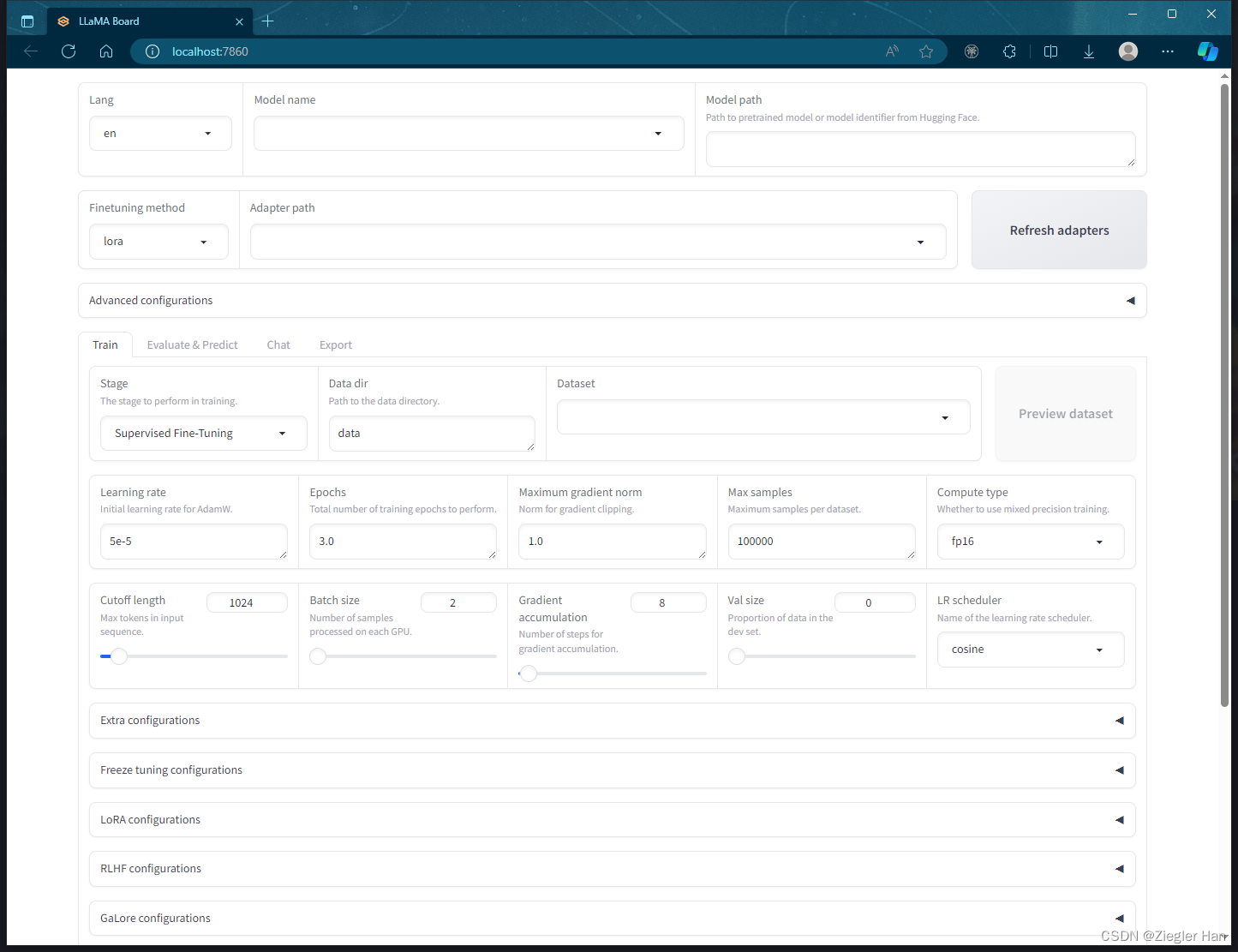

1. Launch the web UI for training

(llm) PS E:\llm-train\LLaMA-Factory> $env:CUDA_VISIBLE_DEVICES = "0"

(llm) PS E:\llm-train\LLaMA-Factory> python src/train_web.py

Running on local URL: http://0.0.0.0:7860

To create a public link, set `share=True` in `launch()`.

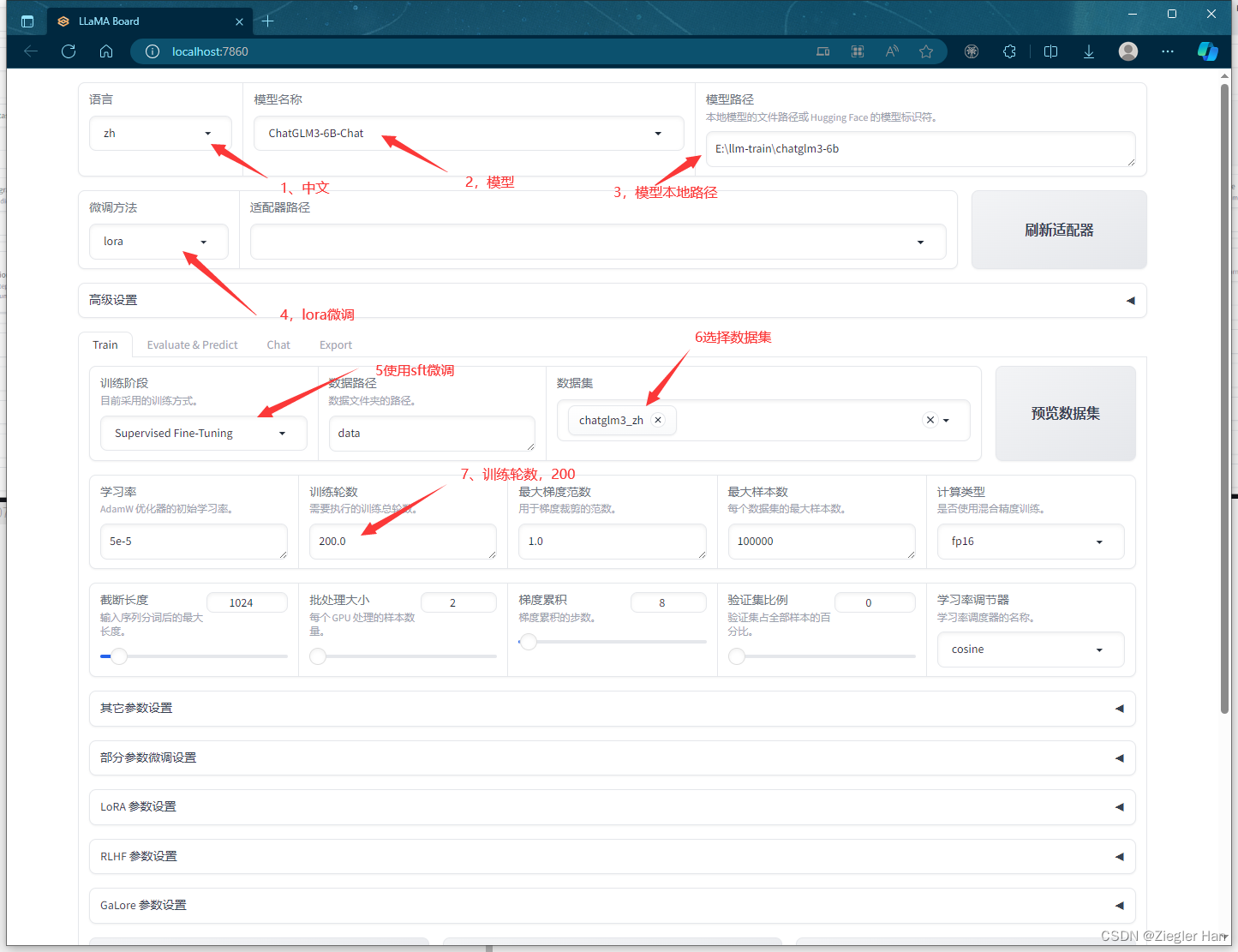

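2. Adjust the configuration. Open http://localhost:7860/ in a browser.

- Language: Chinese

- Model name: ChatGLM3-6B-Chat

- Model path: the local path of the ChatGLM3-6B model downloaded from Hugging Face earlier

- Fine-tuning method: lora

- Training stage: sft

- Dataset: the test dataset added above

- Epochs: 200. The dataset is small, so 200 epochs are used to make the effect of fine-tuning visible.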

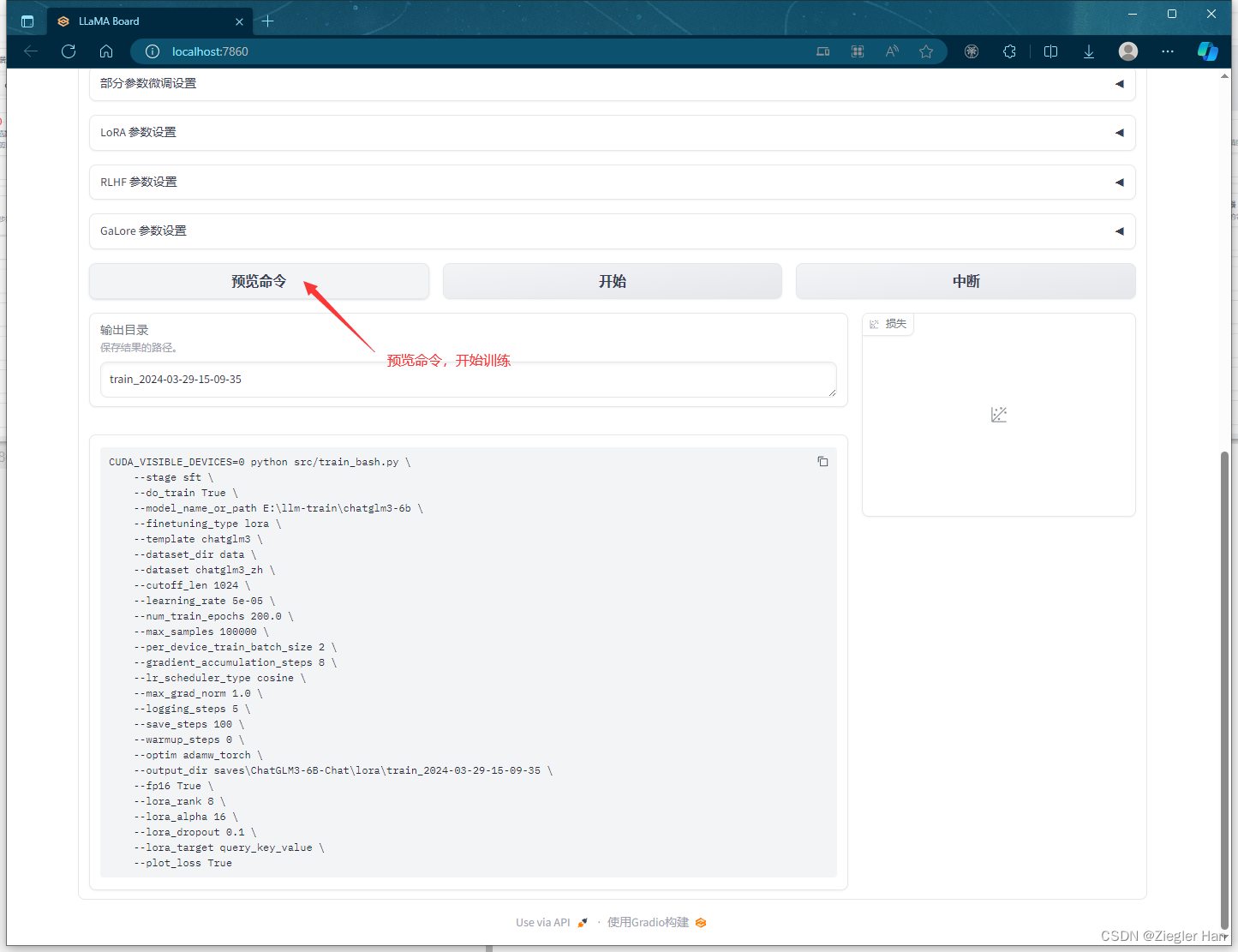

Preview command

CUDA_VISIBLE_DEVICES=0 python src/train_bash.py \
    --stage sft \
    --do_train True \
    --model_name_or_path E:\llm-train\chatglm3-6b \
    --finetuning_type lora \
    --template chatglm3 \
    --dataset_dir data \
    --dataset chatglm3_zh \
    --cutoff_len 1024 \
    --learning_rate 5e-05 \
    --num_train_epochs 200.0 \
    --max_samples 100000 \
    --per_device_train_batch_size 2 \
    --gradient_accumulation_steps 8 \
    --lr_scheduler_type cosine \
    --max_grad_norm 1.0 \
    --logging_steps 5 \
    --save_steps 100 \
    --warmup_steps 0 \
    --optim adamw_torch \
    --output_dir saves\ChatGLM3-6B-Chat\lora\train_2024-03-29-15-09-35 \
    --fp16 True \
    --lora_rank 8 \
    --lora_alpha 16 \
    --lora_dropout 0.1 \
    --lora_target query_key_value \
    --plot_loss True
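The preview command is written in POSIX shell syntax: the `CUDA_VISIBLE_DEVICES=0` prefix and the backslash line continuations are not accepted by PowerShell. One way to run the same training outside the web UI on Windows is to build the argument list in Python. The sketch below only reuses the flags and values shown in the preview; `build_command` and `run_training` are hypothetical helper names, and the script is assumed to be started from the LLaMA-Factory directory.

```python
import os
import subprocess
import sys

# Flags and values copied from the preview command.
TRAIN_ARGS = {
    "stage": "sft",
    "do_train": True,
    "model_name_or_path": r"E:\llm-train\chatglm3-6b",
    "finetuning_type": "lora",
    "template": "chatglm3",
    "dataset_dir": "data",
    "dataset": "chatglm3_zh",
    "cutoff_len": 1024,
    "learning_rate": 5e-05,
    "num_train_epochs": 200.0,
    "max_samples": 100000,
    "per_device_train_batch_size": 2,
    "gradient_accumulation_steps": 8,
    "lr_scheduler_type": "cosine",
    "max_grad_norm": 1.0,
    "logging_steps": 5,
    "save_steps": 100,
    "warmup_steps": 0,
    "optim": "adamw_torch",
    "output_dir": r"saves\ChatGLM3-6B-Chat\lora\train_2024-03-29-15-09-35",
    "fp16": True,
    "lora_rank": 8,
    "lora_alpha": 16,
    "lora_dropout": 0.1,
    "lora_target": "query_key_value",
    "plot_loss": True,
}


def build_command(args, script="src/train_bash.py"):
    """Turn a {flag: value} mapping into an argv list for the current interpreter."""
    cmd = [sys.executable, script]
    for key, value in args.items():
        cmd += [f"--{key}", str(value)]
    return cmd


def run_training(args, gpu="0"):
    """Run the training script with CUDA_VISIBLE_DEVICES set only for the child process."""
    env = dict(os.environ, CUDA_VISIBLE_DEVICES=gpu)
    return subprocess.run(build_command(args), env=env, check=True)
```

Calling `run_training(TRAIN_ARGS)` would start the same job as the preview command. Passing the arguments as a list avoids shell quoting problems with the Windows paths.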

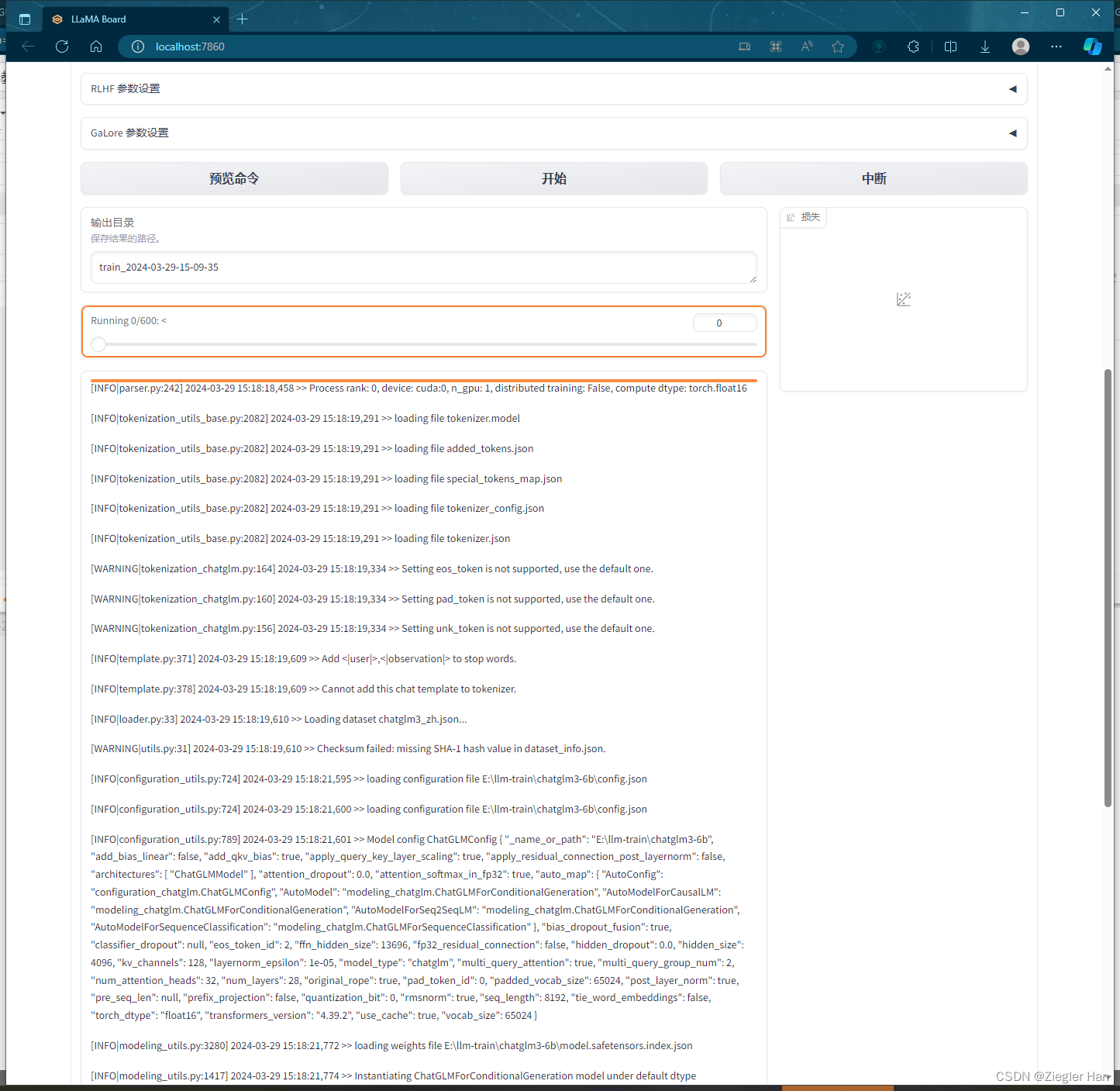

3. Start training

[INFO|parser.py:242] 2024-03-29 15:18:18,458 >> Process rank: 0, device: cuda:0, n_gpu: 1, distributed training: False, compute dtype: torch.float16

[INFO|tokenization_utils_base.py:2082] 2024-03-29 15:18:19,291 >> loading file tokenizer.model

[INFO|tokenization_utils_base.py:2082] 2024-03-29 15:18:19,291 >> loading file added_tokens.json

[INFO|tokenization_utils_base.py:2082] 2024-03-29 15:18:19,291 >> loading file special_tokens_map.json

[INFO|tokenization_utils_base.py:2082] 2024-03-29 15:18:19,291 >> loading file tokenizer_config.json

[INFO|tokenization_utils_base.py:2082] 2024-03-29 15:18:19,291 >> loading file tokenizer.json

[WARNING|tokenization_chatglm.py:164] 2024-03-29 15:18:19,334 >> Setting eos_token is not supported, use the default one.

[WARNING|tokenization_chatglm.py:160] 2024-03-29 15:18:19,334 >> Setting pad_token is not supported, use the default one.

[WARNING|tokenization_chatglm.py:156] 2024-03-29 15:18:19,334 >> Setting unk_token is not supported, use the default one.

[INFO|template.py:371] 2024-03-29 15:18:19,609 >> Add <|user|>,<|observation|> to stop words.

[INFO|template.py:378] 2024-03-29 15:18:19,609 >> Cannot add this chat template to tokenizer.

[INFO|loader.py:33] 2024-03-29 15:18:19,610 >> Loading dataset chatglm3_zh.json...

[WARNING|utils.py:31] 2024-03-29 15:18:19,610 >> Checksum failed: missing SHA-1 hash value in dataset_info.json.

[INFO|configuration_utils.py:724] 2024-03-29 15:18:21,595 >> loading configuration file E:\llm-train\chatglm3-6b\config.json

[INFO|configuration_utils.py:724] 2024-03-29 15:18:21,600 >> loading configuration file E:\llm-train\chatglm3-6b\config.json

[INFO|configuration_utils.py:789] 2024-03-29 15:18:21,601 >> Model config ChatGLMConfig { "_name_or_path": "E:\llm-train\chatglm3-6b", "add_bias_linear": false, "add_qkv_bias": true, "apply_query_key_layer_scaling": true, "apply_residual_connection_post_layernorm": false, "architectures": [ "ChatGLMModel" ], "attention_dropout": 0.0, "attention_softmax_in_fp32": true, "auto_map": { "AutoConfig": "configuration_chatglm.ChatGLMConfig", "AutoModel": "modeling_chatglm.ChatGLMForConditionalGeneration", "AutoModelForCausalLM": "modeling_chatglm.ChatGLMForConditionalGeneration", "AutoModelForSeq2SeqLM": "modeling_chatglm.ChatGLMForConditionalGeneration", "AutoModelForSequenceClassification": "modeling_chatglm.ChatGLMForSequenceClassification" }, "bias_dropout_fusion": true, "classifier_dropout": null, "eos_token_id": 2, "ffn_hidden_size": 13696, "fp32_residual_connection": false, "hidden_dropout": 0.0, "hidden_size": 4096, "kv_channels": 128, "layernorm_epsilon": 1e-05, "model_type": "chatglm", "multi_query_attention": true, "multi_query_group_num": 2, "num_attention_heads": 32, "num_layers": 28, "original_rope": true, "pad_token_id": 0, "padded_vocab_size": 65024, "post_layer_norm": true, "pre_seq_len": null, "prefix_projection": false, "quantization_bit": 0, "rmsnorm": true, "seq_length": 8192, "tie_word_embeddings": false, "torch_dtype": "float16", "transformers_version": "4.39.2", "use_cache": true, "vocab_size": 65024 }

[INFO|modeling_utils.py:3280] 2024-03-29 15:18:21,772 >> loading weights file E:\llm-train\chatglm3-6b\model.safetensors.index.json

[INFO|modeling_utils.py:1417] 2024-03-29 15:18:21,774 >> Instantiating ChatGLMForConditionalGeneration model under default dtype torch.float16.

[INFO|configuration_utils.py:928] 2024-03-29 15:18:21,775 >> Generate config GenerationConfig { "eos_token_id": 2, "pad_token_id": 0 }

[INFO|modeling_utils.py:4024] 2024-03-29 15:18:37,990 >> All model checkpoint weights were used when initializing ChatGLMForConditionalGeneration.

[INFO|modeling_utils.py:4032] 2024-03-29 15:18:37,990 >> All the weights of ChatGLMForConditionalGeneration were initialized from the model checkpoint at E:\llm-train\chatglm3-6b.

If your task is similar to the task the model of the checkpoint was trained on, you can already use ChatGLMForConditionalGeneration for predictions without further training.

[INFO|modeling_utils.py:3573] 2024-03-29 15:18:37,999 >> Generation config file not found, using a generation config created from the model config.

[INFO|patcher.py:259] 2024-03-29 15:18:38,004 >> Gradient checkpointing enabled.

[INFO|adapter.py:90] 2024-03-29 15:18:38,004 >> Fine-tuning method: LoRA

[INFO|loader.py:126] 2024-03-29 15:18:38,190 >> trainable params: 1949696 || all params: 6245533696 || trainable%: 0.0312

[INFO|trainer.py:607] 2024-03-29 15:18:38,204 >> Using auto half precision backend

[INFO|trainer.py:1969] 2024-03-29 15:18:38,350 >> ***** Running training *****

[INFO|trainer.py:1970] 2024-03-29 15:18:38,351 >> Num examples = 59

[INFO|trainer.py:1971] 2024-03-29 15:18:38,351 >> Num Epochs = 200

[INFO|trainer.py:1972] 2024-03-29 15:18:38,351 >> Instantaneous batch size per device = 2

[INFO|trainer.py:1975] 2024-03-29 15:18:38,351 >> Total train batch size (w. parallel, distributed & accumulation) = 16

[INFO|trainer.py:1976] 2024-03-29 15:18:38,351 >> Gradient Accumulation steps = 8

[INFO|trainer.py:1977] 2024-03-29 15:18:38,352 >> Total optimization steps = 600

[INFO|trainer.py:1978] 2024-03-29 15:18:38,354 >> Number of trainable parameters = 1,949,696
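Two numbers in this log can be reproduced by hand, which is a useful sanity check on the configuration. The sketch below rests on two assumptions that are not stated in the log: that the trainer drops the incomplete accumulation group at the end of each epoch, and that the adapted `query_key_value` layer maps `hidden_size` inputs to `hidden_size + 2 * multi_query_group_num * kv_channels` outputs. Both function names are hypothetical. Under these assumptions the results match the logged values.

```python
import math


def total_optimization_steps(num_examples, per_device_batch_size,
                             grad_accum_steps, num_epochs, num_devices=1):
    """Optimizer updates over the whole run, assuming partial accumulation groups are dropped."""
    batches_per_epoch = math.ceil(num_examples / (per_device_batch_size * num_devices))
    updates_per_epoch = max(batches_per_epoch // grad_accum_steps, 1)
    return math.ceil(num_epochs * updates_per_epoch)


def lora_trainable_params(in_features, out_features, rank, num_layers):
    """LoRA adds A (rank x in) and B (out x rank) to one linear layer in each transformer layer."""
    return rank * (in_features + out_features) * num_layers


# Values taken from the model config and trainer output in the log.
hidden_size = 4096
kv_channels = 128
multi_query_group_num = 2
# Assumed output width of the fused query/key/value projection.
qkv_out = hidden_size + 2 * multi_query_group_num * kv_channels

steps = total_optimization_steps(num_examples=59, per_device_batch_size=2,
                                 grad_accum_steps=8, num_epochs=200)
params = lora_trainable_params(hidden_size, qkv_out, rank=8, num_layers=28)

print(steps)                                # 600
print(params)                               # 1949696
print(round(100 * params / 6245533696, 4))  # 0.0312
```

With 59 examples and a per-device batch size of 2 there are 30 batches per epoch, which gives 3 optimizer updates per epoch at 8 accumulation steps and 600 updates over 200 epochs.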

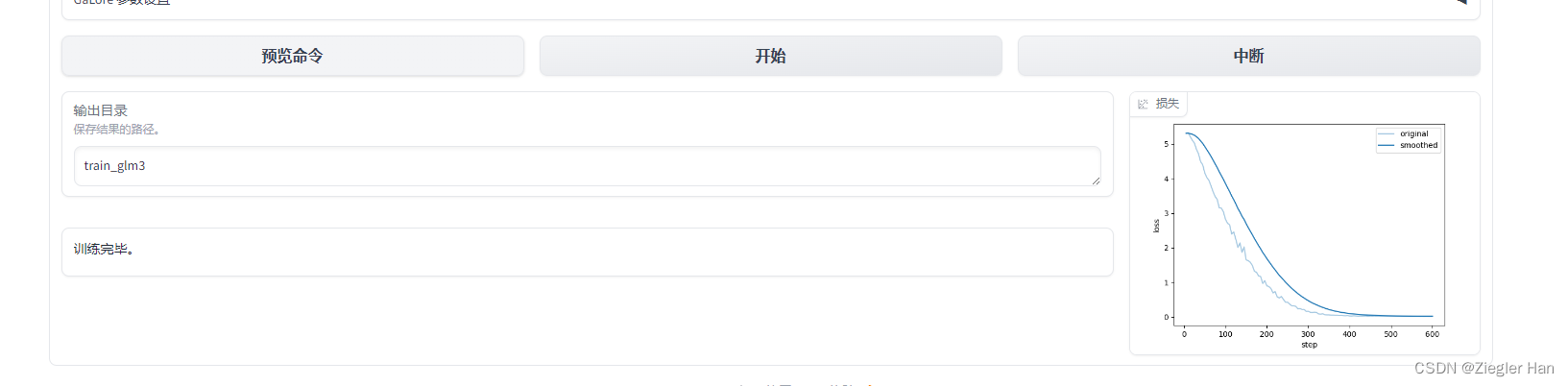

4. Training complete

Export the model: the adapter path must point to the last checkpoint saved during training.
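Checkpoints are written as `checkpoint-N` subdirectories of the output directory, where N is the step count, so the last one is the directory with the largest N. A minimal sketch for finding it follows; `latest_checkpoint` is a hypothetical helper, and it compares the step numbers numerically because a plain string sort would place `checkpoint-90` after `checkpoint-200`.

```python
import re
from pathlib import Path

_CHECKPOINT_RE = re.compile(r"^checkpoint-(\d+)$")


def latest_checkpoint(output_dir):
    """Return the checkpoint-N subdirectory with the largest N, or None if there is none."""
    best_step, best_path = -1, None
    for child in Path(output_dir).iterdir():
        match = _CHECKPOINT_RE.match(child.name)
        if match and child.is_dir() and int(match.group(1)) > best_step:
            best_step, best_path = int(match.group(1)), child
    return best_path
```

The returned path can be passed as `--adapter_name_or_path` in the export command. The Transformers library also ships a similar utility, `transformers.trainer_utils.get_last_checkpoint`, which may be preferable if the library is already imported.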

(llm) PS E:\llm-train\LLaMA-Factory> python src/export_model.py --model_name_or_path E:\\llm-train\\chatglm3-6b --adapter_name_or_path E:\\llm-train\\LLaMA-Factory\\saves\\ChatGLM3-6B-Chat\\lora\\train_glm3\\checkpoint-200 --template default --finetuning_type lora --export_dir E:\\llm-train\\chatglm3-trained --export_size 2 --export_legacy_format False

[INFO|tokenization_utils_base.py:2082] 2024-03-30 17:18:44,968 >> loading file tokenizer.model

[INFO|tokenization_utils_base.py:2082] 2024-03-30 17:18:44,968 >> loading file added_tokens.json

[INFO|tokenization_utils_base.py:2082] 2024-03-30 17:18:44,968 >> loading file special_tokens_map.json

[INFO|tokenization_utils_base.py:2082] 2024-03-30 17:18:44,969 >> loading file tokenizer_config.json

[INFO|tokenization_utils_base.py:2082] 2024-03-30 17:18:44,969 >> loading file tokenizer.json

Setting eos_token is not supported, use the default one.

Setting pad_token is not supported, use the default one.

Setting unk_token is not supported, use the default one.

[INFO|configuration_utils.py:724] 2024-03-30 17:18:45,127 >> loading configuration file E:\\llm-train\\chatglm3-6b\config.json

[INFO|configuration_utils.py:724] 2024-03-30 17:18:45,132 >> loading configuration file E:\\llm-train\\chatglm3-6b\config.json

[INFO|configuration_utils.py:789] 2024-03-30 17:18:45,133 >> Model config ChatGLMConfig {"_name_or_path": "E:\\\\llm-train\\\\chatglm3-6b","add_bias_linear": false,"add_qkv_bias": true,"apply_query_key_layer_scaling": true,"apply_residual_connection_post_layernorm": false,"architectures": ["ChatGLMModel"],"attention_dropout": 0.0,"attention_softmax_in_fp32": true,"auto_map": {"AutoConfig": "configuration_chatglm.ChatGLMConfig","AutoModel": "modeling_chatglm.ChatGLMForConditionalGeneration","AutoModelForCausalLM": "modeling_chatglm.ChatGLMForConditionalGeneration","AutoModelForSeq2SeqLM": "modeling_chatglm.ChatGLMForConditionalGeneration","AutoModelForSequenceClassification": "modeling_chatglm.ChatGLMForSequenceClassification"},"bias_dropout_fusion": true,"classifier_dropout": null,"eos_token_id": 2,"ffn_hidden_size": 13696,"fp32_residual_connection": false,"hidden_dropout": 0.0,"hidden_size": 4096,"kv_channels": 128,"layernorm_epsilon": 1e-05,"model_type": "chatglm","multi_query_attention": true,"multi_query_group_num": 2,"num_attention_heads": 32,"num_layers": 28,"original_rope": true,"pad_token_id": 0,"padded_vocab_size": 65024,"post_layer_norm": true,"pre_seq_len": null,"prefix_projection": false,"quantization_bit": 0,"rmsnorm": true,"seq_length": 8192,"tie_word_embeddings": false,"torch_dtype": "float16","transformers_version": "4.39.2","use_cache": true,"vocab_size": 65024

}

03/30/2024 17:18:45 - INFO - llmtuner.model.patcher - Using KV cache for faster generation.

[INFO|modeling_utils.py:3280] 2024-03-30 17:18:45,287 >> loading weights file E:\\llm-train\\chatglm3-6b\model.safetensors.index.json

[INFO|modeling_utils.py:1417] 2024-03-30 17:18:45,288 >> Instantiating ChatGLMForConditionalGeneration model under default dtype torch.float16.

[INFO|configuration_utils.py:928] 2024-03-30 17:18:45,289 >> Generate config GenerationConfig {"eos_token_id": 2,"pad_token_id": 0

}Loading checkpoint shards: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 7/7 [00:04<00:00, 1.60it/s]

[INFO|modeling_utils.py:4024] 2024-03-30 17:18:49,890 >> All model checkpoint weights were used when initializing ChatGLMForConditionalGeneration.

[INFO|modeling_utils.py:4032] 2024-03-30 17:18:49,890 >> All the weights of ChatGLMForConditionalGeneration were initialized from the model checkpoint at E:\\llm-train\\chatglm3-6b.

If your task is similar to the task the model of the checkpoint was trained on, you can already use ChatGLMForConditionalGeneration for predictions without further training.

[INFO|modeling_utils.py:3573] 2024-03-30 17:18:49,895 >> Generation config file not found, using a generation config created from the model config.

WARNING:root:Some parameters are on the meta device device because they were offloaded to the cpu.

03/30/2024 17:18:49 - INFO - llmtuner.model.adapter - Fine-tuning method: LoRA

INFO:llmtuner.model.adapter:Fine-tuning method: LoRA

03/30/2024 17:18:53 - INFO - llmtuner.model.adapter - Merged 1 adapter(s).

INFO:llmtuner.model.adapter:Merged 1 adapter(s).

03/30/2024 17:18:53 - INFO - llmtuner.model.adapter - Loaded adapter(s): E:\\llm-train\\LLaMA-Factory\\saves\\ChatGLM3-6B-Chat\\lora\\train_glm3\\checkpoint-200

INFO:llmtuner.model.adapter:Loaded adapter(s): E:\\llm-train\\LLaMA-Factory\\saves\\ChatGLM3-6B-Chat\\lora\\train_glm3\\checkpoint-200

03/30/2024 17:18:53 - INFO - llmtuner.model.loader - all params: 6243584000

INFO:llmtuner.model.loader:all params: 6243584000

[INFO|configuration_utils.py:471] 2024-03-30 17:18:53,066 >> Configuration saved in E:\\llm-train\\chatglm3-trained\config.json

[INFO|configuration_utils.py:697] 2024-03-30 17:18:53,066 >> Configuration saved in E:\\llm-train\\chatglm3-trained\generation_config.json

[INFO|modeling_utils.py:2482] 2024-03-30 17:19:08,637 >> The model is bigger than the maximum size per checkpoint (2GB) and is going to be split in 7 checkpoint shards. You can find where each parameters has been saved in the index located at E:\\llm-train\\chatglm3-trained\model.safetensors.index.json.

[INFO|tokenization_utils_base.py:2502] 2024-03-30 17:19:08,642 >> tokenizer config file saved in E:\\llm-train\\chatglm3-trained\tokenizer_config.json

[INFO|tokenization_utils_base.py:2511] 2024-03-30 17:19:08,643 >> Special tokens file saved in E:\\llm-train\\chatglm3-trained\special_tokens_map.json

(llm) PS E:\llm-train\LLaMA-Factory>

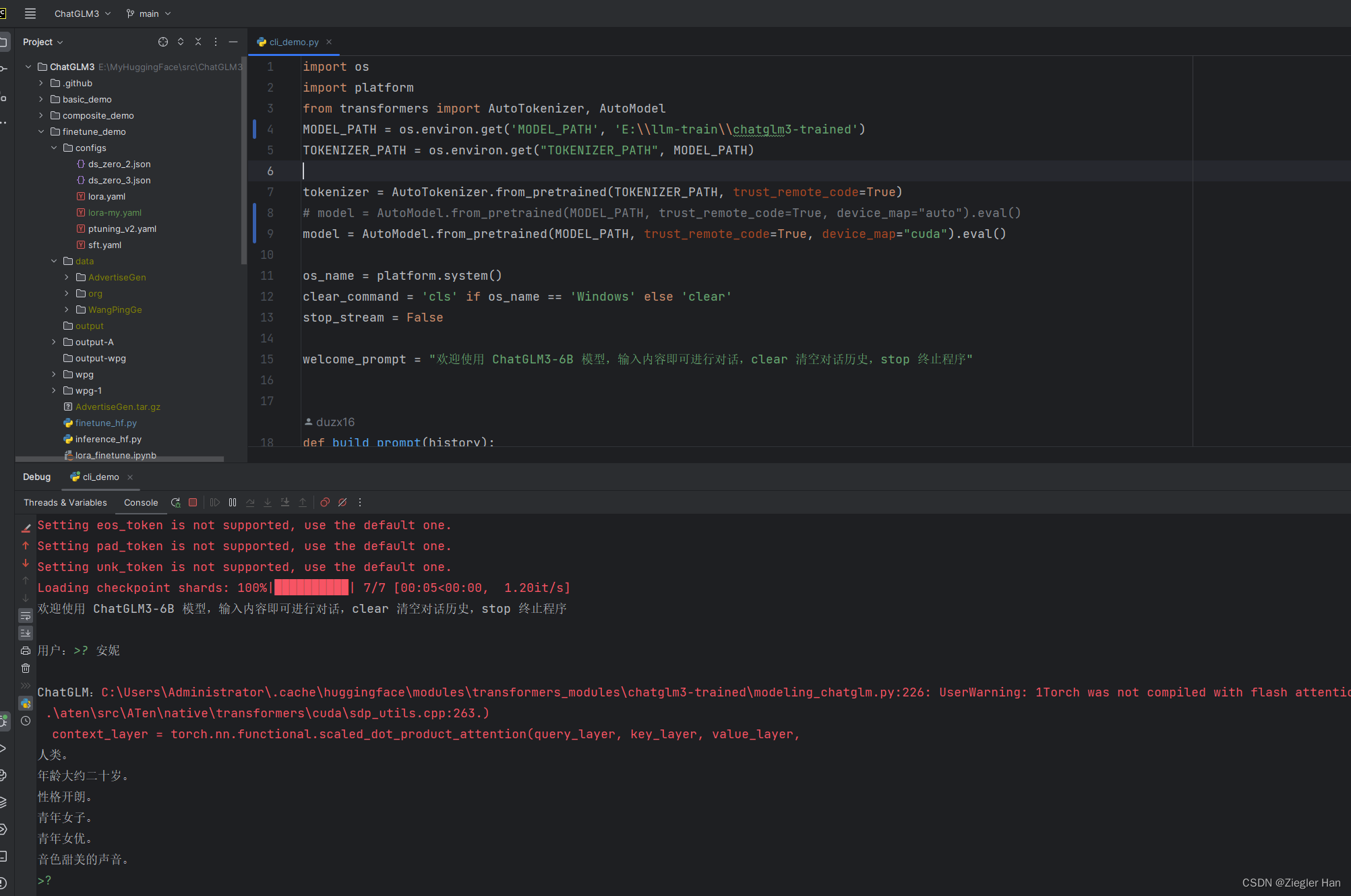

Testing the model

1. In ChatGLM3\basic_demo\cli_demo.py, change the model path to the exported model: MODEL_PATH = os.environ.get('MODEL_PATH', r'E:\llm-train\chatglm3-trained')

2. Run ChatGLM3\basic_demo\cli_demo.py

用户:>? 安妮

人类。

年龄大约二十岁。

性格开朗。

青年女子。

青年女优。

音色甜美的声音。

用户:>? 魔魂

ChatGLM:亡灵。

年龄大约三十岁。

性格狡猾。

亡灵法师。

膂力过人。

声音低沉。

用户:
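To judge answers like these against the training data, it helps to look up the reference output for a prompt and measure how close the model came. In the transcript above, the answer for 安妮 differs from the reference in chatglm3_zh.json, and 魔魂 is not an exact training input (the dataset contains 狡诈魔魂). The sketch below uses a plain character-level similarity from the standard library as a rough indicator only, not as an established evaluation metric; both function names are hypothetical.

```python
from difflib import SequenceMatcher


def build_reference_index(records):
    """Map each training input to its reference output."""
    return {rec["input"]: rec["output"] for rec in records}


def compare_answer(index, prompt, model_answer):
    """Return (reference, similarity); reference is None if the prompt is not a training input."""
    reference = index.get(prompt)
    if reference is None:
        return None, 0.0
    # ratio() is 1.0 for identical strings and approaches 0.0 as they diverge.
    return reference, SequenceMatcher(None, reference, model_answer).ratio()
```

Here `records` would be the list loaded from chatglm3_zh.json with `json.load`, and `model_answer` the text printed by cli_demo.py for the same prompt.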