1. Reading and displaying images

1.1 Loading and displaying an image

#include <opencv2/opencv.hpp>
#include <cstdio>
#include <iostream>

using namespace cv;
using namespace std;

int main(int argc, char** argv)
{
    Mat src = imread("sonar.jpg"); // read the image
    if (src.empty())
    {
        printf("Could not open or find the image\n");
        return -1;
    }
    namedWindow("test opencv setup", WINDOW_AUTOSIZE);
    imshow("test opencv setup", src); // show the image in the window named "test opencv setup"
    waitKey(0); // wait for a key press; 0 means wait indefinitely
    return 0;
}

1.2 Freely resizable window

#include <opencv2/opencv.hpp>
#include <cstdio>
#include <iostream>

using namespace cv;
using namespace std;

int main(int argc, char** argv)
{
    Mat src = imread("sonar.jpg"); // read the image
    if (src.empty())
    {
        printf("Could not open or find the image\n");
        return -1;
    }
    // create a window named "test opencv setup" with the free-ratio property
    namedWindow("test opencv setup", WINDOW_FREERATIO);
    imshow("test opencv setup", src); // show the image
    waitKey(0); // wait for a key press; 0 means wait indefinitely
    destroyAllWindows();
    return 0;
}

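1.3 Image read modes

IMREAD_UNCHANGED: load the image as is, including the alpha channel

IMREAD_GRAYSCALE: load as a single-channel grayscale image

IMREAD_COLOR: load as a 3-channel color image

IMREAD_ANYDEPTH: keep the bit depth of the source image

IMREAD_ANYCOLOR: load in the color format of the source image

IMREAD_LOAD_GDAL: load the image with GDAL

IMREAD_REDUCED_GRAYSCALE_2: load as grayscale at 1/2 size

IMREAD_REDUCED_COLOR_2: load as color at 1/2 size

IMREAD_REDUCED_GRAYSCALE_4: load as grayscale at 1/4 size

#include <opencv2/opencv.hpp>
#include <cstdio>
#include <iostream>

using namespace cv;
using namespace std;

int main(int argc, char** argv)
{
    Mat src = imread("sonar.jpg", IMREAD_GRAYSCALE); // read the image as grayscale
    if (src.empty())
    {
        printf("Could not open or find the image\n");
        return -1;
    }
    // create a window named "test opencv setup" that sizes itself to the image
    namedWindow("test opencv setup", WINDOW_AUTOSIZE);
    imshow("test opencv setup", src); // show the image
    waitKey(0); // wait for a key press; 0 means wait indefinitely
    destroyAllWindows();
    return 0;
}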

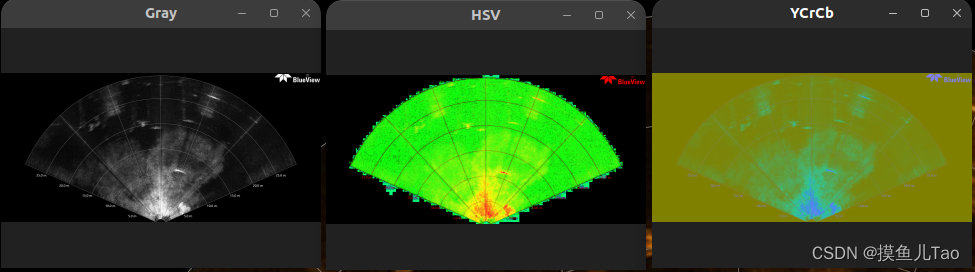

1.4 Color space conversion

Testdemo.cpp

#include "Testdemo.h"

TestDemo::TestDemo()
{}

TestDemo::~TestDemo()
{}

void TestDemo::colorSpace_demo(Mat& image)
{
    cv::Mat gray, hsv, ycrcb;
    // create three windows named "Gray", "HSV" and "YCrCb" with the free-ratio property
    namedWindow("Gray", WINDOW_FREERATIO);
    namedWindow("HSV", WINDOW_FREERATIO);
    namedWindow("YCrCb", WINDOW_FREERATIO);
    // convert the color space
    cvtColor(image, gray, COLOR_BGR2GRAY);   // BGR to grayscale
    cvtColor(image, hsv, COLOR_BGR2HSV);     // BGR to HSV
    cvtColor(image, ycrcb, COLOR_BGR2YCrCb); // BGR to YCrCb
    // show the results
    imshow("Gray", gray);
    imshow("HSV", hsv);
    imshow("YCrCb", ycrcb);
    // save the converted images
    imwrite("/home/liutao/Desktop/222/gray.png", gray);
    imwrite("/home/liutao/Desktop/222/hsv.png", hsv);
    imwrite("/home/liutao/Desktop/222/ycrcb.png", ycrcb);
}

Testdemo.h

#pragma once

#include <opencv2/opencv.hpp>

using namespace cv;

class TestDemo
{
public:
    TestDemo();
    ~TestDemo();
    void colorSpace_demo(Mat& image); // color space conversion; takes the input image
};

main.cpp

#include <iostream>
#include <opencv2/opencv.hpp>
#include "Testdemo.h"
#include "test.h"

using namespace std;

int main(int argc, char** argv)
{
    Mat img = imread("/home/liutao/Desktop/222/sonar.jpg");
    if (img.empty())
    {
        cout << "could not open or find the image" << endl;
        return -1;
    }
    namedWindow("Image", WINDOW_AUTOSIZE);
    imshow("Image", img);
    TestDemo testdemo;
    testdemo.colorSpace_demo(img);
    waitKey(0);
    destroyAllWindows();
    return 0;
}

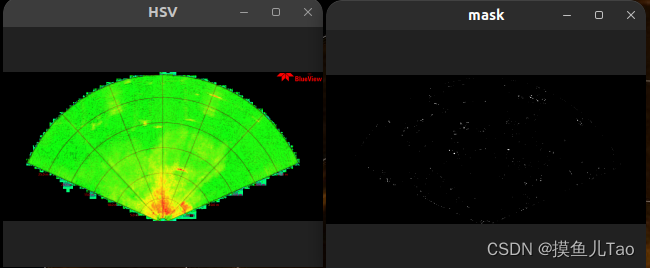

1.5 inRange

Scalar(B, G, R, C): Blue, Green, Red, Channels

Scalar(H, S, V): hue, saturation, value (brightness)

Scalar lower = Scalar(hmin, smin, vmin); // equivalent to Scalar lower = { hmin, smin, vmin }

Scalar upper = Scalar(hmax, smax, vmax);

inRange(imgHSV, lower, upper, mask);

void TestDemo::inrange_colorSpace_demo(Mat& image)
{
    cv::Mat hsv;
    cv::cvtColor(image, hsv, cv::COLOR_BGR2HSV); // convert the image to HSV
    namedWindow("hsv", WINDOW_FREERATIO);
    imshow("hsv", hsv);
    cv::Mat mask;
    // extract the reddish regions
    // inRange arguments: input image, lower color bound, upper color bound, output mask
    inRange(hsv, cv::Scalar(0, 43, 46), cv::Scalar(10, 255, 255), mask);
    cv::namedWindow("mask", cv::WINDOW_FREERATIO);
    cv::imshow("mask", mask);
}
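To make the mask logic concrete, here is a minimal sketch of the per-pixel test that inRange is documented to perform: a pixel passes only when every channel lies inside the inclusive range, and the mask then holds 255, otherwise 0. It does not use OpenCV; `Pixel3` and `in_range_pixel` are hypothetical names introduced for illustration only.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical stand-in for one 3-channel pixel (for example H, S, V).
using Pixel3 = std::array<std::uint8_t, 3>;

// Returns 255 when every channel lies within [lower, upper], otherwise 0.
std::uint8_t in_range_pixel(const Pixel3& p, const Pixel3& lower, const Pixel3& upper)
{
    for (std::size_t c = 0; c < p.size(); ++c)
    {
        assert(lower[c] <= upper[c]); // the sketch expects a well-formed range
        if (p[c] < lower[c] || p[c] > upper[c])
        {
            return 0; // one channel outside the range rejects the pixel
        }
    }
    return 255;
}
```

With the bounds used above, an HSV pixel (5, 100, 100) would be kept, while (20, 100, 100) would be rejected because its hue exceeds 10.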

1.6 Data access

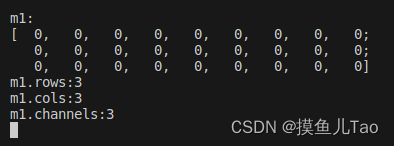

void TestDemo::mat_demo()
{
    Mat m1 = Mat::zeros(3, 3, CV_8UC3); // create a 3x3, 3-channel image with every pixel value 0
    // print the matrix, then its row, column and channel counts
    std::cout << "m1:\n" << m1 << std::endl;
    std::cout << "m1.rows: " << m1.rows << std::endl;
    std::cout << "m1.cols: " << m1.cols << std::endl;
    std::cout << "m1.channels: " << m1.channels() << std::endl;
}

Use Mat to create a 3-channel image with a white background

// create a 255x255, 3-channel image through Scalar, with every pixel value 255
Mat m3 = Mat(255, 255, CV_8UC3, Scalar(255, 255, 255));
std::cout << "m3:\n" << m3 << std::endl;
imshow("m3", m3);
cv::waitKey(0);
cv::destroyAllWindows();

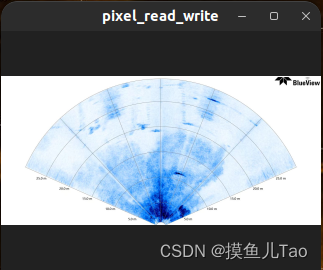

1.7 Pixel access

void TestDemo::pixel_read_write(Mat& image)
{
    int width = image.cols;          // image width
    int height = image.rows;         // image height
    int channels = image.channels(); // number of channels

    // Variant 1: access pixels with at<>() (commented out)
    //for (int row = 0; row < height; row++)
    //{
    //    for (int col = 0; col < width; col++)
    //    {
    //        if (channels == 1) // single channel: grayscale image
    //        {
    //            int pv = image.at<uchar>(row, col);   // read the gray value, range 0-255
    //            image.at<uchar>(row, col) = 255 - pv; // write back the inverted value
    //        }
    //        if (channels == 3) // three channels: color image
    //        {
    //            Vec3b pv_bgr = image.at<Vec3b>(row, col);       // read the pixel in B, G, R order
    //            image.at<Vec3b>(row, col)[0] = 255 - pv_bgr[0]; // invert the B channel
    //            image.at<Vec3b>(row, col)[1] = 255 - pv_bgr[1]; // invert the G channel
    //            image.at<Vec3b>(row, col)[2] = 255 - pv_bgr[2]; // invert the R channel
    //        }
    //    }
    //}

    // Variant 2: access pixels through row pointers
    for (int row = 0; row < height; row++)
    {
        uchar* data = image.ptr<uchar>(row); // pointer to the start of row `row`
        for (int col = 0; col < width; col++)
        {
            if (channels == 1) // single channel: grayscale image
            {
                *data = 255 - *data; // invert the gray value
                data++;
            }
            if (channels == 3) // three channels: color image
            {
                for (int c = 0; c < 3; c++) // B, G, R in turn
                {
                    *data = 255 - *data; // invert this channel
                    data++;
                }
            }
        }
    }
    namedWindow("pixel_read_write", WINDOW_FREERATIO);
    imshow("pixel_read_write", image);
}

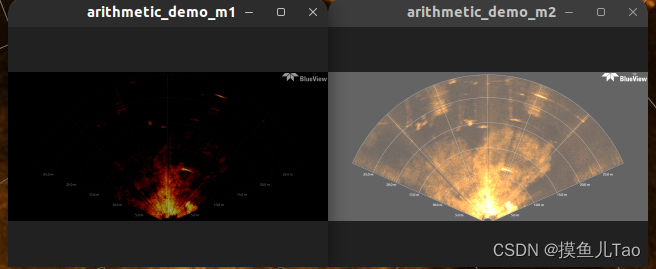

1.8 Arithmetic operations on images

void TestDemo::arithmetic_demo(Mat& image)
{
    Mat m1;
    Mat m2;
    m1 = image - Scalar(100, 100, 100); // image subtraction
    m2 = image + Scalar(100, 100, 100); // image addition
    namedWindow("arithmetic_demo_m1", WINDOW_FREERATIO);
    imshow("arithmetic_demo_m1", m1);
    namedWindow("arithmetic_demo_m2", WINDOW_FREERATIO);
    imshow("arithmetic_demo_m2", m2);
}

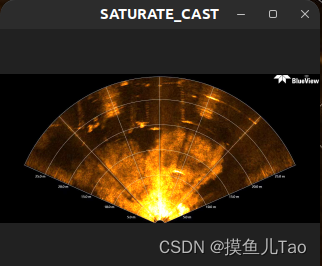

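After a pixel operation, some values may exceed 255 (for an 8-bit image the valid range is 0 to 255). A saturation operation clamps such out-of-range values back into the valid range. The cv::saturate_cast function in OpenCV does this.

// Double every sample; the intermediate result may exceed 255, so it is
// saturated back into the range 0 to 255 before being stored.
// (cv::Mat arithmetic such as img * 2 already saturates 8-bit results;
// the loop below does the same thing one element at a time.)
int height = img.rows;
int width = img.cols;
int channels = img.channels();
for (int row = 0; row < height; row++)
{
    uchar* data = img.ptr<uchar>(row);
    for (int i = 0; i < width * channels; i++)
    {
        data[i] = saturate_cast<uchar>(data[i] * 2);
    }
}
namedWindow("SATURATE_CAST", WINDOW_FREERATIO);
imshow("SATURATE_CAST", img);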

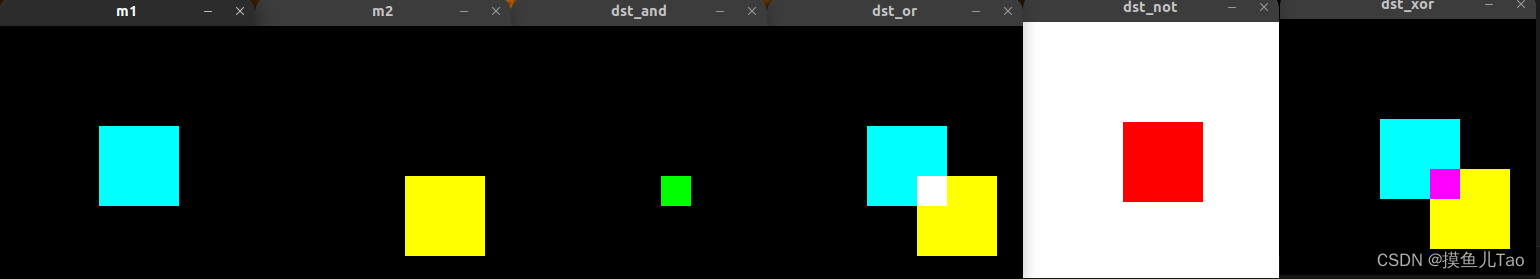
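The clamping itself is simple enough to write by hand. The following is a minimal sketch without OpenCV, in the spirit of cv::saturate_cast<uchar> for integer input; `clamp_to_u8` and `scale_row` are hypothetical helper names, and the raw byte pointer stands in for one image row.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Clamp an int into the 8-bit range 0..255.
std::uint8_t clamp_to_u8(int v)
{
    if (v < 0) return 0;
    if (v > 255) return 255;
    return static_cast<std::uint8_t>(v);
}

// Multiply `count` samples of one row by `gain`, saturating each result.
void scale_row(std::uint8_t* row, std::size_t count, int gain)
{
    assert(row != nullptr || count == 0); // an empty row may be passed as nullptr
    for (std::size_t i = 0; i < count; ++i)
    {
        // widen to int first so the product cannot wrap around in 8 bits
        row[i] = clamp_to_u8(static_cast<int>(row[i]) * gain);
    }
}
```

Widening to int before multiplying is the important step: once the product has been stored in an 8-bit variable, the overflow has already happened and can no longer be clamped.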

1.9 Bitwise (logical) operations on pixels

void TestDemo::bitwise_demo(Mat& image1, Mat& image2)
{
    Mat m1 = Mat::zeros(Size(256, 256), CV_8UC3);
    Mat m2 = Mat::zeros(Size(256, 256), CV_8UC3);
    // image1 and image2 are not used here; two synthetic images are drawn instead
    // draw rectangles: thickness -1 fills the shape, thickness > 0 is the border width
    // filled rectangle, color (255, 255, 0) in BGR (cyan), 8-connected line, top-left (100,100), 80x80
    rectangle(m1, Rect(100, 100, 80, 80), Scalar(255, 255, 0), -1, LINE_8, 0);
    // filled rectangle, color (0, 255, 255) in BGR (yellow), 8-connected line, top-left (150,150), 80x80
    rectangle(m2, Rect(150, 150, 80, 80), Scalar(0, 255, 255), -1, LINE_8, 0);
    imshow("m1", m1);
    imshow("m2", m2);
    Mat dst_and, dst_or, dst_not, dst_xor;
    bitwise_and(m1, m2, dst_and); // AND
    bitwise_or(m1, m2, dst_or);   // OR
    bitwise_not(m1, dst_not);     // NOT
    bitwise_xor(m1, m2, dst_xor); // XOR
    imshow("dst_and", dst_and);
    imshow("dst_or", dst_or);
    imshow("dst_not", dst_not);
    imshow("dst_xor", dst_xor);
}

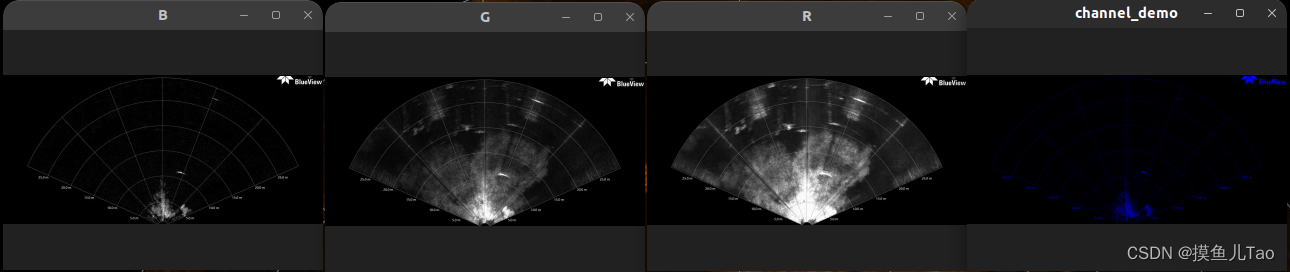
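The colors that appear where the two rectangles overlap follow directly from applying the operators byte by byte. Here is a minimal sketch without OpenCV; `Samples`, `BitOp`, `combine` and `invert` are hypothetical names, and a short byte vector stands in for image data.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for the raw bytes of an image (B, G, R, B, G, R, ...).
using Samples = std::vector<std::uint8_t>;

enum class BitOp { And, Or, Xor };

// Combine two equally sized buffers byte by byte.
Samples combine(const Samples& a, const Samples& b, BitOp op)
{
    assert(a.size() == b.size()); // both inputs must have the same size
    Samples out(a.size());
    for (std::size_t i = 0; i < a.size(); ++i)
    {
        switch (op)
        {
        case BitOp::And: out[i] = static_cast<std::uint8_t>(a[i] & b[i]); break;
        case BitOp::Or:  out[i] = static_cast<std::uint8_t>(a[i] | b[i]); break;
        case BitOp::Xor: out[i] = static_cast<std::uint8_t>(a[i] ^ b[i]); break;
        }
    }
    return out;
}

// Invert every bit; for 8-bit samples this equals 255 - value.
Samples invert(const Samples& a)
{
    Samples out(a.size());
    for (std::size_t i = 0; i < a.size(); ++i)
    {
        out[i] = static_cast<std::uint8_t>(255 - a[i]);
    }
    return out;
}
```

For one overlapping pixel, (255, 255, 0) AND (0, 255, 255) gives (0, 255, 0), OR gives (255, 255, 255), and XOR gives (255, 0, 255), all in BGR order.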

1.10 Channel splitting and merging

void TestDemo::channel_demo(Mat& image)
{
    Mat bgr[3];
    split(image, bgr); // split into channels
    namedWindow("B", WINDOW_FREERATIO);
    namedWindow("G", WINDOW_FREERATIO);
    namedWindow("R", WINDOW_FREERATIO);
    imshow("B", bgr[0]);
    imshow("G", bgr[1]);
    imshow("R", bgr[2]);
    Mat dst;
    bgr[1] = 0; // set the G channel to 0
    bgr[2] = 0; // set the R channel to 0
    merge(bgr, 3, dst); // merge the channels
    namedWindow("channel_demo", WINDOW_FREERATIO);
    imshow("channel_demo", dst);
}

1.11 Blending images

// blend two images (image and dst are the variables of channel_demo in 1.10)
Mat src1 = image;
Mat src2 = dst;
Mat dst2;
addWeighted(src1, 0.5, src2, 0.5, 0, dst2); // weighted blend
namedWindow("channel_demo2", WINDOW_FREERATIO);
imshow("channel_demo2", dst2);

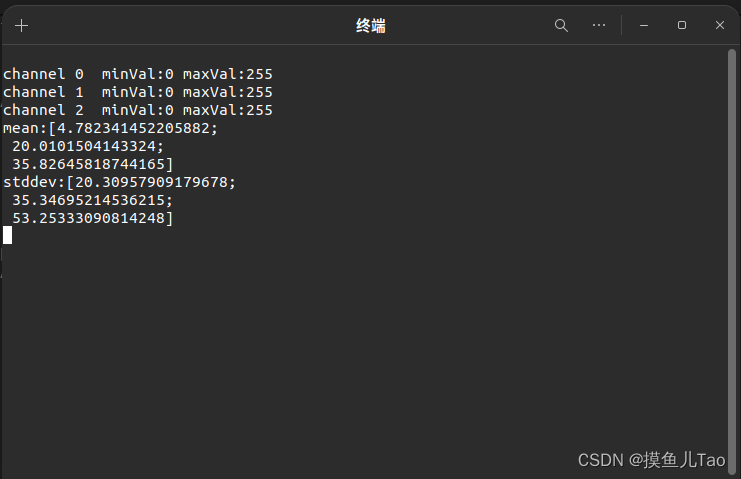
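The blend computed by addWeighted is, per sample, src1 * alpha + src2 * beta + gamma, saturated to the output type. The sketch below reproduces that arithmetic without OpenCV; `round_to_u8` and `blend` are hypothetical names, and the rounding uses std::lround, which is an assumption of this sketch and may differ from OpenCV's rounding on exact .5 values.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Round to the nearest integer and clamp into 0..255.
std::uint8_t round_to_u8(double v)
{
    const long r = std::lround(v);
    if (r < 0) return 0;
    if (r > 255) return 255;
    return static_cast<std::uint8_t>(r);
}

// Per-sample weighted sum: a * alpha + b * beta + gamma, saturated to 8 bits.
std::vector<std::uint8_t> blend(const std::vector<std::uint8_t>& a, double alpha,
                                const std::vector<std::uint8_t>& b, double beta,
                                double gamma)
{
    assert(a.size() == b.size()); // both inputs must have the same size
    std::vector<std::uint8_t> out(a.size());
    for (std::size_t i = 0; i < a.size(); ++i)
    {
        out[i] = round_to_u8(a[i] * alpha + b[i] * beta + gamma);
    }
    return out;
}
```

With alpha = beta = 0.5 and gamma = 0, as in the call above, each output sample is the average of the two inputs.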

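1.12 Pixel value statistics

void TestDemo::pixel_statistics(Mat& image)
{
    double minVal, maxVal;
    Point minLoc, maxLoc;
    vector<Mat> mv;
    split(image, mv); // split into channels
    for (size_t i = 0; i < mv.size(); i++)
    {
        // minMaxLoc arguments:
        // src: input single-channel array; must contain at least one element
        // minVal: optional output for the minimum value; pass 0 if not needed
        // maxVal: optional output for the maximum value; pass 0 if not needed
        // minLoc: optional output for the location of the minimum; pass 0 if not needed
        // maxLoc: optional output for the location of the maximum; pass 0 if not needed
        // mask: optional 8-bit single-channel mask of the same size as src; when given,
        //       only elements with a non-zero mask value are searched
        minMaxLoc(mv[i], &minVal, &maxVal, &minLoc, &maxLoc);
        cout << "channel " << i << " minVal:" << minVal << " maxVal:" << maxVal << endl;
    }
    Mat mean, stddev;
    meanStdDev(image, mean, stddev);
    cout << "mean:" << mean << endl << "stddev:" << stddev << endl;
}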

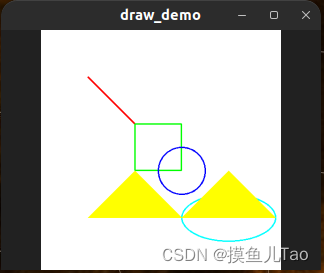
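As a cross-check on the printed numbers, the same statistics can be computed by hand for one channel. This is a minimal sketch without OpenCV; `ChannelStats` and `channel_stats` are hypothetical names, positions are flat indices rather than (x, y) points, and the standard deviation divides by N (the population form), which is how meanStdDev is documented.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical result type for one channel.
struct ChannelStats
{
    double min_val;
    double max_val;
    std::size_t min_idx; // flat index of the first minimum
    std::size_t max_idx; // flat index of the first maximum
    double mean;
    double stddev;       // population standard deviation (divides by N)
};

ChannelStats channel_stats(const std::vector<double>& v)
{
    assert(!v.empty()); // at least one element is required
    ChannelStats s{v[0], v[0], 0, 0, 0.0, 0.0};
    double sum = 0.0;
    for (std::size_t i = 0; i < v.size(); ++i)
    {
        if (v[i] < s.min_val) { s.min_val = v[i]; s.min_idx = i; }
        if (v[i] > s.max_val) { s.max_val = v[i]; s.max_idx = i; }
        sum += v[i];
    }
    s.mean = sum / static_cast<double>(v.size());
    double sq = 0.0;
    for (double x : v)
    {
        sq += (x - s.mean) * (x - s.mean);
    }
    s.stddev = std::sqrt(sq / static_cast<double>(v.size()));
    return s;
}
```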

1.13 Drawing geometric shapes

void TestDemo::draw_demo()
{
    // create an image with a white background
    Mat image = Mat::zeros(Size(512, 512), CV_8UC3);
    image = Scalar(255, 255, 255);
    // line: red, thickness 2, 8-connected, from (100,100) to (200,200)
    line(image, Point(100, 100), Point(200, 200), Scalar(0, 0, 255), 2, LINE_8, 0);
    // rectangle: green, thickness 2, 8-connected, top-left (200,200), 100x100
    rectangle(image, Rect(200, 200, 100, 100), Scalar(0, 255, 0), 2, LINE_8, 0);
    // circle: blue, thickness 2, 8-connected, center (300,300), radius 50
    circle(image, Point(300, 300), 50, Scalar(255, 0, 0), 2, LINE_8, 0);
    // ellipse: color (255, 255, 0) in BGR (cyan), thickness 2, 8-connected,
    // center (400,400), half-axes 100 and 50
    ellipse(image, Point(400, 400), Size(100, 50), 0, 0, 360, Scalar(255, 255, 0), 2, LINE_8, 0);
    // filled polygon
    std::vector<Point> points;
    points.push_back(Point(100, 400));
    points.push_back(Point(200, 300));
    points.push_back(Point(300, 400));
    points.push_back(Point(400, 300));
    points.push_back(Point(500, 400));
    const Point* ppt[1] = { points.data() };
    int npt[] = { static_cast<int>(points.size()) };
    fillPoly(image, ppt, npt, 1, Scalar(0, 255, 255), LINE_8, 0);
    // show the image
    namedWindow("draw_demo", WINDOW_FREERATIO);
    imshow("draw_demo", image);
}

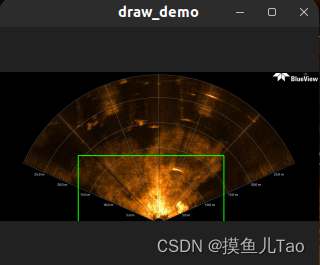

void TestDemo::draw_demo(Mat& image)
{
    // draw a rectangle around the face in the image
    Mat img = image.clone();
    rectangle(img, Rect(380, 400, 700, 700), Scalar(0, 255, 0), 3, LINE_8, 0);
    // show the image
    namedWindow("draw_demo", WINDOW_FREERATIO);
    imshow("draw_demo", img);
    // save the image
    imwrite("draw_demo.jpg", img);
}

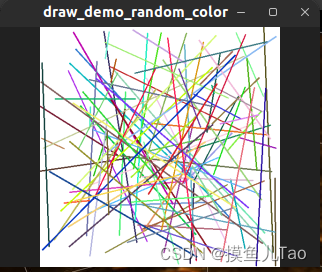

1.14 Random numbers and random colors

void TestDemo::draw_demo_random_color(Mat& image)
{
    // create an image with a white background
    Mat img = Mat::zeros(Size(512, 512), CV_8UC3);
    img = Scalar(255, 255, 255);
    // draw lines in random colors
    RNG rng(12345);
    for (int i = 0; i < 100; i++)
    {
        Point pt1, pt2;
        pt1.x = rng.uniform(0, 512);
        pt1.y = rng.uniform(0, 512);
        pt2.x = rng.uniform(0, 512);
        pt2.y = rng.uniform(0, 512);
        // uniform(a, b) excludes b, so 256 is needed to reach the value 255
        line(img, pt1, pt2, Scalar(rng.uniform(0, 256), rng.uniform(0, 256), rng.uniform(0, 256)), 2, LINE_8, 0);
    }
    // show the image
    namedWindow("draw_demo_random_color", WINDOW_FREERATIO);
    imshow("draw_demo_random_color", img);
    // save the image (image_path is assumed to be a string defined elsewhere in the project)
    imwrite(image_path + "/draw_demo_random_color.jpg", img);
}

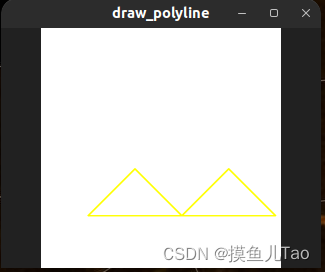

1.15 Polygon filling and drawing

void TestDemo::draw_polyline()
{
    // create an image with a white background
    Mat img = Mat::zeros(Size(512, 512), CV_8UC3);
    img = Scalar(255, 255, 255);
    // draw the polygon outline
    std::vector<Point> points;
    points.push_back(Point(100, 400));
    points.push_back(Point(200, 300));
    points.push_back(Point(300, 400));
    points.push_back(Point(400, 300));
    points.push_back(Point(500, 400));
    const Point* ppt[1] = { points.data() };
    int npt[] = { static_cast<int>(points.size()) };
    polylines(img, ppt, npt, 1, true, Scalar(0, 255, 255), 2, LINE_8, 0);
    // show the image
    namedWindow("draw_polyline", WINDOW_FREERATIO);
    imshow("draw_polyline", img);
}

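1.16 Mouse events and callbacks

void on_mouse(int event, int x, int y, int flags, void* data)
{
    Mat image = *(Mat*)data; // user data passed in through setMouseCallback
    cout << "x: " << x << " y: " << y << endl;
    if (event == EVENT_LBUTTONDBLCLK)
    {
        cout << "Left button of the mouse is double-clicked - position (" << x << "," << y << ")" << endl;
    }
    if (event == EVENT_RBUTTONDBLCLK)
    {
        cout << "Right button of the mouse is double-clicked - position (" << x << "," << y << ")" << endl;
    }
}

void TestDemo::mouse_demo(Mat& image)
{
    // goal: track the mouse position over the image and report the position and pixel value;
    // this version only prints the position to the console
    namedWindow("mouse_demo", WINDOW_FREERATIO);
    imshow("mouse_demo", image);
    setMouseCallback("mouse_demo", on_mouse, (void*)(&image));
}

Registering the mouse callback: setMouseCallback(). After this call, the callback onMouse() keeps being invoked for mouse events in the window until the window is destroyed.

void setMouseCallback(const String& winname, MouseCallback onMouse, void* userdata = 0);

Parameters:

winname: window name;

onMouse: callback function;

userdata: user-defined data passed through to the callback, as a void* pointer.

The callback onMouse() can have any name, as long as it is the same name that is passed to setMouseCallback().

void onMouse(int event, int x, int y, int flags, void* userdata);

Parameters:

event: constant identifying the type of mouse event. The values below are the legacy C names; the C++ enumerators used in the code above (EVENT_MOUSEMOVE, EVENT_LBUTTONDBLCLK and so on) have the same values.

#define CV_EVENT_MOUSEMOVE 0 // mouse move

#define CV_EVENT_LBUTTONDOWN 1 // left button pressed

#define CV_EVENT_RBUTTONDOWN 2 // right button pressed

#define CV_EVENT_MBUTTONDOWN 3 // middle button pressed

#define CV_EVENT_LBUTTONUP 4 // left button released

#define CV_EVENT_RBUTTONUP 5 // right button released

#define CV_EVENT_MBUTTONUP 6 // middle button released

#define CV_EVENT_LBUTTONDBLCLK 7 // left button double-clicked

#define CV_EVENT_RBUTTONDBLCLK 8 // right button double-clicked

#define CV_EVENT_MBUTTONDBLCLK 9 // middle button double-clicked

x and y: coordinates of the mouse pointer in the image coordinate system;

flags: constant holding the mouse event flags;

userdata: the user-defined data received by the callback, as a void* pointer.

1.17 Pixel type conversion and normalization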

void TestDemo::pixel_type_conversion(Mat& image)
{
    namedWindow("pixel_type_conversion", WINDOW_FREERATIO);
    namedWindow("pixel_type_conversion_normalize", WINDOW_FREERATIO);
    // pixel type conversion and normalization
    Mat gray;
    cvtColor(image, gray, COLOR_BGR2GRAY); // convert the image to grayscale
    imshow("pixel_type_conversion", gray);
    // min-max normalization to the range 0 to 255
    Mat dst;
    normalize(gray, dst, 0, 255, NORM_MINMAX, CV_8UC1);
    imshow("pixel_type_conversion_normalize", dst);
}

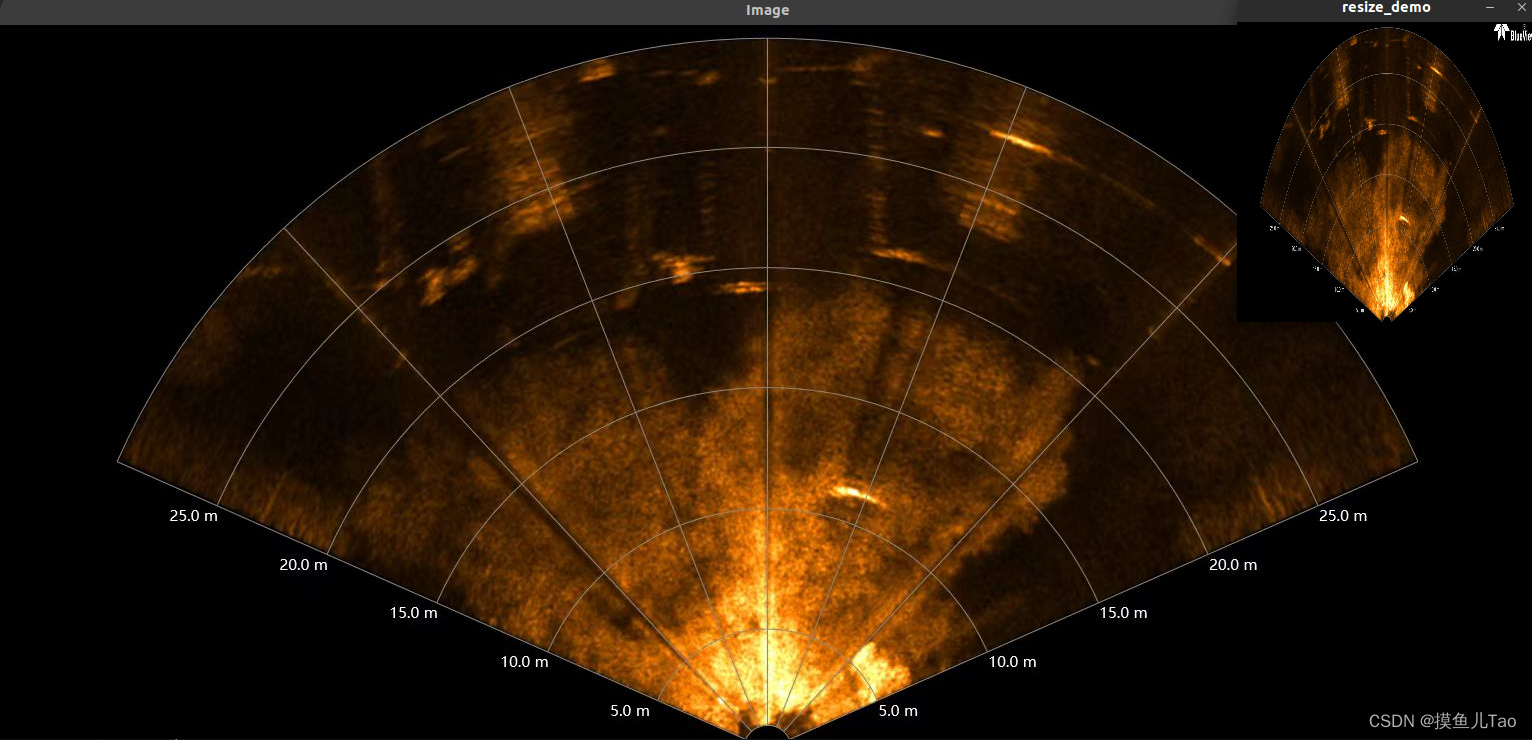
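Min-max normalization stretches the input linearly so that its smallest value maps to the lower bound and its largest to the upper bound. The sketch below shows that mapping without OpenCV; `normalize_minmax` is a hypothetical name, and returning the lower bound for a constant input is a choice made for this sketch.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Linearly map the values of v onto [a, b]: min(v) -> a, max(v) -> b.
std::vector<double> normalize_minmax(const std::vector<double>& v, double a, double b)
{
    assert(!v.empty()); // at least one element is required
    const auto mm = std::minmax_element(v.begin(), v.end());
    const double lo = *mm.first;
    const double hi = *mm.second;
    // a constant input has no spread; the scale is then 0 and every output is a
    const double scale = (hi > lo) ? (b - a) / (hi - lo) : 0.0;
    std::vector<double> out;
    out.reserve(v.size());
    for (double x : v)
    {
        out.push_back((x - lo) * scale + a);
    }
    return out;
}
```

For the input {10, 20, 30} and the range 0 to 255 this gives {0, 127.5, 255}; storing the result as 8-bit, as the CV_8UC1 argument above requests, would additionally round each value.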

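1.18 Image resizing and interpolation

void TestDemo::resize_demo(Mat& image, int x, int y)
{
    Mat dst;
    // resize arguments: input image, output image, target size, x scale factor, y scale factor, interpolation method
    resize(image, dst, Size(x, y), 0, 0, INTER_LINEAR);
    imshow("resize_demo", dst);
}

The resize function in OpenCV for C++ changes the size of an image. It scales the image to a given size using a given interpolation method. Its prototype is:

void cv::resize(InputArray src, OutputArray dst, Size dsize, double fx = 0, double fy = 0, int interpolation = INTER_LINEAR);

Parameters:

src: input image, usually a cv::Mat.

dst: output image, with the same type as the input image.

dsize: size of the output image, given as (width, height). If it is zero, the size is computed from fx and fy as Size(round(fx*src.cols), round(fy*src.rows)).

fx: scale factor along the horizontal axis. The default 0 means the factor is derived from dsize as (double)dsize.width/src.cols.

fy: scale factor along the vertical axis. The default 0 means the factor is derived from dsize as (double)dsize.height/src.rows.

interpolation: interpolation method, one of the following:

cv::INTER_NEAREST: nearest-neighbor interpolation; the fastest, with the lowest quality.

cv::INTER_LINEAR: bilinear interpolation; fast, with good quality.

cv::INTER_AREA: area-based resampling; well suited to shrinking an image, slower.

cv::INTER_CUBIC: bicubic interpolation; moderate speed, good quality.

cv::INTER_LANCZOS4: Lanczos interpolation; moderate speed, good quality.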

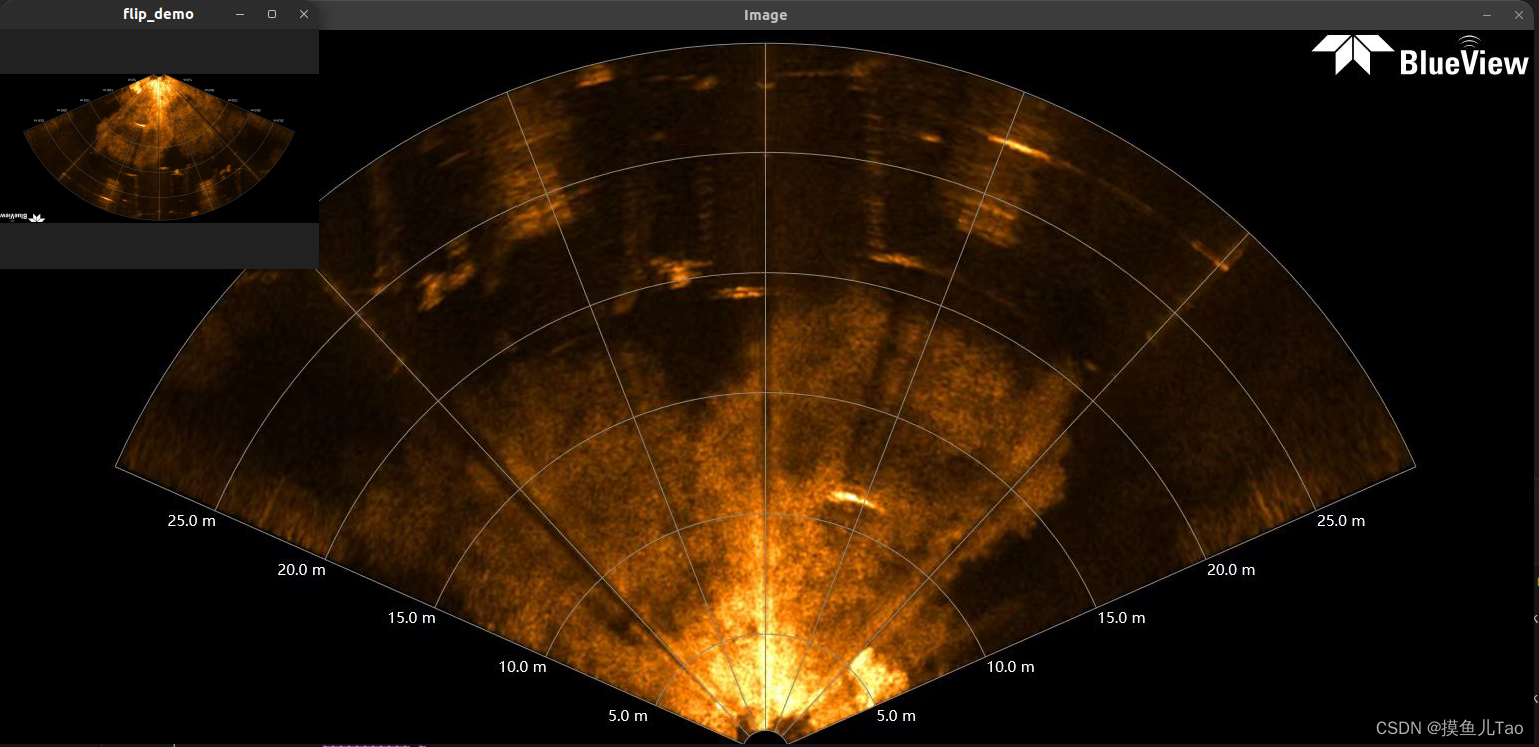
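How dsize, fx and fy interact is easy to get wrong, so here is a minimal sketch of the size resolution described in the OpenCV documentation: an explicit dsize wins, and only a zero dsize falls back to the scale factors. It does not call OpenCV; `Size2` and `resolve_output_size` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in for cv::Size.
struct Size2
{
    int width;
    int height;
};

// Decide the output size the way the resize parameters are documented to interact.
Size2 resolve_output_size(Size2 src, Size2 dsize, double fx, double fy)
{
    if (dsize.width > 0 && dsize.height > 0)
    {
        return dsize; // an explicit target size takes precedence over fx and fy
    }
    assert(fx > 0.0 && fy > 0.0); // with a zero dsize both factors must be given
    return Size2{static_cast<int>(std::lround(src.width * fx)),
                 static_cast<int>(std::lround(src.height * fy))};
}
```

For a 640x480 source, a zero dsize with fx = fy = 0.5 resolves to 320x240, while dsize = (100, 50) is used as is, whatever the factors are.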

1.19 Image flipping

void TestDemo::flip_demo(Mat& image, int flipCode)
{
    Mat dst;
    // flip arguments: input image, output image, flip mode
    // flipCode = 0 flips around the x-axis, flipCode > 0 flips around the y-axis,
    // flipCode < 0 flips around both axes
    flip(image, dst, flipCode);
    namedWindow("flip_demo", WINDOW_FREERATIO);
    imshow("flip_demo", dst);
}
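The three flipCode cases come down to which index is mirrored. The following sketch applies the same convention to a small grid of integers, without OpenCV; `Grid` and `flip_grid` are hypothetical names and the grid is assumed to be rectangular.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a single-channel image, stored row by row.
using Grid = std::vector<std::vector<int>>;

// flipCode == 0: mirror rows (around the x-axis)
// flipCode  > 0: mirror columns (around the y-axis)
// flipCode  < 0: mirror both
Grid flip_grid(const Grid& src, int flipCode)
{
    assert(!src.empty()); // at least one row is required
    const std::size_t rows = src.size();
    const std::size_t cols = src[0].size();
    Grid dst(rows, std::vector<int>(cols));
    for (std::size_t r = 0; r < rows; ++r)
    {
        for (std::size_t c = 0; c < cols; ++c)
        {
            const std::size_t sr = (flipCode <= 0) ? rows - 1 - r : r; // source row
            const std::size_t sc = (flipCode != 0) ? cols - 1 - c : c; // source column
            dst[r][c] = src[sr][sc];
        }
    }
    return dst;
}
```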

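1.20 Image rotation

About warpAffine

warpAffine is an OpenCV function that applies an affine transformation to an image, covering rotation, scaling, translation and similar operations. The transformation is a 2D one described by a 2x3 matrix, and the function supports several interpolation methods and border modes.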


void warpAffine(InputArray src, OutputArray dst, InputArray M, Size dsize, int flags=INTER_LINEAR, int borderMode=BORDER_CONSTANT, const Scalar& borderValue=Scalar())

Parameters:

src – Source image.

dst – Destination image that has the size dsize and the same type as src .

M – 2x3 transformation matrix.

dsize – Size of the destination image.

flags – Combination of interpolation methods (see resize() ) and the optional flag WARP_INVERSE_MAP that means that M is the inverse transformation (dst → src).

borderMode – Pixel extrapolation method (see borderInterpolate() ). When borderMode=BORDER_TRANSPARENT , it means that the pixels in the destination image corresponding to the “outliers” in the source image are not modified by the function.

borderValue – Value used in case of a constant border. By default, it is 0.

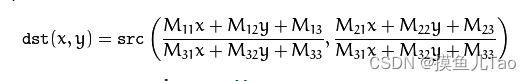

The function warpPerspective transforms the source image using the specified matrix:

About getRotationMatrix2D

Mat getRotationMatrix2D(Point2f center, double angle, double scale)

Parameters:

center – Center of the rotation in the source image.

angle – Rotation angle in degrees. Positive values mean counter-clockwise rotation (the coordinate origin is assumed to be the top-left corner).

scale – Isotropic scale factor.

mapMatrix – The output affine transformation, 2x3 floating-point matrix.

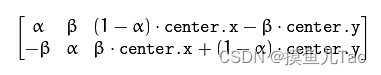

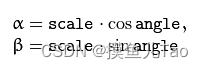

The function calculates the following matrix:

where

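void TestDemo::rotate_demo(Mat& image, double angle, double scale)
{
    /*
    angle: rotation angle in degrees; positive rotates counter-clockwise, negative rotates clockwise
    scale: scale factor; greater than 1 enlarges, less than 1 shrinks
    */
    Mat dst, M;
    int w = image.cols;
    int h = image.rows;
    M = getRotationMatrix2D(Point2f(w / 2.0f, h / 2.0f), angle, scale); // rotation matrix about the image center
    double cos_a = std::abs(M.at<double>(0, 0)); // |scale * cos(angle)|
    double sin_a = std::abs(M.at<double>(0, 1)); // |scale * sin(angle)|
    int nw = static_cast<int>(cos_a * w + sin_a * h); // width of the canvas that holds the rotated image
    int nh = static_cast<int>(sin_a * w + cos_a * h); // height of the canvas that holds the rotated image
    M.at<double>(0, 2) += (nw - w) / 2.0; // shift so the result is centered in the new canvas
    M.at<double>(1, 2) += (nh - h) / 2.0;
    warpAffine(image, dst, M, Size(nw, nh)); // rotate the image
    namedWindow("rotate_demo", WINDOW_FREERATIO);
    imshow("rotate_demo", dst);
}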
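To see where the numbers in rotate_demo come from, the sketch below builds the 2x3 matrix by hand, assuming the form given in the OpenCV documentation (alpha = scale * cos(angle), beta = scale * sin(angle)), and derives the enlarged canvas from it. It does not call OpenCV; `Affine2x3`, `Canvas`, `rotation_matrix` and `rotated_canvas` are hypothetical names, and the canvas size is rounded here rather than truncated.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Hypothetical stand-in for the 2x3 matrix returned by getRotationMatrix2D.
using Affine2x3 = std::array<std::array<double, 3>, 2>;

// Rotation by angle_deg (counter-clockwise) and uniform scaling about (cx, cy).
Affine2x3 rotation_matrix(double cx, double cy, double angle_deg, double scale)
{
    const double pi = std::acos(-1.0);
    const double rad = angle_deg * pi / 180.0;
    const double alpha = scale * std::cos(rad);
    const double beta = scale * std::sin(rad);
    Affine2x3 m = {{{alpha, beta, (1.0 - alpha) * cx - beta * cy},
                    {-beta, alpha, beta * cx + (1.0 - alpha) * cy}}};
    return m;
}

struct Canvas
{
    int width;
    int height;
};

// Size of the axis-aligned box that contains the transformed w x h image.
Canvas rotated_canvas(int w, int h, const Affine2x3& m)
{
    assert(w > 0 && h > 0);
    const double c = std::abs(m[0][0]);
    const double s = std::abs(m[0][1]);
    return Canvas{static_cast<int>(std::lround(c * w + s * h)),
                  static_cast<int>(std::lround(s * w + c * h))};
}
```

A useful property to check is that the rotation center is a fixed point of the matrix: applying it to (cx, cy) returns (cx, cy). That is why rotate_demo only has to add half of the canvas growth to the translation column to keep the result centered.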

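1.21 Using video files and cameras

void TestDemo::video_demo()
{
    VideoCapture capture(0); // open the camera
    if (!capture.isOpened()) // the camera could not be opened
    {
        cout << "Failed to open the camera" << endl;
        return;
    }
    Mat frame, mirrored;
    while (true)
    {
        capture >> frame; // read a frame from the camera
        if (frame.empty()) // stop when no frame is delivered
        {
            break;
        }
        flip(frame, mirrored, 1); // mirror the frame horizontally for display
        imshow("video_demo", mirrored); // show the camera image
        if (waitKey(30) == 27) // Esc pressed
        {
            break;
        }
    }
    capture.release(); // release the camera
    destroyAllWindows(); // destroy all windows
}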

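1.22 Processing and saving video

void TestDemo::videowriter_demo()
{
    // save the camera stream to a video file
    VideoWriter writer;
    Mat frame;
    VideoCapture capture(0);
    if (!capture.isOpened()) // the camera could not be opened
    {
        cout << "Failed to open the camera" << endl;
        return;
    }
    Size size = Size(static_cast<int>(capture.get(CAP_PROP_FRAME_WIDTH)),
                     static_cast<int>(capture.get(CAP_PROP_FRAME_HEIGHT)));
    writer.open("./video_demo.avi", VideoWriter::fourcc('M', 'J', 'P', 'G'), 25, size); // 25 frames per second
    while (true)
    {
        capture >> frame; // read a frame from the camera
        if (frame.empty()) // stop when no frame is delivered
        {
            break;
        }
        writer.write(frame); // append the frame to the video
        imshow("videowrite_demo", frame); // show the camera image
        if (waitKey(30) == 27) // Esc pressed
        {
            break;
        }
    }
    writer.release(); // close the video file
    capture.release();
    destroyAllWindows();
}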
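The codec identifier passed to writer.open is a FourCC code: four characters packed into one 32-bit integer, with the first character in the lowest byte. Because the packing is case-sensitive, 'M','J','P','G' and 'M','J','p','G' are different codes. The sketch below shows the packing without OpenCV; `pack_fourcc` and `unpack_fourcc` are hypothetical helper names.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Pack a four-character code; the first character ends up in the lowest byte.
std::uint32_t pack_fourcc(const std::string& code)
{
    assert(code.size() == 4); // a FourCC always has exactly four characters
    std::uint32_t packed = 0;
    for (int i = 0; i < 4; ++i)
    {
        packed |= static_cast<std::uint32_t>(static_cast<unsigned char>(code[i])) << (8 * i);
    }
    return packed;
}

// Recover the four characters from a packed code.
std::string unpack_fourcc(std::uint32_t packed)
{
    std::string code(4, ' ');
    for (int i = 0; i < 4; ++i)
    {
        code[i] = static_cast<char>((packed >> (8 * i)) & 0xFF);
    }
    return code;
}
```

Unpacking is handy for debugging: the value a capture device reports for its codec property can be turned back into readable characters the same way.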