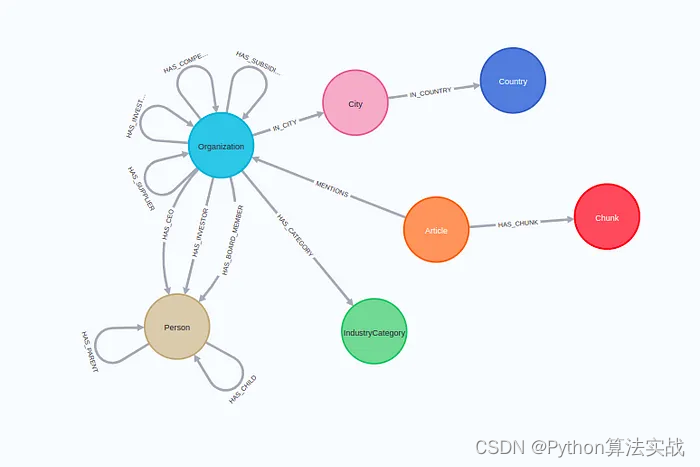

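Multi-document agents (MDAs) mark a significant advance in natural language processing and information retrieval. They add capabilities such as reranking during document retrieval and query planning tools, a clear shift from how retrieval systems have typically worked.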

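Traditional search engines and document retrieval systems take a single-document approach, which limits their ability to give comprehensive, nuanced answers to complex queries. MDAs instead draw on the knowledge spread across multiple documents to produce more accurate and insightful responses.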

In this article we define MDAs, look at their advantages in the context of LlamaIndex, and walk through a code implementation.


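Definitions

Before going further, let's clarify two key concepts:

Multi-document agents (MDAs): intelligent systems that process and synthesize information from multiple documents to give comprehensive responses to user queries.

LlamaIndex: a framework for document indexing and retrieval that serves as the foundation for building MDAs.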

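Advantages of Multi-Document Agents with LlamaIndex

Comprehensive information retrieval: by drawing on knowledge spread across multiple documents, MDAs built on LlamaIndex can give more complete and accurate answers than a system that consults one document at a time.

Improved relevance: reranking the retrieved documents lets an MDA put the most relevant information first, so the answer is grounded in the passages that best match the specific query.

Efficient query planning: query planning tools let an MDA optimize its search strategy, for example by breaking a comparison question into sub-questions per document, which makes retrieval more efficient and effective.

Scalability: LlamaIndex provides the infrastructure for building and deploying MDAs at scale, so they can handle large volumes of data and support use cases across different domains.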
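The reranking idea can be illustrated without any external service. The sketch below is a toy under stated assumptions: `overlap_score` stands in for a cheap first-pass retriever, and `rerank_score` is a hypothetical second-pass scorer. In the article's pipeline the second stage is Cohere's reranker, not this function.

```python
def overlap_score(query: str, text: str) -> int:
    """Cheap first-pass score: distinct query words that appear in the text."""
    query_words = set(query.lower().split())
    text_words = set(text.lower().split())
    return len(query_words & text_words)


def rerank_score(query: str, text: str) -> float:
    """Hypothetical second-pass score: overlap normalized by text length,
    so short, focused passages outrank long ones with the same overlap."""
    words = text.lower().split()
    return overlap_score(query, text) / len(words) if words else 0.0


def retrieve_then_rerank(query, docs, top_k=3, top_n=2):
    # Stage 1: keep the top_k candidates by the cheap score.
    candidates = sorted(
        docs, key=lambda d: overlap_score(query, d), reverse=True
    )[:top_k]
    # Stage 2: reorder only those candidates with the second score, keep top_n.
    return sorted(
        candidates, key=lambda d: rerank_score(query, d), reverse=True
    )[:top_n]


docs = [
    "agents can call tools and plan queries across many long documents in an index",
    "agents overview and types of agents available",
    "installation guide for the library",
    "types of agents",
]
result = retrieve_then_rerank("types of agents", docs)
print(result)
```

The first stage ranks the longer overview passage ahead of the short one because ties keep their input order; the second stage flips them. That two-stage shape (wide, cheap retrieval followed by a narrower, more careful ordering) is what `similarity_top_k=10` followed by `CohereRerank(top_n=5)` expresses later in the article.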

Code Implementation

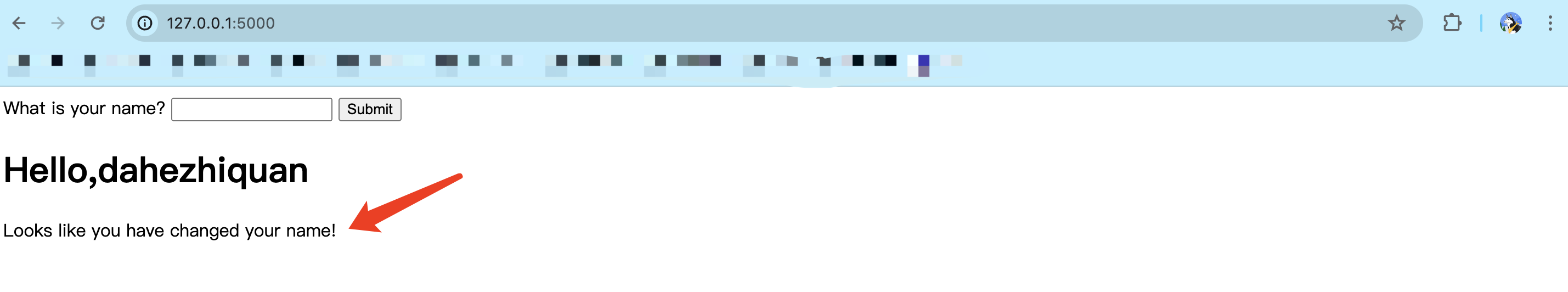

Now let's walk through a basic implementation of MDAs with LlamaIndex:

Step 1: Install the libraries

%pip install llama-index-core

%pip install llama-index-agent-openai

%pip install llama-index-readers-file

%pip install llama-index-postprocessor-cohere-rerank

%pip install llama-index-llms-openai

%pip install llama-index-embeddings-openai

%pip install llama-index-llms-anthropic

%pip install llama-index-embeddings-huggingface

%pip install unstructured[html]

Step 2: Set up and download the data

domain = "docs.llamaindex.ai"

docs_url = "https://docs.llamaindex.ai/en/latest/"

!wget -e robots=off --recursive --no-clobber --page-requisites --html-extension --convert-links --restrict-file-names=windows --domains {domain} --no-parent {docs_url}

from pathlib import Path

from llama_index.readers.file import UnstructuredReader

reader = UnstructuredReader()

# Collect every HTML page that wget saved under ./docs.llamaindex.ai/
all_files_gen = Path("./docs.llamaindex.ai/").rglob("*")
all_files = [f.resolve() for f in all_files_gen]
all_html_files = [f for f in all_files if f.suffix.lower() == ".html"]
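Step 4 iterates over a `docs` list whose items expose `metadata["path"]`, but the listing above stops at the list of HTML files. One way to bridge that gap is sketched below. Everything named here is an assumption for illustration: `load_docs`, `doc_limit`, and the injected `load_file` and `make_doc` callables are hypothetical, chosen so the merging logic can run without any library installed.

```python
from pathlib import Path
from typing import Any, Callable, Iterable, List


def load_docs(
    files: Iterable[Path],
    load_file: Callable[[Path], List[Any]],
    make_doc: Callable[[str, dict], Any],
    doc_limit: int = 100,
) -> List[Any]:
    """Merge the pieces parsed from each file into one document per file.

    load_file: returns the parsed pieces of one file; each piece is
        assumed to offer a get_content() method.
    make_doc: builds the final document from (text, metadata).
    """
    docs = []
    for idx, path in enumerate(files):
        if idx >= doc_limit:
            break
        pieces = load_file(path)
        text = "\n\n".join(piece.get_content() for piece in pieces)
        # Step 4 reads doc.metadata["path"] to name each agent.
        docs.append(make_doc(text, {"path": str(path)}))
    return docs


class _Piece:
    """Stand-in for a parsed chunk, used only for this demo."""

    def __init__(self, content: str) -> None:
        self._content = content

    def get_content(self) -> str:
        return self._content


demo_docs = load_docs(
    [Path("agents/index.html")],
    load_file=lambda p: [_Piece("Agents"), _Piece("Types of agents")],
    make_doc=lambda text, metadata: {"text": text, "metadata": metadata},
)
print(demo_docs)
```

With the article's libraries, this might be called as `docs = load_docs(all_html_files, lambda f: reader.load_data(file=f, split_documents=True), lambda text, metadata: Document(text=text, metadata=metadata))`, with `Document` imported from `llama_index.core`. Treat the exact reader arguments as an assumption to check against the installed version.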

Step 3: Define the global LLM and embedding model

import os

import nest_asyncio
from llama_index.core import Settings
from llama_index.embeddings.openai import OpenAIEmbedding
from llama_index.llms.openai import OpenAI

os.environ["OPENAI_API_KEY"] = "sk-..."

# Allow nested event loops so that `await` works inside a notebook.
nest_asyncio.apply()

llm = OpenAI(model="gpt-3.5-turbo")
Settings.llm = llm
Settings.embed_model = OpenAIEmbedding(
    model="text-embedding-3-small", embed_batch_size=256
)

Step 4: Build the multi-document agents

import os
import pickle
from pathlib import Path

from llama_index.agent.openai import OpenAIAgent
from llama_index.core import (
    StorageContext,
    SummaryIndex,
    VectorStoreIndex,
    load_index_from_storage,
)
from llama_index.core.node_parser import SentenceSplitter
from llama_index.core.tools import QueryEngineTool, ToolMetadata
from llama_index.llms.openai import OpenAI
from tqdm.notebook import tqdm


async def build_agent_per_doc(nodes, file_base):
    print(file_base)

    vi_out_path = f"./data/llamaindex_docs/{file_base}"
    summary_out_path = f"./data/llamaindex_docs/{file_base}_summary.pkl"
    if not os.path.exists(vi_out_path):
        Path("./data/llamaindex_docs/").mkdir(parents=True, exist_ok=True)
        # build the vector index and persist it for later runs
        vector_index = VectorStoreIndex(nodes)
        vector_index.storage_context.persist(persist_dir=vi_out_path)
    else:
        # reuse the index persisted by an earlier run
        vector_index = load_index_from_storage(
            StorageContext.from_defaults(persist_dir=vi_out_path),
        )

    # build summary index
    summary_index = SummaryIndex(nodes)

    # define query engines
    vector_query_engine = vector_index.as_query_engine(llm=llm)
    summary_query_engine = summary_index.as_query_engine(
        response_mode="tree_summarize", llm=llm
    )

    # extract a summary, cached on disk so it is only generated once
    if not os.path.exists(summary_out_path):
        Path(summary_out_path).parent.mkdir(parents=True, exist_ok=True)
        summary = str(
            await summary_query_engine.aquery(
                "Extract a concise 1-2 line summary of this document"
            )
        )
        with open(summary_out_path, "wb") as f:
            pickle.dump(summary, f)
    else:
        with open(summary_out_path, "rb") as f:
            summary = pickle.load(f)

    # define tools
    query_engine_tools = [
        QueryEngineTool(
            query_engine=vector_query_engine,
            metadata=ToolMetadata(
                name=f"vector_tool_{file_base}",
                description="Useful for questions related to specific facts",
            ),
        ),
        QueryEngineTool(
            query_engine=summary_query_engine,
            metadata=ToolMetadata(
                name=f"summary_tool_{file_base}",
                description="Useful for summarization questions",
            ),
        ),
    ]

    # build agent
    function_llm = OpenAI(model="gpt-4")
    agent = OpenAIAgent.from_tools(
        query_engine_tools,
        llm=function_llm,
        verbose=True,
        system_prompt=f"""\
You are a specialized agent designed to answer queries about the `{file_base}.html` part of the LlamaIndex docs.
You must ALWAYS use at least one of the tools provided when answering a question; do NOT rely on prior knowledge.\
""",
    )

    return agent, summary


async def build_agents(docs):
    node_parser = SentenceSplitter()

    # Build agents dictionary
    agents_dict = {}
    extra_info_dict = {}

    for idx, doc in enumerate(tqdm(docs)):
        nodes = node_parser.get_nodes_from_documents([doc])

        # ID will be parent directory name + file stem
        file_path = Path(doc.metadata["path"])
        file_base = str(file_path.parent.stem) + "_" + str(file_path.stem)
        agent, summary = await build_agent_per_doc(nodes, file_base)

        agents_dict[file_base] = agent
        extra_info_dict[file_base] = {"summary": summary, "nodes": nodes}

    return agents_dict, extra_info_dict


# `docs` is the list of loaded documents; each one needs metadata["path"] set.
agents_dict, extra_info_dict = await build_agents(docs)
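Each agent's ID, and with it the cache paths and tool names, comes from the parent directory name plus the file stem. The sketch below isolates that rule so its behavior is easy to inspect. `file_base_for` is a hypothetical helper name, and the example paths are made up for illustration rather than taken from the downloaded site.

```python
from pathlib import Path


def file_base_for(path: str) -> str:
    """Parent directory stem + "_" + file stem, mirroring build_agents."""
    p = Path(path)
    return f"{p.parent.stem}_{p.stem}"


# Illustrative paths only; the real crawl may contain different ones.
examples = [
    "docs.llamaindex.ai/en/latest/index.html",
    "docs.llamaindex.ai/en/latest/agents/index.html",
    "docs.llamaindex.ai/en/latest/examples/agent/index.html",
    "docs.llamaindex.ai/en/latest/api_reference/agent/index.html",
]
ids = [file_base_for(e) for e in examples]
print(ids)

# The last two paths differ only higher up the tree, so their IDs collide.
has_collision = len(set(ids)) < len(ids)
print(has_collision)
```

Because only one directory level goes into the ID, two pages that share both a parent directory name and a file name would map to the same key in `agents_dict`, and the later one would replace the earlier one. If that matters for a given crawl, including more path components in the ID is one option.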

Step 5: Build a retrieval-enabled OpenAI agent

# define a tool for each document agent
all_tools = []
for file_base, agent in agents_dict.items():
    summary = extra_info_dict[file_base]["summary"]
    doc_tool = QueryEngineTool(
        query_engine=agent,
        metadata=ToolMetadata(
            name=f"tool_{file_base}",
            description=summary,
        ),
    )
    all_tools.append(doc_tool)

print(all_tools[0].metadata)

Output:

ToolMetadata(description='This document provides examples and documentation for an agent on the llama index platform.', name='tool_latest_index', fn_schema=)

Step 6: Create the ObjectIndex

from llama_index.agent.openai import OpenAIAgent
from llama_index.core import VectorStoreIndex
from llama_index.core.objects import ObjectIndex, ObjectRetriever
from llama_index.core.query_engine import SubQuestionQueryEngine
from llama_index.core.schema import QueryBundle
from llama_index.llms.openai import OpenAI
from llama_index.postprocessor.cohere_rerank import CohereRerank

llm = OpenAI(model="gpt-4-0613")

# index the per-document tools so they can be retrieved by similarity
obj_index = ObjectIndex.from_objects(
    all_tools,
    index_cls=VectorStoreIndex,
)
vector_node_retriever = obj_index.as_node_retriever(
    similarity_top_k=10,
)


# define a custom object retriever that adds in a query planning tool
class CustomObjectRetriever(ObjectRetriever):
    def __init__(
        self,
        retriever,
        object_node_mapping,
        node_postprocessors=None,
        llm=None,
    ):
        self._retriever = retriever
        self._object_node_mapping = object_node_mapping
        self._llm = llm or OpenAI(model="gpt-4-0613")
        self._node_postprocessors = node_postprocessors or []

    def retrieve(self, query_bundle):
        if isinstance(query_bundle, str):
            query_bundle = QueryBundle(query_str=query_bundle)

        # retrieve candidate tool nodes, then let each postprocessor rerank them
        nodes = self._retriever.retrieve(query_bundle)
        for processor in self._node_postprocessors:
            nodes = processor.postprocess_nodes(
                nodes, query_bundle=query_bundle
            )
        tools = [self._object_node_mapping.from_node(n.node) for n in nodes]

        # build a comparison tool over the retrieved document tools
        sub_question_engine = SubQuestionQueryEngine.from_defaults(
            query_engine_tools=tools, llm=self._llm
        )
        sub_question_description = f"""\
Useful for any queries that involve comparing multiple documents. ALWAYS use this tool for comparison queries - make sure to call this \
tool with the original query. Do NOT use the other tools for any queries involving multiple documents.
"""
        sub_question_tool = QueryEngineTool(
            query_engine=sub_question_engine,
            metadata=ToolMetadata(
                name="compare_tool", description=sub_question_description
            ),
        )

        return tools + [sub_question_tool]


# wrap it with ObjectRetriever to return objects
# (CohereRerank needs a Cohere API key, e.g. via the COHERE_API_KEY environment variable)
custom_obj_retriever = CustomObjectRetriever(
    vector_node_retriever,
    obj_index.object_node_mapping,
    node_postprocessors=[CohereRerank(top_n=5)],
    llm=llm,
)

top_agent = OpenAIAgent.from_tools(
    tool_retriever=custom_obj_retriever,
    system_prompt="""\
You are an agent designed to answer queries about the documentation.
Please always use the tools provided to answer a question. Do not rely on prior knowledge.\
""",
    llm=llm,
    verbose=True,
)
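The control flow inside the custom retriever can be followed more easily with the library stripped away. The sketch below is a plain-Python stand-in, not LlamaIndex code: `Tool`, `retrieve_tools`, `word_overlap`, and `keep_top_two` are hypothetical names, and the word-overlap score replaces the embedding similarity that the real retriever would use.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Tool:
    name: str
    description: str


def retrieve_tools(
    query: str,
    tools: List[Tool],
    score: Callable[[str, Tool], float],
    postprocessors: List[Callable[[str, List[Tool]], List[Tool]]],
    top_k: int = 10,
) -> List[Tool]:
    # 1. First-pass retrieval over the tool descriptions.
    hits = sorted(tools, key=lambda t: score(query, t), reverse=True)[:top_k]
    # 2. Each postprocessor (for example a reranker) may reorder or trim the hits.
    for post in postprocessors:
        hits = post(query, hits)
    # 3. Always append a comparison tool built over the surviving hits.
    compare = Tool(
        name="compare_tool",
        description="Compares across: " + ", ".join(t.name for t in hits),
    )
    return hits + [compare]


def word_overlap(query: str, tool: Tool) -> float:
    """Toy relevance score: shared words between query and description."""
    query_words = set(query.lower().split())
    return float(len(query_words & set(tool.description.lower().split())))


def keep_top_two(query: str, hits: List[Tool]) -> List[Tool]:
    """Toy postprocessor that only trims the list."""
    return hits[:2]


tools = [
    Tool("tool_agents_index", "types of agents and how to use them"),
    Tool("tool_latest_index", "overview of the documentation"),
    Tool("tool_install_index", "how to install the package"),
]
selected = retrieve_tools("types of agents", tools, word_overlap, [keep_top_two])
names = [t.name for t in selected]
print(names)
```

The point of the design is that the top-level agent never sees every document tool at once. It sees only the few retrieved for the current query, plus one comparison tool scoped to those same documents.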

Step 7: Define the baseline vector store index

# flatten the nodes of every document into one list
all_nodes = [
    n for extra_info in extra_info_dict.values() for n in extra_info["nodes"]
]
base_index = VectorStoreIndex(all_nodes)
base_query_engine = base_index.as_query_engine(similarity_top_k=4)

Step 8: Run the top-level agent vs. the baseline query

response = top_agent.query(
    "What types of agents are available in LlamaIndex?",
)

Output:

Added user message to memory: What types of agents are available in LlamaIndex?
=== Calling Function ===
Calling function: tool_agents_index with args: {"input":"types of agents"}
Added user message to memory: types of agents
=== Calling Function ===
Calling function: vector_tool_agents_index with args: {"input": "types of agents"}
Got output: The types of agents mentioned in the provided context are ReActAgent, Native OpenAIAgent, OpenAIAgent with Query Engine Tools, OpenAIAgent Query Planning, OpenAI Assistant, OpenAI Assistant Cookbook, Forced Function Calling, Parallel Function Calling, and Context Retrieval.
========================
Got output: The types of agents mentioned in the `agents_index.html` part of the LlamaIndex docs are:
1. ReActAgent
2. Native OpenAIAgent
3. OpenAIAgent with Query Engine Tools
4. OpenAIAgent Query Planning
5. OpenAI Assistant
6. OpenAI Assistant Cookbook
7. Forced Function Calling
8. Parallel Function Calling
9. Context Retrieval
========================

# baseline
response = base_query_engine.query(
    "What types of agents are available in LlamaIndex?",
)
print(str(response))

Output:

The types of agents available in LlamaIndex are ReActAgent, Native OpenAIAgent, and OpenAIAgent.

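Conclusion

Multi-document agents are a significant step forward for information retrieval, particularly when built on a framework such as LlamaIndex. By drawing on the knowledge spread across multiple documents, MDAs make retrieval more comprehensive, relevant, and efficient. With the V1 MDAs adding reranking and query planning, the range of potential applications is wide. As this technology continues to be explored and refined, further progress in natural language processing and information retrieval can be expected.

In short, MDAs built on LlamaIndex stand to change how we interact with large information repositories and extract insights from them.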
