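Reference: 用Python写网络爬虫(第2版) (Writing Web Crawlers with Python, 2nd edition)

1. Write a function

(1) Download a web page and report the error promptly if the download fails.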

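    # Import libraries
    import urllib.request
    from urllib.error import URLError, HTTPError, ContentTooShortError

    def download(url):
        print("downloading: ", url)
        try:
            html = urllib.request.urlopen(url).read()
        except (URLError, HTTPError, ContentTooShortError) as e:
            print("Download error: ", e.reason)
            html = None
        return html

    # Test the function
    url = "http://www.baidu.com"
    download(url)

(2) Add retry support, so that the download is retried automatically when the server has a problem. (4xx errors occur when something is wrong with the request, whereas 5xx errors occur when something is wrong on the server side.)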
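The 4xx/5xx rule above can be written as a small predicate. This is only a sketch: `is_server_error` is a helper name invented here, not something from the book, and the three status codes are just sample inputs.

```python
from http import HTTPStatus


def is_server_error(code):
    """Return True for 5xx status codes, the ones treated as worth retrying."""
    return 500 <= code < 600


# Sample status codes: one client error and two server errors.
for status in (HTTPStatus.NOT_FOUND,
               HTTPStatus.INTERNAL_SERVER_ERROR,
               HTTPStatus.SERVICE_UNAVAILABLE):
    action = "retry" if is_server_error(status) else "give up"
    print(status.value, status.phrase, "->", action)
```

A 4xx response means the request itself is at fault, so sending it again unchanged is unlikely to help, which is why only the 5xx range is retried.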

    # Import libraries
    import urllib.request
    from urllib.error import URLError, HTTPError, ContentTooShortError

    def download(url, num_retries=2):
        print("downloading: ", url)
        try:
            html = urllib.request.urlopen(url).read()
        except (URLError, HTTPError, ContentTooShortError) as e:
            print("download error: ", e.reason)
            html = None
            if num_retries > 0:
                if hasattr(e, "code") and 500 <= e.code < 600:
                    # on a 5xx error code, download calls itself recursively to retry
                    return download(url, num_retries - 1)
        return html

    # Test the function
    download("http://httpstat.us/500")

(3) Set a user agent. The default user agent is "wswp" (the initials of Web Scraping with Python).

    # Import libraries
    import urllib.request
    from urllib.error import URLError, HTTPError, ContentTooShortError

    def download(url, user_agent="wswp", num_retries=2):
        print("downloading: ", url)
        request = urllib.request.Request(url)
        request.add_header("User-agent", user_agent)
        try:
            html = urllib.request.urlopen(request).read()
        except (URLError, HTTPError, ContentTooShortError) as e:
            print("download error: ", e.reason)
            html = None
            if num_retries > 0:
                if hasattr(e, "code") and 500 <= e.code < 600:
                    # retry on 5xx errors; user_agent is passed along explicitly so that
                    # num_retries - 1 is not taken as the second positional argument
                    return download(url, user_agent, num_retries - 1)
        return html
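One way to confirm that the header is attached, without sending anything over the network, is to inspect the `Request` object directly. The following is a minimal sketch; the URL is only a placeholder and no connection is opened.

```python
import urllib.request

# Build the request the same way download() does, but do not open it.
request = urllib.request.Request("http://example.com")
request.add_header("User-agent", "wswp")

# urllib stores header names via str.capitalize(), so the lookup key
# must be spelled "User-agent" rather than "User-Agent".
print(request.get_header("User-agent"))
print(request.header_items())
```

If no header is added, `urllib` sends its own default user agent of the form `Python-urllib/3.x`, which some sites block; that is the motivation for setting a custom one.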

# 函数测试

url="http://www.baidu.com"

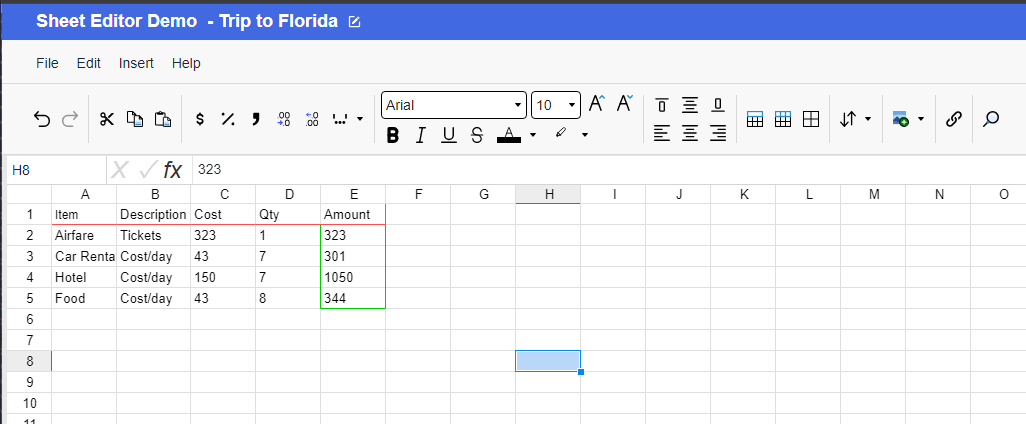

download(url)2、利用id遍历爬虫

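    import itertools

    def crawl_site(url):
        for page in itertools.count(1):
            pg_url = "{}-{}".format(url, page)
            html = download(pg_url)  # download is the function written in the previous example
            if html is None:
                break

    # Test
    url = "http://example.python-scraping.com/view/"
    crawl_site(url)

The code above has a flaw: it only works if the ids in the page URLs are consecutive. If some records have been deleted, the database ids are no longer consecutive, and the crawler exits as soon as it reaches the first gap. The improved version below exits only after several download errors in a row.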

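    def crawl_site(url, max_errors=5):
        num_errors = 0  # consecutive download errors so far; must be initialised before the loop
        for page in itertools.count(1):
            pg_url = "{}{}".format(url, page)
            html = download(pg_url)
            if html is None:
                num_errors += 1
                if num_errors == max_errors:
                    # stop once html has been None max_errors times in a row
                    break
            else:
                # on a successful download, reset num_errors to zero so the count starts over
                num_errors = 0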
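The consecutive-error logic can be checked offline by passing in a stand-in for `download`. The sketch below rests on several assumptions that are not in the original: the extra `download` parameter, the returned list of fetched URLs, the `fake_download` helper, the set of existing ids, and the placeholder URL are all hypothetical and exist only to make the behaviour observable.

```python
import itertools


def crawl_site(url, download, max_errors=5):
    """Same loop as above, but `download` is injected (an assumption made
    here so that the logic can run without network access)."""
    num_errors = 0
    fetched = []  # URLs that were downloaded successfully
    for page in itertools.count(1):
        pg_url = "{}{}".format(url, page)
        html = download(pg_url)
        if html is None:
            num_errors += 1
            if num_errors == max_errors:
                break
        else:
            num_errors = 0
            fetched.append(pg_url)
    return fetched


# Hypothetical site: ids 1, 2, 5 and 6 exist; ids 3 and 4 were deleted.
EXISTING_IDS = {1, 2, 5, 6}


def fake_download(pg_url):
    """Pretend to download: return bytes for existing ids, None otherwise."""
    page_id = int(pg_url.rsplit("/", 1)[-1])
    return b"<html></html>" if page_id in EXISTING_IDS else None


base = "http://example.invalid/view/"  # placeholder URL, never contacted
pages = crawl_site(base, fake_download, max_errors=3)
print(pages)
```

With `max_errors=3` the two-id gap is skipped and the crawl continues to ids 5 and 6; with `max_errors=2` the same gap ends the crawl after id 2. The threshold therefore has to be larger than the longest run of missing ids one expects.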
