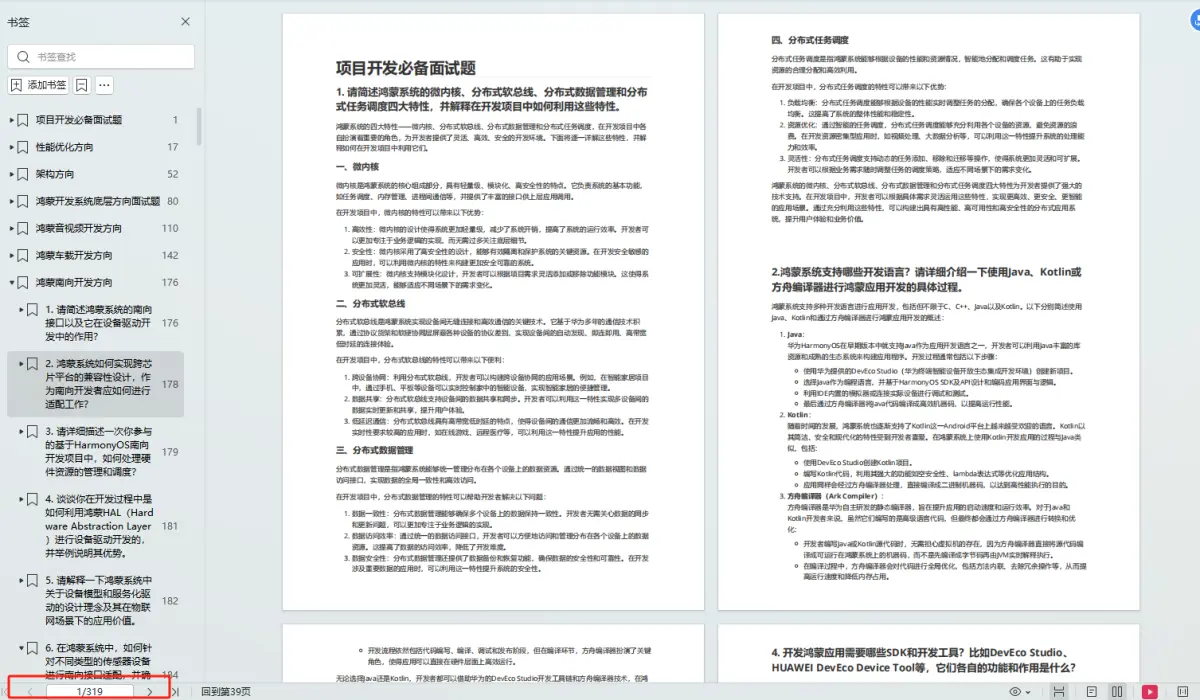

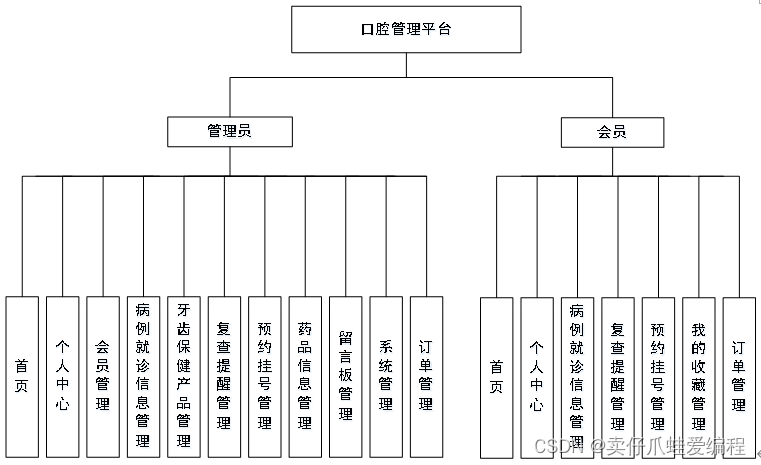

Contents

1. Project layout (see figure):

2. Code details

__init__.py

dbbook.py

__init__.py

items.py

pipelines.py

settings.py

main.py

scrapy.cfg

4. Preparation before the experiment:

1. Project layout (see figure):

2. Code details

__init__.py

# This package will contain the spiders of your Scrapy project

#

# Please refer to the documentation for information on how to create and manage

# your spiders.

dbbook.py

# -*- coding: utf-8 -*-
import re

import scrapy

from doubanbook.items import DoubanbookItem


class DbbookSpider(scrapy.Spider):
    name = "dbbook"
    # allowed_domains = ["https://www.douban.com/doulist/1264675/"]
    start_urls = ('https://www.douban.com/doulist/1264675/',)
    URL = 'https://www.douban.com/doulist/1264675/?start=PAGE&sort=seq&sub_type='

    def parse(self, response):
        # print response.body
        item = DoubanbookItem()
        selector = scrapy.Selector(response)
        books = selector.xpath('//div[@class="bd doulist-subject"]')
        for each in books:
            title = each.xpath('.//div[@class="title"]/a/text()').get().strip()
            rate = each.xpath('.//div[@class="rating"]/span[@class="rating_nums"]/text()').get().strip()
            abstract = each.xpath('.//div[@class="abstract"]').extract_first().strip()
            # Use XPath to extract the author, publisher, and publication year.
            # Note: the item yielded below is built from the whitespace-stripped
            # strings, not from these item[...] values.
            author = each.xpath('.//div[@class="abstract"]/text()[contains(., "作者")]').extract_first()
            item['author'] = author.split(':')[1].strip()
            publisher = each.xpath('.//div[@class="abstract"]/text()[contains(., "出版社")]').extract_first()
            item['publisher'] = publisher.split(':')[1].strip()
            year = each.xpath('.//div[@class="abstract"]/text()[contains(., "出版年")]').extract_first()
            item['year'] = year.split(':')[1].strip()
            title = title.replace(' ', '').replace('\n', '')
            author = author.replace(' ', '').replace('\n', '')
            year = year.replace(' ', '').replace('\n', '')
            publisher = publisher.replace(' ', '').replace('\n', '')
            # Equivalent alternative, kept for reference:
            # author = each.xpath('.//div[@class="abstract"]/text()').get().strip().split('作者:')[-1].split('<br>')[0].strip()
            # publisher = each.xpath('.//div[@class="abstract"]/text()').get().strip().split('出版社:')[-1].split('<br>')[0].strip()
            # year = each.xpath('.//div[@class="abstract"]/text()').get().strip().split('出版年:')[-1].split('<br>')[0].strip()
            yield DoubanbookItem(title=title, rate=rate, author=author, publisher=publisher, year=year)

        # Earlier regex-based version, kept for reference:
        # for each in books:
        #     title = each.xpath('div[@class="title"]/a/text()').extract()[0]
        #     rate = each.xpath('div[@class="rating"]/span[@class="rating_nums"]/text()').extract()[0]
        #     author = re.search('<div class="abstract">(.*?)<br', each.extract(), re.S).group(1)
        #     abstract = each.xpath('.//div[@class="abstract"]/text()').get().strip()
        #     publisher = re.search(r'出版社:(.*?)(?=<br|\Z)', abstract)
        #     year = re.search(r'出版年:(\d{4}-\d+)(?=<br|\Z)', abstract)
        #     item['title'] = title
        #     item['rate'] = rate
        #     item['author'] = author
        #     item['year'] = year
        #     item['publisher'] = publisher
        #     # print 'title:' + title
        #     # print 'rate:' + rate
        #     # print author
        #     # print ''
        #     yield item

        # Follow the "next page" link, if there is one.
        nextPage = selector.xpath('//span[@class="next"]/link/@href').extract()
        if nextPage:
            next_url = nextPage[0]
            print(next_url)
            yield scrapy.http.Request(next_url, callback=self.parse)

__init__.py

items.py

# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# http://doc.scrapy.org/en/latest/topics/items.html

import scrapy


class DoubanbookItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    title = scrapy.Field()
    rate = scrapy.Field()
    author = scrapy.Field()
    publisher = scrapy.Field()
    year = scrapy.Field()
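The `author`, `publisher`, and `year` fields are filled from the text of the `abstract` block, and the spider assumes every label is present. As a rough sketch of a more forgiving approach, the helper below maps a list of text nodes (for example, what `each.xpath('.//div[@class="abstract"]/text()').getall()` might return) to the three fields and leaves a field empty when its label is missing. The function name `parse_abstract`, the sample values, and the assumption that the separator is an ASCII or full-width colon are illustrative, not taken from the original project.

```python
import re

# Labels as they appear in the spider's XPath filters.
FIELD_LABELS = {"author": "作者", "publisher": "出版社", "year": "出版年"}


def parse_abstract(text_nodes):
    """Map the text nodes of one abstract block to author/publisher/year.

    Fields whose label is not found stay as empty strings.
    """
    result = {field: "" for field in FIELD_LABELS}
    for node in text_nodes:
        line = node.strip()
        for field, label in FIELD_LABELS.items():
            # Assumption: the label is followed by an ASCII or full-width colon.
            match = re.match(rf"{label}\s*[:：]\s*(.*)", line)
            if match:
                result[field] = match.group(1).strip()
    return result


# Made-up sample data, shaped like the text nodes of one abstract block.
sample = ["\n  作者: 某作者\n", "\n  出版社: 某出版社\n", "\n  出版年: 2020-1\n"]
print(parse_abstract(sample))
```

Because missing labels produce empty strings instead of raising an exception, a single incomplete entry would not stop the crawl.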

pipelines.py

# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html


class DoubanbookPipeline(object):
    def process_item(self, item, spider):
        return item
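The pipeline above passes every item through unchanged. As a minimal sketch of what a pipeline could do here, the class below moves the whitespace cleanup that the spider performs with `replace(' ', '').replace('\n', '')` into `process_item`. The class name `CleanWhitespacePipeline` is hypothetical, and the demo uses a plain dict in place of a `DoubanbookItem`.

```python
class CleanWhitespacePipeline:
    """Hypothetical pipeline: remove spaces and newlines from string fields."""

    def process_item(self, item, spider):
        for key in list(item.keys()):
            value = item[key]
            if isinstance(value, str):
                # Same cleanup the spider applies to title, author, publisher, year.
                item[key] = value.replace(" ", "").replace("\n", "")
        return item


# Demo with a plain dict standing in for a scraped item.
cleaned = CleanWhitespacePipeline().process_item(
    {"title": " A \n Book ", "rate": "9.0"}, spider=None
)
print(cleaned)
```

To take effect in the project, a pipeline like this would have to be listed in `ITEM_PIPELINES` in the settings file, for example under the key `'doubanbook.pipelines.CleanWhitespacePipeline'`.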

settings.py

# -*- coding: utf-8 -*-

# Scrapy settings for doubanbook project

#

# For simplicity, this file contains only settings considered important or

# commonly used. You can find more settings consulting the documentation:

#

# http://doc.scrapy.org/en/latest/topics/settings.html

# http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html

# http://scrapy.readthedocs.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'doubanbook'

SPIDER_MODULES = ['doubanbook.spiders']
NEWSPIDER_MODULE = 'doubanbook.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
USER_AGENT = 'Mozilla/5.0 (Windows NT 6.3; WOW64; rv:45.0) Gecko/20100101 Firefox/45.0'

FEEDS = {
    'file:///F:/2023-2024学年大三学期(2023.9.4至)/0.2023-202学年大三下学期(2023.2.22至)/douban.csv': {
        'format': 'csv',
        'encoding': 'utf-8',
    },
}

# FEED_URI = u'file:///F:/2023-2024学年大三学期(2023.9.4至)/0.2023-202学年大三下学期(2023.2.22至)/douban.csv'

# FEED_FORMAT = 'CSV'

# Configure maximum concurrent requests performed by Scrapy (default: 16)

#CONCURRENT_REQUESTS=32

# Configure a delay for requests for the same website (default: 0)

# See http://scrapy.readthedocs.org/en/latest/topics/settings.html#download-delay

# See also autothrottle settings and docs

#DOWNLOAD_DELAY=3

# The download delay setting will honor only one of:

#CONCURRENT_REQUESTS_PER_DOMAIN=16

#CONCURRENT_REQUESTS_PER_IP=16

# Disable cookies (enabled by default)
#COOKIES_ENABLED=False

# Disable Telnet Console (enabled by default)
#TELNETCONSOLE_ENABLED=False

# Override the default request headers:

#DEFAULT_REQUEST_HEADERS = {

# 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

# 'Accept-Language': 'en',

#}

# Enable or disable spider middlewares

# See http://scrapy.readthedocs.org/en/latest/topics/spider-middleware.html

#SPIDER_MIDDLEWARES = {

# 'doubanbook.middlewares.MyCustomSpiderMiddleware': 543,

#}

# Enable or disable downloader middlewares

# See http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html

#DOWNLOADER_MIDDLEWARES = {

# 'doubanbook.middlewares.MyCustomDownloaderMiddleware': 543,

#}

# Enable or disable extensions

# See http://scrapy.readthedocs.org/en/latest/topics/extensions.html

#EXTENSIONS = {

# 'scrapy.telnet.TelnetConsole': None,

#}

# Configure item pipelines

# See http://scrapy.readthedocs.org/en/latest/topics/item-pipeline.html

#ITEM_PIPELINES = {

# 'doubanbook.pipelines.SomePipeline': 300,

#}

# Enable and configure the AutoThrottle extension (disabled by default)

# See http://doc.scrapy.org/en/latest/topics/autothrottle.html

# NOTE: AutoThrottle will honour the standard settings for concurrency and delay

#AUTOTHROTTLE_ENABLED=True

# The initial download delay

#AUTOTHROTTLE_START_DELAY=5

# The maximum download delay to be set in case of high latencies

#AUTOTHROTTLE_MAX_DELAY=60

# Enable showing throttling stats for every response received:

#AUTOTHROTTLE_DEBUG=False

# Enable and configure HTTP caching (disabled by default)

# See http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

#HTTPCACHE_ENABLED=True

#HTTPCACHE_EXPIRATION_SECS=0

#HTTPCACHE_DIR='httpcache'

#HTTPCACHE_IGNORE_HTTP_CODES=[]

#HTTPCACHE_STORAGE='scrapy.extensions.httpcache.FilesystemCacheStorage'
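The settings above hard-code a `file:///` URI for the CSV export and leave the throttling options commented out. The fragment below is only a sketch of how those pieces might be written: it builds the feed URI from a Windows path with `pathlib` and switches on the delay and AutoThrottle options using the values that appear in the commented-out lines. The output path `F:/scrapy_output/douban.csv` is a hypothetical placeholder, not the author's location.

```python
from pathlib import PureWindowsPath

# Hypothetical output location; replace it with a directory that exists on your machine.
output_path = PureWindowsPath("F:/scrapy_output/douban.csv")

# Same feed options as in the settings file, keyed by a generated file URI.
FEEDS = {
    output_path.as_uri(): {"format": "csv", "encoding": "utf-8"},
}

# Values taken from the commented-out lines in the settings file.
DOWNLOAD_DELAY = 3
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 5
AUTOTHROTTLE_MAX_DELAY = 60

print(next(iter(FEEDS)))
```

A download delay combined with AutoThrottle slows the crawl down, which is usually the considerate choice when paging through a public list.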

main.py

from scrapy import cmdline

cmdline.execute("scrapy crawl dbbook".split())

scrapy.cfg

# Automatically created by: scrapy startproject

#

# For more information about the [deploy] section see:

# https://scrapyd.readthedocs.org/en/latest/deploy.html

[settings]
default = doubanbook.settings

[deploy]

#url = http://localhost:6800/

project = doubanbook

3. Run results

4. Preparation before the experiment:

Install Scrapy

A single command takes care of everything. In a terminal, run: pip install scrapy
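To confirm that the installation worked before running the spider, a quick check along these lines can help. This is a small sketch using only the standard library; the helper name `is_installed` is made up for illustration.

```python
import importlib.util


def is_installed(package_name):
    """Return True if a top-level package can be found by the import system."""
    return importlib.util.find_spec(package_name) is not None


if is_installed("scrapy"):
    import scrapy
    print("Scrapy version:", scrapy.__version__)
else:
    print("Scrapy is not installed; run: pip install scrapy")
```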

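To understand the code and how the site is structured, open the website and inspect the page source: press F12, or open the browser menu and choose More tools, then Developer tools. This step is important, because the XPath expressions in the spider are written against that markup.

For example: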
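As a rough illustration of how the spider's XPath expressions relate to the markup seen in the developer tools, the sketch below runs similar queries against a tiny hand-written snippet. The snippet is hypothetical and heavily simplified; it only reuses the class names from the spider (`bd doulist-subject`, `title`, `rating`, `rating_nums`). It uses the standard library's `xml.etree.ElementTree`, which supports a limited XPath subset, in place of Scrapy's selectors so that it runs without any extra packages.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified markup that mimics one entry of the list page.
SAMPLE = """
<div>
  <div class="bd doulist-subject">
    <div class="title"><a href="#"> Example Book </a></div>
    <div class="rating"><span class="rating_nums">8.5</span></div>
  </div>
</div>
"""

root = ET.fromstring(SAMPLE)
books = []
# Select each book block by its class attribute, as the spider does.
for block in root.findall(".//div[@class='bd doulist-subject']"):
    title = block.find("./div[@class='title']/a").text.strip()
    rate = block.find("./div[@class='rating']/span[@class='rating_nums']").text.strip()
    books.append((title, rate))

print(books)
```

The real page is HTML and not guaranteed to be well-formed XML, which is one reason the project itself relies on Scrapy's selectors.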

The source code can be downloaded for free from the resources I have uploaded. I will expand on other related topics in later updates.