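For a school course project I had to build something related to video and image processing, so I made a dynamic hand gesture recognizer.

VGG model code (the main script imports it from `VGG_11_3D.py`):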

    import torch
    import torch.nn as nn


    class VGG_11_3D(nn.Module):
        def __init__(self, num_classes, pretrained=False):
            # `pretrained` is accepted but not used anywhere in this class.
            super(VGG_11_3D, self).__init__()
            self.conv1 = nn.Conv3d(3, 64, kernel_size=(3, 3, 3), padding=(1, 1, 1))  # conv layer conv1
            self.pool1 = nn.MaxPool3d(kernel_size=(1, 2, 2), stride=(1, 2, 2))  # pooling layer pool1 (spatial only)
            self.conv2 = nn.Conv3d(64, 128, kernel_size=(3, 3, 3), padding=(1, 1, 1))  # conv layer conv2
            self.pool2 = nn.MaxPool3d(kernel_size=(2, 2, 2), stride=(2, 2, 2))  # pooling layer pool2
            self.conv3a = nn.Conv3d(128, 256, kernel_size=(3, 3, 3), padding=(1, 1, 1))  # conv3a
            self.conv3b = nn.Conv3d(256, 256, kernel_size=(3, 3, 3), padding=(1, 1, 1))  # conv3b
            self.pool3 = nn.MaxPool3d(kernel_size=(2, 2, 2), stride=(2, 2, 2))  # pooling layer pool3
            self.conv4a = nn.Conv3d(256, 512, kernel_size=(3, 3, 3), padding=(1, 1, 1))  # conv4a
            self.conv4b = nn.Conv3d(512, 512, kernel_size=(3, 3, 3), padding=(1, 1, 1))  # conv4b
            self.pool4 = nn.MaxPool3d(kernel_size=(2, 2, 2), stride=(2, 2, 2))  # pooling layer pool4
            self.conv5a = nn.Conv3d(512, 512, kernel_size=(3, 3, 3), padding=(1, 1, 1))  # conv5a
            self.conv5b = nn.Conv3d(512, 512, kernel_size=(3, 3, 3), padding=(1, 1, 1))  # conv5b
            self.pool5 = nn.MaxPool3d(kernel_size=(2, 2, 2), stride=(2, 2, 2), padding=(0, 1, 1))  # pooling layer pool5
            self.fc6 = nn.Linear(8192, 4096)  # fully connected layer fc6
            self.fc7 = nn.Linear(4096, 4096)  # fully connected layer fc7
            self.fc8 = nn.Linear(4096, num_classes)  # fully connected layer fc8 (class scores)
            self.relu = nn.ReLU()  # ReLU activation
            self.dropout = nn.Dropout(p=0.5)  # dropout layer
            self.__init_weight()

        def forward(self, x):
            x = self.relu(self.conv1(x))  # conv1 followed by ReLU
            x = self.pool1(x)  # pool1
            x = self.relu(self.conv2(x))  # conv2 followed by ReLU
            x = self.pool2(x)  # pool2
            x = self.relu(self.conv3a(x))  # conv3a followed by ReLU
            x = self.relu(self.conv3b(x))  # conv3b followed by ReLU
            x = self.pool3(x)  # pool3
            x = self.relu(self.conv4a(x))  # conv4a followed by ReLU
            x = self.relu(self.conv4b(x))  # conv4b followed by ReLU
            x = self.pool4(x)  # pool4
            x = self.relu(self.conv5a(x))  # conv5a followed by ReLU
            x = self.relu(self.conv5b(x))  # conv5b followed by ReLU
            x = self.pool5(x)  # pool5
            # After pool5 each sample's feature map is (512, 1, 4, 4);
            # view() flattens it to a vector of length 8192.
            x = x.view(x.shape[0], -1)
            x = self.relu(self.fc6(x))  # fc6 followed by ReLU
            x = self.dropout(x)  # dropout
            x = self.relu(self.fc7(x))  # fc7 followed by ReLU
            x = self.dropout(x)  # dropout
            x = self.fc8(x)  # fc8 produces the raw class scores
            return x

        def __init_weight(self):
            # Kaiming-normal initialization for every 3D convolution
            for m in self.modules():
                if isinstance(m, nn.Conv3d):
                    torch.nn.init.kaiming_normal_(m.weight)


    if __name__ == "__main__":
        from torchsummary import summary

        device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
        net = VGG_11_3D(num_classes=27, pretrained=False).to(device)
        # summary() prints the table itself, so it is not wrapped in print()
        summary(net, (3, 16, 96, 96))
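The `8192` hard-coded in `fc6` only holds for a particular clip size, so it may help to check the arithmetic. Below is a minimal sketch in plain Python, assuming the standard floor-mode pooling formula `(n + 2p - k) // s + 1` and that the 3x3x3 convolutions with padding 1 leave the size unchanged. The helper names `pool_out` and `flattened_features` are made up for this illustration and are not part of the model code.

```python
def pool_out(size, kernel, stride, padding=0):
    """Output length of one pooled dimension (floor mode, dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


# (kernel, stride, padding) for pool1..pool5, each given as (T, H, W),
# copied from the model definition above.
POOLS = [
    ((1, 2, 2), (1, 2, 2), (0, 0, 0)),
    ((2, 2, 2), (2, 2, 2), (0, 0, 0)),
    ((2, 2, 2), (2, 2, 2), (0, 0, 0)),
    ((2, 2, 2), (2, 2, 2), (0, 0, 0)),
    ((2, 2, 2), (2, 2, 2), (0, 1, 1)),
]


def flattened_features(t, h, w, channels=512):
    """Return the (T, H, W) shape after pool5 and the flattened feature count."""
    shape = (t, h, w)
    for kernel, stride, padding in POOLS:
        shape = tuple(
            pool_out(n, k, s, p)
            for n, k, s, p in zip(shape, kernel, stride, padding)
        )
    return shape, channels * shape[0] * shape[1] * shape[2]


shape, n_features = flattened_features(16, 96, 96)
print(shape, n_features)  # (1, 4, 4) 8192
```

With a 16-frame 96x96 clip this gives 512 x 1 x 4 x 4 = 8192, matching `fc6`. A different clip size can change the result (for example 128x128 frames would give 12800), in which case the first argument of `fc6` would have to change too.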

Main script:

    import cv2
    import numpy as np
    import pandas as pd
    import torch
    from torch import nn

    from VGG_11_3D import VGG_11_3D

    num_classes = 27

    # Instantiate the 3D VGG model
    model = VGG_11_3D(num_classes, pretrained=False)

    # Move the model to the device used for inference
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    model.to(device)

    # Load the weights saved after epoch `pre_epoch`;
    # map_location lets a checkpoint saved on GPU load on a CPU-only machine
    pre_epoch = 50
    checkpoint = torch.load('VGG-11-3D_epoch-' + str(pre_epoch) + '.pth.tar', map_location=device)
    # epoch = checkpoint['epoch']
    model.load_state_dict(checkpoint['state_dict'])
    # optimizer.load_state_dict(checkpoint['opt_dict'])

    # Start testing: switch to evaluation mode (disables dropout)
    model.eval()

    # Read the gesture label names from the first CSV column;
    # the predicted class index is used to look up the name
    labels = list(pd.read_csv('./labels/labels.csv', header=None)[0].values)

    # Open the camera
    cap = cv2.VideoCapture(0)
    # cap.set(cv2.CAP_PROP_FPS, 16)  # set the frame rate
    # cap.set(cv2.CAP_PROP_FRAME_WIDTH, 176)  # set the width
    # cap.set(cv2.CAP_PROP_FRAME_HEIGHT, 100)  # set the height

    target_fps = 16  # sample at roughly 16 fps
    cap_fps = cap.get(cv2.CAP_PROP_FPS)
    # Keep 1 frame out of every `delta` frames; clamp to 1 so that a reported
    # FPS of 0 (or below 15) cannot cause a modulo-by-zero below
    delta = max(1, int(cap_fps // (target_fps - 1)))
    print(delta)

    cnt = 0
    buffer = []  # sliding window holding the 16 most recently sampled frames

    # Read frames from the camera in a loop
    while True:
        ret, frame = cap.read()
        if not ret:  # stop if the camera returned no frame
            break
        frame_ = cv2.resize(frame, (96, 96))
        if cnt % delta == 0:
            buffer.append(np.array(frame_).astype(np.float32))
        if len(buffer) > 16:
            buffer.pop(0)  # drop the oldest frame
        if len(buffer) == 16:
            inputs = np.array(buffer, dtype='float32')  # (T, H, W, C)
            inputs = np.expand_dims(inputs, axis=0)  # (1, T, H, W, C)
            inputs = inputs.transpose((0, 4, 1, 2, 3))  # (1, C, T, H, W)
            inputs = torch.from_numpy(inputs).contiguous()
            inputs = inputs.to(device)
            # Run the model on the clip
            with torch.no_grad():
                outputs = model(inputs)
            # Softmax output probabilities
            probs = nn.Softmax(dim=1)(outputs)
            # Index of the class with the highest probability
            preds = torch.max(probs, 1)[1]
            label_name = str(labels[preds.item()])
            print(label_name)
            cv2.putText(frame, label_name, (20, 20),
                        cv2.FONT_HERSHEY_SIMPLEX, 0.6, (0, 0, 255), 2)
        # Show the camera image in a window
        cv2.imshow('Camera', frame)
        # print(frame.shape)
        cnt += 1
        # Press 'q' to stop
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    # Release the camera and close the window
    cap.release()
    cv2.destroyAllWindows()
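The frame sampling and the 16-frame sliding window in the loop above can be tried out without a camera. Here is a rough sketch, assuming the same `cap_fps // (target_fps - 1)` formula as the script and using `collections.deque(maxlen=...)`, which discards the oldest item automatically. `sampling_stride` and `ClipBuffer` are hypothetical names introduced just for this example, and integers stand in for real frames.

```python
from collections import deque


def sampling_stride(cap_fps, target_fps=16):
    """Keep every N-th frame; same formula as the script, clamped to >= 1."""
    if cap_fps <= 0:  # assumption: the camera may report 0 when FPS is unknown
        return 1
    return max(1, int(cap_fps // (target_fps - 1)))


class ClipBuffer:
    """Sliding window over the most recent `clip_len` sampled frames."""

    def __init__(self, clip_len=16):
        self.frames = deque(maxlen=clip_len)  # oldest frame is dropped automatically

    def push(self, frame):
        self.frames.append(frame)

    def ready(self):
        return len(self.frames) == self.frames.maxlen

    def clip(self):
        return list(self.frames)


stride = sampling_stride(30.0)  # 30 // 15 = 2
buf = ClipBuffer(clip_len=16)
for idx in range(40):  # integers stand in for camera frames
    if idx % stride == 0:
        buf.push(idx)

print(stride, buf.ready(), buf.clip()[0], buf.clip()[-1])  # 2 True 8 38
```

Compared with `list.pop(0)`, the deque avoids shifting the whole list on every frame, although with only 16 frames the difference is small either way.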

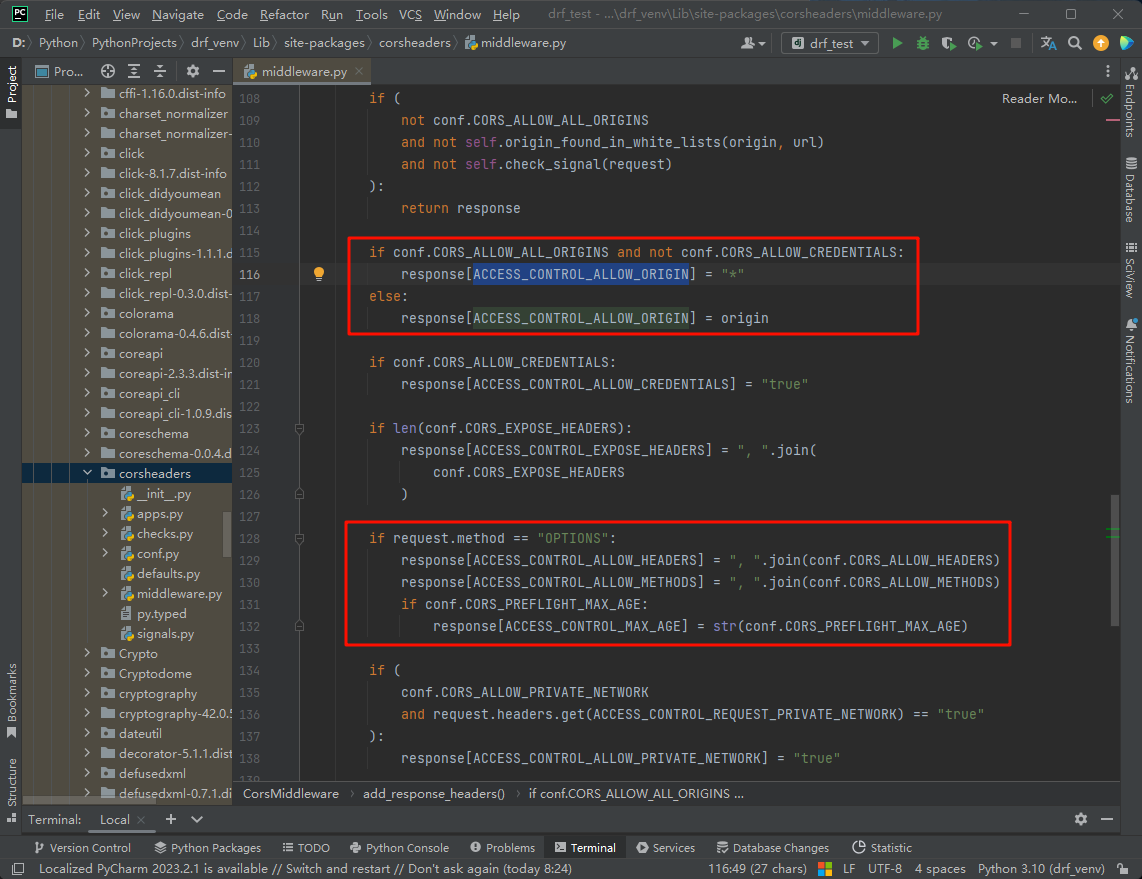

运行效果图:

If you need the complete files, send me a private message.