Once rook-ceph is installed and deployed, you can start using a StorageClass to provision PVs dynamically.

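Stateful middleware running on Kubernetes almost always relies on a StorageClass to provision PVs dynamically. (Applications in the cloud have it easier, because cloud disks work well, but for self-study and practice Ceph is the more dependable choice.) Here we give dynamic PV provisioning a quick try, in preparation for running redis, zookeeper, and elasticsearch on it later.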
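To make "dynamic provisioning" concrete, here is a minimal sketch of a standalone claim and a Pod that consumes it. It assumes the `rook-ceph-block` StorageClass from step 1 already exists; the names `demo-pvc` and `demo-pod` and the `1Gi` size are hypothetical values chosen only for illustration. No PV is written by hand: the provisioner named in the StorageClass is expected to create one when the claim is submitted.

```yaml
# Hypothetical claim: requests 1Gi from the rook-ceph-block StorageClass.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-pvc            # illustrative name, not from the original article
spec:
  accessModes:
    - ReadWriteOnce         # an RBD block volume mounted by a single node
  storageClassName: rook-ceph-block
  resources:
    requests:
      storage: 1Gi          # assumed size
---
# Hypothetical Pod that mounts the claim above.
apiVersion: v1
kind: Pod
metadata:
  name: demo-pod            # illustrative name
spec:
  containers:
    - name: nginx
      image: nginx
      volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: demo-pvc
```

If provisioning works, `kubectl get pvc demo-pvc` should eventually report the claim as `Bound`.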

1. Create the StorageClass and storage pool

kubectl create -f rook/deploy/examples/csi/rbd/storageclass.yaml

Check the CephBlockPool and StorageClass that were created:

kubectl get cephblockpool -n rook-ceph

kubectl get sc

The result is as follows:

The contents of rook/deploy/examples/csi/rbd/storageclass.yaml are as follows:

    apiVersion: ceph.rook.io/v1
    kind: CephBlockPool
    metadata:
      name: replicapool
      namespace: rook-ceph # namespace:cluster
    spec:
      failureDomain: host
      replicated:
        size: 2
        # Disallow setting pool with replica 1, this could lead to data loss without recovery.
        # Make sure you're *ABSOLUTELY CERTAIN* that is what you want
        requireSafeReplicaSize: true
        # gives a hint (%) to Ceph in terms of expected consumption of the total cluster capacity of a given pool
        # for more info: https://docs.ceph.com/docs/master/rados/operations/placement-groups/#specifying-expected-pool-size
        #targetSizeRatio: .5
    ---
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: rook-ceph-block
    # Change "rook-ceph" provisioner prefix to match the operator namespace if needed
    provisioner: rook-ceph.rbd.csi.ceph.com
    parameters:
      # clusterID is the namespace where the rook cluster is running
      # If you change this namespace, also change the namespace below where the secret namespaces are defined
      clusterID: rook-ceph # namespace:cluster
      # If you want to use erasure coded pool with RBD, you need to create
      # two pools. one erasure coded and one replicated.
      # You need to specify the replicated pool here in the `pool` parameter, it is
      # used for the metadata of the images.
      # The erasure coded pool must be set as the `dataPool` parameter below.
      #dataPool: ec-data-pool
      pool: replicapool
      # (optional) mapOptions is a comma-separated list of map options.
      # For krbd options refer
      # https://docs.ceph.com/docs/master/man/8/rbd/#kernel-rbd-krbd-options
      # For nbd options refer
      # https://docs.ceph.com/docs/master/man/8/rbd-nbd/#options
      # mapOptions: lock_on_read,queue_depth=1024
      # (optional) unmapOptions is a comma-separated list of unmap options.
      # For krbd options refer
      # https://docs.ceph.com/docs/master/man/8/rbd/#kernel-rbd-krbd-options
      # For nbd options refer
      # https://docs.ceph.com/docs/master/man/8/rbd-nbd/#options
      # unmapOptions: force
      # (optional) Set it to true to encrypt each volume with encryption keys
      # from a key management system (KMS)
      # encrypted: "true"
      # (optional) Use external key management system (KMS) for encryption key by
      # specifying a unique ID matching a KMS ConfigMap. The ID is only used for
      # correlation to configmap entry.
      # encryptionKMSID: <kms-config-id>
      # RBD image format. Defaults to "2".
      imageFormat: "2"
      # RBD image features
      # Available for imageFormat: "2". Older releases of CSI RBD
      # support only the `layering` feature. The Linux kernel (KRBD) supports the
      # full complement of features as of 5.4
      # `layering` alone corresponds to Ceph's bitfield value of "2" ;
      # `layering` + `fast-diff` + `object-map` + `deep-flatten` + `exclusive-lock` together
      # correspond to Ceph's OR'd bitfield value of "63". Here we use
      # a symbolic, comma-separated format:
      # For 5.4 or later kernels:
      #imageFeatures: layering,fast-diff,object-map,deep-flatten,exclusive-lock
      # For 5.3 or earlier kernels:
      imageFeatures: layering
      # The secrets contain Ceph admin credentials. These are generated automatically by the operator
      # in the same namespace as the cluster.
      csi.storage.k8s.io/provisioner-secret-name: rook-csi-rbd-provisioner
      csi.storage.k8s.io/provisioner-secret-namespace: rook-ceph # namespace:cluster
      csi.storage.k8s.io/controller-expand-secret-name: rook-csi-rbd-provisioner
      csi.storage.k8s.io/controller-expand-secret-namespace: rook-ceph # namespace:cluster
      csi.storage.k8s.io/node-stage-secret-name: rook-csi-rbd-node
      csi.storage.k8s.io/node-stage-secret-namespace: rook-ceph # namespace:cluster
      # Specify the filesystem type of the volume. If not specified, csi-provisioner
      # will set default as `ext4`. Note that `xfs` is not recommended due to potential deadlock
      # in hyperconverged settings where the volume is mounted on the same node as the osds.
      csi.storage.k8s.io/fstype: ext4
    # uncomment the following to use rbd-nbd as mounter on supported nodes
    # **IMPORTANT**: CephCSI v3.4.0 onwards a volume healer functionality is added to reattach
    # the PVC to application pod if nodeplugin pod restart.
    # Its still in Alpha support. Therefore, this option is not recommended for production use.
    #mounter: rbd-nbd
    allowVolumeExpansion: true
    reclaimPolicy: Delete

2. Create an nginx StatefulSet and use the StorageClass to dynamically provision PVs mounted at /usr/share/nginx/html

For stateful Pods, each Pod must have its own PV. redis, zookeeper, and elasticsearch all use a StorageClass to provision PVs dynamically in the same way.

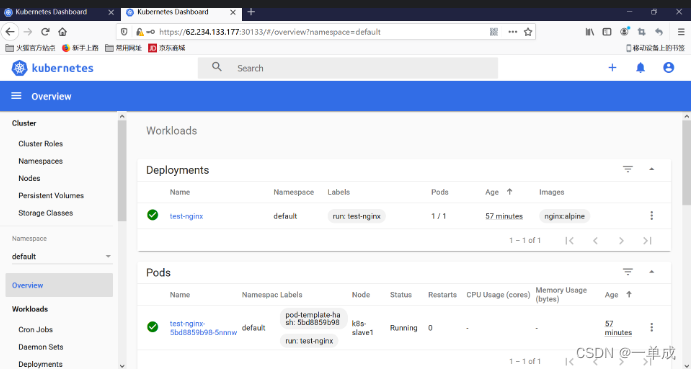

kubectl apply -f test_volumnClainTemplates.yaml

Check the result with the following commands:

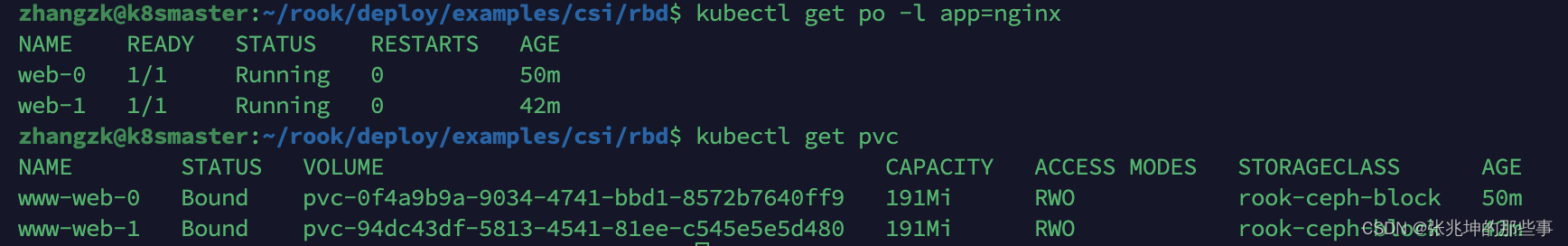

kubectl get po -l app=nginx

kubectl get pvc

The result is as follows:

The contents of test_volumnClainTemplates.yaml are as follows:

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      ports:
      - port: 80
        name: web
      clusterIP: None
      selector:
        app: nginx
    ---
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: web
    spec:
      selector:
        matchLabels:
          app: nginx
      serviceName: "nginx"
      replicas: 2
      template:
        metadata:
          labels:
            app: nginx
        spec:
          terminationGracePeriodSeconds: 10
          containers:
          - name: nginx
            image: nginx
            ports:
            - containerPort: 80
              name: web
            volumeMounts:
            - name: www
              mountPath: /usr/share/nginx/html
      volumeClaimTemplates:
      - metadata:
          name: www
        spec:
          accessModes: [ "ReadWriteOnce" ]
          storageClassName: "rook-ceph-block"
          resources:
            requests:
              storage: 200M
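The `volumeClaimTemplates` entry is what gives each Pod its own volume. For every replica, the StatefulSet controller creates one PVC named `<template name>-<StatefulSet name>-<ordinal>`, so this manifest should yield `www-web-0` and `www-web-1`. As a sketch, the claim generated for `web-0` is roughly equivalent to the following; the labels and other metadata that the controller adds are omitted here.

```yaml
# Approximate equivalent of the PVC that the StatefulSet controller
# generates for Pod web-0 from the "www" claim template.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: www-web-0           # <template name>-<StatefulSet name>-<ordinal>
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: rook-ceph-block
  resources:
    requests:
      storage: 200M
```

Because the claim name is derived from the Pod's ordinal, a rescheduled `web-0` reattaches to the same claim and therefore to the same data.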