Experiment 2

Getting Familiar with Common HDFS Operations

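1. Objectives

(1) Understand the role of HDFS in the Hadoop architecture;

(2) Become proficient with the common Shell commands for HDFS operations;

(3) Become familiar with the common Java API for HDFS operations.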

2. Experiment Platform

(1) Operating system: Linux (Ubuntu 16.04 or Ubuntu 18.04 recommended);

(2) Hadoop version: 3.1.3;

(3) JDK version: 1.8;

(4) Java IDE: Eclipse.

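3. Procedure

(I) Implement the following functions in code, and complete the same tasks with the Shell commands provided by Hadoop:

(1) Upload any text file to HDFS; if the specified file already exists in HDFS, let the user choose whether to append to the end of the existing file or overwrite it;

Shell command: hadoop fs -put localfile.txt /user/hadoop/

If the file already exists, hadoop fs -put -f localfile.txt /user/hadoop/ overwrites it, and hadoop fs -appendToFile localfile.txt /user/hadoop/localfile.txt appends to it.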

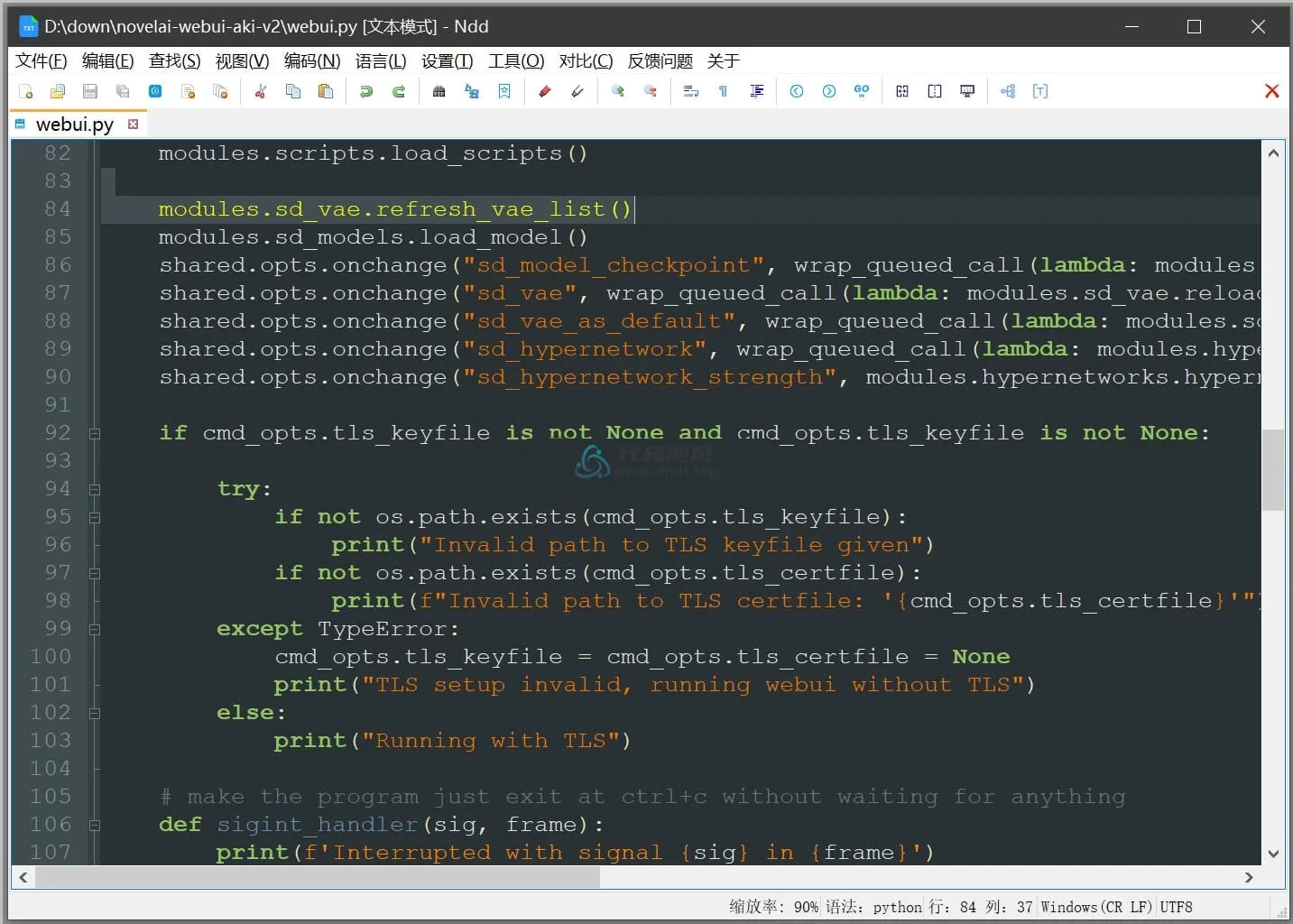

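Java code:

import java.io.FileInputStream;
import java.io.InputStream;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;

public class HDFSUpload {
    // append = true appends to an existing HDFS file; otherwise the file is overwritten.
    public static void uploadFile(String localFilePath, String hdfsFilePath, boolean append) throws Exception {
        Configuration conf = new Configuration();
        try (FileSystem fs = FileSystem.get(conf)) {
            Path srcPath = new Path(localFilePath);
            Path destPath = new Path(hdfsFilePath);
            if (append && fs.exists(destPath)) {
                // Copy the local bytes onto the end of the existing HDFS file.
                try (InputStream in = new FileInputStream(localFilePath);
                     FSDataOutputStream out = fs.append(destPath)) {
                    IOUtils.copyBytes(in, out, 4096, false);
                }
            } else {
                // delSrc = false, overwrite = true
                fs.copyFromLocalFile(false, true, srcPath, destPath);
            }
        }
    }
}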

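(2) Download a specified file from HDFS; if a local file has the same name as the file being downloaded, automatically rename the downloaded file;

Shell command: hadoop fs -get /user/hadoop/test.txt /local/path/test.txt

Java code:

import java.io.File;

public static void downloadFile(String hdfsFilePath, String localFilePath) throws Exception {
    Configuration conf = new Configuration();
    try (FileSystem fs = FileSystem.get(conf)) {
        // If the local name is taken, try localFilePath_0, localFilePath_1, ... until one is free.
        File target = new File(localFilePath);
        for (int i = 0; target.exists(); i++) {
            target = new File(localFilePath + "_" + i);
        }
        fs.copyToLocalFile(new Path(hdfsFilePath), new Path(target.getAbsolutePath()));
    }
}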
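The automatic rename asked for in task (2) does not depend on HDFS at all, so it can be tried out without a cluster. The following is a minimal sketch of one possible naming rule, which inserts _1, _2, ... before the file extension. The class name LocalNames and the method uniqueLocalPath are hypothetical names chosen for illustration, not part of Hadoop.

```java
import java.io.File;

/** Hypothetical helper: picks a local file name that does not collide with an existing file. */
final class LocalNames {
    private LocalNames() {}

    /**
     * Returns localFilePath unchanged if nothing exists there; otherwise inserts
     * _1, _2, ... before the extension until an unused name is found.
     */
    static String uniqueLocalPath(String localFilePath) {
        File candidate = new File(localFilePath);
        if (!candidate.exists()) {
            return localFilePath;
        }
        String name = candidate.getName();
        int dot = name.lastIndexOf('.');
        // A leading dot (as in ".bashrc") is treated as part of the name, not as an extension.
        String base = dot > 0 ? name.substring(0, dot) : name;
        String ext = dot > 0 ? name.substring(dot) : "";
        File parent = candidate.getParentFile();
        for (int i = 1; ; i++) {
            File next = new File(parent, base + "_" + i + ext);
            if (!next.exists()) {
                return next.getPath();
            }
        }
    }
}
```

The returned string could then be wrapped in a Path and passed as the local destination of fs.copyToLocalFile.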

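(3) Print the contents of a specified HDFS file to the terminal;

Shell command: hadoop fs -cat /user/hadoop/test.txt

Java code:

import java.io.BufferedReader;
import java.io.InputStreamReader;
import org.apache.hadoop.fs.FSDataInputStream;

public static void readFile(String hdfsFilePath) throws Exception {
    Configuration conf = new Configuration();
    // try-with-resources closes the reader, the input stream, and the file system in that order.
    try (FileSystem fs = FileSystem.get(conf);
         FSDataInputStream inputStream = fs.open(new Path(hdfsFilePath));
         BufferedReader br = new BufferedReader(new InputStreamReader(inputStream))) {
        String line;
        while ((line = br.readLine()) != null) {
            System.out.println(line);
        }
    }
}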

(4) Display the read/write permissions, size, creation time, path, and other information for a specified HDFS file;

Shell command: hadoop fs -ls /user/hadoop/test.txt

Java code:

import org.apache.hadoop.fs.FileStatus;

public static void getFileInfo(String hdfsFilePath) throws Exception {
    Configuration conf = new Configuration();
    try (FileSystem fs = FileSystem.get(conf)) {
        FileStatus fileStatus = fs.getFileStatus(new Path(hdfsFilePath));
        System.out.println("Path: " + fileStatus.getPath());
        System.out.println("Size: " + fileStatus.getLen());
        System.out.println("Permissions: " + fileStatus.getPermission());
        // Reported as milliseconds since the Unix epoch.
        System.out.println("Modification Time: " + fileStatus.getModificationTime());
    }
}
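FileStatus.getModificationTime() returns milliseconds since the Unix epoch, which is hard to read when printed directly. As far as the FileStatus API shows, it exposes modification and access times rather than a separate creation time, so the modification time is the closest available value. A minimal sketch of turning that number into a readable timestamp follows; the class name FileTimes and its format method are hypothetical helpers, not Hadoop API.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;

/** Hypothetical helper: renders epoch milliseconds as a human-readable timestamp. */
final class FileTimes {
    private static final DateTimeFormatter FORMAT =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    private FileTimes() {}

    /** Formats epoch milliseconds as yyyy-MM-dd HH:mm:ss in the given time zone. */
    static String format(long epochMillis, ZoneId zone) {
        return FORMAT.format(Instant.ofEpochMilli(epochMillis).atZone(zone));
    }
}
```

For example, FileTimes.format(fileStatus.getModificationTime(), ZoneId.systemDefault()) would print the time in the local zone of the machine running the program.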

(5) Given a directory in HDFS, output the read/write permissions, size, creation time, path, and other information for all files in that directory; if an entry is a directory, recursively output the information for all files under it;

Shell command: hadoop fs -ls -R /user/hadoop/

Java code:

import org.apache.hadoop.fs.LocatedFileStatus;
import org.apache.hadoop.fs.RemoteIterator;

public static void listDirectory(String hdfsDirPath) throws Exception {
    Configuration conf = new Configuration();
    try (FileSystem fs = FileSystem.get(conf)) {
        // recursive = true descends into subdirectories; only files are returned, not the directories themselves.
        RemoteIterator<LocatedFileStatus> fileStatusListIterator = fs.listFiles(new Path(hdfsDirPath), true);
        while (fileStatusListIterator.hasNext()) {
            LocatedFileStatus fileStatus = fileStatusListIterator.next();
            System.out.println("Path: " + fileStatus.getPath());
            System.out.println("Size: " + fileStatus.getLen());
            System.out.println("Permissions: " + fileStatus.getPermission());
            System.out.println("Modification Time: " + fileStatus.getModificationTime());
        }
    }
}

(6) Given the path of a file in HDFS, create and delete that file. If the directory containing the file does not exist, create it automatically;

Shell commands:

hadoop fs -mkdir -p /user/hadoop/newdir

hadoop fs -touchz /user/hadoop/newdir/newfile.txt

hadoop fs -rm /user/hadoop/newdir/newfile.txt

Java code:

public static void createFile(String hdfsFilePath) throws Exception {
    Configuration conf = new Configuration();
    try (FileSystem fs = FileSystem.get(conf)) {
        // create() also creates missing parent directories; closing the stream leaves an empty file.
        fs.create(new Path(hdfsFilePath)).close();
    }
}

public static void deleteFile(String hdfsFilePath) throws Exception {
    Configuration conf = new Configuration();
    try (FileSystem fs = FileSystem.get(conf)) {
        // recursive = false is sufficient for a single file.
        fs.delete(new Path(hdfsFilePath), false);
    }
}

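(7) Given the path of a directory in HDFS, create and delete that directory. When creating, automatically create any missing parent directories; when deleting, let the user specify whether to delete the directory even if it is not empty;

Shell commands:

hadoop fs -mkdir -p /user/hadoop/newdir

hadoop fs -rmdir /user/hadoop/newdir

The -rmdir command removes only an empty directory; to delete a non-empty directory, use hadoop fs -rm -r /user/hadoop/newdir.

Java code:

public static void createDirectory(String hdfsDirPath) throws Exception {
    Configuration conf = new Configuration();
    try (FileSystem fs = FileSystem.get(conf)) {
        // mkdirs() creates any missing parent directories as well.
        fs.mkdirs(new Path(hdfsDirPath));
    }
}

public static void deleteDirectory(String hdfsDirPath, boolean recursive) throws Exception {
    Configuration conf = new Configuration();
    try (FileSystem fs = FileSystem.get(conf)) {
        // With recursive = false, deleting a non-empty directory fails with an IOException.
        fs.delete(new Path(hdfsDirPath), recursive);
    }
}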

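(8) Append content to a specified file in HDFS, with the user specifying whether the content goes at the beginning or the end of the existing file;

Shell command: echo "new content" | hadoop fs -appendToFile - /user/hadoop/test.txt

Java code:

import java.nio.charset.StandardCharsets;
import org.apache.hadoop.fs.FSDataOutputStream;

// Appends to the end of the file only; HDFS cannot insert data at the start of an existing file.
public static void appendToFile(String hdfsFilePath, String content) throws Exception {
    Configuration conf = new Configuration();
    try (FileSystem fs = FileSystem.get(conf);
         FSDataOutputStream outputStream = fs.append(new Path(hdfsFilePath))) {
        // Encode explicitly: writeBytes() keeps only the low byte of each char and corrupts non-ASCII text.
        outputStream.write(content.getBytes(StandardCharsets.UTF_8));
    }
}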
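Task (8) also asks for content to be placed at the beginning of a file. HDFS files can only be appended to, so one common workaround is to write the new content followed by the old content into a new file and then replace the original with it. The copy step can be sketched over plain Java streams; the class PrependSketch and the method writePrepended are hypothetical names, and UTF-8 encoding is an assumption.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

/** Hypothetical helper: emits new content first, then the original bytes. */
final class PrependSketch {
    private PrependSketch() {}

    /** Writes content (as UTF-8) to out, then copies every byte of original after it. */
    static void writePrepended(String content, InputStream original, OutputStream out)
            throws IOException {
        out.write(content.getBytes(StandardCharsets.UTF_8));
        byte[] buffer = new byte[4096];
        int n;
        while ((n = original.read(buffer)) != -1) {
            out.write(buffer, 0, n);
        }
        out.flush();
    }
}
```

Against HDFS, original would be the stream returned by fs.open on the existing file and out the stream returned by fs.create on a temporary path; after both are closed, the original can be deleted and the temporary file renamed into its place.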

(9) Delete a specified file in HDFS;

Shell command: hadoop fs -rm /user/hadoop/test.txt

Java code:

public static void removeFile(String hdfsFilePath) throws Exception {
    Configuration conf = new Configuration();
    try (FileSystem fs = FileSystem.get(conf)) {
        // recursive = false: the path is expected to be a single file.
        fs.delete(new Path(hdfsFilePath), false);
    }
}

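(10) Move a file in HDFS from a source path to a destination path.

Shell command: hadoop fs -mv /user/hadoop/test.txt /user/hadoop/moved_test.txt

Java code:

import java.io.IOException;

public static void moveFile(String sourcePath, String destPath) throws Exception {
    Configuration conf = new Configuration();
    try (FileSystem fs = FileSystem.get(conf)) {
        // rename() reports failure through its return value, so check it.
        if (!fs.rename(new Path(sourcePath), new Path(destPath))) {
            throw new IOException("Could not move " + sourcePath + " to " + destPath);
        }
    }
}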

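(II) Implement a class "MyFSDataInputStream" that extends "org.apache.hadoop.fs.FSDataInputStream", with the following requirement: implement a method "readLine()" that reads the specified HDFS file line by line, returning null at the end of the file and otherwise one line of text.

import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class MyFSDataInputStream extends FSDataInputStream {
    // One reader for the lifetime of the stream, so buffered read-ahead is not lost between calls.
    private final BufferedReader reader;

    public MyFSDataInputStream(FileSystem fs, Path path) throws IOException {
        super(fs.open(path));
        this.reader = new BufferedReader(new InputStreamReader(this, StandardCharsets.UTF_8));
    }

    // DataInputStream.readLine() is final and cannot be overridden, hence the different method name.
    // Returns the next line of text, or null at the end of the file.
    public String readTextLine() throws IOException {
        return reader.readLine();
    }
}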

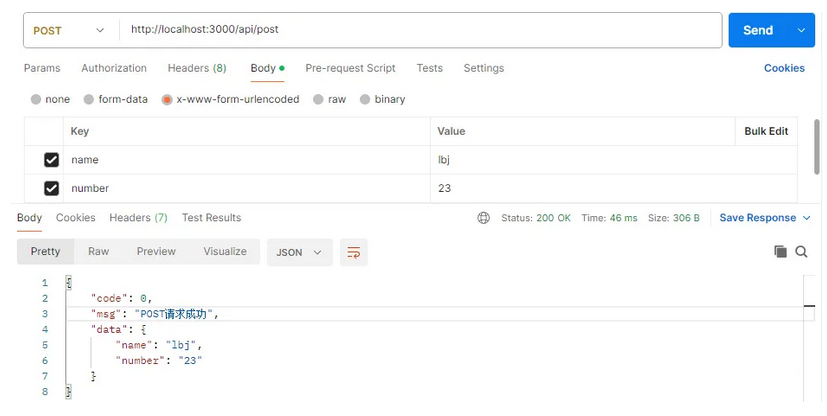
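A BufferedReader reads ahead of the line it returns, so wrapping the same stream in a fresh reader on every call can silently drop the text that the previous reader had already buffered. The sketch below shows the safer pattern, one reader created once and reused, on a plain java.io.InputStream so that it can be run without a cluster. LineSource is a hypothetical class name, and UTF-8 is an assumed encoding.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;

/** Hypothetical line reader that wraps the stream exactly once. */
final class LineSource implements AutoCloseable {
    private final BufferedReader reader;

    LineSource(InputStream in) {
        this.reader = new BufferedReader(new InputStreamReader(in, StandardCharsets.UTF_8));
    }

    /** Returns the next line without its line terminator, or null at end of input. */
    String readLine() throws IOException {
        return reader.readLine();
    }

    @Override
    public void close() throws IOException {
        reader.close();
    }
}
```

The same idea carries over to an HDFS stream: the input stream opened from the file system is passed to the constructor once, and each later call continues from where the shared reader left off.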

(III) Consulting the Java documentation or other references, use "java.net.URL" and "org.apache.hadoop.fs.FsURLStreamHandlerFactory" to write a program that prints the text of a specified HDFS file to the terminal.

import java.io.BufferedReader;
import java.io.InputStreamReader;
import java.net.URL;
import org.apache.hadoop.fs.FsURLStreamHandlerFactory;

public class HDFSFileReader {
    static {
        // Teach java.net.URL the hdfs:// scheme; the factory can be set only once per JVM.
        URL.setURLStreamHandlerFactory(new FsURLStreamHandlerFactory());
    }

    public static void main(String[] args) throws Exception {
        // Pass the full URL as the first argument, in the form hdfs://namenode:port/user/hadoop/test.txt,
        // where namenode and port are the NameNode host and numeric port of your cluster.
        URL url = new URL(args[0]);
        try (BufferedReader in = new BufferedReader(new InputStreamReader(url.openStream()))) {
            String line;
            while ((line = in.readLine()) != null) {
                System.out.println(line);
            }
        }
    }
}
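The reading loop in part (III) does not depend on the URL scheme: once a handler for a scheme is registered, java.net.URL is used the same way for every scheme. The following sketch therefore exercises the same logic on any URL the JVM already understands, such as a file: URL, which is convenient for checking the loop before a cluster is available. UrlText and readAll are hypothetical names, and UTF-8 is an assumed encoding.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.net.URL;
import java.nio.charset.StandardCharsets;

/** Hypothetical helper: reads the whole text behind a URL, line by line. */
final class UrlText {
    private UrlText() {}

    /** Returns the text at url with every line terminated by a single '\n'. */
    static String readAll(URL url) throws IOException {
        StringBuilder text = new StringBuilder();
        try (InputStream in = url.openStream();
             BufferedReader reader =
                     new BufferedReader(new InputStreamReader(in, StandardCharsets.UTF_8))) {
            String line;
            while ((line = reader.readLine()) != null) {
                text.append(line).append('\n');
            }
        }
        return text.toString();
    }
}
```

With the FsURLStreamHandlerFactory registered as in the program above, the same method should accept an hdfs:// URL in place of the file: URL.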