GGML

ggml functions

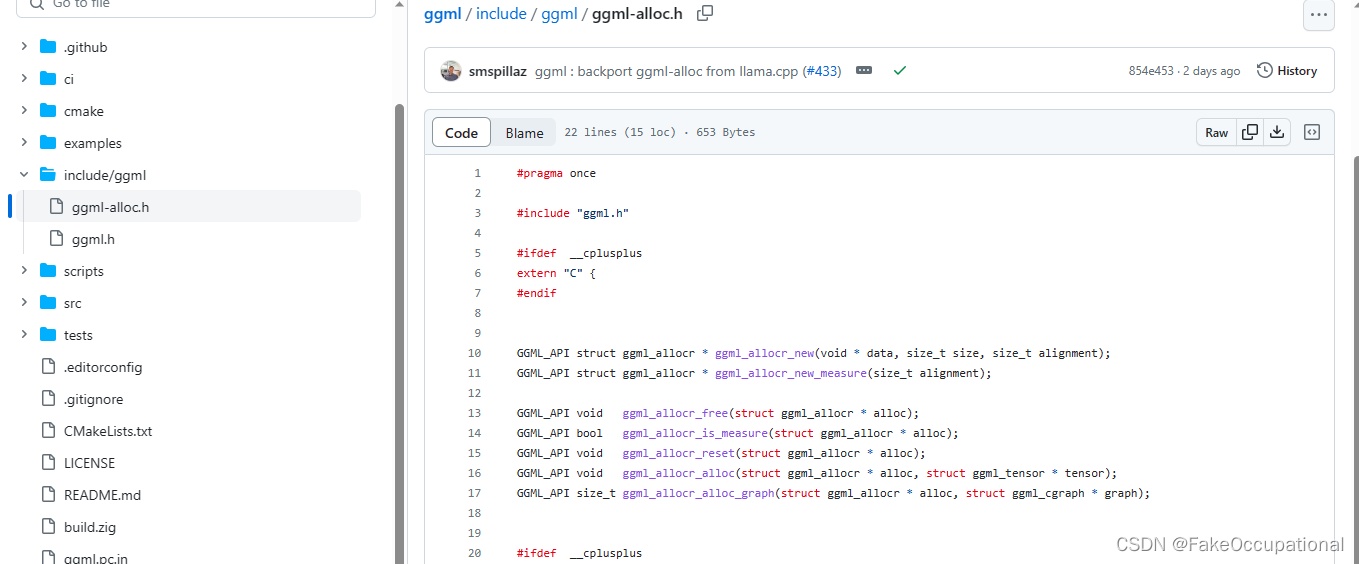

- As the figure shows, the official example project depends only on `#include "ggml/ggml.h"` and `#include "common.h"`. Reading `ggml.h` is the best way to learn how to use ggml.

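| Function | Description | Notes |
|---|---|---|
| `ggml_tensor` | A multi-dimensional tensor stored in row-major order. The `ggml_tensor` struct holds, for each dimension, the number of elements (`ne`) and the number of bytes (`nb`, also called the stride): `int64_t ne[GGML_MAX_DIMS]; // number of elements` and `size_t nb[GGML_MAX_DIMS]; // stride in bytes`. This allows tensors that are not contiguous in memory, which is useful for operations such as transposition and permutation. All tensor operations must take the strides into account instead of assuming the tensor is contiguous in memory. | `nb[0] = sizeof(type)`; `nb[1] = nb[0] * ne[0] + padding`; `nb[i] = nb[i-1] * ne[i-1]` |
| `ggml_context` | Owns the memory pool that tensors are allocated from (fields such as `mem_size`, `mem_buffer`, `mem_buffer_owned`); created by `ggml_init` from a `ggml_init_params` | |
| `ggml_init_params` | Parameters for `ggml_init`: `mem_size`, `mem_buffer`, `no_alloc` | |
| `ggml_type_sizef` | Size in bytes of one element of a type, returned as a float (used by the loader to compute `ctx_size`) | |
| `ggml_init` | Creates a `ggml_context` from a `ggml_init_params` | |
| `ggml_new_tensor` | Creates a tensor of a given type and number of dimensions in a context | |
| `ggml_new_tensor_1d` | 1D tensor, e.g. `struct ggml_tensor * input = ggml_new_tensor_1d(ctx, GGML_TYPE_F32, 28*28);` | |
| `ggml_new_tensor_2d` | 2D tensor | |
| `ggml_new_tensor_3d` | 3D tensor | |
| `ggml_new_tensor_4d` | 4D tensor | |
| `ggml_nbytes` | Returns the size of a tensor's data in bytes (used as the read length when loading weights) | |
| `GGML_PAD(x, n)` | `#define GGML_PAD(x, n) (((x) + (n) - 1) & ~((n) - 1))` rounds `x` up to a multiple of `n`: it adds `n-1` to `x`, then bitwise-ANDs the result with `~(n-1)`. Only correct when `n` is a power of two. | |
| `ggml_set_name` | Sets a tensor's name, which appears in graph dumps and exports | |
| `enum ggml_op` | Lists all operators, both implemented and not yet implemented | |
| `ggml_mul_mat` | Matrix multiplication op | `ggml_tensor * temp = ggml_mul_mat(ctx0, model.fc1_weight, input);` |
| `ggml_add` | Add op | |
| `ggml_add_inplace` | In-place add op | |
| `ggml_soft_max` | Softmax op | |
| `ggml_norm` | Norm op | |
| `ggml_cpy` | Copy op | |
| `ggml_permute` | Permute op | |
| `ggml_flash_attn` | Attention op (flash attention) | |
| `ggml_relu` | ReLU op | Shows up as `UNARY` in the graph dump |
| `ggml_build_forward_expand` | Builds the computation graph (`ggml_cgraph`) | |
| `ggml_graph_compute_with_ctx` | Runs the computation graph. Early versions did not have this function and used `ggml_graph_compute` instead. | |
| `ggml_graph_dump_dot` | Writes the graph as a Graphviz dot file (see also `ggml_graph_print`) | |
| `ggml_graph_export` | Exports the computation graph for later use; see the "mnist-cpu" example | |
| `ggml_get_data_f32` | Returns a `float *` to a tensor's data, used to read its values | |
| `ggml_set_f32` | Sets values. Not used in this project, which mostly writes the data directly: `fin.read(reinterpret_cast<char *>(model.fc1_weight->data), ggml_nbytes(model.fc1_weight));` | |
| `ggml_time_init` | Initializes ggml's timing facilities | |
| Functions not used in this project | | |
| `ggml_set_param` | `ggml_set_param(ctx, x); // mark x as a variable for backpropagation` | The ggml_set_param() function marks a tensor as an input variable. This is used by the automatic differentiation and optimization algorithms. |
| `ggml_graph_reset` | Zeros the gradients during training | |
| `ggml_get_f32_1d` | `float ggml_get_f32_1d(const struct ggml_tensor * tensor, int i)` | Reads the value at index `i` of the tensor's data; the matching setter is `ggml_set_f32_1d` |
| Functions that are not exposed but matter for machine learning | | |
| `ggml_opt_adam` | `result = ggml_opt_adam(ctx, opt, opt->params, f, gf, gb);` | |
| `ggml_opt_lbfgs` | `result = ggml_opt_lbfgs(ctx, opt, opt->params, f, gf, gb);` | |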
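The `ne`/`nb` description and the `GGML_PAD` macro can be made concrete with a small standalone sketch. `Layout`, `contiguous`, `byte_offset`, `transpose01` and `pad_up` are hypothetical names, not ggml API; the stride rule follows the table with no row padding and covers non-quantized types only. For `fc1_weight` (ne = 784 x 500, float32) it yields nb = {4, 3136, 1568000, 1568000}, the same strides that appear in the graph dump of the run later in this post.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>

// Same bit trick as GGML_PAD(x, n): round x up to a multiple of n (n must be a power of two).
constexpr std::size_t pad_up(std::size_t x, std::size_t n) {
    return (x + n - 1) & ~(n - 1);
}

// Hypothetical mirror of the ne/nb fields of ggml_tensor (4 dimensions, like GGML_MAX_DIMS).
struct Layout {
    std::array<int64_t, 4> ne;      // number of elements per dimension, ne[0] varies fastest
    std::array<std::size_t, 4> nb;  // stride in bytes per dimension
};

// Contiguous strides for a non-quantized type with no row padding:
// nb[0] = type_size, nb[i] = nb[i-1] * ne[i-1].
Layout contiguous(const std::array<int64_t, 4> & ne, std::size_t type_size) {
    Layout l{ne, {}};
    l.nb[0] = type_size;
    for (int i = 1; i < 4; ++i) {
        l.nb[i] = l.nb[i - 1] * static_cast<std::size_t>(ne[i - 1]);
    }
    return l;
}

// Byte offset of element (i0, i1, i2, i3). Going through nb works for any layout,
// contiguous or not, which is why ops must not assume contiguity.
std::size_t byte_offset(const Layout & l, int64_t i0, int64_t i1, int64_t i2, int64_t i3) {
    assert(i0 >= 0 && i0 < l.ne[0] && i1 >= 0 && i1 < l.ne[1]);
    assert(i2 >= 0 && i2 < l.ne[2] && i3 >= 0 && i3 < l.ne[3]);
    return static_cast<std::size_t>(i0) * l.nb[0] + static_cast<std::size_t>(i1) * l.nb[1] +
           static_cast<std::size_t>(i2) * l.nb[2] + static_cast<std::size_t>(i3) * l.nb[3];
}

// Transposing the first two dimensions only swaps ne/nb entries; no data is moved.
Layout transpose01(Layout l) {
    std::swap(l.ne[0], l.ne[1]);
    std::swap(l.nb[0], l.nb[1]);
    return l;
}
```

The transposed layout addresses exactly the same bytes as the original, just with swapped indices, which is the kind of non-contiguous view the `ggml_tensor` row describes.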

Using ggml

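- The example below shows that running inference with ggml mainly involves these steps:
  - create the context =>
  - initialize the tensor data =>
  - build the computation graph =>
  - set the tensor values =>
  - run the forward pass =>
  - read the output values and free the context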
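The first and last steps belong together: a context owns one block of memory reserved up front (`mem_size`), every tensor is carved out of it, and `ggml_free` discards the whole context at once. A hypothetical bump allocator makes that idea concrete. The `Arena` class below is an illustration only, not ggml's allocator; ggml also keeps the tensor structs themselves in the same buffer, which this sketch ignores.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical bump allocator illustrating the ggml_context idea (not ggml code):
// one buffer of mem_size bytes is allocated up front, tensors are carved out of it
// in order, and everything is released together when the arena is destroyed.
class Arena {
public:
    explicit Arena(std::size_t mem_size) : buf_(mem_size), used_(0) {}

    // Reserve room for `count` floats; returns nullptr when the pool is exhausted.
    float * alloc_f32(std::size_t count) {
        assert(count > 0);
        const std::size_t align = alignof(float);
        const std::size_t offset = (used_ + align - 1) / align * align;  // round up to alignment
        const std::size_t bytes = count * sizeof(float);
        if (offset + bytes > buf_.size()) {
            return nullptr;
        }
        used_ = offset + bytes;
        return reinterpret_cast<float *>(buf_.data() + offset);
    }

    std::size_t used() const { return used_; }
    std::size_t capacity() const { return buf_.size(); }

private:
    std::vector<unsigned char> buf_;  // the single pre-allocated pool
    std::size_t used_;                // bytes handed out so far
};
```

Because nothing is freed individually, sizing the pool correctly is the caller's job, which is why the loader below computes `ctx_size` before calling `ggml_init`.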

Reading and converting the weights

- https://github.com/ggerganov/ggml/tree/master/examples/mnist
- `git clone --recursive https://github.com/ggerganov/ggml.git`

```shell
$:~/ggml/ggml/examples/mnist$ tree
.
├── CMakeLists.txt
├── convert-h5-to-ggml.py
├── main.cpp
├── main-cpu.cpp
├── main-mtl.cpp
├── main-mtl.h
├── main-mtl.m
├── models
│   └── mnist
│       ├── mnist_model.state_dict
│       └── t10k-images.idx3-ubyte
├── README.md
└── web
    └── index.html
$:~/ggml/ggml/examples/mnist$ conda activate trt2
$:~/ggml/ggml/examples/mnist$ python3 ./convert-h5-to-ggml.py ./models/mnist/mnist_model.state_dict
OrderedDict([('fc1.weight', tensor([[ 0.0130, 0.0034, -0.0287, ..., -0.0268, -0.0352, -0.0056],
        [-0.0134, 0.0077, -0.0028, ..., 0.0356, 0.0143, -0.0107],
        [-0.0329, 0.0154, -0.0167, ..., 0.0155, 0.0127, -0.0309],
        ...,
        [-0.0216, -0.0302, 0.0085, ..., 0.0301, 0.0073, 0.0153],
        [ 0.0289, 0.0181, 0.0326, ..., 0.0107, -0.0314, -0.0349],
        [ 0.0273, 0.0127, 0.0105, ..., 0.0090, -0.0007, 0.0190]])), ('fc1.bias', tensor([ 1.9317e-01, -7.4255e-02, 8.3417e-02, 1.1681e-01, 7.5499e-03,
        8.7627e-02, -7.9260e-03, 6.8504e-02, 2.2217e-02, 9.7918e-02,
        1.5195e-01, 8.3765e-02, 1.4237e-02, 1.0847e-02, 9.6959e-02,
        -1.2500e-01, 4.2406e-02, -2.4611e-02, 5.9198e-03, 8.9767e-02,
        ..., 1.3460e-03, 2.9106e-02, -4.0620e-02, 9.7568e-02, 8.5670e-02])), ('fc2.weight', tensor([[-0.0197, -0.0814, -0.3992, ..., 0.2697, 0.0386, -0.5380],
        [-0.4174, 0.0572, -0.1331, ..., -0.2564, -0.3926, -0.0514],
        ...,
        [-0.2988, -0.1119, 0.0517, ..., 0.3296, 0.0800, 0.0651]])), ('fc2.bias', tensor([-0.1008, -0.1179, -0.0558, -0.0626, 0.0385, -0.0222, 0.0188, -0.1296,
        0.1507, 0.0033]))])
Processing variable: fc1.weight with shape: (500, 784)
Processing variable: fc1.bias with shape: (500,)
Processing variable: fc2.weight with shape: (10, 500)
Processing variable: fc2.bias with shape: (10,)
Done. Output file: models/mnist/ggml-model-f32.bin
$:~/ggml/ggml/examples/mnist$ tree
.
├── CMakeLists.txt
├── convert-h5-to-ggml.py
├── main.cpp
├── main-cpu.cpp
├── main-mtl.cpp
├── main-mtl.h
├── main-mtl.m
├── models
│   └── mnist
│       ├── ggml-model-f32.bin
│       ├── mnist_model.state_dict
│       └── t10k-images.idx3-ubyte
├── README.md
└── web
    └── index.html

3 directories, 12 files
```
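Judging from the converter's per-variable log and from what `mnist_model_load` reads, `ggml-model-f32.bin` appears to be laid out as: a `uint32` magic (0x67676d6c), then for each tensor an `int32` n_dims, n_dims `int32` sizes with the fastest-varying dimension first (so the PyTorch shape (500, 784) of `fc1.weight` ends up as ne = {784, 500}), then the raw float32 values. The sketch below writes and parses that assumed layout in memory. `RawTensor`, `write_model` and `read_model` are hypothetical helpers, not part of the example, and all values are in host byte order.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <utility>
#include <vector>

// Hypothetical in-memory model of the assumed ggml-model-f32.bin layout:
//   uint32 magic, then per tensor: int32 n_dims, n_dims x int32 sizes (fastest first), float32 data.
constexpr uint32_t kMagic = 0x67676d6c;  // "ggml", same value as GGML_FILE_MAGIC

struct RawTensor {
    std::vector<int32_t> ne;  // sizes, ne[0] varies fastest
    std::vector<float> data;  // values in memory order
};

// Append the raw bytes of a trivially copyable value.
template <typename T>
void put(std::vector<char> & out, const T & value) {
    const char * p = reinterpret_cast<const char *>(&value);
    out.insert(out.end(), p, p + sizeof(T));
}

std::vector<char> write_model(const std::vector<RawTensor> & tensors) {
    std::vector<char> out;
    put(out, kMagic);
    for (const RawTensor & t : tensors) {
        std::size_t count = 1;
        for (int32_t d : t.ne) count *= static_cast<std::size_t>(d);
        assert(count == t.data.size() && "ne must match the number of values");
        put(out, static_cast<int32_t>(t.ne.size()));
        for (int32_t d : t.ne) put(out, d);
        for (float f : t.data) put(out, f);
    }
    return out;
}

// Parses the whole buffer; returns false on a bad magic, a bad n_dims, or truncated data.
bool read_model(const std::vector<char> & in, std::vector<RawTensor> & tensors) {
    std::size_t pos = 0;
    auto get = [&](void * dst, std::size_t n) {
        if (pos + n > in.size()) return false;
        std::memcpy(dst, in.data() + pos, n);
        pos += n;
        return true;
    };
    uint32_t magic = 0;
    if (!get(&magic, sizeof(magic)) || magic != kMagic) return false;
    while (pos < in.size()) {
        int32_t n_dims = 0;
        if (!get(&n_dims, sizeof(n_dims)) || n_dims < 1 || n_dims > 4) return false;
        RawTensor t;
        t.ne.resize(static_cast<std::size_t>(n_dims));
        std::size_t count = 1;
        for (int32_t & d : t.ne) {
            if (!get(&d, sizeof(d)) || d < 1) return false;
            count *= static_cast<std::size_t>(d);
        }
        t.data.resize(count);
        if (!get(t.data.data(), count * sizeof(float))) return false;
        tensors.push_back(std::move(t));
    }
    return true;
}
```

If the file really has an n_dims field in front of every tensor, one detail of the loader below becomes clear: it reads the bias sizes with the weight's n_dims (2), so `ne_bias[0]` actually receives the bias's own n_dims (1) and `ne_bias[1]` its length. The loader never uses `ne_bias` afterwards, so this is harmless.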

Inference with ggml

```cpp
// https://github1s.com/ggerganov/ggml/blob/HEAD/examples/mnist/main.cpp#L1-L329
#include "ggml/ggml.h"

#include "common.h"

#include <cmath>
#include <cstdio>
#include <cstdlib>  // malloc, rand, srand, exit
#include <cstring>
#include <ctime>
#include <fstream>
#include <string>
#include <vector>
#include <algorithm>

#if defined(_MSC_VER)
#pragma warning(disable: 4244 4267) // possible loss of data
#endif
```

Model state and hyperparameters

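- The `mnist_hparams` struct holds the default hyperparameters: input size, hidden-layer size, and number of classes. The `mnist_model` struct stores the model's state (its weight and bias tensors and the ggml context) together with the hyperparameters.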

```cpp
// default hparams
struct mnist_hparams {
    int32_t n_input   = 784;
    int32_t n_hidden  = 500;
    int32_t n_classes = 10;
};

struct mnist_model {
    mnist_hparams hparams;

    struct ggml_tensor * fc1_weight;
    struct ggml_tensor * fc1_bias;

    struct ggml_tensor * fc2_weight;
    struct ggml_tensor * fc2_bias;

    struct ggml_context * ctx;
};
```

Loading the weights: mnist_model_load

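- `mnist_model_load` loads the model file. It first checks that the file can be opened and verifies the magic number, then creates the `ggml_context` and reads the weights and biases (and, from their sizes, the hyperparameters) from the file.
- Call sequence:
  - `./bin/mnist ./models/mnist/ggml-model-f32.bin …/examples/mnist/models/mnist/t10k-images.idx3-ubyte`
  - `mnist_model_load(argv[1], model)`: `model` is an uninitialized `mnist_model` struct that is then filled in from the `.bin` file.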

```cpp
// load the model's weights from a file
bool mnist_model_load(const std::string & fname, mnist_model & model) {
    printf("%s: loading model from '%s'\n", __func__, fname.c_str());

    auto fin = std::ifstream(fname, std::ios::binary); // std::ifstream is used to read the file
    if (!fin) {
        fprintf(stderr, "%s: failed to open '%s'\n", __func__, fname.c_str());
        return false;
    }

    // verify magic
    {
        uint32_t magic; // 32-bit unsigned integer; GGML_FILE_MAGIC is 0x67676d6c ("ggml")
        fin.read((char *) &magic, sizeof(magic));
        if (magic != GGML_FILE_MAGIC) {
            fprintf(stderr, "%s: invalid model file '%s' (bad magic)\n", __func__, fname.c_str());
            return false;
        }
    }

    auto & ctx = model.ctx;

    size_t ctx_size = 0;

    // compute ctx_size from the default mnist_hparams
    {
        const auto & hparams = model.hparams;

        const int n_input   = hparams.n_input;
        const int n_hidden  = hparams.n_hidden;
        const int n_classes = hparams.n_classes;

        ctx_size += n_input * n_hidden * ggml_type_sizef(GGML_TYPE_F32); // fc1 weight
        ctx_size +=           n_hidden * ggml_type_sizef(GGML_TYPE_F32); // fc1 bias

        ctx_size += n_hidden * n_classes * ggml_type_sizef(GGML_TYPE_F32); // fc2 weight
        ctx_size +=            n_classes * ggml_type_sizef(GGML_TYPE_F32); // fc2 bias

        printf("%s: ggml ctx size = %6.2f MB\n", __func__, ctx_size/(1024.0*1024.0));
    }

    // create the ggml context
    {
        struct ggml_init_params params = {
            /*.mem_size   =*/ ctx_size + 1024*1024,
            /*.mem_buffer =*/ NULL,
            /*.no_alloc   =*/ false,
        };

        model.ctx = ggml_init(params);
        if (!model.ctx) {
            fprintf(stderr, "%s: ggml_init() failed\n", __func__);
            return false;
        }
    }

    // Read FC1 layer 1
    {
        // Read dimensions and keep in a signed int.
        // fin.read copies sizeof(n_dims) bytes into the memory at &n_dims;
        // reinterpret_cast<char *> turns &n_dims into a char * so the int32_t can be read byte by byte.
        int32_t n_dims;
        fin.read(reinterpret_cast<char *>(&n_dims), sizeof(n_dims));
        if (n_dims < 1 || n_dims > 2) { // guard the fixed-size ne_* arrays below
            fprintf(stderr, "%s: unexpected n_dims %d in '%s'\n", __func__, n_dims, fname.c_str());
            return false;
        }

        {
            int32_t ne_weight[2] = { 1, 1 };
            for (int i = 0; i < n_dims; ++i) {
                fin.read(reinterpret_cast<char *>(&ne_weight[i]), sizeof(ne_weight[i]));
            }

            // FC1 dimensions taken from file, e.g. 784x500
            model.hparams.n_input  = ne_weight[0];
            model.hparams.n_hidden = ne_weight[1];

            model.fc1_weight = ggml_new_tensor_2d(ctx, GGML_TYPE_F32, model.hparams.n_input, model.hparams.n_hidden);
            fin.read(reinterpret_cast<char *>(model.fc1_weight->data), ggml_nbytes(model.fc1_weight));
            ggml_set_name(model.fc1_weight, "fc1_weight");
        }

        {
            int32_t ne_bias[2] = { 1, 1 };
            for (int i = 0; i < n_dims; ++i) {
                fin.read(reinterpret_cast<char *>(&ne_bias[i]), sizeof(ne_bias[i]));
            }

            model.fc1_bias = ggml_new_tensor_1d(ctx, GGML_TYPE_F32, model.hparams.n_hidden);
            fin.read(reinterpret_cast<char *>(model.fc1_bias->data), ggml_nbytes(model.fc1_bias));
            ggml_set_name(model.fc1_bias, "fc1_bias");

            // just for testing purposes, set some parameters to non-zero
            model.fc1_bias->op_params[0] = 0xdeadbeef;
        }
    }

    // Read FC2 layer 2
    {
        // Read dimensions
        int32_t n_dims;
        fin.read(reinterpret_cast<char *>(&n_dims), sizeof(n_dims));
        if (n_dims < 1 || n_dims > 2) { // guard the fixed-size ne_* arrays below
            fprintf(stderr, "%s: unexpected n_dims %d in '%s'\n", __func__, n_dims, fname.c_str());
            return false;
        }

        {
            int32_t ne_weight[2] = { 1, 1 };
            for (int i = 0; i < n_dims; ++i) {
                fin.read(reinterpret_cast<char *>(&ne_weight[i]), sizeof(ne_weight[i]));
            }

            // FC2 dimensions taken from file, e.g. 500x10
            model.hparams.n_classes = ne_weight[1];

            model.fc2_weight = ggml_new_tensor_2d(ctx, GGML_TYPE_F32, model.hparams.n_hidden, model.hparams.n_classes);
            fin.read(reinterpret_cast<char *>(model.fc2_weight->data), ggml_nbytes(model.fc2_weight));
            ggml_set_name(model.fc2_weight, "fc2_weight");
        }

        {
            int32_t ne_bias[2] = { 1, 1 };
            for (int i = 0; i < n_dims; ++i) {
                fin.read(reinterpret_cast<char *>(&ne_bias[i]), sizeof(ne_bias[i]));
            }

            model.fc2_bias = ggml_new_tensor_1d(ctx, GGML_TYPE_F32, model.hparams.n_classes);
            fin.read(reinterpret_cast<char *>(model.fc2_bias->data), ggml_nbytes(model.fc2_bias));
            ggml_set_name(model.fc2_bias, "fc2_bias");
        }
    }

    fin.close();

    return true;
}
```
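As a sanity check on the `ggml ctx size = 1.52 MB` line in the run output, the `compute ctx_size` arithmetic can be reproduced on its own. This is a standalone sketch: `Hparams` mirrors the defaults of `mnist_hparams`, and `sizeof(float)` stands in for `ggml_type_sizef(GGML_TYPE_F32)`, assuming a 4-byte float.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>

struct Hparams {  // mirrors the defaults of mnist_hparams
    int n_input   = 784;
    int n_hidden  = 500;
    int n_classes = 10;
};

// Bytes needed for the weights and biases of the 784 -> 500 -> 10 float32 MLP.
std::size_t weights_bytes(const Hparams & hp) {
    assert(hp.n_input > 0 && hp.n_hidden > 0 && hp.n_classes > 0);
    const std::size_t f32 = sizeof(float);
    std::size_t size = 0;
    size += static_cast<std::size_t>(hp.n_input) * hp.n_hidden * f32;    // fc1 weight
    size += static_cast<std::size_t>(hp.n_hidden) * f32;                 // fc1 bias
    size += static_cast<std::size_t>(hp.n_hidden) * hp.n_classes * f32;  // fc2 weight
    size += static_cast<std::size_t>(hp.n_classes) * f32;                // fc2 bias
    return size;
}

// Prints the value with the same format string as mnist_model_load.
void print_ctx_size(const Hparams & hp) {
    std::printf("ggml ctx size = %6.2f MB\n", weights_bytes(hp) / (1024.0 * 1024.0));
}
```

784·500·4 + 500·4 + 500·10·4 + 10·4 = 1,590,040 bytes, and 1,590,040 / 1,048,576 ≈ 1.52. The loader then passes `ctx_size + 1024*1024` to `ggml_init`, presumably as headroom for the tensor bookkeeping that also lives in the context's buffer.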

Building the forward computation graph: mnist_eval

- `mnist_eval` builds the model's forward computation graph, evaluates it, and returns the prediction (a digit from 0 to 9).
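Stripped of ggml, the graph that `mnist_eval` builds computes `probs = softmax(W2 · relu(W1 · x + b1) + b2)` and returns the arg-max. The plain C++ sketch below spells that out. The helper names (`affine`, `relu_inplace`, `softmax`, `mlp_predict`) are hypothetical, and each weight matrix is assumed to be stored row by row with the input dimension contiguous, which matches how `fc1_weight` is laid out (ne0 = 784, ne1 = 500) and why `ggml_mul_mat(ctx0, model.fc1_weight, input)` yields 500 values.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// y = W * x + b, where W is n_out x n_in stored row by row (input dimension contiguous).
std::vector<float> affine(const std::vector<float> & w, const std::vector<float> & b,
                          const std::vector<float> & x) {
    const std::size_t n_in = x.size();
    const std::size_t n_out = b.size();
    assert(w.size() == n_in * n_out);
    std::vector<float> y(n_out);
    for (std::size_t r = 0; r < n_out; ++r) {
        float acc = b[r];
        for (std::size_t c = 0; c < n_in; ++c) {
            acc += w[r * n_in + c] * x[c];
        }
        y[r] = acc;
    }
    return y;
}

void relu_inplace(std::vector<float> & v) {
    for (float & e : v) e = std::max(e, 0.0f);
}

// Softmax; subtracting the maximum first avoids overflow in std::exp.
std::vector<float> softmax(const std::vector<float> & z) {
    assert(!z.empty());
    const float m = *std::max_element(z.begin(), z.end());
    std::vector<float> p(z.size());
    float sum = 0.0f;
    for (std::size_t i = 0; i < z.size(); ++i) {
        p[i] = std::exp(z[i] - m);
        sum += p[i];
    }
    for (float & e : p) e /= sum;
    return p;
}

// Same chain as mnist_eval: fc1 -> relu -> fc2 -> softmax -> argmax.
int mlp_predict(const std::vector<float> & w1, const std::vector<float> & b1,
                const std::vector<float> & w2, const std::vector<float> & b2,
                const std::vector<float> & x) {
    std::vector<float> h = affine(w1, b1, x);
    relu_inplace(h);
    const std::vector<float> probs = softmax(affine(w2, b2, h));
    return static_cast<int>(std::max_element(probs.begin(), probs.end()) - probs.begin());
}
```

Since softmax is monotonic, the arg-max of `probs` equals the arg-max of the logits; the softmax only matters when the probabilities themselves are wanted.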

```cpp
// evaluate the model
//
//   - model:     the model
//   - n_threads: number of threads to use
//   - digit:     784 pixel values
//
// returns 0 - 9 prediction
int mnist_eval(
        const mnist_model & model,
        const int n_threads,
        std::vector<float> digit,
        const char * fname_cgraph
        ) {
    const auto & hparams = model.hparams;

    static size_t buf_size = hparams.n_input * sizeof(float) * 4;
    static void * buf = malloc(buf_size);

    struct ggml_init_params params = {
        /*.mem_size   =*/ buf_size,
        /*.mem_buffer =*/ buf,
        /*.no_alloc   =*/ false,
    };

    struct ggml_context * ctx0 = ggml_init(params);
    struct ggml_cgraph gf = {};

    struct ggml_tensor * input = ggml_new_tensor_1d(ctx0, GGML_TYPE_F32, hparams.n_input);
    memcpy(input->data, digit.data(), ggml_nbytes(input));
    ggml_set_name(input, "input");

    // fc1 MLP = Ax + b
    ggml_tensor * fc1 = ggml_add(ctx0, ggml_mul_mat(ctx0, model.fc1_weight, input), model.fc1_bias);
    ggml_tensor * fc2 = ggml_add(ctx0, ggml_mul_mat(ctx0, model.fc2_weight, ggml_relu(ctx0, fc1)), model.fc2_bias);

    // soft max
    ggml_tensor * probs = ggml_soft_max(ctx0, fc2);
    ggml_set_name(probs, "probs");

    // build / export / run the computation graph
    ggml_build_forward_expand(&gf, probs);
    ggml_graph_compute_with_ctx(ctx0, &gf, n_threads);

    //ggml_graph_print   (&gf);
    ggml_graph_dump_dot(&gf, NULL, "mnist.dot");

    if (fname_cgraph) {
        // export the compute graph for later use
        // see the "mnist-cpu" example
        ggml_graph_export(&gf, fname_cgraph);

        fprintf(stderr, "%s: exported compute graph to '%s'\n", __func__, fname_cgraph);
    }

    const float * probs_data = ggml_get_data_f32(probs);

    const int prediction = std::max_element(probs_data, probs_data + 10) - probs_data;

    ggml_free(ctx0);

    return prediction;
}
```

`wasm_eval` is the entry point the WebAssembly build uses to evaluate the model, and `wasm_random_digit` reads a random digit from the test set.

```cpp
#ifdef __cplusplus // when compiled as C++, give these functions C linkage
extern "C" {
#endif

int wasm_eval(uint8_t * digitPtr) {
    mnist_model model;
    if (!mnist_model_load("models/mnist/ggml-model-f32.bin", model)) {
        fprintf(stderr, "error loading model\n");
        return -1;
    }
    std::vector<float> digit(digitPtr, digitPtr + 784);
    int result = mnist_eval(model, 1, digit, nullptr);
    ggml_free(model.ctx);

    return result;
}

int wasm_random_digit(char * digitPtr) {
    auto fin = std::ifstream("models/mnist/t10k-images.idx3-ubyte", std::ios::binary);
    if (!fin) {
        fprintf(stderr, "failed to open digits file\n");
        return 0;
    }
    srand(time(NULL));

    // Seek to a random digit: 16-byte header + 28*28 * (random 0 - 10000)
    fin.seekg(16 + 784 * (rand() % 10000));
    fin.read(digitPtr, 784);

    return 1;
}

#ifdef __cplusplus
}
#endif
```

main

```cpp
int main(int argc, char ** argv) {
    srand(time(NULL));
    ggml_time_init();

    if (argc != 3) {
        fprintf(stderr, "Usage: %s models/mnist/ggml-model-f32.bin models/mnist/t10k-images.idx3-ubyte\n", argv[0]);
        exit(0);
    }

    uint8_t buf[784];
    mnist_model model;
    std::vector<float> digit;

    // load the model
    {
        const int64_t t_start_us = ggml_time_us();

        if (!mnist_model_load(argv[1], model)) {
            fprintf(stderr, "%s: failed to load model from '%s'\n", __func__, argv[1]);
            return 1;
        }

        const int64_t t_load_us = ggml_time_us() - t_start_us;

        fprintf(stdout, "%s: loaded model in %8.2f ms\n", __func__, t_load_us / 1000.0f);
    }

    // read a random digit from the test set
    {
        std::ifstream fin(argv[2], std::ios::binary);
        if (!fin) {
            fprintf(stderr, "%s: failed to open '%s'\n", __func__, argv[2]);
            return 1;
        }

        // seek to a random digit: 16-byte header + 28*28 * (random 0 - 10000)
        fin.seekg(16 + 784 * (rand() % 10000));
        fin.read((char *) &buf, sizeof(buf));
    }

    // render the digit in ASCII
    {
        digit.resize(sizeof(buf));

        for (int row = 0; row < 28; row++) {
            for (int col = 0; col < 28; col++) {
                fprintf(stderr, "%c ", (float)buf[row*28 + col] > 230 ? '*' : '_');
                digit[row*28 + col] = ((float)buf[row*28 + col]);
            }

            fprintf(stderr, "\n");
        }

        fprintf(stderr, "\n");
    }

    const int prediction = mnist_eval(model, 1, digit, "mnist.ggml");

    fprintf(stdout, "%s: predicted digit is %d\n", __func__, prediction);

    ggml_free(model.ctx);

    return 0;
}
```
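Both `wasm_random_digit` and `main` seek to `16 + 784 * k`. That constant follows from the IDX3 format of the MNIST image files, assuming the standard layout: a 16-byte header of four big-endian int32 values (magic 0x00000803, image count, rows, cols) followed by one unsigned byte per pixel. The sketch below parses that header, computes the offset of image k, and reproduces the `> 230` ASCII rendering from `main`. `Idx3Header`, `parse_idx3_header`, `image_offset` and `render_ascii` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Header of an IDX3 image file (assumed standard MNIST layout).
struct Idx3Header {
    uint32_t magic;  // 0x00000803 for image files
    uint32_t count;  // number of images (10000 in the t10k file)
    uint32_t rows;   // 28
    uint32_t cols;   // 28
};

// IDX integers are stored most-significant byte first (big-endian).
uint32_t read_be32(const unsigned char * p) {
    return (uint32_t(p[0]) << 24) | (uint32_t(p[1]) << 16) | (uint32_t(p[2]) << 8) | uint32_t(p[3]);
}

Idx3Header parse_idx3_header(const std::vector<unsigned char> & file) {
    assert(file.size() >= 16);
    return {read_be32(&file[0]), read_be32(&file[4]), read_be32(&file[8]), read_be32(&file[12])};
}

// Byte offset of image k: skip the 16-byte header plus k images of rows*cols bytes.
// For 28x28 images this is 16 + 784 * k, the value passed to seekg.
std::size_t image_offset(const Idx3Header & h, std::size_t k) {
    assert(k < h.count);
    return 16 + static_cast<std::size_t>(h.rows) * h.cols * k;
}

// Same thresholding as main: pixels brighter than 230 print as '*', the rest as '_'.
std::string render_ascii(const unsigned char * pixels, int rows, int cols) {
    std::string out;
    for (int r = 0; r < rows; ++r) {
        for (int c = 0; c < cols; ++c) {
            out += pixels[r * cols + c] > 230 ? '*' : '_';
            out += ' ';
        }
        out += '\n';
    }
    return out;
}
```

Reading the header instead of hard-coding 784 and 10000 would let the same code handle any IDX3 file; the example keeps the constants because it only ever reads the MNIST test set.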

Running

```shell
$:~/ggml/ggml$ mkdir build && cd build
$:~/ggml/ggml/build$ cmake ..
-- The C compiler identification is GNU 9.5.0
-- The CXX compiler identification is GNU 9.5.0
-- Detecting C compiler ABI info
-- Detecting C compiler ABI info - done
-- Check for working C compiler: /usr/bin/cc - skipped
-- Detecting C compile features
-- Detecting C compile features - done
-- Detecting CXX compiler ABI info
-- Detecting CXX compiler ABI info - done
-- Check for working CXX compiler: /usr/bin/c++ - skipped
-- Detecting CXX compile features
-- Detecting CXX compile features - done
-- Found Git: /usr/bin/git (found version "2.34.1")
-- Looking for pthread.h
-- Looking for pthread.h - found
-- Performing Test CMAKE_HAVE_LIBC_PTHREAD
-- Performing Test CMAKE_HAVE_LIBC_PTHREAD - Success
-- Found Threads: TRUE
-- CMAKE_SYSTEM_PROCESSOR: x86_64
-- x86 detected
-- Linux detected
-- x86 detected
-- Linux detected
-- Configuring done
-- Generating done
-- Build files have been written to: /home/pdd/ggml/ggml/build
(trt2) pdd@pdd-Dell-G15-5511:~/ggml/ggml/build$ make -j4 mnist
[ 16%] Building CXX object examples/CMakeFiles/common.dir/common.cpp.o
[ 33%] Building C object src/CMakeFiles/ggml.dir/ggml.c.o
[ 50%] Linking C static library libggml.a
[ 50%] Built target ggml
[ 66%] Linking CXX static library libcommon.a
[ 66%] Built target common
[ 83%] Building CXX object examples/mnist/CMakeFiles/mnist.dir/main.cpp.o
[100%] Linking CXX executable ../../bin/mnist
[100%] Built target mnist
$:~/ggml/ggml/build/bin$ ls -ahl
total 352K
drwxrwxr-x 2 pdd pdd 4.0K Aug 15 12:17 .
drwxrwxr-x 7 pdd pdd 4.0K Aug 15 12:20 ..
-rwxrwxr-x 1 pdd pdd 341K Aug 15 12:17 mnist
```

```shell
$:~/ggml/ggml/build$ ./bin/mnist /home/pdd/ggml/ggml/examples/mnist/models/mnist/ggml-model-f32.bin /home/pdd/ggml/ggml/examples/mnist/models/mnist/t10k-images.idx3-ubyte
mnist_model_load: loading model from '/home/pdd/ggml/ggml/examples/mnist/models/mnist/ggml-model-f32.bin'
mnist_model_load: ggml ctx size = 1.52 MB
main: loaded model in 3.82 ms
_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _
_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _
_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _
_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _
_ _ _ _ _ _ _ _ _ * * * * * * * * * _ _ _ _ _ _ _ _ _ _
_ _ _ _ _ _ _ _ _ _ _ _ _ _ * * * * * _ _ _ _ _ _ _ _ _
_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ * * * _ _ _ _ _ _ _ _
_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ * * * _ _ _ _ _ _ _
_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ * * * _ _ _ _ _ _
_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ * * * _ _ _ _ _
_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ * * * _ _ _ _ _
_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ * * * _ _ _ _ _
_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ * * * _ _ _ _ _
_ _ _ _ _ _ _ _ _ * * * * * * _ _ _ _ _ * * * _ _ _ _ _
_ _ _ _ _ _ _ * * * * * * * * * * * * * * * _ _ _ _ _ _
_ _ _ _ _ _ * * * _ _ _ _ * * * * * * * * * * _ _ _ _ _
_ _ _ _ _ * * _ _ _ _ _ _ _ _ _ * * * * * * * _ _ _ _ _
_ _ _ _ * * * _ _ _ _ _ _ _ _ _ * * * * _ _ _ _ _ _ _ _
_ _ _ _ * * * _ _ _ _ _ _ _ * * * * * _ _ _ _ _ _ _ _ _
_ _ _ _ * * * _ _ _ _ _ * * * * * _ _ _ _ _ _ _ _ _ _ _
_ _ _ _ _ _ * * * * * * * * * _ _ _ _ _ _ _ _ _ _ _ _ _
_ _ _ _ _ _ _ * * * * * * * _ _ _ _ _ _ _ _ _ _ _ _ _ _
_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _
_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _
_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _
_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _
_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _
_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

ggml_graph_dump_dot: dot -Tpng mnist.dot -o mnist.dot.png && open mnist.dot.png
magic 67676d6c
version 1
leafs 5
nodes 6
eval 6144

TYPE OP NDIMS NE0 NE1 NE2 NE3 NB0 NB1 NB2 NB3 DATA NAME
f32 NONE 2 500 10 1 1 4 2000 20000 20000 0x7feee8650870 fc2_weight
f32 NONE 2 784 500 1 1 4 3136 1568000 1568000 0x7feee84d1140 fc1_weight
f32 NONE 1 784 1 1 1 4 3136 3136 3136 0x55cb404f7ec0 input
f32 NONE 1 500 1 1 1 4 2000 2000 2000 0x7feee864ff70 fc1_bias
f32 NONE 1 10 1 1 1 4 40 40 40 0x7feee86557c0 fc2_bias

ARG TYPE OP NDIMS NE0 NE1 NE2 NE3 NB0 NB1 NB2 NB3 NTASKS DATA NAME
DST f32 MUL_MAT 2 500 1 1 1 4 2000 2000 2000 0x55cb404f8c30 node_0
SRC f32 NONE 2 784 500 1 1 4 3136 1568000 1568000 0x7feee84d1140 fc1_weight
SRC f32 NONE 1 784 1 1 1 4 3136 3136 3136 0x55cb404f7ec0 input

DST f32 ADD 2 500 1 1 1 4 2000 2000 2000 0x55cb404f9530 node_1
SRC f32 MUL_MAT 2 500 1 1 1 4 2000 2000 2000 0x55cb404f8c30 node_0
SRC f32 NONE 1 500 1 1 1 4 2000 2000 2000 0x7feee864ff70 fc1_bias

DST f32 UNARY 2 500 1 1 1 4 2000 2000 2000 0x55cb404f9e30 node_2
SRC f32 ADD 2 500 1 1 1 4 2000 2000 2000 0x55cb404f9530 node_1

DST f32 MUL_MAT 2 10 1 1 1 4 40 40 40 0x55cb404fa730 node_3
SRC f32 NONE 2 500 10 1 1 4 2000 20000 20000 0x7feee8650870 fc2_weight
SRC f32 UNARY 2 500 1 1 1 4 2000 2000 2000 0x55cb404f9e30 node_2

DST f32 ADD 2 10 1 1 1 4 40 40 40 0x55cb404fa890 node_4
SRC f32 MUL_MAT 2 10 1 1 1 4 40 40 40 0x55cb404fa730 node_3
SRC f32 NONE 1 10 1 1 1 4 40 40 40 0x7feee86557c0 fc2_bias

DST f32 SOFT_MAX 2 10 1 1 1 4 40 40 40 0x55cb404fa9f0 probs
SRC f32 ADD 2 10 1 1 1 4 40 40 40 0x55cb404fa890 node_4

mnist_eval: exported compute graph to 'mnist.ggml'
main: predicted digit is 2
```
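The export lists 5 leafs (the four weight and bias tensors plus `input`) and 6 nodes (two `MUL_MAT`, two `ADD`, the `UNARY` ReLU and `SOFT_MAX`). A toy version of the idea behind `ggml_build_forward_expand` reproduces those counts: starting from the output tensor, visit each tensor's sources depth-first and append it after its inputs (post-order), keeping tensors without sources as leafs. `Node`, `Graph`, `visit` and `build_forward` are hypothetical and much simpler than ggml's `ggml_tensor` and `ggml_cgraph`.

```cpp
#include <cassert>
#include <string>
#include <unordered_set>
#include <vector>

// Toy tensor node (hypothetical, far simpler than ggml_tensor).
struct Node {
    std::string name;
    std::vector<const Node *> src;  // inputs; empty for leafs such as weights and the input
};

struct Graph {
    std::vector<const Node *> leafs;  // tensors without sources
    std::vector<const Node *> nodes;  // ops, each listed after all of its inputs
};

// Depth-first post-order visit: sources first, then the node itself.
void visit(const Node * n, Graph & g, std::unordered_set<const Node *> & seen) {
    assert(n != nullptr);
    if (!seen.insert(n).second) {
        return;  // already in the graph
    }
    for (const Node * s : n->src) {
        visit(s, g, seen);
    }
    if (n->src.empty()) {
        g.leafs.push_back(n);
    } else {
        g.nodes.push_back(n);
    }
}

Graph build_forward(const Node * out) {
    Graph g;
    std::unordered_set<const Node *> seen;
    visit(out, g, seen);
    return g;
}
```

With the sources ordered as in `mnist_eval` (the weight first for `ggml_mul_mat`, the running value first for `ggml_add`), this traversal lists the leafs in the same order as the dump: fc2_weight, fc1_weight, input, fc1_bias, fc2_bias. Executing `nodes` front to back is then always valid, because every op comes after its inputs.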

- Extract images from MNIST idx3 ubyte file format in Python
- 2023.08.18: Today I noticed that ggml now has two header files to include; the library is still being updated constantly.