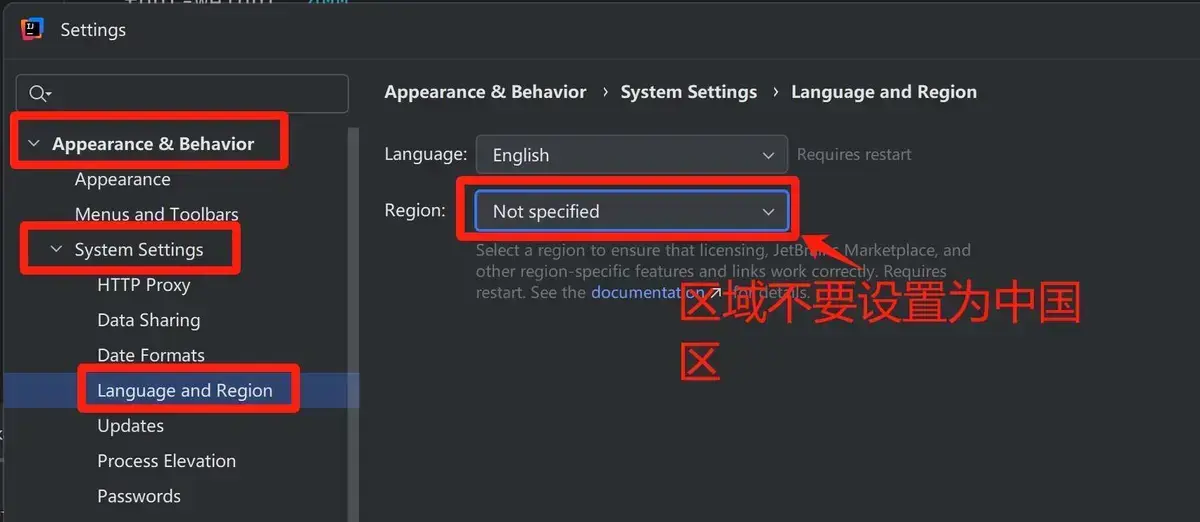

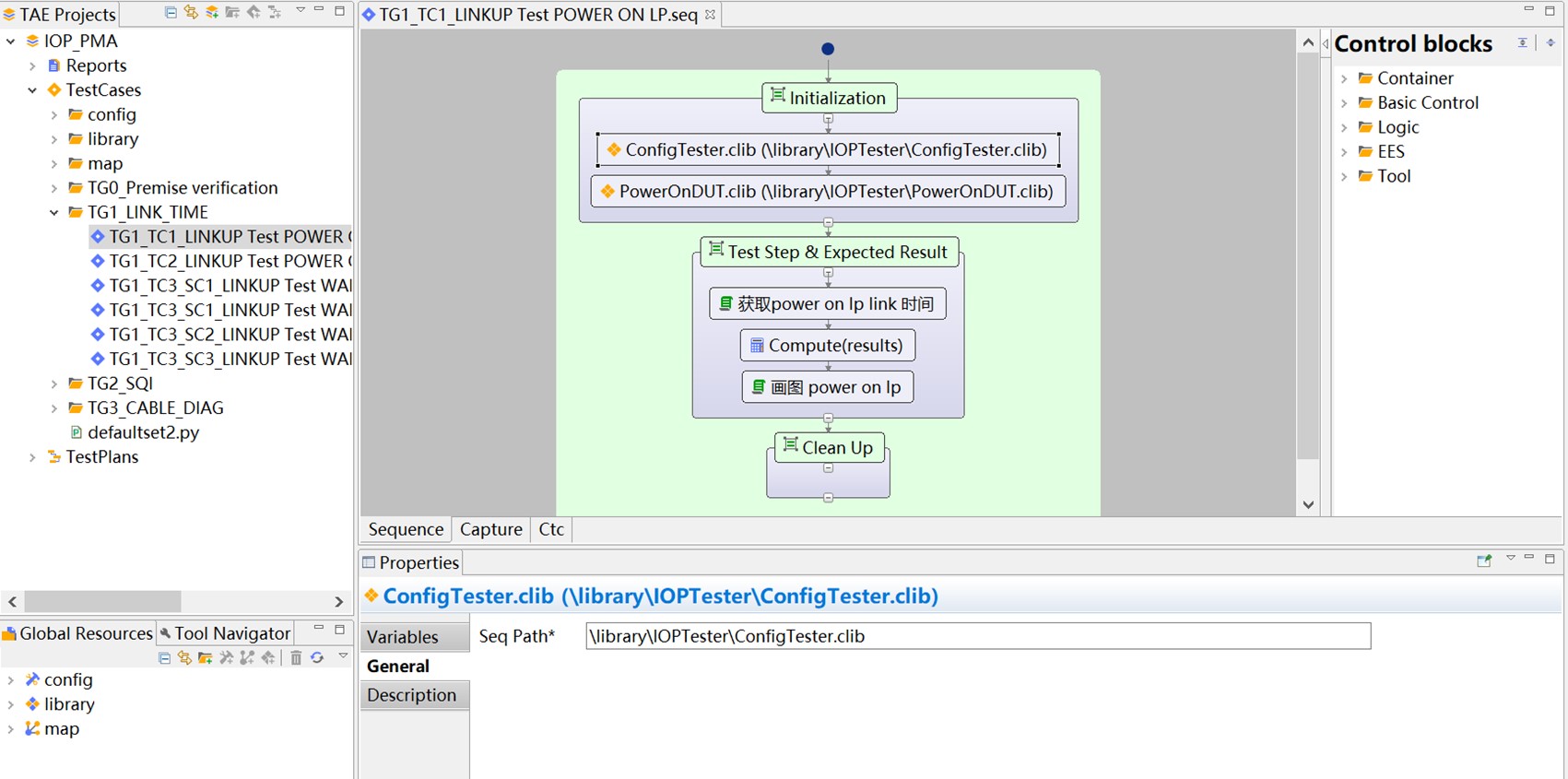

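My server has an NVIDIA RTX 4090 whose driver supports CUDA 12.2, but the CUDA functions in torch-ngp are written for torch 1.11.0, so you need to set up a CUDA environment that matches torch-ngp. I recommend not installing with the commands on the official GitHub page (https://github.com/ashawkey/torch-ngp); following them leads to a series of problems.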

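- `conda create -n torch-ngp python=3.9`, then `conda activate torch-ngp`
- `conda install pytorch==1.12.1 torchvision==0.13.1 torchaudio==0.12.1 cudatoolkit=11.3 -c pytorch`
- `bash scripts/install_ext.sh` to install raymarching
- `python main_nerf.py data/fox --workspace trial_nerf`: run this command and install the remaining libraries according to the errors it reports. Pay particular attention to torchmetrics: install version 0.7.0, which is compatible with pytorch 1.11.0, using `pip install torchmetrics==0.7.0`. Otherwise pip installs a newer torch that overwrites the existing torch and CUDA environment, which causes `.so` linking errors.
- Once it runs successfully, it reports that `data/fox` cannot be found; the data can be downloaded from https://github.com/NVlabs/instant-ngp/tree/master.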
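Because a later `pip install` can silently replace the pinned torch, it helps to re-check versions after each install step. The sketch below is a hypothetical helper, not part of torch-ngp: `EXPECTED` holds the versions pinned in the commands above (torch 1.12.1, torchmetrics 0.7.0), and versions are read with the standard-library `importlib.metadata`, so torch itself is never imported.

```python
"""Hypothetical check of the pinned torch-ngp environment (not part of torch-ngp)."""
from importlib import metadata

# Versions pinned in the install commands above (assumed targets).
EXPECTED = {"torch": "1.12.1", "torchmetrics": "0.7.0"}


def base_version(version):
    """Drop a local build tag, e.g. '1.12.1+cu113' -> '1.12.1'."""
    return version.split("+", 1)[0]


def find_mismatches(installed):
    """List packages from EXPECTED that are missing or at another version."""
    problems = []
    for name, wanted in EXPECTED.items():
        found = installed.get(name)
        if found is None:
            problems.append(f"{name}: not installed (expected {wanted})")
        elif base_version(found) != wanted:
            problems.append(f"{name}: found {found}, expected {wanted}")
    return problems


def installed_versions():
    """Read installed versions without importing the packages themselves."""
    versions = {}
    for name in EXPECTED:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            pass  # reported as "not installed" by find_mismatches
    return versions


if __name__ == "__main__":
    issues = find_mismatches(installed_versions())
    print("\n".join(issues) if issues else "pinned versions found")
```

Run it inside the activated environment. `python -c "import torch; print(torch.__version__, torch.version.cuda, torch.cuda.is_available())"` additionally shows which CUDA version the installed torch was built against.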

### Instant-ngp NeRF

# train with different backbones (with slower pytorch ray marching)

# for the colmap dataset, the default dataset setting `--bound 2 --scale 0.33` is used.

python main_nerf.py data/fox --workspace trial_nerf # fp32 mode

python main_nerf.py data/fox --workspace trial_nerf --fp16 # fp16 mode (pytorch amp)

python main_nerf.py data/fox --workspace trial_nerf --fp16 --ff # fp16 mode + FFMLP (this repo's implementation)

python main_nerf.py data/fox --workspace trial_nerf --fp16 --tcnn # fp16 mode + official tinycudann's encoder & MLP

# use CUDA to accelerate ray marching (much faster!)

python main_nerf.py data/fox --workspace trial_nerf --fp16 --cuda_ray # fp16 mode + cuda raymarching

# preload data into GPU, accelerate training but use more GPU memory.

python main_nerf.py data/fox --workspace trial_nerf --fp16 --preload

# one for all: -O means --fp16 --cuda_ray --preload, which usually gives the best balance between speed and performance.
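The `-O` shorthand described in the comment can be pictured as a flag that switches on the other three after parsing. The sketch below is a minimal argparse illustration of that behaviour using only the flag names shown in these commands; it is not copied from `main_nerf.py`, whose parser has many more options.

```python
import argparse


def build_parser():
    """A tiny parser with only the flags discussed here (illustrative subset)."""
    parser = argparse.ArgumentParser()
    parser.add_argument("path")
    parser.add_argument("--workspace", default="workspace")
    parser.add_argument("--fp16", action="store_true", help="mixed precision (pytorch amp)")
    parser.add_argument("--cuda_ray", action="store_true", help="CUDA ray marching")
    parser.add_argument("--preload", action="store_true", help="keep the dataset on the GPU")
    parser.add_argument("-O", action="store_true", help="same as --fp16 --cuda_ray --preload")
    return parser


def parse(argv):
    """Parse argv and expand -O into the flags it stands for."""
    opt = build_parser().parse_args(argv)
    if opt.O:
        opt.fp16 = opt.cuda_ray = opt.preload = True
    return opt


if __name__ == "__main__":
    print(vars(parse(["data/fox", "--workspace", "trial_nerf", "-O"])))
```

Expanding the shorthand after parsing keeps each underlying flag usable on its own, as in the `--fp16 --cuda_ray` and `--fp16 --preload` commands.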

python main_nerf.py data/fox --workspace trial_nerf -O

# test mode

python main_nerf.py data/fox --workspace trial_nerf -O --test

# construct an error_map for each image, and sample rays based on the training error (slows down training but gives better performance with the same number of training steps)
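Sampling rays by training error is a form of importance sampling over pixels: pixels whose recorded error is larger are drawn more often. The sketch below assumes a per-image error map stored as a flat Python list and refreshes it with a moving average; both choices, and the names `sample_pixels`, `update_error_map` and `momentum`, are illustrative assumptions, and torch-ngp's own version works on GPU tensors.

```python
import random


def sample_pixels(error_map, num_rays, rng=random, eps=1e-6):
    """Draw pixel indices with probability proportional to their error.

    error_map: flat list of non-negative per-pixel errors for one image.
    eps keeps zero-error pixels reachable so they are still revisited.
    """
    weights = [e + eps for e in error_map]
    return rng.choices(range(len(error_map)), weights=weights, k=num_rays)


def update_error_map(error_map, indices, losses, momentum=0.9):
    """Blend newly measured per-ray losses into the map (exponential moving average)."""
    for i, loss in zip(indices, losses):
        error_map[i] = momentum * error_map[i] + (1 - momentum) * loss
    return error_map


if __name__ == "__main__":
    rng = random.Random(0)
    errors = [0.0, 0.0, 0.0, 1.0]  # one "hard" pixel out of four
    picks = sample_pixels(errors, 1000, rng)
    print("share of rays on the hard pixel:", picks.count(3) / len(picks))
```

Concentrating rays on hard pixels is why the same number of steps can give better results, while maintaining and sampling the map adds per-step cost.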

python main_nerf.py data/fox --workspace trial_nerf -O --error_map

# use a background model (e.g., a sphere with radius = 32), which can suppress noise for real-world 360 datasets
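A background model of this kind assigns a colour to each ray where it leaves a large sphere, so the foreground box does not have to explain far-away content. The sketch below shows only the geometric part, the exit point of a ray on a sphere of radius `bg_radius` centred at the origin; how torch-ngp turns that point (or the ray direction) into a colour is not shown and should not be inferred from this sketch.

```python
import math


def sphere_exit(origin, direction, radius):
    """Point where a ray starting inside a sphere centred at the origin leaves it.

    Solves |o + t*d|^2 = r^2 for the positive root t, with d normalised.
    """
    norm = math.sqrt(sum(c * c for c in direction))
    d = [c / norm for c in direction]
    b = sum(o * k for o, k in zip(origin, d))  # o . d
    c = sum(o * o for o in origin) - radius * radius  # |o|^2 - r^2, <= 0 inside
    if c > 0:
        raise ValueError("ray origin must lie inside the sphere")
    t = -b + math.sqrt(b * b - c)  # b*b - c >= 0 whenever c <= 0
    return tuple(o + t * k for o, k in zip(origin, d))


if __name__ == "__main__":
    # A camera near the centre looking along +x, background sphere of radius 32.
    print(sphere_exit((0.5, 0.0, 0.0), (1.0, 0.0, 0.0), 32.0))
```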

python main_nerf.py data/firekeeper --workspace trial_nerf -O --bg_radius 32

# start a GUI for NeRF training & visualization

# always use with `--fp16 --cuda_ray` for an acceptable framerate!

python main_nerf.py data/fox --workspace trial_nerf -O --gui

# test mode for GUI

python main_nerf.py data/fox --workspace trial_nerf -O --gui --test

# for the blender dataset, you should add `--bound 1.0 --scale 0.8 --dt_gamma 0`

# --bound means the scene is assumed to be inside the box [-bound, bound]

# --scale adjusts the camera location to make sure it falls inside the above bounding box.

# --dt_gamma controls the adaptive ray marching speed; setting it to 0 turns it off.
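To check a choice of `--scale` against `--bound`, and to see how `--dt_gamma` changes the number of samples per ray, the hypothetical helpers below may help. Both are sketches under stated assumptions: `scale` is taken to multiply the camera positions stored in the usual NeRF `transforms.json` layout (any offset is ignored), and the adaptive step is modelled as `dt = clamp(t * dt_gamma, dt_min, dt_max)`, an instant-ngp-style rule whose constants here are made up for illustration.

```python
import json


def camera_extent(transforms_path, scale):
    """Largest |coordinate| of any camera centre after multiplying by `scale`.

    Assumes frames[i]["transform_matrix"] is a 4x4 camera-to-world matrix
    whose last column holds the camera position. Compare the result with --bound.
    """
    with open(transforms_path) as f:
        meta = json.load(f)
    extent = 0.0
    for frame in meta["frames"]:
        m = frame["transform_matrix"]
        position = (m[0][3], m[1][3], m[2][3])
        extent = max(extent, max(abs(p) * scale for p in position))
    return extent


def count_samples(near, far, dt_gamma, dt_min=0.01, dt_max=0.5):
    """Number of ray-marching steps between near and far.

    Hypothetical rule: dt = clamp(t * dt_gamma, dt_min, dt_max). With
    dt_gamma = 0 every step is dt_min, i.e. adaptive stepping is off.
    """
    t, steps = near, 0
    while t < far:
        t += min(max(t * dt_gamma, dt_min), dt_max)
        steps += 1
    return steps


if __name__ == "__main__":
    # Uniform vs adaptive stepping over the same segment (made-up constants).
    print(count_samples(0.0, 4.0, dt_gamma=0.0), count_samples(0.0, 4.0, dt_gamma=0.02))
```

Under this model, a positive `dt_gamma` takes fewer, longer steps far from the camera, which is faster but samples distant regions more coarsely; `--dt_gamma 0` keeps the step uniform.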

python main_nerf.py data/nerf_synthetic/lego --workspace trial_nerf -O --bound 1.0 --scale 0.8 --dt_gamma 0

python main_nerf.py data/nerf_synthetic/lego --workspace trial_nerf -O --bound 1.0 --scale 0.8 --dt_gamma 0 --gui

# for the LLFF dataset, you should first convert it to nerf-compatible format:

python scripts/llff2nerf.py data/nerf_llff_data/fern # by default it uses full-resolution images and writes `transforms.json` to the folder

python scripts/llff2nerf.py data/nerf_llff_data/fern --images images_4 --downscale 4 # if you prefer to use the low-resolution images
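When images are downscaled by a factor k, as with `--downscale 4`, the pinhole intrinsics must shrink by the same factor, or the cameras no longer match the pixels. A small sketch of that bookkeeping, assuming intrinsics stored under instant-ngp-style keys `fl_x`, `fl_y`, `cx`, `cy`, `w`, `h` (whether the conversion scripts use exactly these keys is an assumption):

```python
import math


def downscale_intrinsics(intrinsics, factor):
    """Return pinhole intrinsics for images shrunk by an integer `factor`.

    Focal lengths and principal point scale by 1/factor (ignoring the
    half-pixel offset some conventions apply); width/height become integers.
    """
    out = dict(intrinsics)
    for key in ("fl_x", "fl_y", "cx", "cy"):
        out[key] = intrinsics[key] / factor
    out["w"] = intrinsics["w"] // factor
    out["h"] = intrinsics["h"] // factor
    return out


def horizontal_fov(intrinsics):
    """Horizontal field of view in radians; unchanged by consistent downscaling."""
    return 2 * math.atan(intrinsics["w"] / (2 * intrinsics["fl_x"]))


if __name__ == "__main__":
    # Made-up full-resolution intrinsics, for illustration only.
    full = {"fl_x": 3260.0, "fl_y": 3260.0, "cx": 2016.0, "cy": 1512.0, "w": 4032, "h": 3024}
    small = downscale_intrinsics(full, 4)
    print(small)
    print(horizontal_fov(full), horizontal_fov(small))
```

The field of view staying constant is a quick sanity check that a converted `transforms.json` describes the same cameras at a lower resolution.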

# then you can train as a colmap dataset (you'll need to tune the scale & bound if necessary):

python main_nerf.py data/nerf_llff_data/fern --workspace trial_nerf -O

python main_nerf.py data/nerf_llff_data/fern --workspace trial_nerf -O --gui

# for the Tanks&Temples dataset, you should first convert it to nerf-compatible format:

python scripts/tanks2nerf.py data/TanksAndTemple/Family # write `transforms_{split}.json` for [train, val, test]

# then you can train as a blender dataset (you'll need to tune the scale & bound if necessary)

python main_nerf.py data/TanksAndTemple/Family --workspace trial_nerf_family -O --bound 1.0 --scale 0.33 --dt_gamma 0

python main_nerf.py data/TanksAndTemple/Family --workspace trial_nerf_family -O --bound 1.0 --scale 0.33 --dt_gamma 0 --gui

# for a custom dataset, you should:

# 1. take a video / many photos from different views

# 2. put the video under a path like ./data/custom/video.mp4 or the images under ./data/custom/images/*.jpg.

# 3. run the preprocessing script (install ffmpeg and colmap first! refer to the file for more options):

python scripts/colmap2nerf.py --video ./data/custom/video.mp4 --run_colmap # if using a video

python scripts/colmap2nerf.py --images ./data/custom/images/ --run_colmap # if using images

python scripts/colmap2nerf.py --video ./data/custom/video.mp4 --run_colmap --dynamic # if the scene is dynamic (for D-NeRF settings), add the time for each frame.

# 4. it should create transforms.json, and you can train with: (you'll need to try different scale, bound and dt_gamma values so that the object is correctly located in the bounding box and renders smoothly.)

python main_nerf.py data/custom --workspace trial_nerf_custom -O --gui --scale 2.0 --bound 1.0 --dt_gamma 0.02

### Instant-ngp SDF

python main_sdf.py data/armadillo.obj --workspace trial_sdf

python main_sdf.py data/armadillo.obj --workspace trial_sdf --fp16

python main_sdf.py data/armadillo.obj --workspace trial_sdf --fp16 --ff

python main_sdf.py data/armadillo.obj --workspace trial_sdf --fp16 --tcnn

python main_sdf.py data/armadillo.obj --workspace trial_sdf --fp16 --test

### TensoRF

# almost the same as Instant-ngp NeRF, just replace the main script.

python main_tensoRF.py data/fox --workspace trial_tensoRF -O

python main_tensoRF.py data/nerf_synthetic/lego --workspace trial_tensoRF -O --bound 1.0 --scale 0.8 --dt_gamma 0

### CCNeRF

# training on single objects, turn on --error_map for better quality.

python main_CCNeRF.py data/nerf_synthetic/chair --workspace trial_cc_chair -O --bound 1.0 --scale 0.67 --dt_gamma 0 --error_map

python main_CCNeRF.py data/nerf_synthetic/ficus --workspace trial_cc_ficus -O --bound 1.0 --scale 0.67 --dt_gamma 0 --error_map

python main_CCNeRF.py data/nerf_synthetic/hotdog --workspace trial_cc_hotdog -O --bound 1.0 --scale 0.67 --dt_gamma 0 --error_map

# compose, use a larger bound and more samples per ray for better quality.

python main_CCNeRF.py data/nerf_synthetic/hotdog --workspace trial_cc_hotdog -O --bound 2.0 --scale 0.67 --dt_gamma 0 --max_steps 2048 --test --compose
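Composing several single-object fields into one scene needs a rule for combining them at each sample point. A common rule, sketched below and not necessarily the one CCNeRF implements, adds the densities and averages the colours weighted by density. Each object is queried in its own frame after subtracting a hypothetical placement offset; objects placed side by side then extend past the single-object box, which illustrates why the composed scene may need a larger `--bound`.

```python
def compose_fields(samples):
    """Combine per-object (density, rgb) samples taken at the same point.

    Densities add; the colour is the density-weighted average of the objects' colours.
    """
    total = sum(sigma for sigma, _ in samples)
    if total == 0:
        return 0.0, (0.0, 0.0, 0.0)
    rgb = tuple(
        sum(sigma * color[ch] for sigma, color in samples) / total for ch in range(3)
    )
    return total, rgb


def compose_scene(point, objects):
    """Query several objects at a world-space point.

    objects: list of (field, offset) pairs; field(p) -> (density, rgb) is evaluated
    in the object's own frame, i.e. at point - offset (offset is a hypothetical placement).
    """
    samples = []
    for field, offset in objects:
        local = tuple(p - o for p, o in zip(point, offset))
        samples.append(field(local))
    return compose_fields(samples)


def toy_ball(color, radius=0.5):
    """Hypothetical stand-in for a trained object: a ball of constant colour."""
    def field(p):
        inside = sum(c * c for c in p) <= radius * radius
        return (1.0, color) if inside else (0.0, (0.0, 0.0, 0.0))
    return field


if __name__ == "__main__":
    # Two balls at x = -1 and x = +1 reach |x| = 1.5, outside a [-1, 1] box.
    scene = [(toy_ball((1.0, 0.0, 0.0)), (-1.0, 0.0, 0.0)),
             (toy_ball((0.0, 0.0, 1.0)), (1.0, 0.0, 0.0))]
    print(compose_scene((-1.0, 0.0, 0.0), scene), compose_scene((1.0, 0.0, 0.0), scene))
```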

# compose + gui, only about 1 FPS without dynamic resolution... just for quick verification of composition results.

python main_CCNeRF.py data/nerf_synthetic/hotdog --workspace trial_cc_hotdog -O --bound 2.0 --scale 0.67 --dt_gamma 0 --test --compose --gui

### D-NeRF

# almost the same as Instant-ngp NeRF, just replace the main script.

# use deformation to model dynamic scene

python main_dnerf.py data/dnerf/jumpingjacks --workspace trial_dnerf_jumpingjacks -O --bound 1.0 --scale 0.8 --dt_gamma 0

python main_dnerf.py data/dnerf/jumpingjacks --workspace trial_dnerf_jumpingjacks -O --bound 1.0 --scale 0.8 --dt_gamma 0 --gui
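In the deformation approach, each sample point observed at time t is mapped into a shared canonical scene by a predicted offset, and the canonical field is queried there; in the D-NeRF paper the offset is defined to be zero at t = 0. The sketch below shows only that control flow, with a hand-written toy deformation and toy canonical field standing in for the networks (both are hypothetical).

```python
def toy_deformation(x, t):
    """Hypothetical offset field: the object moves along +x at 0.5 units per unit time,
    so points observed at time t are pulled back along -x (zero offset at t = 0)."""
    return (-0.5 * t, 0.0, 0.0)


def toy_canonical_density(x):
    """Hypothetical canonical scene: a unit ball at the origin."""
    return 1.0 if sum(c * c for c in x) <= 1.0 else 0.0


def dynamic_density(x, t, deformation=toy_deformation, canonical=toy_canonical_density):
    """Density of a dynamic scene: warp x at time t into canonical space, then query."""
    dx = deformation(x, t)
    x_canonical = tuple(a + b for a, b in zip(x, dx))
    return canonical(x_canonical)


if __name__ == "__main__":
    for t in (0.0, 1.0, 2.0):
        print(t, dynamic_density((1.5, 0.0, 0.0), t))
```

Only the small deformation function depends on time, so the canonical scene is shared across all frames.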

# use temporal basis to model dynamic scene

python main_dnerf.py data/dnerf/jumpingjacks --workspace trial_dnerf_basis_jumpingjacks -O --bound 1.0 --scale 0.8 --dt_gamma 0 --basis

python main_dnerf.py data/dnerf/jumpingjacks --workspace trial_dnerf_basis_jumpingjacks -O --bound 1.0 --scale 0.8 --dt_gamma 0 --basis --gui

# for the hypernerf dataset, first convert it into nerf-compatible format:

python scripts/hyper2nerf.py data/split-cookie --downscale 2 # will generate transforms*.json

python main_dnerf.py data/split-cookie/ --workspace trial_dnerf_cookies -O --bound 1 --scale 0.3 --dt_gamma 0

### Datasets

nerf_synthetic: https://drive.google.com/drive/folders/128yBriW1IG_3NJ5Rp7APSTZsJqdJdfc1

Tanks&Temples: https://dl.fbaipublicfiles.com/nsvf/dataset/TanksAndTemple.zip

LLFF: https://github.com/ashawkey/torch-ngp/blob/main/scripts/llff2nerf.py

Mip-NeRF 360: http://storage.googleapis.com/gresearch/refraw360/360_v2.zip

(dynamic) D-NeRF: https://www.dropbox.com/s/0bf6fl0ye2vz3vr/data.zip?dl=0

(dynamic) Hyper-NeRF: https://github.com/google/hypernerf/releases/tag/v0.1