- Starting an Ollama model on a machine with a GPU is straightforward:

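Note: by default Ollama listens on 127.0.0.1:11434, which is reachable only from the local machine. Set the OLLAMA_HOST environment variable to change the listen address.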

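`# Listen on all interfaces so that other machines can connect
export OLLAMA_HOST="0.0.0.0:11434"
# Start the server in the background
ollama serve &
# Download the model if needed and open an interactive session
ollama run qwen2.5:7b-instruct`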
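To confirm that the server is reachable on the new address, a client can ask it which models it has. The following is a minimal sketch, assuming Ollama's `GET /api/tags` endpoint and the default port 11434; the helper names `base_url` and `list_models` are made up for this illustration.

```python
import json
import urllib.request


def base_url(host: str, port: int = 11434) -> str:
    """Build the HTTP base URL of an Ollama server (hypothetical helper)."""
    return f"http://{host}:{port}"


def list_models(host: str, port: int = 11434, timeout: float = 5.0) -> list[str]:
    """Return the model names the server reports via GET /api/tags."""
    url = f"{base_url(host, port)}/api/tags"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        payload = json.load(resp)
    # The response is expected to look like {"models": [{"name": ...}, ...]}
    return [m["name"] for m in payload.get("models", [])]
```

Calling `list_models("172.2.3.4")` from another machine should return a list that includes `qwen2.5:7b-instruct` once the model has been pulled. A connection error usually means the server is still bound to 127.0.0.1 or a firewall blocks the port.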

- The model can then be accessed from a remote machine:

`curl http://172.2.3.4:11434/api/generate -d '{"model": "qwen2.5:7b-instruct","prompt": "what can you do?","stream":false}'

curl http://172.2.3.4:11434/api/generate -d '{
  "model": "qwen2.5:7b-instruct",
  "prompt": "Why is the sky blue?",
  "stream": false,
  "options": {
    "num_keep": 5,
    "seed": 42,
    "num_predict": 100,
    "top_k": 20,
    "top_p": 0.9,
    "tfs_z": 0.5,
    "typical_p": 0.7,
    "repeat_last_n": 33,
    "temperature": 0.8,
    "repeat_penalty": 1.2,
    "presence_penalty": 1.5,
    "frequency_penalty": 1.0,
    "mirostat": 1,
    "mirostat_tau": 0.8,
    "mirostat_eta": 0.6,
    "penalize_newline": true,
    "stop": ["\n", "user:"],
    "numa": false,
    "num_ctx": 1024,
    "num_batch": 2,
    "num_gqa": 1,
    "num_gpu": 1,
    "main_gpu": 0,
    "low_vram": false,
    "f16_kv": true,
    "vocab_only": false,
    "use_mmap": true,
    "use_mlock": false,
    "rope_frequency_base": 1.1,
    "rope_frequency_scale": 0.8,
    "num_thread": 8
  }
}'`
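The same request can be sent from Python with only the standard library. This is a minimal sketch that mirrors the curl call above; the function names `build_generate_request` and `generate` are hypothetical, and it assumes the non-streaming response carries the generated text in a `response` field.

```python
import json
import urllib.request


def build_generate_request(base_url: str, model: str, prompt: str,
                           **options) -> urllib.request.Request:
    """Prepare a non-streaming POST to /api/generate (hypothetical helper)."""
    body = {"model": model, "prompt": prompt, "stream": False}
    if options:
        # Generation settings such as temperature or seed go under "options"
        body["options"] = options
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(base_url: str, model: str, prompt: str,
             timeout: float = 120.0, **options) -> str:
    """Send the request and return the generated text."""
    req = build_generate_request(base_url, model, prompt, **options)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)["response"]
```

For example, `generate("http://172.2.3.4:11434", "qwen2.5:7b-instruct", "Why is the sky blue?", temperature=0.8, seed=42)` sends two of the options from the curl example. Because the body is produced by `json.dumps`, quoting and escaping are handled by the encoder rather than by the shell.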

- Access through the OpenAI-compatible API, using the openai Python package:

`from openai import OpenAI

# Point the client at Ollama's OpenAI-compatible endpoint
client = OpenAI(
    base_url='http://172.2.3.4:11434/v1/',
    api_key='ollama',  # required but ignored
)

chat_completion = client.chat.completions.create(
    messages=[
        {
            "role": "system",
            # English gloss: "You are an assistant that understands Chinese and English
            # instructions. Generate suitable classification or generation tasks for the
            # user, judge their type accurately, and keep answers concise and accurate."
            "content": "你是一个能够理解中英文指令并帮助完成任务的智能助手。你的任务是根据用户的需求生成合适的分类任务或生成任务,并准确判断这些任务的类型。请确保你的回答简洁、准确且符合中英文语境。",
        },
        {
            "role": "user",
            "content": "Come up with a series of tasks:1. Link all the entities in the sentence (highlighted in brackets) to a Wikipedia page.For each entity, you should output the Wikipedia page title, or output None if you know.",
        },
    ],
    # Any model pulled on the server can be used here, e.g. 'llama3.2:1b'
    model='qwen2.5:7b',
    max_tokens=38192,
    temperature=0.7,
    top_p=0.5,
    frequency_penalty=0,
    presence_penalty=2,
)

print(chat_completion)`

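This may fail with `ImportError: cannot import name 'OpenAI' from 'openai'`. I first suspected an old Python version: the openai package in my Python 3.10 environment did not work, while a Python 3.12 environment did. The Python version itself is probably not the cause, though. The `OpenAI` client class only exists in version 1.0.0 and later of the openai package, so the 3.10 environment most likely had an older release installed, and `pip install --upgrade openai` should fix the import.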
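A quick way to tell which case applies is to check the installed package version before importing the client. The sketch below uses only the standard library; `major_version` and `openai_client_available` are hypothetical helper names, and the threshold relies on the `OpenAI` class having been introduced in openai 1.0.0.

```python
from importlib.metadata import PackageNotFoundError, version


def major_version(version_string: str) -> int:
    """Extract the major component from a version such as '1.54.3'."""
    return int(version_string.split(".")[0])


def openai_client_available() -> bool:
    """Return True if the installed openai package should provide the OpenAI class."""
    try:
        return major_version(version("openai")) >= 1
    except PackageNotFoundError:
        # The package is not installed in this environment at all
        return False
```

If `openai_client_available()` returns `False`, upgrading or installing the package in that specific environment is the first thing to try.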

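- When content is set to "Brainstorm a list of possible New Year's resolutions.", the Ollama server returns a 400 error, and I do not know why. A 400 error usually means the client sent a request the server cannot process. For an LLM API that typically means the request is malformed, a required parameter is missing, or a parameter value is invalid. My request format looks fine, though: I only changed the string inside content, and the 400 appears whenever that string contains a single quote.

I will update this post once I have figured it out.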

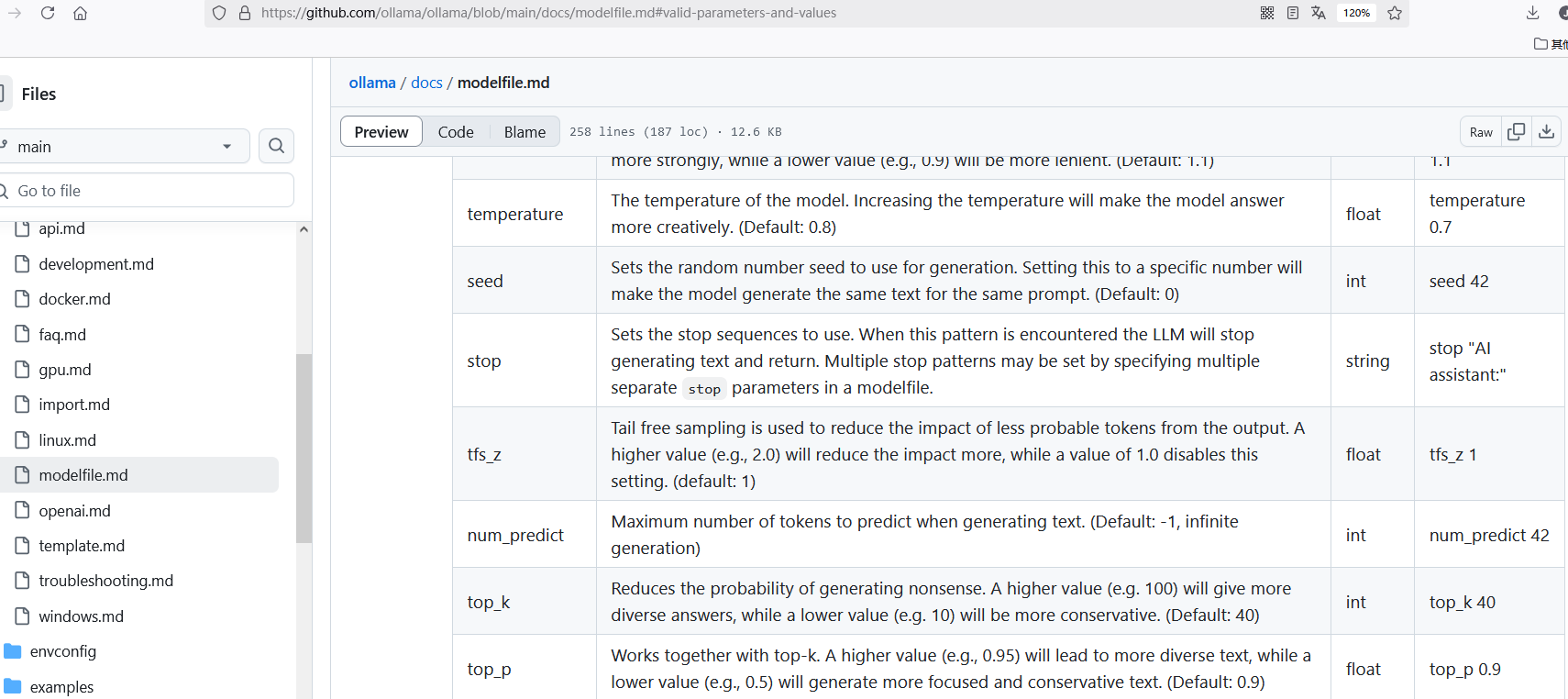
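One possible explanation, which the post does not confirm: if the request body was written by hand inside a single-quoted shell string, as in the curl examples earlier, an apostrophe such as the one in "Year's" ends the quoted string early, and the shell no longer passes the intended JSON to curl. Building the body with a JSON encoder sidesteps the problem. The sketch below only constructs the payload; `chat_body` is a hypothetical helper, and the message layout assumes the usual chat format with `role` and `content` fields.

```python
import json


def chat_body(model: str, content: str) -> bytes:
    """Encode a single-turn chat request as UTF-8 JSON (hypothetical helper)."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": content}],
        "stream": False,
    }
    # json.dumps escapes whatever needs escaping; an apostrophe needs none in JSON
    return json.dumps(body).encode("utf-8")
```

The resulting bytes can be sent as the request body from Python, or written to a file and passed to curl with `-d @file`, so that the shell never has to interpret the quotes.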

Other parameters, such as temperature, can also be set when calling the API. See the following pages for details:

ollama/docs/api.md at main · ollama/ollama · GitHub

ollama/docs/modelfile.md at main · ollama/ollama · GitHub

The meaning of the commonly used parameters, briefly excerpted:

I go by janeysj on all platforms.