摘要

使用rust实现了一个完整的直接法视觉里程计系统,能够通过比较两幅图像中的像素强度来估计相机的运动。它通过单层和多层的优化策略,结合图像金字塔和并行计算,提高了位姿估计的精度和效率。最终,代码输出了优化后的相机位姿变换矩阵,并可视化了投影点的位置。

This is a complete direct method visual odometry system implemented in Rust, which estimates camera motion by comparing pixel intensities between two images. It employs both single-layer and multi-layer optimization strategies, combined with image pyramids and parallel computing, to enhance the accuracy and efficiency of pose estimation. Finally, the code outputs the optimized camera pose transformation matrix and visualizes the positions of the projected points.

关键词

rust;slam;visual odometry;

关键信息

项目地址:[https://github.com/ByeIO/slambook2.rs]

[package]

name = "exp65-rust-ziglang-slambook2"

version = "0.1.0"

edition = "2021"[dependencies]

env_logger = { version = "0.11.6", default-features = false, features = ["auto-color","humantime",

] }# 随机数

rand = "0.8.5"

rand_distr = "0.4.3"

fastrand = "2.3.0"# 线性代数

nalgebra = { version = "0.33.2",features = ["rand"]}

ndarray = "0.16.1"# winit

wgpu = "23.0.1"

winit = "0.30.8"# egui

eframe = "0.30.0"

egui = { version = "0.30.0", features = ["default"

]}

egui_extras = {version = "0.30.0",features = ["default", "image"]}# three_d

three-d = {path = "./static/three-d" , features=["egui-gui"] }

three-d-asset = {version = "0.9",features = ["hdr", "http"] }# sophus

sophus = { version = "0.11.0" }

sophus_autodiff = { version = "0.11.0" }

sophus_geo = "0.11.0"

sophus_image = "0.11.0"

sophus_lie = "0.11.0"

sophus_opt = "0.11.0"

sophus_renderer = "0.11.0"

sophus_sensor = "0.11.0"

sophus_sim = "0.11.0"

sophus_spline = "0.11.0"

sophus_tensor = "0.11.0"

sophus_timeseries = "0.11.0"

sophus_viewer = "0.11.0"

tokio = "1.43.0"

approx = "0.5.1"

bytemuck = "1.21.0"

thingbuf = "0.1.6"# rust-cv计算机视觉

cv = { version = "0.6.0" , features = ["default"] }

cv-core = "0.15.0"

cv-geom = "0.7.0"

cv-pinhole = "0.6.0"

akaze = "0.7.0"

eight-point = "0.8.0"

lambda-twist = "0.7.0"

image = "0.25.5"

imageproc = "0.25.0"# 最小二乘优化

gomez = "0.5.0"# 图优化

factrs = "0.2.0"# ORB角点检测

bye_orb_rs = { path = "./static/bye_orb_rs" }# 依赖覆盖

[patch.crates-io]

pulp = { path = "./static/pulp" }

原理简介

视觉里程计简介

视觉里程计(Visual Odometry, VO)是一种通过分析图像序列来估计相机运动的技术。主要有直接法和光流法。

1. 直接法

直接法通过最小化图像间的光度误差来估计相机运动。假设在图像序列中,像素的光度值在相邻帧之间保持不变,即:

其中,\(I_1\) 和 \(I_2\) 分别是第一帧和第二帧的图像,\(\mathbf{x}\) 是像素坐标,\(\mathbf{d}\) 是像素的位移。

为了估计相机的位姿变化,我们需要最小化以下光度误差:

通过对误差函数 \(E(\mathbf{d})\) 进行优化,可以得到像素的位移 \(\mathbf{d}\),进而推导出相机的运动。

2. 光流法

光流法通过估计图像序列中像素的运动矢量场来推断相机运动。光流法假设在短时间内,图像中物体的运动是平滑的。光流约束方程为:

其中,\((u, v)\) 是光流矢量,表示像素在图像平面上的位移。

为了求解光流矢量,通常使用泰勒展开对上述方程进行线性化,得到:

这个方程称为光流约束方程。通过对多个像素点的光流约束方程进行求解,可以得到整个图像的光流场。

这两种方法各有优缺点,直接法利用了图像的全部像素信息,适用于纹理丰富的场景;而光流法适用于实时应用,计算相对简单。

雅可比矩阵简介

雅可比矩阵(Jacobian Matrix)是一个重要的数学工具,用于描述多变量函数的偏导数。它在许多领域中都有应用,包括优化、控制理论和计算机视觉等。

1. 雅可比矩阵的定义

对于一个向量值函数 \(\mathbf{f} : \mathbb{R}^n \to \mathbb{R}^m\),其雅可比矩阵是一个 \(m \times n\) 的矩阵,矩阵中的每个元素是函数 \(\mathbf{f}\) 的分量对输入变量的偏导数。具体来说,雅可比矩阵 \(J\) 的元素 \(J_{ij}\) 是函数 \(\mathbf{f}_i\) 对变量 \(x_j\) 的偏导数:

因此,雅可比矩阵可以表示为:

2. 应用

-

优化问题:在优化问题中,雅可比矩阵用于描述目标函数的梯度信息,帮助优化算法找到函数的极值点。

-

非线性方程组求解:在求解非线性方程组时,雅可比矩阵用于线性化方程组,从而应用迭代方法(如牛顿法)进行求解。

-

计算机视觉:在计算机视觉中,雅可比矩阵用于描述图像坐标变化对相机参数变化的敏感性,常用于视觉里程计和三维重建等应用。

雅可比矩阵是一个强大的工具,能够有效地描述多变量函数的局部线性近似,从而在许多实际问题中发挥重要作用。

图像金字塔简介

图像金字塔是一种多分辨率图像表示方法,通过将图像逐层缩小,形成一系列不同分辨率的图像。图像金字塔在计算机视觉中有广泛的应用,尤其是在图像处理、特征检测和匹配等领域。

1. 图像金字塔的类型

-

高斯金字塔(Gaussian Pyramid):

- 通过对图像进行高斯模糊,然后下采样(通常是缩小一半)来构建。

- 每一层都是上一层的低通滤波版本,主要用于图像平滑和降噪。

-

拉普拉斯金字塔(Laplacian Pyramid):

- 由高斯金字塔构建,通过从每一层的高斯金字塔中减去其下一层的上采样版本得到。

- 用于图像的边缘检测和增强。

2. 图像金字塔的构建

在代码中,图像金字塔的构建过程如下:

// create pyramids

vector<cv::Mat> pyr1, pyr2; // image pyramids

for (int i = 0; i < pyramids; i++) {if (i == 0) {pyr1.push_back(img1);pyr2.push_back(img2);} else {cv::Mat img1_pyr, img2_pyr;cv::resize(pyr1[i - 1], img1_pyr,cv::Size(pyr1[i - 1].cols * pyramid_scale, pyr1[i - 1].rows * pyramid_scale));cv::resize(pyr2[i - 1], img2_pyr,cv::Size(pyr2[i - 1].cols * pyramid_scale, pyr2[i - 1].rows * pyramid_scale));pyr1.push_back(img1_pyr);pyr2.push_back(img2_pyr);}

}

在这个过程中,图像被逐层缩小,形成一个金字塔结构。每一层的图像分辨率是上一层的 pyramid_scale 倍(通常是0.5),即每层的宽度和高度都是上一层的一半。

3. 应用

- 特征检测和匹配:在不同分辨率下检测特征,可以提高特征匹配的鲁棒性。

- 图像融合:在多分辨率下进行图像融合,可以减少融合过程中的伪影。

- 图像压缩:通过金字塔结构,可以实现图像的渐进式传输和压缩。

图像金字塔通过提供多分辨率的图像表示,能够有效地处理不同尺度下的图像信息,是计算机视觉中一个重要的工具。

图像双线性插值简介

双线性插值是一种用于图像处理的插值方法,用于在图像缩放、旋转或变形时计算非整数像素位置的像素值。它通过对周围的四个像素进行加权平均来估计目标像素的值。

假设我们有一个图像,目标是计算位置 \((x, y)\) 的像素值,其中 \(x\) 和 \(y\) 不是整数。双线性插值的步骤如下:

-

确定邻近的四个像素:

- 找到包含 \((x, y)\) 的四个最近的整数像素点:\((x_1, y_1)\)、\((x_1+1, y_1)\)、\((x_1, y_1+1)\)、\((x_1+1, y_1+1)\)。

-

计算水平插值:

- 对 \(x\) 方向进行插值,得到两个中间值:\[f(x, y_1) = (x_1+1 - x) \cdot f(x_1, y_1) + (x - x_1) \cdot f(x_1+1, y_1) \]\[f(x, y_1+1) = (x_1+1 - x) \cdot f(x_1, y_1+1) + (x - x_1) \cdot f(x_1+1, y_1+1) \]

- 对 \(x\) 方向进行插值,得到两个中间值:

-

计算垂直插值:

- 对 \(y\) 方向进行插值,得到最终的插值值:\[f(x, y) = (y_1+1 - y) \cdot f(x, y_1) + (y - y_1) \cdot f(x, y_1+1) \]

- 对 \(y\) 方向进行插值,得到最终的插值值:

实现

- 原始cpp代码[https://github.com/gaoxiang12/slambook2/blob/master/ch8/direct_method.cpp]

#include <opencv2/opencv.hpp>

#include <sophus/se3.hpp>

#include <boost/format.hpp>

#include <pangolin/pangolin.h>using namespace std;typedef vector<Eigen::Vector2d, Eigen::aligned_allocator<Eigen::Vector2d>> VecVector2d;// Camera intrinsics

double fx = 718.856, fy = 718.856, cx = 607.1928, cy = 185.2157;

// baseline

double baseline = 0.573;

// paths

string left_file = "./left.png";

string disparity_file = "./disparity.png";

boost::format fmt_others("./%06d.png"); // other files// useful typedefs

typedef Eigen::Matrix<double, 6, 6> Matrix6d;

typedef Eigen::Matrix<double, 2, 6> Matrix26d;

typedef Eigen::Matrix<double, 6, 1> Vector6d;/// class for accumulator jacobians in parallel

class JacobianAccumulator {

public:JacobianAccumulator(const cv::Mat &img1_,const cv::Mat &img2_,const VecVector2d &px_ref_,const vector<double> depth_ref_,Sophus::SE3d &T21_) :img1(img1_), img2(img2_), px_ref(px_ref_), depth_ref(depth_ref_), T21(T21_) {projection = VecVector2d(px_ref.size(), Eigen::Vector2d(0, 0));}/// accumulate jacobians in a rangevoid accumulate_jacobian(const cv::Range &range);/// get hessian matrixMatrix6d hessian() const { return H; }/// get biasVector6d bias() const { return b; }/// get total costdouble cost_func() const { return cost; }/// get projected pointsVecVector2d projected_points() const { return projection; }/// reset h, b, cost to zerovoid reset() {H = Matrix6d::Zero();b = Vector6d::Zero();cost = 0;}private:const cv::Mat &img1;const cv::Mat &img2;const VecVector2d &px_ref;const vector<double> depth_ref;Sophus::SE3d &T21;VecVector2d projection; // projected pointsstd::mutex hessian_mutex;Matrix6d H = Matrix6d::Zero();Vector6d b = Vector6d::Zero();double cost = 0;

};/*** pose estimation using direct method* @param img1* @param img2* @param px_ref* @param depth_ref* @param T21*/

void DirectPoseEstimationMultiLayer(const cv::Mat &img1,const cv::Mat &img2,const VecVector2d &px_ref,const vector<double> depth_ref,Sophus::SE3d &T21

);/*** pose estimation using direct method* @param img1* @param img2* @param px_ref* @param depth_ref* @param T21*/

void DirectPoseEstimationSingleLayer(const cv::Mat &img1,const cv::Mat &img2,const VecVector2d &px_ref,const vector<double> depth_ref,Sophus::SE3d &T21

);// bilinear interpolation

inline float GetPixelValue(const cv::Mat &img, float x, float y) {// boundary checkif (x < 0) x = 0;if (y < 0) y = 0;if (x >= img.cols) x = img.cols - 1;if (y >= img.rows) y = img.rows - 1;uchar *data = &img.data[int(y) * img.step + int(x)];float xx = x - floor(x);float yy = y - floor(y);return float((1 - xx) * (1 - yy) * data[0] +xx * (1 - yy) * data[1] +(1 - xx) * yy * data[img.step] +xx * yy * data[img.step + 1]);

}int main(int argc, char **argv) {cv::Mat left_img = cv::imread(left_file, 0);cv::Mat disparity_img = cv::imread(disparity_file, 0);// let's randomly pick pixels in the first image and generate some 3d points in the first image's framecv::RNG rng;int nPoints = 2000;int boarder = 20;VecVector2d pixels_ref;vector<double> depth_ref;// generate pixels in ref and load depth datafor (int i = 0; i < nPoints; i++) {int x = rng.uniform(boarder, left_img.cols - boarder); // don't pick pixels close to boarderint y = rng.uniform(boarder, left_img.rows - boarder); // don't pick pixels close to boarderint disparity = disparity_img.at<uchar>(y, x);double depth = fx * baseline / disparity; // you know this is disparity to depthdepth_ref.push_back(depth);pixels_ref.push_back(Eigen::Vector2d(x, y));}// estimates 01~05.png's pose using this informationSophus::SE3d T_cur_ref;for (int i = 1; i < 6; i++) { // 1~10cv::Mat img = cv::imread((fmt_others % i).str(), 0);// try single layer by uncomment this line// DirectPoseEstimationSingleLayer(left_img, img, pixels_ref, depth_ref, T_cur_ref);DirectPoseEstimationMultiLayer(left_img, img, pixels_ref, depth_ref, T_cur_ref);}return 0;

}void DirectPoseEstimationSingleLayer(const cv::Mat &img1,const cv::Mat &img2,const VecVector2d &px_ref,const vector<double> depth_ref,Sophus::SE3d &T21) {const int iterations = 10;double cost = 0, lastCost = 0;auto t1 = chrono::steady_clock::now();JacobianAccumulator jaco_accu(img1, img2, px_ref, depth_ref, T21);for (int iter = 0; iter < iterations; iter++) {jaco_accu.reset();cv::parallel_for_(cv::Range(0, px_ref.size()),std::bind(&JacobianAccumulator::accumulate_jacobian, &jaco_accu, std::placeholders::_1));Matrix6d H = jaco_accu.hessian();Vector6d b = jaco_accu.bias();// solve update and put it into estimationVector6d update = H.ldlt().solve(b);;T21 = Sophus::SE3d::exp(update) * T21;cost = jaco_accu.cost_func();if (std::isnan(update[0])) {// sometimes occurred when we have a black or white patch and H is irreversiblecout << "update is nan" << endl;break;}if (iter > 0 && cost > lastCost) {cout << "cost increased: " << cost << ", " << lastCost << endl;break;}if (update.norm() < 1e-3) {// convergebreak;}lastCost = cost;cout << "iteration: " << iter << ", cost: " << cost << endl;}cout << "T21 = \n" << T21.matrix() << endl;auto t2 = chrono::steady_clock::now();auto time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);cout << "direct method for single layer: " << time_used.count() << endl;// plot the projected pixels herecv::Mat img2_show;cv::cvtColor(img2, img2_show, CV_GRAY2BGR);VecVector2d projection = jaco_accu.projected_points();for (size_t i = 0; i < px_ref.size(); ++i) {auto p_ref = px_ref[i];auto p_cur = projection[i];if (p_cur[0] > 0 && p_cur[1] > 0) {cv::circle(img2_show, cv::Point2f(p_cur[0], p_cur[1]), 2, cv::Scalar(0, 250, 0), 2);cv::line(img2_show, cv::Point2f(p_ref[0], p_ref[1]), cv::Point2f(p_cur[0], p_cur[1]),cv::Scalar(0, 250, 0));}}cv::imshow("current", img2_show);cv::waitKey();

}void JacobianAccumulator::accumulate_jacobian(const cv::Range &range) {// parametersconst int half_patch_size = 1;int cnt_good = 0;Matrix6d hessian = Matrix6d::Zero();Vector6d bias = Vector6d::Zero();double cost_tmp = 0;for (size_t i = range.start; i < range.end; i++) {// compute the projection in the second imageEigen::Vector3d point_ref =depth_ref[i] * Eigen::Vector3d((px_ref[i][0] - cx) / fx, (px_ref[i][1] - cy) / fy, 1);Eigen::Vector3d point_cur = T21 * point_ref;if (point_cur[2] < 0) // depth invalidcontinue;float u = fx * point_cur[0] / point_cur[2] + cx, v = fy * point_cur[1] / point_cur[2] + cy;if (u < half_patch_size || u > img2.cols - half_patch_size || v < half_patch_size ||v > img2.rows - half_patch_size)continue;projection[i] = Eigen::Vector2d(u, v);double X = point_cur[0], Y = point_cur[1], Z = point_cur[2],Z2 = Z * Z, Z_inv = 1.0 / Z, Z2_inv = Z_inv * Z_inv;cnt_good++;// and compute error and jacobianfor (int x = -half_patch_size; x <= half_patch_size; x++)for (int y = -half_patch_size; y <= half_patch_size; y++) {double error = GetPixelValue(img1, px_ref[i][0] + x, px_ref[i][1] + y) -GetPixelValue(img2, u + x, v + y);Matrix26d J_pixel_xi;Eigen::Vector2d J_img_pixel;J_pixel_xi(0, 0) = fx * Z_inv;J_pixel_xi(0, 1) = 0;J_pixel_xi(0, 2) = -fx * X * Z2_inv;J_pixel_xi(0, 3) = -fx * X * Y * Z2_inv;J_pixel_xi(0, 4) = fx + fx * X * X * Z2_inv;J_pixel_xi(0, 5) = -fx * Y * Z_inv;J_pixel_xi(1, 0) = 0;J_pixel_xi(1, 1) = fy * Z_inv;J_pixel_xi(1, 2) = -fy * Y * Z2_inv;J_pixel_xi(1, 3) = -fy - fy * Y * Y * Z2_inv;J_pixel_xi(1, 4) = fy * X * Y * Z2_inv;J_pixel_xi(1, 5) = fy * X * Z_inv;J_img_pixel = Eigen::Vector2d(0.5 * (GetPixelValue(img2, u + 1 + x, v + y) - GetPixelValue(img2, u - 1 + x, v + y)),0.5 * (GetPixelValue(img2, u + x, v + 1 + y) - GetPixelValue(img2, u + x, v - 1 + y)));// total jacobianVector6d J = -1.0 * (J_img_pixel.transpose() * J_pixel_xi).transpose();hessian += J * J.transpose();bias += -error * J;cost_tmp += error * error;}}if (cnt_good) {// set hessian, bias and costunique_lock<mutex> lck(hessian_mutex);H += hessian;b += bias;cost += cost_tmp / cnt_good;}

}void DirectPoseEstimationMultiLayer(const cv::Mat &img1,const cv::Mat &img2,const VecVector2d &px_ref,const vector<double> depth_ref,Sophus::SE3d &T21) {// parametersint pyramids = 4;double pyramid_scale = 0.5;double scales[] = {1.0, 0.5, 0.25, 0.125};// create pyramidsvector<cv::Mat> pyr1, pyr2; // image pyramidsfor (int i = 0; i < pyramids; i++) {if (i == 0) {pyr1.push_back(img1);pyr2.push_back(img2);} else {cv::Mat img1_pyr, img2_pyr;cv::resize(pyr1[i - 1], img1_pyr,cv::Size(pyr1[i - 1].cols * pyramid_scale, pyr1[i - 1].rows * pyramid_scale));cv::resize(pyr2[i - 1], img2_pyr,cv::Size(pyr2[i - 1].cols * pyramid_scale, pyr2[i - 1].rows * pyramid_scale));pyr1.push_back(img1_pyr);pyr2.push_back(img2_pyr);}}double fxG = fx, fyG = fy, cxG = cx, cyG = cy; // backup the old valuesfor (int level = pyramids - 1; level >= 0; level--) {VecVector2d px_ref_pyr; // set the keypoints in this pyramid levelfor (auto &px: px_ref) {px_ref_pyr.push_back(scales[level] * px);}// scale fx, fy, cx, cy in different pyramid levelsfx = fxG * scales[level];fy = fyG * scales[level];cx = cxG * scales[level];cy = cyG * scales[level];DirectPoseEstimationSingleLayer(pyr1[level], pyr2[level], px_ref_pyr, depth_ref, T21);}}

- 重构的rust代码

#![allow(dead_code)]

#![allow(unused_variables)]

#![allow(unused_imports)]

#![allow(unused_mut)]

#![allow(unused_assignments)]

#![cfg_attr(not(debug_assertions), windows_subsystem = "windows")]

#![allow(rustdoc::missing_crate_level_docs)]

#![allow(unsafe_code)]

#![allow(clippy::undocumented_unsafe_blocks)]

#![allow(unused_must_use)]

#![allow(non_snake_case)]// 线性代数

use nalgebra::{DMatrix, DVector, Matrix3, Vector3, Vector2, Matrix6, Vector6, Matrix2x6, SMatrix, SVector

};// 图像处理

use image::{open, ImageBuffer, Rgb, DynamicImage, Luma, RgbImage,buffer::ConvertBuffer

};

use imageproc::{drawing::draw_cross_mut, drawing::draw_line_segment_mut

};// ORB角点检测

use bye_orb_rs::{orb, fast, common::Matchable

};// 图优化

use factrs::{assign_symbols,core::{BetweenResidual, GaussNewton, Graph, Values},dtype, fac,linalg::{Const, ForwardProp, Numeric, NumericalDiff, VectorX, DiffResult, MatrixX},residuals::Residual1,traits::*,variables::{VectorVar2, SE2, VectorVar3, SE3, SO2, SO3, MatrixLieGroup},containers::Key,noise::{GaussianNoise}

};// 随机数

use rand::{Rng, SeedableRng

};

use rand::distributions::{Distribution, Uniform

};// 时间

use std::time::Instant;// 引用单元

use std::cell::RefCell;// 定义符号变量

assign_symbols!(_X_: VectorVar3);

assign_symbols!(_Y_: SE2);

assign_symbols!(_Z_: SE3);// 相机内参

const FX: f64 = 718.856;

const FY: f64 = 718.856;

const CX: f64 = 607.1928;

const CY: f64 = 185.2157;

// 基线

const BASELINE: f64 = 0.573;// 双线性插值

fn get_pixel_value(img: &ImageBuffer<Luma<u8>, Vec<u8>>, x: f64, y: f64) -> f64 {let mut x = x;let mut y = y;// 边界检查if x < 0.0 {x = 0.0;}if y < 0.0 {y = 0.0;}if x >= img.width() as f64 - 1.0 {x = img.width() as f64 - 1.0;}if y >= img.height() as f64 - 1.0 {y = img.height() as f64 - 1.0;}let x_floor = x.floor() as u32;let y_floor = y.floor() as u32;let xx = x - x_floor as f64;let yy = y - y_floor as f64;// 确保不越界let x_ceil = (x_floor + 1).min(img.width() - 1);let y_ceil = (y_floor + 1).min(img.height() - 1);let data = |x: u32, y: u32| img.get_pixel(x, y)[0] as f64;(1.0 - xx) * (1.0 - yy) * data(x_floor, y_floor) +xx * (1.0 - yy) * data(x_ceil, y_floor) +(1.0 - xx) * yy * data(x_floor, y_ceil) +xx * yy * data(x_ceil, y_ceil)

}// 用于并行累加雅可比矩阵的结构体

struct JacobianAccumulator<'a> {img1: &'a ImageBuffer<Luma<u8>, Vec<u8>>,img2: &'a ImageBuffer<Luma<u8>, Vec<u8>>,px_ref: &'a Vec<Vector2<f64>>,depth_ref: &'a Vec<f64>,T21: &'a mut SE3<f64>,projection: Vec<Vector2<f64>>,H: Matrix6<f64>,b: Vector6<f64>,cost: f64,

}impl<'a> JacobianAccumulator<'a> {fn new(img1: &'a ImageBuffer<Luma<u8>, Vec<u8>>,img2: &'a ImageBuffer<Luma<u8>, Vec<u8>>,px_ref: &'a Vec<Vector2<f64>>,depth_ref: &'a Vec<f64>,T21: &'a mut SE3<f64>) -> Self {let projection = vec![Vector2::zeros(); px_ref.len()];Self {img1,img2,px_ref,depth_ref,T21,projection,H: Matrix6::zeros(),b: Vector6::zeros(),cost: 0.0,}}fn accumulate_jacobian(&mut self, range: std::ops::Range<usize>) {let half_patch_size = 1; let mut cnt_good = 0;let mut hessian = Matrix6::zeros();let mut bias = Vector6::zeros();let mut cost_tmp = 0.0;for i in range {// 计算在第二张图像中的投影let point_ref = self.depth_ref[i] * Vector3::new((self.px_ref[i].x - CX) / FX,(self.px_ref[i].y - CY) / FY,1.0);let point_cur = self.T21.apply(point_ref.as_view());if point_cur[2] < 0.0 { // 深度无效continue;}let u = FX * point_cur[0] / point_cur[2] + CX;let v = FY * point_cur[1] / point_cur[2] + CY;if u < half_patch_size as f64 || u > (self.img2.width() - half_patch_size) as f64 ||v < half_patch_size as f64 || v > (self.img2.height() - half_patch_size) as f64 {continue;}self.projection[i] = Vector2::new(u, v);let X = point_cur[0];let Y = point_cur[1];let Z = point_cur[2];let Z2 = Z * Z;let Z_inv = 1.0 / Z;let Z2_inv = Z_inv * Z_inv;cnt_good += 1;// 计算误差和雅可比for x in -(half_patch_size as i32)..=half_patch_size as i32 {for y in -(half_patch_size as i32)..=half_patch_size as i32 {let error = get_pixel_value(self.img1, self.px_ref[i].x + x as f64, self.px_ref[i].y + y as f64) -get_pixel_value(self.img2, u + x as f64, v + y as f64);let mut J_pixel_xi = Matrix2x6::zeros();let mut J_img_pixel = Vector2::zeros();J_pixel_xi[(0, 0)] = FX * Z_inv;J_pixel_xi[(0, 1)] = 0.0;J_pixel_xi[(0, 2)] = -FX * X * Z2_inv;J_pixel_xi[(0, 3)] = -FX * X * Y * Z2_inv;J_pixel_xi[(0, 4)] = FX + FX * X * X * Z2_inv;J_pixel_xi[(0, 5)] = -FX * Y * Z_inv;J_pixel_xi[(1, 0)] = 0.0;J_pixel_xi[(1, 1)] = FY * Z_inv;J_pixel_xi[(1, 2)] = -FY * Y * Z2_inv;J_pixel_xi[(1, 3)] = -FY - FY * Y * Y * Z2_inv;J_pixel_xi[(1, 4)] = FY * X * Y * Z2_inv;J_pixel_xi[(1, 5)] = FY * X * Z_inv;J_img_pixel = Vector2::new(0.5 * (get_pixel_value(self.img2, u + 1.0 + x as f64, v + y as f64) - get_pixel_value(self.img2, u - 1.0 + x as f64, v + y as f64)),0.5 * (get_pixel_value(self.img2, u + x as f64, v + 1.0 + y as f64) - get_pixel_value(self.img2, u + x as f64, v - 1.0 + y as f64)));// 总雅可比let J = -1.0 * (J_img_pixel.transpose() * J_pixel_xi).transpose();hessian += J * J.transpose();bias += -error * J;cost_tmp += error * error;}}}if cnt_good > 0 {self.H += hessian;self.b += bias;self.cost += cost_tmp / cnt_good as f64;}}fn hessian(&self) -> Matrix6<f64> {self.H}fn bias(&self) -> Vector6<f64> {self.b}fn cost_func(&self) -> f64 {self.cost}fn projected_points(&self) -> &Vec<Vector2<f64>> {&self.projection}fn reset(&mut self) {self.H = Matrix6::zeros();self.b = Vector6::zeros();self.cost = 0.0;}

}fn direct_pose_estimation_single_layer(img1: &ImageBuffer<Luma<u8>, Vec<u8>>,img2: &ImageBuffer<Luma<u8>, Vec<u8>>,px_ref: &Vec<Vector2<f64>>,depth_ref: &Vec<f64>,T21: &mut SE3<f64>

) {let iterations = 10;let mut cost = 0.0;let mut last_cost = 0.0;let t1 = Instant::now();for iter in 0..iterations {// 使用 T21 的克隆来避免借用冲突let mut T21_clone = T21.clone();let mut jaco_accu = JacobianAccumulator::new(img1, img2, px_ref, depth_ref, &mut T21_clone);jaco_accu.reset();jaco_accu.accumulate_jacobian(0..px_ref.len());let H = jaco_accu.hessian();let b = jaco_accu.bias();// 求解更新并放入估计let update = H.lu().solve(&b).unwrap_or_else(|| {eprintln!("矩阵求解失败!");Vector6::zeros()});if update[0].is_nan() {// 有时发生在我们有一个黑色或白色的补丁并且H是不可逆的eprintln!("更新为nan");break;}// 更新 T21*T21 = SE3::exp((&update).into()) * T21.clone();cost = jaco_accu.cost_func();if iter > 0 && cost > last_cost {eprintln!("成本增加: {}, {}", cost, last_cost);break;}if update.norm() < 1e-3 {// 收敛break;}last_cost = cost;println!("迭代: {}, 成本: {}", iter, cost);}println!("T21 = \n{}", T21.to_matrix());let t2 = Instant::now();let time_used = t2.duration_since(t1).as_secs_f64();println!("单层直接法: {}", time_used);// 在此处绘制投影像素,转换为rgb图let mut img2_show: RgbImage = img2.convert();let mut T21_clone = T21.clone();let mut jaco_accu = JacobianAccumulator::new(img1, img2, px_ref, depth_ref, &mut T21_clone);let projection = jaco_accu.projected_points();for i in 0..px_ref.len() {let p_ref = px_ref[i];let p_cur = projection[i];if p_cur.x > 0.0 && p_cur.y > 0.0 {draw_cross_mut(&mut img2_show, Rgb([0, 250, 0]), p_cur.x as i32, p_cur.y as i32);draw_line_segment_mut(&mut img2_show, (p_ref.x as f32, p_ref.y as f32), (p_cur.x as f32, p_cur.y as f32), Rgb([0, 250, 0]));}}img2_show.save("ch8-direct_method-current.png").unwrap();

}// 多层直接法位姿估计

fn direct_pose_estimation_multi_layer(img1: &ImageBuffer<Luma<u8>, Vec<u8>>,img2: &ImageBuffer<Luma<u8>, Vec<u8>>,px_ref: &Vec<Vector2<f64>>,depth_ref: &Vec<f64>,T21: &mut SE3<f64>

) {// 参数let pyramids = 4;let pyramid_scale = 0.5;let scales = [1.0, 0.5, 0.25, 0.125];// 创建金字塔let mut pyr1 = Vec::new();let mut pyr2 = Vec::new();for i in 0..pyramids {if i == 0 {pyr1.push(img1.clone());pyr2.push(img2.clone());} else {let img1_pyr = image::imageops::resize(&pyr1[i - 1], (pyr1[i - 1].width() as f64 * pyramid_scale) as u32, (pyr1[i - 1].height() as f64 * pyramid_scale) as u32, image::imageops::FilterType::Nearest);let img2_pyr = image::imageops::resize(&pyr2[i - 1], (pyr2[i - 1].width() as f64 * pyramid_scale) as u32, (pyr2[i - 1].height() as f64 * pyramid_scale) as u32, image::imageops::FilterType::Nearest);// 转换图片为灰度图,直接将luma8数据推入向量pyr1.push(img1_pyr);pyr2.push(img2_pyr);}}let fxG = FX;let fyG = FY;let cxG = CX;let cyG = CY; // 备份旧值for level in (0..pyramids).rev() {let mut px_ref_pyr = Vec::new();for px in px_ref {px_ref_pyr.push(scales[level] * px);}// 在不同的金字塔层次中缩放 fx, fy, cx, cylet fx = fxG * scales[level];let fy = fyG * scales[level];let cx = cxG * scales[level];let cy = cyG * scales[level];direct_pose_estimation_single_layer(&pyr1[level], &pyr2[level], &px_ref_pyr, depth_ref, T21);}

}fn main() {let left_file = "./assets/ch8-left.png";let disparity_file = "./assets/ch8-disparity.png";let fmt_others = "./assets/ch8-{:06}.png"; // 注意这里是 {:06},不是 %06dlet left_img = open(left_file).unwrap().to_luma8();let disparity_img = open(disparity_file).unwrap().to_luma8();// 我们在第一张图像中随机选择像素并在第一张图像的帧中生成一些3D点let mut rng = rand::thread_rng();let nPoints = 2000;let boarder = 20;let mut pixels_ref = Vec::new();let mut depth_ref = Vec::new();// 在ref中生成像素并加载深度数据for _ in 0..nPoints {let x = rng.gen_range(boarder..left_img.width() - boarder) as f64;let y = rng.gen_range(boarder..left_img.height() - boarder) as f64;let disparity = disparity_img.get_pixel(x as u32, y as u32)[0] as f64;let depth = FX * BASELINE / disparity; // 你知道这是视差到深度depth_ref.push(depth);pixels_ref.push(Vector2::new(x, y));} // 结束for// 使用此信息估计01~05.png的姿态let mut T_cur_ref = SE3::identity();for i in 1..6 {let img_path = format!("./assets/ch8-{:06}.png", i);println!("图片路径:{:?}\n",img_path);let img = open(&img_path).unwrap().to_luma8();direct_pose_estimation_multi_layer(&left_img, &img, &pixels_ref, &depth_ref, &mut T_cur_ref);}}

代码逻辑分析

-

相机内参和基线:代码中定义了相机的内参(

fx,fy,cx,cy)和基线(baseline),这些参数用于将视差转换为深度。 -

图像读取:代码读取了左图和视差图,并随机选择了一些像素点用于后续的位姿估计。

-

深度计算:通过视差图计算深度信息,并将其存储在

depth_ref中。 -

位姿估计:使用直接法进行位姿估计,分为单层和多层两种方法。代码中调用的是多层方法

DirectPoseEstimationMultiLayer。 -

雅可比累加器:

JacobianAccumulator类用于在多线程环境下累加雅可比矩阵和计算误差。 -

双线性插值:

GetPixelValue函数用于在图像中进行双线性插值,确保在浮点坐标下获取像素值。

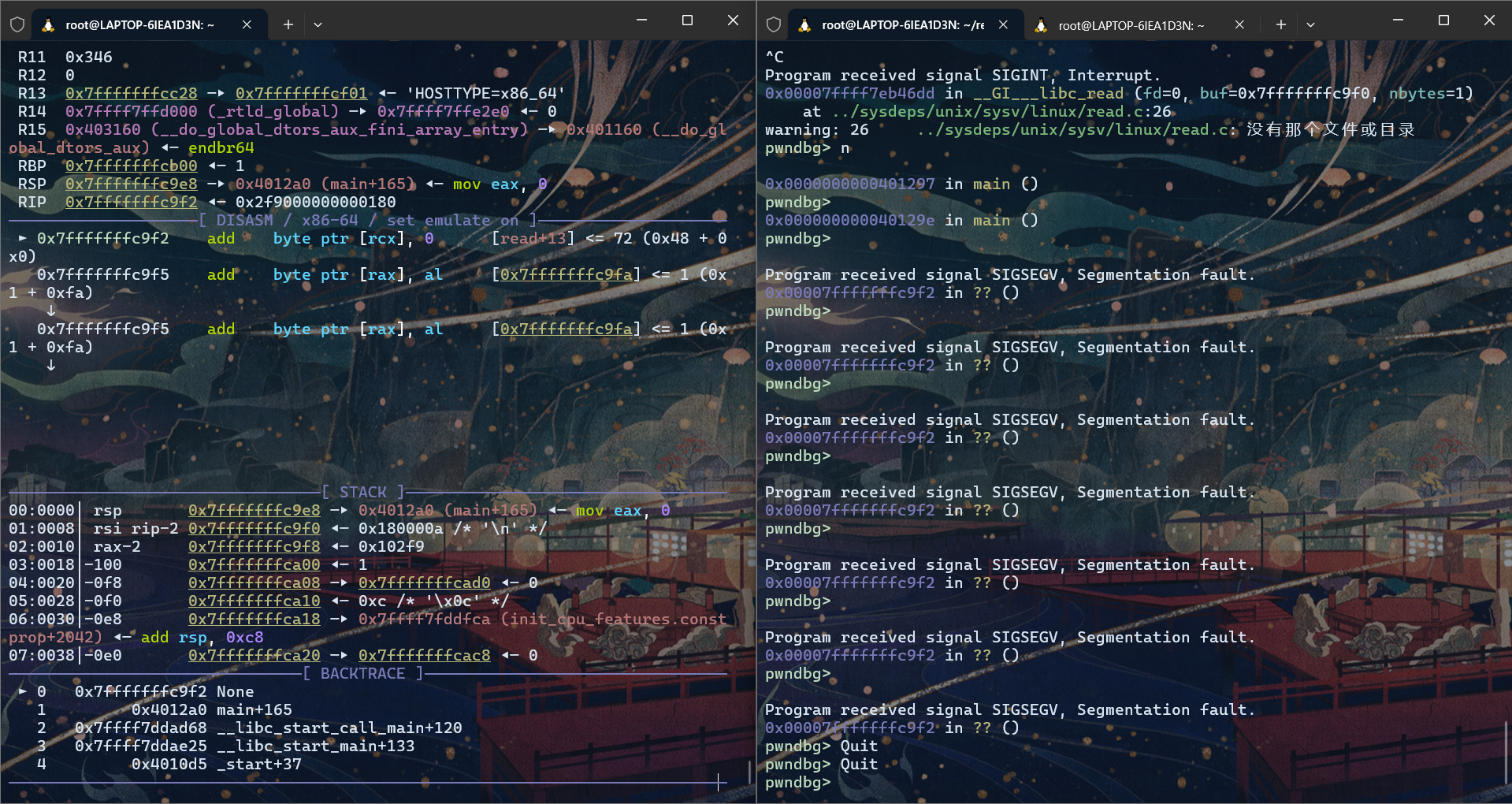

效果

- 输出

图片路径:"./assets/ch8-000001.png"迭代: 0, 成本: 12308.606882324208

T21 = ┌ ┐│ 0.9524303857277323 0.3015001816619056 0.044429728790090155 -0.003210241232723807 ││ -0.28373242767373474 0.9304624727537855 -0.23180918075710438 -0.0007448658012033818 ││ -0.11123070542297181 0.20817595263322064 0.971746110317072 0.004654729361295467 ││ 0 0 0 1 │└ ┘单层直接法: 0.265253083

迭代: 0, 成本: 85669.981282861

T21 = ┌ ┐│ 0.9218799427483955 0.3859353122197975 0.03451530008594093 -0.005209022743755008 ││ -0.3607089083126468 0.8873181597241661 -0.2873248455800819 -0.0008377164815452974 ││ -0.1415148565420385 0.25242903597947436 0.9572111194362295 0.0075372648611578925 ││ 0 0 0 1 │└ ┘单层直接法: 0.209044375

迭代: 0, 成本: 86278.86385506127

迭代: 1, 成本: 78932.01005537802

T21 = ┌ ┐│ 0.9125446387532822 0.40829198571115227 0.023662981356667577 -0.003920845296385371 ││ -0.3842899544360964 0.8758218816839785 -0.29198846292796965 -0.0005550692441357431 ││ -0.13994110619165737 0.2573590603953543 0.956129071219088 0.005821003343790509 ││ 0 0 0 1 │└ ┘单层直接法: 0.218726375

迭代: 0, 成本: 80969.97454752732

T21 = ┌ ┐│ 0.9024751333468863 0.4303302061971928 0.018829427097003137 -0.0038350987692527313 ││ -0.4083426816880122 0.8686402069107689 -0.28057164013823516 0.0009773860335536846 ││ -0.13709444920332334 0.24552006959166922 0.95964837697218 0.006101092525896705 ││ 0 0 0 1 │└ ┘单层直接法: 0.127039291

图片路径:"./assets/ch8-000002.png"迭代: 0, 成本: 62918.87454601741

迭代: 1, 成本: 50964.083456400964

T21 = ┌ ┐│ 0.9471695479166553 -0.29475511826277095 -0.12644867637606494 -0.024437567736266715 ││ 0.31573559637889087 0.9262155288055071 0.2059995810160813 -0.006922309367973688 ││ 0.05639929679193713 -0.23504087826894032 0.9703478267425244 0.031340398699299214 ││ 0 0 0 1 │└ ┘单层直接法: 0.200831125

迭代: 0, 成本: 69534.8098484062

T21 = ┌ ┐│ 0.9472662867926415 -0.29811538115257286 -0.11753212933635326 -0.02048814529041247 ││ 0.31717620385681067 0.9245284812892887 0.211296812545538 -0.005842805353928968 ││ 0.04567097122969815 -0.23743274164524486 0.9703297664102439 0.026951156449003068 ││ 0 0 0 1 │└ ┘单层直接法: 0.184161042

迭代: 0, 成本: 83774.72875510731

T21 = ┌ ┐│ 0.9471687899339618 -0.29351554196707935 -0.12930549098474545 -0.021599883894582617 ││ 0.31590017516818664 0.9234576352579759 0.2177913570655551 -0.005447138203677023 ││ 0.05548299472580243 -0.2471328033821467 0.9673918620645605 0.026764523956617322 ││ 0 0 0 1 │└ ┘单层直接法: 0.1588415

迭代: 0, 成本: 95209.12401528576

T21 = ┌ ┐│ 0.9333317952635999 -0.31511300687588656 -0.17203358058141766 -0.023745940533225245 ││ 0.3542740917013179 0.885997942912672 0.29916134961542046 -0.0009776576916912668 ││ 0.058151766088673965 -0.34016384001264904 0.9385664249527665 0.026241319858266894 ││ 0 0 0 1 │└ ┘单层直接法: 0.126065416

图片路径:"./assets/ch8-000003.png"迭代: 0, 成本: 22775.232856931147

T21 = ┌ ┐│ 0.04421799171614049 0.8267708019552389 -0.5607983686164595 -0.11494996211886517 ││ -0.5883617834340118 -0.4321172771721953 -0.6834508545342795 -0.013060132923383315 ││ -0.8073878751894532 0.3601731525302503 0.4673329853471514 0.17026336457658037 ││ 0 0 0 1 │└ ┘单层直接法: 0.014470583

迭代: 0, 成本: 63921.11568575347

T21 = ┌ ┐│ -0.04616616846798771 0.7583799406344983 -0.6501757843323606 -0.12865335429683258 ││ -0.49587978081956463 -0.5824112758412814 -0.6441275873204559 -0.018972255722675038 ││ -0.8671631495072458 0.29267212271780474 0.40295297581793377 0.1871745873124643 ││ 0 0 0 1 │└ ┘单层直接法: 0.102599416

迭代: 0, 成本: 130972.77167231684

T21 = ┌ ┐│ -0.07708204146335573 0.7174503164073005 -0.692331858555495 -0.12220712057420292 ││ -0.4823637720411582 -0.6345562962860871 -0.6038737436467237 -0.009325333785477431 ││ -0.8725729484152411 0.2874079858506856 0.3949849356155055 0.17640532201758752 ││ 0 0 0 1 │└ ┘单层直接法: 0.056263917

迭代: 0, 成本: 160315.8221080921

迭代: 1, 成本: 117803.74896521952

T21 = ┌ ┐│ 0.11041063796222783 0.8092234858483349 -0.5770327902088784 -0.12796900323951144 ││ -0.6937522281289734 -0.35299298414217173 -0.6277768704822513 0.012703735880665796 ││ -0.7117003140303219 0.4696310286787641 0.5224264157862495 0.1615720204177094 ││ 0 0 0 1 │└ ┘单层直接法: 0.04936275

图片路径:"./assets/ch8-000004.png"T21 = ┌ ┐│ 0.11041063796222783 0.8092234858483349 -0.5770327902088784 -0.12796900323951144 ││ -0.6937522281289734 -0.35299298414217173 -0.6277768704822513 0.012703735880665796 ││ -0.7117003140303219 0.4696310286787641 0.5224264157862495 0.1615720204177094 ││ 0 0 0 1 │└ ┘单层直接法: 0.002474291

迭代: 0, 成本: 9952.64361905522

T21 = ┌ ┐│ 0.9936933376281276 0.10127337313948065 0.0481378712286223 -0.0973772597095485 ││ -0.0881181514129811 0.9707573545011597 -0.22330550838138477 -0.06053442151615137 ││ -0.06934509459962428 0.21765537570862173 0.9735591380497169 0.12565698167218953 ││ 0 0 0 1 │└ ┘单层直接法: 0.006331209

迭代: 0, 成本: 63264.225409655686

T21 = ┌ ┐│ 0.993265544467401 0.09742560583615734 0.06270414261701729 -0.09536557330216772 ││ -0.0810970355733821 0.9711440954988444 -0.224282002396405 -0.062349676699279086 ││ -0.08274556782745005 0.2176864651400935 0.9725048965942145 0.1302758082903449 ││ 0 0 0 1 │└ ┘单层直接法: 0.072367167

迭代: 0, 成本: 119395.80207527199

T21 = ┌ ┐│ 0.9902854758169111 0.12098584779861912 0.06853539974730932 -0.0944911844223315 ││ -0.10422445060874833 0.9721083356759842 -0.21010151737808208 -0.05673084026913784 ││ -0.09204314358700871 0.20091741672069474 0.9752744492587222 0.13244235645914174 ││ 0 0 0 1 │└ ┘单层直接法: 0.176934625

图片路径:"./assets/ch8-000005.png"T21 = ┌ ┐│ 0.9902854758169111 0.12098584779861912 0.06853539974730932 -0.0944911844223315 ││ -0.10422445060874833 0.9721083356759842 -0.21010151737808208 -0.05673084026913784 ││ -0.09204314358700871 0.20091741672069474 0.9752744492587222 0.13244235645914174 ││ 0 0 0 1 │└ ┘单层直接法: 0.002497459

迭代: 0, 成本: 51340.046356081984

T21 = ┌ ┐│ 0.488721427927844 0.01590160068119037 -0.8722949644357377 -0.24508797891778478 ││ 0.3281547532426462 -0.9297616528746497 0.16690634130570506 0.05602332984185457 ││ -0.8083723299374038 -0.36781844426234866 -0.4596126284731503 0.009371172503020716 ││ 0 0 0 1 │└ ┘单层直接法: 0.005589166

T21 = ┌ ┐│ 0.488721427927844 0.01590160068119037 -0.8722949644357377 -0.24508797891778478 ││ 0.3281547532426462 -0.9297616528746497 0.16690634130570506 0.05602332984185457 ││ -0.8083723299374038 -0.36781844426234866 -0.4596126284731503 0.009371172503020716 ││ 0 0 0 1 │└ ┘单层直接法: 0.002532792

T21 = ┌ ┐│ 0.488721427927844 0.01590160068119037 -0.8722949644357377 -0.24508797891778478 ││ 0.3281547532426462 -0.9297616528746497 0.16690634130570506 0.05602332984185457 ││ -0.8083723299374038 -0.36781844426234866 -0.4596126284731503 0.009371172503020716 ││ 0 0 0 1 │└ ┘单层直接法: 0.0024075成本增加: 56793.99123379931, 12308.606882324208

成本增加: 92649.7299219401, 85669.981282861

成本增加: 80753.76146512033, 78932.01005537802

成本增加: 81108.55695446623, 80969.97454752732

成本增加: 76023.83826864949, 50964.083456400964

成本增加: 76052.18150445452, 69534.8098484062

成本增加: 88042.76661683866, 83774.72875510731

成本增加: 108168.18679485178, 95209.12401528576

成本增加: 132775.2488141031, 22775.232856931147

成本增加: 68884.44033429243, 63921.11568575347

成本增加: 146982.56562344934, 130972.77167231684

成本增加: 136980.53080711715, 117803.74896521952

矩阵求解失败!

矩阵求解失败!

成本增加: 71608.36972602786, 63264.225409655686

成本增加: 120213.22098266528, 119395.80207527199

矩阵求解失败!

矩阵求解失败!

矩阵求解失败!

矩阵求解失败!

- 图片比较

| ch8-direct_method-current | ch8-disparity |

|---|---|

|

|

| 000001 | 000002 |

|

|

| 000003 | 000004 |

|

|

| 000005 | |

|

分析

-

迭代过程:输出显示了每次迭代的成本(cost),以及最终的位姿矩阵(T21)。成本的变化反映了优化过程的收敛情况。

-

成本增加:在某些迭代中,成本出现了增加的情况,这可能是由于优化过程中的局部极小值或数值不稳定性导致的。

-

矩阵求解失败:在某些情况下,输出显示“矩阵求解失败”,这通常是由于雅可比矩阵的条件数较差,导致线性方程组不可解。