PVE RAID Simulated Recovery Case

October 10, 2024

14:28

https://www.bilibili.com/read/cv32149324/

1. Normal state: check the RAID disk information and status

```
root@pve:/var/lib/vz/dump# lsblk
NAME   MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
sda      8:0    0 238.5G  0 disk
├─sda1   8:1    0  1007K  0 part
├─sda2   8:2    0     1G  0 part
└─sda3   8:3    0 237.5G  0 part
sdb      8:16   0 238.5G  0 disk
├─sdb1   8:17   0  1007K  0 part
├─sdb2   8:18   0     1G  0 part
└─sdb3   8:19   0 237.5G  0 part
sdc      8:32   1  57.6G  0 disk
├─sdc1   8:33   1  57.6G  0 part
└─sdc2   8:34   1    32M  0 part
root@pve:/var/lib/vz/dump# zpool status
  pool: rpool
 state: ONLINE
config:

        NAME STATE READ WRITE CKSUM
        rpool ONLINE 0 0 0
          mirror-0 ONLINE 0 0 0
            ata-HS-SSD-C260_256G_30147651612-part3 ONLINE 0 0 0
            ata-HS-SSD-C260_256G_30148251328-part3 ONLINE 0 0 0

errors: No known data errors
root@pve:/var/lib/vz/dump# cd /dev/disk/by-id/
root@pve:/dev/disk/by-id# ls -l
total 0
lrwxrwxrwx 1 root root 9 Oct 10 12:20 ata-HS-SSD-C260_256G_30147651612 -> ../../sda
lrwxrwxrwx 1 root root 10 Oct 10 12:20 ata-HS-SSD-C260_256G_30147651612-part1 -> ../../sda1
lrwxrwxrwx 1 root root 10 Oct 10 12:20 ata-HS-SSD-C260_256G_30147651612-part2 -> ../../sda2
lrwxrwxrwx 1 root root 10 Oct 10 12:20 ata-HS-SSD-C260_256G_30147651612-part3 -> ../../sda3
lrwxrwxrwx 1 root root 9 Oct 10 12:20 ata-HS-SSD-C260_256G_30148251328 -> ../../sdb
lrwxrwxrwx 1 root root 10 Oct 10 12:20 ata-HS-SSD-C260_256G_30148251328-part1 -> ../../sdb1
lrwxrwxrwx 1 root root 10 Oct 10 12:20 ata-HS-SSD-C260_256G_30148251328-part2 -> ../../sdb2
lrwxrwxrwx 1 root root 10 Oct 10 12:20 ata-HS-SSD-C260_256G_30148251328-part3 -> ../../sdb3
lrwxrwxrwx 1 root root 9 Oct 10 12:20 usb-Kingston_DataTraveler_3.0_E0D55EA574C1F510C8320163-0:0 -> ../../sdc
lrwxrwxrwx 1 root root 10 Oct 10 12:20 usb-Kingston_DataTraveler_3.0_E0D55EA574C1F510C8320163-0:0-part1 -> ../../sdc1
lrwxrwxrwx 1 root root 10 Oct 10 12:20 usb-Kingston_DataTraveler_3.0_E0D55EA574C1F510C8320163-0:0-part2 -> ../../sdc2
lrwxrwxrwx 1 root root 9 Oct 10 12:20 wwn-0x5000000123456789 -> ../../sdb
lrwxrwxrwx 1 root root 10 Oct 10 12:20 wwn-0x5000000123456789-part1 -> ../../sdb1
lrwxrwxrwx 1 root root 10 Oct 10 12:20 wwn-0x5000000123456789-part2 -> ../../sdb2
lrwxrwxrwx 1 root root 10 Oct 10 12:20 wwn-0x5000000123456789-part3 -> ../../sdb3
lrwxrwxrwx 1 root root 9 Oct 10 12:20 wwn-0x5000000123456819 -> ../../sda
lrwxrwxrwx 1 root root 10 Oct 10 12:20 wwn-0x5000000123456819-part1 -> ../../sda1
lrwxrwxrwx 1 root root 10 Oct 10 12:20 wwn-0x5000000123456819-part2 -> ../../sda2
lrwxrwxrwx 1 root root 10 Oct 10 12:20 wwn-0x5000000123456819-part3 -> ../../sda3
root@pve:/dev/disk/by-id#
```
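The `ls -l` listing is what ties each ZFS member name back to a kernel device (sda, sdb). The same lookup as a minimal POSIX sh sketch, assuming the relative `../../sdX` symlinks shown above; the function name `byid_names` is hypothetical and not part of any Proxmox tool:

```sh
#!/bin/sh
# byid_names DEV [DIR]: print every symlink name in DIR (default /dev/disk/by-id)
# whose target's last path component is exactly DEV (e.g. "sda" or "sdb3").
byid_names() {
  dev=$1
  dir=${2:-/dev/disk/by-id}
  for link in "$dir"/*; do
    [ -L "$link" ] || continue        # only symlinks are of interest
    target=$(readlink "$link")        # e.g. ../../sda3
    if [ "${target##*/}" = "$dev" ]; then
      printf '%s\n' "${link##*/}"     # print just the by-id name
    fi
  done
}
```

For example, `byid_names sda3` on this host should list `ata-HS-SSD-C260_256G_30147651612-part3` and `wwn-0x5000000123456819-part3`, the two names for the healthy disk's ZFS partition.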

2. Simulate a failure: pull one disk while the system is running, reboot, and check the disk information and status again

```
root@pve:/dev/disk/by-id# zpool status
  pool: rpool
 state: DEGRADED
status: One or more devices could not be used because the label is missing or
        invalid. Sufficient replicas exist for the pool to continue
        functioning in a degraded state.
action: Replace the device using 'zpool replace'.
   see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-4J
config:

        NAME STATE READ WRITE CKSUM
        rpool DEGRADED 0 0 0
          mirror-0 DEGRADED 0 0 0
            ata-HS-SSD-C260_256G_30147651612-part3 ONLINE 0 0 0
            ata-HS-SSD-C260_256G_30148251328-part3 UNAVAIL 3 160 0

errors: No known data errors
root@pve:/dev/disk/by-id# ls -l
total 0
lrwxrwxrwx 1 root root 9 Oct 10 12:20 ata-HS-SSD-C260_256G_30147651612 -> ../../sda
lrwxrwxrwx 1 root root 10 Oct 10 12:20 ata-HS-SSD-C260_256G_30147651612-part1 -> ../../sda1
lrwxrwxrwx 1 root root 10 Oct 10 12:20 ata-HS-SSD-C260_256G_30147651612-part2 -> ../../sda2
lrwxrwxrwx 1 root root 10 Oct 10 12:20 ata-HS-SSD-C260_256G_30147651612-part3 -> ../../sda3
lrwxrwxrwx 1 root root 9 Oct 10 12:20 usb-Kingston_DataTraveler_3.0_E0D55EA574C1F510C8320163-0:0 -> ../../sdc
lrwxrwxrwx 1 root root 10 Oct 10 12:20 usb-Kingston_DataTraveler_3.0_E0D55EA574C1F510C8320163-0:0-part1 -> ../../sdc1
lrwxrwxrwx 1 root root 10 Oct 10 12:20 usb-Kingston_DataTraveler_3.0_E0D55EA574C1F510C8320163-0:0-part2 -> ../../sdc2
lrwxrwxrwx 1 root root 9 Oct 10 12:20 wwn-0x5000000123456819 -> ../../sda
lrwxrwxrwx 1 root root 10 Oct 10 12:20 wwn-0x5000000123456819-part1 -> ../../sda1
lrwxrwxrwx 1 root root 10 Oct 10 12:20 wwn-0x5000000123456819-part2 -> ../../sda2
lrwxrwxrwx 1 root root 10 Oct 10 12:20 wwn-0x5000000123456819-part3 -> ../../sda3
root@pve:/dev/disk/by-id# lsblk
NAME   MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
sda      8:0    0 238.5G  0 disk
├─sda1   8:1    0  1007K  0 part
├─sda2   8:2    0     1G  0 part
└─sda3   8:3    0 237.5G  0 part
sdc      8:32   1  57.6G  0 disk
├─sdc1   8:33   1  57.6G  0 part
└─sdc2   8:34   1    32M  0 part
root@pve:/dev/disk/by-id#
```
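In the degraded output, the UNAVAIL line is the one that matters. As a sketch that assumes the column layout shown above (NAME, STATE, READ, WRITE, CKSUM), a small awk filter can pull every non-ONLINE entry out of `zpool status`; `not_online` is a hypothetical helper name:

```sh
#!/bin/sh
# not_online: read `zpool status` text on stdin and print "NAME STATE" for every
# entry of the config table whose STATE column is not ONLINE.
not_online() {
  awk '
    /^[ \t]*NAME[ \t]+STATE/          { cfg = 1; next }  # header: table starts
    /^errors:/                        { cfg = 0 }        # table has ended
    cfg && NF >= 2 && $2 != "ONLINE"  { print $1, $2 }
  '
}
```

Piping the degraded output above through it (`zpool status rpool | not_online`) would report `rpool`, `mirror-0` and the unavailable `...30148251328-part3` partition. For a quick health check without any parsing, `zpool status -x` only reports pools that have problems.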

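3. Shut down, insert the new disk in place of the removed failed disk, and power on. The disk listing shows that the new, blank disk has been detected as /dev/sdb.

Note: if the new disk still contains an old system (data that is no longer needed), you can attach it to a working PVE server, refresh the disk list in the web interface, and wipe the newly detected disk there.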
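The note above wipes the disk through the web interface of another PVE host. As an alternative that is not the article's method, the old signatures can also be cleared from a shell on whichever host the disk is attached to. The sketch assumes the standard `zpool labelclear`, `wipefs` and `sgdisk` tools; `wipe_plan`, `run` and the `CONFIRM=yes` switch are hypothetical. By default it only prints the commands; check the device name twice, because with `CONFIRM=yes` everything on that disk is destroyed.

```sh
#!/bin/sh
# run: print the command (dry run, the default); execute it only when CONFIRM=yes.
run() {
  if [ "${CONFIRM:-no}" = yes ]; then "$@"; else echo "+ $*"; fi
}

# wipe_plan DISK [PARTITION...]: clear old signatures from a disk that still
# carries a previous system. Pass the partitions that `lsblk DISK` shows.
wipe_plan() {
  disk=$1; shift
  for part in "$@"; do
    run zpool labelclear -f "$part" || true   # drop a stale ZFS label, if present
    run wipefs --all "$part"                  # erase remaining filesystem signatures
  done
  run sgdisk --zap-all "$disk"                # destroy the GPT and protective MBR
}
```

For example, `wipe_plan /dev/sdX /dev/sdX1 /dev/sdX2 /dev/sdX3` (placeholder device names) prints the seven commands for review. Clearing an old ZFS label this way should also keep a second `rpool` from appearing at boot, the problem described under the additional failure scenario.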

```
root@pve:/dev/disk/by-id# lsblk
NAME     MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
sda        8:0    0 238.5G  0 disk
├─sda1     8:1    0  1007K  0 part
├─sda2     8:2    0     1G  0 part
└─sda3     8:3    0 237.5G  0 part
sdb        8:16   0 238.5G  0 disk
sdc        8:32   1  57.6G  0 disk
├─sdc1     8:33   1  57.6G  0 part
└─sdc2     8:34   1    32M  0 part
zd0      230:0    0     4M  0 disk
zd16     230:16   0     4M  0 disk
zd32     230:32   0    60G  0 disk
├─zd32p1 230:33   0   100M  0 part
├─zd32p2 230:34   0    16M  0 part
└─zd32p3 230:35   0  39.9G  0 part
zd48     230:48   0   100G  0 disk
├─zd48p1 230:49   0   100M  0 part
├─zd48p2 230:50   0    16M  0 part
└─zd48p3 230:51   0  19.2G  0 part
zd64     230:64   0   100G  0 disk
├─zd64p1 230:65   0   100M  0 part
├─zd64p2 230:66   0    16M  0 part
└─zd64p3 230:67   0  19.2G  0 part
zd80     230:80   0     1M  0 disk
root@pve:/dev/disk/by-id#
```


```
root@pve:/dev/disk/by-id# ls -l
total 0
lrwxrwxrwx 1 root root 9 Oct 10 13:26 ata-HS-SSD-C260_256G_30147651609 -> ../../sdb
lrwxrwxrwx 1 root root 9 Oct 10 13:26 ata-HS-SSD-C260_256G_30147651612 -> ../../sda
lrwxrwxrwx 1 root root 10 Oct 10 13:26 ata-HS-SSD-C260_256G_30147651612-part1 -> ../../sda1
lrwxrwxrwx 1 root root 10 Oct 10 13:26 ata-HS-SSD-C260_256G_30147651612-part2 -> ../../sda2
lrwxrwxrwx 1 root root 10 Oct 10 13:26 ata-HS-SSD-C260_256G_30147651612-part3 -> ../../sda3
lrwxrwxrwx 1 root root 9 Oct 10 13:26 usb-Kingston_DataTraveler_3.0_E0D55EA574C1F510C8320163-0:0 -> ../../sdc
lrwxrwxrwx 1 root root 10 Oct 10 13:26 usb-Kingston_DataTraveler_3.0_E0D55EA574C1F510C8320163-0:0-part1 -> ../../sdc1
lrwxrwxrwx 1 root root 10 Oct 10 13:26 usb-Kingston_DataTraveler_3.0_E0D55EA574C1F510C8320163-0:0-part2 -> ../../sdc2
lrwxrwxrwx 1 root root 9 Oct 10 13:26 wwn-0x5000000123456816 -> ../../sdb
lrwxrwxrwx 1 root root 9 Oct 10 13:26 wwn-0x5000000123456819 -> ../../sda
lrwxrwxrwx 1 root root 10 Oct 10 13:26 wwn-0x5000000123456819-part1 -> ../../sda1
lrwxrwxrwx 1 root root 10 Oct 10 13:26 wwn-0x5000000123456819-part2 -> ../../sda2
lrwxrwxrwx 1 root root 10 Oct 10 13:26 wwn-0x5000000123456819-part3 -> ../../sda3
root@pve:/dev/disk/by-id# zpool status
  pool: rpool
 state: DEGRADED
status: One or more devices could not be used because the label is missing or
        invalid. Sufficient replicas exist for the pool to continue
        functioning in a degraded state.
action: Replace the device using 'zpool replace'.
   see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-4J
config:

        NAME STATE READ WRITE CKSUM
        rpool DEGRADED 0 0 0
          mirror-0 DEGRADED 0 0 0
            ata-HS-SSD-C260_256G_30147651612-part3 ONLINE 0 0 0
            17753432011831091831 UNAVAIL 0 0 0 was /dev/disk/by-id/ata-HS-SSD-C260_256G_30148251328-part3

errors: No known data errors
root@pve:/dev/disk/by-id#
```
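Once the old disk is gone, ZFS lists it only by its GUID (`17753432011831091831` here), and that GUID is the name step 5 passes to `zpool replace`. A one-line awk sketch to extract it, assuming the `... UNAVAIL ... was /dev/...` line format shown above; `missing_guid` is a hypothetical name:

```sh
#!/bin/sh
# missing_guid: read `zpool status` text on stdin and print the numeric GUID
# that ZFS shows in place of a member it can no longer find ("... was /dev/...").
missing_guid() {
  awk '$2 == "UNAVAIL" && / was \// { print $1 }'
}
```

Typical use would be `old=$(zpool status rpool | missing_guid)`, after which `$old` holds the identifier of the pulled disk.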

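4. Copy the partition table of the healthy disk to the new disk

/dev/sda is the device path of the healthy disk

/dev/sdb is the device path of the new disk

After the partition table is copied, the new disk has the same partition layout as the healthy disk, including the partition sizes, so the new disk must be at least as large as the healthy one.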

```
root@pve:/dev/disk/by-id# sgdisk /dev/sda -R /dev/sdb
The operation has completed successfully.
root@pve:/dev/disk/by-id# ls -l
total 0
lrwxrwxrwx 1 root root 9 Oct 10 13:28 ata-HS-SSD-C260_256G_30147651609 -> ../../sdb
lrwxrwxrwx 1 root root 10 Oct 10 13:28 ata-HS-SSD-C260_256G_30147651609-part1 -> ../../sdb1
lrwxrwxrwx 1 root root 10 Oct 10 13:28 ata-HS-SSD-C260_256G_30147651609-part2 -> ../../sdb2
lrwxrwxrwx 1 root root 10 Oct 10 13:28 ata-HS-SSD-C260_256G_30147651609-part3 -> ../../sdb3
lrwxrwxrwx 1 root root 9 Oct 10 13:26 ata-HS-SSD-C260_256G_30147651612 -> ../../sda
lrwxrwxrwx 1 root root 10 Oct 10 13:26 ata-HS-SSD-C260_256G_30147651612-part1 -> ../../sda1
lrwxrwxrwx 1 root root 10 Oct 10 13:26 ata-HS-SSD-C260_256G_30147651612-part2 -> ../../sda2
lrwxrwxrwx 1 root root 10 Oct 10 13:26 ata-HS-SSD-C260_256G_30147651612-part3 -> ../../sda3
lrwxrwxrwx 1 root root 9 Oct 10 13:26 usb-Kingston_DataTraveler_3.0_E0D55EA574C1F510C8320163-0:0 -> ../../sdc
lrwxrwxrwx 1 root root 10 Oct 10 13:26 usb-Kingston_DataTraveler_3.0_E0D55EA574C1F510C8320163-0:0-part1 -> ../../sdc1
lrwxrwxrwx 1 root root 10 Oct 10 13:26 usb-Kingston_DataTraveler_3.0_E0D55EA574C1F510C8320163-0:0-part2 -> ../../sdc2
lrwxrwxrwx 1 root root 9 Oct 10 13:28 wwn-0x5000000123456816 -> ../../sdb
lrwxrwxrwx 1 root root 10 Oct 10 13:28 wwn-0x5000000123456816-part1 -> ../../sdb1
lrwxrwxrwx 1 root root 10 Oct 10 13:28 wwn-0x5000000123456816-part2 -> ../../sdb2
lrwxrwxrwx 1 root root 10 Oct 10 13:28 wwn-0x5000000123456816-part3 -> ../../sdb3
lrwxrwxrwx 1 root root 9 Oct 10 13:26 wwn-0x5000000123456819 -> ../../sda
lrwxrwxrwx 1 root root 10 Oct 10 13:26 wwn-0x5000000123456819-part1 -> ../../sda1
lrwxrwxrwx 1 root root 10 Oct 10 13:26 wwn-0x5000000123456819-part2 -> ../../sda2
lrwxrwxrwx 1 root root 10 Oct 10 13:26 wwn-0x5000000123456819-part3 -> ../../sda3
root@pve:/dev/disk/by-id#
```

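Then randomize the GUIDs on the new disk (`sgdisk -G`), so that its disk and partition GUIDs no longer duplicate the ones copied from the healthy disk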

```
root@pve:/dev/disk/by-id# sgdisk -G /dev/sdb
The operation has completed successfully.
root@pve:/dev/disk/by-id#
```
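Step 4 boils down to two `sgdisk` calls. Argument order matters: `sgdisk SOURCE -R TARGET` copies the table of SOURCE onto TARGET, so swapping the two would overwrite the healthy disk. A sketch with hypothetical `clone_gpt` and `run` helpers (dry run unless `CONFIRM=yes`) that also refuses the obvious mistake of naming the same device twice:

```sh
#!/bin/sh
# run: print the command (dry run, the default); execute it only when CONFIRM=yes.
run() {
  if [ "${CONFIRM:-no}" = yes ]; then "$@"; else echo "+ $*"; fi
}

# clone_gpt HEALTHY NEW: copy HEALTHY's partition table onto NEW, then give NEW
# fresh random disk and partition GUIDs so the two disks do not share them.
clone_gpt() {
  healthy=$1 new=$2
  if [ "$healthy" = "$new" ]; then
    echo "refusing: source and target are the same device" >&2
    return 1
  fi
  run sgdisk "$healthy" -R "$new" || return 1   # replicate: source first, -R target
  run sgdisk -G "$new"                          # randomize GUIDs on the copy
}
```

`clone_gpt /dev/sda /dev/sdb` prints the two commands used above; rerunning it with `CONFIRM=yes` would execute them.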

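5. Rebuild the mirror onto the new disk: in the pool, replace the name of the old failed disk with the name of the new disk's partition, and ZFS resilvers (copies) the data from the healthy disk onto it

`17753432011831091831`: name of the failed disk (ZFS shows its GUID because the device is gone)

`ata-HS-SSD-C260_256G_30147651609-part3`: name of the new disk's partition (look it up in /dev/disk/by-id: after the partition table copy, it is the sdb partition that corresponds to the healthy disk's ZFS partition, part3)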

```
root@pve:/dev/disk/by-id# zpool replace -f rpool 17753432011831091831 ata-HS-SSD-C260_256G_30147651609-part3
root@pve:/dev/disk/by-id# zpool status
  pool: rpool
 state: DEGRADED
status: One or more devices is currently being resilvered. The pool will
        continue to function, possibly in a degraded state.
action: Wait for the resilver to complete.
  scan: resilver in progress since Thu Oct 10 13:29:24 2024
        49.7G scanned at 5.52G/s, 513M issued at 57.0M/s, 49.7G total
        528M resilvered, 1.01% done, 00:14:44 to go
config:

        NAME STATE READ WRITE CKSUM
        rpool DEGRADED 0 0 0
          mirror-0 DEGRADED 0 0 0
            ata-HS-SSD-C260_256G_30147651612-part3 ONLINE 0 0 0
            replacing-1 DEGRADED 0 0 0
              17753432011831091831 UNAVAIL 0 0 0 was /dev/disk/by-id/ata-HS-SSD-C260_256G_30148251328-part3
              ata-HS-SSD-C260_256G_30147651609-part3 ONLINE 0 0 0 (resilvering)

errors: No known data errors
root@pve:/dev/disk/by-id#
```
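The replacement itself is a single `zpool replace` call. The session above passes a bare by-id name, which resolves only because the shell is in /dev/disk/by-id. A sketch that always uses the full `/dev/disk/by-id` path instead; `replace_member` and `run` are hypothetical helpers, and the `-f` flag is kept from the original command:

```sh
#!/bin/sh
# run: print the command (dry run, the default); execute it only when CONFIRM=yes.
run() {
  if [ "${CONFIRM:-no}" = yes ]; then "$@"; else echo "+ $*"; fi
}

# replace_member POOL OLD NEW: swap the missing member OLD (its GUID) for the new
# partition NEW, given either as a bare by-id name or as a full by-id path.
replace_member() {
  pool=$1 old=$2 new=$3
  case $new in
    /dev/disk/by-id/*) ;;                  # already a full by-id path
    *) new=/dev/disk/by-id/$new ;;         # expand a bare by-id name
  esac
  run zpool replace -f "$pool" "$old" "$new"
}
```

`replace_member rpool 17753432011831091831 ata-HS-SSD-C260_256G_30147651609-part3` prints the equivalent of the command above with the path spelled out.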

While the resilver is running, its progress can be checked with zpool status:

```
root@pve:/dev/disk/by-id# zpool status
  pool: rpool
 state: DEGRADED
status: One or more devices is currently being resilvered. The pool will
        continue to function, possibly in a degraded state.
action: Wait for the resilver to complete.
  scan: resilver in progress since Thu Oct 10 13:29:24 2024
        49.7G scanned at 117M/s, 45.7G issued at 107M/s, 49.7G total
        46.1G resilvered, 91.91% done, 00:00:38 to go
config:

        NAME STATE READ WRITE CKSUM
        rpool DEGRADED 0 0 0
          mirror-0 DEGRADED 0 0 0
            ata-HS-SSD-C260_256G_30147651612-part3 ONLINE 0 0 0
            replacing-1 DEGRADED 0 0 0
              17753432011831091831 UNAVAIL 0 0 0 was /dev/disk/by-id/ata-HS-SSD-C260_256G_30148251328-part3
              ata-HS-SSD-C260_256G_30147651609-part3 ONLINE 0 0 0 (resilvering)

errors: No known data errors
root@pve:/dev/disk/by-id#
root@pve:/dev/disk/by-id# zpool status
  pool: rpool
 state: ONLINE
  scan: resilvered 50.1G in 00:08:37 with 0 errors on Thu Oct 10 13:38:01 2024
config:

        NAME STATE READ WRITE CKSUM
        rpool ONLINE 0 0 0
          mirror-0 ONLINE 0 0 0
            ata-HS-SSD-C260_256G_30147651612-part3 ONLINE 0 0 0
            ata-HS-SSD-C260_256G_30147651609-part3 ONLINE 0 0 0

errors: No known data errors
root@pve:/dev/disk/by-id#
```
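Instead of re-running `zpool status` by hand, OpenZFS 2.0 and later can block until the resilver ends with `zpool wait -t resilver rpool`. For older versions, a polling sketch that assumes the `resilver in progress` wording shown above; `resilvering` and `wait_resilver` are hypothetical names:

```sh
#!/bin/sh
# resilvering: succeed (exit 0) if the `zpool status` text on stdin reports a
# resilver that is still running.
resilvering() {
  grep -q 'resilver in progress'
}

# wait_resilver POOL [SECONDS]: poll POOL until its resilver has finished, then
# print the final status (expect "resilvered ... with 0 errors").
wait_resilver() {
  pool=$1 interval=${2:-60}
  while zpool status "$pool" | resilvering; do
    sleep "$interval"
  done
  zpool status "$pool"
}
```

`wait_resilver rpool 30` would poll every 30 seconds; with the roughly 50 GB pool above, the resilver took under nine minutes.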

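6. Set up the EFI system partition (ESP) on the new disk so that either disk can boot the system (the new disk's partition names can also be found on the Disks page of the web interface, which shows the device paths of the ZFS and EFI partitions)

`ata-HS-SSD-C260_256G_30147651609-part2`: the new disk's ESP (found in /dev/disk/by-id the same way: after the partition table copy, it is the sdb partition that corresponds to the healthy disk's part2). The commands below pass this bare name, which works only because the current directory is /dev/disk/by-id.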

```
root@pve:/dev/disk/by-id# proxmox-boot-tool format ata-HS-SSD-C260_256G_30147651609-part2
UUID="" SIZE="1073741824" FSTYPE="" PARTTYPE="c12a7328-f81f-11d2-ba4b-00a0c93ec93b" PKNAME="sdb" MOUNTPOINT=""
Formatting 'ata-HS-SSD-C260_256G_30147651609-part2' as vfat..
mkfs.fat 4.2 (2021-01-31)
Done.
root@pve:/dev/disk/by-id# proxmox-boot-tool init ata-HS-SSD-C260_256G_30147651609-part2
Re-executing '/usr/sbin/proxmox-boot-tool' in new private mount namespace..
UUID="8936-46A7" SIZE="1073741824" FSTYPE="vfat" PARTTYPE="c12a7328-f81f-11d2-ba4b-00a0c93ec93b" PKNAME="sdb" MOUNTPOINT=""
Mounting 'ata-HS-SSD-C260_256G_30147651609-part2' on '/var/tmp/espmounts/8936-46A7'.
Installing grub i386-pc target..
Installing for i386-pc platform.
Installation finished. No error reported.
Unmounting 'ata-HS-SSD-C260_256G_30147651609-part2'.
Adding 'ata-HS-SSD-C260_256G_30147651609-part2' to list of synced ESPs..
Refreshing kernels and initrds..
Running hook script 'proxmox-auto-removal'..
Running hook script 'zz-proxmox-boot'..
Copying and configuring kernels on /dev/disk/by-uuid/5162-40DC
Copying kernel 5.15.102-1-pve
Generating grub configuration file ...
Found linux image: /boot/vmlinuz-5.15.102-1-pve
Found initrd image: /boot/initrd.img-5.15.102-1-pve
Warning: os-prober will not be executed to detect other bootable partitions.
Systems on them will not be added to the GRUB boot configuration.
Check GRUB_DISABLE_OS_PROBER documentation entry.
done
WARN: /dev/disk/by-uuid/5162-CE50 does not exist - clean '/etc/kernel/proxmox-boot-uuids'! - skipping
Copying and configuring kernels on /dev/disk/by-uuid/8936-46A7
Copying kernel 5.15.102-1-pve
Generating grub configuration file ...
Found linux image: /boot/vmlinuz-5.15.102-1-pve
Found initrd image: /boot/initrd.img-5.15.102-1-pve
Warning: os-prober will not be executed to detect other bootable partitions.
Systems on them will not be added to the GRUB boot configuration.
Check GRUB_DISABLE_OS_PROBER documentation entry.
done
root@pve:/dev/disk/by-id#
```
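A sketch of the same two `proxmox-boot-tool` calls that always expands the ESP to its full `/dev/disk/by-id` path, so the commands no longer depend on the current directory. `setup_esp` and `run` are hypothetical helpers, and the assumption that the ESP is partition 2 comes from this particular disk layout:

```sh
#!/bin/sh
# run: print the command (dry run, the default); execute it only when CONFIRM=yes.
run() {
  if [ "${CONFIRM:-no}" = yes ]; then "$@"; else echo "+ $*"; fi
}

# setup_esp ESP: format the new disk's ESP as vfat and register it with
# proxmox-boot-tool. ESP may be a bare by-id name or a full by-id path.
setup_esp() {
  esp=/dev/disk/by-id/${1#/dev/disk/by-id/}   # normalize to a full path
  run proxmox-boot-tool format "$esp" || return 1
  run proxmox-boot-tool init "$esp"
}
```

`setup_esp ata-HS-SSD-C260_256G_30147651609-part2` prints the `format` and `init` commands from the session above with absolute paths.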

7. Refresh the ESPs and check the EFI boot state

```
root@pve:/dev/disk/by-id# proxmox-boot-tool refresh
Running hook script 'proxmox-auto-removal'..
Running hook script 'zz-proxmox-boot'..
Re-executing '/etc/kernel/postinst.d/zz-proxmox-boot' in new private mount namespace..
Copying and configuring kernels on /dev/disk/by-uuid/5162-40DC
Copying kernel 5.15.102-1-pve
Generating grub configuration file ...
Found linux image: /boot/vmlinuz-5.15.102-1-pve
Found initrd image: /boot/initrd.img-5.15.102-1-pve
Warning: os-prober will not be executed to detect other bootable partitions.
Systems on them will not be added to the GRUB boot configuration.
Check GRUB_DISABLE_OS_PROBER documentation entry.
done
WARN: /dev/disk/by-uuid/5162-CE50 does not exist - clean '/etc/kernel/proxmox-boot-uuids'! - skipping
Copying and configuring kernels on /dev/disk/by-uuid/8936-46A7
Copying kernel 5.15.102-1-pve
Generating grub configuration file ...
Found linux image: /boot/vmlinuz-5.15.102-1-pve
Found initrd image: /boot/initrd.img-5.15.102-1-pve
Warning: os-prober will not be executed to detect other bootable partitions.
Systems on them will not be added to the GRUB boot configuration.
Check GRUB_DISABLE_OS_PROBER documentation entry.
done
root@pve:/dev/disk/by-id#
```
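Both the `init` and the `refresh` runs print `WARN: /dev/disk/by-uuid/5162-CE50 does not exist`. Most likely that UUID belonged to the ESP on the removed disk and is still listed in `/etc/kernel/proxmox-boot-uuids`; `proxmox-boot-tool clean` removes entries for ESPs that no longer exist, and `proxmox-boot-tool status` shows which ones remain. A sketch that collects the stale UUIDs first; `stale_esps` and `check_esps` are hypothetical names, and capturing stderr with `2>&1` is a precaution because the stream the WARN line goes to is not confirmed here:

```sh
#!/bin/sh
# stale_esps: read proxmox-boot-tool output on stdin and print, once each, the
# UUID of every ESP it reports as missing.
stale_esps() {
  sed -n 's|^WARN: /dev/disk/by-uuid/\([^ ]*\) does not exist.*|\1|p' | sort -u
}

# check_esps: refresh all ESPs, clean up stale entries if any were reported,
# then list the ESPs that remain configured.
check_esps() {
  stale=$(proxmox-boot-tool refresh 2>&1 | stale_esps)
  if [ -n "$stale" ]; then
    echo "stale ESP entries: $stale"
    proxmox-boot-tool clean       # drop UUIDs of ESPs that no longer exist
  fi
  proxmox-boot-tool status        # show the remaining configured ESPs
}
```

Fed the refresh output above, `stale_esps` would yield `5162-CE50`.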

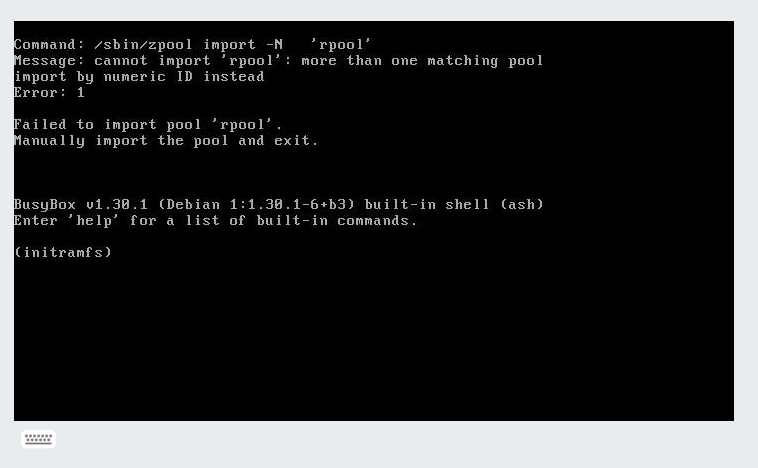

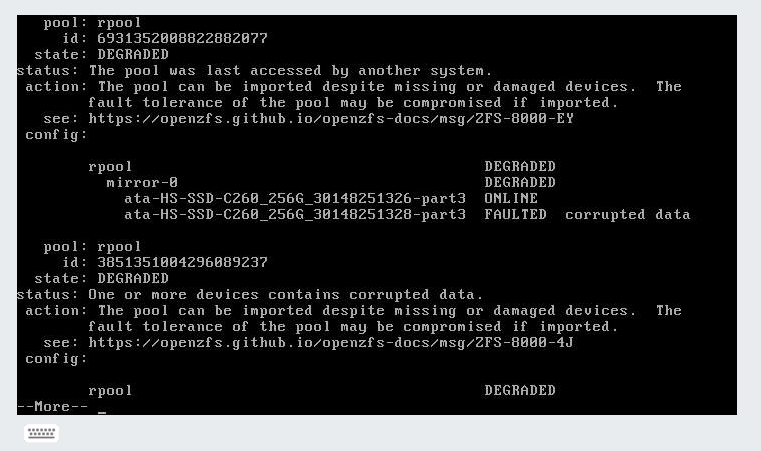

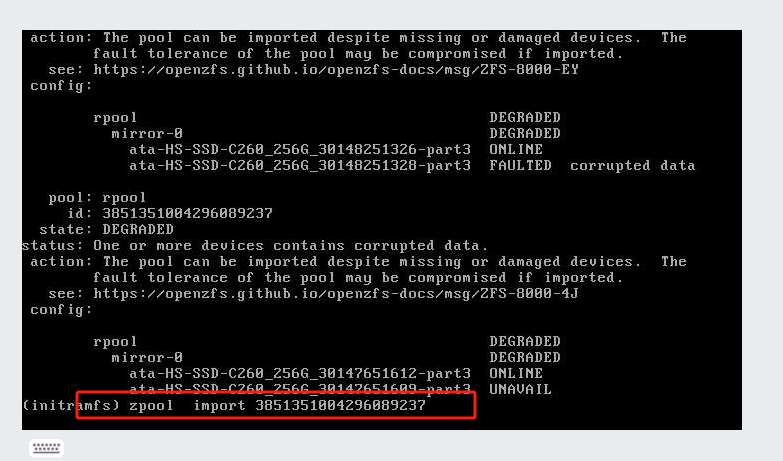

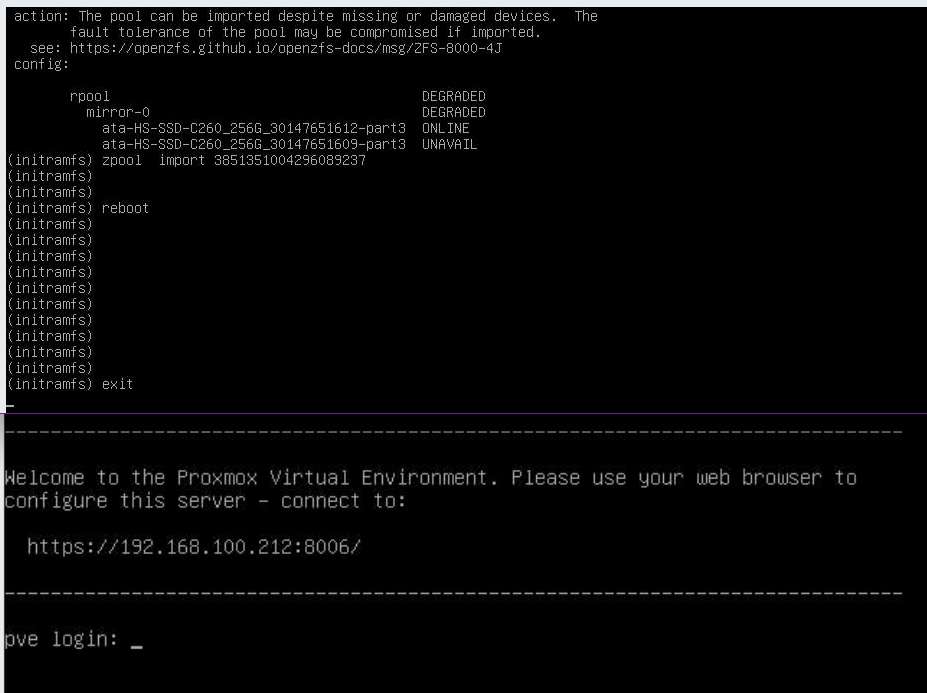

Additional failure scenario

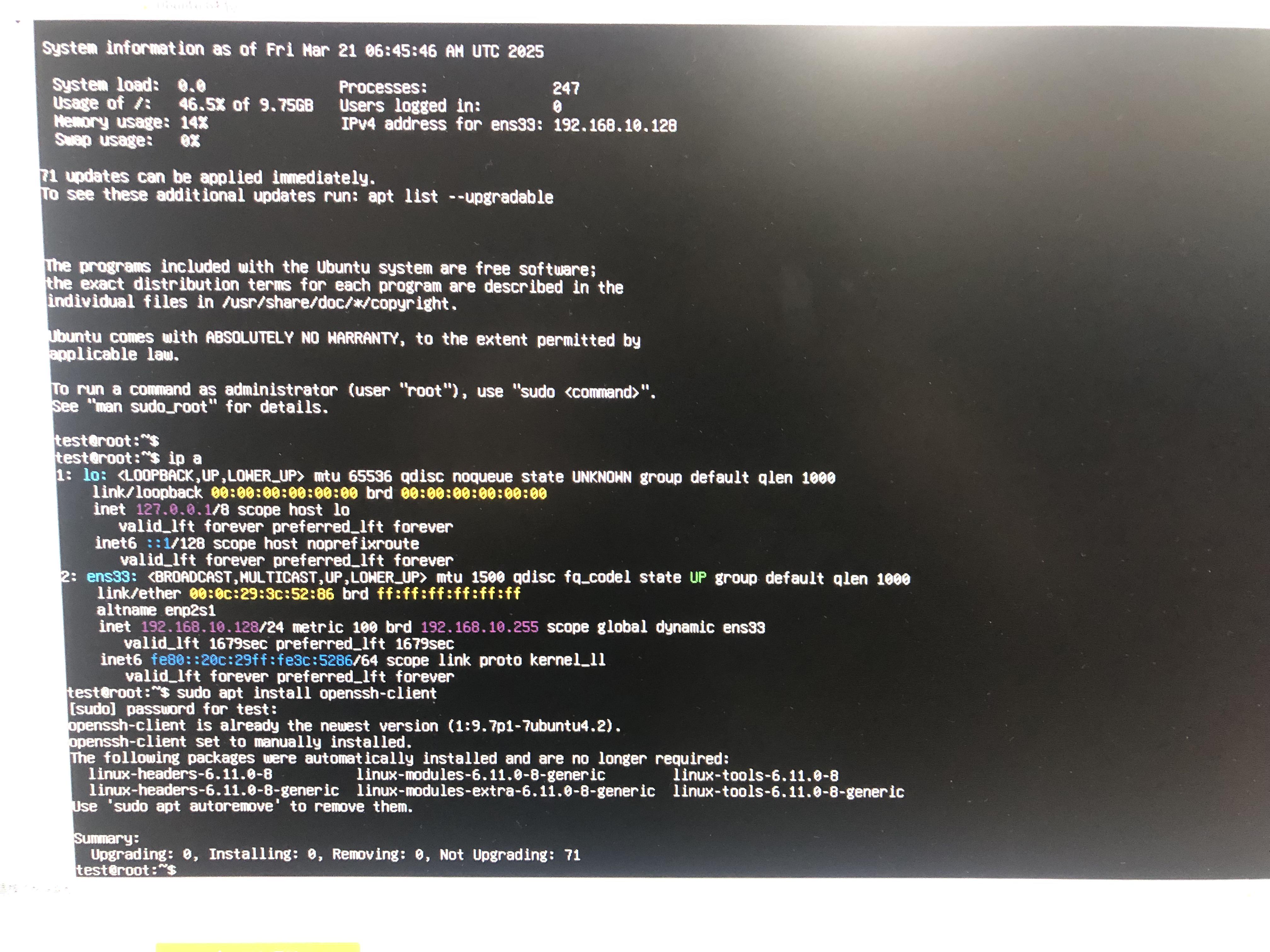

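1. After the new disk is inserted, the system fails to boot and reports that rpool cannot be found

A possible cause is that the newly inserted disk also carries a similar system, i.e. a second pool named rpool.

Run `zpool import` to list the importable pools and identify the one to use. Then run `zpool import 6931352008822882077`, where 6931352008822882077 is the numeric ID of the RAID pool; once it returns normally, type `exit` to leave the prompt and continue booting into the system.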
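When two pools share the name `rpool`, only the numeric ID tells them apart. Run without arguments, `zpool import` prints a `pool:`, `id:` and `state:` line for each importable pool; the awk sketch below (hypothetical `import_candidates`) condenses that into one line per pool. It assumes a shell with awk available; the initramfs prompt may not provide one, in which case reading the `id:` lines by eye works just as well.

```sh
#!/bin/sh
# import_candidates: read `zpool import` (no arguments) output on stdin and print
# one "NAME ID STATE" line per importable pool.
import_candidates() {
  awk '
    $1 == "pool:"                 { name = $2 }
    $1 == "id:"                   { id = $2 }
    $1 == "state:" && name != ""  { print name, id, $2; name = "" }
  '
}
```

With two candidate pools both named `rpool`, this would print two lines with different IDs; the one to pass to `zpool import` is the ID of the pool on the disks of this mirror.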
