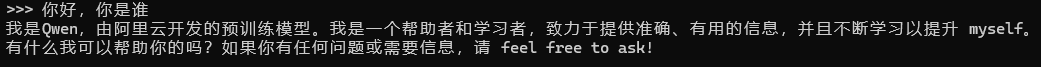

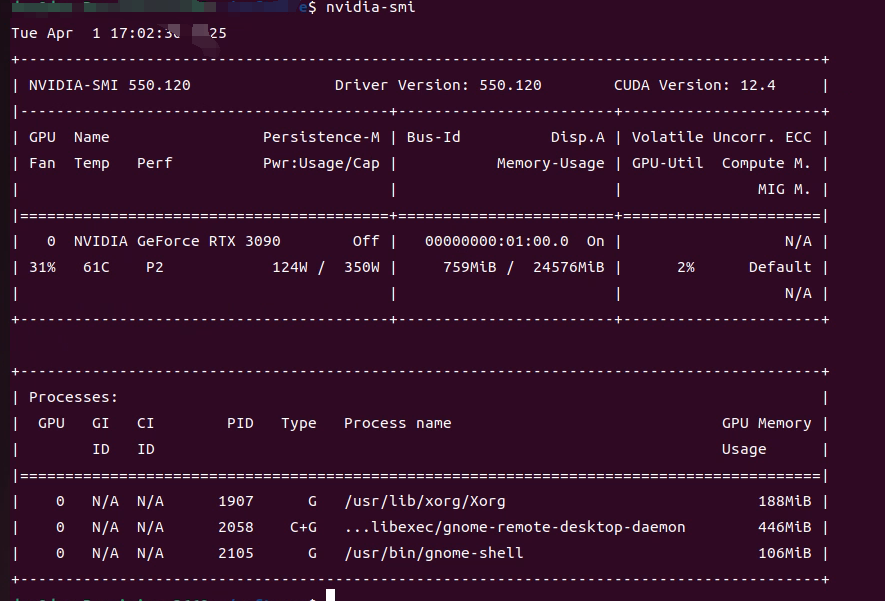

1. Check the driver status

nvidia-smi

If it does not print the usual table of driver version and GPU status, the driver needs to be installed.
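The check above can be scripted so that it reports plainly whether a driver is needed. This is a minimal sketch, assuming a POSIX shell; the function name `driver_status` is made up for this illustration and is not part of any NVIDIA tool.

```shell
#!/bin/sh
# driver_status: hypothetical helper that prints "ok", "broken", or "missing".
driver_status() {
    if ! command -v nvidia-smi >/dev/null 2>&1; then
        # nvidia-smi is not on PATH, so the driver is most likely not installed.
        echo "missing"
    elif ! nvidia-smi >/dev/null 2>&1; then
        # The binary exists but exits non-zero, e.g. the kernel module is not loaded.
        echo "broken"
    else
        echo "ok"
    fi
}

case "$(driver_status)" in
    ok)      echo "Driver is working; continue with the CUDA install." ;;
    broken)  echo "nvidia-smi cannot talk to the driver; reboot or reinstall it." ;;
    missing) echo "No driver found; install it first." ;;
esac
```

Separating "missing" from "broken" is a design choice for this sketch: the first case calls for an install, the second usually only for a reboot.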

On Ubuntu 22.04, install the driver for an RTX 3090 with the following commands:

sudo apt update && sudo apt upgrade && sudo apt install gcc make

sudo apt install nvidia-driver-550

Once the driver is installed, reboot:

sudo reboot

2. Install CUDA 12.4

Download the installer script:

wget https://developer.download.nvidia.com/compute/cuda/12.4.1/local_installers/cuda_12.4.1_550.54.15_linux.run

Alternatively, download the copy attached to this post.

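Run the downloaded installer. The `--toolkit` flag installs only the CUDA toolkit and leaves the driver from step 1 in place:

sudo sh cuda_12.4.1_550.54.15_linux.run --silent --toolkit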

Configure the environment variables:

echo 'export PATH=/usr/local/cuda-12.4/bin:$PATH' >> ~/.bashrc

echo 'export LD_LIBRARY_PATH=/usr/local/cuda-12.4/lib64:$LD_LIBRARY_PATH' >> ~/.bashrc

echo 'export CUDA_HOME=/usr/local/cuda-12.4' >> ~/.bashrc

source ~/.bashrc
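Running those `echo ... >> ~/.bashrc` commands a second time appends duplicate lines. One way to avoid that is to append a line only when it is not already in the file. The sketch below assumes a POSIX shell; `append_once` is a hypothetical helper name, and the demo writes to a scratch file rather than to the real `~/.bashrc`.

```shell
#!/bin/sh
# append_once FILE LINE: hypothetical helper that appends LINE to FILE
# only if FILE does not already contain exactly that line.
append_once() {
    file=$1
    line=$2
    touch "$file"
    # -F: fixed string, -x: match the whole line, -q: no output
    if ! grep -Fxq -- "$line" "$file"; then
        printf '%s\n' "$line" >> "$file"
    fi
}

# Demo on a scratch file so nothing in the real shell profile is touched.
demo=$(mktemp)
append_once "$demo" 'export CUDA_HOME=/usr/local/cuda-12.4'
append_once "$demo" 'export CUDA_HOME=/usr/local/cuda-12.4'   # second call is a no-op
cat "$demo"
rm -f "$demo"
```

To use it for real, pass `~/.bashrc` as the first argument and each of the three `export` lines as the second.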

Verify CUDA:

nvcc -V
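To confirm that the `nvcc` found on PATH is the 12.4 toolkit and not an older one, its output can be parsed instead of read by eye. This is a sketch that assumes `nvcc -V` prints a line containing `release X.Y`; the helper name `cuda_release` is made up for this example.

```shell
#!/bin/sh
# cuda_release: hypothetical helper; reads `nvcc -V` text on stdin and prints
# the release number (for example 12.4), or nothing if no "release X.Y" is found.
cuda_release() {
    sed -n 's/.*release \([0-9][0-9]*\.[0-9][0-9]*\).*/\1/p'
}

expected="12.4"
if command -v nvcc >/dev/null 2>&1; then
    found=$(nvcc -V | cuda_release)
    if [ "$found" = "$expected" ]; then
        echo "nvcc reports CUDA $found"
    else
        echo "nvcc reports CUDA '$found', expected $expected; check the order of PATH"
    fi
else
    echo "nvcc not found; is /usr/local/cuda-12.4/bin on PATH?"
fi
```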

Download cuDNN:

wget https://developer.download.nvidia.com/compute/cudnn/redist/cudnn/linux-x86_64/cudnn-linux-x86_64-9.7.1.26_cuda12-archive.tar.xz -O ~/cudnn.tar.xz

Unpack the archive and copy the cuDNN headers and libraries into the CUDA directory:

mkdir -p ~/cudnn

tar -xf ~/cudnn.tar.xz -C ~/cudnn

sudo cp ~/cudnn/cudnn-linux-x86_64-9.7.1.26_cuda12-archive/include/* /usr/local/cuda-12.4/include/

sudo cp ~/cudnn/cudnn-linux-x86_64-9.7.1.26_cuda12-archive/lib/* /usr/local/cuda-12.4/lib64/

sudo chmod a+r /usr/local/cuda-12.4/include/cudnn*.h /usr/local/cuda-12.4/lib64/libcudnn*
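A quick way to check that the headers were copied is to read the version back from `cudnn_version.h`. The sketch below assumes the header defines `CUDNN_MAJOR`, `CUDNN_MINOR`, and `CUDNN_PATCHLEVEL` as it does in recent cuDNN releases; `cudnn_version` is a hypothetical helper name.

```shell
#!/bin/sh
# cudnn_version HEADER: hypothetical helper; prints MAJOR.MINOR.PATCH read
# from the #define lines of a cudnn_version.h file.
cudnn_version() {
    awk '
        $1 == "#define" && $2 == "CUDNN_MAJOR"      { major = $3 }
        $1 == "#define" && $2 == "CUDNN_MINOR"      { minor = $3 }
        $1 == "#define" && $2 == "CUDNN_PATCHLEVEL" { patch = $3 }
        END { if (major != "") print major "." minor "." patch }
    ' "$1"
}

header=/usr/local/cuda-12.4/include/cudnn_version.h
if [ -r "$header" ]; then
    echo "cuDNN $(cudnn_version "$header")"
else
    echo "$header not found; the cuDNN headers were not copied"
fi
```

The printed version should match the first three components of the archive name used above (9.7.1).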

Verify PyTorch GPU support:

python -c "import torch; print('PyTorch version:', torch.__version__); print('CUDA available:', torch.cuda.is_available()); print('CUDA version:', torch.version.cuda); print('GPU count:', torch.cuda.device_count()); print('GPU name:', torch.cuda.get_device_name(0) if torch.cuda.is_available() else 'none')"

Verify TensorFlow GPU support:

python -c "import tensorflow as tf; gpus = tf.config.list_physical_devices('GPU'); print('TensorFlow version:', tf.__version__); print('GPU available:', bool(gpus)); print('Available GPUs:'); print(*gpus, sep='\n')"