前言

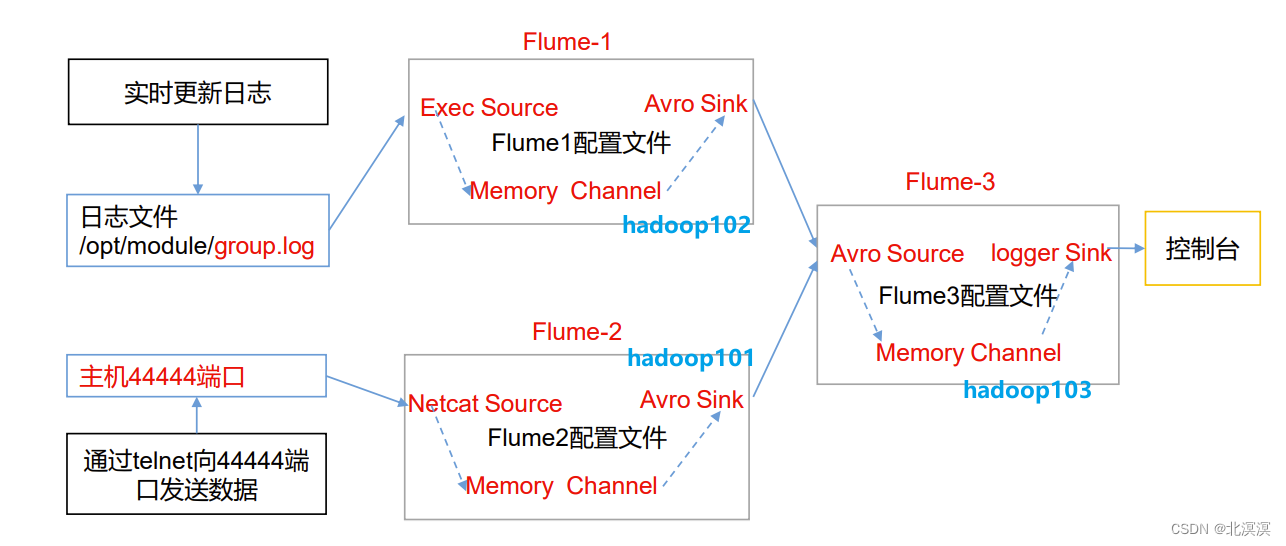

本节内容我们主要介绍一下Flume数据采集过程中,如何把多个数据采集点的数据聚合到一个地方供分析使用。我们使用hadoop101服务器采集nc数据,hadoop102采集文件数据,将hadoop101和hadoop102服务器采集的数据聚合到hadoop103服务器输出到控制台。其整体架构如下:

正文

①在hadoop101服务器的/opt/module/apache-flume-1.9.0/job/group1目录下创建job-nc-flume-avro.conf配置文件,用于监控nc发送的数据,通过avro sink传输到avro source

- job-nc-flume-avro.conf配置文件

# Name the components on this agent a1.sources = r1 a1.sinks = k1 a1.channels = c1 # Describe/configure the source a1.sources.r1.type = exec a1.sources.r1.command = tail -F /opt/module/apache-flume-1.9.0/a.log a1.sources.r1.shell = /bin/bash -c # Describe the sink a1.sinks.k1.type = avro a1.sinks.k1.hostname = hadoop103 a1.sinks.k1.port = 4141 # Describe the channel a1.channels.c1.type = memory a1.channels.c1.capacity = 1000 a1.channels.c1.transactionCapacity = 100 # Bind the source and sink to the channel a1.sources.r1.channels = c1 a1.sinks.k1.channel = c1

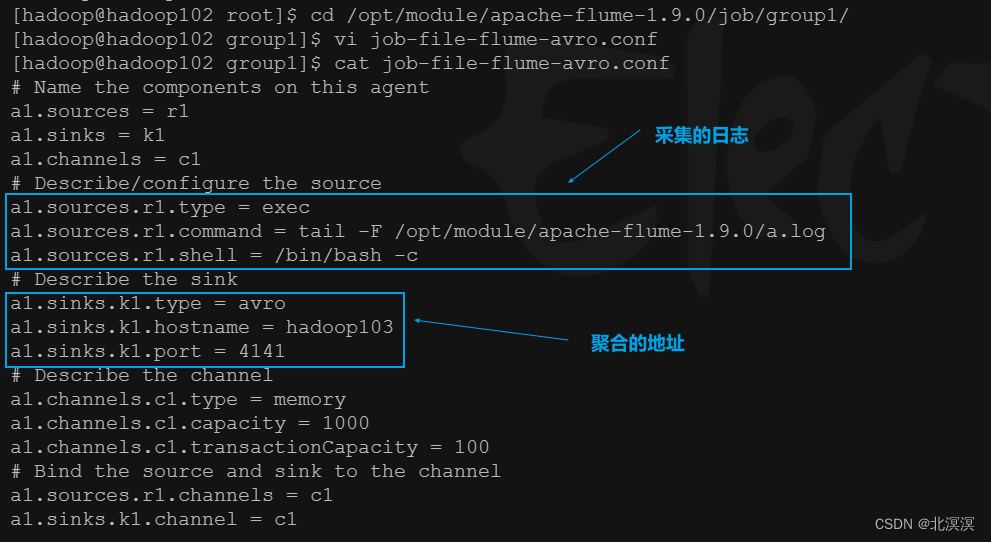

②在hadoop102服务器的/opt/module/apache-flume-1.9.0/job/group1目录下创建job-file-flume-avro.conf配置文件,用于监控目录/opt/module/apache-flume-1.9.0/a.log的数据,通过avro sink传输到avro source

- job-file-flume-avro.conf配置文件

# Name the components on this agent a1.sources = r1 a1.sinks = k1 a1.channels = c1 # Describe/configure the source a1.sources.r1.type = exec a1.sources.r1.command = tail -F /opt/module/apache-flume-1.9.0/a.log a1.sources.r1.shell = /bin/bash -c # Describe the sink a1.sinks.k1.type = avro a1.sinks.k1.hostname = hadoop103 a1.sinks.k1.port = 4141 # Describe the channel a1.channels.c1.type = memory a1.channels.c1.capacity = 1000 a1.channels.c1.transactionCapacity = 100 # Bind the source and sink to the channel a1.sources.r1.channels = c1 a1.sinks.k1.channel = c1

③在hadoop103服务器的/opt/module/apache-flume-1.9.0/job/group1目录下创建job-avro-flume-console.conf配置文件,用户将avro source聚合的数据输出到控制台

- job-avro-flume-console.conf配置文件

# Name the components on this agent a1.sources = r1 a1.sinks = k1 a1.channels = c1 # Describe/configure the source a1.sources.r1.type = avro a1.sources.r1.bind = hadoop103 a1.sources.r1.port = 4141 # Describe the sink # Describe the sink a1.sinks.k1.type = logger # Describe the channel a1.channels.c1.type = memory a1.channels.c1.capacity = 1000 a1.channels.c1.transactionCapacity = 100 # Bind the source and sink to the channel a1.sources.r1.channels = c1 a1.sinks.k1.channel = c1

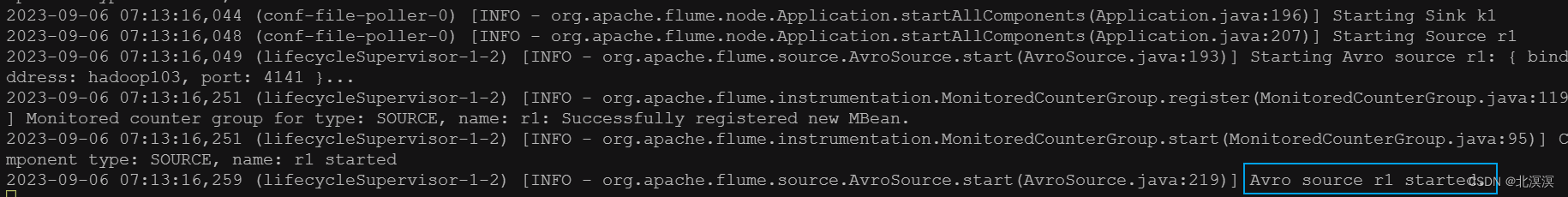

④ 在hadoop103启动job-avro-flume-console.conf任务

- 命令:

bin/flume-ng agent -c conf/ -n a1 -f job/group1/job-avro-flume-console.conf -Dflume.root.logger=INFO,console

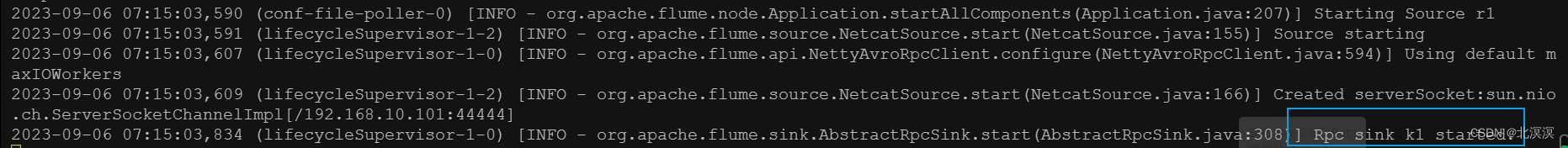

⑤在hadoop101启动job-nc-flume-avro.conf任务

- 命令:

bin/flume-ng agent -c conf/ -n a1 -f job/group1/job-nc-flume-avro.conf -Dflume.root.logger=INFO,console

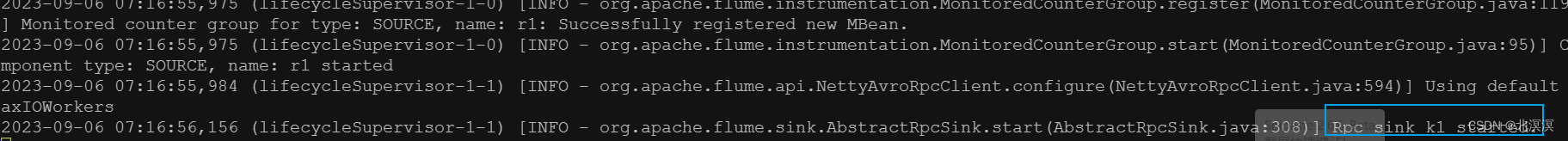

⑥在hadoop102启动job-file-flume-avro.conf任务

- 命令:

bin/flume-ng agent -c conf/ -n a1 -f job/group1/job-file-flume-avro.conf -Dflume.root.logger=INFO,console

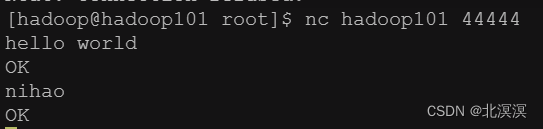

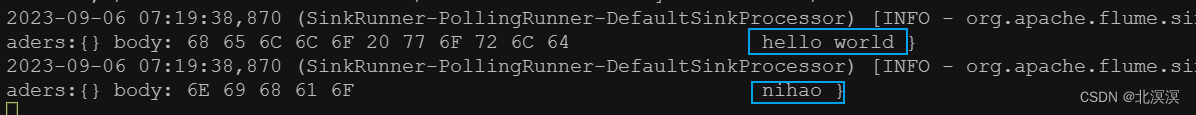

⑦使用nc工具向hadoop101发送数据

- nc发送数据

- hadoop103接收到数据

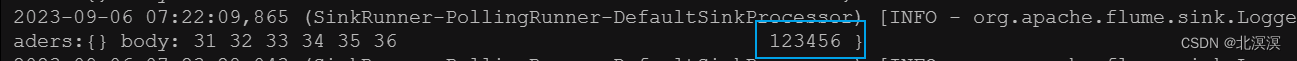

⑧在hadoop102的a.log中写入数据

- 写入数据

- hadoop103接收到数据

结语

flume数据聚合就是为了将具有相同属性的数据聚合到一起,便于管理、分析、统计等。至此,关于Flume数据采集之采集数据聚合案例实战到这里就结束了,我们下期见。。。。。。