Code for estimating the parameter count of a typical CNN block

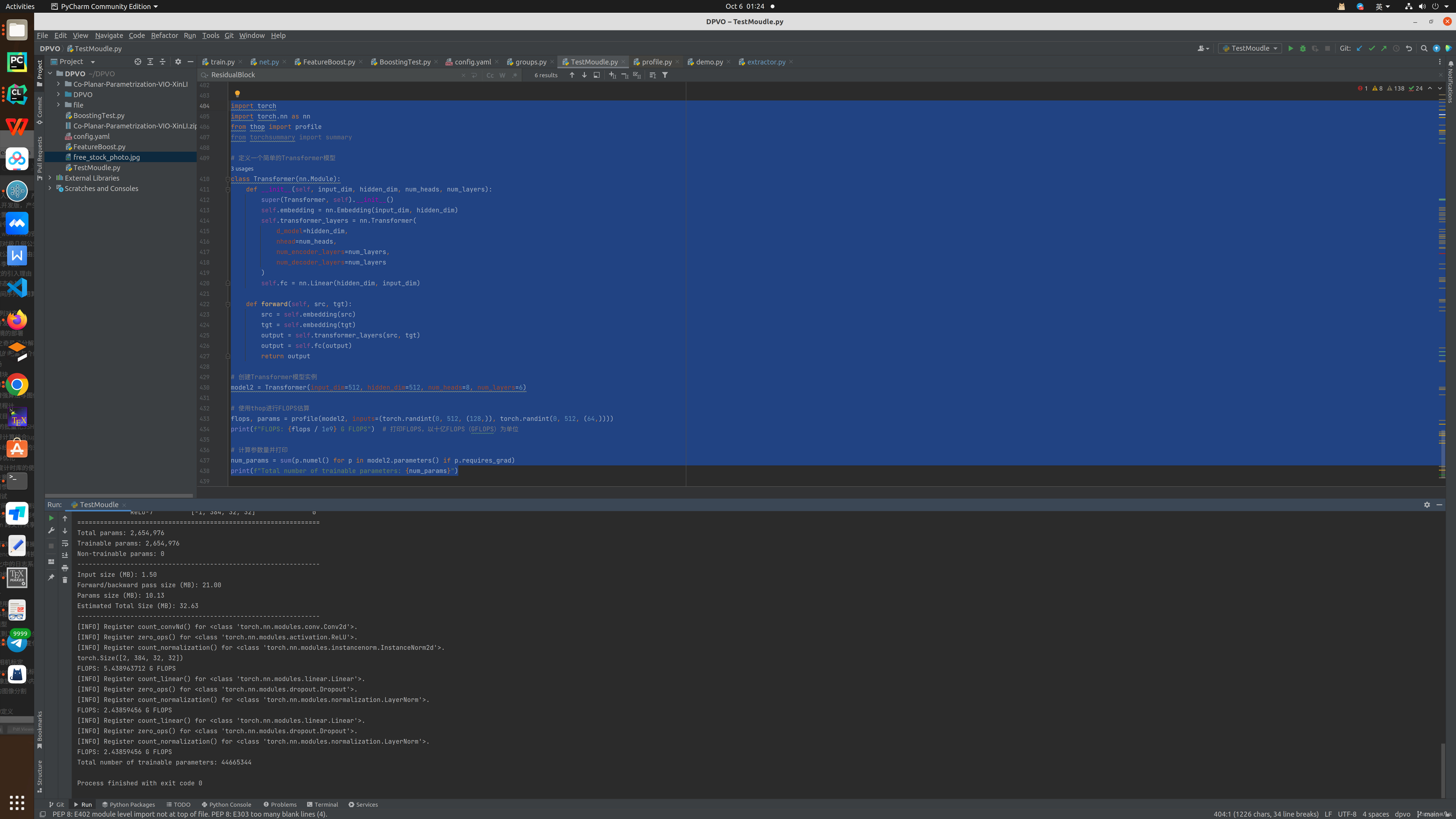

    import torch
    import torch.nn as nn
    from torchsummary import summary


    class ResidualBlock(nn.Module):
        def __init__(self, in_planes, planes, norm_fn='group', stride=1):
            super().__init__()
            print(in_planes, planes, norm_fn, stride)  # debug: constructor arguments

            self.conv1 = nn.Conv2d(in_planes, planes, kernel_size=3, padding=1, stride=stride)
            self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, padding=1)
            self.relu = nn.ReLU(inplace=True)

            num_groups = planes // 8

            # norm3 is only needed on the downsample path (stride != 1)
            if norm_fn == 'group':
                self.norm1 = nn.GroupNorm(num_groups=num_groups, num_channels=planes)
                self.norm2 = nn.GroupNorm(num_groups=num_groups, num_channels=planes)
                if stride != 1:
                    self.norm3 = nn.GroupNorm(num_groups=num_groups, num_channels=planes)
            elif norm_fn == 'batch':
                self.norm1 = nn.BatchNorm2d(planes)
                self.norm2 = nn.BatchNorm2d(planes)
                if stride != 1:
                    self.norm3 = nn.BatchNorm2d(planes)
            elif norm_fn == 'instance':
                self.norm1 = nn.InstanceNorm2d(planes)
                self.norm2 = nn.InstanceNorm2d(planes)
                if stride != 1:
                    self.norm3 = nn.InstanceNorm2d(planes)
            elif norm_fn == 'none':
                self.norm1 = nn.Sequential()
                self.norm2 = nn.Sequential()
                if stride != 1:
                    self.norm3 = nn.Sequential()

            if stride == 1:
                self.downsample = None
            else:
                # 1x1 convolution so the shortcut matches the main path's shape
                self.downsample = nn.Sequential(
                    nn.Conv2d(in_planes, planes, kernel_size=1, stride=stride),
                    self.norm3,
                )

        def forward(self, x):
            print(x.shape)  # debug: input shape
            y = x
            y = self.relu(self.norm1(self.conv1(y)))
            y = self.relu(self.norm2(self.conv2(y)))
            if self.downsample is not None:
                x = self.downsample(x)
            return self.relu(x + y)


    device = "cuda" if torch.cuda.is_available() else "cpu"
    R = ResidualBlock(384, 384, norm_fn='instance', stride=1)

    # Print a per-layer table of output shapes and parameter counts
    # for an input of shape (channels=384, height=32, width=32)
    summary(R.to(device), (384, 32, 32), device=device)

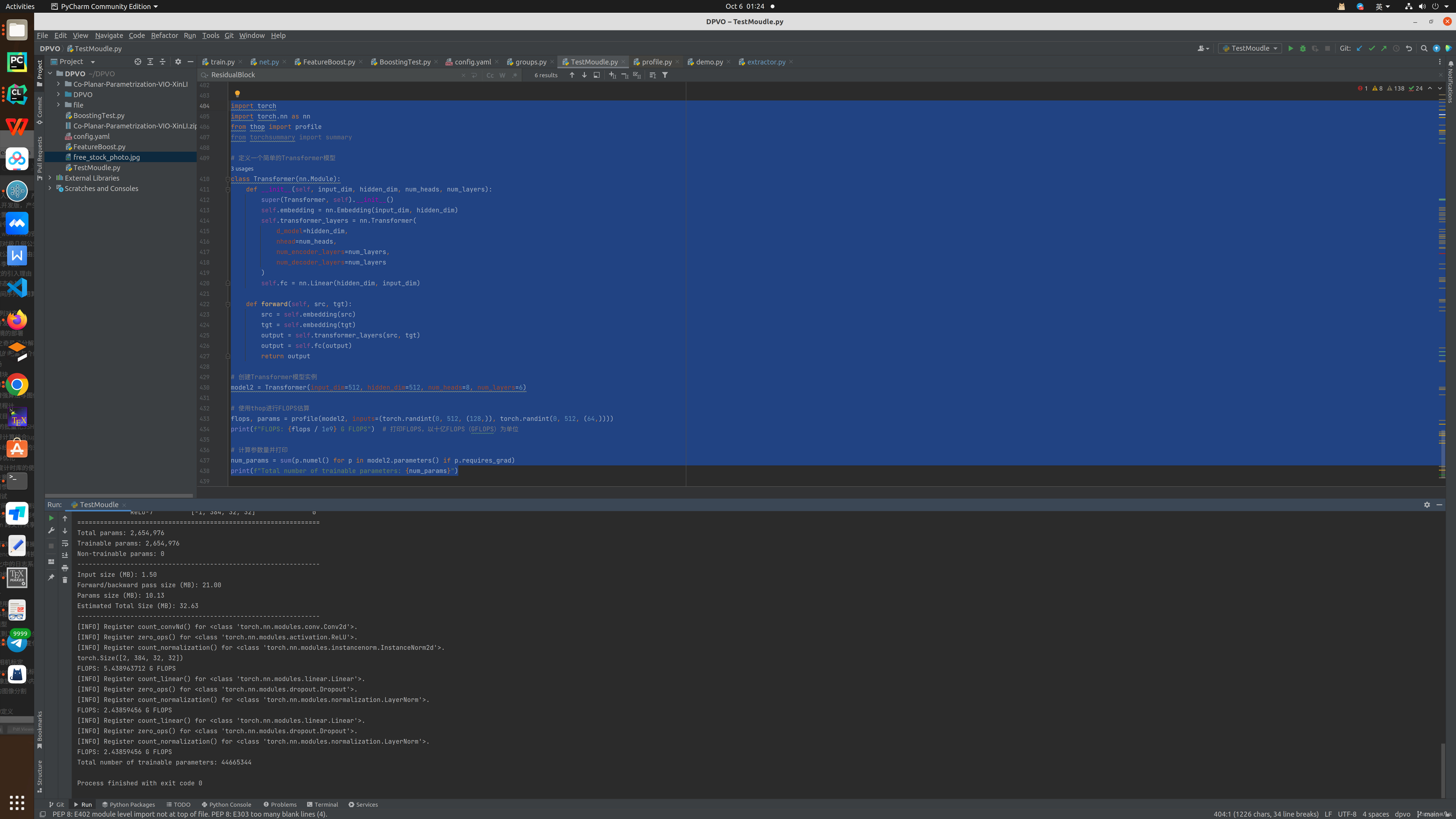
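As a cross-check on the `summary` output, the parameter count of this block can also be worked out by hand. The sketch below is pure Python and does not need PyTorch. The helper names (`conv2d_params`, `norm_params`, `residual_block_params`) are hypothetical and not part of any library, and the norm-layer counts assume PyTorch's default settings: `GroupNorm` and `BatchNorm2d` carry a learnable scale and shift per channel, while `InstanceNorm2d` has no learnable parameters unless `affine=True` is passed.

```python
def conv2d_params(in_ch, out_ch, kernel_size, bias=True):
    """Weights (out_ch * in_ch * k * k) plus one bias per output channel."""
    return out_ch * in_ch * kernel_size * kernel_size + (out_ch if bias else 0)


def norm_params(norm_fn, channels):
    """Trainable parameters of one norm layer, assuming PyTorch defaults."""
    # 'group' and 'batch': one scale and one shift per channel (affine=True).
    # 'instance': affine=False by default; 'none': an empty nn.Sequential().
    return 2 * channels if norm_fn in ("group", "batch") else 0


def residual_block_params(in_planes, planes, norm_fn="group", stride=1):
    """Hand count that mirrors the layers created in ResidualBlock.__init__."""
    total = conv2d_params(in_planes, planes, 3)        # conv1
    total += conv2d_params(planes, planes, 3)          # conv2
    total += 2 * norm_params(norm_fn, planes)          # norm1 and norm2
    if stride != 1:
        total += conv2d_params(in_planes, planes, 1)   # 1x1 downsample conv
        total += norm_params(norm_fn, planes)          # norm3
    return total


print(residual_block_params(384, 384, norm_fn="instance", stride=1))  # 2654976
print(residual_block_params(384, 384, norm_fn="group", stride=1))     # 2656512
```

For the configuration used here (`norm_fn='instance'`, `stride=1`) all 2,654,976 parameters come from the two 3x3 convolutions, since each contributes 384 × 384 × 9 weights plus 384 biases.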

Parameter-count estimate for a Transformer architecture

    import torch
    import torch.nn as nn
    from thop import profile
    from torchsummary import summary


    # Define a simple Transformer model
    class Transformer(nn.Module):
        def __init__(self, input_dim, hidden_dim, num_heads, num_layers):
            super().__init__()
            # input_dim is the vocabulary size, hidden_dim the model width
            self.embedding = nn.Embedding(input_dim, hidden_dim)
            self.transformer_layers = nn.Transformer(
                d_model=hidden_dim,
                nhead=num_heads,
                num_encoder_layers=num_layers,
                num_decoder_layers=num_layers,
            )
            self.fc = nn.Linear(hidden_dim, input_dim)

        def forward(self, src, tgt):
            src = self.embedding(src)
            tgt = self.embedding(tgt)
            output = self.transformer_layers(src, tgt)
            output = self.fc(output)
            return output


    # Create the Transformer model instance
    model2 = Transformer(input_dim=512, hidden_dim=512, num_heads=8, num_layers=6)

    # Estimate the compute cost with thop.
    # The inputs are unbatched token-index sequences: src has length 128, tgt has length 64.
    flops, params = profile(
        model2,
        inputs=(torch.randint(0, 512, (128,)), torch.randint(0, 512, (64,))),
    )

    # Print the result in units of 10^9 operations.
    # Note: thop's README calls this first return value MACs (multiply-accumulates),
    # so it may need to be doubled to compare with FLOPs reported by other tools.
    print(f"FLOPS: {flops / 1e9} G FLOPS")

    # Count the trainable parameters and print the total
    num_params = sum(p.numel() for p in model2.parameters() if p.requires_grad)
    print(f"Total number of trainable parameters: {num_params}")
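The trainable-parameter total printed above can be sanity-checked with a closed-form count. The following is a minimal sketch in pure Python, with no PyTorch required. All function names are hypothetical helpers. It assumes the `nn.Transformer` defaults that the model above relies on: `dim_feedforward=2048`, biases on every linear layer, affine `LayerNorm`, and a final `LayerNorm` after both the encoder and decoder stacks. If those defaults differ in your PyTorch version, the numbers will differ too.

```python
def linear_params(in_features, out_features):
    """Weight matrix plus bias vector of a fully connected layer."""
    return in_features * out_features + out_features


def mha_params(d_model):
    """Multi-head attention: packed Q/K/V projection plus output projection."""
    # The head count does not change the total; heads only split d_model.
    return 3 * d_model * d_model + 3 * d_model + linear_params(d_model, d_model)


def layer_norm_params(d_model):
    """One scale and one shift per feature."""
    return 2 * d_model


def ffn_params(d_model, d_ff):
    """Two linear layers: d_model -> d_ff -> d_model."""
    return linear_params(d_model, d_ff) + linear_params(d_ff, d_model)


def encoder_layer_params(d_model, d_ff):
    # self-attention + feed-forward + 2 layer norms
    return mha_params(d_model) + ffn_params(d_model, d_ff) + 2 * layer_norm_params(d_model)


def decoder_layer_params(d_model, d_ff):
    # self-attention + cross-attention + feed-forward + 3 layer norms
    return 2 * mha_params(d_model) + ffn_params(d_model, d_ff) + 3 * layer_norm_params(d_model)


def transformer_model_params(vocab_size, d_model, num_layers, d_ff=2048):
    """Embedding + encoder stack + decoder stack + output projection."""
    embedding = vocab_size * d_model
    encoder = num_layers * encoder_layer_params(d_model, d_ff) + layer_norm_params(d_model)
    decoder = num_layers * decoder_layer_params(d_model, d_ff) + layer_norm_params(d_model)
    fc = linear_params(d_model, vocab_size)
    return embedding + encoder + decoder + fc


print(encoder_layer_params(512, 2048))         # 3152384
print(decoder_layer_params(512, 2048))         # 4204032
print(transformer_model_params(512, 512, 6))   # 44665344
```

Under these assumptions the embedding and the final linear layer contribute only about 0.5 million of the roughly 44.7 million parameters. The rest sits in the six encoder and six decoder layers.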