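Table of Contents

- 1. Introduction

- 2. Environment Setup

- 3. Prediction Results

- 3.1 Using a detection model

- 3.2 Using a segmentation model

- 3.3 Using a classification model

- 3.4 Using a pose detection model

- 4. COCO val Dataset

- 4.1 Validating YOLOv8n on COCO128 val

- 4.2 Training YOLOv8n on COCO128

- 5. Training Your Own Models

- 5.1 Training a detection model

- 5.2 Training a segmentation model

- 5.3 Training a classification model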

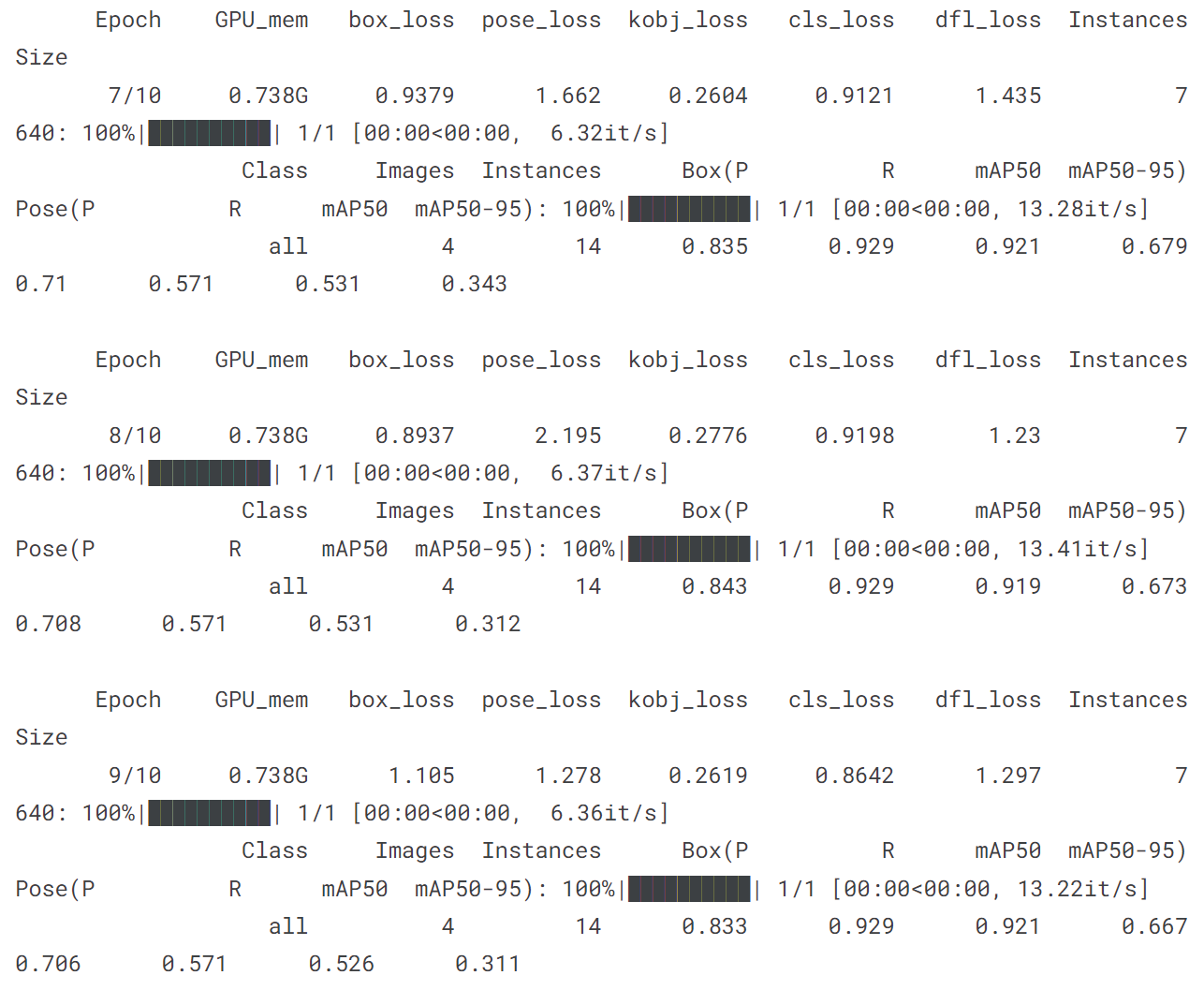

- 5.4 Training a pose model

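1. Introduction

YOLOv8 is the latest family of YOLO-based object detection models from Ultralytics, offering state-of-the-art performance.

Building on previous YOLO versions, the YOLOv8 models are faster and more accurate, and they provide a unified framework for training models to perform:

- Object detection

- Instance segmentation

- Image classification

Ultralytics released a brand-new repository for its YOLO models. It is built as a unified framework for training object detection, instance segmentation, and image classification models.

Some of the main features of the new release:

- A user-friendly API (command line + Python).

- Faster and more accurate.

- Support for object detection, instance segmentation, and image classification.

- Extensible to all previous versions.

- A new backbone network.

- A new anchor-free head.

- A new loss function.

YOLOv8 also supports a variety of export formats efficiently and flexibly, and the models can run on both CPU and GPU.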

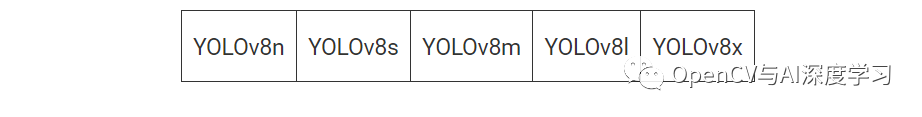

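For each task (detection, segmentation, and classification), YOLOv8 provides five models. YOLOv8 Nano (YOLOv8n) is the fastest and smallest, while YOLOv8 Extra Large (YOLOv8x) is the most accurate but the slowest of them.

YOLOv8 ships with the following pretrained models:

- Object detection checkpoints trained on the COCO detection dataset at an image resolution of 640.

- Instance segmentation checkpoints trained on the COCO segmentation dataset at an image resolution of 640.

- Image classification models pretrained on the ImageNet dataset at an image resolution of 224.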
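The checkpoint file names used in the commands later in this article (yolov8n.pt, yolov8x-seg.pt, yolov8x-cls.pt, yolov8n-pose.pt) follow a regular size-plus-task pattern. The sketch below reconstructs that pattern. The helper name `checkpoint_name` is hypothetical, and the intermediate size letters s, m, and l are assumed from the commonly published model list rather than stated in this article.

```python
# Hypothetical helper: build a YOLOv8 checkpoint file name from a size letter and a task.
SIZES = ("n", "s", "m", "l", "x")  # nano ... extra large (s/m/l are assumed here)
TASK_SUFFIX = {"detect": "", "segment": "-seg", "classify": "-cls", "pose": "-pose"}


def checkpoint_name(size: str, task: str = "detect") -> str:
    """Return a file name such as 'yolov8n.pt' or 'yolov8x-seg.pt'."""
    if size not in SIZES:
        raise ValueError(f"unknown size {size!r}, expected one of {SIZES}")
    if task not in TASK_SUFFIX:
        raise ValueError(f"unknown task {task!r}, expected one of {tuple(TASK_SUFFIX)}")
    return f"yolov8{size}{TASK_SUFFIX[task]}.pt"


if __name__ == "__main__":
    # Print the five names for every task.
    for task in TASK_SUFFIX:
        print(task, [checkpoint_name(size, task) for size in SIZES])
```

Swapping the size letter is usually the only change needed to trade speed for accuracy in the commands that follow.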

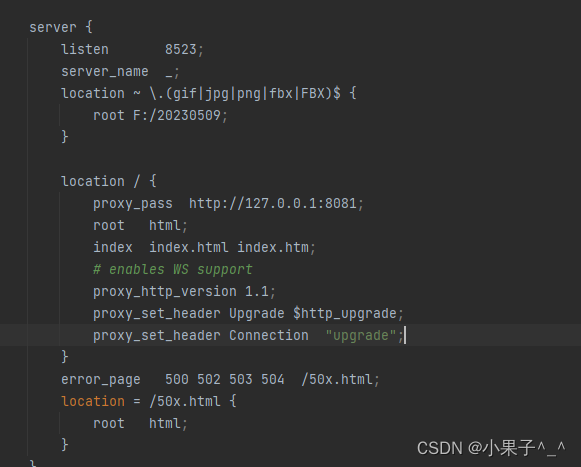

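2. Environment Setup

To get the most out of YOLOv8, install the requirements from the repository as well as the ultralytics package. To install the requirements, first clone the repository and change into it.

git clone https://github.com/ultralytics/ultralytics.git

cd ultralytics

pip install -r requirements.txt

In the latest version, Ultralytics YOLOv8 provides a complete command-line interface (CLI) API and a Python SDK for training, validation, and inference. To use the yolo CLI, install the ultralytics package.

pip install ultralytics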

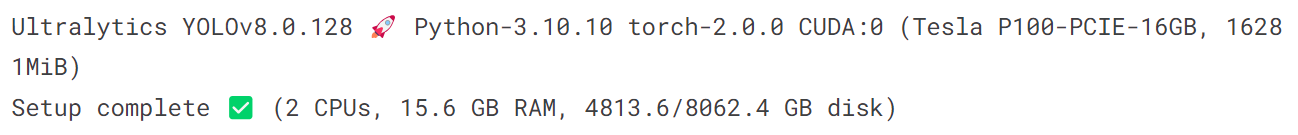

Our environment is set up as follows:

%pip install ultralytics

import ultralytics

ultralytics.checks()
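Calling ultralytics.checks() requires the package to import successfully. As a lighter pre-check, the following sketch only asks the Python packaging metadata whether a distribution is installed, without importing it. The function name `installed_version` is hypothetical.

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version string of `package`, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    version = installed_version("ultralytics")
    if version is None:
        print("ultralytics is not installed; run: pip install ultralytics")
    else:
        print(f"ultralytics {version} is installed")
```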

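3. Prediction Results

YOLOv8 can be used directly from the command-line interface (CLI) with the "yolo" command for a variety of tasks and modes, and it accepts additional arguments such as "imgsz=640". See the full list of available yolo arguments and the YOLOv8 Predict documentation for further details.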
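The commands in the following subsections all share the same shape: the word yolo, an optional task, a mode, and then key=value arguments. As a rough sketch of that structure, the hypothetical helper `build_yolo_command` below assembles such a call as an argument list. It does not run anything, and it is not part of the ultralytics package.

```python
import shlex


def build_yolo_command(mode, task=None, **args):
    """Assemble a `yolo` CLI call as a list of arguments (illustrative helper only)."""
    parts = ["yolo"]
    if task is not None:
        parts.append(f"task={task}")
    parts.append(f"mode={mode}")
    # Every remaining option is passed as key=value, e.g. imgsz=640.
    parts.extend(f"{key}={value}" for key, value in args.items())
    return parts


cmd = build_yolo_command(
    "predict",
    task="segment",
    model="yolov8x-seg.pt",
    source="/kaggle/input/personpng/1.jpg",
    imgsz=640,
)
print(shlex.join(cmd))  # shell-ready form of the same command
```

Outside a notebook, a list built this way could be handed to subprocess.run(cmd, check=True), assuming the yolo executable is on the PATH.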

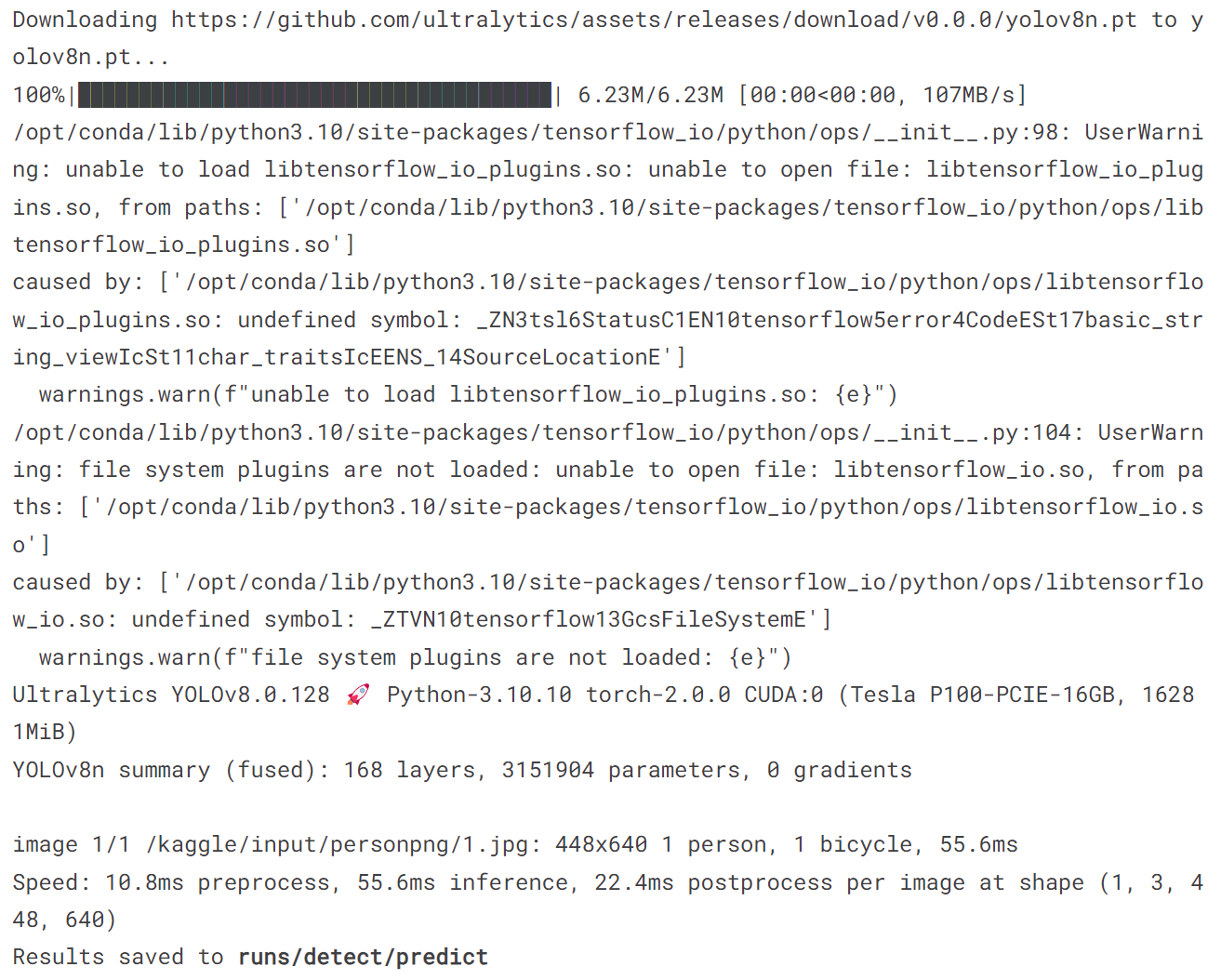

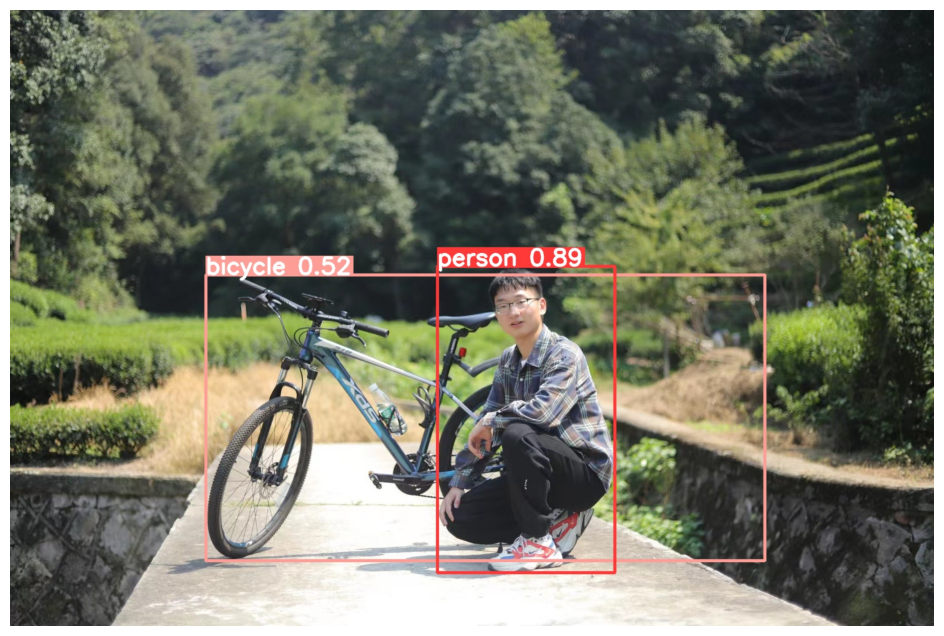

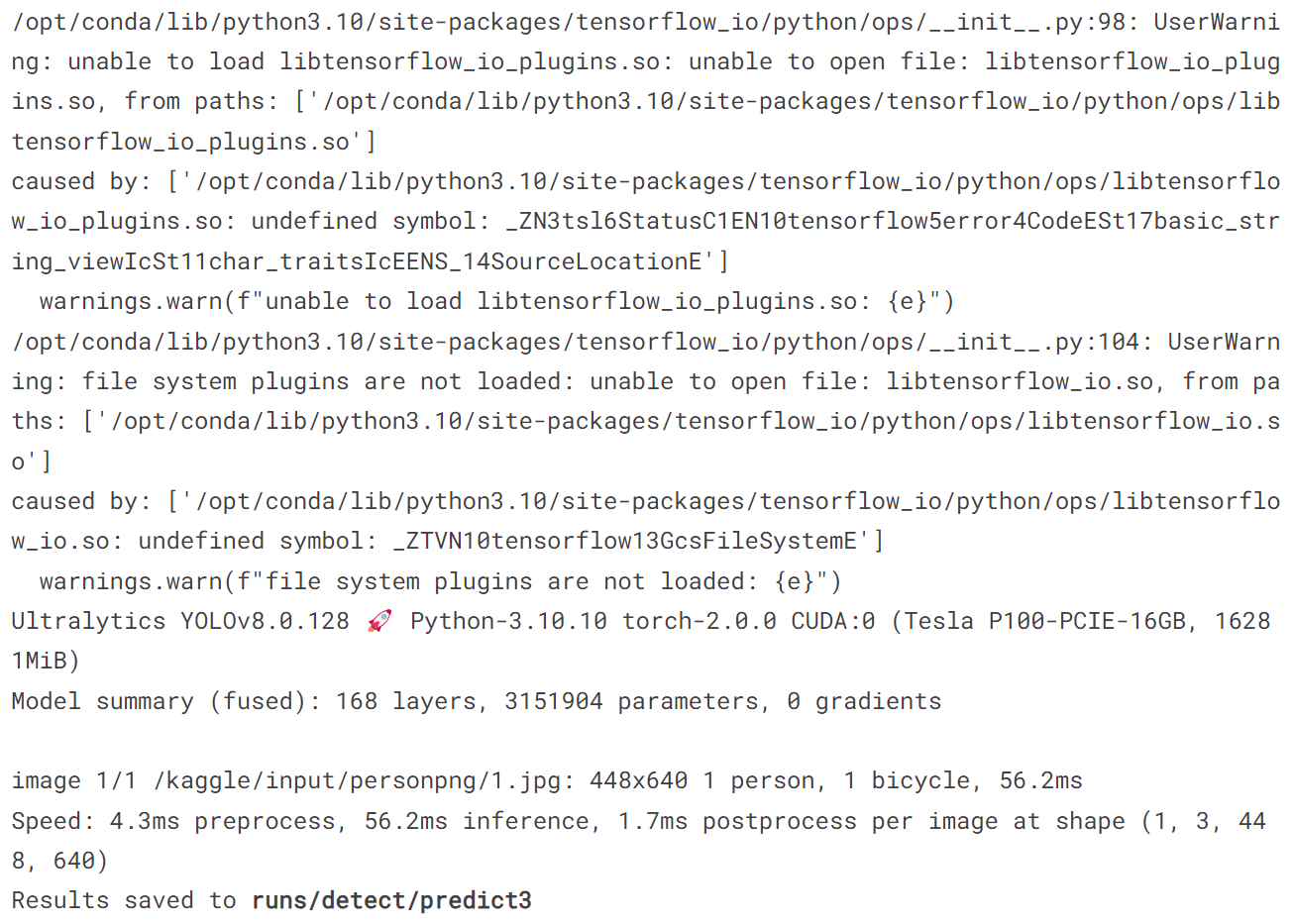

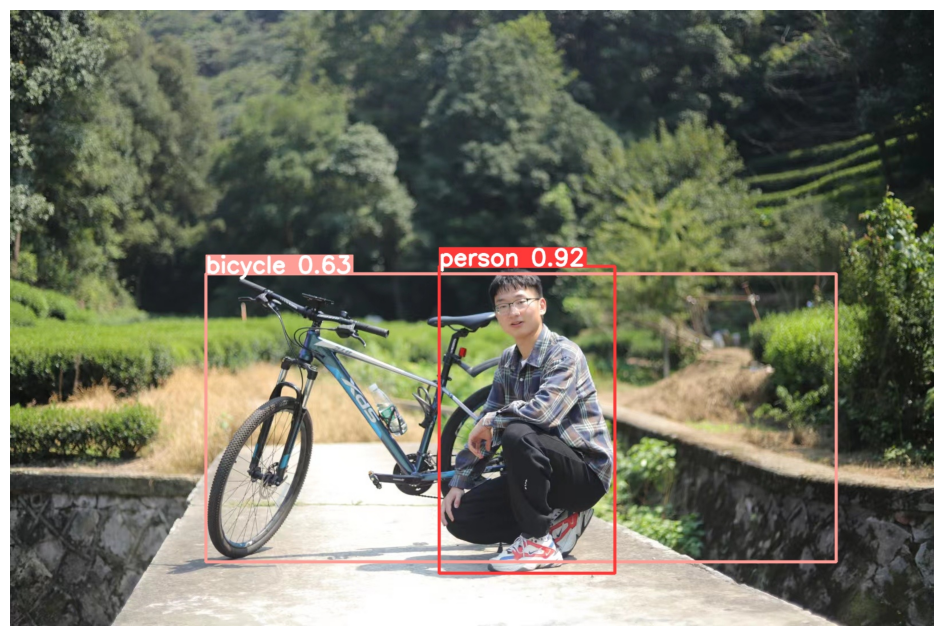

3.1 Using a detection model

!yolo predict model=yolov8n.pt source='/kaggle/input/personpng/1.jpg'

import matplotlib.pyplot as plt

from PIL import Image

image = Image.open('/kaggle/working/runs/detect/predict/1.jpg')

plt.figure(figsize=(12, 8))

plt.imshow(image)

plt.axis('off')

plt.show()

The result looks like this:

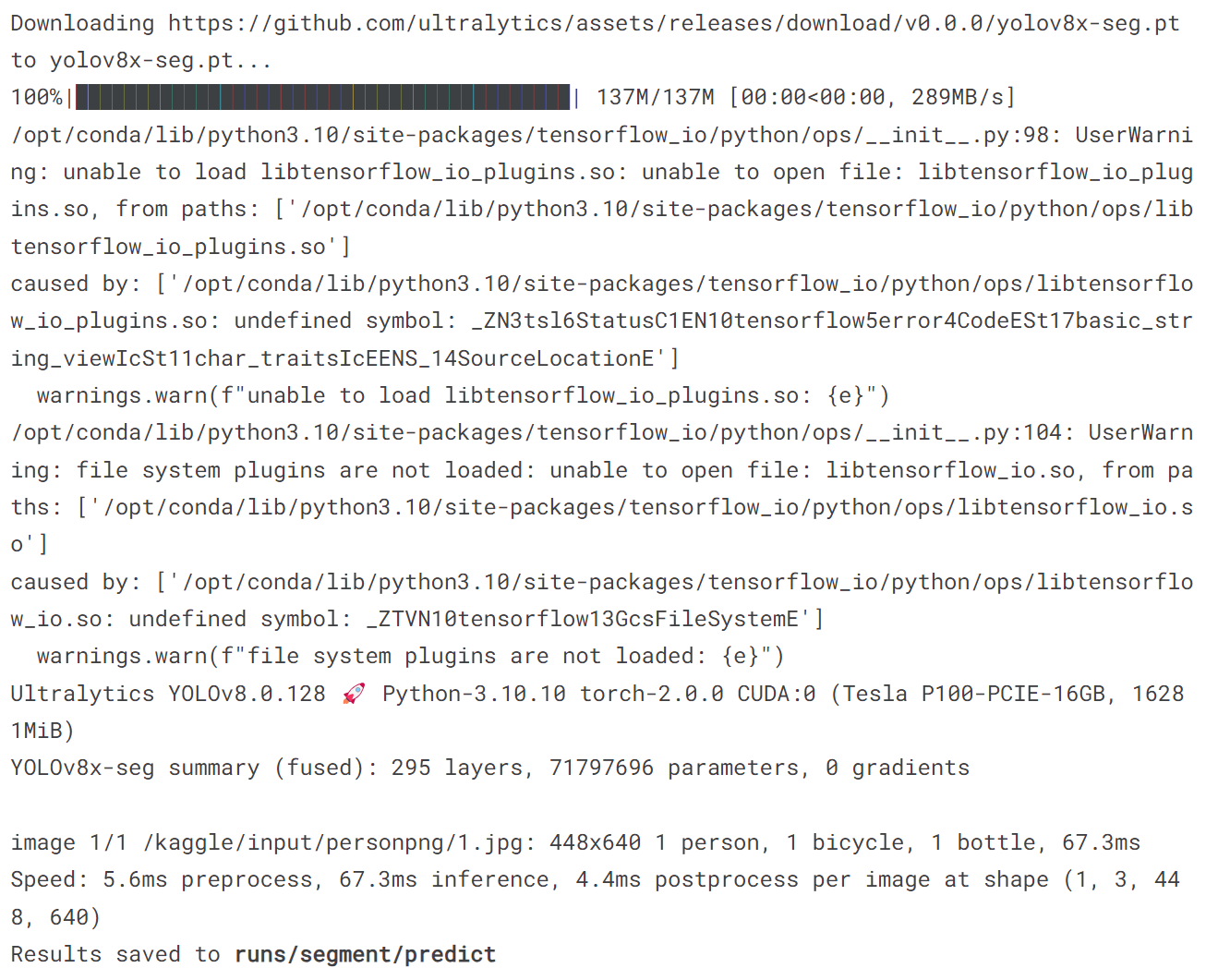

3.2 Using a segmentation model

!yolo task=segment mode=predict model=yolov8x-seg.pt source='/kaggle/input/personpng/1.jpg'

image = Image.open('/kaggle/working/runs/segment/predict/1.jpg')

plt.figure(figsize=(12, 8))

plt.imshow(image)

plt.axis('off')

plt.show()

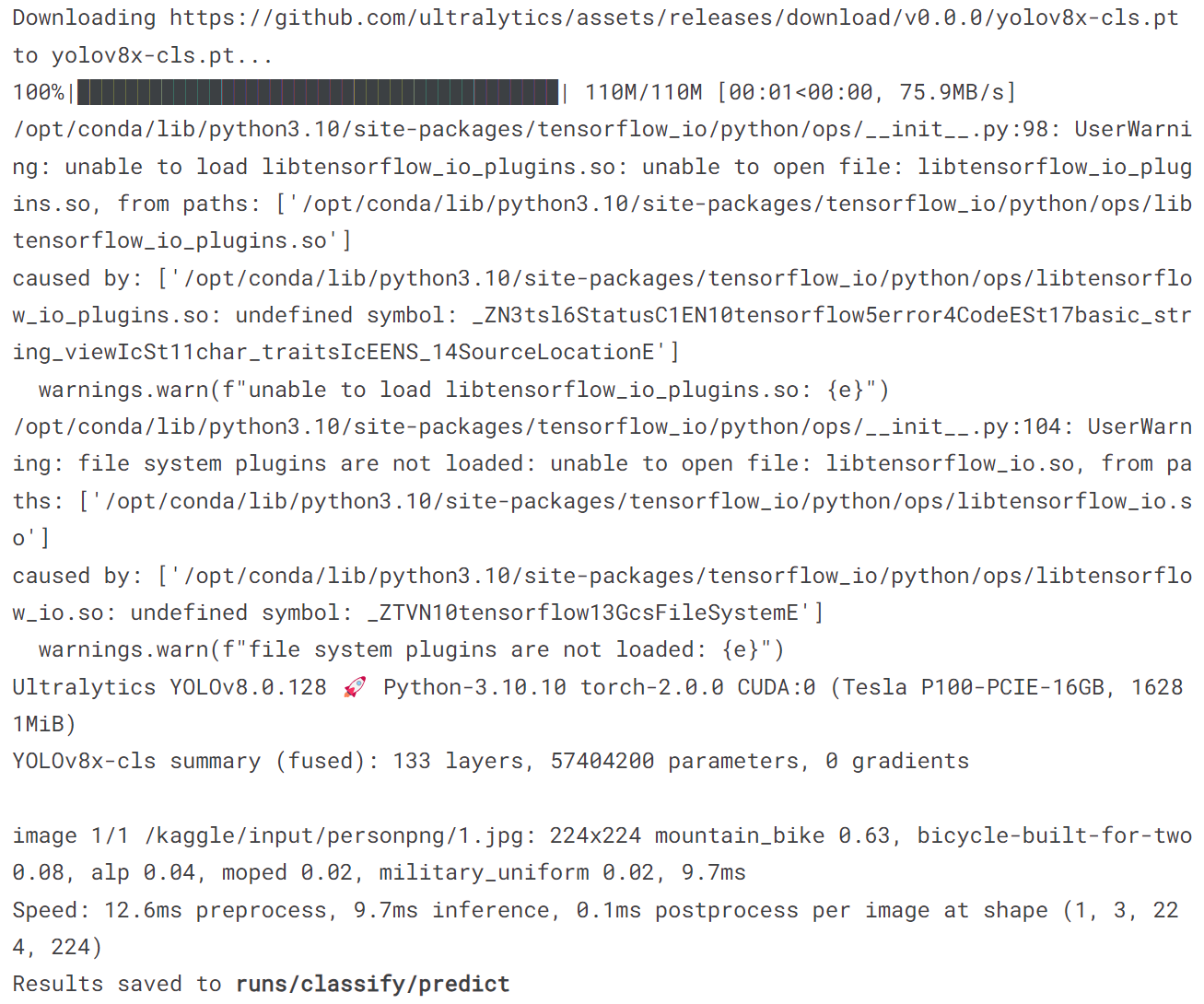

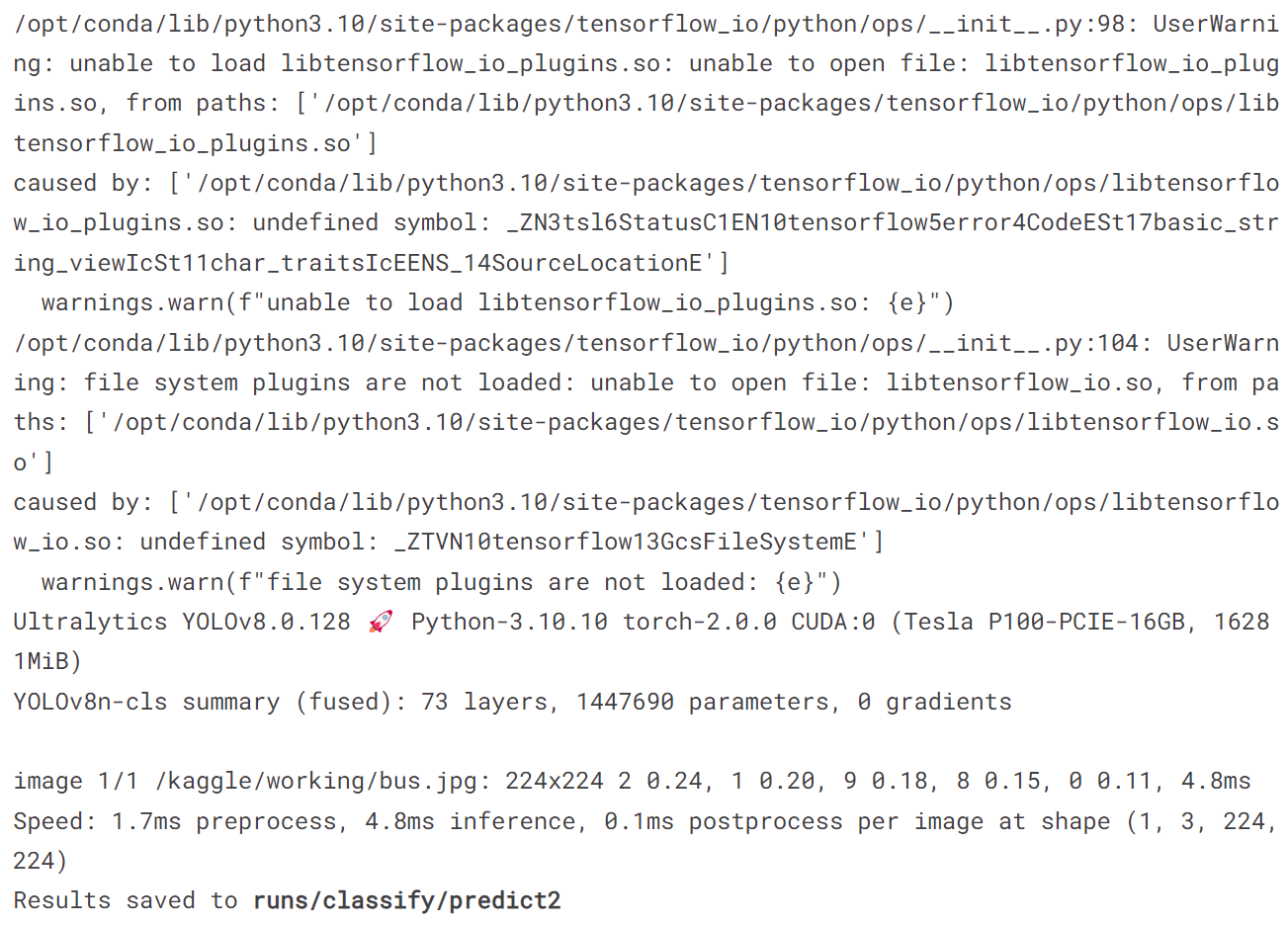

3.3 Using a classification model

!yolo task=classify mode=predict model=yolov8x-cls.pt source='/kaggle/input/personpng/1.jpg'

image = Image.open('/kaggle/working/runs/classify/predict/1.jpg')

plt.figure(figsize=(20, 10))

plt.imshow(image)

plt.axis('off')

plt.show()

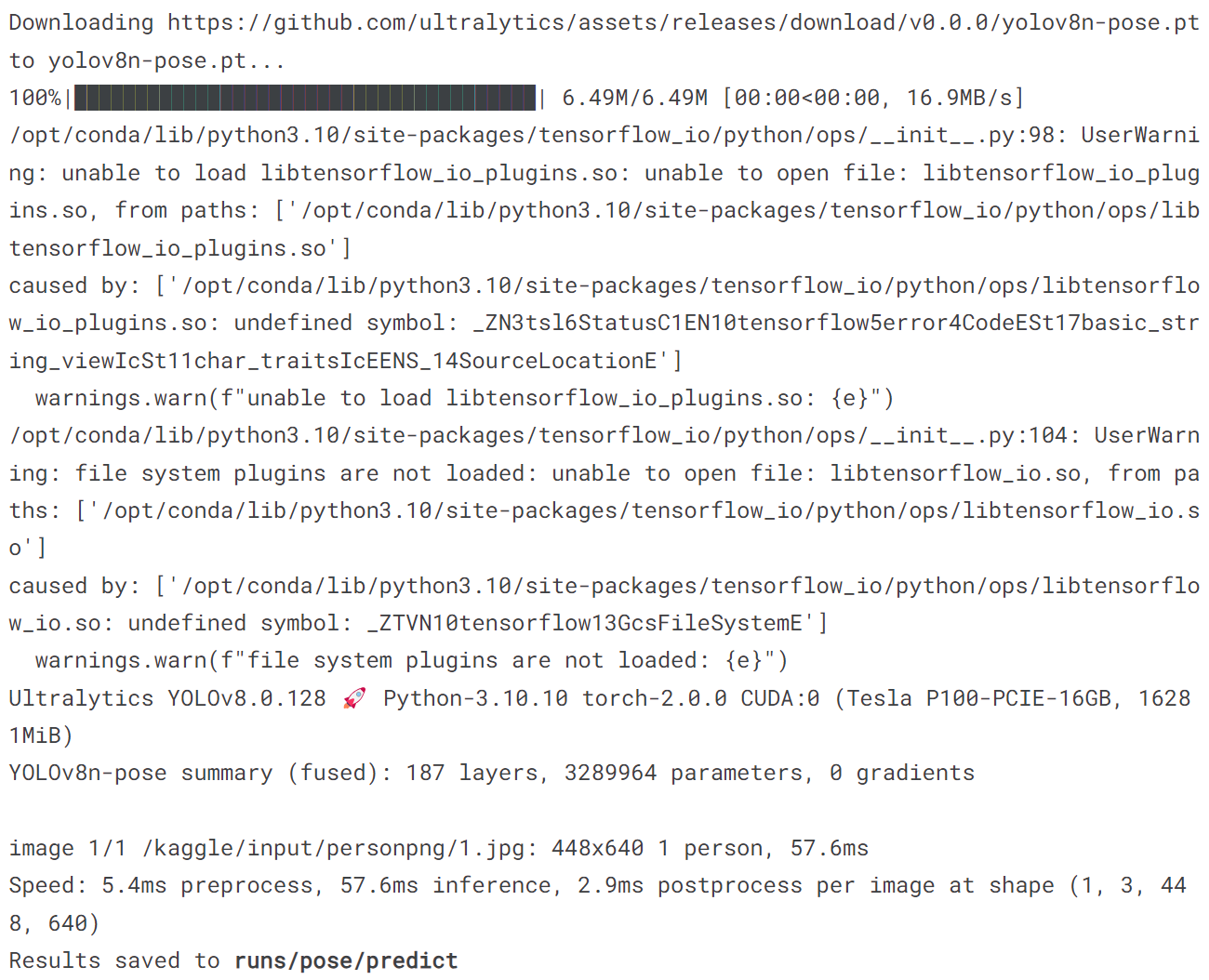

3.4 Using a pose detection model

!yolo task=pose mode=predict model=yolov8n-pose.pt source='/kaggle/input/personpng/1.jpg'

image = Image.open('/kaggle/working/runs/pose/predict/1.jpg')

plt.figure(figsize=(12, 8))

plt.imshow(image)

plt.axis('off')

plt.show()

4. COCO val Dataset

The archive is about 780 MB and contains 5,000 images.

import torch

torch.hub.download_url_to_file('https://ultralytics.com/assets/coco2017val.zip', 'tmp.zip')

!unzip -q tmp.zip -d datasets && rm tmp.zip
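The unzip line above relies on a notebook shell. Where no shell is available, the same extract-then-delete step could be done with Python's standard zipfile module. This is a minimal sketch under that assumption, and the function name `extract_zip` is hypothetical.

```python
import zipfile
from pathlib import Path


def extract_zip(zip_path, dest_dir, delete_archive=False):
    """Extract `zip_path` into `dest_dir` and return the sorted member names."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(dest)
        names = sorted(archive.namelist())
    if delete_archive:
        Path(zip_path).unlink()  # mirrors the `rm tmp.zip` part of the shell command
    return names


# Usage matching the shell command above (run only after tmp.zip has been downloaded):
# extract_zip('tmp.zip', 'datasets', delete_archive=True)
```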

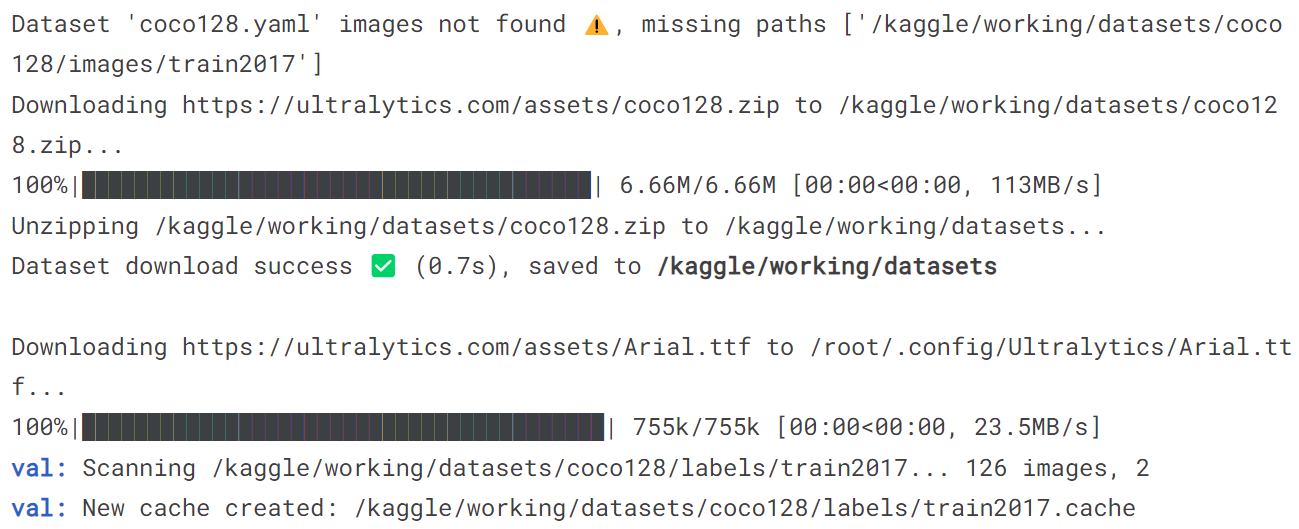

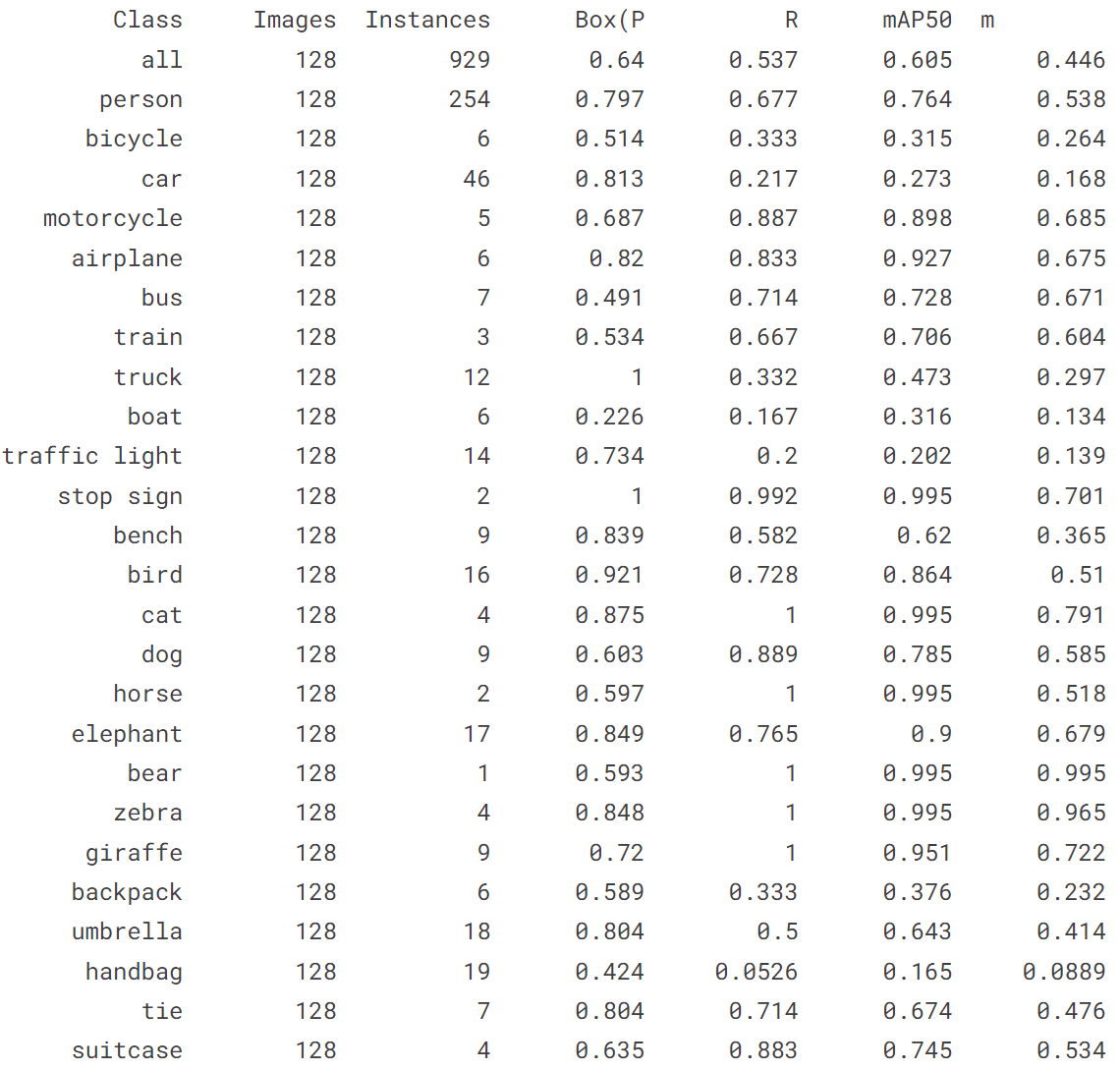

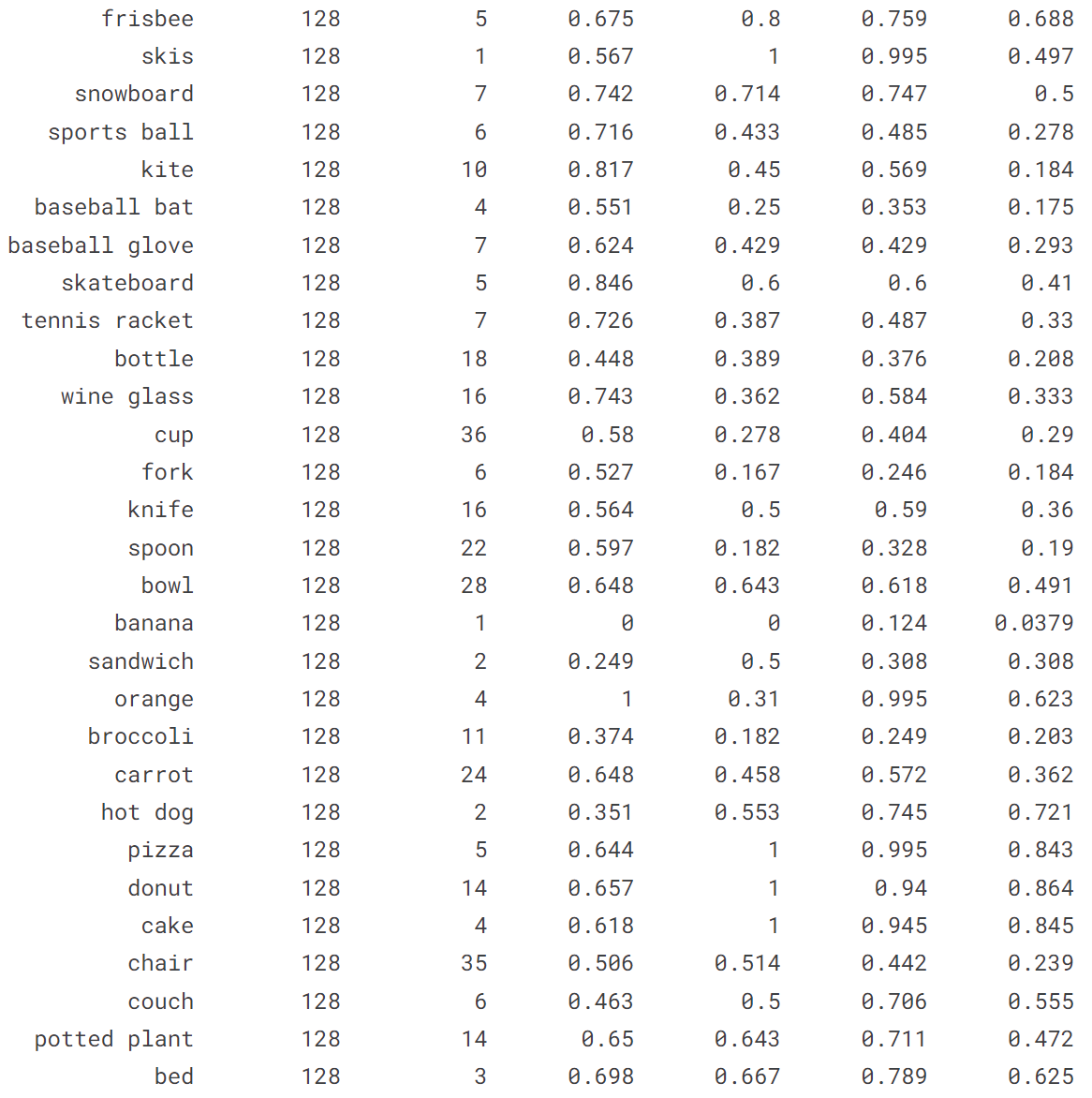

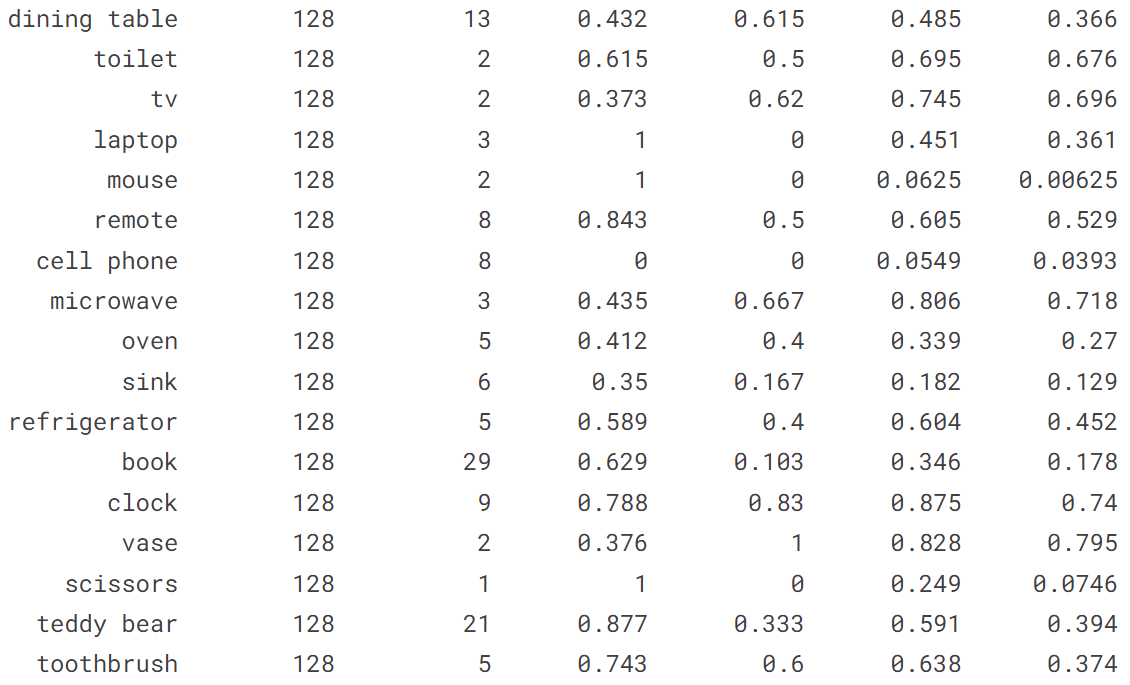

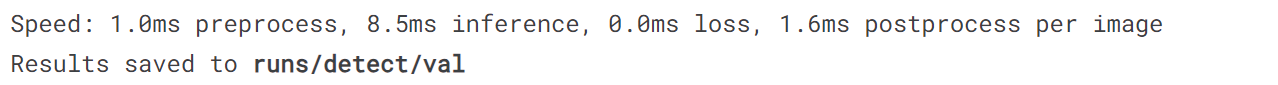

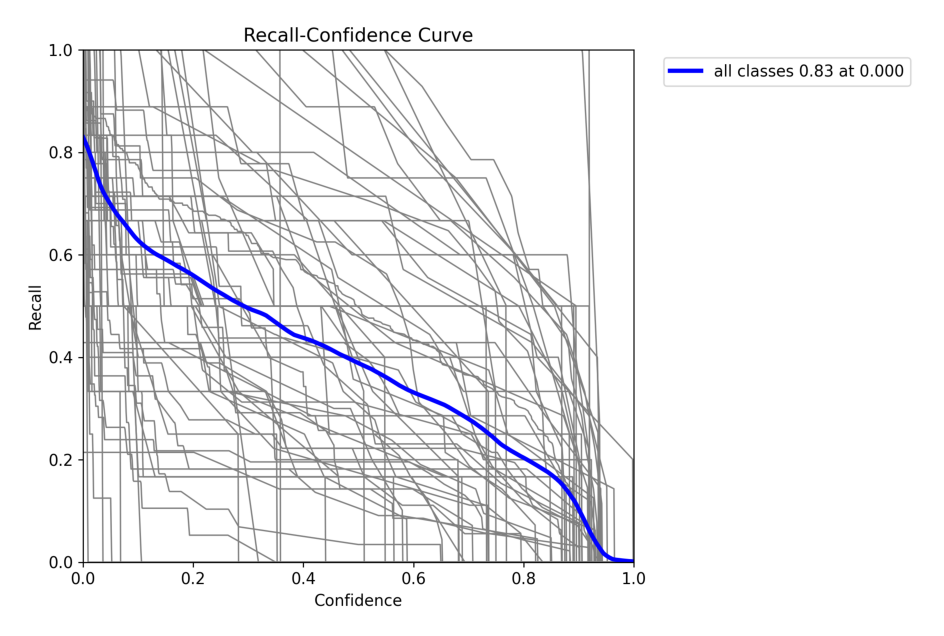

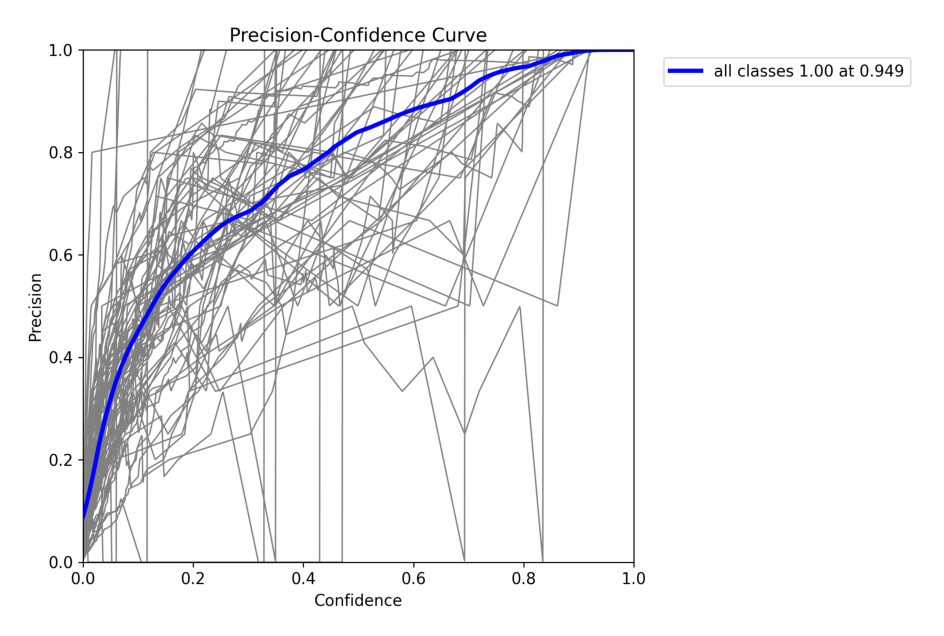

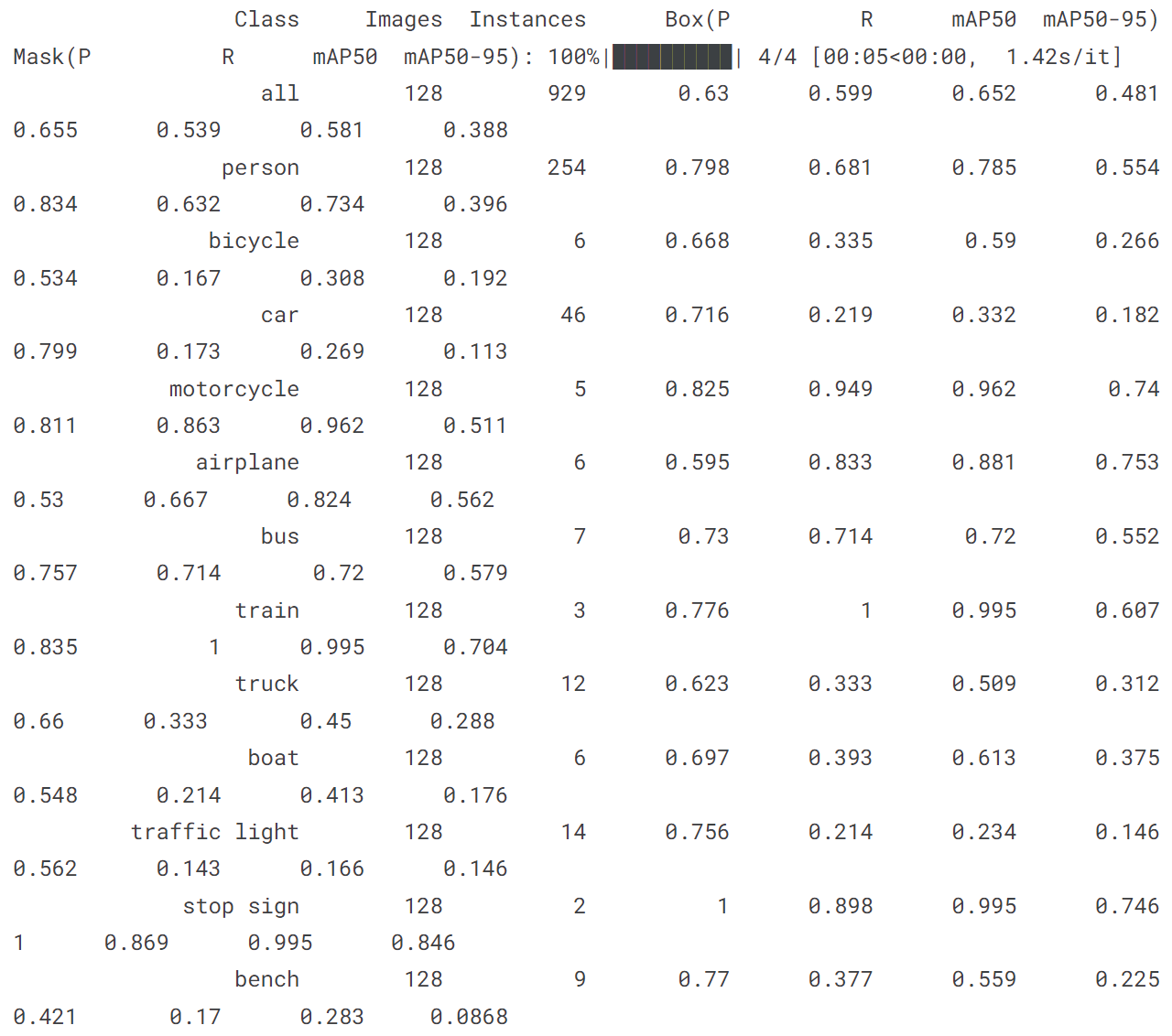

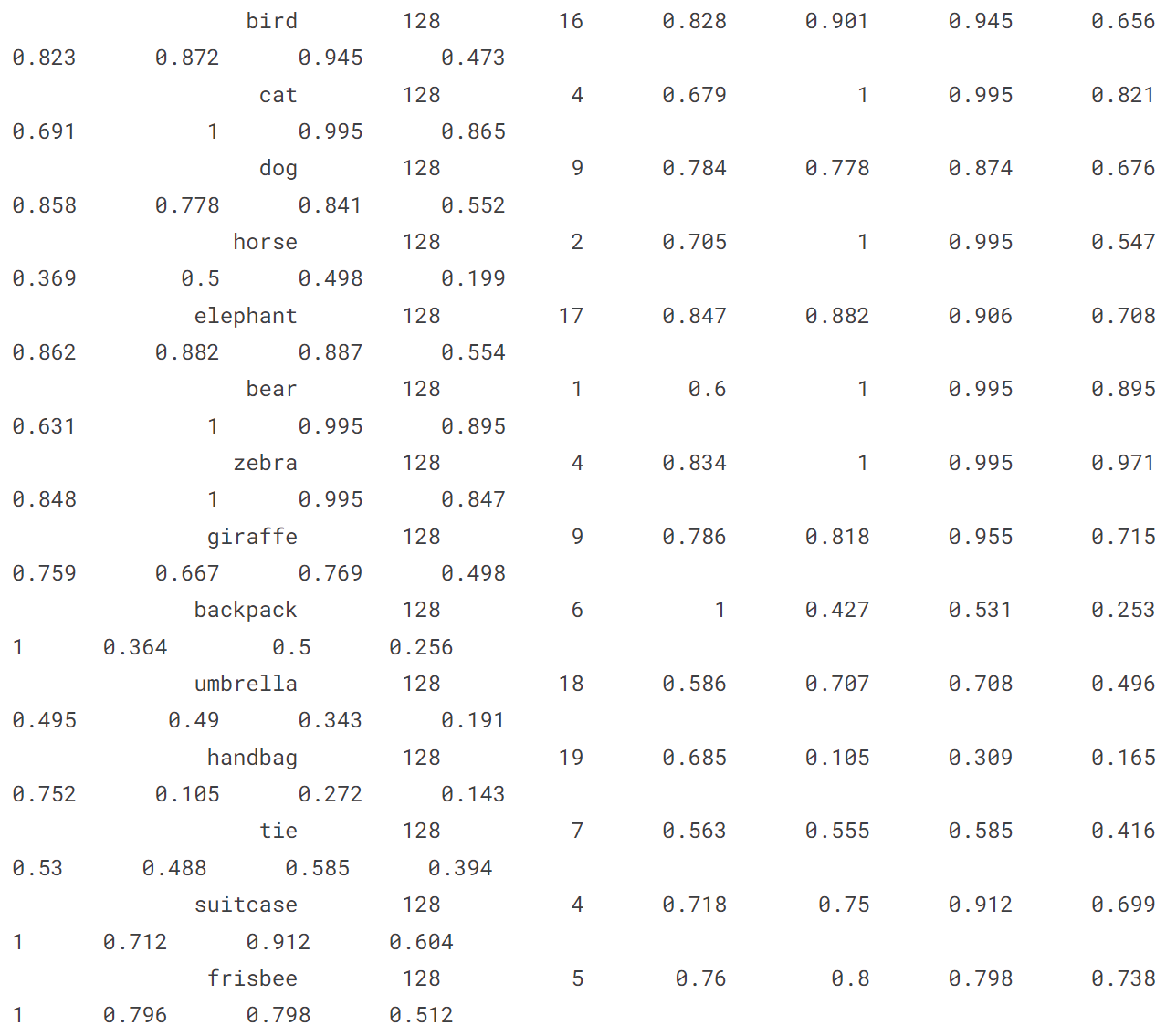

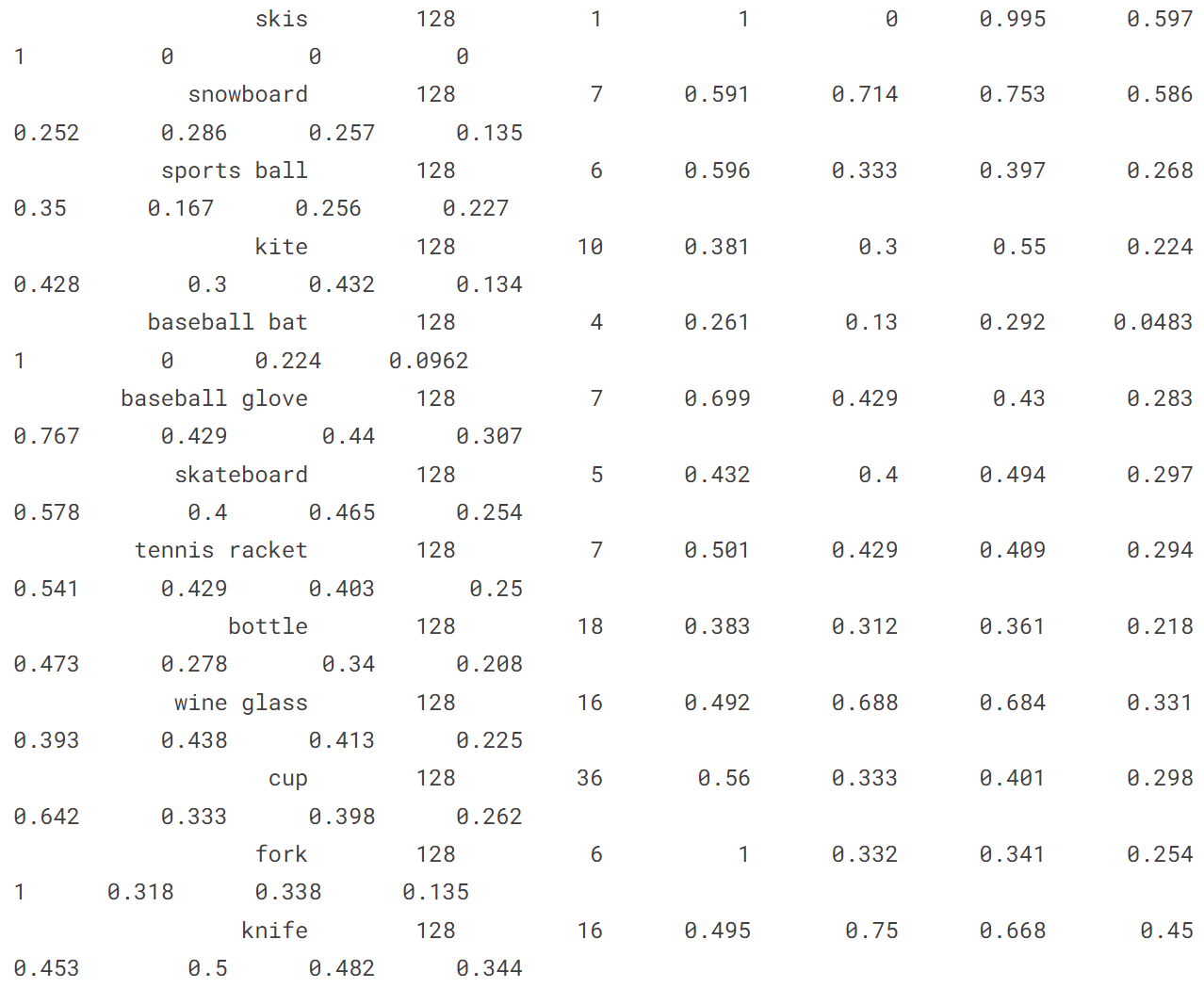

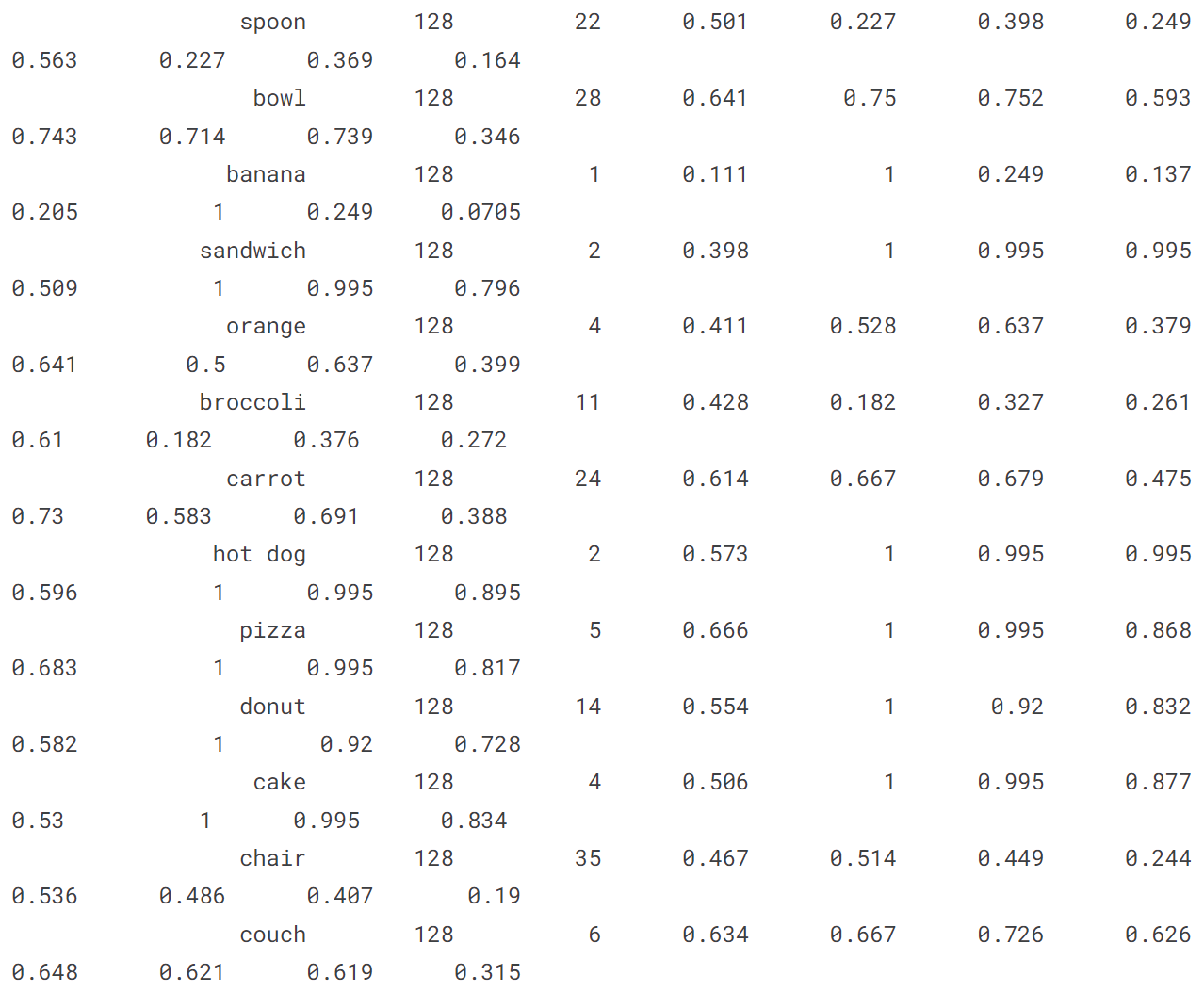

4.1 Validating YOLOv8n on COCO128 val

!yolo val model=yolov8n.pt data=coco128.yaml

import os

folder_path = '/kaggle/working/runs/detect/val'

image_extensions = ['.jpg', '.jpeg', '.png']  # supported image file extensions

image_paths = []

# Collect every image file that the validation run wrote to its output folder
for file in os.listdir(folder_path):
    if any(file.endswith(extension) for extension in image_extensions):
        image_paths.append(os.path.join(folder_path, file))

# Display each collected image in turn
for image_path in image_paths:
    image = plt.imread(image_path)
    plt.figure(figsize=(12, 8))
    plt.imshow(image)
    plt.axis('off')
    plt.show()
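The file-collection loop above can also be written with pathlib. The sketch below is one possible variant, not the article's original code: it sorts the result so images are shown in a stable order, skips directories, and matches extensions case-insensitively (so "1.JPG" is found as well). The name `list_images` is hypothetical.

```python
from pathlib import Path

IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def list_images(folder):
    """Return the image files directly inside `folder`, sorted by path."""
    return sorted(
        path
        for path in Path(folder).iterdir()
        if path.is_file() and path.suffix.lower() in IMAGE_EXTENSIONS
    )


# Example (assumes the validation run above has produced this folder):
# for image_path in list_images('/kaggle/working/runs/detect/val'):
#     print(image_path.name)
```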

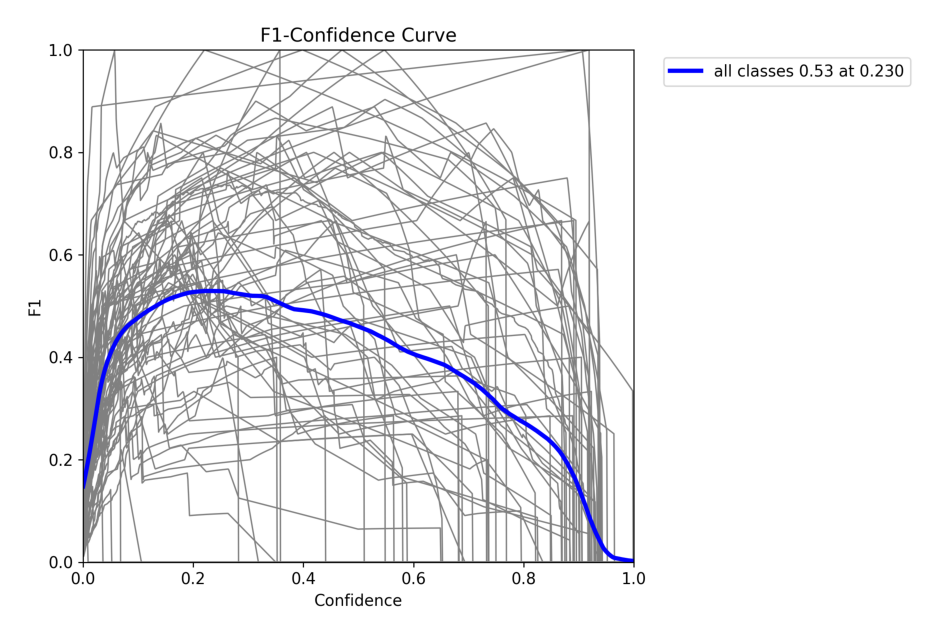

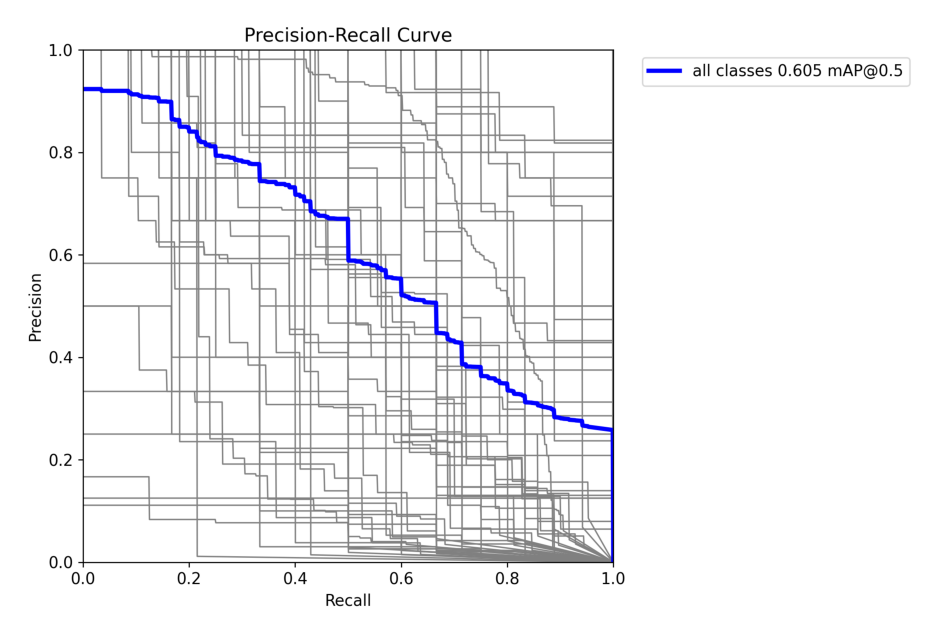

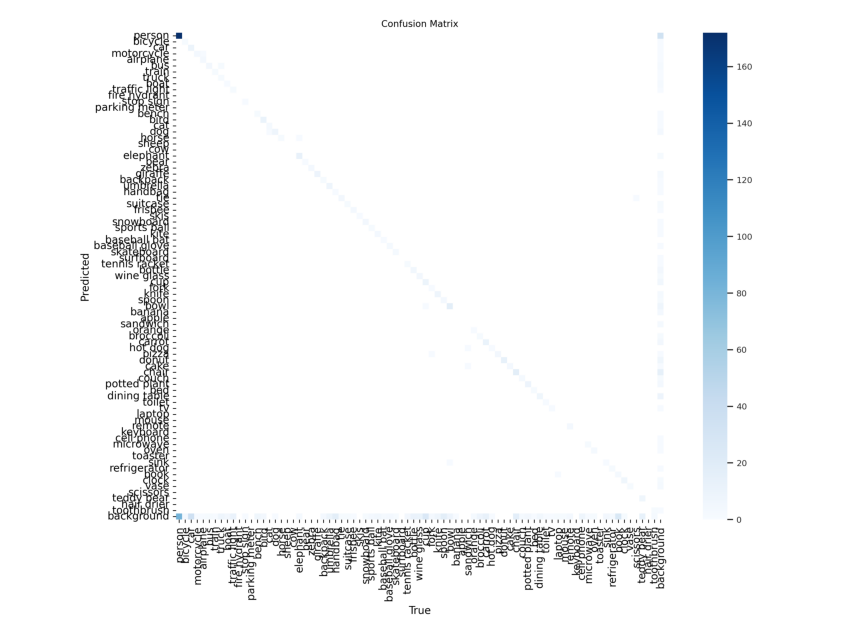

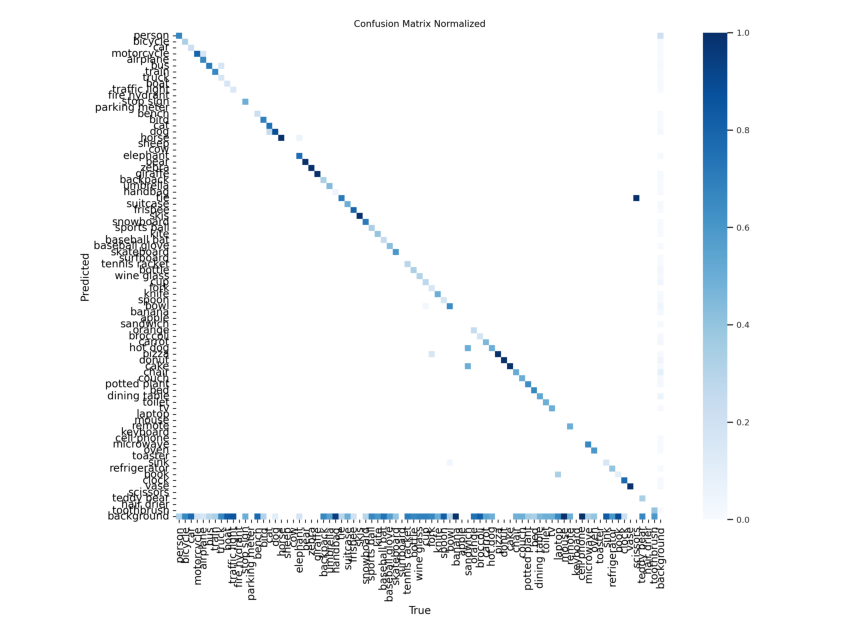

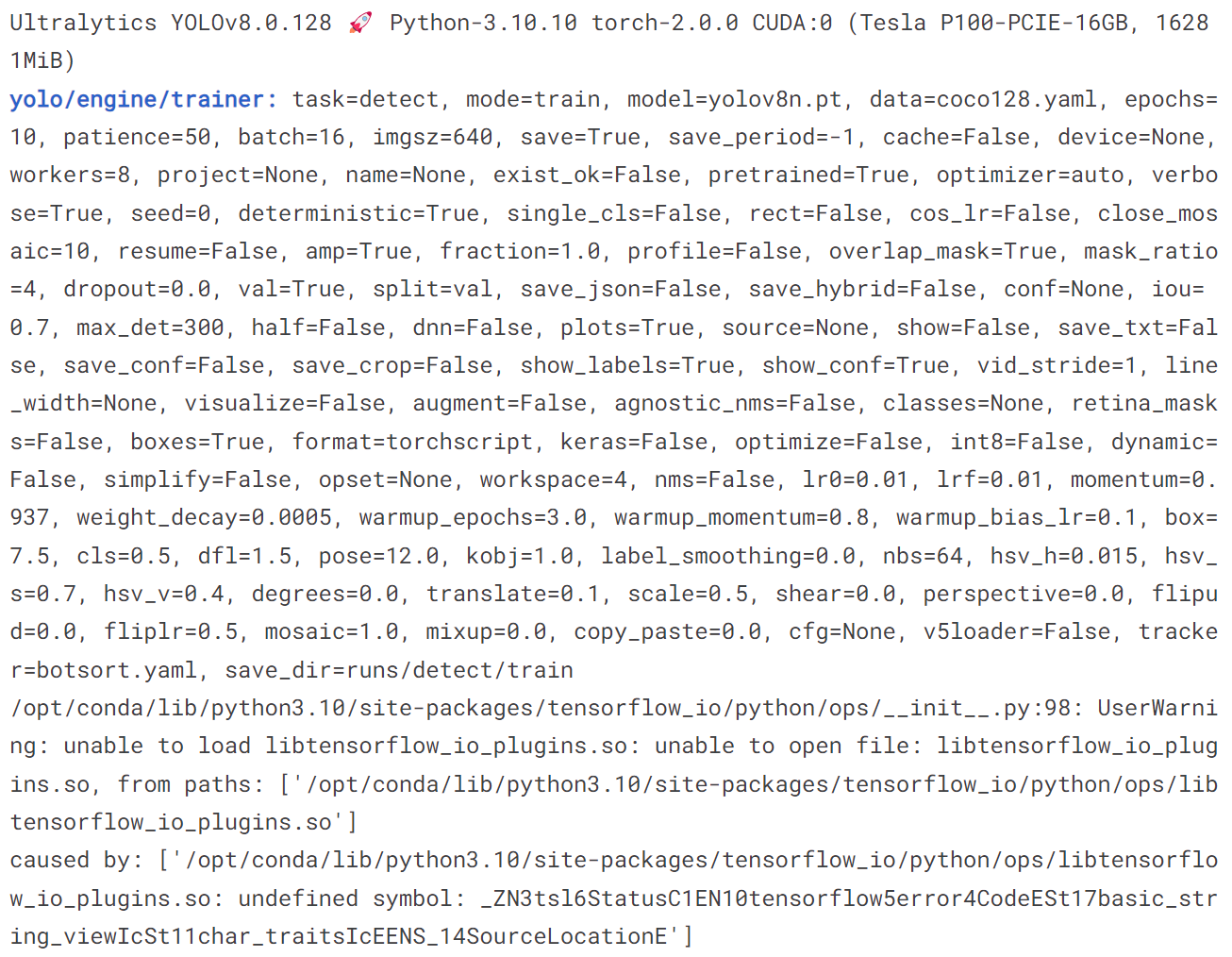

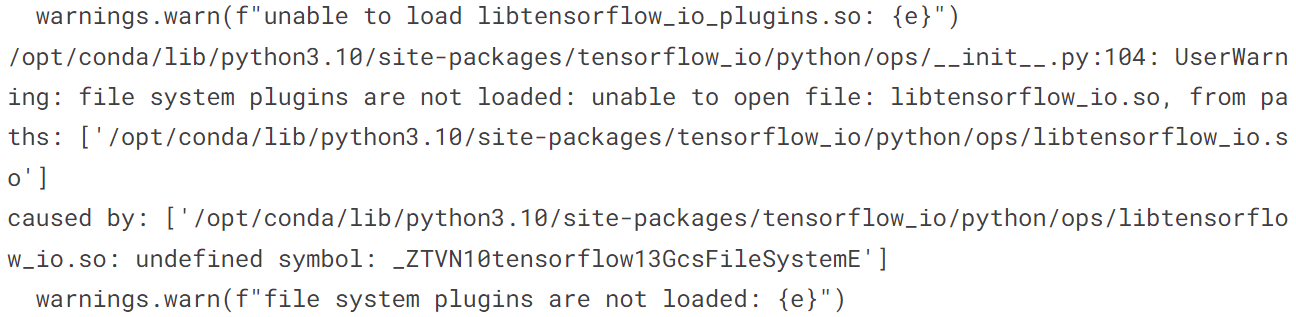

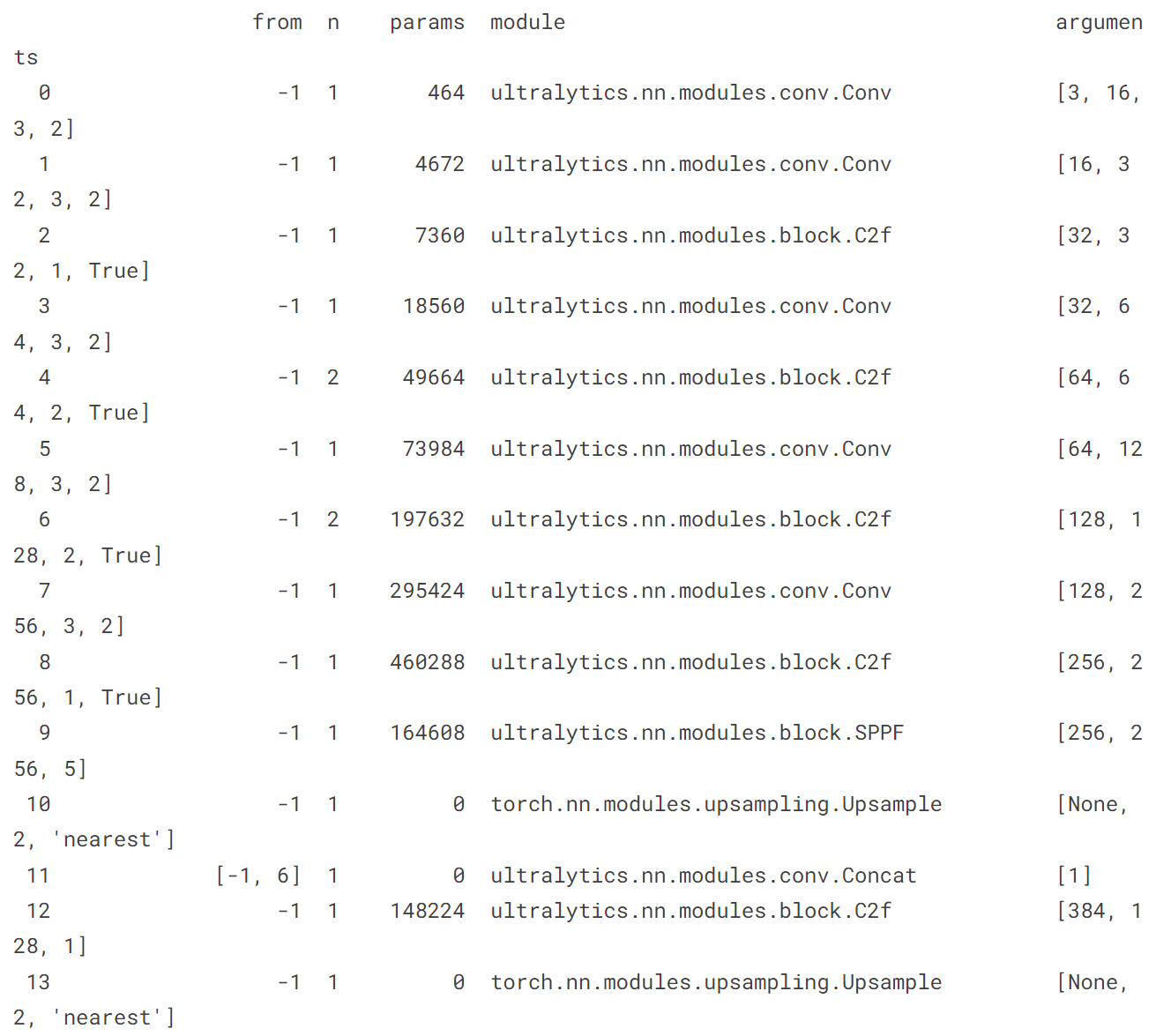

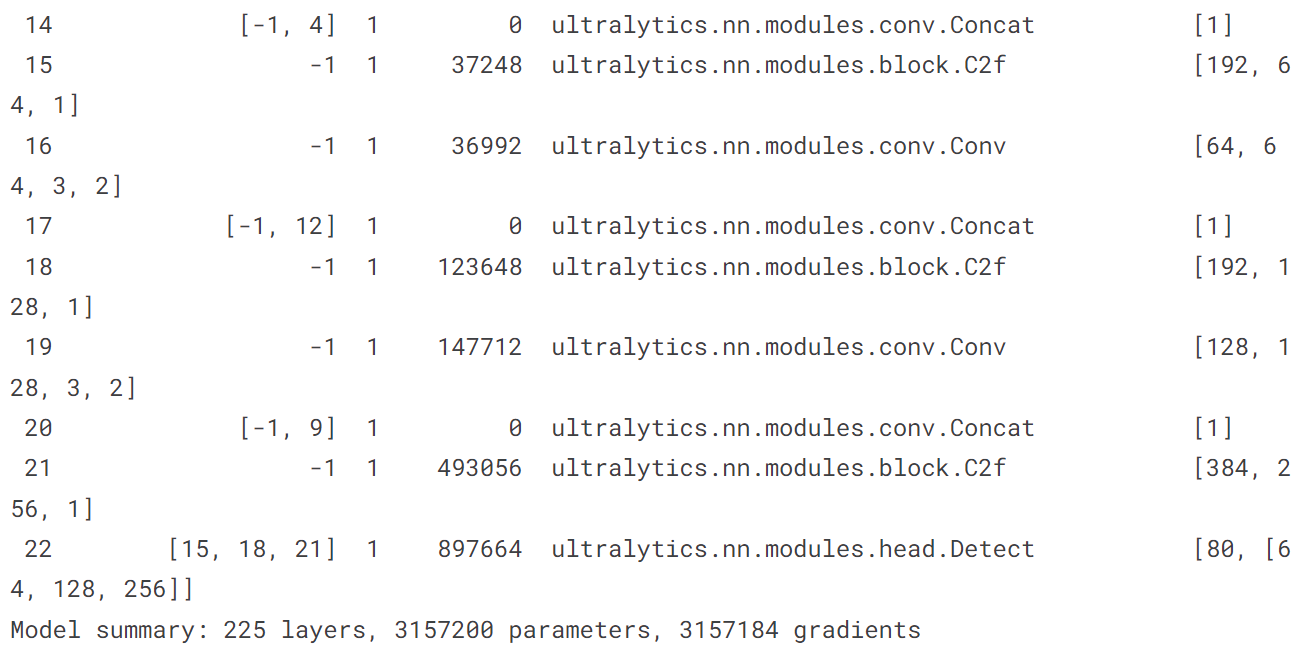

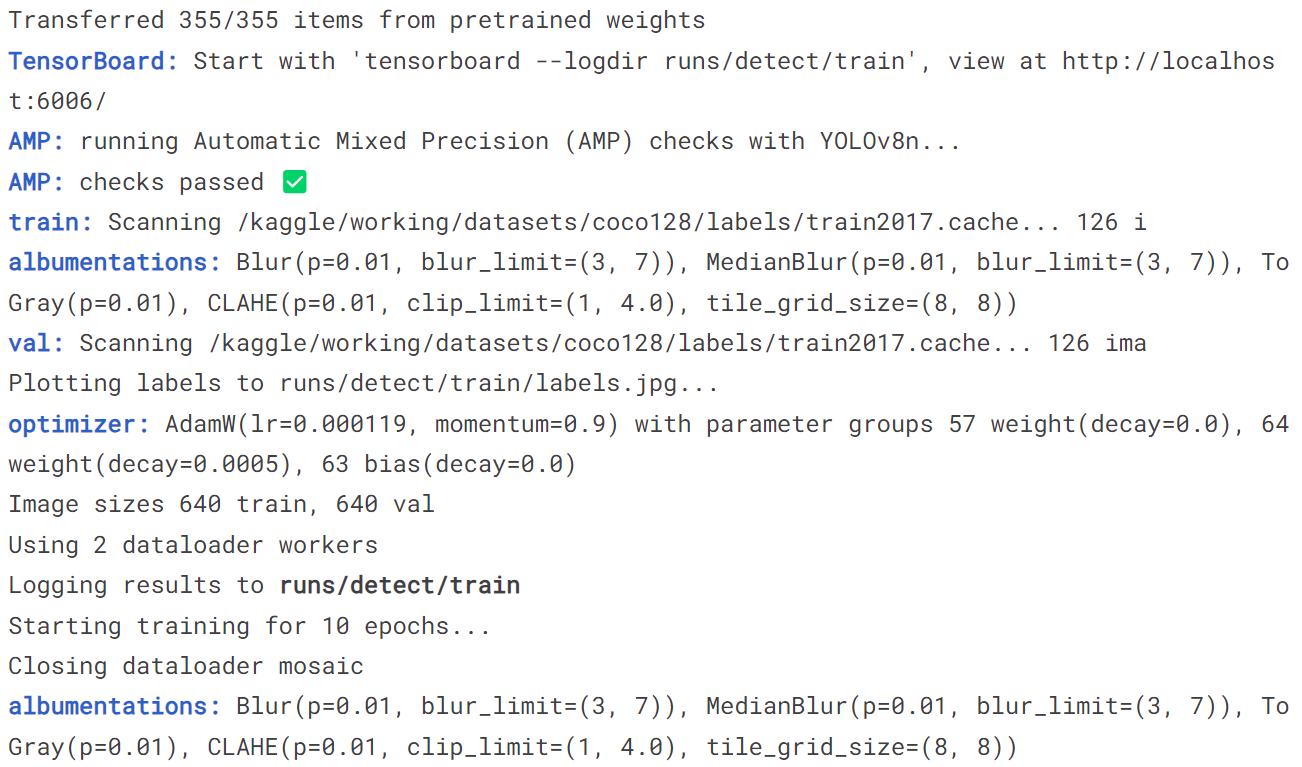

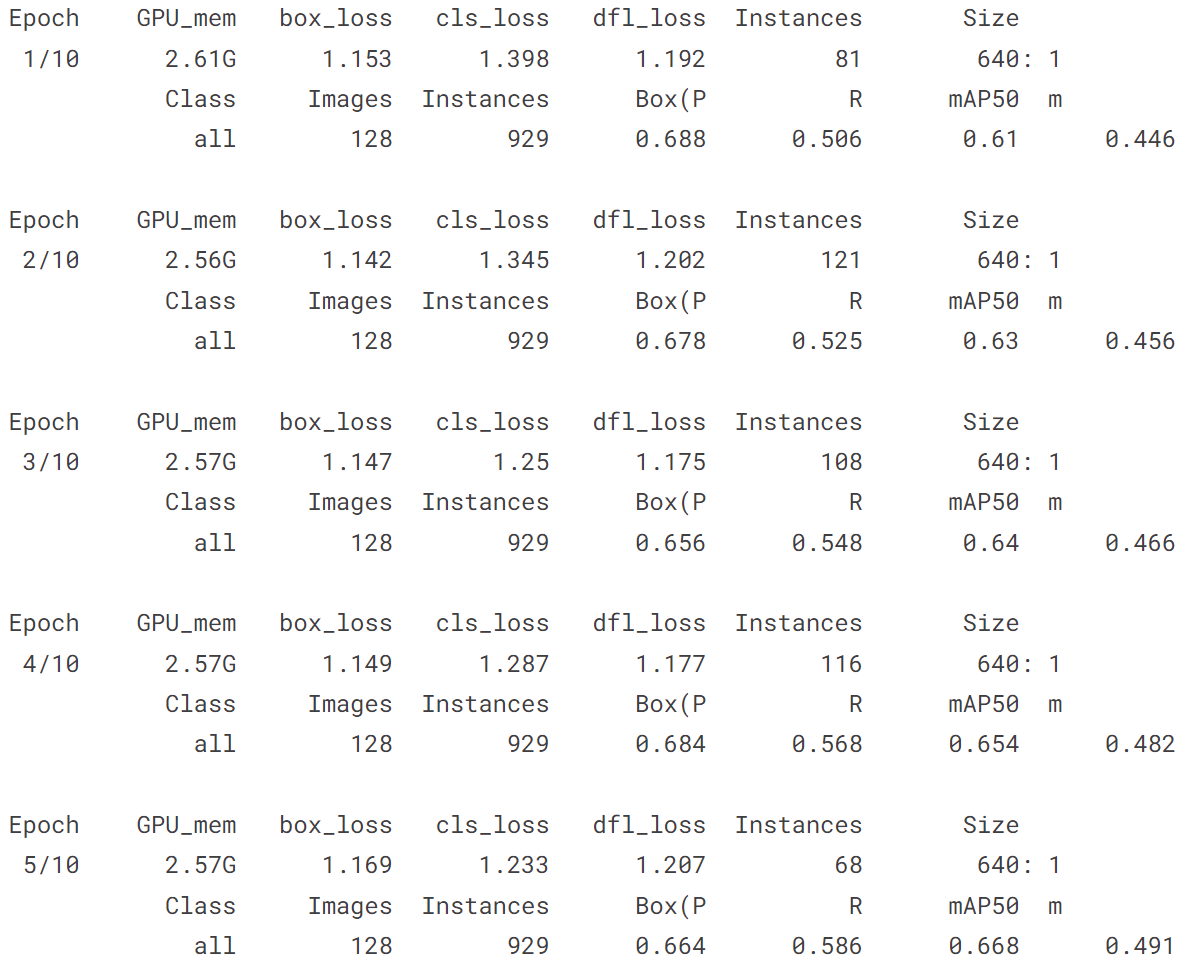

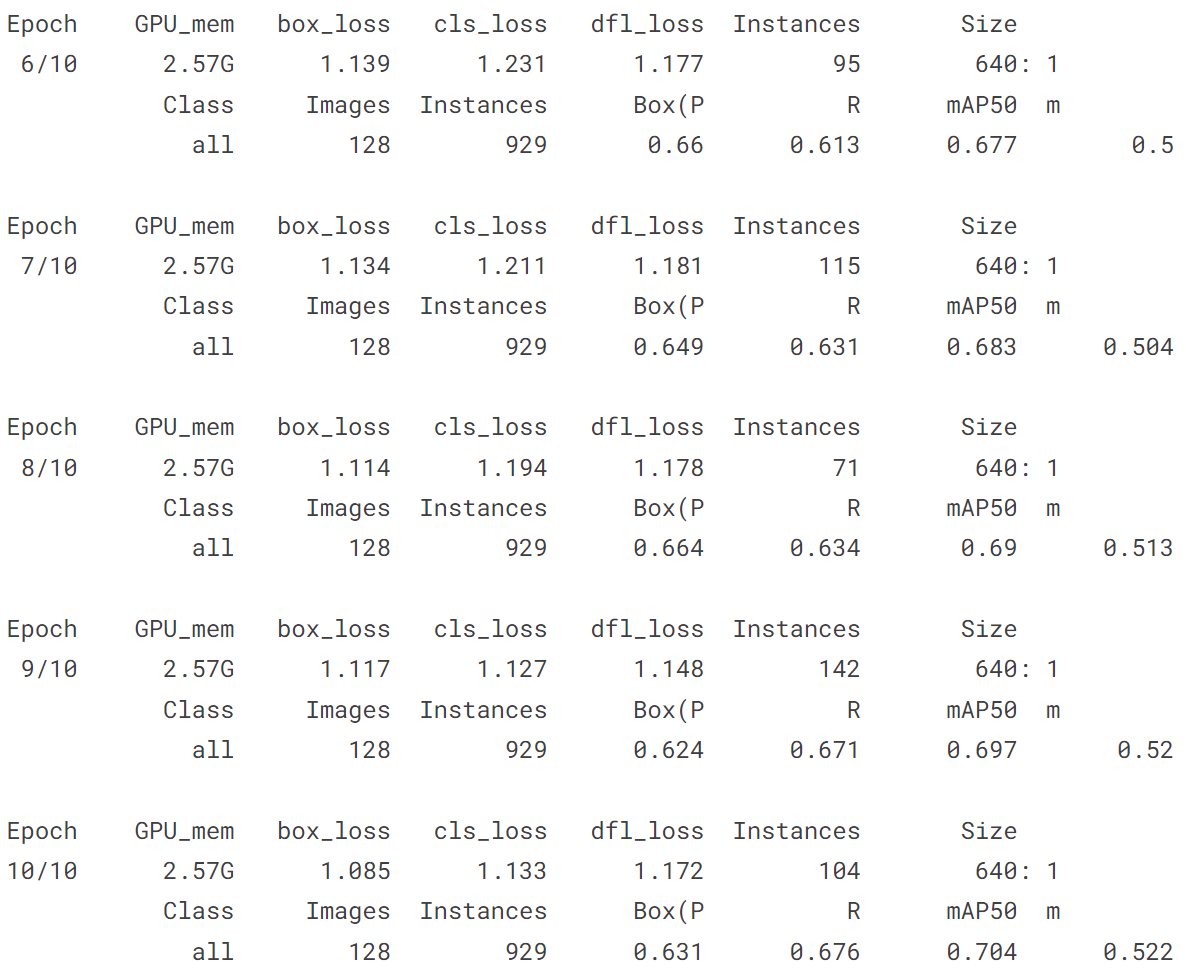

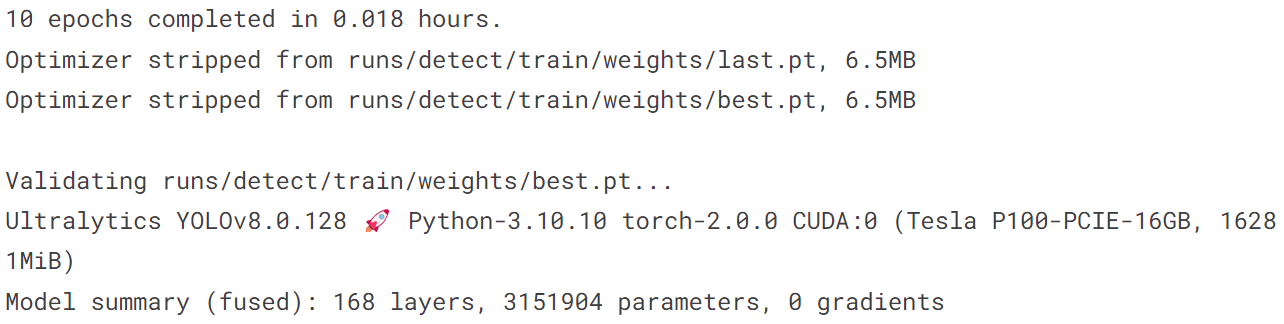

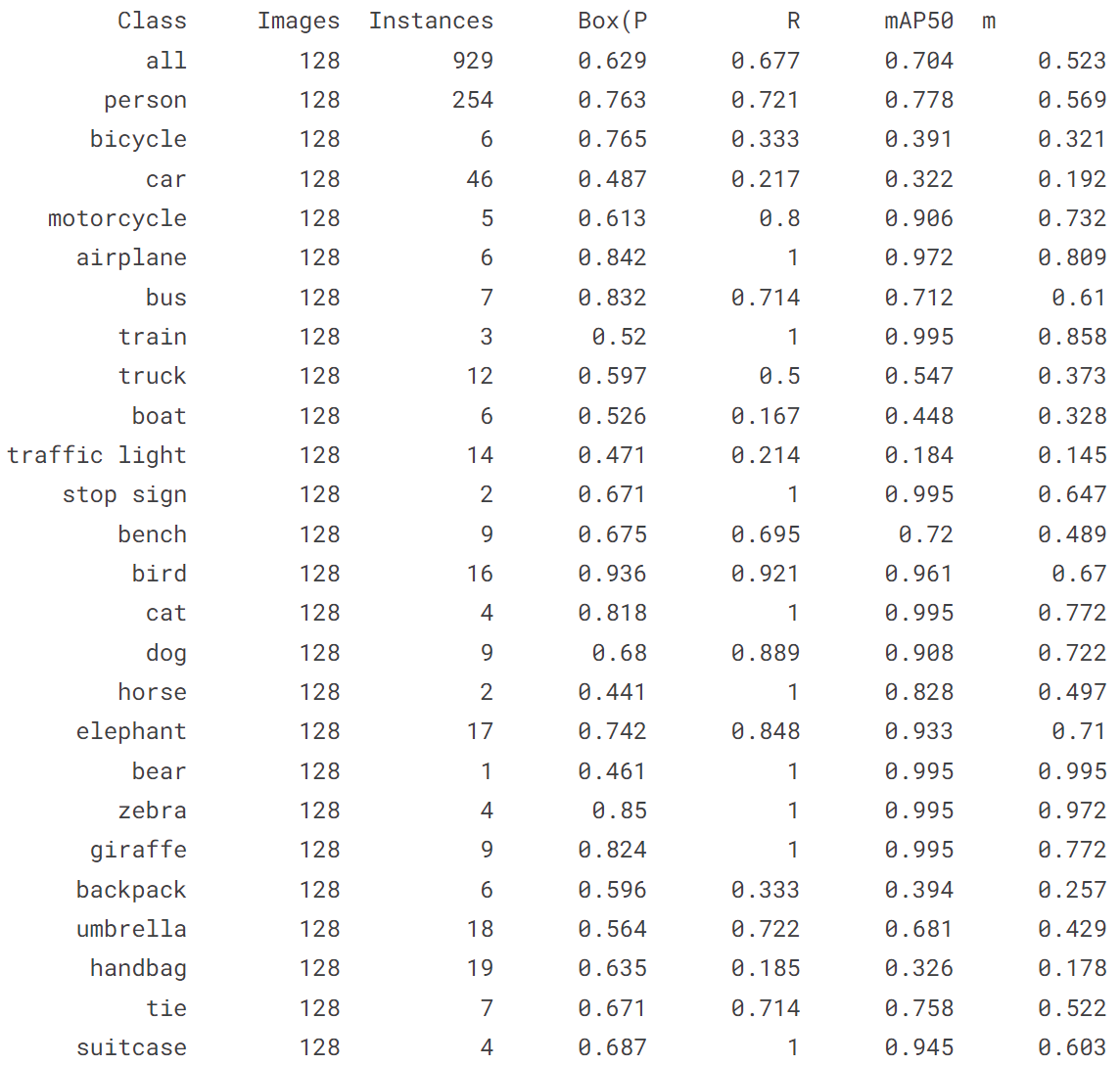

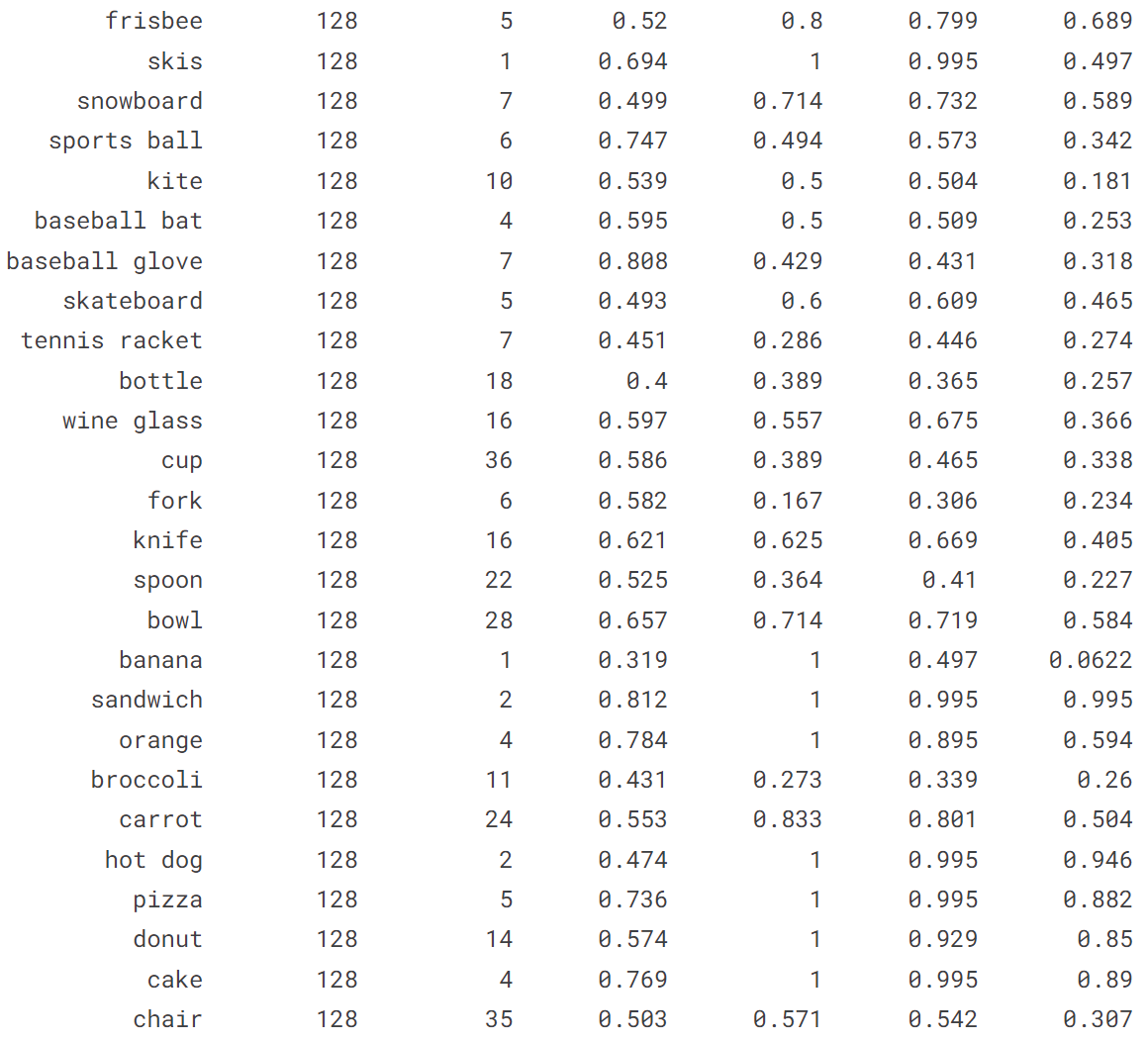

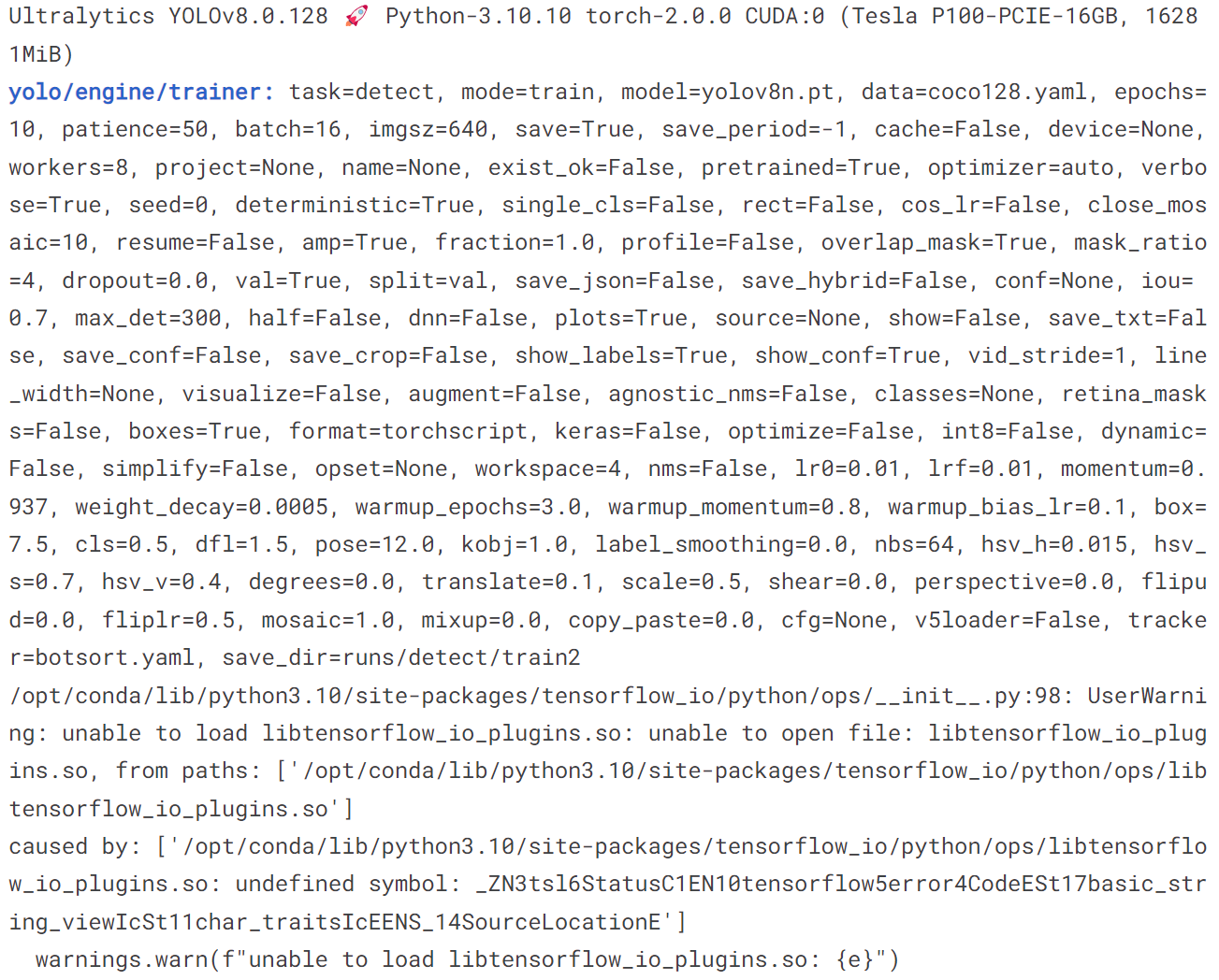

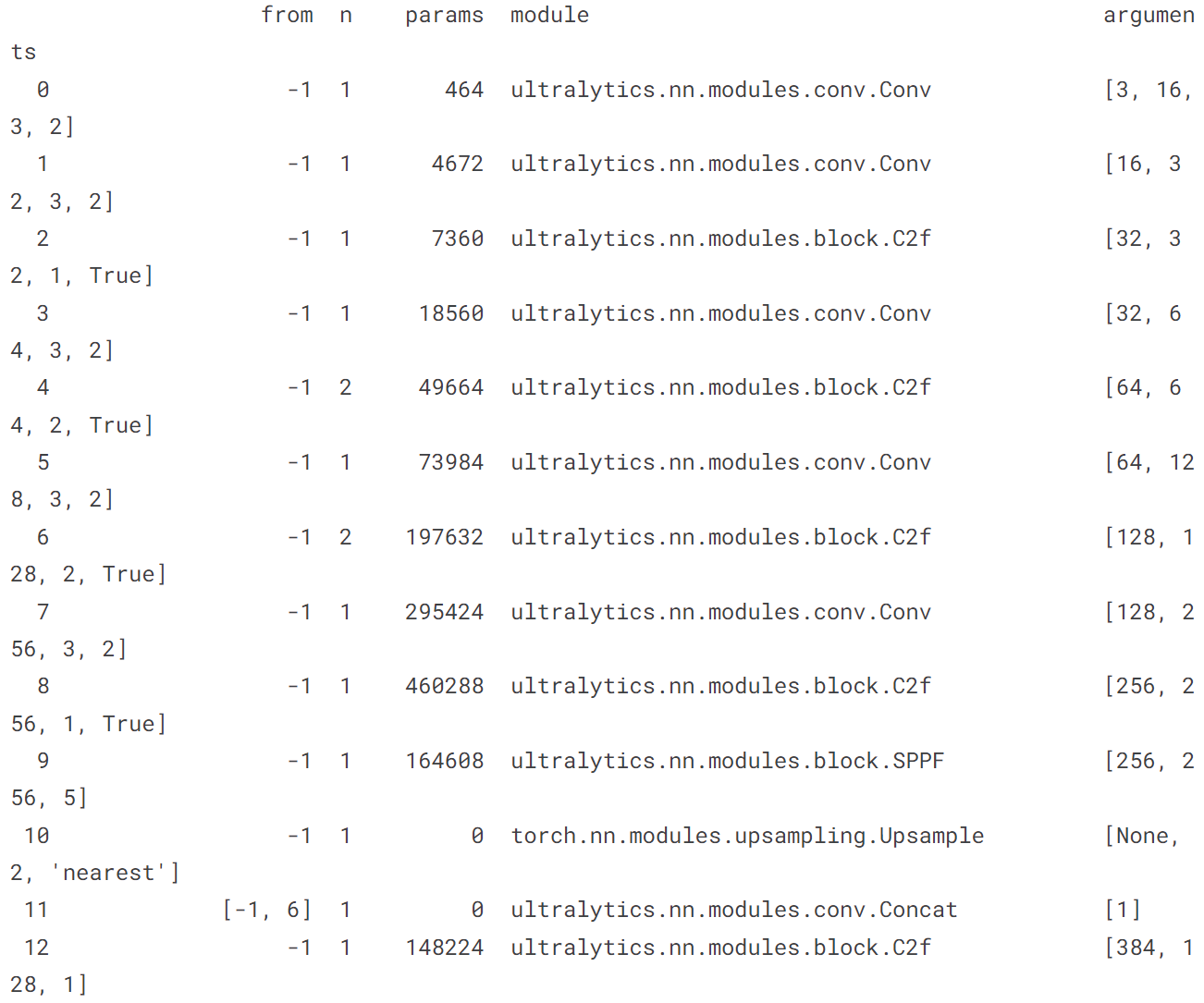

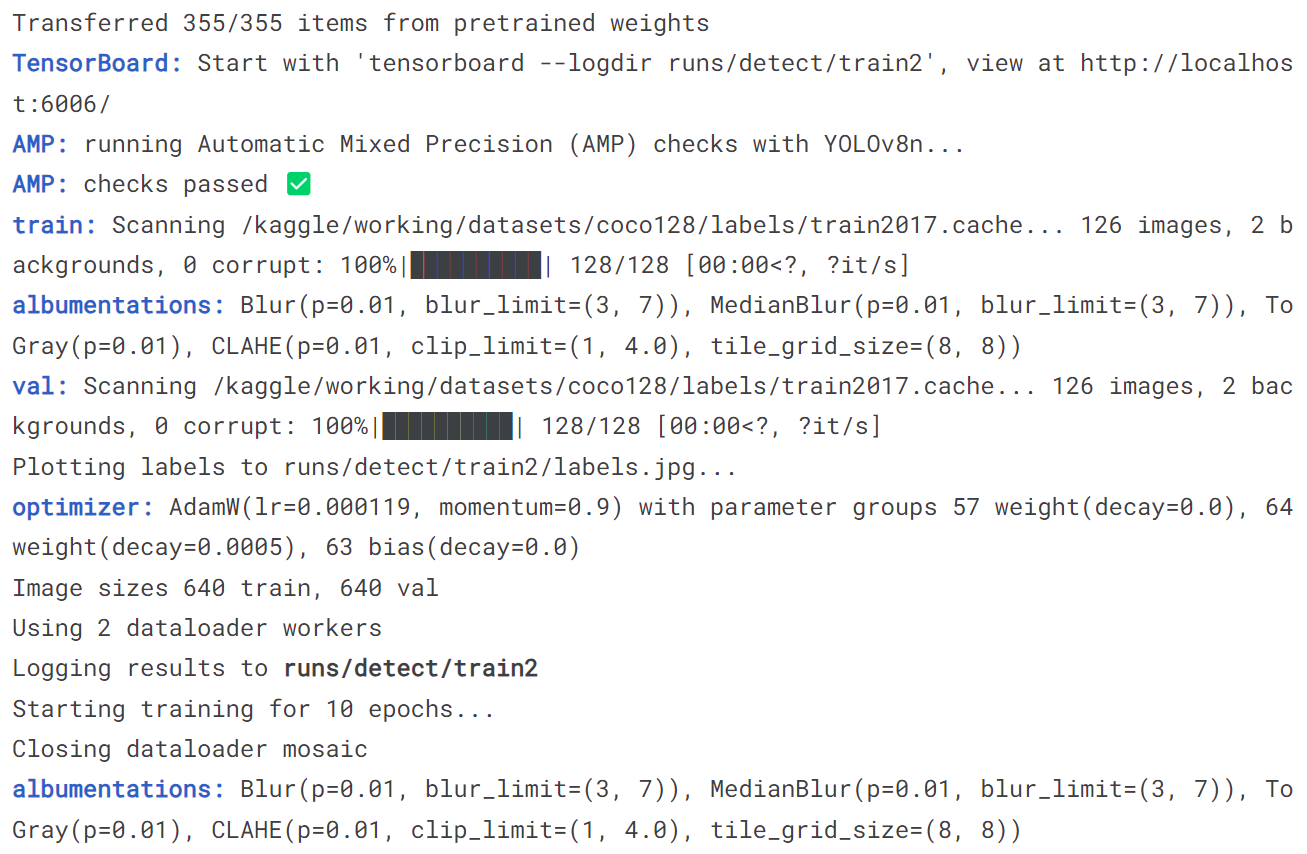

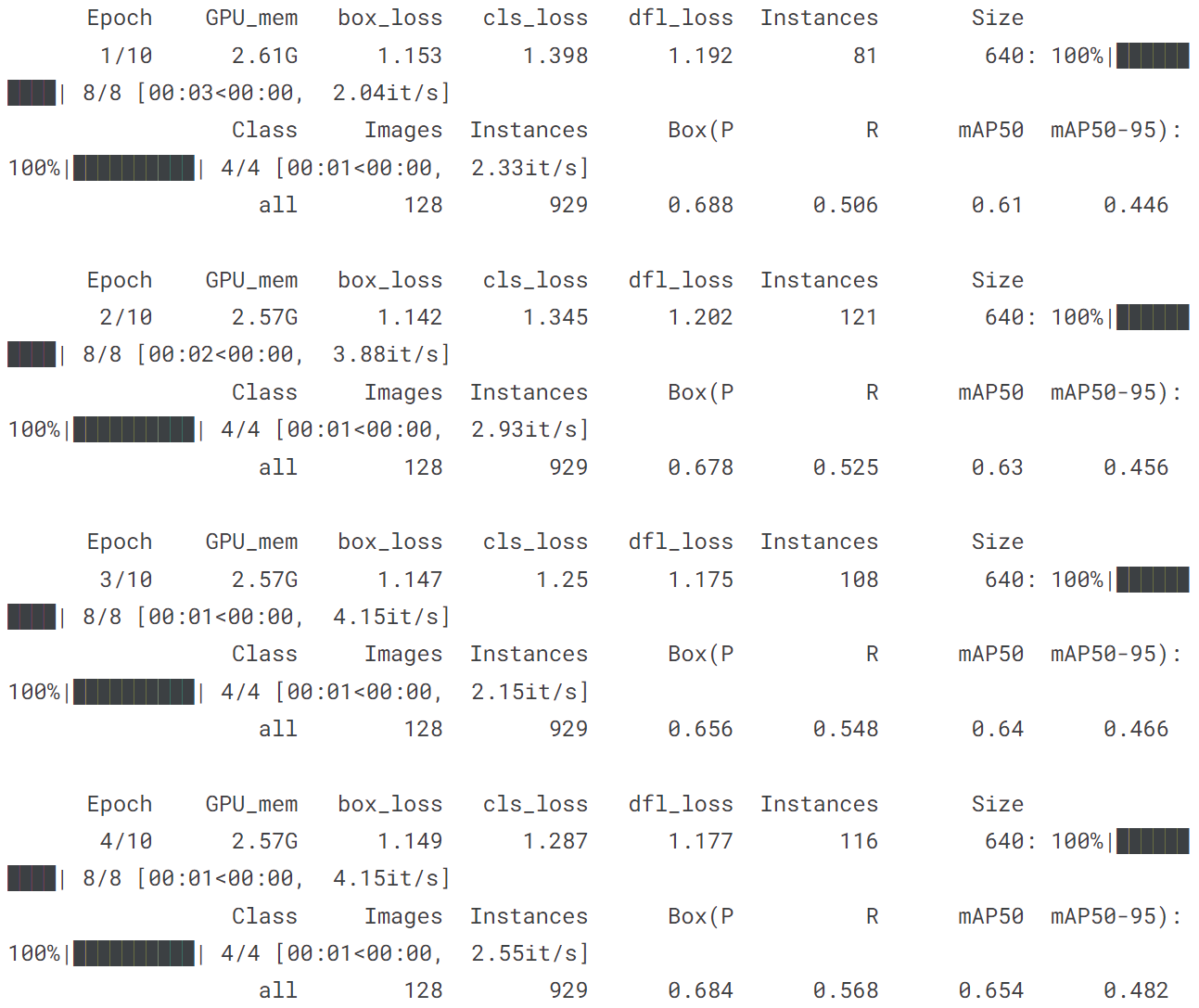

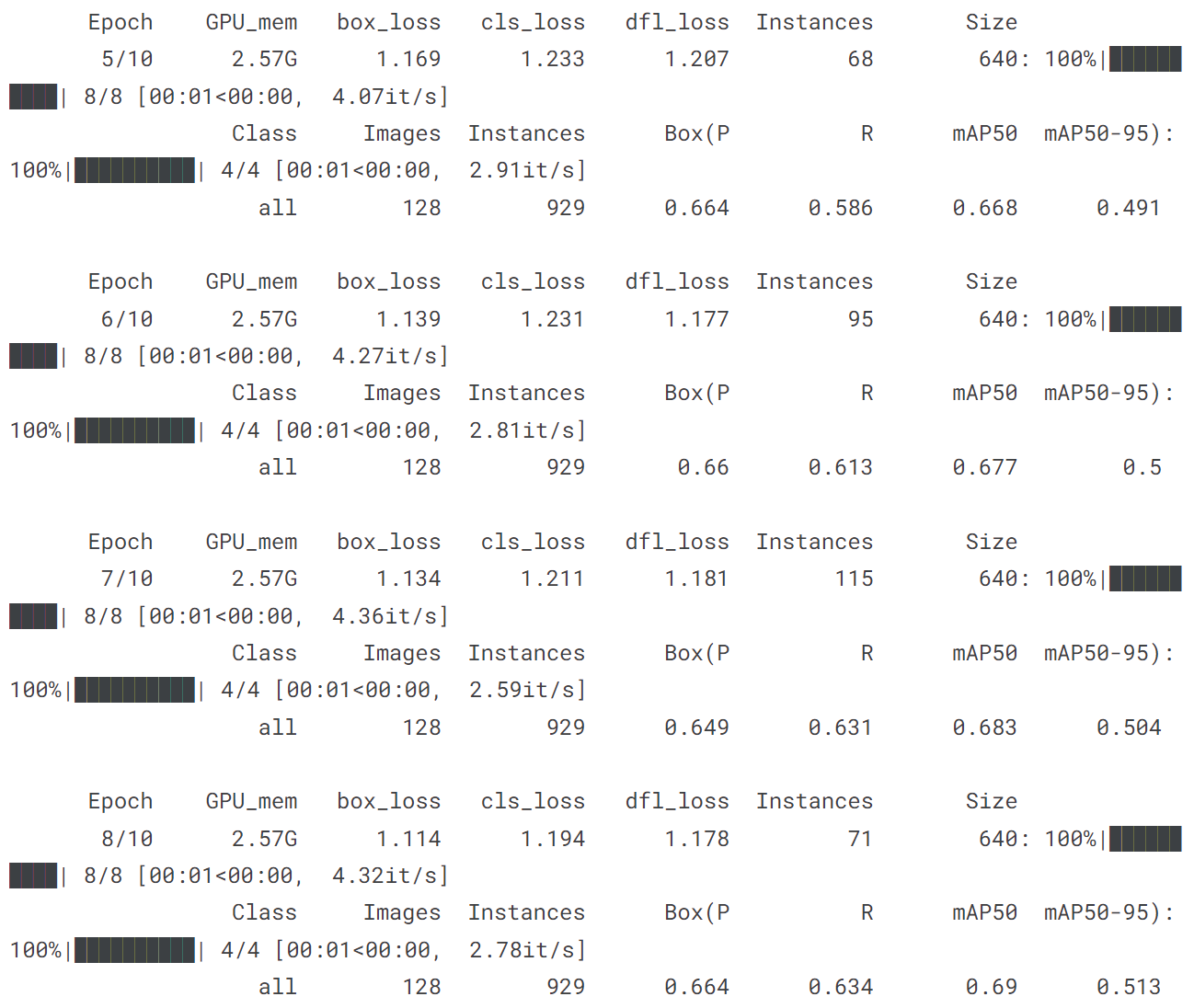

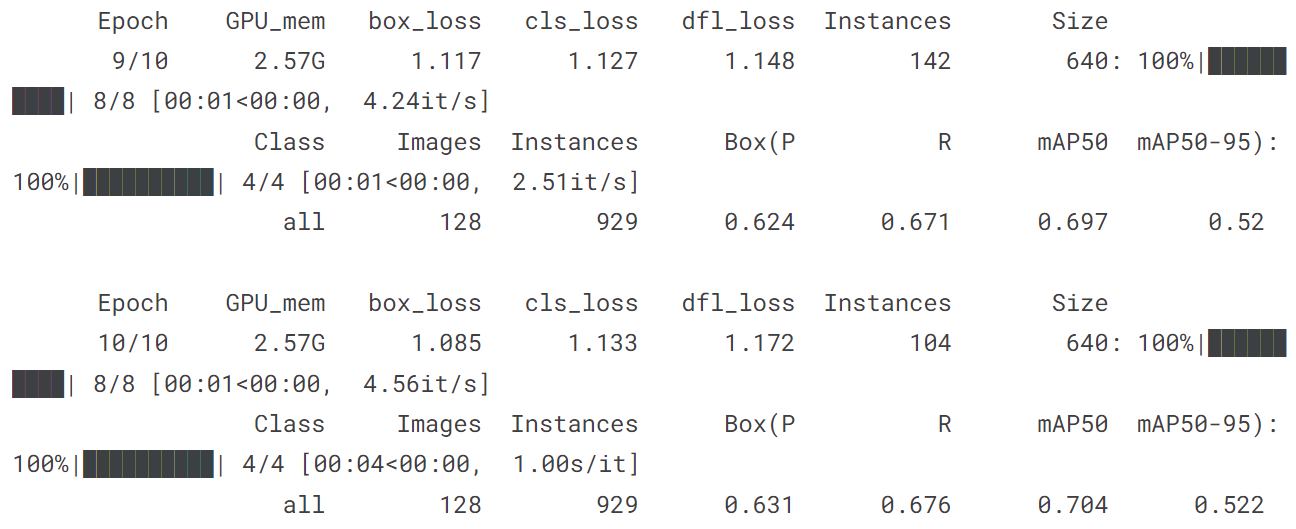

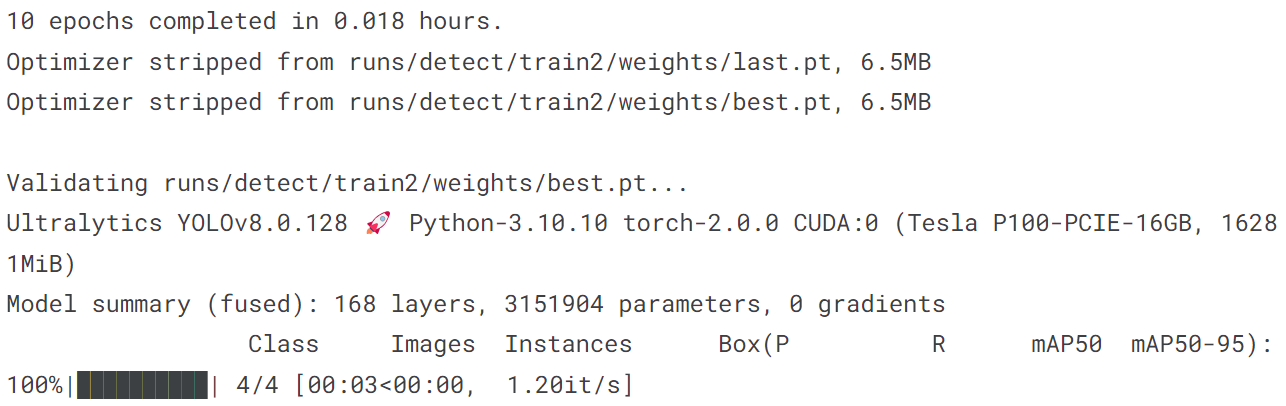

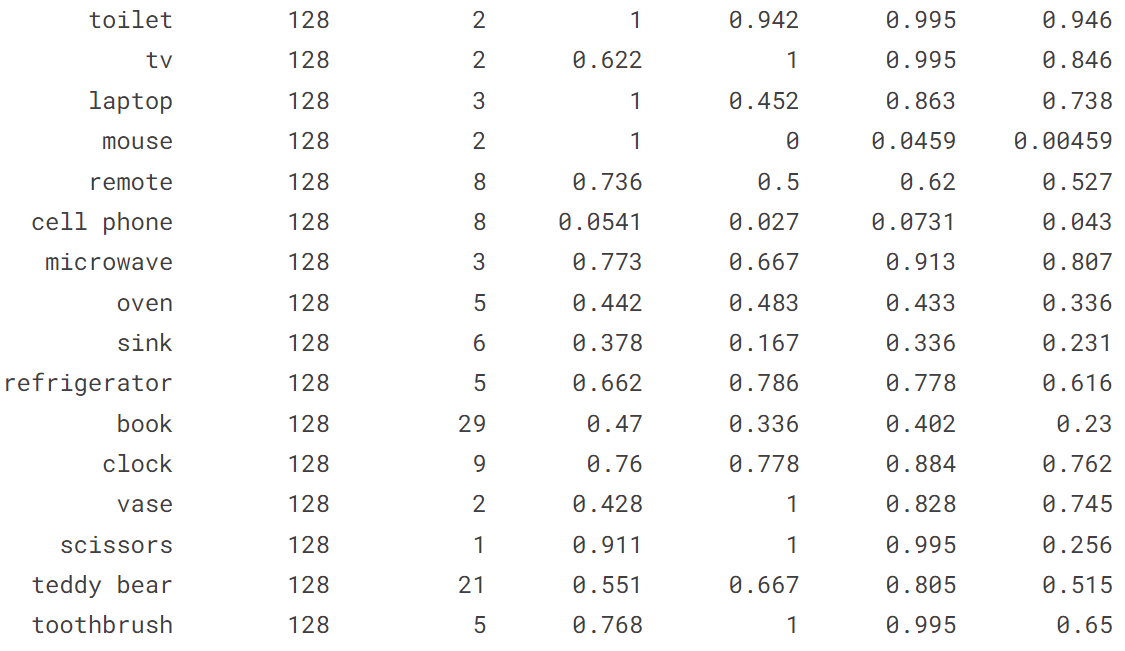

4.2 Training YOLOv8n on COCO128

!yolo train model=yolov8n.pt data=coco128.yaml epochs=10 imgsz=640
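The data=coco128.yaml argument points to a dataset configuration file. Training on a custom dataset typically needs a file with the same layout: a dataset root (path), image folders for train and val relative to that root, and a names mapping from class index to class name. The sketch below only generates such text. Every path and class name in it is a made-up placeholder, and the helper `dataset_yaml` is hypothetical.

```python
def dataset_yaml(root, train, val, class_names):
    """Render a minimal dataset config as YAML text (naive formatting, no quoting)."""
    lines = [f"path: {root}", f"train: {train}", f"val: {val}", "names:"]
    lines += [f"  {index}: {name}" for index, name in enumerate(class_names)]
    return "\n".join(lines) + "\n"


# Placeholder values for illustration only; they do not refer to a real dataset.
text = dataset_yaml("datasets/my_dataset", "images/train", "images/val", ["cat", "dog"])
print(text)
```

Saved to a file, for example my_dataset.yaml, text like this could be passed as data=my_dataset.yaml in place of coco128.yaml. Class names containing YAML special characters would need proper quoting, which this sketch does not handle.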

5. Training Your Own Models

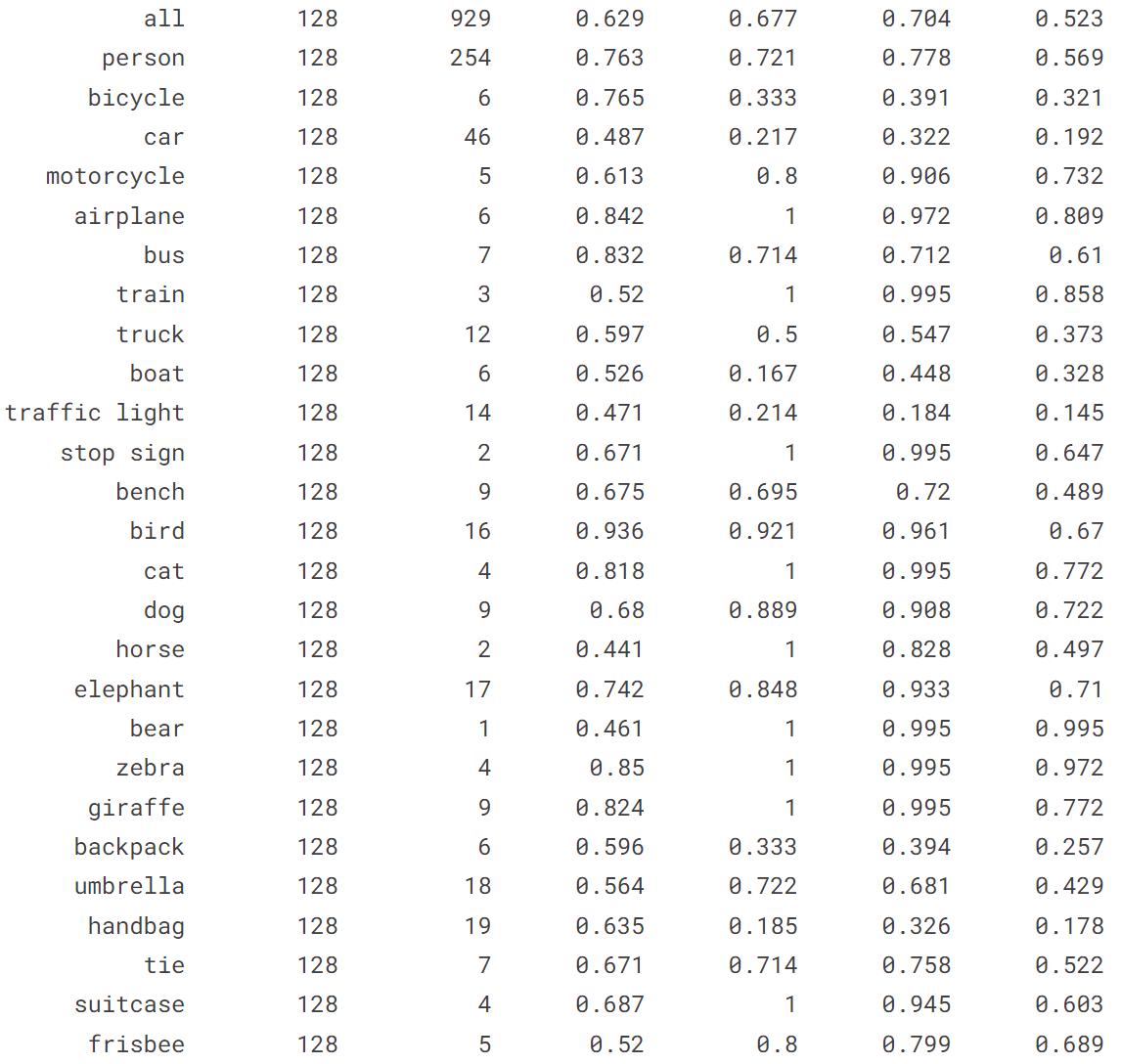

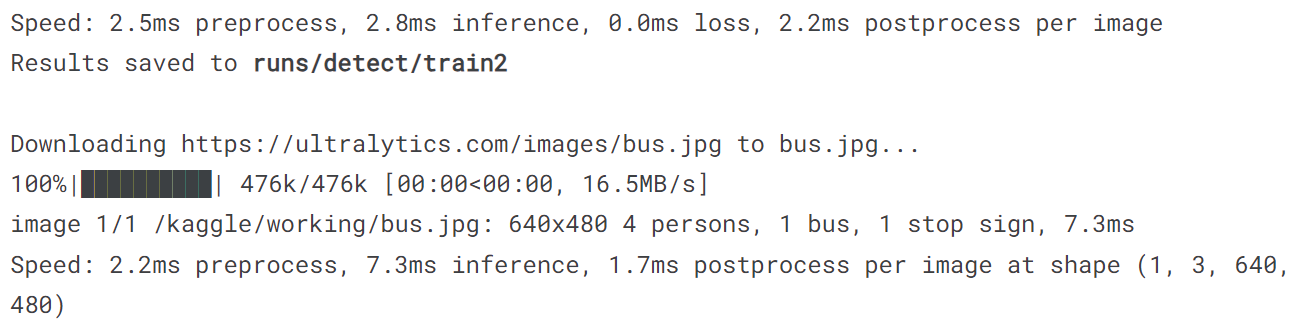

5.1 Training a detection model

# Load YOLOv8n, train it on COCO128 for 10 epochs, then run prediction on one image

from ultralytics import YOLO

model = YOLO('yolov8n.pt')

model.train(data='coco128.yaml', epochs=10)

model('https://ultralytics.com/images/bus.jpg')

Output:

[ultralytics.yolo.engine.results.Results object with attributes:
boxes: ultralytics.yolo.engine.results.Boxes object
keypoints: None
keys: ['boxes']
masks: None
names: {0: 'person', 1: 'bicycle', 2: 'car', 3: 'motorcycle', 4: 'airplane', 5: 'bus', 6: 'train', 7: 'truck', 8: 'boat', 9: 'traffic light', 10: 'fire hydrant', 11: 'stop sign', 12: 'parking meter', 13: 'bench', 14: 'bird', 15: 'cat', 16: 'dog', 17: 'horse', 18: 'sheep', 19: 'cow', 20: 'elephant', 21: 'bear', 22: 'zebra', 23: 'giraffe', 24: 'backpack', 25: 'umbrella', 26: 'handbag', 27: 'tie', 28: 'suitcase', 29: 'frisbee', 30: 'skis', 31: 'snowboard', 32: 'sports ball', 33: 'kite', 34: 'baseball bat', 35: 'baseball glove', 36: 'skateboard', 37: 'surfboard', 38: 'tennis racket', 39: 'bottle', 40: 'wine glass', 41: 'cup', 42: 'fork', 43: 'knife', 44: 'spoon', 45: 'bowl', 46: 'banana', 47: 'apple', 48: 'sandwich', 49: 'orange', 50: 'broccoli', 51: 'carrot', 52: 'hot dog', 53: 'pizza', 54: 'donut', 55: 'cake', 56: 'chair', 57: 'couch', 58: 'potted plant', 59: 'bed', 60: 'dining table', 61: 'toilet', 62: 'tv', 63: 'laptop', 64: 'mouse', 65: 'remote', 66: 'keyboard', 67: 'cell phone', 68: 'microwave', 69: 'oven', 70: 'toaster', 71: 'sink', 72: 'refrigerator', 73: 'book', 74: 'clock', 75: 'vase', 76: 'scissors', 77: 'teddy bear', 78: 'hair drier', 79: 'toothbrush'}
orig_img: array([[[122, 148, 172],[120, 146, 170],[125, 153, 177],...,[157, 170, 184],[158, 171, 185],[158, 171, 185]],[[127, 153, 177],[124, 150, 174],[127, 155, 179],...,[158, 171, 185],[159, 172, 186],[159, 172, 186]],[[128, 154, 178],[126, 152, 176],[126, 154, 178],...,[158, 171, 185],[158, 171, 185],[158, 171, 185]],...,[[185, 185, 191],[182, 182, 188],[179, 179, 185],...,[114, 107, 112],[115, 105, 111],[116, 106, 112]],[[157, 157, 163],[180, 180, 186],[185, 186, 190],...,[107, 97, 103],[102, 92, 98],[108, 98, 104]],[[112, 112, 118],[160, 160, 166],[169, 170, 174],...,[ 99, 89, 95],[ 96, 86, 92],[102, 92, 98]]], dtype=uint8)
orig_shape: (1080, 810)
path: '/kaggle/working/bus.jpg'
probs: None
save_dir: None
speed: {'preprocess': 2.184629440307617, 'inference': 7.320880889892578, 'postprocess': 1.7354488372802734}]
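The names dictionary printed above maps numeric class ids to labels. A common next step is to turn the predicted class ids into per-class counts. The sketch below works on a plain list of ids so it runs without a model. It assumes that, in practice, the ids would be read from the result's boxes (for example via results[0].boxes.cls.tolist()); the helper name `count_by_class` is hypothetical, and the ids in the example are made up rather than taken from the run above.

```python
from collections import Counter


def count_by_class(class_ids, names):
    """Map numeric class ids to their names and count detections per class."""
    counts = Counter(names[int(class_id)] for class_id in class_ids)
    return dict(counts)


# A subset of the names dictionary shown in the output above.
names = {0: "person", 5: "bus", 11: "stop sign"}

# Made-up class ids for illustration only; these are NOT real model output.
example_ids = [0.0, 0.0, 5.0, 0.0]
print(count_by_class(example_ids, names))
```

Ids are converted with int() because detection frameworks often return class ids as floats.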

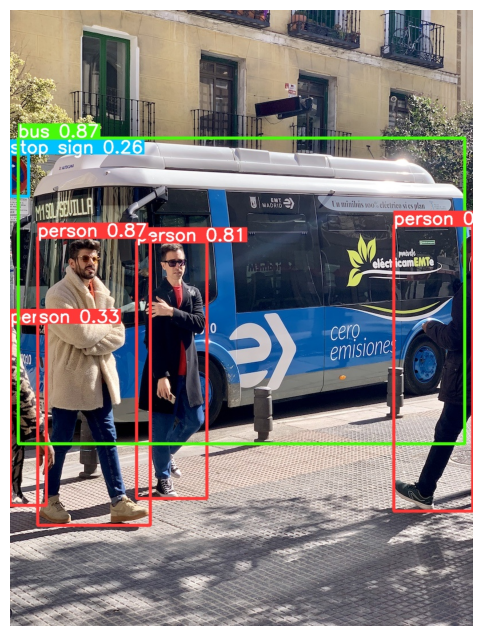

The original test image is:

image = Image.open('/kaggle/working/bus.jpg')

plt.figure(figsize=(12, 8))

plt.imshow(image)

plt.axis('off')

plt.show()

!yolo predict model='/kaggle/working/runs/detect/train2/weights/best.pt' source='/kaggle/working/bus.jpg'

image = Image.open('/kaggle/working/runs/detect/predict2/bus.jpg')

plt.figure(figsize=(12, 8))

plt.imshow(image)

plt.axis('off')

plt.show()

!yolo predict model='/kaggle/working/runs/detect/train2/weights/best.pt' source='/kaggle/input/personpng/1.jpg'

image = Image.open('/kaggle/working/runs/detect/predict3/1.jpg')

plt.figure(figsize=(12, 8))

plt.imshow(image)

plt.axis('off')

plt.show()
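The paths above (predict, predict2, predict3, train2) show that each new run gets the next numbered folder, so hard-coded paths break as soon as a cell is re-run. One way around this is to look up the highest-numbered folder at display time. The following sketch assumes exactly that naming scheme (a bare prefix for the first run, then prefix2, prefix3, and so on); the function name `latest_run_dir` is hypothetical.

```python
import re
from pathlib import Path


def latest_run_dir(root, prefix):
    """Return the sub-directory of `root` named `prefix` or `prefix<N>` with the largest N.

    Returns None when `root` does not exist or contains no matching directory.
    """
    root = Path(root)
    if not root.is_dir():
        return None
    pattern = re.compile(rf"^{re.escape(prefix)}(\d*)$")
    best, best_index = None, -1
    for child in root.iterdir():
        match = pattern.match(child.name)
        if child.is_dir() and match:
            index = int(match.group(1) or 1)  # the bare prefix counts as run 1
            if index > best_index:
                best, best_index = child, index
    return best


# Example (assumes prediction runs exist under this folder):
# print(latest_run_dir('/kaggle/working/runs/detect', 'predict'))
```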

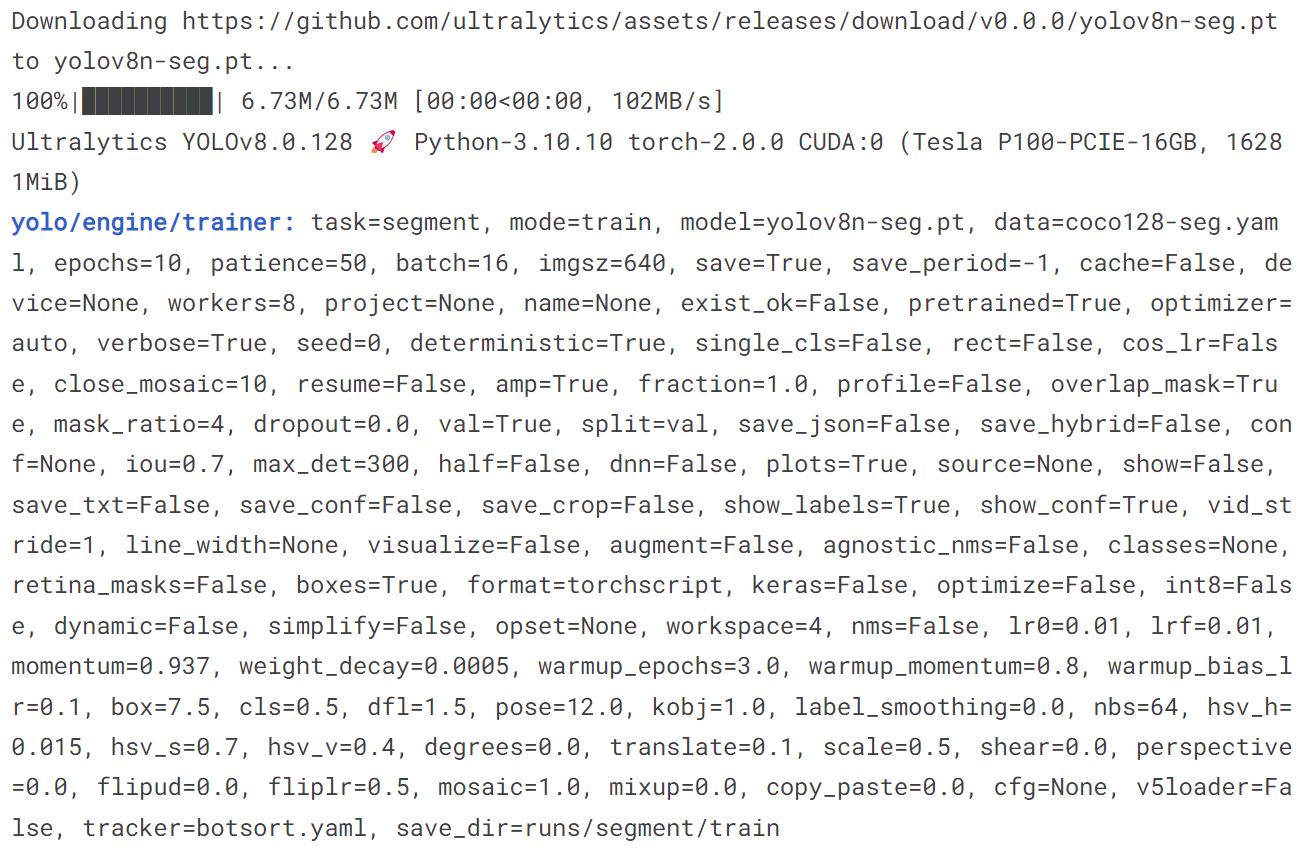

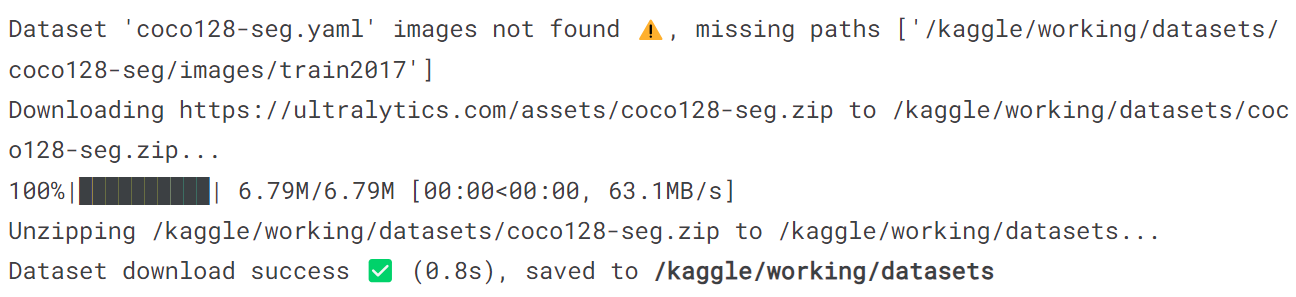

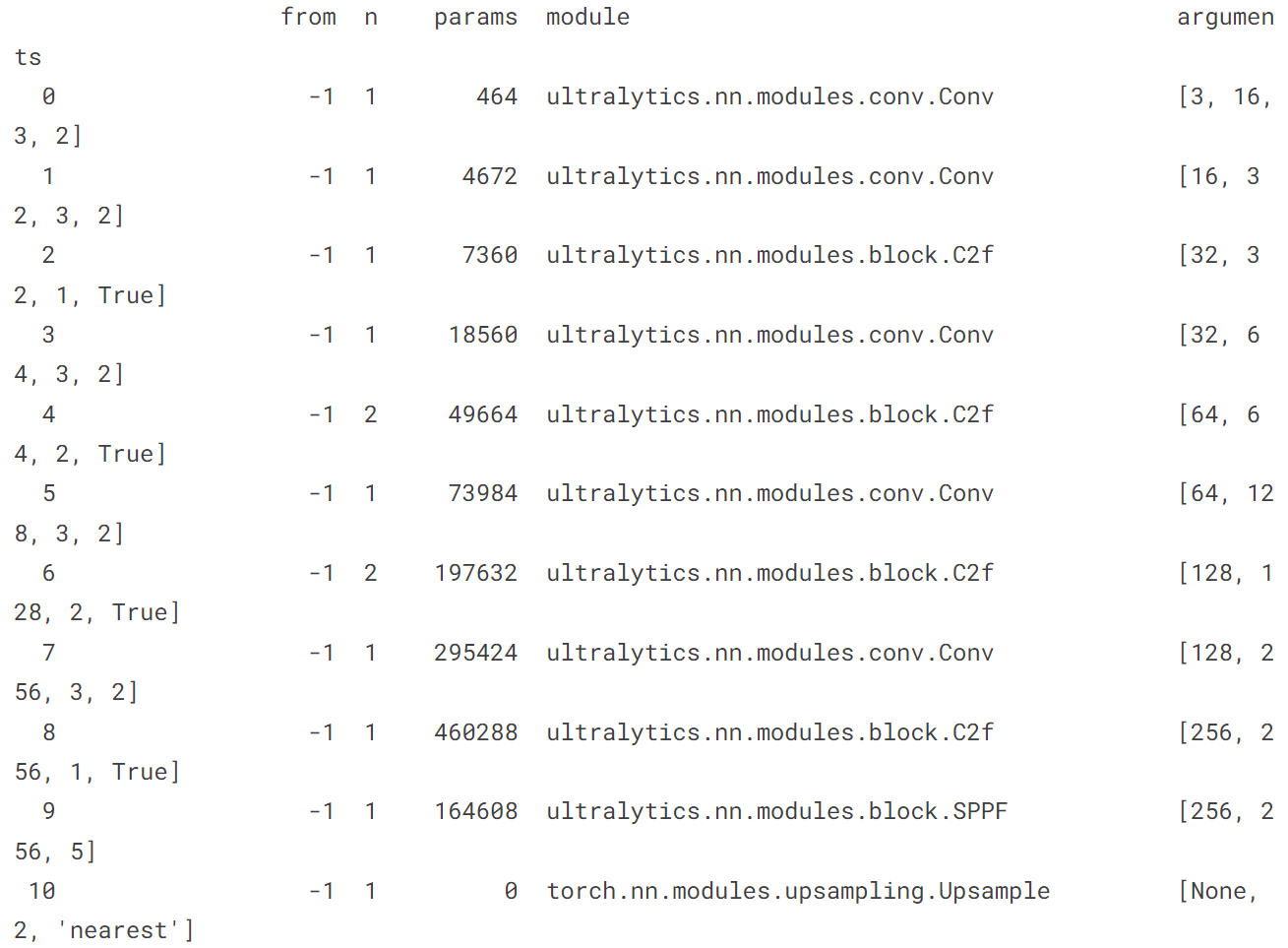

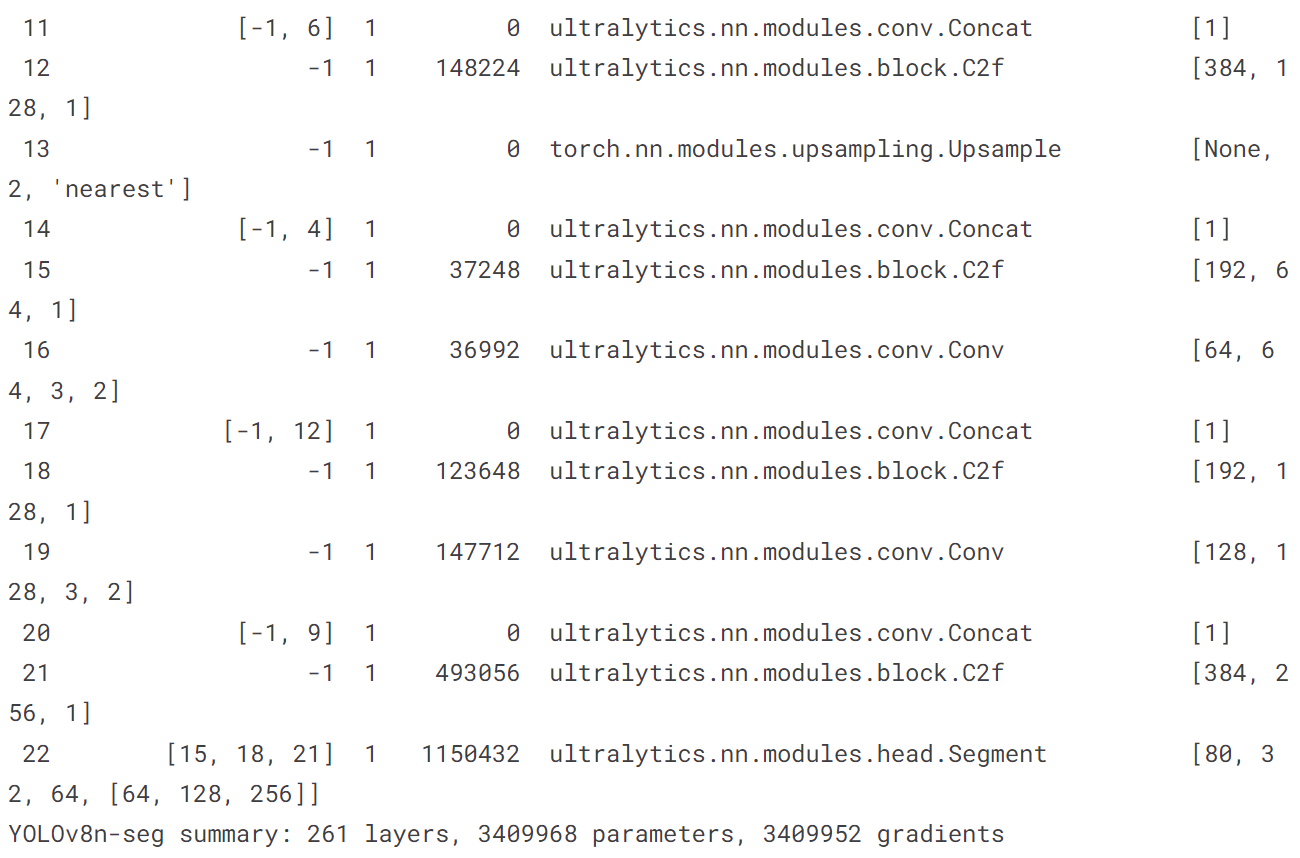

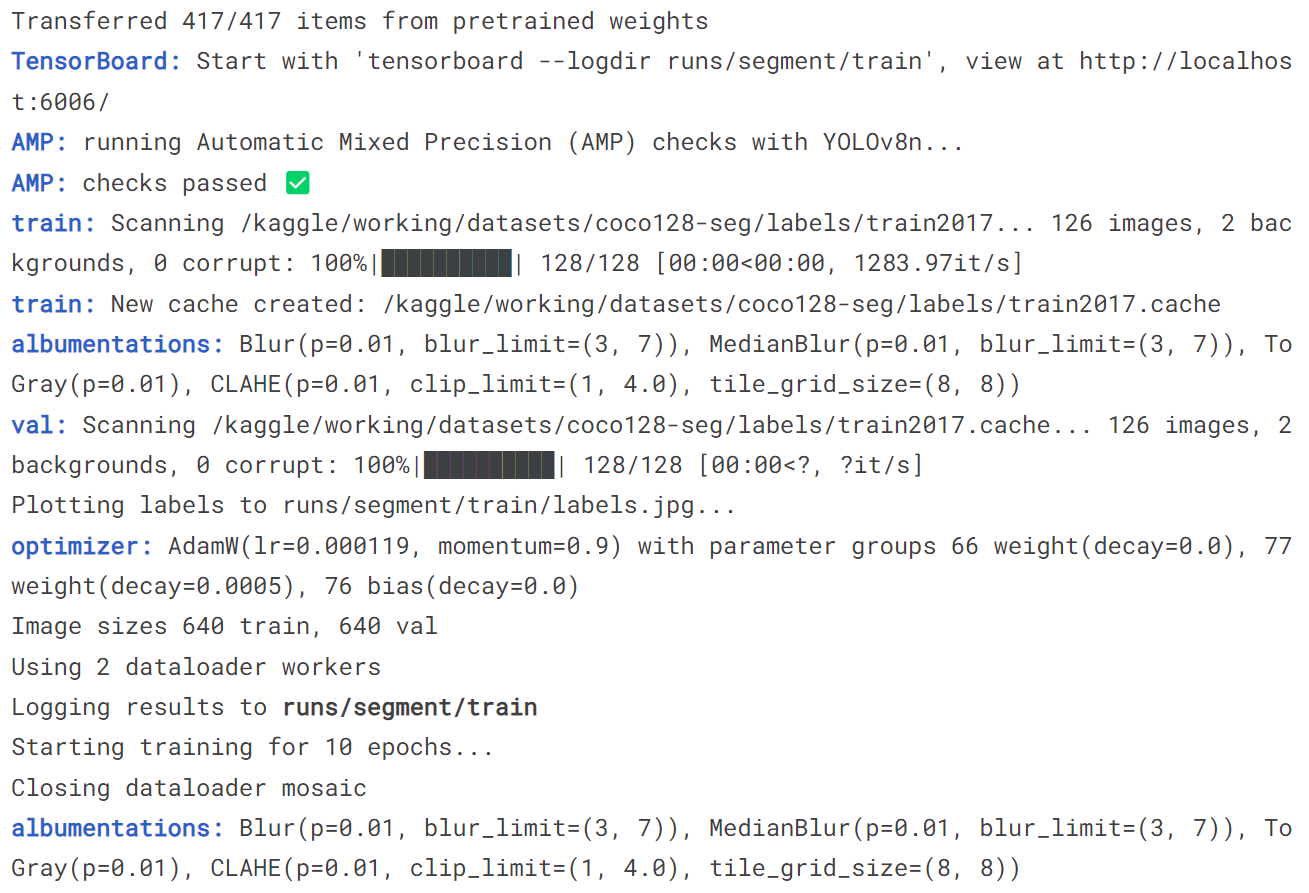

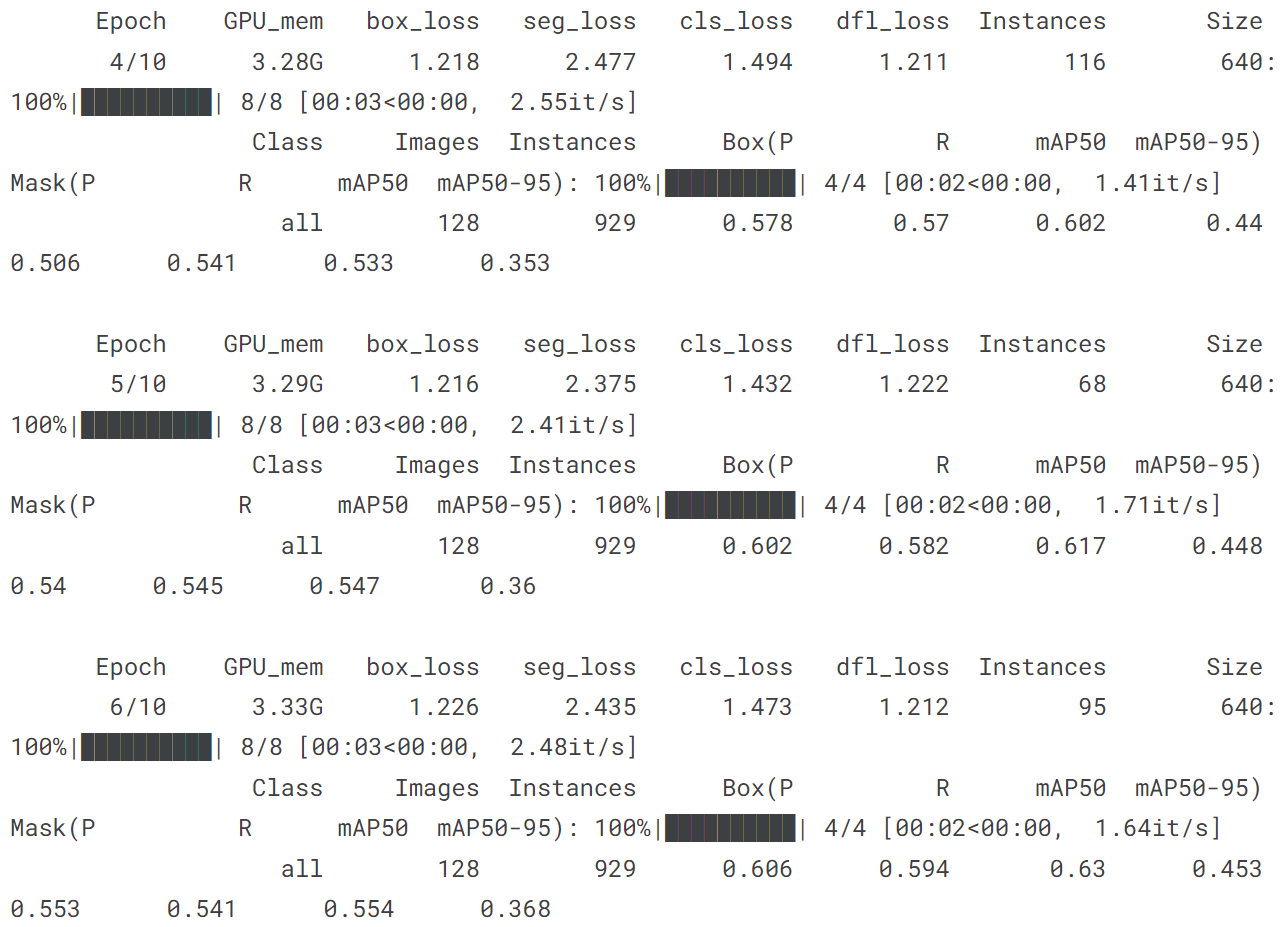

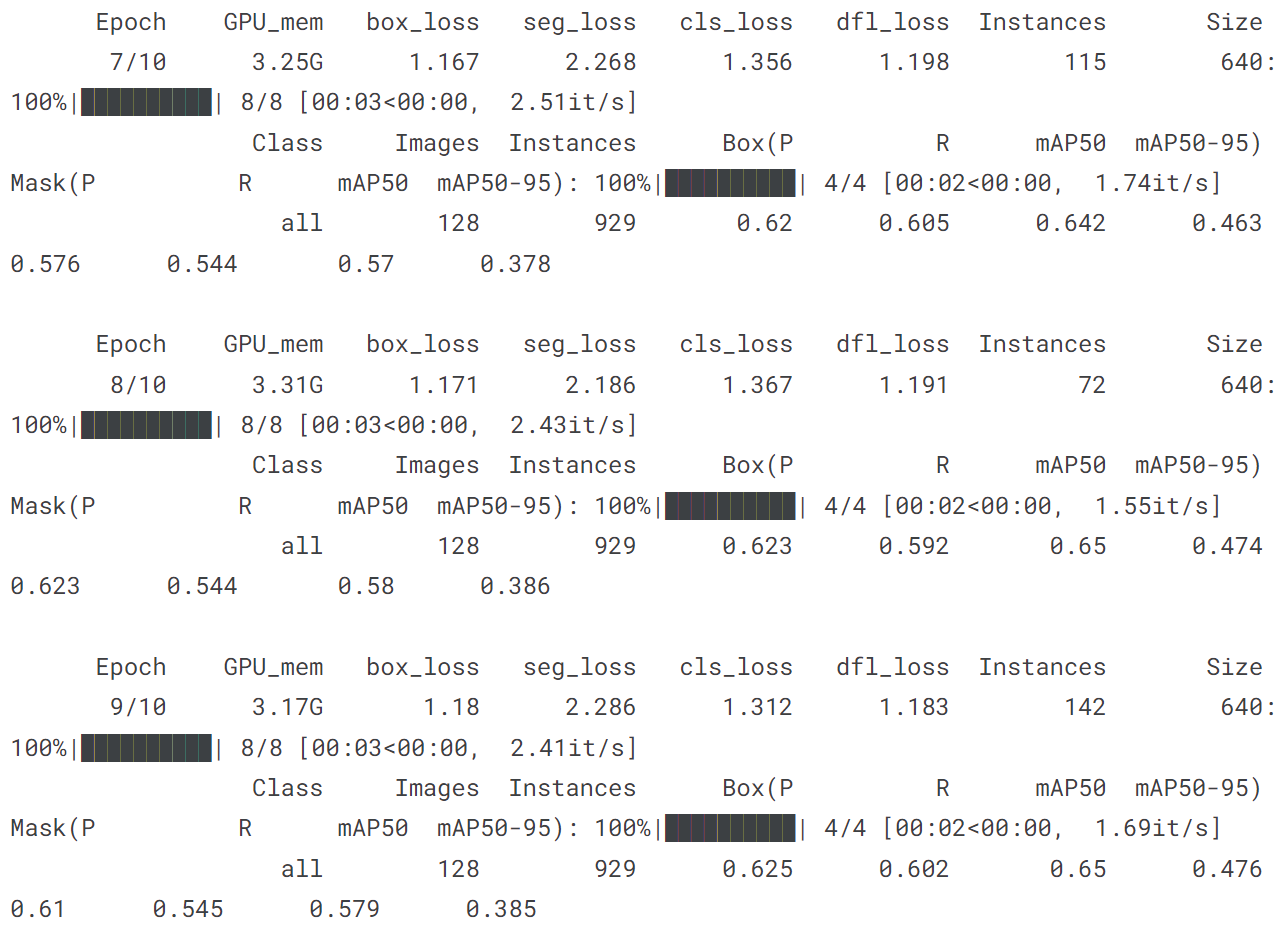

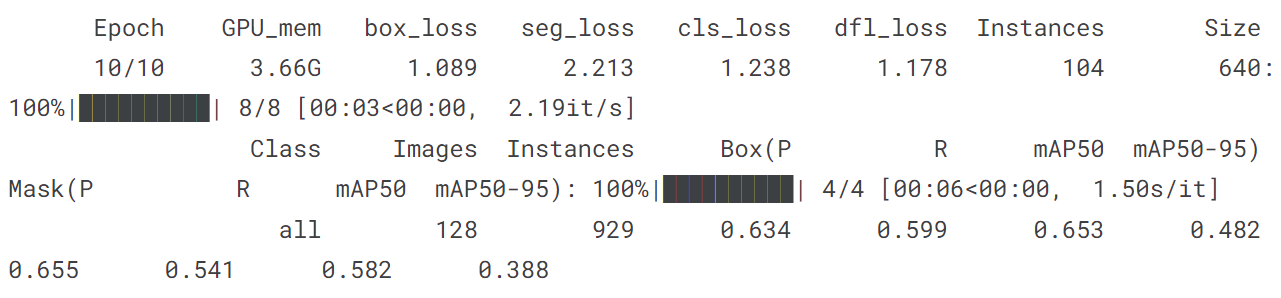

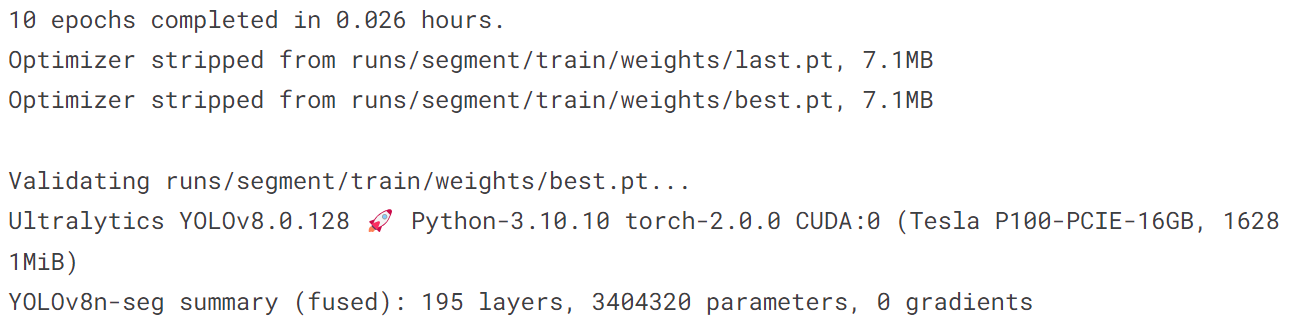

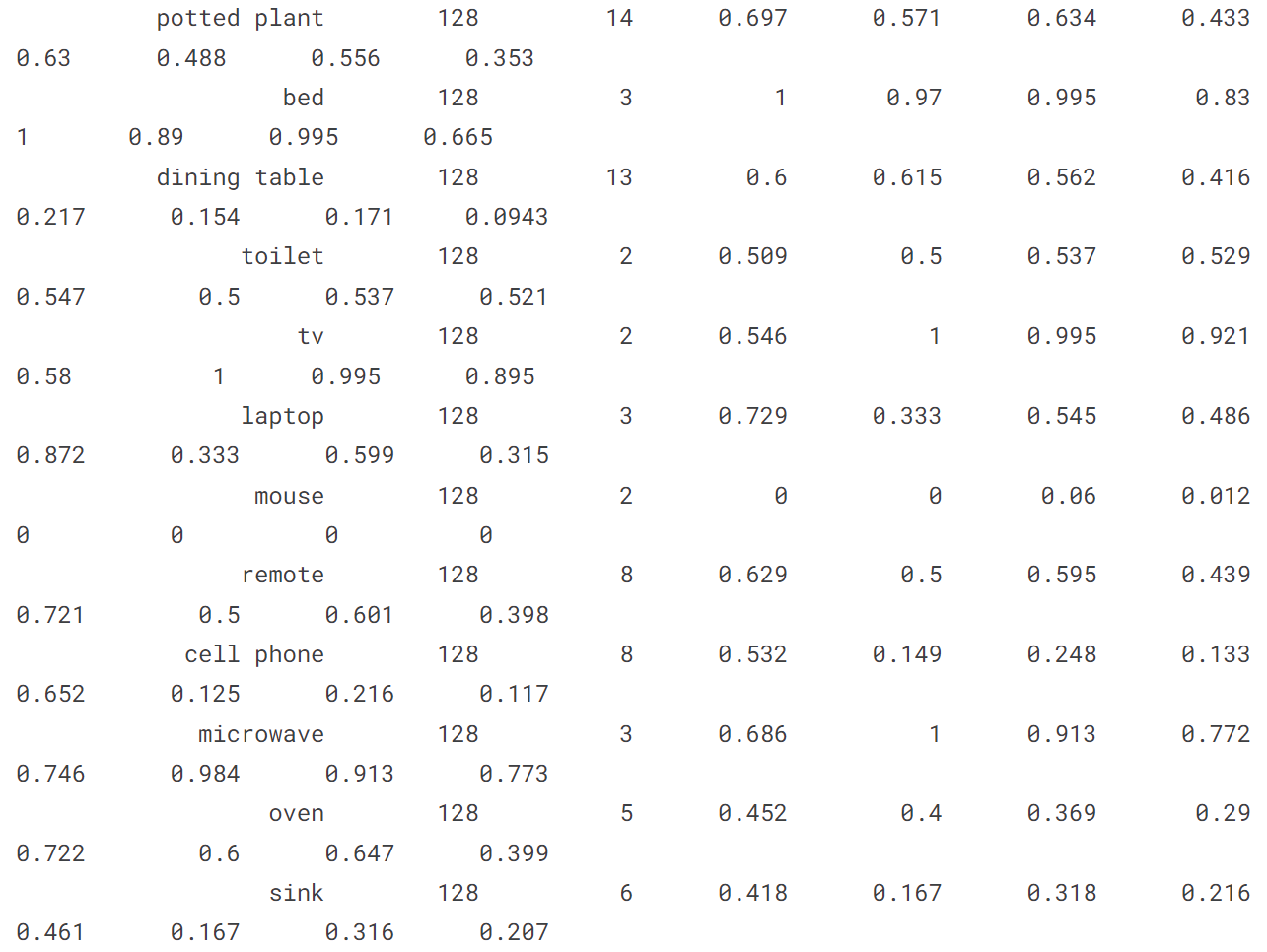

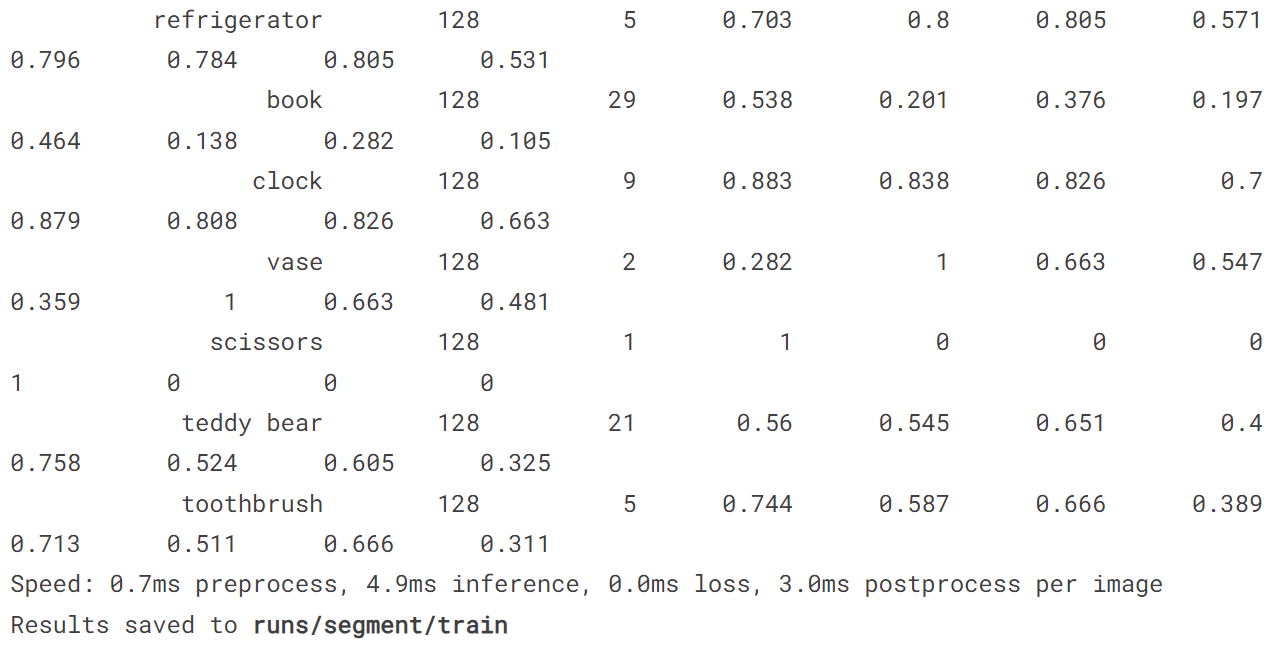

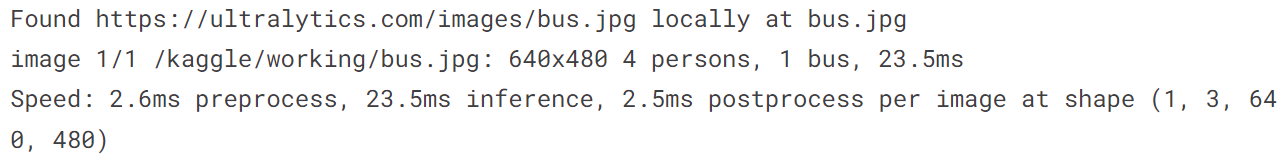

5.2 Training a segmentation model

model = YOLO('yolov8n-seg.pt')

model.train(data='coco128-seg.yaml', epochs=10)

model('https://ultralytics.com/images/bus.jpg')

[ultralytics.yolo.engine.results.Results object with attributes:
boxes: ultralytics.yolo.engine.results.Boxes object
keypoints: None
keys: ['boxes', 'masks']
masks: ultralytics.yolo.engine.results.Masks object
names: {0: 'person', 1: 'bicycle', 2: 'car', 3: 'motorcycle', 4: 'airplane', 5: 'bus', 6: 'train', 7: 'truck', 8: 'boat', 9: 'traffic light', 10: 'fire hydrant', 11: 'stop sign', 12: 'parking meter', 13: 'bench', 14: 'bird', 15: 'cat', 16: 'dog', 17: 'horse', 18: 'sheep', 19: 'cow', 20: 'elephant', 21: 'bear', 22: 'zebra', 23: 'giraffe', 24: 'backpack', 25: 'umbrella', 26: 'handbag', 27: 'tie', 28: 'suitcase', 29: 'frisbee', 30: 'skis', 31: 'snowboard', 32: 'sports ball', 33: 'kite', 34: 'baseball bat', 35: 'baseball glove', 36: 'skateboard', 37: 'surfboard', 38: 'tennis racket', 39: 'bottle', 40: 'wine glass', 41: 'cup', 42: 'fork', 43: 'knife', 44: 'spoon', 45: 'bowl', 46: 'banana', 47: 'apple', 48: 'sandwich', 49: 'orange', 50: 'broccoli', 51: 'carrot', 52: 'hot dog', 53: 'pizza', 54: 'donut', 55: 'cake', 56: 'chair', 57: 'couch', 58: 'potted plant', 59: 'bed', 60: 'dining table', 61: 'toilet', 62: 'tv', 63: 'laptop', 64: 'mouse', 65: 'remote', 66: 'keyboard', 67: 'cell phone', 68: 'microwave', 69: 'oven', 70: 'toaster', 71: 'sink', 72: 'refrigerator', 73: 'book', 74: 'clock', 75: 'vase', 76: 'scissors', 77: 'teddy bear', 78: 'hair drier', 79: 'toothbrush'}
orig_img: array([[[122, 148, 172],[120, 146, 170],[125, 153, 177],...,[157, 170, 184],[158, 171, 185],[158, 171, 185]],[[127, 153, 177],[124, 150, 174],[127, 155, 179],...,[158, 171, 185],[159, 172, 186],[159, 172, 186]],[[128, 154, 178],[126, 152, 176],[126, 154, 178],...,[158, 171, 185],[158, 171, 185],[158, 171, 185]],...,[[185, 185, 191],[182, 182, 188],[179, 179, 185],...,[114, 107, 112],[115, 105, 111],[116, 106, 112]],[[157, 157, 163],[180, 180, 186],[185, 186, 190],...,[107, 97, 103],[102, 92, 98],[108, 98, 104]],[[112, 112, 118],[160, 160, 166],[169, 170, 174],...,[ 99, 89, 95],[ 96, 86, 92],[102, 92, 98]]], dtype=uint8)
orig_shape: (1080, 810)
path: '/kaggle/working/bus.jpg'
probs: None
save_dir: None
speed: {'preprocess': 2.610445022583008, 'inference': 23.540735244750977, 'postprocess': 2.538442611694336}]
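The speed entry in each result reports per-stage times, which Ultralytics documents as milliseconds per image. Summing the stages gives an end-to-end latency, and its reciprocal gives a rough throughput. The sketch below applies this to the two speed dictionaries printed in sections 5.1 and 5.2. The helper name `latency_summary` is hypothetical, and a single timed image is only an indication, not a benchmark.

```python
def latency_summary(speed):
    """Sum the per-stage times (ms per image) and convert to images per second."""
    total_ms = sum(speed.values())
    return {"total_ms": total_ms, "fps": 1000.0 / total_ms}


# Values copied from the outputs printed in sections 5.1 and 5.2.
detect_speed = {'preprocess': 2.184629440307617, 'inference': 7.320880889892578,
                'postprocess': 1.7354488372802734}
segment_speed = {'preprocess': 2.610445022583008, 'inference': 23.540735244750977,
                 'postprocess': 2.538442611694336}

for label, speed in (("detect", detect_speed), ("segment", segment_speed)):
    summary = latency_summary(speed)
    print(f"{label}: {summary['total_ms']:.2f} ms per image, "
          f"about {summary['fps']:.1f} images/s")
```

On these numbers the segmentation run spends roughly three times as long in inference as the detection run, which fits the extra mask output.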

!yolo predict model='/kaggle/working/runs/segment/train/weights/best.pt' source='/kaggle/working/bus.jpg'

image = Image.open('/kaggle/working/runs/segment/predict2/bus.jpg')

plt.figure(figsize=(12, 8))

plt.imshow(image)

plt.axis('off')

plt.show()

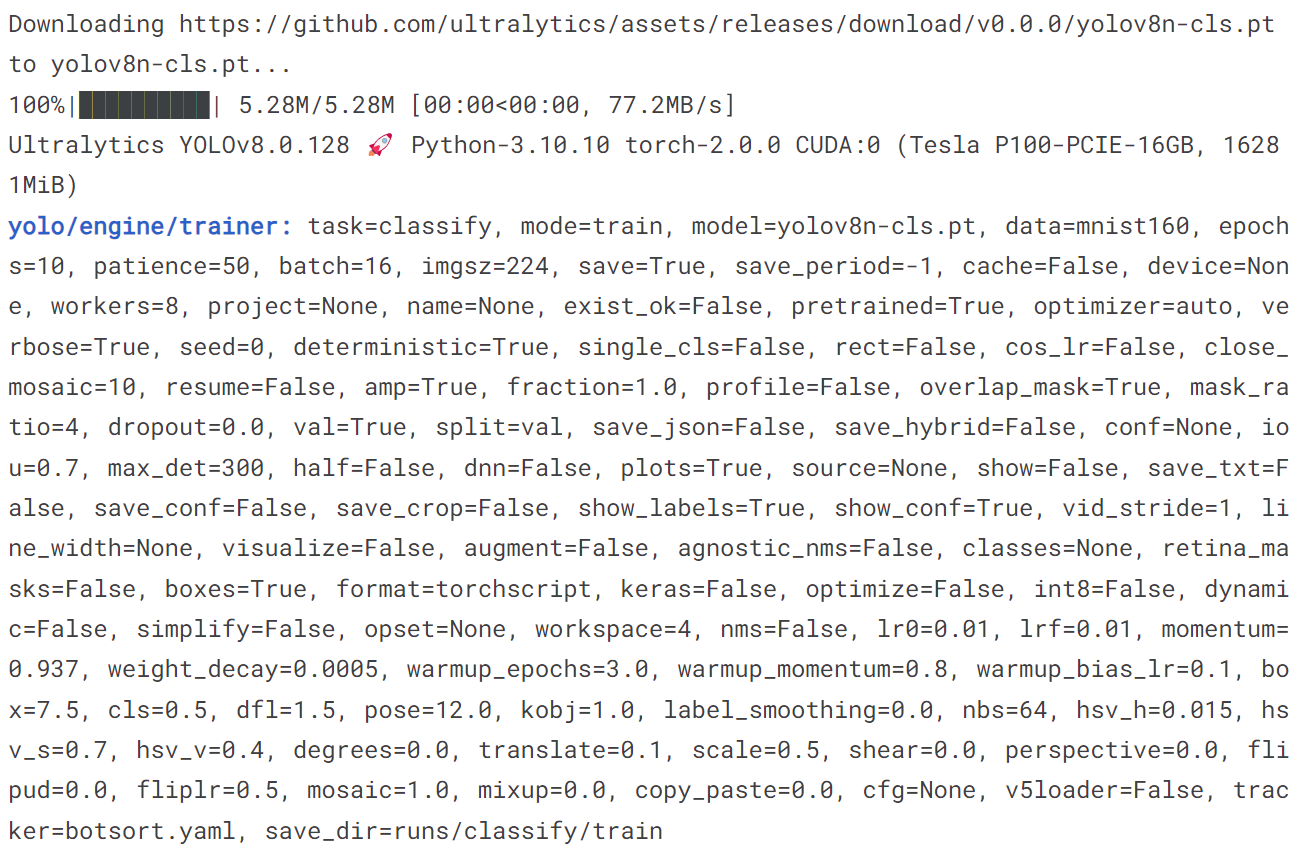

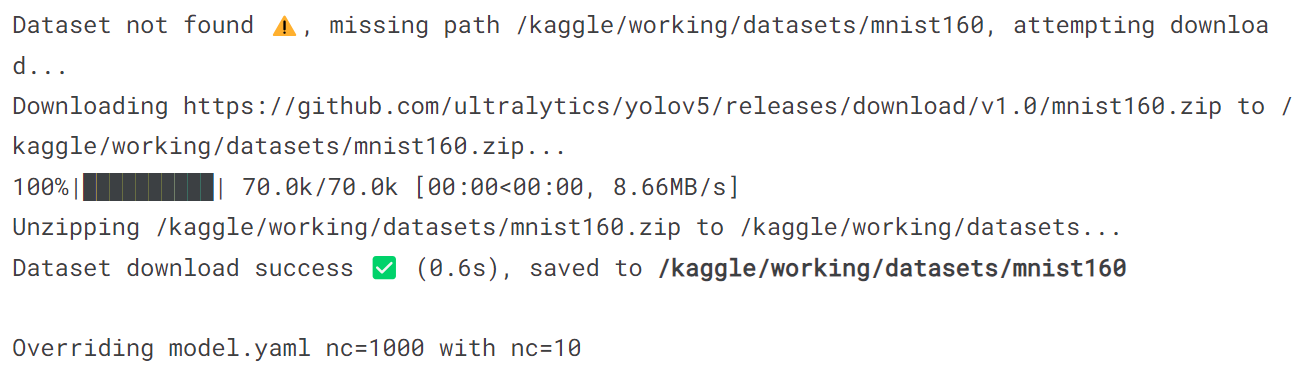

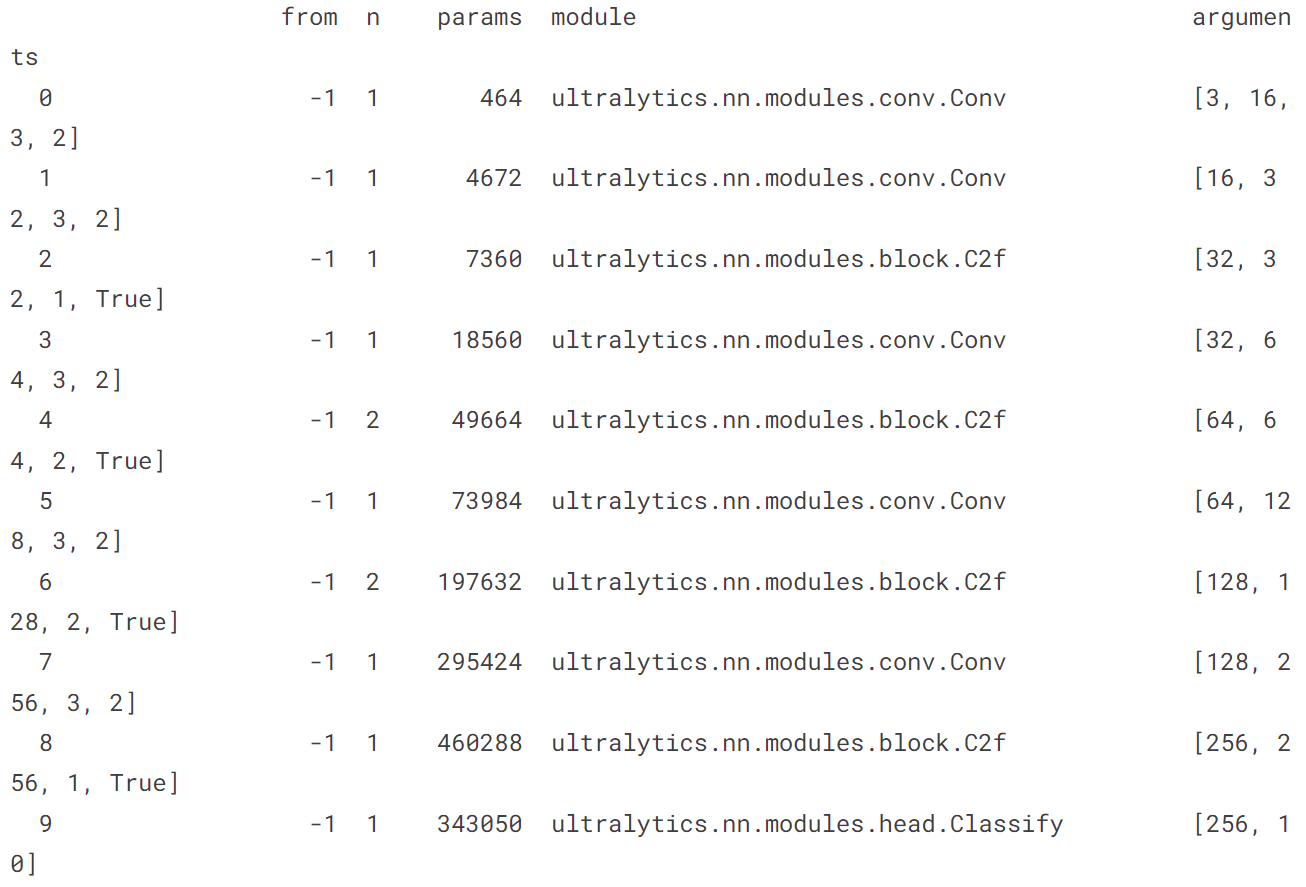

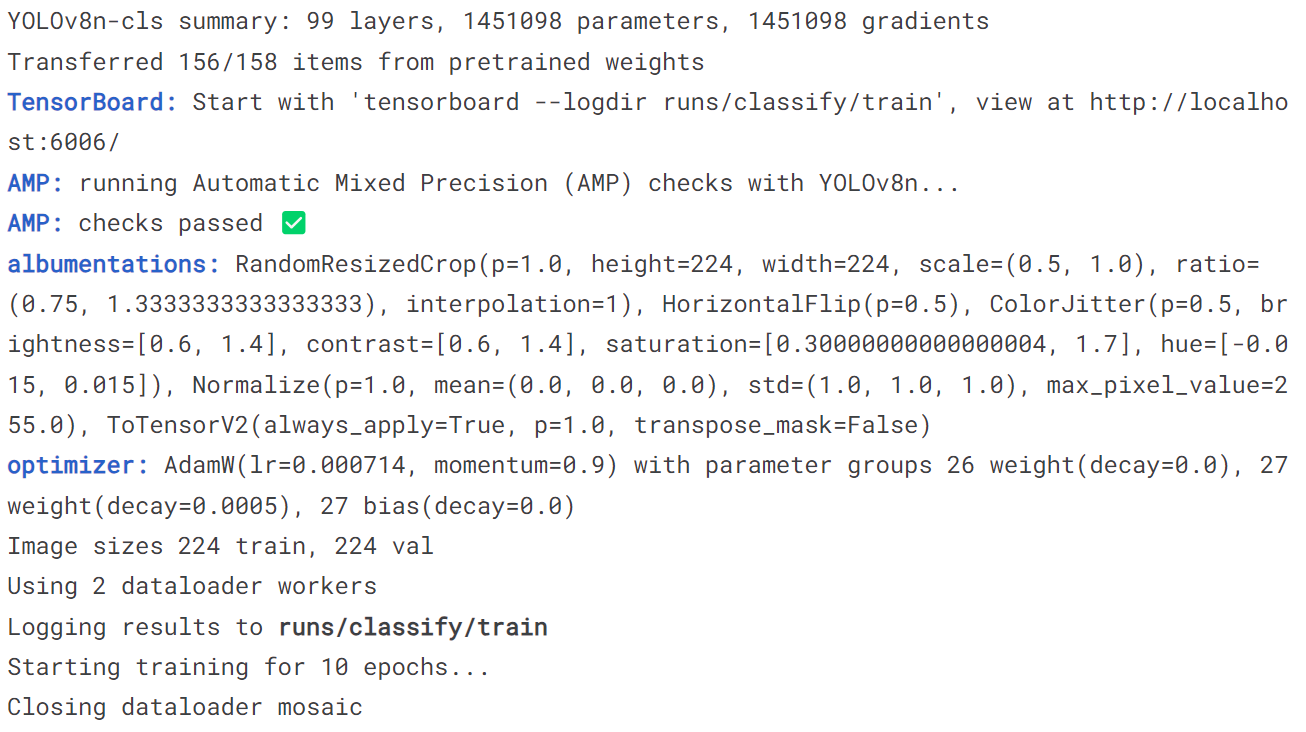

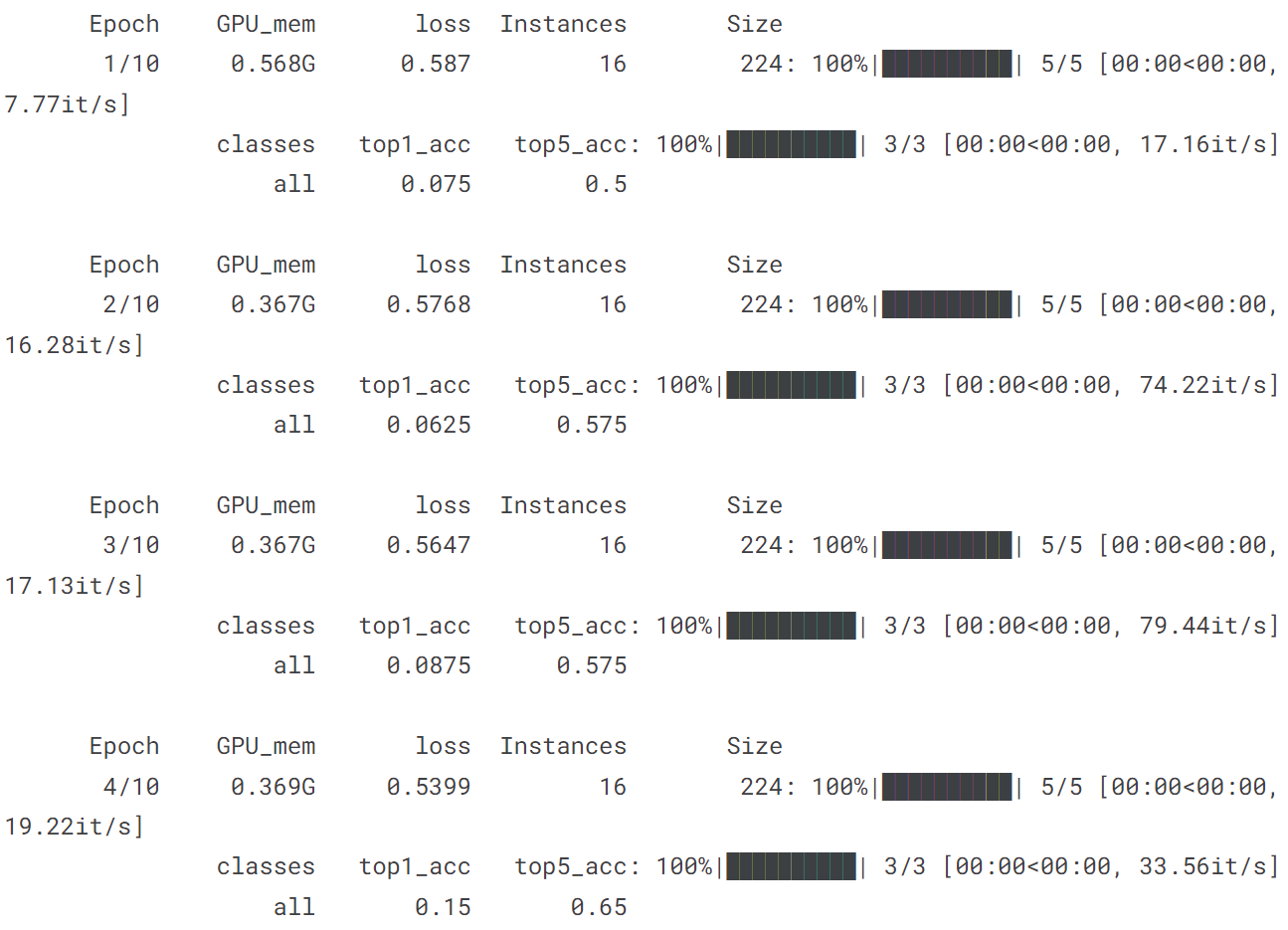

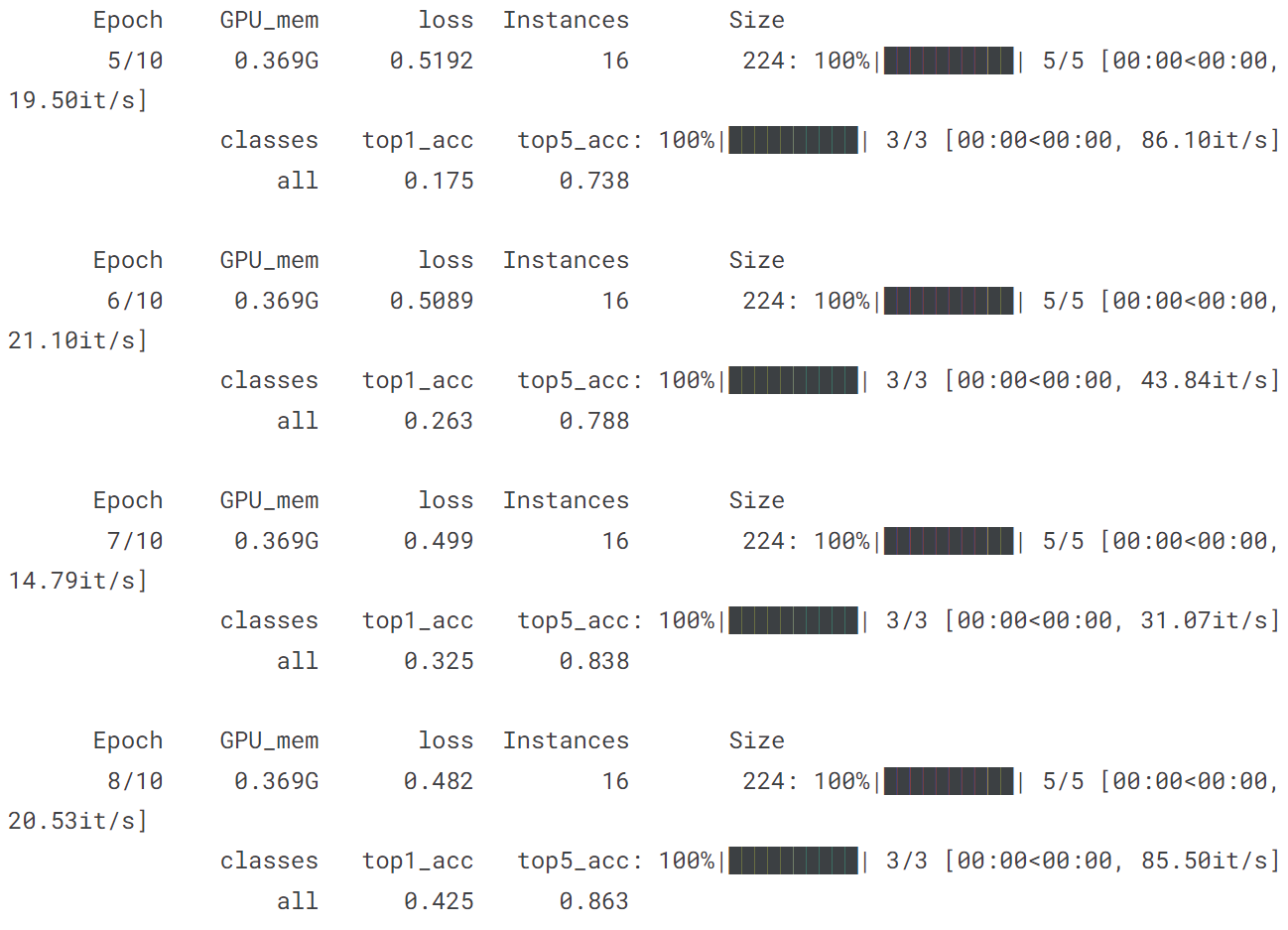

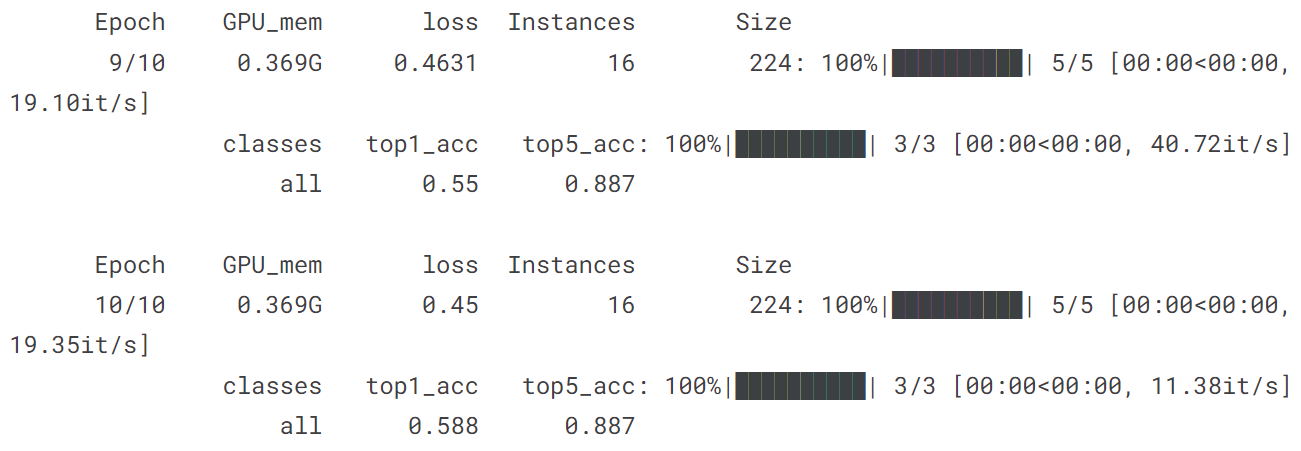

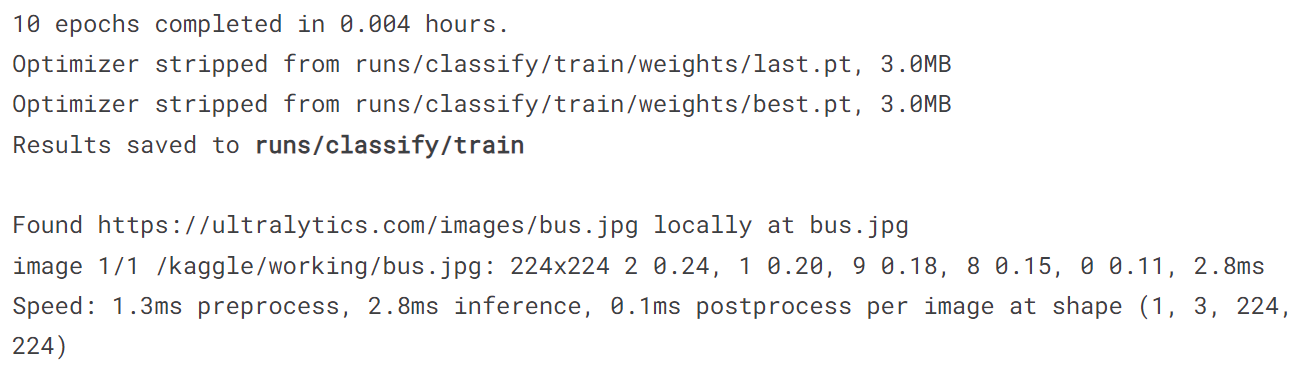

5.3 Training a classification model

model = YOLO('yolov8n-cls.pt')

model.train(data='mnist160', epochs=10)

model('https://ultralytics.com/images/bus.jpg')

[ultralytics.yolo.engine.results.Results object with attributes:
boxes: None
keypoints: None
keys: ['probs']
masks: None
names: {0: '0', 1: '1', 2: '2', 3: '3', 4: '4', 5: '5', 6: '6', 7: '7', 8: '8', 9: '9'}
orig_img: array([[[122, 148, 172],[120, 146, 170],[125, 153, 177],...,[157, 170, 184],[158, 171, 185],[158, 171, 185]],[[127, 153, 177],[124, 150, 174],[127, 155, 179],...,[158, 171, 185],[159, 172, 186],[159, 172, 186]],[[128, 154, 178],[126, 152, 176],[126, 154, 178],...,[158, 171, 185],[158, 171, 185],[158, 171, 185]],...,[[185, 185, 191],[182, 182, 188],[179, 179, 185],...,[114, 107, 112],[115, 105, 111],[116, 106, 112]],[[157, 157, 163],[180, 180, 186],[185, 186, 190],...,[107, 97, 103],[102, 92, 98],[108, 98, 104]],[[112, 112, 118],[160, 160, 166],[169, 170, 174],...,[ 99, 89, 95],[ 96, 86, 92],[102, 92, 98]]], dtype=uint8)
orig_shape: (1080, 810)
path: '/kaggle/working/bus.jpg'
probs: ultralytics.yolo.engine.results.Probs object
save_dir: None
speed: {'preprocess': 1.3382434844970703, 'inference': 2.797365188598633, 'postprocess': 0.07772445678710938}]
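For a classification result, the interesting part is the probs entry: one probability per class in names. Because the model was trained on mnist160, the classes here are the digits 0 to 9, so the bus image can only be mapped to a digit. The sketch below ranks classes by probability using a plain Python list. How the probabilities are extracted from the Probs object depends on the installed ultralytics version, so that step is left out; the helper name `top_k` is hypothetical, and the probabilities are made up for illustration.

```python
def top_k(probs, names, k=3):
    """Return the k (class name, probability) pairs with the highest probability."""
    ranked = sorted(enumerate(probs), key=lambda pair: pair[1], reverse=True)
    return [(names[index], prob) for index, prob in ranked[:k]]


# Same mapping as the names dictionary printed above.
names = {i: str(i) for i in range(10)}

# Made-up probabilities for illustration only; these are NOT real model output.
example_probs = [0.02, 0.05, 0.40, 0.03, 0.10, 0.05, 0.05, 0.20, 0.05, 0.05]
print(top_k(example_probs, names, k=3))
```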

!yolo predict model='/kaggle/working/runs/classify/train/weights/best.pt' source='/kaggle/working/bus.jpg'

image = Image.open('/kaggle/working/runs/classify/predict2/bus.jpg')

plt.figure(figsize=(12, 8))

plt.imshow(image)

plt.axis('off')

plt.show()

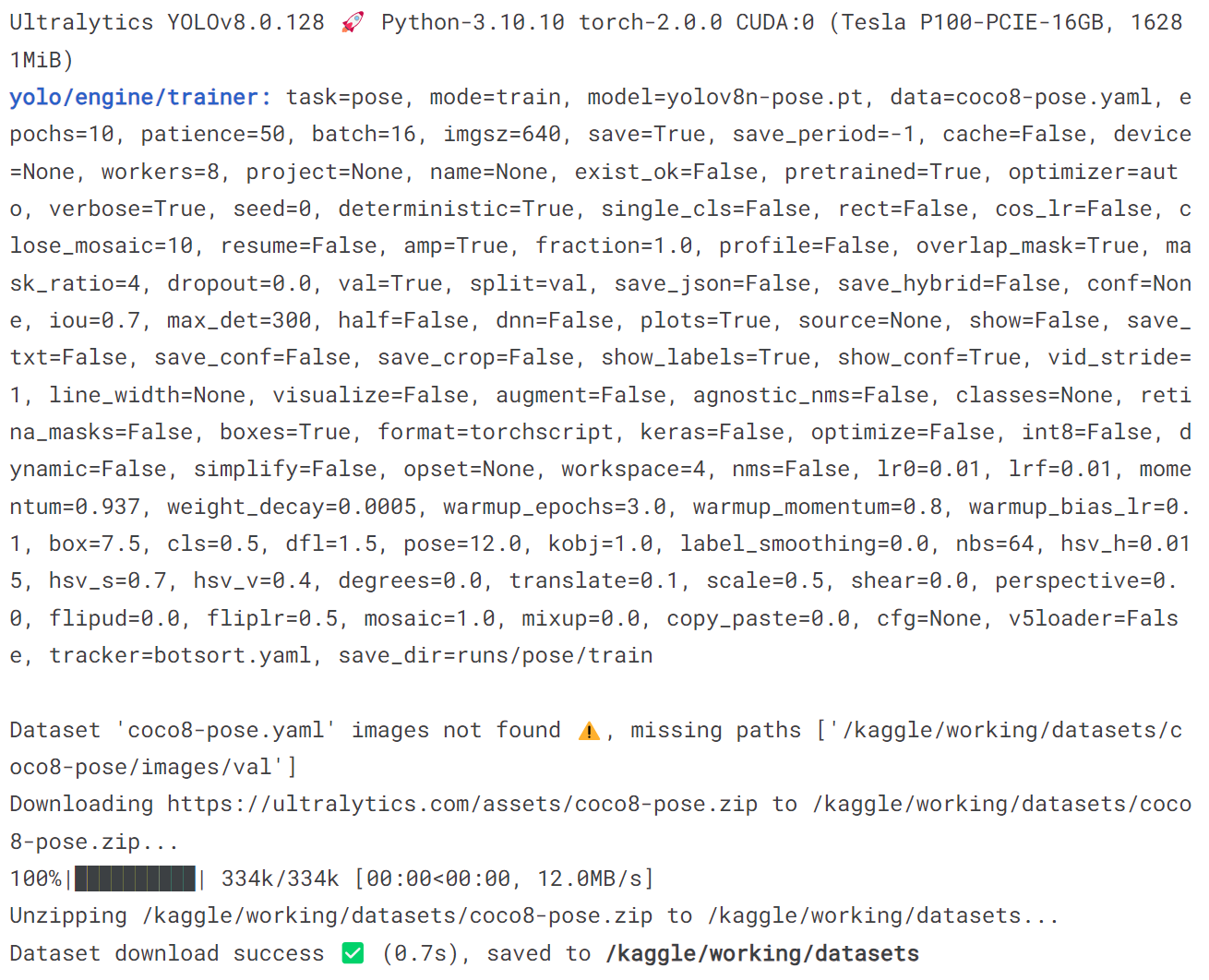

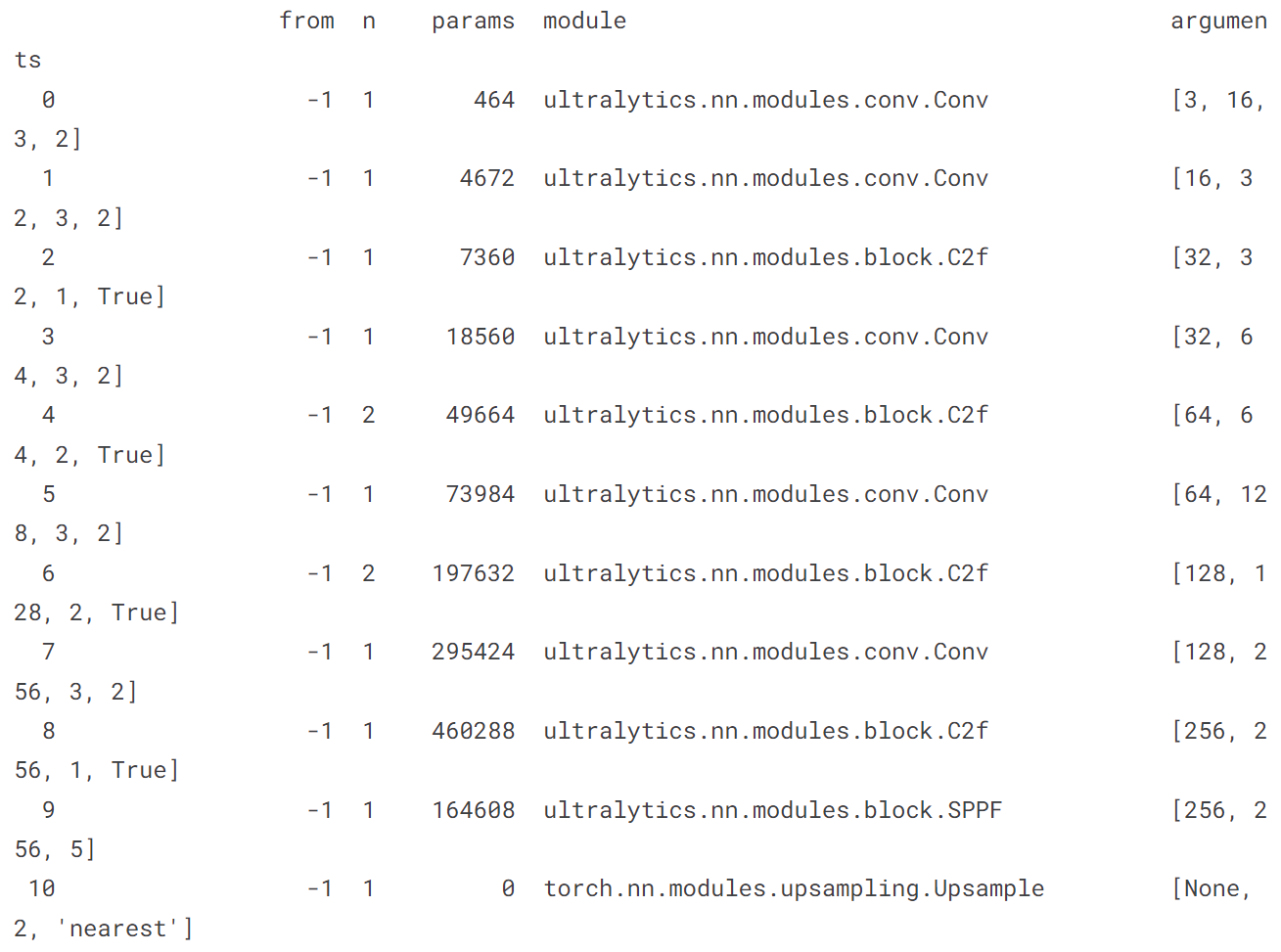

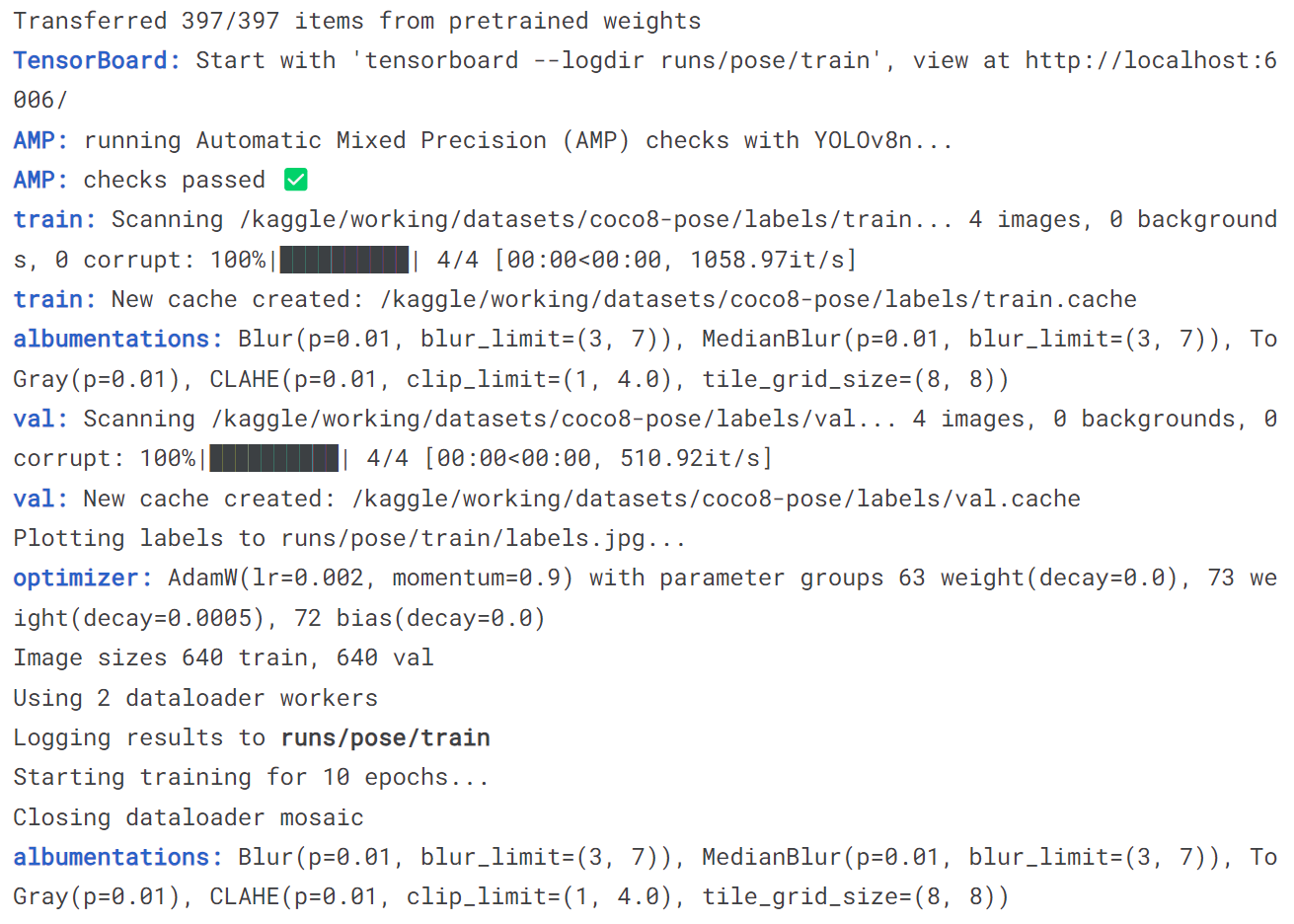

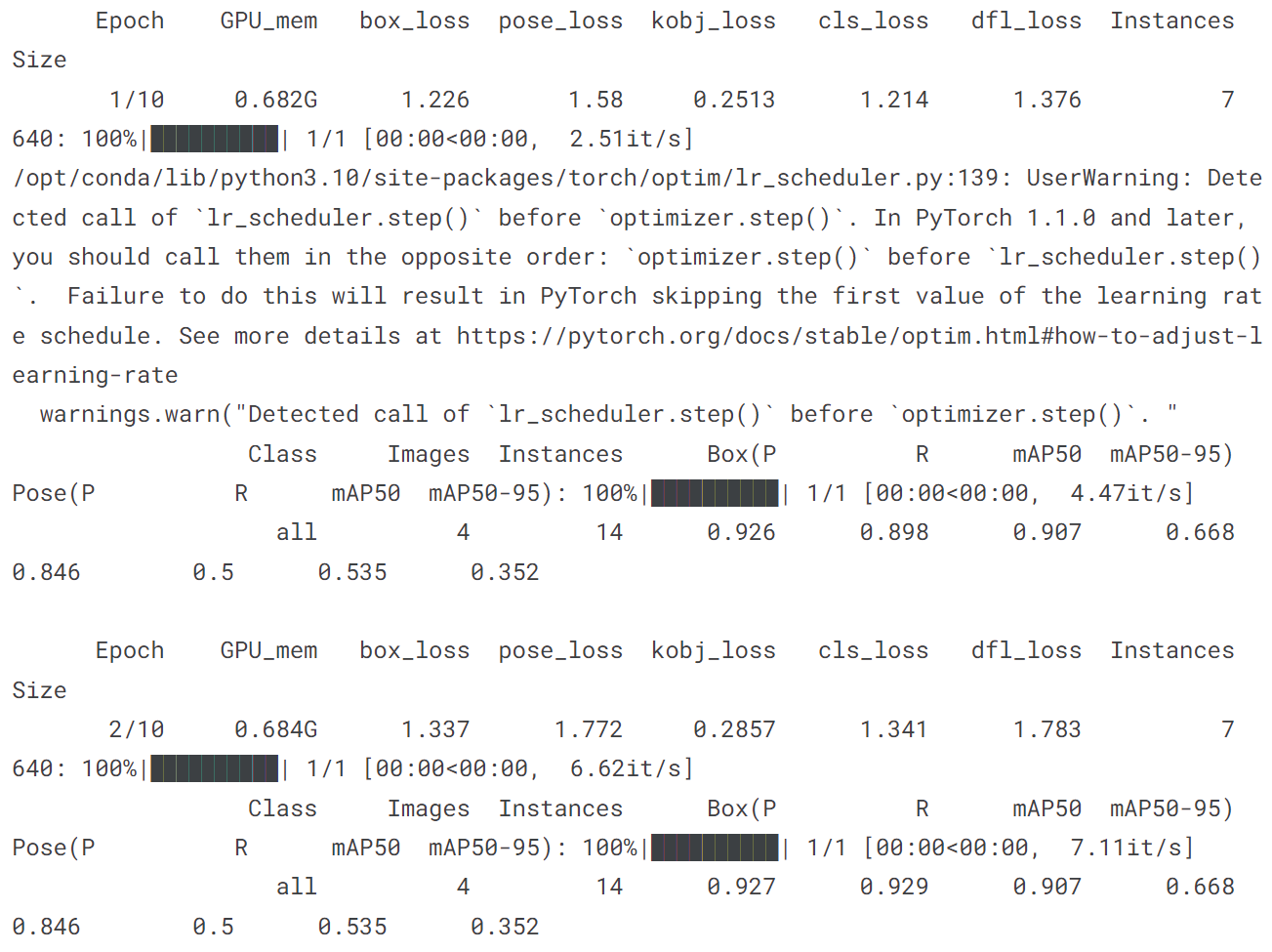

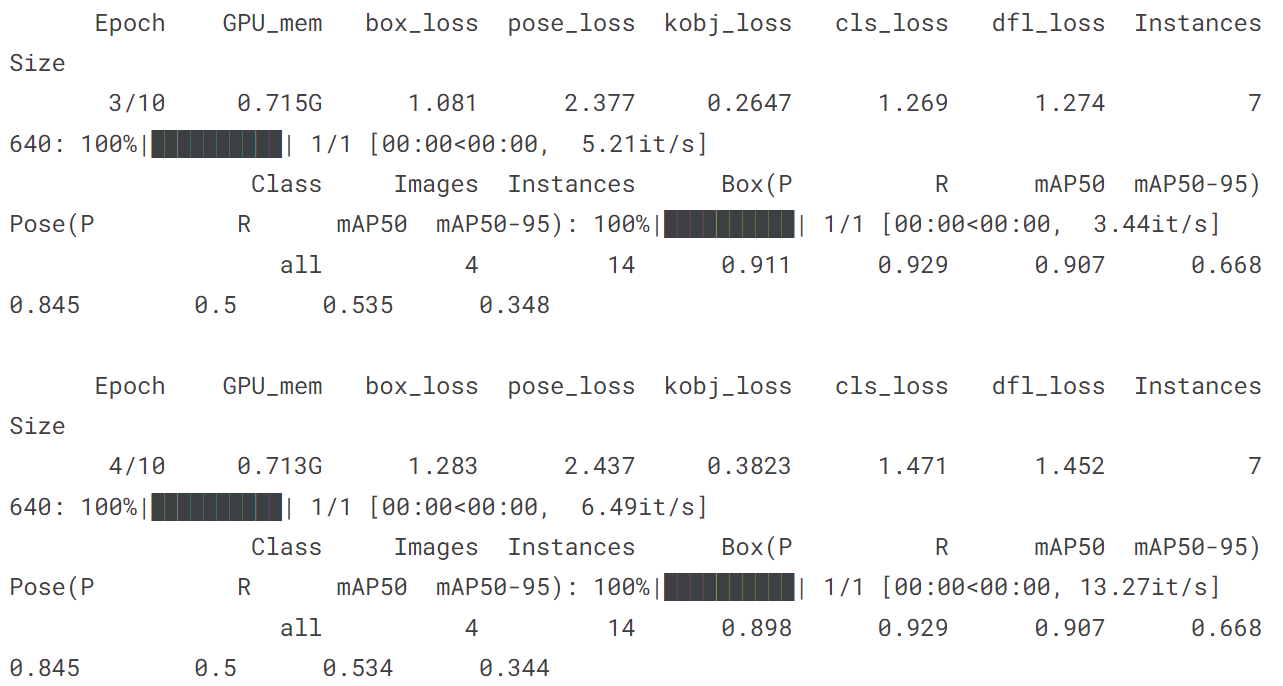

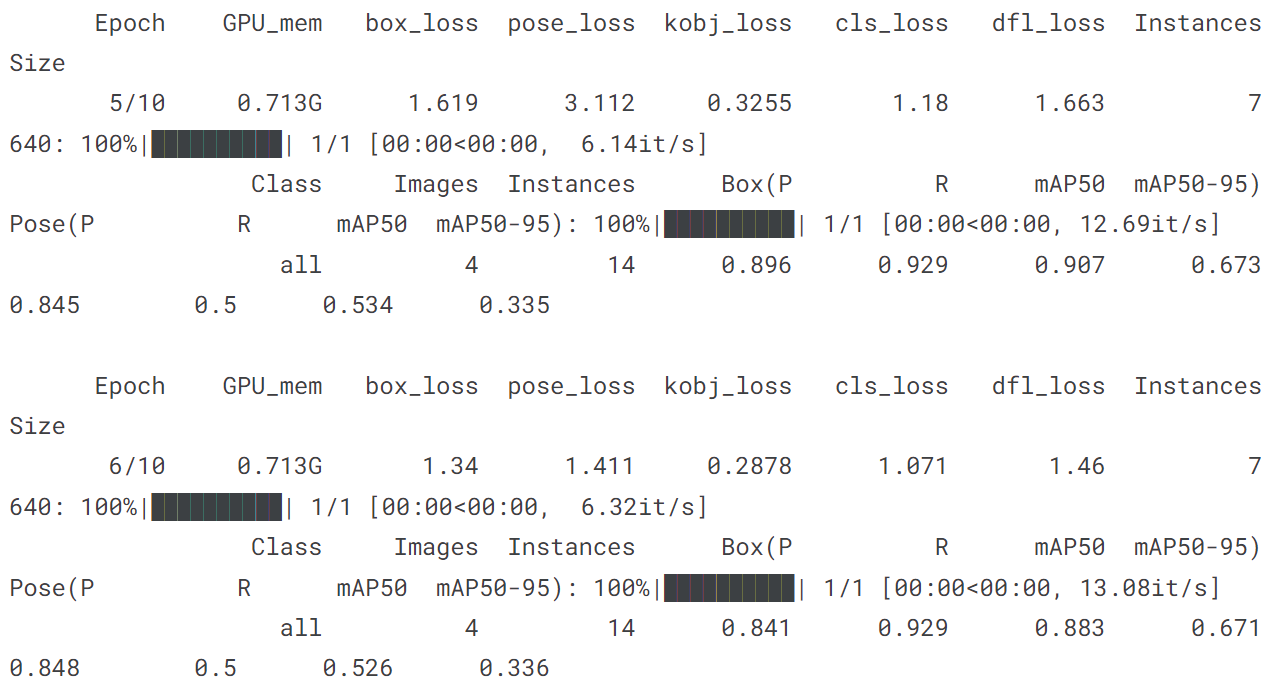

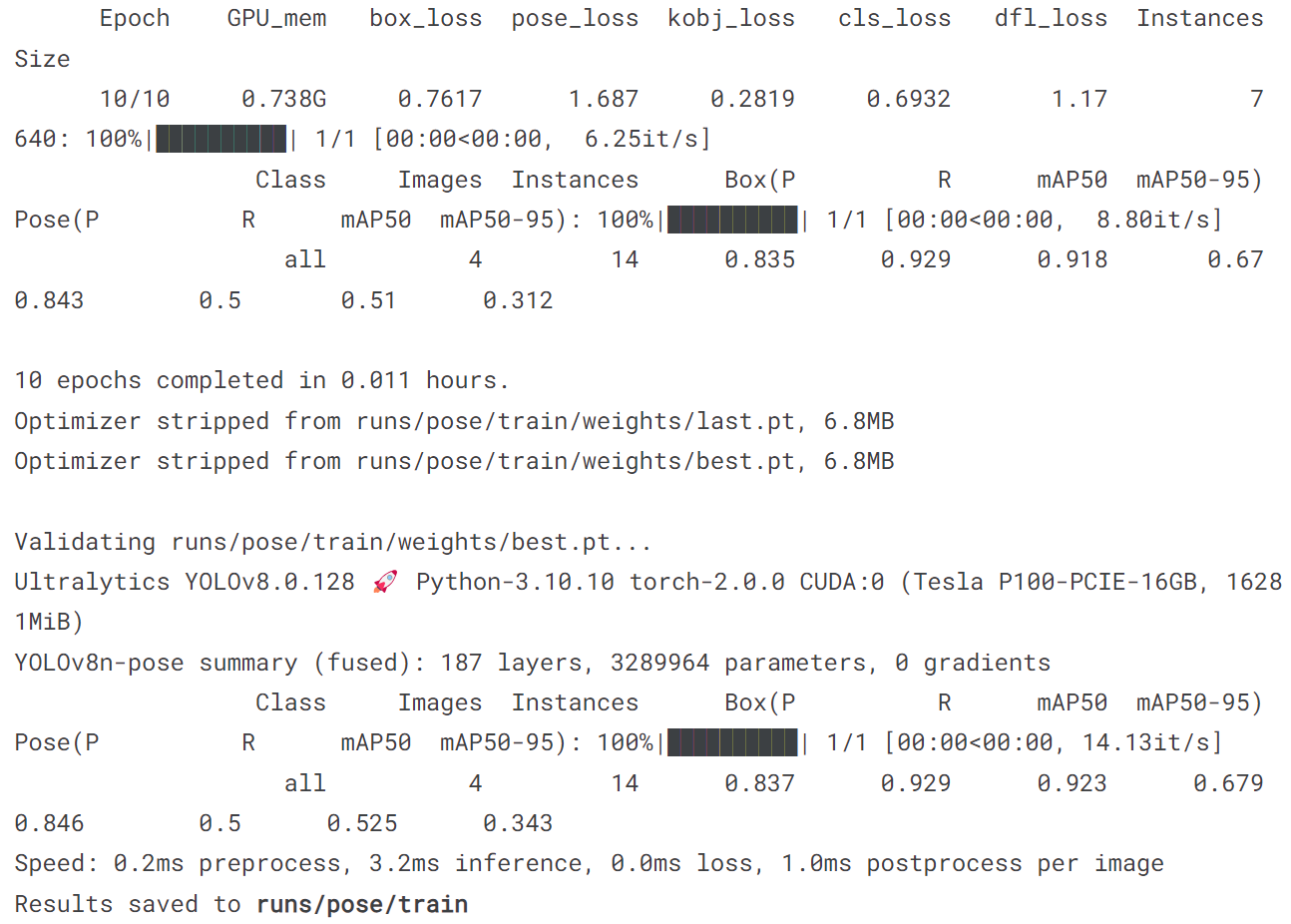

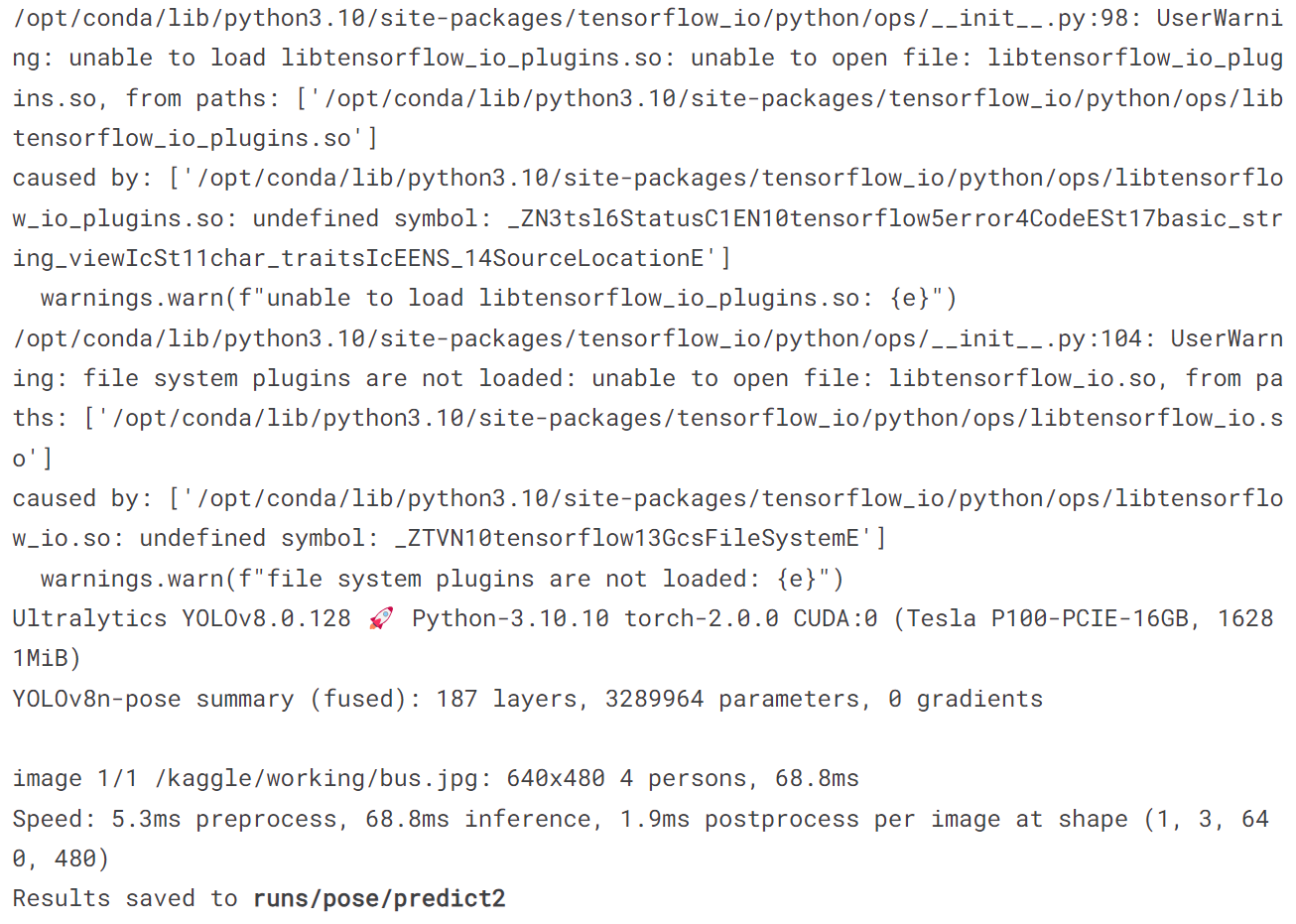

5.4 Training a pose model

model = YOLO('yolov8n-pose.pt')

model.train(data='coco8-pose.yaml', epochs=10)

model('https://ultralytics.com/images/bus.jpg')

[ultralytics.yolo.engine.results.Results object with attributes:
boxes: ultralytics.yolo.engine.results.Boxes object
keypoints: ultralytics.yolo.engine.results.Keypoints object
keys: ['boxes', 'keypoints']
masks: None
names: {0: 'person'}
orig_img: array([[[122, 148, 172],[120, 146, 170],[125, 153, 177],...,[157, 170, 184],[158, 171, 185],[158, 171, 185]],[[127, 153, 177],[124, 150, 174],[127, 155, 179],...,[158, 171, 185],[159, 172, 186],[159, 172, 186]],[[128, 154, 178],[126, 152, 176],[126, 154, 178],...,[158, 171, 185],[158, 171, 185],[158, 171, 185]],...,[[185, 185, 191],[182, 182, 188],[179, 179, 185],...,[114, 107, 112],[115, 105, 111],[116, 106, 112]],[[157, 157, 163],[180, 180, 186],[185, 186, 190],...,[107, 97, 103],[102, 92, 98],[108, 98, 104]],[[112, 112, 118],[160, 160, 166],[169, 170, 174],...,[ 99, 89, 95],[ 96, 86, 92],[102, 92, 98]]], dtype=uint8)
orig_shape: (1080, 810)
path: '/kaggle/working/bus.jpg'
probs: None
save_dir: None
speed: {'preprocess': 2.290487289428711, 'inference': 22.292375564575195, 'postprocess': 1.9459724426269531}]
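The keypoints entry holds the pose for each detected person. The sketch below assumes the standard COCO layout of 17 keypoints per person, each given as an (x, y, confidence) triple, and keeps only the points above a confidence threshold. It works on plain Python tuples: extracting them from the Keypoints object is version-dependent and not shown. The helper name `visible_keypoints` is hypothetical, and the example coordinates are made up.

```python
# Standard COCO keypoint order (17 points per person).
COCO_KEYPOINT_NAMES = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]


def visible_keypoints(keypoints, min_conf=0.5):
    """Return {name: (x, y)} for keypoints whose confidence is at least `min_conf`.

    `keypoints` is a sequence of 17 (x, y, confidence) triples for one person.
    """
    if len(keypoints) != len(COCO_KEYPOINT_NAMES):
        raise ValueError(f"expected {len(COCO_KEYPOINT_NAMES)} keypoints, got {len(keypoints)}")
    return {
        name: (x, y)
        for name, (x, y, conf) in zip(COCO_KEYPOINT_NAMES, keypoints)
        if conf >= min_conf
    }


# Made-up keypoints for illustration only: the first five are confident, the rest are not.
example = [(float(i), float(i) * 2, 0.9 if i < 5 else 0.1) for i in range(17)]
print(visible_keypoints(example))
```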

!yolo predict model='/kaggle/working/runs/pose/train/weights/best.pt' source='/kaggle/working/bus.jpg'

image = Image.open('/kaggle/working/runs/pose/predict2/bus.jpg')

plt.figure(figsize=(12, 8))

plt.imshow(image)

plt.axis('off')

plt.show()