For downloading and installing Python, Anaconda, etc., see:

Python open-source project CodeFormer: face restoration, deblurring, scratch repair and black-and-white colorization in practice https://blog.csdn.net/beijinghorn/article/details/134334021


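Friendly reminders:

(1) This is a 2023 paper;

(2) CUDA is required!

(3) It runs slowly, and the results are only average!

(4) There are 2 bugs, which are covered later.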

1 PGDiff

https://github.com/pq-yang/PGDiff

1.1 Paper

《PGDiff: Guiding Diffusion Models for Versatile Face Restoration via Partial Guidance》

Peiqing Yang¹, Shangchen Zhou¹, Qingyi Tao², Chen Change Loy¹

¹S-Lab, Nanyang Technological University; ²SenseTime Research, Singapore

Accepted to NeurIPS 2023

PGDiff builds a versatile framework that is applicable to a broad range of face restoration tasks.

If you find PGDiff helpful to your projects, please consider ⭐ this repo. Thanks!

Supported Applications

Blind Restoration

Colorization

Inpainting

Reference-based Restoration

Old Photo Restoration (w/ scratches)

[TODO] Natural Image Restoration

1.2 Updates

2023.10.10: Release our codes and models. Have fun! 😋

2023.08.16: This repo is created.

1.3 Installation

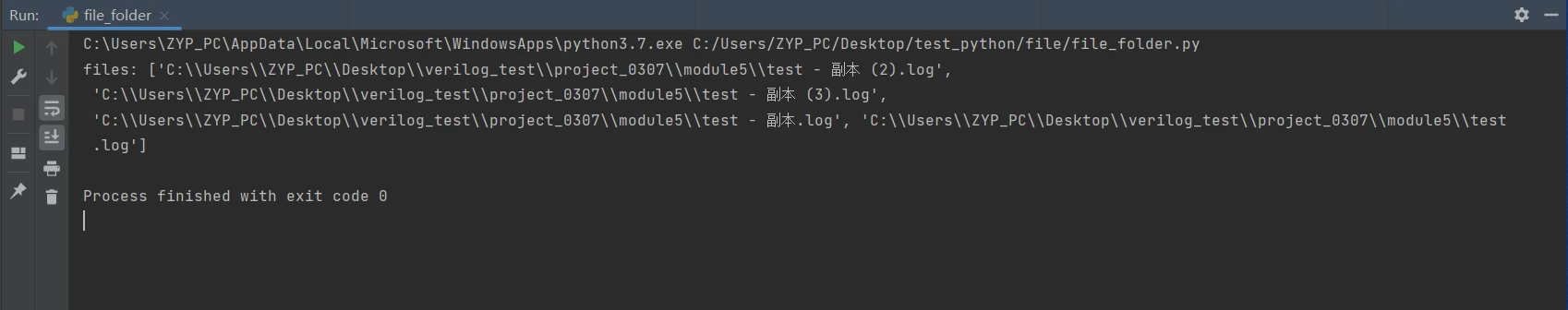

Codes and Environment

# git clone this repository

git clone https://github.com/pq-yang/PGDiff.git

cd PGDiff

# create new anaconda env

conda create -n pgdiff python=3.8 -y

conda activate pgdiff

# install python dependencies

conda install mpi4py

pip3 install -r requirements.txt

pip install -e .
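Because CUDA is mandatory, a quick preflight check can save a wasted model download. The helper below is hypothetical and not part of the PGDiff repo. It assumes the environment uses PyTorch, as the guided-diffusion code PGDiff builds on does, and it compares against Python 3.8, the version the upstream conda command pins. It reports the interpreter version and whether torch can see a CUDA device, and it does not crash if torch is not installed yet.

```python
import importlib
import importlib.util
import sys


def preflight(expected=(3, 8)):
    """Report Python version and CUDA availability (hypothetical helper)."""
    report = {
        "python": sys.version_info[:2],
        # the pgdiff conda env is created with python=3.8
        "python_matches": sys.version_info[:2] == expected,
        # find_spec returns None instead of raising when a package is absent
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "cuda_available": False,
    }
    if report["torch_installed"]:
        torch = importlib.import_module("torch")
        report["cuda_available"] = bool(torch.cuda.is_available())
    return report
```

Running `print(preflight())` inside the activated `pgdiff` environment should show `cuda_available: True` before any of the commands below are attempted.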

Pretrained Model

Download the pretrained face diffusion model from [Google Drive | BaiduPan (pw: pgdf)] to the models folder (credit to DifFace).

https://pan.baidu.com/share/init?surl=VHv48RDUXI8onMEodVFZkw

1.4 Applications

1.4.1 Blind Restoration

To extract smooth semantics from the input images, download the pretrained restorer from [Google Drive | BaiduPan (pw: pgdf)] to the models/restorer folder. The pretrained restorer provided here is modified from the ×1 generator of Real-ESRGAN. Note that the pretrained restorer can also be flexibly replaced with other restoration models by modifying the create_restorer function and specifying your own --restorer_path accordingly.

https://pan.baidu.com/share/init?surl=IkEnPGDJqFcg4dKHGCH9PQ

Commands

The guidance scale $s$ for blind face restoration (BFR) is generally taken from [0.05, 0.1]. A smaller $s$ tends to produce a higher-quality result, while a larger $s$ yields a higher-fidelity result.

For cropped and aligned faces (512x512):

python inference_pgdiff.py --task restoration --in_dir [image folder] --out_dir [result folder] --restorer_path [restorer path] --guidance_scale [s]

Example:

python inference_pgdiff.py --task restoration --in_dir testdata/cropped_faces --out_dir results/blind_restoration --guidance_scale 0.05
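Since the best $s$ depends on the input, one practical approach is to run the same folder at several scales in [0.05, 0.1] and compare the outputs side by side. The sketch below only assembles argument lists from the flags shown above (`--task`, `--in_dir`, `--out_dir`, `--guidance_scale`). Like the example command, it does not pass `--restorer_path`. The function names and the per-scale folder naming are hypothetical.

```python
import subprocess


def build_sweep(in_dir, out_root, scales, task="restoration"):
    """Return one inference_pgdiff.py argument list per guidance scale."""
    commands = []
    for s in scales:
        commands.append([
            "python", "inference_pgdiff.py",
            "--task", task,
            "--in_dir", in_dir,
            # one output folder per scale so results can be compared directly
            "--out_dir", f"{out_root}/s{s}",
            "--guidance_scale", str(s),
        ])
    return commands


def run_sweep(commands, dry_run=True):
    """Print each command; actually execute it only when dry_run is False."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
```

For example, `run_sweep(build_sweep("testdata/cropped_faces", "results/bfr_sweep", [0.05, 0.075, 0.1]))` prints three commands. Passing `dry_run=False` runs them one after another from the repo root.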

1.4.2 Colorization

We provide a set of color statistics in the adaptive_instance_normalization function as the default colorization style. One may change the colorization style by running the script scripts/color_stat_calculation.py to obtain target statistics (avg mean & avg std) and replace those in the adaptive_instance_normalization function.
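Adaptive instance normalization (AdaIN) moves each channel to a target mean and standard deviation: $y = \sigma_t (x - \mu(x)) / \sigma(x) + \mu_t$. The plain-Python sketch below illustrates that idea. It also shows one plausible way to obtain "avg mean & avg std" targets by averaging per-channel statistics over a set of reference images. This is only an illustration of the technique under stated assumptions: images are given as lists of channels of floats, and statistics are averaged per channel. It is not the code in scripts/color_stat_calculation.py or the repo's adaptive_instance_normalization function, whose color space and signature may differ.

```python
from statistics import fmean, pstdev


def channel_stats(image):
    """Per-channel (mean, std) of an image given as a list of channels."""
    return [(fmean(ch), pstdev(ch)) for ch in image]


def average_stats(images):
    """Average each channel's mean and std over a set of reference images."""
    per_image = [channel_stats(img) for img in images]
    n_channels = len(per_image[0])
    avg_mean = [fmean(st[c][0] for st in per_image) for c in range(n_channels)]
    avg_std = [fmean(st[c][1] for st in per_image) for c in range(n_channels)]
    return avg_mean, avg_std


def adain(image, target_mean, target_std, eps=1e-5):
    """Normalize each channel to zero mean / unit std, then apply target stats."""
    out = []
    for ch, mu_t, sigma_t in zip(image, target_mean, target_std):
        mu, sigma = fmean(ch), pstdev(ch)
        # eps keeps constant channels (sigma == 0) from dividing by zero
        out.append([(v - mu) / (sigma + eps) * sigma_t + mu_t for v in ch])
    return out
```

Changing the style then amounts to swapping in a different `(avg_mean, avg_std)` pair, which matches how the post describes replacing the statistics inside adaptive_instance_normalization.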

Commands

For cropped and aligned faces (512x512):

python inference_pgdiff.py --task colorization --in_dir [image folder] --out_dir [result folder] --lightness_weight [w_l] --color_weight [w_c] --guidance_scale [s]

Example:

Try different color styles for various outputs!

# style 0 (default)

python inference_pgdiff.py --task colorization --in_dir testdata/grayscale_faces --out_dir results/colorization --guidance_scale 0.01

# style 1 (uncomment lines 272-273 in `guided_diffusion/script_util.py`)

python inference_pgdiff.py --task colorization --in_dir testdata/grayscale_faces --out_dir results/colorization_style1 --guidance_scale 0.01

# style 3 (uncomment lines 278-279 in `guided_diffusion/script_util.py`)

python inference_pgdiff.py --task colorization --in_dir testdata/grayscale_faces --out_dir results/colorization_style3 --guidance_scale 0.01

1.4.3 Inpainting

A mask folder (mask_dir) must be specified, with each mask image named to match its input (masked) image. Each input mask should be a binary map with white pixels representing masked regions (refer to testdata/append_masks). We also provide a script scripts/irregular_mask_gen.py to randomly generate irregular stroke masks on input images.
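scripts/irregular_mask_gen.py is not reproduced here. As a stand-in, the sketch below shows one common way to draw random stroke masks: a bending random walk stamped with a round brush. It follows the convention stated above that white (255) marks the masked region. The function names, stroke counts and brush size are all hypothetical choices. Writing the nested list out as a PNG is left to whatever image library is already installed.

```python
import math
import random


def _stamp(mask, cy, cx, radius):
    """Paint a filled disc of value 255 centered at (cy, cx), clipped to the image."""
    h, w = len(mask), len(mask[0])
    for yy in range(max(cy - radius, 0), min(cy + radius + 1, h)):
        for xx in range(max(cx - radius, 0), min(cx + radius + 1, w)):
            if (yy - cy) ** 2 + (xx - cx) ** 2 <= radius ** 2:
                mask[yy][xx] = 255


def random_stroke_mask(height=512, width=512, n_strokes=4, max_steps=12,
                       max_len=40, brush=8, seed=None):
    """Binary mask as a 2-D list: 255 (white) = masked/missing, 0 = keep."""
    rng = random.Random(seed)
    mask = [[0] * width for _ in range(height)]
    for _ in range(n_strokes):
        fy, fx = float(rng.randrange(height)), float(rng.randrange(width))
        angle = rng.uniform(0, 2 * math.pi)
        for _ in range(rng.randint(1, max_steps)):
            # jitter the direction so each stroke bends like a hand-drawn scribble
            angle += rng.uniform(-0.8, 0.8)
            for _ in range(rng.randint(5, max_len)):
                # advance one pixel along the current direction, staying inside the image
                fy = min(max(fy + math.sin(angle), 0.0), height - 1.0)
                fx = min(max(fx + math.cos(angle), 0.0), width - 1.0)
                _stamp(mask, round(fy), round(fx), brush)
    return mask
```

Fixing `seed` makes the mask reproducible, which is convenient when comparing different guidance scales on the same damage pattern.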

Note: If you don't specify mask_dir, we will automatically treat the input image as if there are no missing pixels.
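Before a run, it may be worth checking that every input has a matching mask. The helper below is hypothetical. It assumes that "corresponding name" means an identical file name, extension included, inside mask_dir. With `mask_dir=None` it pairs every input with `None`, which mirrors the note that no mask means no missing pixels.

```python
from pathlib import Path


def pair_inputs_with_masks(in_dir, mask_dir=None, exts=(".png", ".jpg", ".jpeg")):
    """Pair each input image with the same-named mask, or with None if no mask_dir."""
    inputs = sorted(p for p in Path(in_dir).iterdir() if p.suffix.lower() in exts)
    if mask_dir is None:
        return [(p, None) for p in inputs]
    pairs = []
    for p in inputs:
        m = Path(mask_dir) / p.name
        if not m.exists():
            # fail early instead of discovering the gap halfway through inference
            raise FileNotFoundError(f"no mask named {p.name} in {mask_dir}")
        pairs.append((p, m))
    return pairs
```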

Commands

For cropped and aligned faces (512x512):

python inference_pgdiff.py --task inpainting --in_dir [image folder] --mask_dir [mask folder] --out_dir [result folder] --unmasked_weight [w_um] --guidance_scale [s]

Example:

Try different seeds for various outputs!

python inference_pgdiff.py --task inpainting --in_dir testdata/masked_faces --mask_dir testdata/append_masks --out_dir results/inpainting --guidance_scale 0.01 --seed 4321

1.4.4 Reference-based Restoration

To extract identity features from both the reference image and the intermediate results, download the pretrained ArcFace model from [Google Drive | BaiduPan (pw: pgdf)] to the models folder.

A reference folder (ref_dir) must be specified, with each reference image named to match its input image. The reference should preferably be a high-quality image of the same identity as the low-quality input. The test image pairs provided here are from the CelebRef-HQ dataset.

https://pan.baidu.com/share/init?surl=Ku-d57YYavAuScTFpvaP3Q

Commands

Similar to blind face restoration, reference-based restoration requires tuning the guidance scale $s$ according to the input quality; $s$ is generally taken from [0.05, 0.1].

For cropped and aligned faces (512x512):

python inference_pgdiff.py --task ref_restoration --in_dir [image folder] --ref_dir [reference folder] --out_dir [result folder] --ss_weight [w_ss] --ref_weight [w_ref] --guidance_scale [s]

Example:

# Choice 1: MSE Loss (default)

python inference_pgdiff.py --task ref_restoration --in_dir testdata/ref_cropped_faces --ref_dir testdata/ref_faces --out_dir results/ref_restoration_mse --guidance_scale 0.05 --ref_weight 25

# Choice 2: Cosine Similarity Loss (uncomment lines 71-72)

python inference_pgdiff.py --task ref_restoration --in_dir testdata/ref_cropped_faces --ref_dir testdata/ref_faces --out_dir results/ref_restoration_cos --guidance_scale 0.05 --ref_weight 1e4
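The two choices differ in how the identity features of the intermediate result and the reference are compared. Below is a minimal sketch on plain feature vectors. The ArcFace embedding step is left out, and the cosine variant is assumed to be used as 1 − cosine similarity so that matching identities give a loss near zero. These functions illustrate the two distance measures and are not the repo's loss code.

```python
import math


def mse_loss(a, b):
    """Mean squared error: sensitive to both direction and magnitude of the features."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def cosine_loss(a, b, eps=1e-8):
    """1 - cosine similarity: ~0 for same direction, 1 orthogonal, 2 opposite."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm + eps)
```

The cosine form ignores feature magnitude, so `[1, 2]` and `[2, 4]` count as the same identity, while MSE does not. Because the two losses produce values on different scales, the examples above pair them with very different `--ref_weight` values (25 vs 1e4). The weight should therefore be re-tuned when switching between them.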

1.4.5 Old Photo Restoration

If scratches exist, a mask folder (mask_dir) must be specified, with each mask image named to match its input image. Each input mask should be a binary map with white pixels representing masked regions. To obtain a scratch map automatically, we recommend using the scratch detection model from Bringing Old Photo Back to Life. One may also generate or adjust the scratch map with an image editing app (e.g., Photoshop).

If scratches don't exist, set the mask_dir argument to None (the default). In other words, if you don't specify mask_dir, we will automatically treat the input image as if there are no missing pixels.

Commands

For cropped and aligned faces (512x512):

python inference_pgdiff.py --task old_photo_restoration --in_dir [image folder] --mask_dir [mask folder] --out_dir [result folder] --op_lightness_weight [w_op_l] --op_color_weight [w_op_c] --guidance_scale [s]

Demos

Old photo restoration is a more complex task (restoration + colorization + inpainting); like blind face restoration, it requires tuning the guidance scale $s$ according to the input quality. Generally, $s$ is taken from [0.0015, 0.005]. A smaller $s$ tends to produce a higher-quality result, while a larger $s$ yields a higher-fidelity result.

Degradation: Light

# no scratches (don't specify mask_dir)

python inference_pgdiff.py --task old_photo_restoration --in_dir testdata/op_cropped_faces/lg --out_dir results/op_restoration/lg --guidance_scale 0.004 --seed 4321

Degradation: Medium

# no scratches (don't specify mask_dir)

python inference_pgdiff.py --task old_photo_restoration --in_dir testdata/op_cropped_faces/med --out_dir results/op_restoration/med --guidance_scale 0.002 --seed 1234

# with scratches

python inference_pgdiff.py --task old_photo_restoration --in_dir testdata/op_cropped_faces/med_scratch --mask_dir testdata/op_mask --out_dir results/op_restoration/med_scratch --guidance_scale 0.002 --seed 1111

Degradation: Heavy

python inference_pgdiff.py --task old_photo_restoration --in_dir testdata/op_cropped_faces/hv --mask_dir testdata/op_mask --out_dir results/op_restoration/hv --guidance_scale 0.0015 --seed 4321
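The demos show a pattern: the heavier the degradation, the smaller the guidance scale (0.004 light, 0.002 medium, 0.0015 heavy), and all three values fall within [0.0015, 0.005]. The hypothetical helper below encodes those demo values as starting points. It warns when a custom scale leaves the suggested range and adds `--mask_dir` only when a scratch-mask folder is given. Only flags that appear in the commands above are used.

```python
import warnings

# Starting points copied from the demo commands above; tune per photo.
DEMO_SCALES = {"light": 0.004, "medium": 0.002, "heavy": 0.0015}
SUGGESTED_RANGE = (0.0015, 0.005)


def old_photo_command(in_dir, out_dir, degradation, mask_dir=None, seed=None, scale=None):
    """Build an inference_pgdiff.py argument list for old_photo_restoration."""
    s = DEMO_SCALES[degradation] if scale is None else scale
    low, high = SUGGESTED_RANGE
    if not low <= s <= high:
        warnings.warn(f"guidance scale {s} is outside the usual range [{low}, {high}]")
    cmd = ["python", "inference_pgdiff.py", "--task", "old_photo_restoration",
           "--in_dir", in_dir]
    if mask_dir is not None:
        # only pass a mask folder when scratches exist; otherwise no pixels are missing
        cmd += ["--mask_dir", mask_dir]
    cmd += ["--out_dir", out_dir, "--guidance_scale", str(s)]
    if seed is not None:
        cmd += ["--seed", str(seed)]
    return cmd
```

With `in_dir="testdata/op_cropped_faces/hv"`, `mask_dir="testdata/op_mask"`, `degradation="heavy"` and `seed=4321`, `" ".join(...)` reproduces the heavy-degradation demo command above.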

Customize your results with different color styles!

# style 1 (uncomment lines 272-273 in `guided_diffusion/script_util.py`)

python inference_pgdiff.py --task old_photo_restoration --in_dir testdata/op_cropped_faces/hv --mask_dir testdata/op_mask --out_dir results/op_restoration/hv_style1 --guidance_scale 0.0015 --seed 4321

# style 2 (uncomment lines 275-276 in `guided_diffusion/script_util.py`)

python inference_pgdiff.py --task old_photo_restoration --in_dir testdata/op_cropped_faces/hv --mask_dir testdata/op_mask --out_dir results/op_restoration/hv_style2 --guidance_scale 0.0015 --seed 4321

1.5 Citation

If you find our work useful for your research, please consider citing:

@inproceedings{yang2023pgdiff,
  title={{PGDiff}: Guiding Diffusion Models for Versatile Face Restoration via Partial Guidance},
  author={Yang, Peiqing and Zhou, Shangchen and Tao, Qingyi and Loy, Chen Change},
  booktitle={NeurIPS},
  year={2023}
}

1.6 License

This project is licensed under NTU S-Lab License 1.0. Redistribution and use should follow this license.

1.7 Acknowledgement

This study is supported under the RIE2020 Industry Alignment Fund – Industry Collaboration Projects (IAF-ICP) Funding Initiative, as well as cash and in-kind contribution from the industry partner(s).

This implementation is based on guided-diffusion. We also adopt the pretrained face diffusion model from DifFace, the pretrained identity feature extraction model from ArcFace, and the restorer backbone from Real-ESRGAN. Thanks for their awesome works!

1.8 Contact

If you have any questions, please feel free to reach out at peiqingyang99@outlook.com.