import torch

import numpy as np

import torch. nn as nn

import matplotlib. pyplot as plt

def trend ( time, slope= 0 ) : return slope * time

def seasonal_pattern ( season_time) : return np. where( season_time < 0.4 , np. cos( season_time * 2 * np. pi) , 1 / np. exp( 3 * season_time) )

def seasonality ( time, period, amplitude= 1 , phase= 0 ) : season_time = ( ( time + phase) % period) / periodreturn amplitude * seasonal_pattern( season_time)

def noise ( time, noise_level= 1 ) : return np. random. randn( len ( time) ) * noise_level

X = torch. arange( 1 , 1001 )

Y = trend( X, 0.3 ) + seasonality( X, period= 365 , amplitude= 30 ) + noise( X, 15 ) + 200

X. shape, Y. shape

(torch.Size([1000]), torch.Size([1000]))

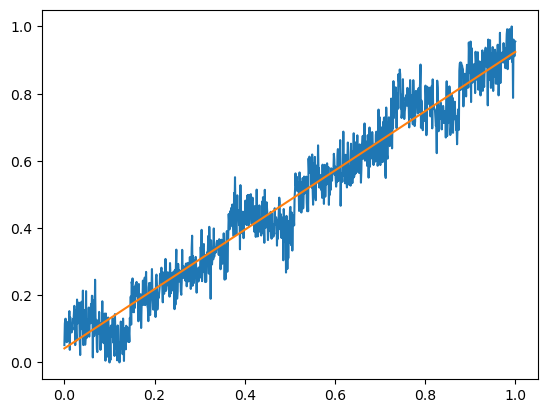

plt. plot( X. numpy( ) , Y. numpy( ) ) ;

X = X. type ( torch. float32)

Y = Y. type ( torch. float32)

X. dtype, Y. dtype

(torch.float32, torch.float32)

X = ( X - X. min ( ) ) / ( X. max ( ) - X. min ( ) )

Y = ( Y - Y. min ( ) ) / ( Y. max ( ) - Y. min ( ) )

plt. plot( X. numpy( ) , Y. numpy( ) ) ;

k = nn. Parameter( torch. rand( 1 , dtype= torch. float32) )

b = nn. Parameter( torch. rand( 1 , dtype= torch. float32) )

k, b

(Parameter containing:tensor([0.6231], requires_grad=True),Parameter containing:tensor([0.0044], requires_grad=True))

def linear_model ( x) : return k * x + b

optimizer = torch. optim. SGD( [ k, b] , lr= 0.01 )

loss_func = nn. MSELoss( )

epoch_num = 2000

for epoch in range ( epoch_num) : y_pred = linear_model( X) loss = loss_func( y_pred, Y) optimizer. zero_grad( ) loss. backward( ) optimizer. step( )

k, b

(Parameter containing:tensor([0.8825], requires_grad=True),Parameter containing:tensor([0.0419], requires_grad=True))

k2 = k. detach( ) . numpy( ) [ 0 ]

b2 = b. detach( ) . numpy( ) [ 0 ] plt. plot( X, Y) ;

plt. plot( X, k2 * X + b2) ;

class LinearModel ( nn. Module) : def __init__ ( self) : super ( ) . __init__( ) self. k = nn. Parameter( torch. rand( 1 , dtype= torch. float32) ) self. b = nn. Parameter( torch. rand( 1 , dtype= torch. float32) ) def forward ( self, x) : return self. k * x + self. b

model = LinearModel( )

optimizer = torch. optim. SGD( [ k, b] , lr= 0.01 )

loss_func = nn. MSELoss( ) epoch_num = 2000

for epoch in range ( epoch_num) : y_pred = model( X) loss = loss_func( y_pred, Y) optimizer. zero_grad( ) loss. backward( ) optimizer. step( )

k, b

(Parameter containing:tensor([0.8825], requires_grad=True),Parameter containing:tensor([0.0419], requires_grad=True))

k2 = k. detach( ) . numpy( ) [ 0 ]

b2 = b. detach( ) . numpy( ) [ 0 ] plt. plot( X, Y) ;

plt. plot( X, k2 * X + b2) ;

model = LinearModel( )

optimizer = torch. optim. SGD( [ k, b] , lr= 0.01 )

loss_func = nn. MSELoss( )

epoch_num = 2000

iter_step = 10

batch_size = 100

for epoch in range ( epoch_num) : for i in range ( iter_step) : random_samples = torch. randint( X. size( ) [ 0 ] , ( batch_size, ) ) X_i, Y_i = X[ random_samples] , Y[ random_samples] y_pred = model( X_i) loss = loss_func( y_pred, Y_i) optimizer. zero_grad( ) loss. backward( ) optimizer. step( )

k, b

(Parameter containing:tensor([0.8825], requires_grad=True),Parameter containing:tensor([0.0419], requires_grad=True))

k2 = k. detach( ) . numpy( ) [ 0 ]

b2 = b. detach( ) . numpy( ) [ 0 ] plt. plot( X, Y) ;

plt. plot( X, k2 * X + b2) ;