目录

介绍

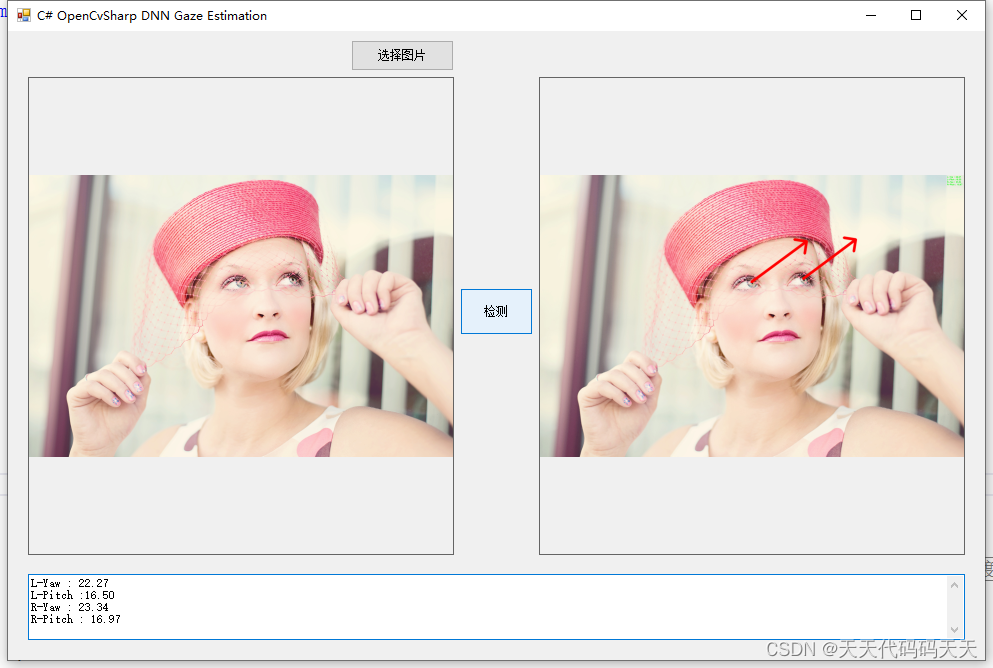

效果

模型信息

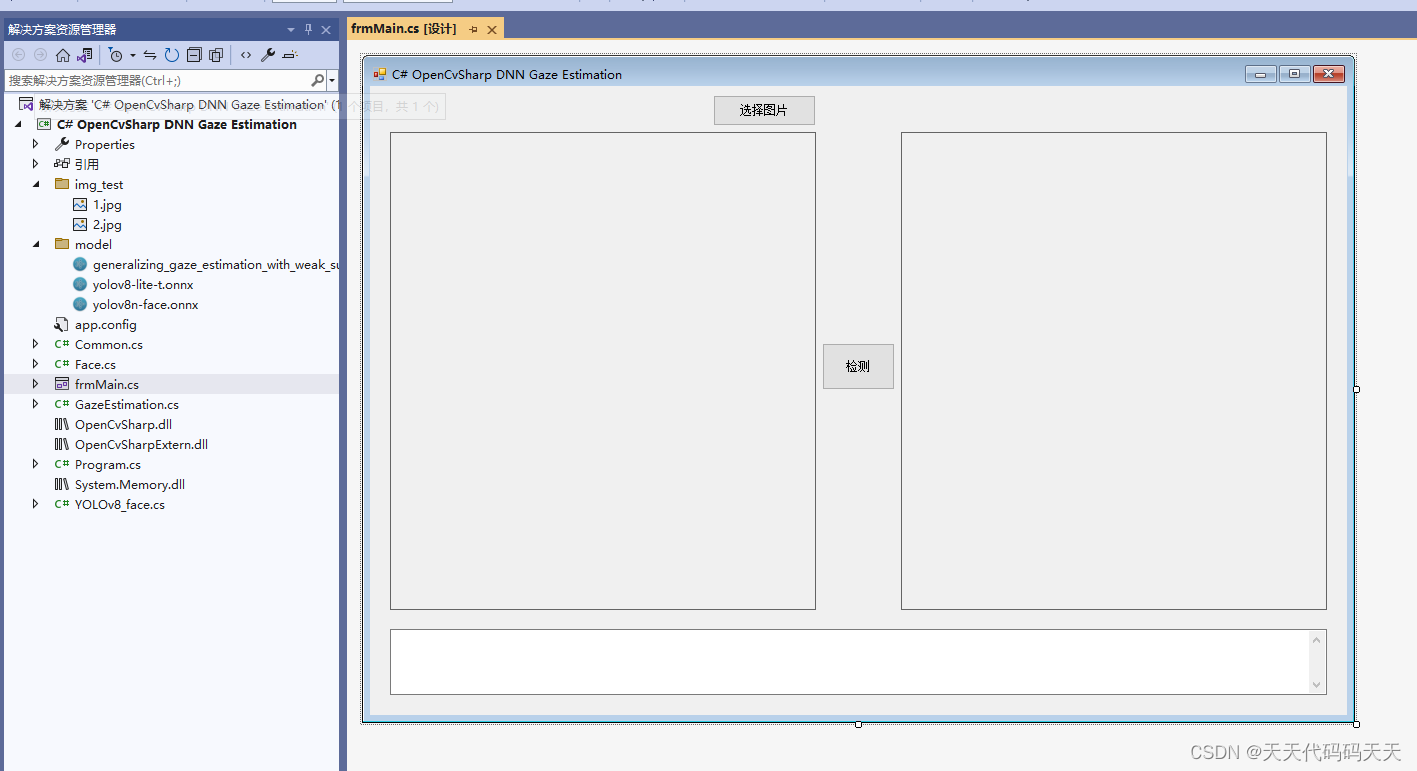

项目

代码

frmMain.cs

GazeEstimation.cs

下载

C# OpenCvSharp DNN Gaze Estimation

Introduction

Training source code: https://github.com/deepinsight/insightface/tree/master/reconstruction/gaze

Results

Model information

Inputs

-------------------------

name:input

tensor:Float[1, 3, 160, 160]

---------------------------------------------------------------

Outputs

-------------------------

name:output

tensor:Float[1, 962, 3]

---------------------------------------------------------------
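The output shape `[1, 962, 3]` can be read as two eyes with 481 landmarks each and three coordinates per landmark, which is how GazeEstimation.cs indexes it (`num_eye = 481`, with the second eye starting at offset `481 * 3`). The following is a minimal sketch of that indexing only. `GazeOutputLayout` and `Offset` are hypothetical names introduced for illustration and are not part of the project.

```csharp
using System;

// Hypothetical helper (not part of the original project): shows how a flat
// float[962 * 3] buffer maps to (eye, landmark, coordinate).
public static class GazeOutputLayout
{
    public const int PointsPerEye = 481; // num_eye in GazeEstimation.cs
    public const int Coords = 3;         // x, y, z per landmark

    // Flat index of the first coordinate of landmark `point` of eye `eye`.
    // eye 0 is the first block of 481 points (treated as the left eye in
    // GazeEstimation.cs), eye 1 is the second block.
    public static int Offset(int eye, int point)
    {
        if (eye < 0 || eye > 1)
            throw new ArgumentOutOfRangeException(nameof(eye));
        if (point < 0 || point >= PointsPerEye)
            throw new ArgumentOutOfRangeException(nameof(point));
        return (eye * PointsPerEye + point) * Coords;
    }

    public static void Main()
    {
        // Stand-in for the network output: 2 eyes * 481 points * 3 coords.
        float[] output = new float[2 * PointsPerEye * Coords];
        Console.WriteLine(output.Length);   // 2886

        // 248 is the first entry of iris_idx_481 in GazeEstimation.cs.
        Console.WriteLine(Offset(0, 248));  // 744
        Console.WriteLine(Offset(1, 248));  // 2187
    }
}
```

With this layout, the iris center of one eye is the average of the eight landmarks listed in `iris_idx_481`, read at `Offset(eye, index)`.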

Project

Code

frmMain.cs

```csharp
using OpenCvSharp;
using OpenCvSharp.Dnn;
using System;
using System.Collections.Generic;
using System.Drawing;
using System.Windows.Forms;

namespace OpenCvSharp_Yolov8_Demo
{
    public partial class frmMain : Form
    {
        public frmMain()
        {
            InitializeComponent();
        }

        // File dialog filter for the supported image types.
        string fileFilter = "*.*|*.bmp;*.jpg;*.jpeg;*.tif;*.tiff;*.png";
        string image_path = "";
        string startupPath;

        Mat image;
        Mat result_image;

        // YOLOv8_face and Face come from the face detector code, which is not listed in this post.
        YOLOv8_face face_detector = new YOLOv8_face("model/yolov8n-face.onnx", 0.45f, 0.5f);
        GazeEstimation gaze_predictor = new GazeEstimation("model/generalizing_gaze_estimation_with_weak_supervision_from_synthetic_views_1x3x160x160.onnx");

        // Load a default test image on startup.
        private void Form1_Load(object sender, EventArgs e)
        {
            image_path = "img_test/1.jpg";
            pictureBox1.Image = new Bitmap(image_path);
            image = new Mat(image_path);
        }

        // Choose an image and show it in the left picture box.
        private void button1_Click(object sender, EventArgs e)
        {
            OpenFileDialog ofd = new OpenFileDialog();
            ofd.Filter = fileFilter;
            if (ofd.ShowDialog() != DialogResult.OK) return;

            pictureBox1.Image = null;
            image_path = ofd.FileName;
            pictureBox1.Image = new Bitmap(image_path);
            textBox1.Text = "";
            pictureBox2.Image = null;
        }

        // Detect faces, estimate gaze for each face, and show the result.
        private void button2_Click(object sender, EventArgs e)
        {
            if (image_path == "")
            {
                return;
            }

            textBox1.Text = "";
            pictureBox2.Image = null;
            button2.Enabled = false;
            Application.DoEvents();

            image = new Mat(image_path);
            List<Face> ltFace = face_detector.Detect(new Mat(image_path));

            if (ltFace.Count > 0)
            {
                result_image = image.Clone();
                //face_detector.DrawPred(ltFace, result_image);

                // Note: info is overwritten on each iteration, so the text box
                // shows the angles of the last face only.
                String info = "";
                foreach (Face item in ltFace)
                {
                    gaze_predictor.Detect(image, item);
                    result_image = gaze_predictor.DrawOn(result_image, item, out info);
                }

                pictureBox2.Image = new Bitmap(result_image.ToMemoryStream());
                textBox1.Text = info;
            }
            else
            {
                // "无信息" means "no information": no face was detected.
                textBox1.Text = "无信息";
            }

            button2.Enabled = true;
        }
    }
}
```


GazeEstimation.cs

```csharp
using OpenCvSharp;
using OpenCvSharp.Dnn;
using System;
using System.Linq;
using System.Text;

// Uses pointers, so the project must be compiled with unsafe code enabled.
// Face (with rect and kpt) comes from the face detector code, which is not
// listed in this post; this class must be able to see that type.
public unsafe class GazeEstimation
{
    float[] mean = new float[] { 0.5f, 0.5f, 0.5f };
    float[] std = new float[] { 0.5f, 0.5f, 0.5f };
    // Indices of the 8 iris landmarks within one eye's 481 points.
    int[] iris_idx_481 = new int[] { 248, 252, 224, 228, 232, 236, 240, 244 };
    int num_eye = 481;     // landmarks per eye
    int input_size = 160;  // network input width and height
    float[] eye_kps;       // 2 eyes * 481 points * 3 coordinates
    Net net;

    public GazeEstimation(string modelpath)
    {
        net = CvDnn.ReadNetFromOnnx(modelpath);
        eye_kps = new float[num_eye * 2 * 3];
    }

    // Crops the eye region of one face, runs the network, and stores the
    // landmarks in eye_kps, mapped back to source-image coordinates.
    public void Detect(Mat img, Face box)
    {
        Point kps_right_eye = box.kpt[1];
        Point kps_left_eye = box.kpt[0];
        float[] center = new float[] { (kps_right_eye.X + kps_left_eye.X) * 0.5f, (kps_right_eye.Y + kps_left_eye.Y) * 0.5f };
        float _size = (float)(Math.Max(box.rect.Width / 1.5f, Math.Abs(kps_right_eye.X - kps_left_eye.X)) * 1.5f);
        float _scale = input_size / _size;

        // transform: scale, then move the midpoint between the eyes to the crop center
        float cx = center[0] * _scale;
        float cy = center[1] * _scale;
        float[] data = new float[] { _scale, 0, (float)(-cx + input_size * 0.5), 0, _scale, (float)(-cy + input_size * 0.5) };
        Mat M = new Mat(2, 3, MatType.CV_32F, data);
        Mat cropped = new Mat();
        Cv2.WarpAffine(img, cropped, M, new Size(input_size, input_size));

        Mat rgbimg = new Mat();
        Cv2.CvtColor(cropped, rgbimg, ColorConversionCodes.BGR2RGB);
        Mat normalized_mat = Normalize(rgbimg);
        Mat blob = CvDnn.BlobFromImage(normalized_mat);

        net.SetInput(blob);
        Mat[] outs = new Mat[3] { new Mat(), new Mat(), new Mat() };
        string[] outBlobNames = net.GetUnconnectedOutLayersNames().ToArray();
        net.Forward(outs, outBlobNames);
        float* opred = (float*)outs[0].Data; // outs[0] has shape (1, 962, 3)

        Mat IM = new Mat();
        Cv2.InvertAffineTransform(M, IM);

        // trans_points: apply the inverse transform to x and y, and scale z
        float scale = (float)Math.Sqrt(IM.At<float>(0, 0) * IM.At<float>(0, 0) + IM.At<float>(0, 1) * IM.At<float>(0, 1));
        int row = outs[0].Size(1);
        int col = outs[0].Size(2);
        for (int i = 0; i < row; i++)
        {
            eye_kps[i * 3] = IM.At<float>(0, 0) * opred[i * 3] + IM.At<float>(0, 1) * opred[i * 3 + 1] + IM.At<float>(0, 2);
            eye_kps[i * 3 + 1] = IM.At<float>(1, 0) * opred[i * 3] + IM.At<float>(1, 1) * opred[i * 3 + 1] + IM.At<float>(1, 2);
            eye_kps[i * 3 + 2] = opred[i * 3 + 2] * scale;
        }
    }

    // Draws one gaze arrow per eye and the yaw/pitch labels.
    // Note: this swaps and rescales eye_kps in place, so call it once per Detect.
    public Mat DrawOn(Mat srcimg, Face box, out string info)
    {
        StringBuilder sb = new StringBuilder();
        float rescale = 300.0f / box.rect.Width;
        Mat eimg = new Mat();
        Cv2.Resize(srcimg, eimg, new Size(), rescale, rescale);

        // draw_item: swap x and y of every landmark and apply the rescale factor
        int row = num_eye * 2;
        for (int i = 0; i < row; i++)
        {
            float tmp = eye_kps[i * 3];
            eye_kps[i * 3] = eye_kps[i * 3 + 1] * rescale;
            eye_kps[i * 3 + 1] = tmp * rescale;
            eye_kps[i * 3 + 2] *= rescale;
        }

        // angles_and_vec_from_eye: first 481 points are the left eye, the rest the right eye
        int slice = num_eye * 3;
        float[] theta_x_y_vec_l = new float[5];
        float[] eye_kps_l = new float[481 * 3];
        float[] eye_kps_r = new float[481 * 3];
        Array.Copy(eye_kps, 0, eye_kps_l, 0, 481 * 3);
        Array.Copy(eye_kps, 481 * 3, eye_kps_r, 0, 481 * 3);
        angles_and_vec_from_eye(eye_kps_l, iris_idx_481, theta_x_y_vec_l);
        float[] theta_x_y_vec_r = new float[5];
        angles_and_vec_from_eye(eye_kps_r, iris_idx_481, theta_x_y_vec_r);
        float[] gaze_pred = new float[] { (float)((theta_x_y_vec_l[0] + theta_x_y_vec_r[0]) * 0.5), (float)((theta_x_y_vec_l[1] + theta_x_y_vec_r[1]) * 0.5) };

        float diag = (float)Math.Sqrt((float)eimg.Rows * eimg.Cols);

        // Iris centers: average of the 8 iris landmarks of each eye
        float[] eye_pos_left = new float[] { 0, 0 };
        float[] eye_pos_right = new float[] { 0, 0 };
        for (int i = 0; i < 8; i++)
        {
            int ind = iris_idx_481[i];
            eye_pos_left[0] += eye_kps[ind * 3];
            eye_pos_left[1] += eye_kps[ind * 3 + 1];
            eye_pos_right[0] += eye_kps[slice + ind * 3];
            eye_pos_right[1] += eye_kps[slice + ind * 3 + 1];
        }
        eye_pos_left[0] /= 8;
        eye_pos_left[1] /= 8;
        eye_pos_right[0] /= 8;
        eye_pos_right[1] /= 8;

        float dx = (float)(0.4 * diag * Math.Sin(theta_x_y_vec_l[1]));
        float dy = (float)(0.4 * diag * Math.Sin(theta_x_y_vec_l[0]));
        Point eye_left_a = new Point(eye_pos_left[1], eye_pos_left[0]);           // start point of the left-eye arrow
        Point eye_left_b = new Point(eye_pos_left[1] + dx, eye_pos_left[0] + dy); // end point of the left-eye arrow
        Cv2.ArrowedLine(eimg, eye_left_a, eye_left_b, new Scalar(0, 0, 255), 5, LineTypes.AntiAlias, 0, 0.18);
        float yaw_deg_l = (float)(theta_x_y_vec_l[1] * (180 / Math.PI));
        float pitch_deg_l = (float)(-theta_x_y_vec_l[0] * (180 / Math.PI));

        dx = (float)(0.4 * diag * Math.Sin(theta_x_y_vec_r[1]));
        dy = (float)(0.4 * diag * Math.Sin(theta_x_y_vec_r[0]));
        Point eye_right_a = new Point(eye_pos_right[1], eye_pos_right[0]);           // start point of the right-eye arrow
        Point eye_right_b = new Point(eye_pos_right[1] + dx, eye_pos_right[0] + dy); // end point of the right-eye arrow
        Cv2.ArrowedLine(eimg, eye_right_a, eye_right_b, new Scalar(0, 0, 255), 5, LineTypes.AntiAlias, 0, 0.18);
        float yaw_deg_r = (float)(theta_x_y_vec_r[1] * (180 / Math.PI));
        float pitch_deg_r = (float)(-theta_x_y_vec_r[0] * (180 / Math.PI));

        Cv2.Resize(eimg, eimg, new Size(srcimg.Cols, srcimg.Rows));

        // draw Yaw, Pitch
        string label = String.Format("L-Yaw : {0:f2}", yaw_deg_l);
        sb.AppendLine(label);
        Cv2.PutText(eimg, label, new Point(eimg.Cols - 200, 30), HersheyFonts.HersheySimplex, 0.7, new Scalar(0, 255, 0), 2);
        label = String.Format("L-Pitch :{0:f2}", pitch_deg_l);
        sb.AppendLine(label);
        Cv2.PutText(eimg, label, new Point(eimg.Cols - 200, 60), HersheyFonts.HersheySimplex, 0.7, new Scalar(0, 255, 0), 2);
        label = String.Format("R-Yaw : {0:f2}", yaw_deg_r);
        sb.AppendLine(label);
        Cv2.PutText(eimg, label, new Point(eimg.Cols - 200, 90), HersheyFonts.HersheySimplex, 0.7, new Scalar(0, 255, 0), 2);
        label = String.Format("R-Pitch : {0:f2}", pitch_deg_r);
        sb.AppendLine(label);
        Cv2.PutText(eimg, label, new Point(eimg.Cols - 200, 120), HersheyFonts.HersheySimplex, 0.7, new Scalar(0, 255, 0), 2);

        info = sb.ToString();
        return eimg;
    }

    // Converts to float and applies (pixel / 255 - mean) / std per channel, in place.
    public Mat Normalize(Mat src)
    {
        Mat[] bgr = src.Split();
        for (int i = 0; i < bgr.Length; ++i)
        {
            bgr[i].ConvertTo(bgr[i], MatType.CV_32FC1, 1.0 / (255.0 * std[i]), (0.0 - mean[i]) / std[i]);
        }
        Cv2.Merge(bgr, src);
        foreach (Mat channel in bgr)
        {
            channel.Dispose();
        }
        return src;
    }

    /// <summary>
    /// eye holds the landmarks of one eye, shape (481, 3), flattened.
    /// iris_lms_idx has length 8.
    /// theta_x_y_vec receives 5 values: theta_x, theta_y, vec[0], vec[1], vec[2].
    /// </summary>
    void angles_and_vec_from_eye(float[] eye, int[] iris_lms_idx, float[] theta_x_y_vec)
    {
        // Mean of the first 32 landmarks, used as the reference point
        float[] mean = new float[] { 0, 0, 0 };
        for (int i = 0; i < 32; i++)
        {
            mean[0] += eye[i * 3];
            mean[1] += eye[i * 3 + 1];
            mean[2] += eye[i * 3 + 2];
        }
        mean[0] /= 32;
        mean[1] /= 32;
        mean[2] /= 32;

        // Iris landmarks relative to the reference point
        float[] p_iris = new float[8 * 3];
        for (int i = 0; i < 8; i++)
        {
            int ind = iris_lms_idx[i];
            p_iris[i * 3] = eye[ind * 3] - mean[0];
            p_iris[i * 3 + 1] = eye[ind * 3 + 1] - mean[1];
            p_iris[i * 3 + 2] = eye[ind * 3 + 2] - mean[2];
        }

        float[] mean_p_iris = new float[] { 0, 0, 0 };
        for (int i = 0; i < 8; i++)
        {
            mean_p_iris[0] += p_iris[i * 3];
            mean_p_iris[1] += p_iris[i * 3 + 1];
            mean_p_iris[2] += p_iris[i * 3 + 2];
        }
        mean_p_iris[0] /= 8;
        mean_p_iris[1] /= 8;
        mean_p_iris[2] /= 8;

        // Normalize to a unit gaze vector
        float l2norm_p_iris = (float)Math.Sqrt(mean_p_iris[0] * mean_p_iris[0] + mean_p_iris[1] * mean_p_iris[1] + mean_p_iris[2] * mean_p_iris[2]);
        theta_x_y_vec[2] = mean_p_iris[0] / l2norm_p_iris; // vec[0]
        theta_x_y_vec[3] = mean_p_iris[1] / l2norm_p_iris; // vec[1]
        theta_x_y_vec[4] = mean_p_iris[2] / l2norm_p_iris; // vec[2]

        // angles_from_vec
        float x = -theta_x_y_vec[4];
        float y = theta_x_y_vec[3];
        float z = -theta_x_y_vec[2];
        float theta = (float)Math.Atan2(y, x);
        float phi = (float)(Math.Atan2(Math.Sqrt(x * x + y * y), z) - Math.PI * 0.5);
        theta_x_y_vec[0] = phi;
        theta_x_y_vec[1] = theta;
    }
}
```
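The last step of `angles_and_vec_from_eye` turns a unit gaze vector into two angles, which `DrawOn` then reports as yaw (`theta_y` in degrees) and pitch (`-theta_x` in degrees). The sketch below isolates that conversion so it can be tried without a model or an image. `GazeAngles` and `FromVector` are hypothetical names introduced here, and the sample vectors are made up for illustration.

```csharp
using System;

// Hypothetical standalone version of the "angles_from_vec" step in
// GazeEstimation.cs; it is not part of the original project.
public static class GazeAngles
{
    // v0, v1, v2 are the components of a unit vector in the same order as
    // vec[0], vec[1], vec[2] in angles_and_vec_from_eye.
    // Returns (thetaX, thetaY) in radians.
    public static (double ThetaX, double ThetaY) FromVector(double v0, double v1, double v2)
    {
        double x = -v2;
        double y = v1;
        double z = -v0;
        double thetaY = Math.Atan2(y, x);
        double thetaX = Math.Atan2(Math.Sqrt(x * x + y * y), z) - Math.PI * 0.5;
        return (thetaX, thetaY);
    }

    public static double ToDegrees(double radians)
    {
        return radians * (180.0 / Math.PI);
    }

    public static void Main()
    {
        // Vector with only a negative v2 component: both angles are zero.
        var straight = FromVector(0, 0, -1);
        Console.WriteLine(ToDegrees(straight.ThetaY));  // yaw, about 0
        Console.WriteLine(-ToDegrees(straight.ThetaX)); // pitch, about 0

        // Rotate 30 degrees within the v1/v2 plane: yaw is about 30 degrees.
        double a = 30.0 * Math.PI / 180.0;
        var side = FromVector(0, Math.Sin(a), -Math.Cos(a));
        Console.WriteLine(ToDegrees(side.ThetaY));

        // Rotate 20 degrees within the v0/v2 plane: pitch is about 20 degrees.
        double b = 20.0 * Math.PI / 180.0;
        var up = FromVector(-Math.Sin(b), 0, -Math.Cos(b));
        Console.WriteLine(-ToDegrees(up.ThetaX));
    }
}
```

Keep in mind that in the class above the x and y landmark coordinates have already been swapped by `DrawOn` before the conversion runs, so `vec[0]` corresponds to the image's vertical direction and `vec[1]` to its horizontal direction.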

Download

Source code download
