32.1 Common Space

This section describes the spaces needed to support viewing and interacting with the virtual world.

本节介绍支持查看虚拟世界并与之交互所需的空间。

The spaces required for supporting viewing and interacting with a virtual world can vary depending on the specific use case and requirements of the system being designed. However, there are some general guidelines that can be followed:

支持查看虚拟世界和与虚拟世界交互所需的空间可能因特定用例和所设计系统的要求而异。但是,可以遵循一些一般准则:

Viewing Space: The primary space required is an area where users can comfortably view the virtual world. This typically involves using a computer or other device with a screen that displays the virtual environment. The size of this space will depend on factors such as the resolution of the display and the distance from which the user views the content.

观看空间:所需的主要空间是用户可以舒适地观看虚拟世界的区域。这通常涉及使用具有显示虚拟环境的屏幕的计算机或其他设备。此空间的大小将取决于显示器的分辨率和用户查看内容的距离等因素。

Interaction Space: In addition to a viewing space, it may also be necessary to have an interaction space where users can physically interact with the virtual world. This could involve using specialized hardware such as controllers, gloves, or even body-tracking technology. The size of the interaction space will depend on the type of interactions that need to take place within the virtual world.

交互空间:除了观看空间外,可能还需要一个交互空间,用户可以在其中与虚拟世界进行物理交互。这可能涉及使用专门的硬件,如控制器、手套,甚至是身体跟踪技术。交互空间的大小将取决于虚拟世界中需要发生的交互类型。

Collaboration Space: If multiple people are going to be working together in the virtual world, it may be necessary to designate a collaborative space where they can all work together simultaneously. This could involve creating shared avatars or objects that allow multiple users to interact with the same virtual environment at once.

协作空间:如果多人要在虚拟世界中一起工作,则可能需要指定一个可以同时工作的协作空间。这可能涉及创建共享头像或对象,允许多个用户同时与同一虚拟环境进行交互。

Navigation Space: Finally, it’s important to consider how users will navigate through the virtual world. Depending on the complexity of the environment and the types of interactions that will take place, different navigation methods may be required. For example, if users are navigating through a large open world, they may require a more advanced method of navigation than simply clicking on hotspots on a map.

导航空间:最后,重要的是要考虑用户将如何在虚拟世界中导航。根据环境的复杂性和将要发生的交互类型,可能需要不同的导航方法。例如,如果用户正在大型开放世界中导航,他们可能需要一种更高级的导航方法,而不仅仅是单击地图上的热点。

Overall, the key thing to remember when designing spaces for virtual reality environments is to keep the end user in mind and focus on creating experiences that are intuitive, engaging, and immersive.

总的来说,在为虚拟现实环境设计空间时,要记住的关键是牢记最终用户,并专注于创造直观、引人入胜和身临其境的体验。

32.2 Projection and Viewing Transformations

This section describes projection transformation and the portions of the viewing transformation required to set the viewpoint.

本节介绍投影变换以及设置视点所需的查看变换部分。

Projection transformation is a part of the viewing transformation in virtual reality systems, which is responsible for transforming the 3D objects into a format that can be displayed on a 2D screen or a head-mounted display. The projection transformation process involves several steps that are essential to set the viewpoint for the user, including:

投影变换是虚拟现实系统中观看变换的一部分,它负责将 3D 对象转换为可以在 2D 屏幕或头戴式显示器上显示的格式。投影变换过程涉及为用户设置视点所必需的几个步骤,包括:

Object selection: The first step in projection transformation is to select the objects that need to be transformed. This could involve selecting specific objects or a group of objects that are relevant to the user’s interaction.

对象选择:投影变换的第一步是选择需要变换的对象。这可能涉及选择与用户交互相关的特定对象或一组对象。

Object positioning: Once the objects are selected, the projection transformation process involves positioning them in a way that makes sense for the user’s perspective. This could involve translating, rotating, or scaling the objects to fit the user’s field of view.

对象定位:选择对象后,投影变换过程涉及以对用户视角有意义的方式定位它们。这可能涉及平移、旋转或缩放对象以适应用户的视野。

Object orientation: The next step in projection transformation is to orient the objects in a way that makes sense for the user’s perspective. This could involve rotating the objects to align them with the user’s viewpoint or adjusting their orientation to match the user’s gaze.

面向对象:投影转换的下一步是以对用户视角有意义的方式定向对象。这可能涉及旋转对象以使其与用户的视点对齐,或调整其方向以匹配用户的视线。

Projection onto a plane: Finally, the projection transformation process involves projecting the position and orientation of the objects onto a 2D plane that can be displayed on a screen or head-mounted display. This is typically done by applying a matrix transformation to convert the object’s position and orientation from 3D space to 2D space.

投影到平面上:最后,投影变换过程涉及将对象的位置和方向投影到可显示在屏幕或头戴式显示器上的 2D 平面上。这通常是通过应用矩阵变换将对象的位置和方向从 3D 空间转换为 2D 空间来完成的。

The portions of the viewing transformation required to set the viewpoint are the camera transformation and the projection transformation. The camera transformation sets the camera's position and orientation, which in a VR system follow the tracked pose of the user's head. The projection transformation then maps the 3D scene onto the 2D screen or head-mounted display; when a perspective projection is used, the apparent size of each object depends on its distance from the viewpoint.
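
As a rough illustration of how a camera transformation and a projection transformation combine, the sketch below moves a point into camera space and then applies an OpenGL-style perspective matrix. The function names, the column-vector convention, and the normalized device coordinate range of -1 to 1 are assumptions made for this example; they are not taken from the text or from a specific VR library.

```python
import math

def perspective(fov_y_deg, aspect, near, far):
    """OpenGL-style perspective matrix (row-major storage, column vectors)."""
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2.0)
    return [
        [f / aspect, 0.0, 0.0, 0.0],
        [0.0, f, 0.0, 0.0],
        [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
        [0.0, 0.0, -1.0, 0.0],
    ]

def translation(tx, ty, tz):
    """4x4 translation matrix; used here as a very simple camera transform."""
    return [
        [1.0, 0.0, 0.0, tx],
        [0.0, 1.0, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]

def mat_vec(m, v):
    """Multiply a 4x4 matrix by a 4-component column vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]

def project(point, view, proj):
    """World-space point -> normalized device coordinates (x, y, z)."""
    x, y, z = point
    eye = mat_vec(view, [x, y, z, 1.0])   # camera transformation
    clip = mat_vec(proj, eye)             # projection transformation
    w = clip[3]                           # perspective divide
    return [clip[0] / w, clip[1] / w, clip[2] / w]
```

With a camera placed 5 units along +z and looking down -z (`translation(0, 0, -5)`), a 90 degree field of view, and a square aspect ratio, the point `(1, 0, 4)` lands on the right edge of the view volume. In a VR system the view matrix would come from the tracked head pose, once per eye.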

32.3 Distortion Correction

This section describes how to remove the effects of distortion caused by curved screens and lenses. Although it is possible to construct lens systems that do not introduce distortion, weight and cost constraints on HMDs often lead to the use of lenses that do cause distortion.

介绍如何消除曲面屏幕和镜头引起的失真影响。虽然可以构建不引入畸变的镜头系统,但 HMD 的重量和成本限制通常会导致使用确实会导致畸变的镜头。

To remove the effects of distortion caused by curved screens and lenses, there are several techniques that can be used. One common approach is to use a distortion correction algorithm that can analyze the distortion and apply corrections to the image data. This can involve using a mathematical model of the distortion to predict where the distortion occurs and how it affects the image, and then applying corrections to the image data to restore the original image.

为了消除曲面屏幕和镜头引起的失真影响,可以使用多种技术。一种常见的方法是使用畸变校正算法,该算法可以分析失真并对图像数据应用校正。这可能涉及使用失真的数学模型来预测失真发生的位置以及它如何影响图像,然后对图像数据应用校正以恢复原始图像。

Another approach is to use a lens system that is designed specifically to minimize distortion, for example specialized lenses or lens arrays that are optimized to keep distortion low while maintaining a consistent field of view. Wide-angle optics such as fisheye lenses are not a remedy here, because they introduce strong distortion of their own.

It’s worth noting that while it is possible to construct lens systems that do not introduce distortion, weight and cost constraints on HMDs often limit the use of such systems. As a result, developers must balance the need for distortion correction against the limitations of available hardware.

值得注意的是,虽然可以构建不引入畸变的镜头系统,但 HMD 的重量和成本限制通常会限制此类系统的使用。因此,开发人员必须在失真校正需求与可用硬件的限制之间取得平衡。
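
One way to picture the "mathematical model of the distortion" mentioned above is a radial polynomial model. The sketch below assumes a two-coefficient radial model with hypothetical coefficients `k1` and `k2` and normalized coordinates centered on the lens axis; real HMDs use vendor-supplied calibration data, so this is only an illustration. `predistort` finds the position that the lens will carry to the desired output position, which is the correction a renderer would apply before display.

```python
def distort(x, y, k1, k2):
    """Apply the assumed radial lens model to a normalized point."""
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale

def predistort(x, y, k1, k2, iterations=30):
    """Find (u, v) such that distort(u, v) is approximately (x, y).

    Uses fixed-point iteration, which converges for the mild
    distortion assumed in this sketch.
    """
    u, v = x, y
    for _ in range(iterations):
        r2 = u * u + v * v
        scale = 1.0 + k1 * r2 + k2 * r2 * r2
        u, v = x / scale, y / scale
    return u, v
```

In practice the correction is usually evaluated per pixel or per mesh vertex on the GPU, and separately per color channel when chromatic aberration is also corrected.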

32.4 Handling Latency and Jitter

Motion to Photon (MTP) delay refers to the total time taken for a motion tracking system to translate the motion information captured by its sensors into visual data that can be displayed on a screen. It includes various system latencies that occur across different components of the system, such as tracker sensor delays, tracker finite sampling rates, transmission delays, and synchronization delays on the input side, as well as rendering time, OS and driver buffering delays, reformatting delays, and scan-out delays on the output side.

运动到光子 (MTP) 延迟是指运动跟踪系统将其传感器捕获的运动信息转换为可在屏幕上显示的视觉数据所花费的总时间。它包括跨系统不同组件发生的各种系统延迟,例如跟踪器传感器延迟、跟踪器有限采样率、传输延迟和输入端的同步延迟,以及输出端的渲染时间、操作系统和驱动程序缓冲延迟、重新格式化延迟和扫描输出延迟。

One of the challenges associated with reducing MTP delay is that many of these delays cannot be eliminated entirely due to technical constraints and tradeoffs involved in designing and implementing the system. For instance, reducing the tracker sensor delays would require faster sensors, but faster sensors would also increase the power consumption and heat generation of the system, making it less sustainable over long periods of time. Similarly, reducing the rendering time and reformatting delays would require more powerful processing capabilities, but this would also increase the overall cost and power consumption of the system.

与减少MTP延迟相关的挑战之一是,由于设计和实施系统所涉及的技术限制和权衡,其中许多延迟无法完全消除。例如,减少跟踪器传感器延迟将需要更快的传感器,但更快的传感器也会增加系统的功耗和热量产生,使其在很长一段时间内难以实现。同样,减少渲染时间和重新格式化延迟将需要更强大的处理能力,但这也会增加系统的总体成本和功耗。

As a result, efforts to reduce MTP delay often focus on optimizing individual components of the system to minimize the contribution of each delay to the total. Approaches include using faster sensors and processors and optimizing the communication between the tracker, the renderer, and the display. The delay is also not uniform within a frame: on some displays, such as HMD panels that scan out in portrait mode, the image for one eye is scanned out later than the image for the other, so the delay differs between the two eyes.

VR systems employ several techniques to deal with this latency and jitter, including Frame Sync, Predictive Tracking, Time Warp (synchronous and asynchronous), and Direct Rendering. Each of these is described below. Not all techniques are employed in every system, but they can be combined to provide a superior experience.

VR 系统采用多种技术来处理这种延迟和抖动,包括帧同步、预测跟踪、时间扭曲(同步和异步)和直接渲染。下面将逐一介绍。并非每个系统都采用所有技术,但它们可以组合在一起以提供卓越的体验。

Here is a brief overview of the techniques used to handle latency and jitter in VR systems:

Frame Sync: This technique synchronizes the start of rendering with the fixed refresh cycle of the display, so that each frame is rendered from recent tracking data and completed shortly before it is scanned out. It does not remove the delay between the user's motion and the displayed image, but it reduces the time a finished frame waits before display and avoids showing a stale image repeatedly. Commercial PC-based VR systems, such as the Oculus Rift and HTC Vive, synchronize rendering with the display refresh in this way.

Predictive Tracking: This technique uses the tracker's estimates of velocity and acceleration to predict the user's head pose at the future time when the frame will actually be displayed, and renders the frame for that predicted pose. It is particularly useful in systems with high latency or jitter, because the rendered image then matches the pose expected at display time rather than the pose measured when rendering began. The prediction becomes less reliable as the prediction interval grows.

Time Warp (synchronous and asynchronous): Time Warp reprojects (warps) an already rendered image so that it matches a more recent estimate of the head pose, just before the image is displayed. In synchronous Time Warp, the warp runs in the rendering thread immediately after the frame is rendered, so it only helps when rendering finishes in time for scan-out. In asynchronous Time Warp, the warp runs in a separate thread or process timed to the display refresh and reprojects the most recently completed frame, so a corrected image is presented even when the application misses a frame. Variants of Time Warp are used in PC-based VR systems, such as the HTC Vive and Oculus Rift.

Direct Rendering: This technique lets the application render directly to the HMD's display device, bypassing the operating system's compositing step and limiting the number of frames buffered by the graphics driver. Removing these intermediate buffering stages removes a frame or more of latency. Direct Rendering relies on vendor-specific APIs from graphics-card vendors such as nVidia and AMD.

In summary, Frame Sync, Predictive Tracking, Time Warp, and Direct Rendering are some of the techniques used to deal with latency and jitter in VR systems. Each of these techniques has its own advantages and limitations, and the best approach depends on the specific requirements of the system and the user’s preferences.

总之,帧同步、预测跟踪、时间扭曲和直接渲染是用于处理 VR 系统中延迟和抖动的一些技术。这些技术中的每一种都有自己的优点和局限性,最佳方法取决于系统的具体要求和用户的偏好。

32.5 Frame Sync

The underlying rendering and display scan-out circuitry usually runs at a fixed refresh rate, somewhere between 60 and 90 Hz. The currently available frame is scanned out whether or not there is a new image to be displayed, and independent of the rendering initiation or completion time. Thus, for long renders an old image may be repeatedly displayed. This section describes how to synchronize rendering with scan-out.

底层渲染和显示扫描电路通常以固定的刷新率运行,介于 60 到 90 Hz 之间。无论是否有要显示的新图像,都会扫描出当前可用的帧,并且与渲染启动或完成时间无关。因此,对于长时间渲染,旧图像可能会重复显示。本节介绍如何将渲染与扫描输出同步。

The synchronization of rendering with scan-out is an important aspect of VR systems, as it can significantly affect the user experience and the overall performance of the system. One of the techniques used to synchronize rendering with scan-out is Frame Sync, as mentioned earlier.

渲染与扫描的同步是VR系统的一个重要方面,因为它会显着影响用户体验和系统的整体性能。如前所述,用于将渲染与扫描输出同步的技术之一是帧同步。

Frame Sync involves synchronizing the rendering of frames with the display scan-out rate of the VR headset. In other words, the system starts rendering each frame at a fixed point in the refresh cycle, so that a newly completed frame is ready when the next scan-out begins. This keeps the displayed content as recent as the render time allows and reduces the number of refreshes in which an old image is repeated.

Frame Sync can be implemented using a variety of techniques, including:

可以使用多种技术实现帧同步,包括:

Frame Buffer Synchronization: This technique involves synchronizing the rendering of frames with the display scan-out rate by using a frame buffer that is synchronized with the display. The frame buffer is a memory buffer that stores the rendered frames, and it is synchronized with the display using a technique called “synchronous scan-out”. This technique ensures that the display always displays the latest frame, resulting in a smooth and natural experience.

帧缓冲区同步:此技术涉及使用与显示器同步的帧缓冲区将帧的呈现与显示器扫描出速率同步。帧缓冲区是存储渲染帧的内存缓冲区,它使用一种称为“同步扫描输出”的技术与显示器同步。这种技术可确保显示器始终显示最新的帧,从而带来流畅自然的体验。

Frame Rate Control: This technique involves synchronizing the rendering of frames with the display scan-out rate by controlling the frame rate of the system. The system can be configured to render at a fixed frame rate that matches the display scan-out rate, ensuring that the user’s eyes are always looking at the latest content. This technique can be implemented using a variety of techniques, including software and hardware-based frame rate control.

帧速率控制:此技术涉及通过控制系统的帧速率将帧的渲染与显示扫描速率同步。系统可以配置为以与显示器扫描速率相匹配的固定帧速率进行渲染,确保用户的眼睛始终在看最新内容。该技术可以使用多种技术来实现,包括基于软件和硬件的帧速率控制。

Remote Rendering: This technique involves rendering the VR content remotely on a high-performance server and streaming the rendered frames to the VR headset. It offloads rendering work from the local device, but encoding, network transmission, and decoding add latency of their own. For that reason it is usually combined with Frame Sync and with reprojection on the headset to keep the perceived latency low.

In summary, synchronizing rendering with scan-out is a critical aspect of VR systems, and various techniques, including Frame Sync, Frame Buffer Synchronization, Frame Rate Control, and Remote Rendering, can be used to achieve this goal. The choice of technique will depend on the specific requirements of the system and the user’s preferences.

总之,将渲染与扫描输出同步是 VR 系统的一个关键方面,可以使用各种技术(包括帧同步、帧缓冲区同步、帧速率控制和远程渲染)来实现这一目标。技术的选择将取决于系统的具体要求和用户的偏好。
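
The effect of a fixed refresh rate on long renders can be sketched with a little arithmetic. The helper names below are hypothetical, and the model assumes that rendering starts exactly at a vertical retrace and that a finished frame is only shown at the next retrace.

```python
import math

def refresh_intervals_shown(render_ms, refresh_hz):
    """Refresh intervals that pass before a frame started at a retrace
    can be displayed; the previous image is repeated for that long."""
    period_ms = 1000.0 / refresh_hz
    return max(1, math.ceil(render_ms / period_ms))

def wait_until_next_vsync_ms(now_ms, refresh_hz, first_vsync_ms=0.0):
    """Time to wait from now_ms until the next vertical retrace."""
    period_ms = 1000.0 / refresh_hz
    elapsed = (now_ms - first_vsync_ms) % period_ms
    return (period_ms - elapsed) % period_ms
```

At 90 Hz the refresh period is about 11.1 ms, so a 10 ms render fits in one interval, while a 15 ms render causes the previous image to be shown for two intervals. A render loop that sleeps for `wait_until_next_vsync_ms(...)` before sampling the tracker is one simple way to align rendering with scan-out; real systems obtain retrace timing from the graphics API rather than from a computed schedule.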

32.6 Predictive Tracking

The inertial measurement units included in many VR tracking systems provide direct measurements of the derivatives of position and orientation (acceleration and rate of rotation). The Kalman and other optimal estimation filters used to perform sensor fusion in the tracking systems can also estimate these derivatives along with the location and orientation. The resulting state vector can be used to estimate a pose (location and orientation) at points in time other than the present, such as the expected future time when the next frame to be rendered will be displayed. Ron Azuma showed that such predictions can improve tracking for delays of up to about 80 ms [Azuma 1995]. This section describes how to harness predictive tracking to reduce perceived latency.

Predictive tracking is a technique that involves using the predicted future location and orientation of the user’s head to improve the tracking accuracy of the VR system. This technique can be particularly useful in systems with high latency or jitter, as it allows the system to anticipate the user’s motion and improve the tracking accuracy before the user’s actual motion is captured.

预测跟踪是一种技术,涉及使用预测的用户头部的未来位置和方向来提高 VR 系统的跟踪精度。这种技术在具有高延迟或抖动的系统中特别有用,因为它允许系统在捕获用户的实际运动之前预测用户的运动并提高跟踪精度。

One of the techniques used to implement predictive tracking is the Common Prediction Algorithm (CPA), which is a predictive tracking algorithm that uses motion capture data to predict the user’s future motion and improve the tracking accuracy. In CPA, a motion capture system is used to capture the user’s motion data, which is then used to train a predictive model. The predictive model is used to predict the user’s future motion based on the motion capture data, and the resulting motion data is used to update the tracking data in real-time.

用于实现预测跟踪的技术之一是通用预测算法 (CPA),它是一种预测跟踪算法,它使用运动捕捉数据来预测用户未来的运动并提高跟踪精度。在 CPA 中,动作捕捉系统用于捕获用户的运动数据,然后用于训练预测模型。预测模型用于根据动作捕捉数据预测用户未来的运动,生成的运动数据用于实时更新跟踪数据。

A related technique is PredATW, an ML-based predictor that predicts the latency of asynchronous time warp (ATW) for a VR application and then schedules ATW as late as possible. Rather than predicting the head pose itself, it predicts how long the warp will take, so that the warp can start late and work from a more recent pose. Its authors describe it as the first work to do so.

In summary, predictive tracking extrapolates the tracked state to the expected display time in order to reduce perceived latency. The Common Prediction Algorithm and PredATW are two prediction-based techniques that can be used toward this goal. Because prediction errors grow with the prediction interval, predictive tracking works best when the remaining system latency is already short.
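
Using the state vector to estimate a pose at a future time can be sketched as a simple extrapolation. The code below assumes constant angular velocity and constant linear acceleration over the prediction interval, with quaternions stored as `(w, x, y, z)` and angular velocity expressed in the head's own frame; these conventions and function names are assumptions for the example, and a production tracker would take the state from its estimation filter.

```python
import math

def quat_mul(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )

def predict_orientation(q, omega, dt):
    """Rotate q by the angular velocity omega (rad/s) applied for dt seconds."""
    wx, wy, wz = omega
    speed = math.sqrt(wx * wx + wy * wy + wz * wz)
    angle = speed * dt
    if angle < 1e-12:
        return q  # no measurable rotation over this interval
    s = math.sin(angle / 2.0) / speed
    dq = (math.cos(angle / 2.0), wx * s, wy * s, wz * s)
    return quat_mul(q, dq)

def predict_position(p, v, a, dt):
    """Constant-acceleration extrapolation of position."""
    return tuple(pi + vi * dt + 0.5 * ai * dt * dt
                 for pi, vi, ai in zip(p, v, a))
```

The interval `dt` would be the time from the latest tracker sample to the expected display time of the frame, typically a few tens of milliseconds.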

32.7 Time Warp

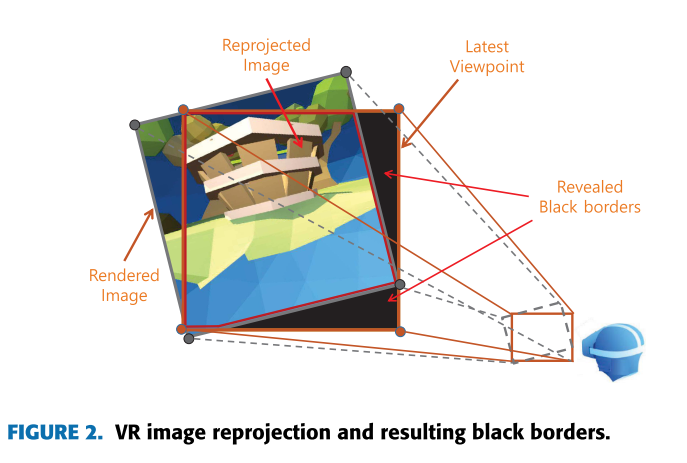

Because rendering a scene takes time, and because there can be a delay between the end of rendering and the start of display scan-out, the image produced using the head pose that was available when rendering began is not perfectly matched to the pose when that image is presented to the viewer. A solution is to reproject—warp—the original image based on the inverse difference between the original pose and the new pose calculated for the newly estimated time of presentation. The rendered image is thus adjusted to more closely match what should have been rendered had the future pose been known a priori.

由于渲染场景需要时间,并且渲染结束和显示扫描开始之间可能存在延迟,因此使用渲染开始时可用的头部姿势生成的图像与将该图像呈现给观看者时的姿势并不完全匹配。一种解决方案是根据原始姿势和新姿势之间的反差(为新估计的呈现时间计算)重新投影(变形)原始图像。因此,渲染的图像被调整为更接近于应该渲染的图像,如果先验地知道未来的姿势。

Reprojection is a technique used in VR systems to address the mismatch between the head pose used during rendering and the pose observed by the user at the time of display. It involves warping or transforming the original image based on the inverse difference between the initial pose and the updated pose calculated for the current time.

重新投影是 VR 系统中使用的一种技术,用于解决渲染期间使用的头部姿势与用户在显示时观察到的姿势之间的不匹配。它涉及根据初始姿势与为当前时间计算的更新姿势之间的反差来扭曲或转换原始图像。

The basic idea behind reprojection is to treat the rendered image as the view from a virtual camera at the head pose used for rendering. When the user's head moves, the system does not render the scene again. Instead, it obtains the updated head pose, computes the difference between the original pose and the updated pose, and applies the inverse of that difference as a transformation matrix to the original image, so that the image appears as it would from the updated pose.

Reprojection can help reduce the perception of latency in VR systems by making the visual feedback more accurate and consistent with the user’s actions. By warping the original image based on the inverse difference between the initial pose and the updated pose, the system can make the image appear to be more accurately aligned with the user’s head movement. This can result in a more immersive and responsive VR experience for users.

重新投影可以使视觉反馈更加准确并与用户的操作保持一致,从而帮助减少 VR 系统中对延迟的感知。通过根据初始姿势和更新姿势之间的反差对原始图像进行扭曲,系统可以使图像看起来更准确地与用户的头部运动对齐。这可以为用户带来更加身临其境和响应迅速的 VR 体验。

Overall, reprojection is an effective technique for reducing the latency in VR systems and improving the user experience. By warping the original image based on the inverse difference between the initial pose and the updated pose, the system can make the image appear to be more accurately aligned with the user’s head movement, resulting in a more immersive and responsive VR experience.

总体而言,重新投影是减少VR系统延迟和改善用户体验的有效技术。通过根据初始姿势和更新姿势之间的反向差异对原始图像进行扭曲,系统可以使图像看起来更准确地与用户的头部运动对齐,从而产生更加身临其境和响应迅速的VR体验。
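
For a pure rotation about the vertical axis, the warp can be worked out per image column. The sketch below assumes a pinhole camera with a symmetric horizontal field of view and a sign convention in which a positive yaw change means the head turned to the right; the function name and parameters are hypothetical. Full implementations apply a 3D rotation per pixel or per mesh vertex and combine it with distortion correction.

```python
import math

def warp_column(x_px, width_px, hfov_deg, yaw_delta_deg):
    """Column where a pixel rendered at x_px should appear after the
    head has turned by yaw_delta_deg since the image was rendered."""
    cx = width_px / 2.0
    # Focal length in pixels for a symmetric pinhole projection.
    f = cx / math.tan(math.radians(hfov_deg) / 2.0)
    # Viewing angle of this column at render time.
    angle = math.atan((x_px - cx) / f)
    # Apply the inverse of the head rotation: content moves the opposite way.
    return cx + f * math.tan(angle - math.radians(yaw_delta_deg))
```

With a 1000 pixel wide image and a 90 degree field of view, a head turn of about 5.7 degrees to the right moves the content at the image center roughly 50 pixels to the left.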

32.8 Direct Rendering

(aka Direct Mode, Direct-to-Display)

To support transparent borders and other user-interface effects, some operating systems store each rendered frame before compositing it onto the display, which adds a frame of latency. To improve throughput, some graphics-card drivers keep two or more frames in the pipeline, with CPU rendering completing more than a frame sooner than the image will be presented to the display. Both approaches add to the end-to-end latency for VR systems. This section describes how to avoid these delays using direct rendering. Vendor-specific APIs have been provided by nVidia and AMD to bypass the operating system and render directly to the display device. (A vendor-independent approach is being implemented within a new Microsoft API as well.) Each of these approaches also offers control over the number of buffers and their presentation to the display surface, enabling either frame asynchronous or frame synchronous swapping of buffers and determination of the time at which vertical retrace happens. This enables front-buffer rendering, but also double-buffer rendering where the buffers are swapped just before vertical retrace, thus providing the combined benefit of extended render times together with low-latency presentation.

为了支持透明边框和其他用户界面效果,某些操作系统会先存储每个渲染的帧,然后再将其合成到显示器上,这会增加帧的延迟。为了提高吞吐量,某些显卡驱动程序在管道中保留两个或更多帧,CPU 渲染完成的时间比图像呈现到显示器的时间快一帧以上。这两种方法都增加了 VR 系统的端到端延迟。本节介绍如何使用直接渲染来避免这些延迟。nVidia 和 AMD 提供了特定于供应商的 API,以绕过操作系统并直接渲染到显示设备。(在新的 Microsoft API 中也实现了独立于供应商的方法。这些方法中的每一种还提供了对缓冲区数量及其在显示表面上的呈现的控制,从而实现了缓冲区的帧异步或帧同步交换,并确定了垂直回溯发生的时间。这支持前缓冲区渲染,但也支持双缓冲区渲染,其中缓冲区在垂直回溯之前交换,从而提供了延长渲染时间和低延迟演示的综合优势。

Direct Rendering is a technique that allows graphics hardware to render images directly to the display without going through the operating system. This approach eliminates the need to store each rendered frame before compositing it onto the display, which reduces the latency and improves the performance of VR systems.

直接渲染是一种技术,它允许图形硬件将图像直接渲染到显示器上,而无需通过操作系统。这种方法消除了在将每个渲染帧合成到显示器上之前存储每个渲染帧的需要,从而减少了延迟并提高了 VR 系统的性能。

Vendors such as nVidia and AMD provide APIs that bypass the operating system and render directly to the display device. This approach allows for control over the number of buffers and their presentation to the display surface, enabling either frame asynchronous or frame synchronous swapping of buffers and determining the time at which vertical retrace occurs. This enables front-buffer rendering, and also double-buffer rendering in which the buffers are swapped just before vertical retrace, which provides extended render times together with low-latency presentation.

In addition, a new Microsoft API is being implemented to offer a vendor-independent approach for direct rendering. This API allows for control over the number of buffers and their presentation to the display surface, enabling either frame asynchronous or frame synchronous swapping of buffers and determining the time at which vertical retrace happens. This provides a more flexible and standardized approach for direct rendering, which can further improve the performance and latency of VR systems.

此外,正在实施新的 Microsoft API,以提供独立于供应商的直接渲染方法。此 API 允许控制缓冲区的数量及其在显示图面上的呈现,从而实现缓冲区的帧异步或帧同步交换,并确定垂直回溯发生的时间。这为直接渲染提供了一种更灵活和标准化的方法,可以进一步提高 VR 系统的性能和延迟。

Overall, direct rendering is an effective technique for reducing latency in VR systems. By rendering directly to the display device, the application avoids the latency associated with the operating system storing and compositing rendered frames and with the driver queuing frames. Control over buffer swapping and knowledge of the vertical retrace time enable both front-buffer rendering and double-buffer rendering with a swap just before vertical retrace; the latter combines extended render times with low-latency presentation.
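
The latency cost of the buffering described in this section can be estimated with simple arithmetic. The sketch below assumes, as a simplification, that each frame held by the operating system compositor or queued by the driver delays presentation by one full refresh period; the function name and parameters are hypothetical.

```python
def added_latency_ms(refresh_hz, compositor_frames, queued_frames):
    """Extra end-to-end latency from frames buffered before scan-out."""
    period_ms = 1000.0 / refresh_hz
    return (compositor_frames + queued_frames) * period_ms
```

Under this model, one compositor frame plus two driver-queued frames on a 90 Hz display add about 33 ms, which direct rendering aims to remove.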

32.9 Overfill and Oversampling

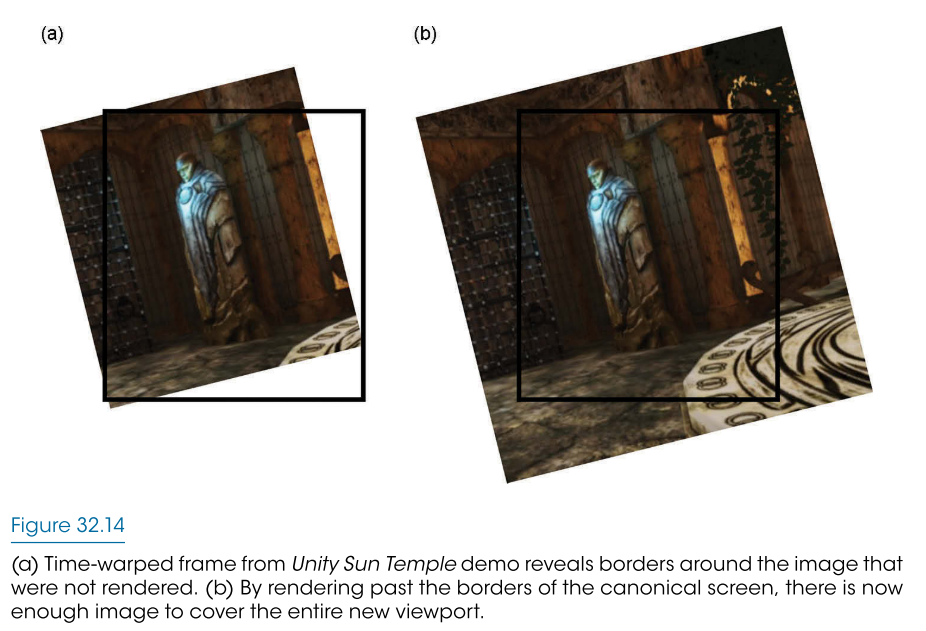

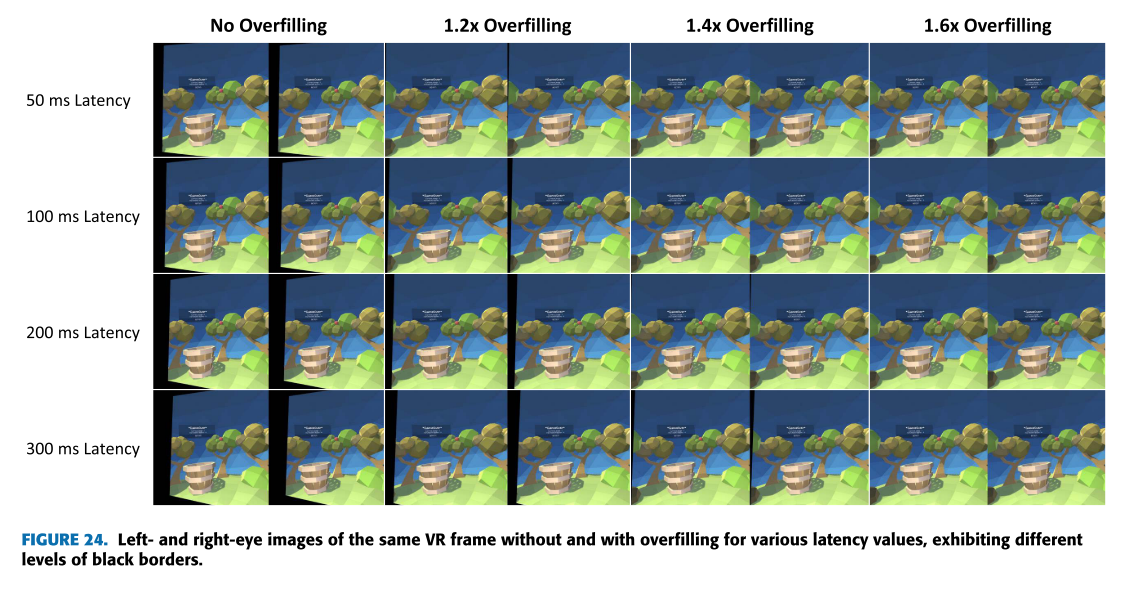

Time warp reprojects an image from a different viewpoint. Normally, the original image could be rendered to exactly cover the canonical screen; however, reprojection causes the new viewpoint to see past those original borders. This produces black borders creeping in from the edges. Distortion correction can produce a similar effect when the canonical screen does not completely fill the display, resulting in similar borders. Both issues can be addressed using Overfill—i.e. rendering an image that goes beyond the edges of the canonical screen. This section describes how to use these approaches to remove rendering artifacts (Figure 32.14). Overfill requires adjustment of both the projection transformation (making the projection region wider) and the graphics viewport size in pixels (providing a place to store the extra pixels); the viewing transformation remains the same. The size of overfill needed to hide the borders depends on the distortion correction being done, on the length of time between rendering and warping, and on the speed of rotation of the viewer’s head: faster rotation reveals more border per unit time.

时间扭曲从不同的视角重新投影图像。通常,原始图像可以渲染以完全覆盖规范屏幕;但是,重新投影会导致新视点超越这些原始边界。这会产生从边缘爬进来的黑色边框。当规范屏幕未完全填满显示器时,失真校正会产生类似的效果,从而导致相似的边框。这两个问题都可以使用过度填充来解决,即渲染超出规范屏幕边缘的图像。本节介绍如何使用这些方法来删除渲染伪影(图 32.14)。过度填充需要调整投影变换(使投影区域更宽)和图形视口大小(以像素为单位)(提供存储额外像素的位置);观看转换保持不变。隐藏边框所需的过度填充大小取决于正在进行的失真校正、渲染和变形之间的时间长度以及观看者头部的旋转速度:旋转速度越快,单位时间内的边框就越多。

Overfill is a technique for hiding the black borders that appear when time warp or distortion correction needs image content from beyond the edges of the rendered image. With Overfill, the image is rendered to a region larger than the canonical screen: the projection transformation is widened, and the graphics viewport is enlarged to provide a place to store the extra pixels. The viewing transformation remains the same.

When using Overfill, the amount of extra space required to hide the borders depends on the distortion correction being done, the length of time between rendering and warping, and the speed of rotation of the viewer’s head. Faster rotation reveals more border per unit time.

使用过度填充时,隐藏边框所需的额外空间量取决于正在进行的失真校正、渲染和变形之间的时间长度以及观看者头部的旋转速度。旋转速度越快,单位时间内的边框就越多。

Overfill is typically used in combination with Time Warp. Time Warp reprojects an image from a different viewpoint, which can produce black borders creeping in from the edges. Overfill supplies image content beyond the original borders so that these regions are filled, at the cost of rendering extra pixels.

Overall, Overfill is an effective technique for removing the border artifacts that appear when reprojection or distortion correction looks past the edges of the rendered image. By rendering to a larger region and adjusting the projection transformation and graphics viewport size, the extra pixels are available when they are needed and the borders stay hidden. Overfill does not reduce latency itself; it makes the image corrections applied by Time Warp and distortion correction free of visible borders.
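
The dependence of overfill size on head rotation speed and on the time between rendering and warping can be sketched as follows. The model is a simplification that considers rotation only and ignores the extra margin needed for distortion correction; the function name, the symmetric field of view, and the choice of a worst-case head speed are assumptions for the example.

```python
import math

def overfill_parameters(half_fov_deg, viewport_px,
                        max_head_speed_deg_s, render_to_warp_ms):
    """Return the widened half field of view (degrees) and viewport size
    (pixels) needed to cover the worst-case rotation before warping."""
    extra_deg = max_head_speed_deg_s * render_to_warp_ms / 1000.0
    new_half_fov = half_fov_deg + extra_deg
    if new_half_fov >= 90.0:
        raise ValueError("widened field of view must stay below 180 degrees")
    # Keep the same pixel density at the image center: the viewport grows
    # with the tangent of the half field of view.
    scale = (math.tan(math.radians(new_half_fov))
             / math.tan(math.radians(half_fov_deg)))
    return new_half_fov, math.ceil(viewport_px * scale)
```

For a 45 degree half field of view, a head speed of 200 degrees per second, and 20 ms between rendering and warping, the projection must widen by 4 degrees per side and the viewport grows by about 15 percent along that axis.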

32.10 Rendering State

VR systems take time-varying, linear, and nonlinear geometric descriptions of the relative locations and orientations of objects in space and produce descriptions suitable for implementation in the linear geometric operations available in graphics libraries. This section describes how to manage this state across graphics libraries. The resulting linear operations can be implemented in various rendering systems, including basic graphics libraries (OpenGL, Direct3D, GLES, Vulkan, etc.), game engines (Unreal, Unity, Blender, etc.), and others (VTK, OpenCV, etc.). These systems have a variety of distance units (meters, mm, pixels, etc.) and coordinate systems (right-handed vs. left-handed, screen lower-left vs. upper-left, etc.). This means that no single internal representation can be used within a VR system that is to be implemented across multiple rendering systems. It also means that all aspects of the coordinate system must be carefully described because they will be unfamiliar to users of some of the rendering systems.

VR 系统对空间中物体的相对位置和方向进行随时间变化的线性和非线性几何描述,并生成适合在图形库中可用的线性几何操作中实现的描述。本节介绍如何跨图形库管理此状态。由此产生的线性操作可以在各种渲染系统中实现,包括基本图形库(OpenGL、Direct3D、GLES、Vulkan 等)、游戏引擎(Unreal、Unity、Blender 等)和其他(VTK、OpenCV 等)。这些系统具有多种距离单位(米、毫米、像素等)和坐标系(右撇子与左撇子、屏幕左下角与左上角等)。这意味着在要跨多个渲染系统实现的 VR 系统中,不能使用单一的内部表示。这也意味着必须仔细描述坐标系的所有方面,因为某些渲染系统的用户将不熟悉这些方面。

To manage the state across graphics libraries, VR systems require a way to convert between different coordinate systems and distance units. One solution is to adopt a single, well-documented canonical coordinate system inside the VR system, such as a right-handed Cartesian coordinate system with the y-axis pointing up and the positive x-axis pointing to the right, and to convert at the boundary to each rendering system. Another solution is to use a mapping function that converts between the coordinate systems of the VR system and the rendering system.

To ensure consistency across different rendering systems, it is important to describe the coordinate system in detail. This includes specifying the distance units used, whether the coordinate system is left-handed or right-handed, and any additional information about the orientation of the coordinate axes. Additionally, it may be necessary to include information about any transformations that have been applied to the coordinate system, such as scaling or rotation.

为了确保不同渲染系统之间的一致性,必须详细描述坐标系。这包括指定使用的距离单位、坐标系是左手还是右手,以及有关坐标轴方向的任何其他信息。此外,可能需要包含有关已应用于坐标系的任何变换的信息,例如缩放或旋转。

Overall, managing the state across different rendering systems requires careful consideration of coordinate systems, distance units, and any transformations that have been applied. By providing clear descriptions of the coordinate system and carefully implementing mapping functions, VR systems can ensure consistency across different rendering systems and provide a seamless user experience.

总体而言,跨不同渲染系统管理状态需要仔细考虑坐标系、距离单位以及已应用的任何变换。通过提供清晰的坐标系描述和精心实现的映射功能,VR系统可以确保不同渲染系统之间的一致性,并提供无缝的用户体验。
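
A mapping function of the kind described above can be sketched for points. The example assumes that the VR system stores positions in meters in a right-handed coordinate system and that a left-handed target differs only by the direction of the z-axis; the names `UNIT_SCALE` and `to_renderer` are hypothetical.

```python
# Multipliers from meters (assumed internal unit) to the target unit.
UNIT_SCALE = {"m": 1.0, "cm": 100.0, "mm": 1000.0}

def to_renderer(point_m, units="m", left_handed=False):
    """Convert a point from the assumed internal convention
    (right-handed, meters) to a rendering system's convention."""
    scale = UNIT_SCALE[units]
    x, y, z = point_m
    if left_handed:
        z = -z  # mirror one axis to change handedness
    return (x * scale, y * scale, z * scale)
```

For example, `to_renderer((1.0, 2.0, -3.0), "mm", True)` gives `(1000.0, 2000.0, 3000.0)`. Points are the easy case: rotations, triangle winding order, and screen-space origins also change with handedness and need their own conversions.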