Updates on Function Calling were a major highlight at OpenAI DevDay.

In other words, existing function-calling code has to be rewritten: the old `functions` and `function_call` parameters are deprecated.

`functions` and `function_call` are replaced wholesale by `tools` and `tool_choice`. Any function-calling code written before November 2023 is living on borrowed time.

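Why introduce tools to replace functions at all? There are many reasons, which I won't go into here. As programmers, what we really care about is: how do we change our code?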
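For code that still passes the legacy parameters, the mechanical part of the migration can be sketched as below. This is a minimal sketch, assuming your old requests used `functions=[...]` and `function_call=...`. The helper names `functions_to_tools` and `function_call_to_tool_choice` are hypothetical and not part of the `openai` library. Each old function schema is wrapped in a `{"type": "function", "function": ...}` envelope, and forcing a specific function changes from `{"name": ...}` to a `tool_choice` object that also carries a `type`.

```python
from typing import Any


def functions_to_tools(functions: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Wrap each legacy function schema in the new tool envelope (hypothetical helper)."""
    return [{"type": "function", "function": f} for f in functions]


def function_call_to_tool_choice(function_call: Any) -> Any:
    """Map a legacy function_call value onto the equivalent tool_choice value (hypothetical helper)."""
    if function_call in ("auto", "none"):
        return function_call  # the string modes carry over unchanged
    if isinstance(function_call, dict) and "name" in function_call:
        # Forcing one function now needs an explicit "type" field
        return {"type": "function", "function": {"name": function_call["name"]}}
    raise ValueError(f"unsupported function_call value: {function_call!r}")


# A legacy schema in the pre-November-2023 style
legacy_functions = [
    {
        "name": "get_weather",
        "parameters": {
            "type": "object",
            "properties": {"location": {"type": "string"}},
            "required": ["location"],
        },
    }
]

tools = functions_to_tools(legacy_functions)
tool_choice = function_call_to_tool_choice({"name": "get_weather"})
print(tools[0]["type"], tools[0]["function"]["name"])  # function get_weather
print(tool_choice)
```

The schema itself (`name`, `parameters`) is reused as-is; only the wrapping changes, which is why the conversion is a one-liner.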

In short, tools are how you combine ChatGPT's capabilities with your own code. How does it work?

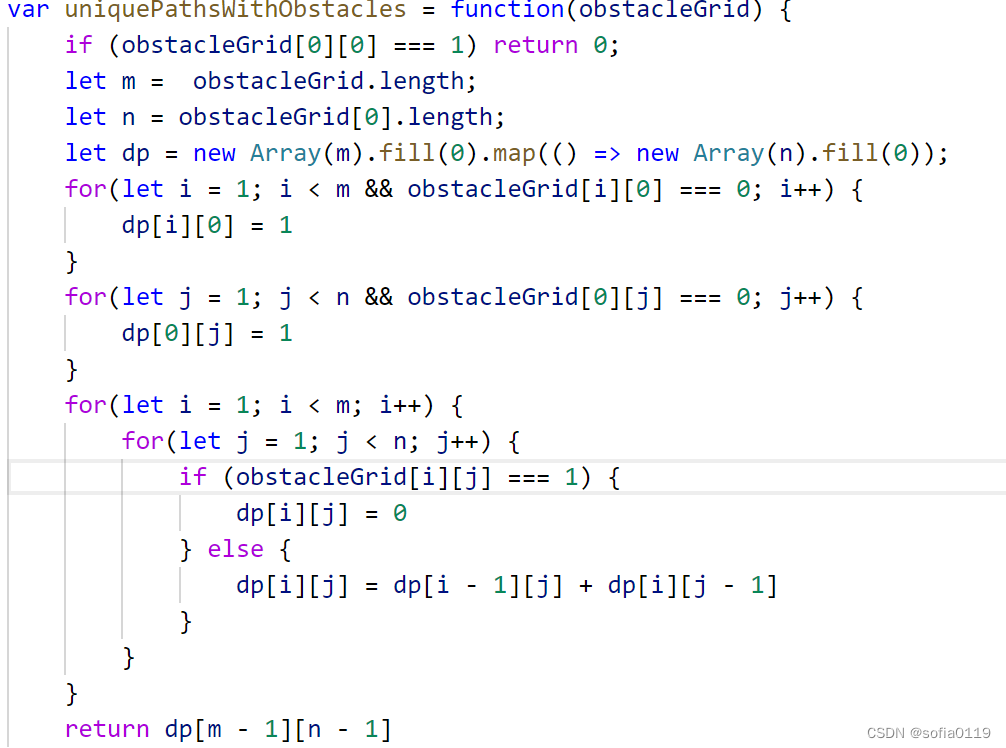

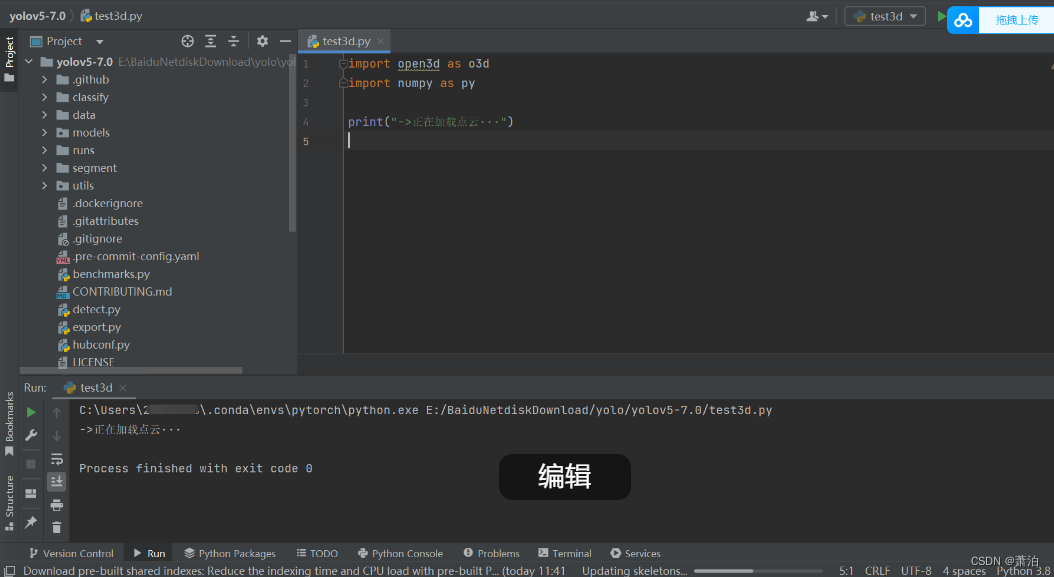

Step 1: define the functions and their parameters

```python
import json

import openai


# Step 1: define two functions: a self-defined (mock) function and a chat-completions wrapper
def get_weather(location, unit="fahrenheit"):
    """Mock weather lookup: returns hard-coded data as a JSON string (unit is ignored)."""
    if "beijing" in location.lower():
        return json.dumps({"location": location, "temperature": "11", "unit": "celsius"})
    elif "tokyo" in location.lower():
        return json.dumps({"location": location, "temperature": "33", "unit": "celsius"})
    else:
        return json.dumps({"location": location, "temperature": "22", "unit": "celsius"})


def chat_completions(parameter_message):
    # ai_function is defined below; it is looked up when this function is called
    response = openai.chat.completions.create(
        model="gpt-3.5-turbo-1106",
        messages=parameter_message,
        tools=ai_function,
        tool_choice="auto",  # auto is default, but we'll be explicit
    )
    return response.choices[0].message


# Step 2: define the messages for the chat completion, and the function schema passed via tools
ai_prompt = [{"role": "user", "content": "What's the weather in tokyo?"}]
ai_function = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "parameters": {
                "type": "object",
                "properties": {
                    "location": {
                        "type": "string",
                        "description": "The city and state, e.g. San Francisco, CA",
                    },
                    "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
                },
                "required": ["location"],
            },
        },
    }
]
```

Step 2: the first round of chat completions

First make a chat completions call; the model then decides whether a function needs to be called.

```python
# Step 3: first round of chat completions, to get the response message and its tool_calls
first_response = chat_completions(ai_prompt)
tool_calls = first_response.tool_calls
```

With `tool_choice="auto"`, the model decides on its own whether a function call is needed.
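Because the model may either answer directly or ask for a tool, the caller has to branch on `tool_calls`. The sketch below shows that branch offline. It assumes, as in the final output shown later in this post, that `tool_calls` is `None` when the model answers directly. The `route` helper is hypothetical, and the `SimpleNamespace` objects (including the id `call_1`) are made-up stand-ins for real `ChatCompletionMessage` objects, so no API key is needed.

```python
from types import SimpleNamespace


def route(message):
    """Return ("tools", calls) if the model requested tool calls, else ("answer", text)."""
    # With tool_choice="auto", tool_calls is None when the model answers directly
    if message.tool_calls:
        return "tools", message.tool_calls
    return "answer", message.content


# Stand-ins for two possible first-round replies (not real API objects)
direct = SimpleNamespace(content="Hello! How can I help?", tool_calls=None)
wants_tool = SimpleNamespace(
    content=None,
    tool_calls=[
        SimpleNamespace(
            id="call_1",  # made-up id for illustration
            type="function",
            function=SimpleNamespace(name="get_weather", arguments='{"location": "Tokyo"}'),
        )
    ],
)

print(route(direct))  # ('answer', 'Hello! How can I help?')
kind, calls = route(wants_tool)
print(kind, calls[0].function.name)  # tools get_weather
```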

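`response` is the returned object; its `message` (what `chat_completions` returns as `first_response`) contains a `tool_calls` array, documented in the API reference as follows: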

- `tool_calls` (array): The tool calls generated by the model, such as function calls.
  - `id` (string): The ID of the tool call.
  - `type` (string): The type of the tool. Currently, only `function` is supported.
  - `function` (object): The function that the model called.
    - `name` (string): The name of the function to call.
    - `arguments` (string): The arguments to call the function with, as generated by the model in JSON format. Note that the model does not always generate valid JSON, and may hallucinate parameters not defined by your function schema. Validate the arguments in your code before calling your function.
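The advice to validate `arguments` can be made concrete with a small checker. This is a minimal sketch, not a full JSON Schema validator: `validate_arguments` and `WEATHER_PARAMS` are hypothetical names, the schema is a trimmed copy of `get_weather`'s parameters from Step 1, and only string types, required keys and `enum` values are checked. Unknown keys are silently dropped here; rejecting them outright would be an equally valid design choice.

```python
import json

# Trimmed copy of the get_weather parameter schema from Step 1
WEATHER_PARAMS = {
    "type": "object",
    "properties": {
        "location": {"type": "string"},
        "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
    },
    "required": ["location"],
}


def validate_arguments(raw: str, schema: dict) -> dict:
    """Parse model-generated arguments and check them against a flat schema."""
    try:
        args = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"arguments are not valid JSON: {exc}") from exc
    if not isinstance(args, dict):
        raise ValueError("arguments must be a JSON object")

    props = schema["properties"]
    # Drop parameters the model hallucinated that the schema does not define
    args = {k: v for k, v in args.items() if k in props}

    for key in schema.get("required", []):
        if key not in args:
            raise ValueError(f"missing required argument: {key}")
    for key, value in args.items():
        spec = props[key]
        if spec.get("type") == "string" and not isinstance(value, str):
            raise ValueError(f"{key} must be a string")
        if "enum" in spec and value not in spec["enum"]:
            raise ValueError(f"{key} must be one of {spec['enum']}")
    return args


print(validate_arguments('{"location": "Tokyo", "unit": "celsius", "mood": "sunny"}', WEATHER_PARAMS))
# {'location': 'Tokyo', 'unit': 'celsius'}
```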

Step 3: append the first-round reply to the prompt, then append each function's result, and send the whole conversation back to ChatGPT

```python
if tool_calls:
    available_functions = {
        "get_weather": get_weather,
    }  # only one function in this example, but you can have multiple
    ai_prompt.append(first_response)  # step 4: extend conversation with assistant's reply
    for tool_call in tool_calls:
        function_name = tool_call.function.name
        function_to_call = available_functions[function_name]
        function_args = json.loads(tool_call.function.arguments)
        function_response = function_to_call(
            location=function_args.get("location"),
            # fall back to the function's own default if the model omitted unit
            unit=function_args.get("unit", "fahrenheit"),
        )
        ai_prompt.append(
            {
                "tool_call_id": tool_call.id,
                "role": "tool",
                "name": function_name,
                "content": function_response,
            }
        )  # step 5: extend conversation with function response
    print("\nfinal msg1 --> ", ai_prompt)
    # get a new response from the model where it can see the function response
    second_response = chat_completions(ai_prompt)
    print("\n second_response --> ", second_response)
```

Result:

```text
ChatCompletionMessage(content='The weather in Tokyo is 33°C. Enjoy the sunny day!', role='assistant', function_call=None, tool_calls=None)
```
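The loop in Step 3 iterates over `tool_calls` for a reason: with the 1106 models the reply may contain several tool calls at once (parallel function calling), for example when a user asks about two cities. Each call needs its own `"role": "tool"` message carrying the matching `tool_call_id`. The sketch below exercises that loop offline. `build_tool_messages` and `fake_call` are hypothetical helpers, the ids `call_a`/`call_b` are invented, and `get_weather` mirrors the Step 1 mock. It passes the model's arguments straight through with `**`, so in real code validate them first, as the API reference above advises.

```python
import json
from types import SimpleNamespace


def get_weather(location, unit="fahrenheit"):
    # Mirrors the Step 1 mock: hard-coded temperatures, unit is ignored
    lowered = location.lower()
    if "beijing" in lowered:
        temperature = "11"
    elif "tokyo" in lowered:
        temperature = "33"
    else:
        temperature = "22"
    return json.dumps({"location": location, "temperature": temperature, "unit": "celsius"})


def build_tool_messages(tool_calls, available_functions):
    """Run every requested tool call and return one "tool" message per call."""
    messages = []
    for tool_call in tool_calls:
        function_to_call = available_functions[tool_call.function.name]
        function_args = json.loads(tool_call.function.arguments)
        messages.append(
            {
                "tool_call_id": tool_call.id,  # ties this result to its request
                "role": "tool",
                "name": tool_call.function.name,
                "content": function_to_call(**function_args),
            }
        )
    return messages


def fake_call(call_id, city):
    # Hypothetical stand-in for one entry of message.tool_calls
    return SimpleNamespace(
        id=call_id,
        type="function",
        function=SimpleNamespace(name="get_weather", arguments=json.dumps({"location": city})),
    )


# e.g. "What's the weather in Beijing and Tokyo?" may yield two calls in one reply
calls = [fake_call("call_a", "Beijing"), fake_call("call_b", "Tokyo")]
tool_messages = build_tool_messages(calls, {"get_weather": get_weather})
for msg in tool_messages:
    print(msg["tool_call_id"], msg["content"])
```

All tool messages go into the conversation after the assistant reply and before the second `chat_completions` call, exactly as steps 4 and 5 do above.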

`tools` and `tool_choice` replace the old `functions` and `function_call`.