Problem

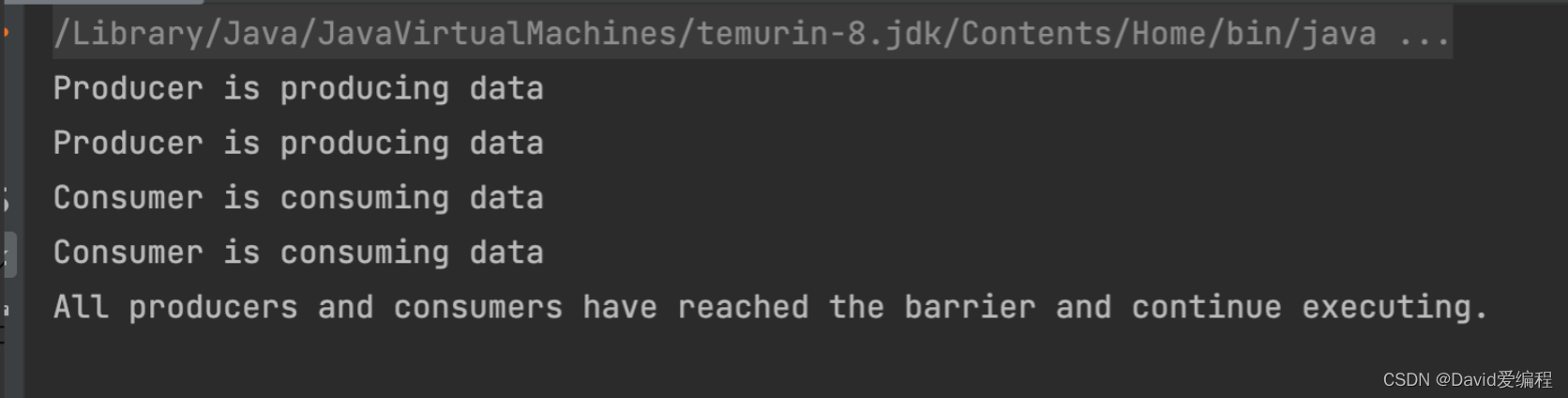

Caused by: org.apache.flink.core.fs.UnsupportedFileSystemSchemeException: Could not find a file system implementation for scheme 'hdfs'. The scheme is not directly supported by Flink and no Hadoop file system to support this scheme could be loaded. For a full list of supported file systems, please see https://nightlies.apache.org/flink/flink-docs-stable/ops/filesystems/.
    at org.apache.flink.core.fs.FileSystem.getUnguardedFileSystem(FileSystem.java:547)
    at org.apache.flink.core.fs.FileSystem.get(FileSystem.java:413)
    at org.apache.flink.core.fs.Path.getFileSystem(Path.java:274)
    at org.apache.flink.runtime.state.filesystem.FsCheckpointStorageAccess.<init>(FsCheckpointStorageAccess.java:67)
    at org.apache.flink.runtime.state.storage.FileSystemCheckpointStorage.createCheckpointStorage(FileSystemCheckpointStorage.java:323)
    at org.apache.flink.streaming.runtime.tasks.StreamTask.<init>(StreamTask.java:444)
    at org.apache.flink.streaming.runtime.tasks.StreamTask.<init>(StreamTask.java:365)
    at org.apache.flink.streaming.runtime.tasks.StreamTask.<init>(StreamTask.java:338)
    at org.apache.flink.streaming.runtime.tasks.StreamTask.<init>(StreamTask.java:330)
    at org.apache.flink.streaming.runtime.tasks.StreamTask.<init>(StreamTask.java:320)
    at org.apache.flink.streaming.runtime.tasks.OneInputStreamTask.<init>(OneInputStreamTask.java:75)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
    at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
    at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
    at org.apache.flink.runtime.taskmanager.Task.loadAndInstantiateInvokable(Task.java:1601)
    at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:725)
    at org.apache.flink.runtime.taskmanager.Task.run(Task.java:552)
    at java.lang.Thread.run(Thread.java:748)

Caused by: org.apache.flink.core.fs.UnsupportedFileSystemSchemeException: Cannot support file system for 'hdfs' via Hadoop, because Hadoop is not in the classpath, or some classes are missing from the classpath.
    at org.apache.flink.runtime.fs.hdfs.HadoopFsFactory.create(HadoopFsFactory.java:199)
    at org.apache.flink.core.fs.FileSystem.getUnguardedFileSystem(FileSystem.java:530)
    ... 18 more

Caused by: java.lang.IllegalAccessError: class org.apache.hadoop.hdfs.web.HftpFileSystem cannot access its superinterface org.apache.hadoop.hdfs.web.TokenAspect$TokenManagementDelegator
    at java.lang.ClassLoader.defineClass1(Native Method)
    at java.lang.ClassLoader.defineClass(ClassLoader.java:763)
    at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)
    at java.net.URLClassLoader.defineClass(URLClassLoader.java:468)
    at java.net.URLClassLoader.access$100(URLClassLoader.java:74)
    at java.net.URLClassLoader$1.run(URLClassLoader.java:369)
    at java.net.URLClassLoader$1.run(URLClassLoader.java:363)
    at java.security.AccessController.doPrivileged(Native Method)
    at java.net.URLClassLoader.findClass(URLClassLoader.java:362)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
    at org.apache.flink.util.FlinkUserCodeClassLoader.loadClassWithoutExceptionHandling(FlinkUserCodeClassLoader.java:67)
    at org.apache.flink.util.ChildFirstClassLoader.loadClassWithoutExceptionHandling(ChildFirstClassLoader.java:84)
    at org.apache.flink.util.FlinkUserCodeClassLoader.loadClass(FlinkUserCodeClassLoader.java:51)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
    at org.apache.flink.util.FlinkUserCodeClassLoaders$SafetyNetWrapperClassLoader.loadClass(FlinkUserCodeClassLoaders.java:260)
    at java.lang.Class.forName0(Native Method)
    at java.lang.Class.forName(Class.java:348)
    at java.util.ServiceLoader$LazyIterator.nextService(ServiceLoader.java:370)
    at java.util.ServiceLoader$LazyIterator.next(ServiceLoader.java:404)
    at java.util.ServiceLoader$1.next(ServiceLoader.java:480)
    at org.apache.hadoop.fs.FileSystem.loadFileSystems(FileSystem.java:3289)
    at org.apache.hadoop.fs.FileSystem.getFileSystemClass(FileSystem.java:3334)
    at org.apache.flink.runtime.fs.hdfs.HadoopFsFactory.create(HadoopFsFactory.java:108)
    ... 19 more

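Cause

Hadoop 2.x contains the class org.apache.hadoop.hdfs.web.HftpFileSystem.

Hadoop 3.x does not contain org.apache.hadoop.hdfs.web.HftpFileSystem.

As a result, when Hadoop's FileSystem.loadFileSystems() discovers file system implementations through SPI (java.util.ServiceLoader), loading this implementation class fails. The stack trace shows the class being loaded through Flink's ChildFirstClassLoader, which suggests that Hadoop 2.x and Hadoop 3.x jars are mixed on the job's classpath.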
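To narrow down which jar registers HftpFileSystem with SPI, it can help to list every service file for org.apache.hadoop.fs.FileSystem that is visible on the classpath. The following is a minimal sketch using only the JDK. The class name HftpProviderCheck and its helper methods are hypothetical names chosen for illustration, and it assumes the offending jar declares HftpFileSystem in META-INF/services/org.apache.hadoop.fs.FileSystem, which is the file ServiceLoader reads for that interface.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.Reader;
import java.net.URL;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Enumeration;
import java.util.List;

// Hypothetical diagnostic helper, not part of Flink or Hadoop.
public class HftpProviderCheck {

    // Service file that ServiceLoader reads for org.apache.hadoop.fs.FileSystem.
    static final String SERVICE_FILE = "META-INF/services/org.apache.hadoop.fs.FileSystem";
    static final String HFTP_CLASS = "org.apache.hadoop.hdfs.web.HftpFileSystem";

    // Parses a service file: one provider class name per line, '#' starts a comment.
    static List<String> parseProviders(Reader reader) throws IOException {
        List<String> providers = new ArrayList<>();
        BufferedReader in = new BufferedReader(reader);
        String line;
        while ((line = in.readLine()) != null) {
            int hash = line.indexOf('#');
            if (hash >= 0) {
                line = line.substring(0, hash);
            }
            line = line.trim();
            if (!line.isEmpty()) {
                providers.add(line);
            }
        }
        return providers;
    }

    // Returns the URLs of all service files visible to the loader that declare className.
    static List<URL> findDeclaringResources(ClassLoader loader, String className) throws IOException {
        List<URL> hits = new ArrayList<>();
        Enumeration<URL> urls = loader.getResources(SERVICE_FILE);
        while (urls.hasMoreElements()) {
            URL url = urls.nextElement();
            try (Reader r = new InputStreamReader(url.openStream(), StandardCharsets.UTF_8)) {
                if (parseProviders(r).contains(className)) {
                    hits.add(url);
                }
            }
        }
        return hits;
    }

    public static void main(String[] args) throws IOException {
        ClassLoader loader = HftpProviderCheck.class.getClassLoader();
        for (URL url : findDeclaringResources(loader, HFTP_CLASS)) {
            // The URL includes the jar path, which identifies the Hadoop 2.x artifact.
            System.out.println("HftpFileSystem is registered by: " + url);
        }
    }
}
```

Run it with the same classpath as the job (the job jar plus the Hadoop jars available to Flink). Any jar it prints is a candidate for exclusion or for alignment with the cluster's Hadoop version.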