前言

大家好,我是Snu77,这里是RT-DETR有效涨点专栏。

本专栏的内容为根据ultralytics版本的RT-DETR进行改进,内容持续更新,每周更新文章数量3-10篇。

专栏以ResNet18、ResNet50为基础修改版本,同时修改内容也支持ResNet32、ResNet101和PPHGNet版本,其中ResNet为RT-DETR官方版本1:1移植过来的,参数量基本保持一致(误差很小很小),不同于ultralytics仓库版本的ResNet官方版本,同时ultralytics仓库的一些参数是和RT-DETR相冲的所以我也是会教大家调好一些参数和代码,真正意义上的跑ultralytics的和RT-DETR官方版本的无区别

👑欢迎大家订阅本专栏,一起学习RT-DETR👑

一、本文介绍

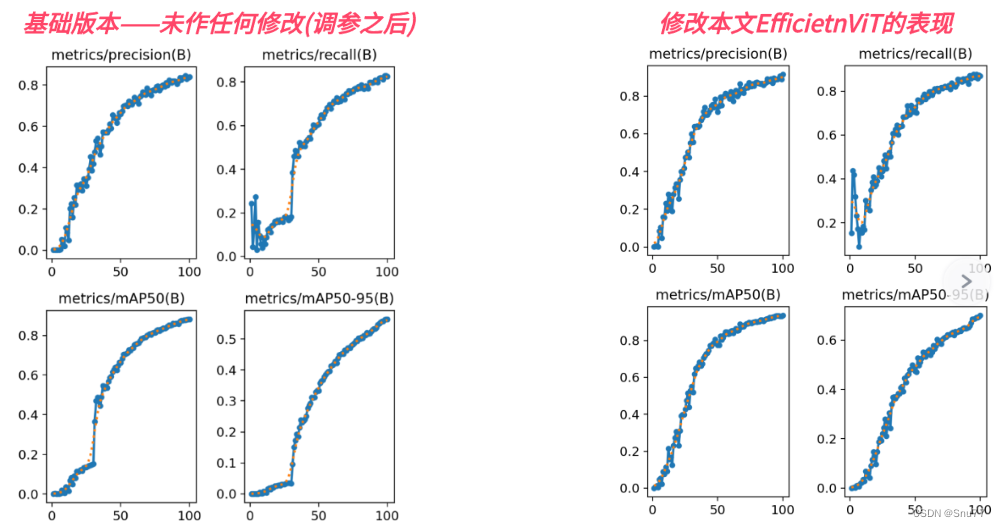

本文给大家带来的改进机制是主干网络,一个名字EfficientViT的特征提取网络,其基本原理是提升视觉变换器在高效处理高分辨率视觉任务的能力。它采用了创新的建筑模块设计,包括三明治布局和级联群组注意力模块。其是一种高效率的特征提取网络训练速度非常快,推理速度也要比基础版本的要快,其效果完爆之前的MobileNetV3等轻量化网络模型。欢迎大家订阅本专栏,本专栏每周更新3-10篇最新机制,更有包含我所有改进的文件和交流群提供给大家。

专栏链接:RT-DETR剑指论文专栏,持续复现各种顶会内容——论文收割机RT-DETR

目录

一、本文介绍

二、EfficientViT原理

2.1 EfficientViT的基本原理

三、EfficientViT的核心代码

四、手把手教你添加EfficientViT机制

4.1 修改一

4.2 修改二

4.3 修改三

4.4 修改四

4.5 修改五

4.6 修改六

4.7 修改七

4.8 修改八

4.9 RT-DETR不能打印计算量问题的解决

4.10 可选修改

五、EfficientViT的yaml文件

5.1 yaml文件

5.2 运行文件

5.3 成功训练截图

六、全文总结

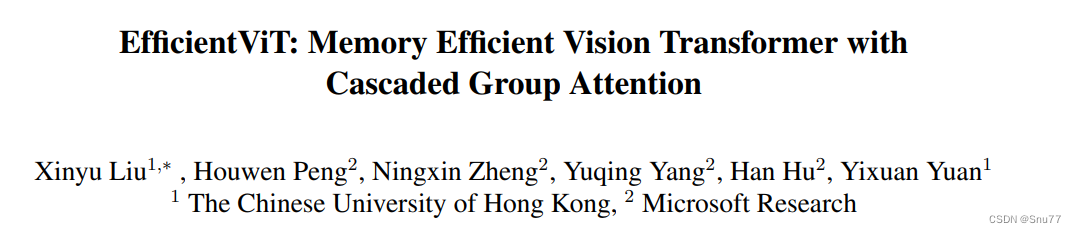

二、EfficientViT原理

论文地址:论文官方地址

代码地址:代码官方地址

2.1 EfficientViT的基本原理

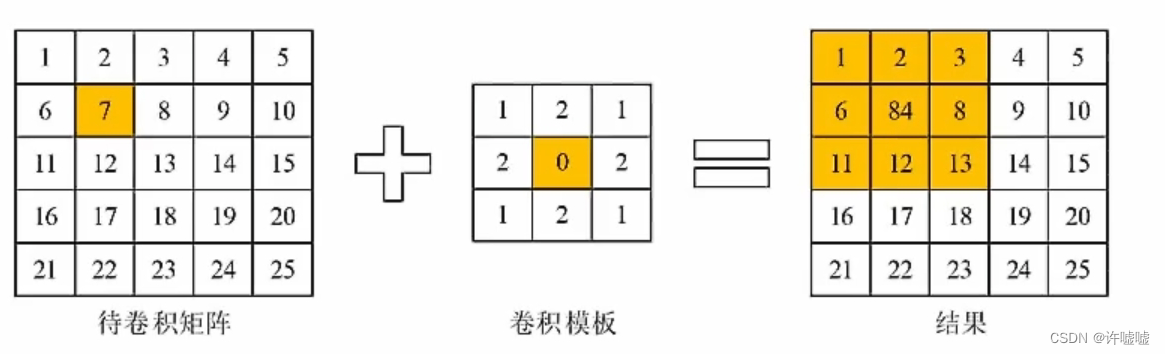

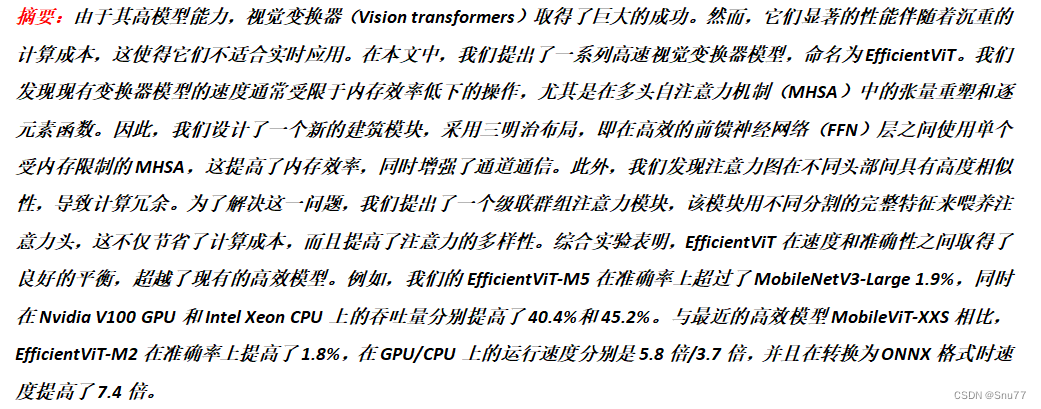

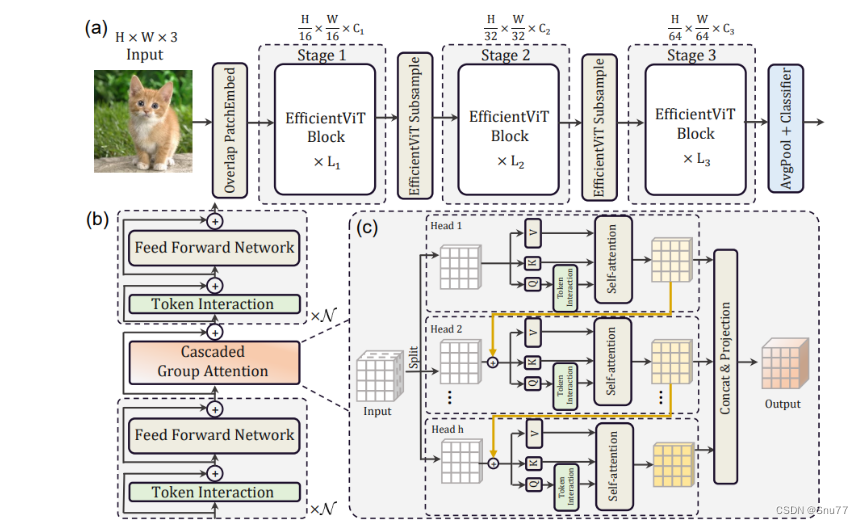

EfficientViT的基本原理是提升视觉变换器在高效处理高分辨率视觉任务的能力。它采用了创新的建筑模块设计,包括三明治布局和级联群组注意力模块。

1. 三明治布局:在前馈神经网络(FFN)层之间使用单个受内存限制的多头自注意力机制(MHSA),以提高内存效率。

2. 级联群组注意力模块:通过将不同的特征分割喂给不同的注意力头,减少计算冗余,并提高注意力的多样性。

下面为大家展示了EfficientViT的整体架构和关键组成部分:

(a). 架构概览:EfficientViT的整体架构分为三个阶段,每个阶段都包含了若干EfficientViT块,随着阶段的进展,特征图的维度会减小,而通道数会增加。

(b). 三明治布局块:展示了EfficientViT块的内部结构,它采用了一种三明治布局,其中的自注意力层(绿色部分)被两层前馈神经网络(FFN)夹在中间。

(c). 级联群组注意力:这是一个创新的注意力机制,通过将输入特征分割成不同的部分,分别喂给

不同的注意力头。

三、EfficientViT的核心代码

代码的使用方式看章节四。

import torch

import torch.nn as nn

import torch.nn.functional as F

import torch.utils.checkpoint as checkpoint

import itertools

from timm.models.layers import SqueezeExcite

import numpy as np

import itertools__all__ = ['EfficientViT_M0', 'EfficientViT_M1', 'EfficientViT_M2', 'EfficientViT_M3', 'EfficientViT_M4','EfficientViT_M5']class Conv2d_BN(torch.nn.Sequential):def __init__(self, a, b, ks=1, stride=1, pad=0, dilation=1,groups=1, bn_weight_init=1, resolution=-10000):super().__init__()self.add_module('c', torch.nn.Conv2d(a, b, ks, stride, pad, dilation, groups, bias=False))self.add_module('bn', torch.nn.BatchNorm2d(b))torch.nn.init.constant_(self.bn.weight, bn_weight_init)torch.nn.init.constant_(self.bn.bias, 0)@torch.no_grad()def switch_to_deploy(self):c, bn = self._modules.values()w = bn.weight / (bn.running_var + bn.eps) ** 0.5w = c.weight * w[:, None, None, None]b = bn.bias - bn.running_mean * bn.weight / \(bn.running_var + bn.eps) ** 0.5m = torch.nn.Conv2d(w.size(1) * self.c.groups, w.size(0), w.shape[2:], stride=self.c.stride, padding=self.c.padding, dilation=self.c.dilation,groups=self.c.groups)m.weight.data.copy_(w)m.bias.data.copy_(b)return mdef replace_batchnorm(net):for child_name, child in net.named_children():if hasattr(child, 'fuse'):setattr(net, child_name, child.fuse())elif isinstance(child, torch.nn.BatchNorm2d):setattr(net, child_name, torch.nn.Identity())else:replace_batchnorm(child)class PatchMerging(torch.nn.Module):def __init__(self, dim, out_dim, input_resolution):super().__init__()hid_dim = int(dim * 4)self.conv1 = Conv2d_BN(dim, hid_dim, 1, 1, 0, resolution=input_resolution)self.act = torch.nn.ReLU()self.conv2 = Conv2d_BN(hid_dim, hid_dim, 3, 2, 1, groups=hid_dim, resolution=input_resolution)self.se = SqueezeExcite(hid_dim, .25)self.conv3 = Conv2d_BN(hid_dim, out_dim, 1, 1, 0, resolution=input_resolution // 2)def forward(self, x):x = self.conv3(self.se(self.act(self.conv2(self.act(self.conv1(x))))))return xclass Residual(torch.nn.Module):def __init__(self, m, drop=0.):super().__init__()self.m = mself.drop = dropdef forward(self, x):if self.training and self.drop > 0:return x + self.m(x) * torch.rand(x.size(0), 1, 1, 1,device=x.device).ge_(self.drop).div(1 - self.drop).detach()else:return x + self.m(x)class FFN(torch.nn.Module):def __init__(self, ed, h, resolution):super().__init__()self.pw1 = Conv2d_BN(ed, h, resolution=resolution)self.act = torch.nn.ReLU()self.pw2 = Conv2d_BN(h, ed, bn_weight_init=0, resolution=resolution)def forward(self, x):x = self.pw2(self.act(self.pw1(x)))return xclass CascadedGroupAttention(torch.nn.Module):r""" Cascaded Group Attention.Args:dim (int): Number of input channels.key_dim (int): The dimension for query and key.num_heads (int): Number of attention heads.attn_ratio (int): Multiplier for the query dim for value dimension.resolution (int): Input resolution, correspond to the window size.kernels (List[int]): The kernel size of the dw conv on query."""def __init__(self, dim, key_dim, num_heads=8,attn_ratio=4,resolution=14,kernels=[5, 5, 5, 5], ):super().__init__()self.num_heads = num_headsself.scale = key_dim ** -0.5self.key_dim = key_dimself.d = int(attn_ratio * key_dim)self.attn_ratio = attn_ratioqkvs = []dws = []for i in range(num_heads):qkvs.append(Conv2d_BN(dim // (num_heads), self.key_dim * 2 + self.d, resolution=resolution))dws.append(Conv2d_BN(self.key_dim, self.key_dim, kernels[i], 1, kernels[i] // 2, groups=self.key_dim,resolution=resolution))self.qkvs = torch.nn.ModuleList(qkvs)self.dws = torch.nn.ModuleList(dws)self.proj = torch.nn.Sequential(torch.nn.ReLU(), Conv2d_BN(self.d * num_heads, dim, bn_weight_init=0, resolution=resolution))points = list(itertools.product(range(resolution), range(resolution)))N = len(points)attention_offsets = {}idxs = []for p1 in points:for p2 in points:offset = (abs(p1[0] - p2[0]), abs(p1[1] - p2[1]))if offset not in attention_offsets:attention_offsets[offset] = len(attention_offsets)idxs.append(attention_offsets[offset])self.attention_biases = torch.nn.Parameter(torch.zeros(num_heads, len(attention_offsets)))self.register_buffer('attention_bias_idxs',torch.LongTensor(idxs).view(N, N))@torch.no_grad()def train(self, mode=True):super().train(mode)if mode and hasattr(self, 'ab'):del self.abelse:self.ab = self.attention_biases[:, self.attention_bias_idxs]def forward(self, x): # x (B,C,H,W)B, C, H, W = x.shapetrainingab = self.attention_biases[:, self.attention_bias_idxs]feats_in = x.chunk(len(self.qkvs), dim=1)feats_out = []feat = feats_in[0]for i, qkv in enumerate(self.qkvs):if i > 0: # add the previous output to the inputfeat = feat + feats_in[i]feat = qkv(feat)q, k, v = feat.view(B, -1, H, W).split([self.key_dim, self.key_dim, self.d], dim=1) # B, C/h, H, Wq = self.dws[i](q)q, k, v = q.flatten(2), k.flatten(2), v.flatten(2) # B, C/h, Nattn = ((q.transpose(-2, -1) @ k) * self.scale+(trainingab[i] if self.training else self.ab[i]))attn = attn.softmax(dim=-1) # BNNfeat = (v @ attn.transpose(-2, -1)).view(B, self.d, H, W) # BCHWfeats_out.append(feat)x = self.proj(torch.cat(feats_out, 1))return xclass LocalWindowAttention(torch.nn.Module):r""" Local Window Attention.Args:dim (int): Number of input channels.key_dim (int): The dimension for query and key.num_heads (int): Number of attention heads.attn_ratio (int): Multiplier for the query dim for value dimension.resolution (int): Input resolution.window_resolution (int): Local window resolution.kernels (List[int]): The kernel size of the dw conv on query."""def __init__(self, dim, key_dim, num_heads=8,attn_ratio=4,resolution=14,window_resolution=7,kernels=[5, 5, 5, 5], ):super().__init__()self.dim = dimself.num_heads = num_headsself.resolution = resolutionassert window_resolution > 0, 'window_size must be greater than 0'self.window_resolution = window_resolutionself.attn = CascadedGroupAttention(dim, key_dim, num_heads,attn_ratio=attn_ratio,resolution=window_resolution,kernels=kernels, )def forward(self, x):B, C, H, W = x.shapeif H <= self.window_resolution and W <= self.window_resolution:x = self.attn(x)else:x = x.permute(0, 2, 3, 1)pad_b = (self.window_resolution - H %self.window_resolution) % self.window_resolutionpad_r = (self.window_resolution - W %self.window_resolution) % self.window_resolutionpadding = pad_b > 0 or pad_r > 0if padding:x = torch.nn.functional.pad(x, (0, 0, 0, pad_r, 0, pad_b))pH, pW = H + pad_b, W + pad_rnH = pH // self.window_resolutionnW = pW // self.window_resolution# window partition, BHWC -> B(nHh)(nWw)C -> BnHnWhwC -> (BnHnW)hwC -> (BnHnW)Chwx = x.view(B, nH, self.window_resolution, nW, self.window_resolution, C).transpose(2, 3).reshape(B * nH * nW, self.window_resolution, self.window_resolution, C).permute(0, 3, 1, 2)x = self.attn(x)# window reverse, (BnHnW)Chw -> (BnHnW)hwC -> BnHnWhwC -> B(nHh)(nWw)C -> BHWCx = x.permute(0, 2, 3, 1).view(B, nH, nW, self.window_resolution, self.window_resolution,C).transpose(2, 3).reshape(B, pH, pW, C)if padding:x = x[:, :H, :W].contiguous()x = x.permute(0, 3, 1, 2)return xclass EfficientViTBlock(torch.nn.Module):""" A basic EfficientViT building block.Args:type (str): Type for token mixer. Default: 's' for self-attention.ed (int): Number of input channels.kd (int): Dimension for query and key in the token mixer.nh (int): Number of attention heads.ar (int): Multiplier for the query dim for value dimension.resolution (int): Input resolution.window_resolution (int): Local window resolution.kernels (List[int]): The kernel size of the dw conv on query."""def __init__(self, type,ed, kd, nh=8,ar=4,resolution=14,window_resolution=7,kernels=[5, 5, 5, 5], ):super().__init__()self.dw0 = Residual(Conv2d_BN(ed, ed, 3, 1, 1, groups=ed, bn_weight_init=0., resolution=resolution))self.ffn0 = Residual(FFN(ed, int(ed * 2), resolution))if type == 's':self.mixer = Residual(LocalWindowAttention(ed, kd, nh, attn_ratio=ar, \resolution=resolution, window_resolution=window_resolution,kernels=kernels))self.dw1 = Residual(Conv2d_BN(ed, ed, 3, 1, 1, groups=ed, bn_weight_init=0., resolution=resolution))self.ffn1 = Residual(FFN(ed, int(ed * 2), resolution))def forward(self, x):return self.ffn1(self.dw1(self.mixer(self.ffn0(self.dw0(x)))))class EfficientViT(torch.nn.Module):def __init__(self, img_size=400,patch_size=16,frozen_stages=0,in_chans=3,stages=['s', 's', 's'],embed_dim=[64, 128, 192],key_dim=[16, 16, 16],depth=[1, 2, 3],num_heads=[4, 4, 4],window_size=[7, 7, 7],kernels=[5, 5, 5, 5],down_ops=[['subsample', 2], ['subsample', 2], ['']],pretrained=None,distillation=False, ):super().__init__()resolution = img_sizeself.patch_embed = torch.nn.Sequential(Conv2d_BN(in_chans, embed_dim[0] // 8, 3, 2, 1, resolution=resolution),torch.nn.ReLU(),Conv2d_BN(embed_dim[0] // 8, embed_dim[0] // 4, 3, 2, 1,resolution=resolution // 2), torch.nn.ReLU(),Conv2d_BN(embed_dim[0] // 4, embed_dim[0] // 2, 3, 2, 1,resolution=resolution // 4), torch.nn.ReLU(),Conv2d_BN(embed_dim[0] // 2, embed_dim[0], 3, 1, 1,resolution=resolution // 8))resolution = img_size // patch_sizeattn_ratio = [embed_dim[i] / (key_dim[i] * num_heads[i]) for i in range(len(embed_dim))]self.blocks1 = []self.blocks2 = []self.blocks3 = []for i, (stg, ed, kd, dpth, nh, ar, wd, do) in enumerate(zip(stages, embed_dim, key_dim, depth, num_heads, attn_ratio, window_size, down_ops)):for d in range(dpth):eval('self.blocks' + str(i + 1)).append(EfficientViTBlock(stg, ed, kd, nh, ar, resolution, wd, kernels))if do[0] == 'subsample':# ('Subsample' stride)blk = eval('self.blocks' + str(i + 2))resolution_ = (resolution - 1) // do[1] + 1blk.append(torch.nn.Sequential(Residual(Conv2d_BN(embed_dim[i], embed_dim[i], 3, 1, 1, groups=embed_dim[i], resolution=resolution)),Residual(FFN(embed_dim[i], int(embed_dim[i] * 2), resolution)), ))blk.append(PatchMerging(*embed_dim[i:i + 2], resolution))resolution = resolution_blk.append(torch.nn.Sequential(Residual(Conv2d_BN(embed_dim[i + 1], embed_dim[i + 1], 3, 1, 1, groups=embed_dim[i + 1],resolution=resolution)),Residual(FFN(embed_dim[i + 1], int(embed_dim[i + 1] * 2), resolution)), ))self.blocks1 = torch.nn.Sequential(*self.blocks1)self.blocks2 = torch.nn.Sequential(*self.blocks2)self.blocks3 = torch.nn.Sequential(*self.blocks3)self.width_list = [i.size(1) for i in self.forward(torch.randn(1, 3, 640, 640))]def forward(self, x):outs = []x = self.patch_embed(x)outs.append(x)x = self.blocks1(x)outs.append(x)x = self.blocks2(x)outs.append(x)x = self.blocks3(x)outs.append(x)return outsEfficientViT_m0 = {'img_size': 224,'patch_size': 16,'embed_dim': [64, 128, 192],'depth': [1, 2, 3],'num_heads': [4, 4, 4],'window_size': [7, 7, 7],'kernels': [7, 5, 3, 3],

}EfficientViT_m1 = {'img_size': 224,'patch_size': 16,'embed_dim': [128, 144, 192],'depth': [1, 2, 3],'num_heads': [2, 3, 3],'window_size': [7, 7, 7],'kernels': [7, 5, 3, 3],

}EfficientViT_m2 = {'img_size': 224,'patch_size': 16,'embed_dim': [128, 192, 224],'depth': [1, 2, 3],'num_heads': [4, 3, 2],'window_size': [7, 7, 7],'kernels': [7, 5, 3, 3],

}EfficientViT_m3 = {'img_size': 224,'patch_size': 16,'embed_dim': [128, 240, 320],'depth': [1, 2, 3],'num_heads': [4, 3, 4],'window_size': [7, 7, 7],'kernels': [5, 5, 5, 5],

}EfficientViT_m4 = {'img_size': 224,'patch_size': 16,'embed_dim': [128, 256, 384],'depth': [1, 2, 3],'num_heads': [4, 4, 4],'window_size': [7, 7, 7],'kernels': [7, 5, 3, 3],

}EfficientViT_m5 = {'img_size': 224,'patch_size': 16,'embed_dim': [192, 288, 384],'depth': [1, 3, 4],'num_heads': [3, 3, 4],'window_size': [7, 7, 7],'kernels': [7, 5, 3, 3],

}def EfficientViT_M0(pretrained='', frozen_stages=0, distillation=False, fuse=False, pretrained_cfg=None,model_cfg=EfficientViT_m0):model = EfficientViT(frozen_stages=frozen_stages, distillation=distillation, pretrained=pretrained, **model_cfg)if pretrained:model.load_state_dict(update_weight(model.state_dict(), torch.load(pretrained)['model']))if fuse:replace_batchnorm(model)return modeldef EfficientViT_M1(pretrained='', frozen_stages=0, distillation=False, fuse=False, pretrained_cfg=None,model_cfg=EfficientViT_m1):model = EfficientViT(frozen_stages=frozen_stages, distillation=distillation, pretrained=pretrained, **model_cfg)if pretrained:model.load_state_dict(update_weight(model.state_dict(), torch.load(pretrained)['model']))if fuse:replace_batchnorm(model)return modeldef EfficientViT_M2(pretrained='', frozen_stages=0, distillation=False, fuse=False, pretrained_cfg=None,model_cfg=EfficientViT_m2):model = EfficientViT(frozen_stages=frozen_stages, distillation=distillation, pretrained=pretrained, **model_cfg)if pretrained:model.load_state_dict(update_weight(model.state_dict(), torch.load(pretrained)['model']))if fuse:replace_batchnorm(model)return modeldef EfficientViT_M3(pretrained='', frozen_stages=0, distillation=False, fuse=False, pretrained_cfg=None,model_cfg=EfficientViT_m3):model = EfficientViT(frozen_stages=frozen_stages, distillation=distillation, pretrained=pretrained, **model_cfg)if pretrained:model.load_state_dict(update_weight(model.state_dict(), torch.load(pretrained)['model']))if fuse:replace_batchnorm(model)return modeldef EfficientViT_M4(pretrained='', frozen_stages=0, distillation=False, fuse=False, pretrained_cfg=None,model_cfg=EfficientViT_m4):model = EfficientViT(frozen_stages=frozen_stages, distillation=distillation, pretrained=pretrained, **model_cfg)if pretrained:model.load_state_dict(update_weight(model.state_dict(), torch.load(pretrained)['model']))if fuse:replace_batchnorm(model)return modeldef EfficientViT_M5(pretrained='', frozen_stages=0, distillation=False, fuse=False, pretrained_cfg=None,model_cfg=EfficientViT_m5):model = EfficientViT(frozen_stages=frozen_stages, distillation=distillation, pretrained=pretrained, **model_cfg)if pretrained:model.load_state_dict(update_weight(model.state_dict(), torch.load(pretrained)['model']))if fuse:replace_batchnorm(model)return modeldef update_weight(model_dict, weight_dict):idx, temp_dict = 0, {}for k, v in weight_dict.items():# k = k[9:]if k in model_dict.keys() and np.shape(model_dict[k]) == np.shape(v):temp_dict[k] = vidx += 1model_dict.update(temp_dict)print(f'loading weights... {idx}/{len(model_dict)} items')return model_dict四、手把手教你添加EfficientViT机制

下面教大家如何修改该网络结构,主干网络结构的修改步骤比较复杂,我也会将task.py文件上传到CSDN的文件中,大家如果自己修改不正确,可以尝试用我的task.py文件替换你的,然后只需要修改其中的第1、2、3、5步即可。

⭐修改过程中大家一定要仔细⭐

4.1 修改一

首先我门中到如下“ultralytics/nn”的目录,我们在这个目录下在创建一个新的目录,名字为'Addmodules'(此文件之后就用于存放我们的所有改进机制),之后我们在创建的目录内创建一个新的py文件复制粘贴进去 ,可以根据文章改进机制来起,这里大家根据自己的习惯命名即可。

4.2 修改二

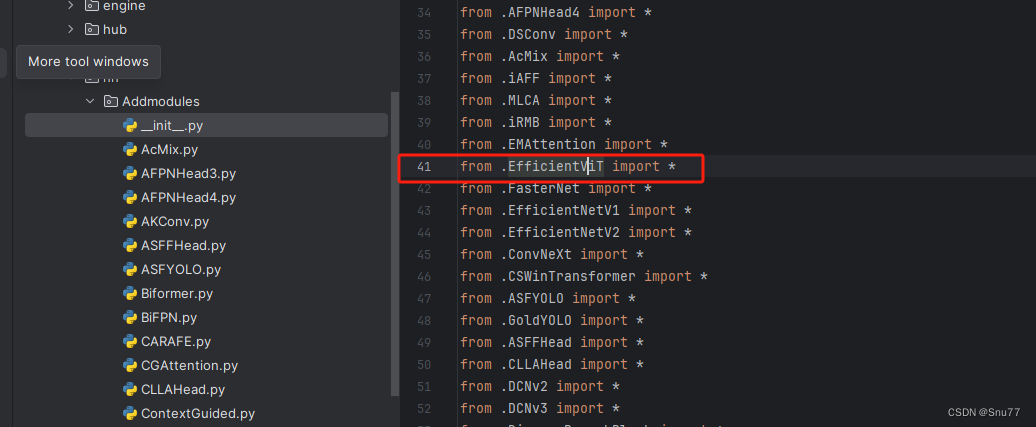

第二步我们在我们创建的目录内创建一个新的py文件名字为'__init__.py'(只需要创建一个即可),然后在其内部导入我们本文的改进机制即可,其余代码均为未发大家没有不用理会!。

4.3 修改三

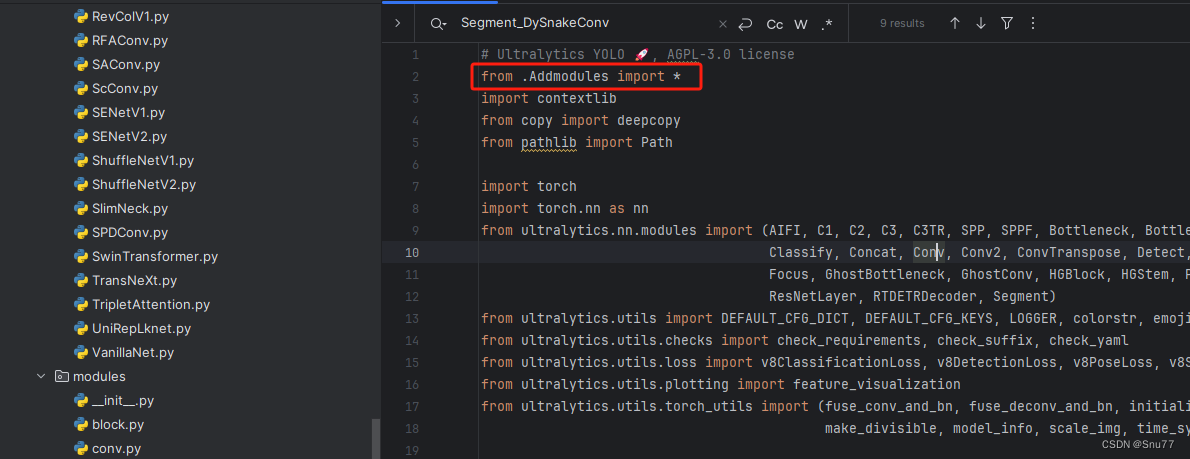

第三步我门中到如下文件'ultralytics/nn/tasks.py'然后在开头导入我们的所有改进机制(如果你用了我多个改进机制,这一步只需要修改一次即可)。

4.4 修改四

添加如下两行代码!!!

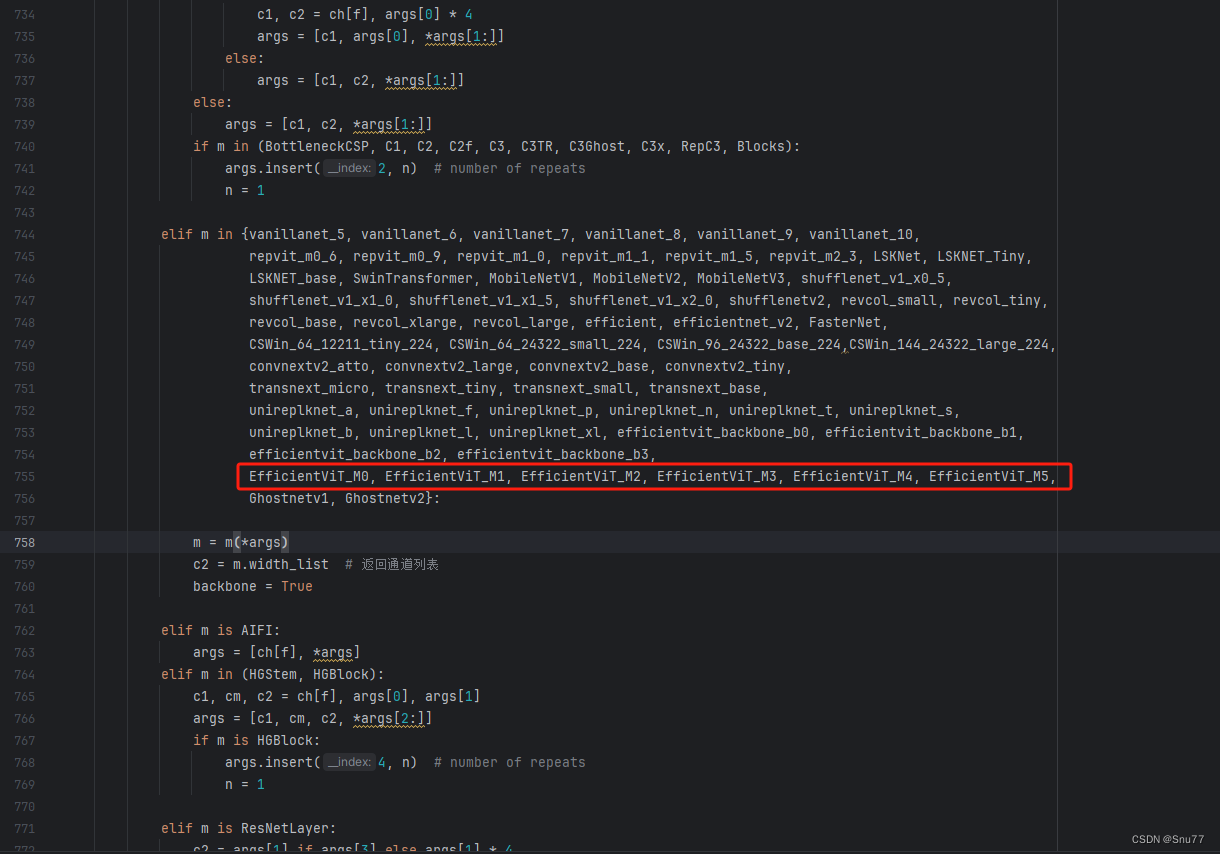

4.5 修改五

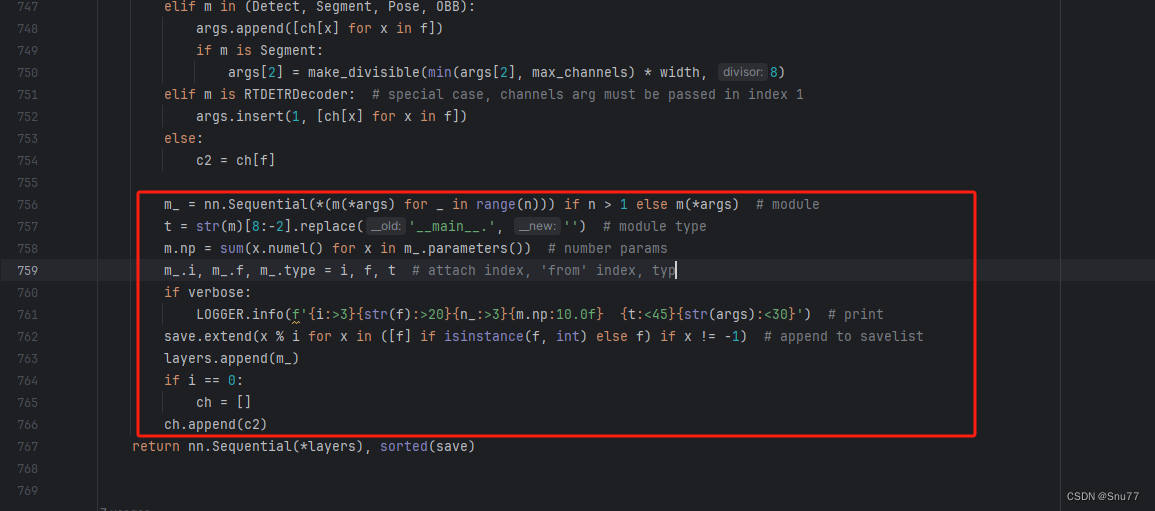

找到七百多行大概把具体看图片,按照图片来修改就行,添加红框内的部分,注意没有()只是函数名(此处我的文件里已经添加很多了后期都会发出来,大家没有的不用理会即可)。

elif m in {自行添加对应的模型即可,下面都是一样的}:m = m(*args)c2 = m.width_list # 返回通道列表backbone = True4.6 修改六

用下面的代码替换红框内的内容。

if isinstance(c2, list):m_ = mm_.backbone = True

else:m_ = nn.Sequential(*(m(*args) for _ in range(n))) if n > 1 else m(*args) # modulet = str(m)[8:-2].replace('__main__.', '') # module type

m.np = sum(x.numel() for x in m_.parameters()) # number params

m_.i, m_.f, m_.type = i + 4 if backbone else i, f, t # attach index, 'from' index, type

if verbose:LOGGER.info(f'{i:>3}{str(f):>20}{n_:>3}{m.np:10.0f} {t:<45}{str(args):<30}') # print

save.extend(x % (i + 4 if backbone else i) for x in ([f] if isinstance(f, int) else f) if x != -1) # append to savelist

layers.append(m_)

if i == 0:ch = []

if isinstance(c2, list):ch.extend(c2)if len(c2) != 5:ch.insert(0, 0)

else:ch.append(c2)4.7 修改七

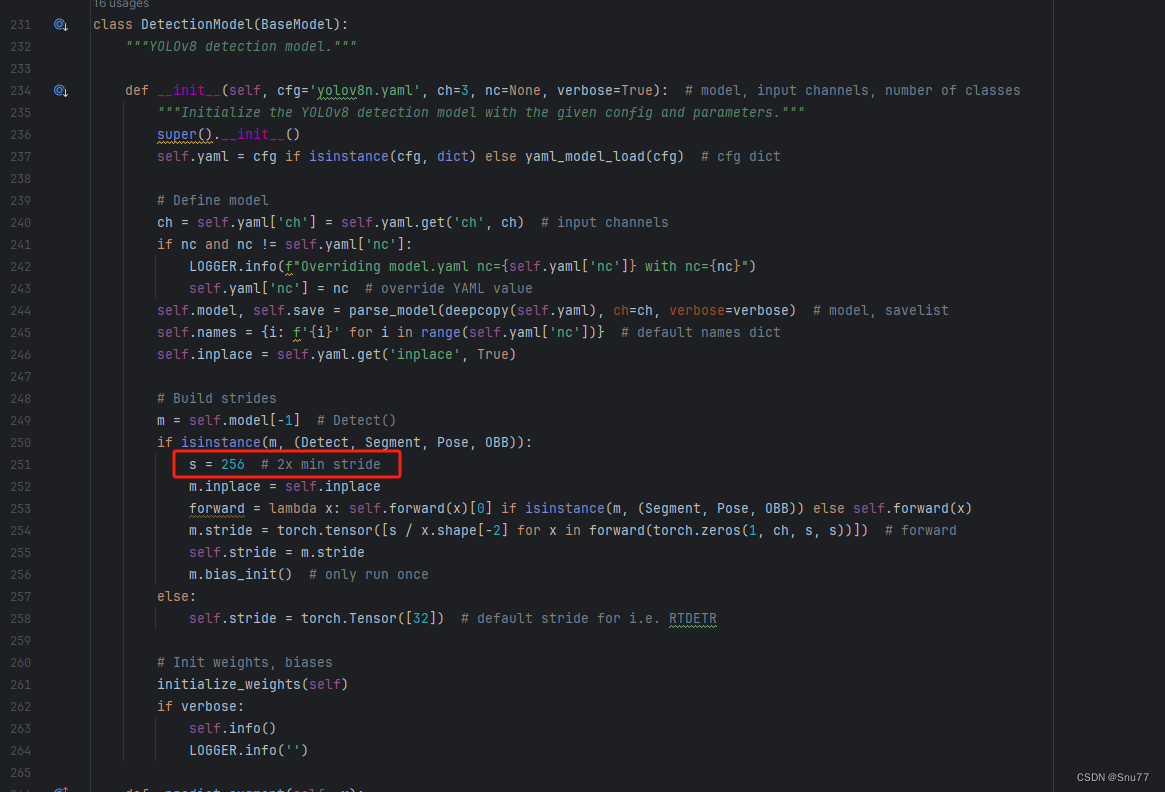

修改七这里非常要注意,不是文件开头YOLOv8的那predict,是400+行的RTDETR的predict!!!初始模型如下,用我给的代码替换即可!!!

代码如下->

def predict(self, x, profile=False, visualize=False, batch=None, augment=False, embed=None):"""Perform a forward pass through the model.Args:x (torch.Tensor): The input tensor.profile (bool, optional): If True, profile the computation time for each layer. Defaults to False.visualize (bool, optional): If True, save feature maps for visualization. Defaults to False.batch (dict, optional): Ground truth data for evaluation. Defaults to None.augment (bool, optional): If True, perform data augmentation during inference. Defaults to False.embed (list, optional): A list of feature vectors/embeddings to return.Returns:(torch.Tensor): Model's output tensor."""y, dt, embeddings = [], [], [] # outputsfor m in self.model[:-1]: # except the head partif m.f != -1: # if not from previous layerx = y[m.f] if isinstance(m.f, int) else [x if j == -1 else y[j] for j in m.f] # from earlier layersif profile:self._profile_one_layer(m, x, dt)if hasattr(m, 'backbone'):x = m(x)if len(x) != 5: # 0 - 5x.insert(0, None)for index, i in enumerate(x):if index in self.save:y.append(i)else:y.append(None)x = x[-1] # 最后一个输出传给下一层else:x = m(x) # runy.append(x if m.i in self.save else None) # save outputif visualize:feature_visualization(x, m.type, m.i, save_dir=visualize)if embed and m.i in embed:embeddings.append(nn.functional.adaptive_avg_pool2d(x, (1, 1)).squeeze(-1).squeeze(-1)) # flattenif m.i == max(embed):return torch.unbind(torch.cat(embeddings, 1), dim=0)head = self.model[-1]x = head([y[j] for j in head.f], batch) # head inferencereturn x4.8 修改八

我们将下面的s用640替换即可,这一步也是部分的主干可以不修改,但有的不修改就会报错,所以我们还是修改一下。

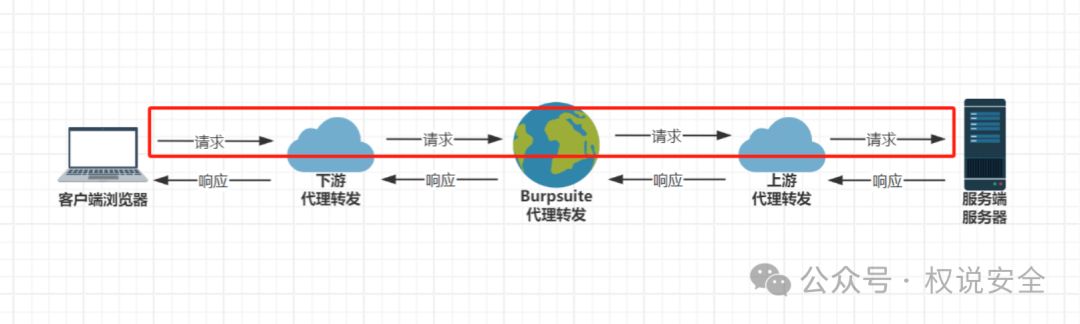

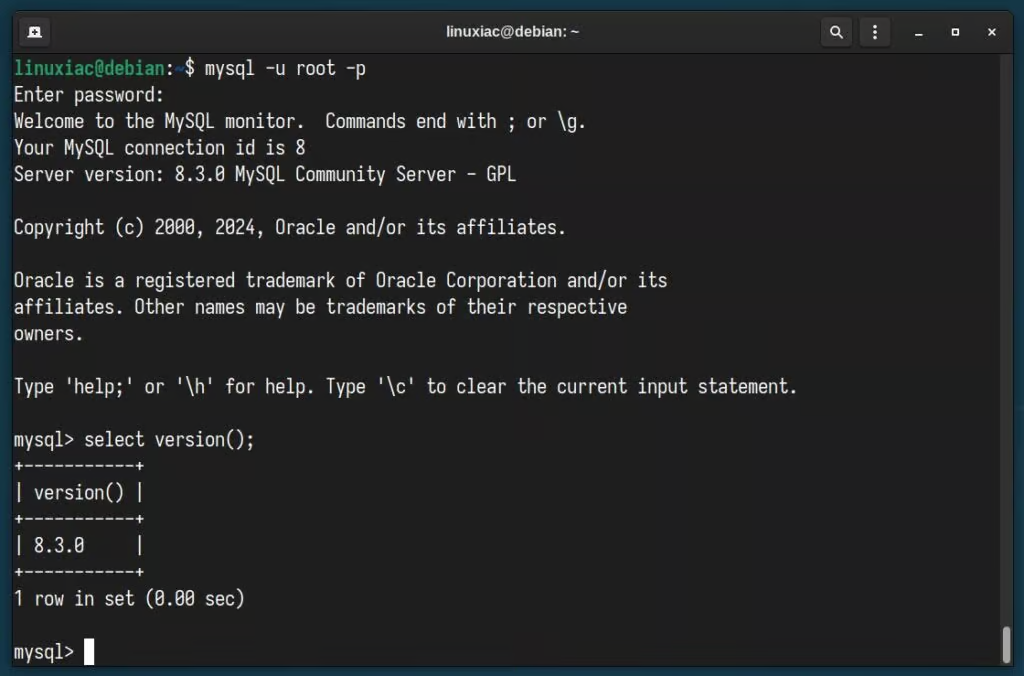

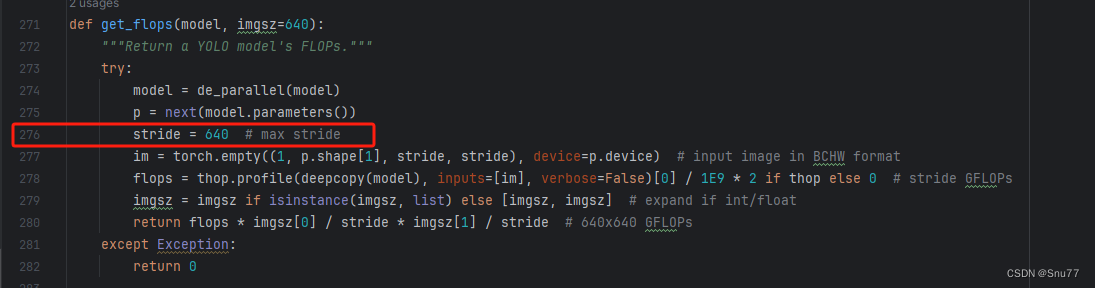

4.9 RT-DETR不能打印计算量问题的解决

计算的GFLOPs计算异常不打印,所以需要额外修改一处, 我们找到如下文件'ultralytics/utils/torch_utils.py'文件内有如下的代码按照如下的图片进行修改,大家看好函数就行,其中红框的640可能和你的不一样, 然后用我给的代码替换掉整个代码即可。

def get_flops(model, imgsz=640):"""Return a YOLO model's FLOPs."""try:model = de_parallel(model)p = next(model.parameters())# stride = max(int(model.stride.max()), 32) if hasattr(model, 'stride') else 32 # max stridestride = 640im = torch.empty((1, 3, stride, stride), device=p.device) # input image in BCHW formatflops = thop.profile(deepcopy(model), inputs=[im], verbose=False)[0] / 1E9 * 2 if thop else 0 # stride GFLOPsimgsz = imgsz if isinstance(imgsz, list) else [imgsz, imgsz] # expand if int/floatreturn flops * imgsz[0] / stride * imgsz[1] / stride # 640x640 GFLOPsexcept Exception:return 0

4.10 可选修改

有些读者的数据集部分图片比较特殊,在验证的时候会导致形状不匹配的报错,如果大家在验证的时候报错形状不匹配的错误可以固定验证集的图片尺寸,方法如下 ->

找到下面这个文件ultralytics/models/yolo/detect/train.py然后其中有一个类是DetectionTrainer class中的build_dataset函数中的一个参数rect=mode == 'val'改为rect=False

五、EfficientViT的yaml文件

5.1 yaml文件

大家复制下面的yaml文件,然后通过我给大家的运行代码运行即可,RT-DETR的调参部分需要后面的文章给大家讲,现在目前免费给大家看这一部分不开放。

# Ultralytics YOLO 🚀, AGPL-3.0 license

# RT-DETR-l object detection model with P3-P5 outputs. For details see https://docs.ultralytics.com/models/rtdetr# Parameters

nc: 80 # number of classes

scales: # model compound scaling constants, i.e. 'model=yolov8n-cls.yaml' will call yolov8-cls.yaml with scale 'n'# [depth, width, max_channels]l: [1.00, 1.00, 1024]backbone:# [from, repeats, module, args]- [-1, 1, EfficientViT_M0, []] # 4head:- [-1, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 5 input_proj.2- [-1, 1, AIFI, [1024, 8]] # 6- [-1, 1, Conv, [256, 1, 1]] # 7, Y5, lateral_convs.0- [-1, 1, nn.Upsample, [None, 2, 'nearest']] # 8- [3, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 9 input_proj.1- [[-2, -1], 1, Concat, [1]] # 10- [-1, 3, RepC3, [256, 0.5]] # 11, fpn_blocks.0- [-1, 1, Conv, [256, 1, 1]] # 12, Y4, lateral_convs.1- [-1, 1, nn.Upsample, [None, 2, 'nearest']] # 13- [2, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 14 input_proj.0- [[-2, -1], 1, Concat, [1]] # 15 cat backbone P4- [-1, 3, RepC3, [256, 0.5]] # X3 (16), fpn_blocks.1- [-1, 1, Conv, [256, 3, 2]] # 17, downsample_convs.0- [[-1, 12], 1, Concat, [1]] # 18 cat Y4- [-1, 3, RepC3, [256, 0.5]] # F4 (19), pan_blocks.0- [-1, 1, Conv, [256, 3, 2]] # 20, downsample_convs.1- [[-1, 7], 1, Concat, [1]] # 21 cat Y5- [-1, 3, RepC3, [256, 0.5]] # F5 (22), pan_blocks.1- [[16, 19, 22], 1, RTDETRDecoder, [nc, 256, 300, 4, 8, 3]] # Detect(P3, P4, P5)

5.2 运行文件

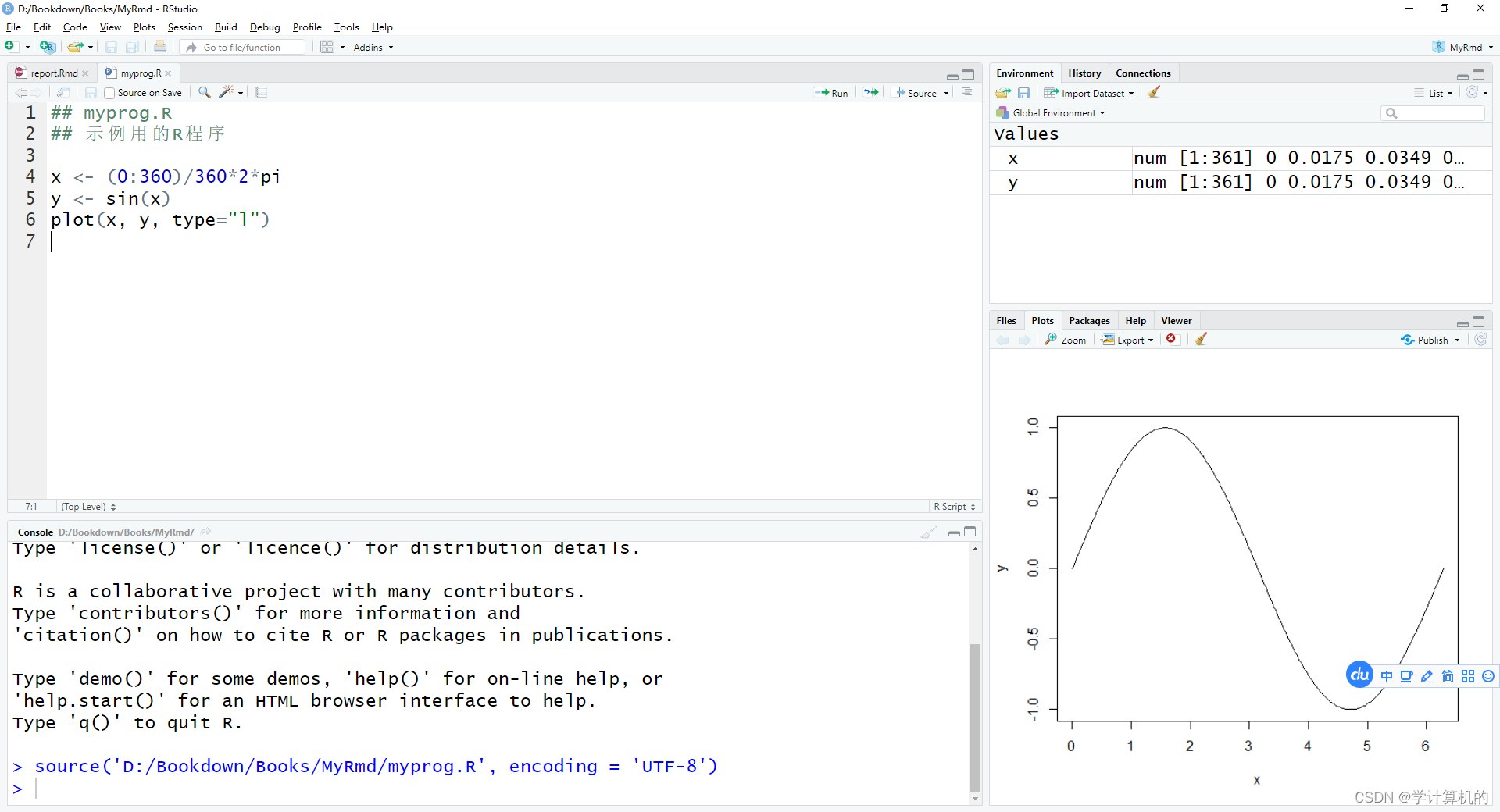

大家可以创建一个train.py文件将下面的代码粘贴进去然后替换你的文件运行即可开始训练。

import warnings

from ultralytics import RTDETR

warnings.filterwarnings('ignore')if __name__ == '__main__':model = RTDETR('替换你想要运行的yaml文件')# model.load('') # 可以加载你的版本预训练权重model.train(data=r'替换你的数据集地址即可',cache=False,imgsz=640,epochs=72,batch=4,workers=0,device='0',project='runs/RT-DETR-train',name='exp',# amp=True)5.3 成功训练截图

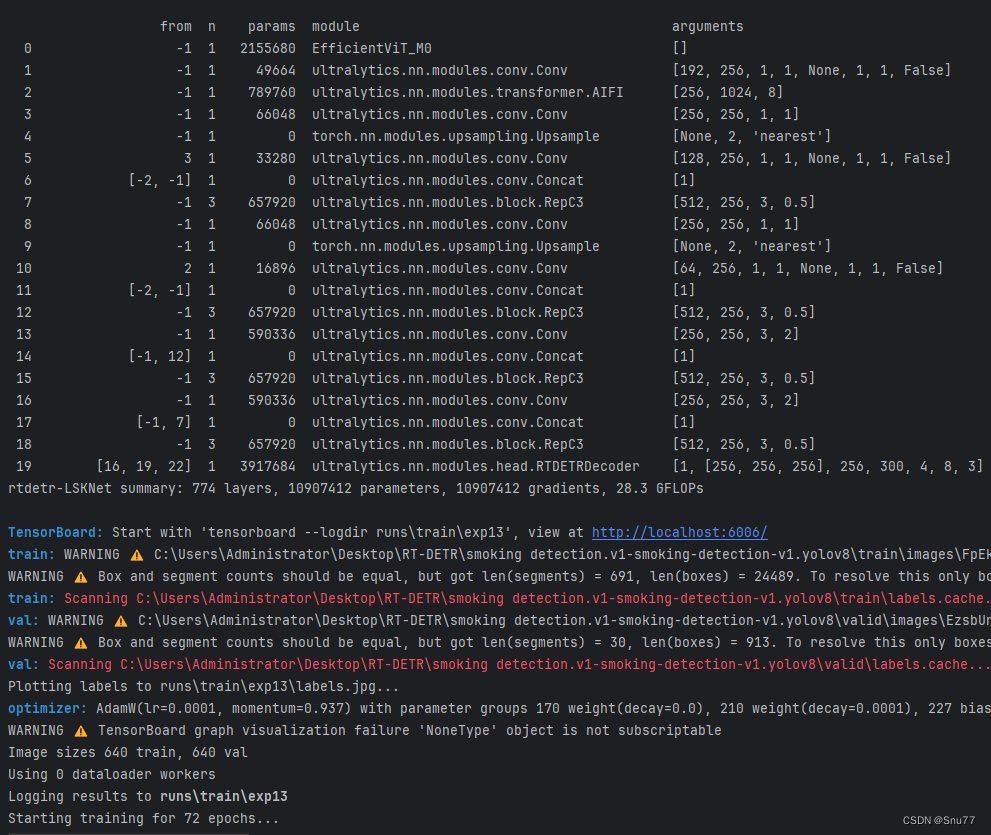

下面是成功运行的截图(确保我的改进机制是可用的),已经完成了有1个epochs的训练,图片太大截不全第2个epochs了。

六、全文总结

从今天开始正式开始更新RT-DETR剑指论文专栏,本专栏的内容会迅速铺开,在短期呢大量更新,价格也会乘阶梯性上涨,所以想要和我一起学习RT-DETR改进,可以在前期直接关注,本文专栏旨在打造全网最好的RT-DETR专栏为想要发论文的家进行服务。

专栏链接:RT-DETR剑指论文专栏,持续复现各种顶会内容——论文收割机RT-DETR