非精确线搜索步长规则

在数值优化中,线搜索是一种寻找合适步长的策略,以确保在目标函数上获得足够的下降。如最速下降法,拟牛顿法这些常用的优化算法等,其中的线搜索步骤通常使用Armijo规则、Goldstein规则或Wolfe规则等。

设无约束优化问题:

min f ( x ) , x ∈ R n \min f(x),{\kern 1pt} \,x \in {R^n} minf(x),x∈Rn

参数迭代过程: x k + 1 ← x k + α k d k x_{k+1}\leftarrow x_k + \alpha_k d_k xk+1←xk+αkdk

α k = arg min f ( x k + 1 ) = arg min α k > 0 f ( x k + α k ⋅ d k ) = arg min α k > 0 ϕ ( α k ) \alpha _{k}=\arg\min f\left(x_{k+1}\right) =\arg\min_{\alpha_k>0}f\left(x_{k}+\alpha_k\cdot d_{k}\right) \\ =\arg\min_{\alpha_k>0}\phi\left(\alpha_k\right) αk=argminf(xk+1)=argαk>0minf(xk+αk⋅dk)=argαk>0minϕ(αk)

从而我们可以得到关于步长 α k \alpha_k αk的方程:

ϕ ( α k ) ≜ f ( x k + α k d k ) \phi(\alpha_k)\triangleq f(x_{k}+\alpha_k d_{k}) ϕ(αk)≜f(xk+αkdk)

对 ϕ ( α k ) \phi(\alpha_k) ϕ(αk)求导:

ϕ ′ ( α k ) = ∇ f ( x k + α k d k ) T ⋅ d k \phi^{\prime}(\alpha_k)=\nabla f(x_{k}+\alpha_k d_{k})^{T}\cdot d_{k} ϕ′(αk)=∇f(xk+αkdk)T⋅dk

当步长 α k = 0 \alpha_k=0 αk=0时, ϕ ( 0 ) = f ( x k ) \phi(0)=f(x_{k}) ϕ(0)=f(xk) ϕ ′ ( 0 ) = ∇ f T ( x k ) ⋅ d k < 0 \phi^{\prime}\left(0\right)=\nabla f^{\rm T}\left(x_{k}\right)\cdot d_{k}<0 ϕ′(0)=∇fT(xk)⋅dk<0

Tips: 当 ∇ f ( x k ) ⋅ d k < 0 \nabla f\left(x_{k}\right)\cdot d_{k}<0 ∇f(xk)⋅dk<0时,即下降方向与负梯度方向的夹角为锐角时,下降有效

我们绘制一个假设的 ϕ ( α k ) \phi(\alpha_k) ϕ(αk)的函数曲线:

ϕ ( α k ) \phi(\alpha_k) ϕ(αk)在0处的切线方程:

L ( α k ) = f ( x k ) + ∇ f T ( x k ) d k ⋅ α k L({\alpha _k}) = f\left( {{x_k}} \right) + \nabla {f^{\rm{T}}}\left( {{x_k}} \right){d_k} \cdot {\alpha _k} L(αk)=f(xk)+∇fT(xk)dk⋅αk

Armijo规则

Armijo规则是最简单的线搜索规则之一,它基于函数值的下降来决定步长。

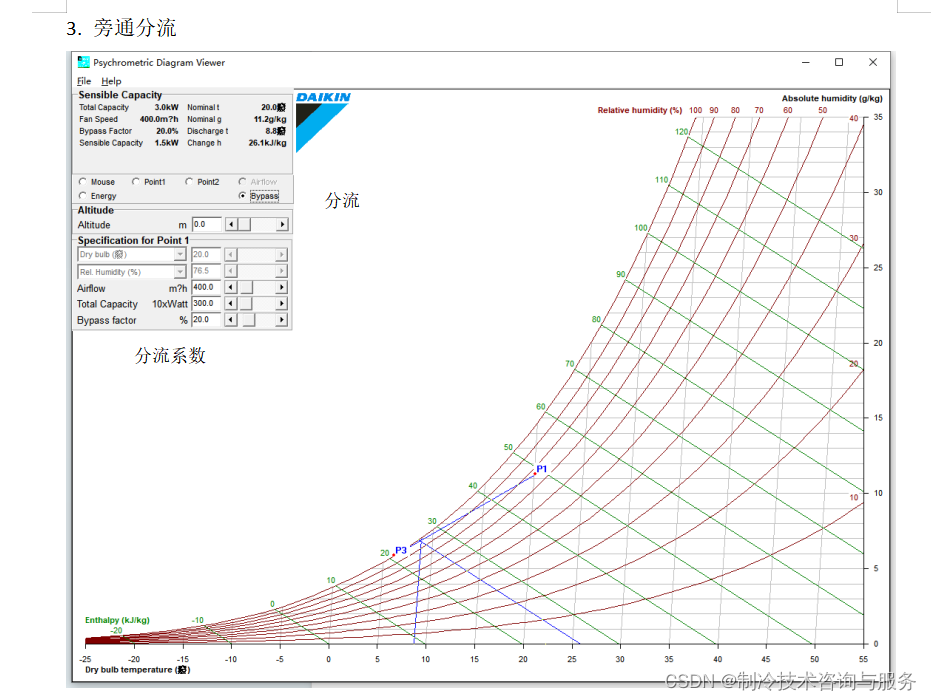

具体而言,Armijo规则要求如下图所示:

绿色划线范围为 α k \alpha_k αk可取值的范围。

设置连接0点 ϕ ( 0 ) = f ( x k ) \phi (0) = f({x_k}) ϕ(0)=f(xk)和射线 L ( α k ) = f ( x k ) + ∇ f T ( x k ) d k ⋅ α k L({\alpha _k}) = f\left( {{x_k}} \right) + \nabla {f^{\rm{T}}}\left( {{x_k}} \right){d_k} \cdot {\alpha _k} L(αk)=f(xk)+∇fT(xk)dk⋅αk之间作一条过 ( 0 , f ( x k ) ) (0,f(x_k)) (0,f(xk))斜率为 c ⋅ ∇ f ( x k ) T d k c \cdot \nabla f(x_k)^T d_k c⋅∇f(xk)Tdk的射线。所有满足以下方程的 α k {\alpha _k} αk便可作为步长。即图中绿线划出的取值范围。

ϕ ( α k ) = f ( x k + α k ⋅ d k ) ≤ f ( x k ) + c ⋅ α k ⋅ ∇ f ( x k ) T d k \phi(\alpha_k)=f(x_k + \alpha_k \cdot d_k) \leq f(x_k) + c \cdot \alpha_k \cdot \nabla f(x_k)^T d_k ϕ(αk)=f(xk+αk⋅dk)≤f(xk)+c⋅αk⋅∇f(xk)Tdk

其中, α k \alpha_k αk是步长, c c c 是Armijo规则的参数,通常取小于1的值。Armijo规则保证在朝着梯度方向(即搜索方向 d k d_k dk)移动时,目标函数能够有足够的下降。

Goldstein规则

由于Armijo规则,引入 α k \alpha_k αk的取值范围包含了0附近的值,为了保证迭代步长不会太小,

Goldstein规则是对Armijo规则的一种改进,它引入了一个额外的上界条件。Goldstein规则要求:

f ( x k + α k ⋅ d k ) ≤ f ( x k ) + c 1 ⋅ α k ⋅ ∇ f ( x k ) T d k f(x_k + \alpha_k \cdot d_k) \leq f(x_k) + c_1 \cdot \alpha_k \cdot \nabla f(x_k)^T d_k f(xk+αk⋅dk)≤f(xk)+c1⋅αk⋅∇f(xk)Tdk

并且,

f ( x k + α k ⋅ d k ) ≥ ( 1 − c 1 ) ⋅ α k ⋅ ∇ f ( x k ) T d k f(x_k + \alpha_k \cdot d_k) \geq (1 - c_1) \cdot \alpha_k \cdot \nabla f(x_k)^T d_k f(xk+αk⋅dk)≥(1−c1)⋅αk⋅∇f(xk)Tdk

其中, c 1 c_1 c1 是Goldstein规则的参数,通常取小于0.5的值。Goldstein规则的引入使得步长既要满足下降条件,又要限制在一个合理的范围内。

其示意图如下:

蓝色划线范围为 α k \alpha_k αk可取值的范围。

Wolfe规则

Wolfe规则结合了Armijo条件和曲率条件,它对步长进行更为严格的控制。 ϕ ( α k ) \phi(\alpha_k) ϕ(αk)的斜率由负值最大逐步增加,因此新增一个斜率的约束,去掉小步长。

Wolfe规则要求两个条件:

-

Armijo条件:

ϕ ( α k ) = f ( x k + α k ⋅ d k ) ≤ f ( x k ) + c 1 ⋅ α k ⋅ ∇ f ( x k ) T d k \phi(\alpha_k)=f(x_k + \alpha_k \cdot d_k) \leq f(x_k) + c_1 \cdot \alpha_k \cdot \nabla f(x_k)^T d_k ϕ(αk)=f(xk+αk⋅dk)≤f(xk)+c1⋅αk⋅∇f(xk)Tdk -

曲率条件:

ϕ ′ ( α k ) = ∇ f ( x k + α k ⋅ d k ) T d k ≥ c 2 ⋅ ∇ f ( x k ) T d k \phi^{\prime}(\alpha_k)=\nabla f(x_k + \alpha_k \cdot d_k)^T d_k \geq c_2 \cdot \nabla f(x_k)^T d_k ϕ′(αk)=∇f(xk+αk⋅dk)Tdk≥c2⋅∇f(xk)Tdk

其中, c 1 c_1 c1 和 c 2 c_2 c2 是Wolfe规则的参数,通常 0 < c 1 < c 2 < 1 0 < c_1 < c_2 < 1 0<c1<c2<1。Wolfe规则旨在确保步长既满足足够的下降条件,又满足足够的曲率条件,以保证收敛性和数值稳定性。

蓝色划线范围为 α k \alpha_k αk可取值的范围。

C++示例代码

以上规则在实际应用中通常作为拟牛顿法的一部分。以下是一个简单的C++示例程序,演示了Armijo、Goldstein和Wolfe规则的使用。

#include <iostream>

#include <cmath>

#include <Eigen/Dense>

#include <limits>using namespace Eigen;double objectiveFunction(const VectorXd& x) {return x[0]*x[0] + 2*x[1]*x[1];

}VectorXd gradient(const VectorXd& x) {VectorXd grad(2);grad[0] = 2*x[0];grad[1] = 4*x[1];return grad;

}bool armijoRule(const VectorXd& x, const VectorXd& d, double alpha, double beta) {double c = 0.5; // Armijo参数double expectedReduction = c * alpha * gradient(x).dot(d);double actualReduction = objectiveFunction(x - alpha * d) - objectiveFunction(x);return actualReduction >= expectedReduction;

}void armijoExample() {VectorXd x(2);x << 1.0, 1.0; // Initial guessVectorXd d = -gradient(x); // Descent directiondouble alpha = 1.0; // Initial step sizedouble beta = 0.5; // Step size reduction factorwhile (!armijoRule(x, d, alpha, beta)) {alpha *= beta;}std::cout << "Armijo rule: Optimal step size = " << alpha << std::endl;

}bool goldsteinRule(const VectorXd& x, const VectorXd& d, double alpha, double beta, double gamma) {double c1 = 0.5; // Goldstein参数double expectedReduction = c1 * alpha * gradient(x).dot(d);double actualReduction = objectiveFunction(x - alpha * d) - objectiveFunction(x);double upperBound = (1 - c1) * alpha * gradient(x).dot(d);return actualReduction >= expectedReduction && actualReduction <= upperBound;

}void goldsteinExample() {VectorXd x(2);x << 1.0, 1.0; // Initial guessVectorXd d = -gradient(x); // Descent directiondouble alpha = 1.0; // Initial step sizedouble beta = 0.5; // Step size reduction factordouble gamma = 2.0; // Additional parameter for Goldstein rulewhile (!goldsteinRule(x, d, alpha, beta, gamma)) {alpha *= beta;}std::cout << "Goldstein rule: Optimal step size = " << alpha << std::endl;

}bool wolfeRule(const VectorXd& x, const VectorXd& d, double alpha, double c1, double c2) {double phi0 = objectiveFunction(x);double phi_alpha = objectiveFunction(x + alpha * d);double phi_prime_0 = gradient(x).dot(d);double phi_prime_alpha = gradient(x + alpha * d).dot(d);// Armijo条件if (phi_alpha > phi0 + c1 * alpha * phi_prime_0)return false;// 曲率条件if (phi_prime_alpha < c2 * phi_prime_0)return false;return true;

}void wolfeExample() {VectorXd x(2);x << 1.0, 1.0; // Initial guessVectorXd d = -gradient(x); // Descent directiondouble alpha = 1.0; // Initial step sizedouble c1 = 1e-4; // Armijo条件参数double c2 = 0.9; // 曲率条件参数while (!wolfeRule(x, d, alpha, c1, c2)) {alpha *= 0.5; // Reduce step size}std::cout << "Wolfe rule: Optimal step size = " << alpha << std::endl;

}int main()

{armijoExample();goldsteinExample();wolfeExample();

}//g++ xiansousuo.cpp `pkg-config eigen3 --libs --cflags`

以上代码通过迭代求得最佳的步长。

在实际应用中,选择适当的线搜索规则和参数非常重要。这些规则的性能可能因问题的性质而异,因此需要根据具体情况进行调整。线搜索规则的选择直接影响了优化算法的性能和收敛速度,因此在应用中需要进行仔细的实验和调优。

参考链接

https://www.bilibili.com/video/BV1H14y1R7Yo/?spm_id_from=333.337.search-card.all.click