一、什么是operator?

在Kubernetes中我们经常使用 Deployment、DaemonSet、Service、ConfigMap 等资源,这些资源都是Kubernetes的内置资源,而对这些资源的创建、更新、删除的动作都会被称为事件(Event),Kubernetes 的 Controller Manager 负责事件监听,并触发相应的动作来满足期望(Spec),这种声明式的方式简化了用户的操作,用户在使用时只需关心应用程序的最终状态即可。随着 Kubernetes 的发展, 在一些场景更为复杂的分布式应用系统,原生 Kubernetes 内置资源在这些场景下就显得有些力不从心。简而言之,Operator就是一个为管理kubernetes集群内添加的一个自定义资源类型的对应的自定义控制器。

二、CRD

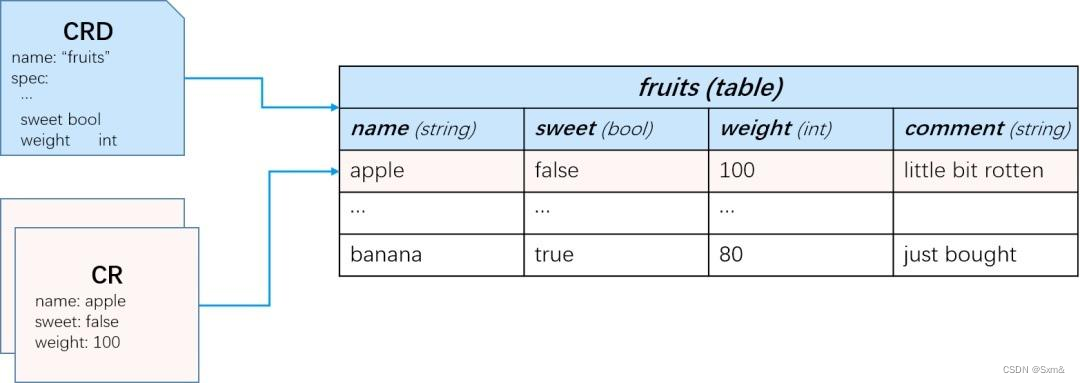

CRD 的全称是 Custom Resource Definition。顾名思义,它指的就是,允许用户在 Kubernetes 中添加一个跟 Pod、Node 类似的、新的 API 资源类型,即:自定义 API 资源。简而言之,就是介绍这个资源有什么属性,这些属性的类型是什么,结构是怎样的。

当你创建新的CRD时,Kubernetes API 服务器会为你所指定的每个版本生成一个新的 RESTful 资源路径。 CRD保证新的资源快速注册到kubenetes集群。基于 CRD 对象所创建的自定义资源可以是名称空间作用域的,也可以是集群作用域的, 取决于 CRD 对象 spec.scope 字段的设置。

apiVersion: apiextensions.k8s.io/v1

kind: CustomResourceDefinition

metadata:# 名字必需与下面的 spec 字段匹配,并且格式为 '<名称的复数形式>.<组名>'name: crontabs.stable.example.com

spec:# 组名称,用于 REST API: /apis/<组>/<版本>group: stable.example.com# 列举此 CustomResourceDefinition 所支持的版本versions:- name: v1# 每个版本都可以通过 served 标志来独立启用或禁止served: true# 其中一个且只有一个版本必需被标记为存储版本storage: trueschema:openAPIV3Schema:type: objectproperties:spec:type: objectproperties:cronSpec:type: stringimage:type: stringreplicas:type: integer# 可以是 Namespaced 或 Clusterscope: Namespacednames:# 名称的复数形式,用于 URL:/apis/<组>/<版本>/<名称的复数形式>plural: crontabs# 名称的单数形式,作为命令行使用时和显示时的别名singular: crontab# kind 通常是单数形式的驼峰命名(CamelCased)形式。你的资源清单会使用这一形式。kind: CronTab# shortNames 允许你在命令行使用较短的字符串来匹配资源shortNames:- ct

三、CR

CR 的全称是Custom Resource,是CRD的产物,根据CRD确定自身有什么属性,为这些属性赋值来定义一个该实例。一个CR实例可以直接类比k8s内建的一个Pod实例,可以像Pod一样去使用一个CR。CRD 是资源类型定义,具体的资源叫 CR。

类比数据库:

CRD就像是一张表,定义了表有哪些字段,字段的类型,创建 CRD:这一步相当于 CREATE TABLE fruits ;

$ kubectl create -f fruits-crd.yaml

创建 CR:相当于 INSERT INTO fruits values(…);

$ kubectl create -f apple-cr.yamlapple-cr.yaml:

apiVersion: example.org/v1kind: Fruitmetadata:name: applespec:sweet: falseweight: 100comment: little bit rotten

四、operator开发

4.1 controller-runtime

controller-runtime 是 Kubernetes 社区提供可供快速搭建一套 实现了controller 功能的工具,用户无需自行实现controller的功能了,只需要专注于如何处理当前Kubernetes APIServer发来的请求,只需要专注于自己的业务处理Reconciler即可。

目前,Kubernetes 社区基于controller-runtime推出了Operator SDK和 Kubebuilder这两种常用的开发Operator的SDK,他们本质上并没有什么区别。

4.2 client-go

client-go是kubernetes官方提供的go语言的客户端库,是从 Kubernetes的代码中单独抽离出来的包。使用client-go可以与kubernetes集群交互,包括资源的访问操作。掌握client-go,对于kubernetes开发非常重要。

controller-runtime是对client-go进行了封装可供快速搭建一套实现了controller功能的工具库包。由Kubernetes 社区推出。

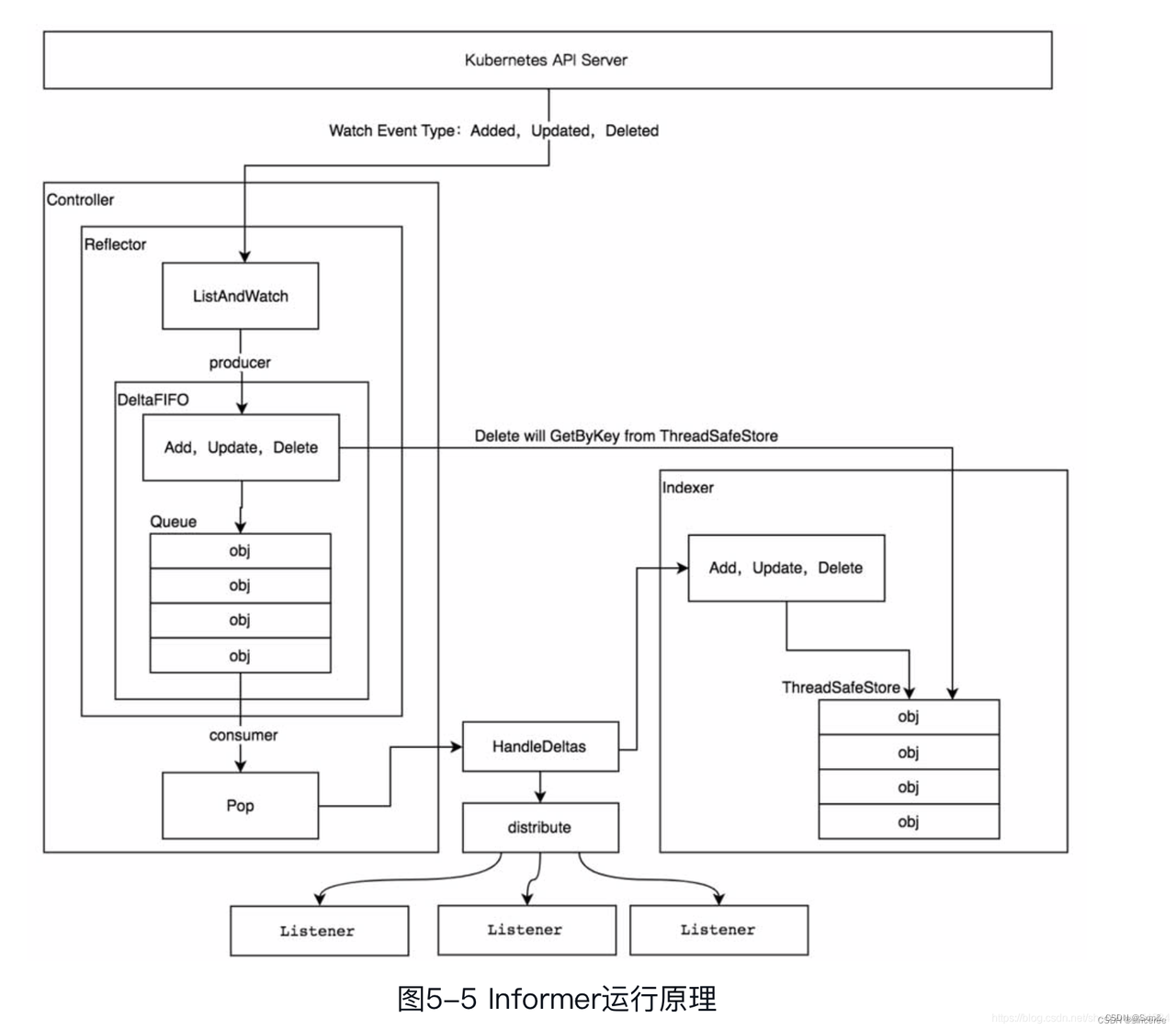

五、informer机制底层原理

informer 是 client-go 中的核心工具包,在kubernetes中,各个组件通过HTTP协议跟 API Server 进行通信。如果各组件每次都直接和API Server 进行交互,会给API Server 和ETCD造成非常大的压力。在不依赖任何中间件的情况下,通过informer保证了消息的实时性、可靠性和顺序性。

API Server:以REST形式对外暴露提供服务,是k8s系统中所有组件沟通的桥梁,是整个系统的数据总线,集群管理的核心。

ETCD:etcd是一个高可用的key-value数据库,是k8s集群的唯一底层数据存储。

详细架构图:

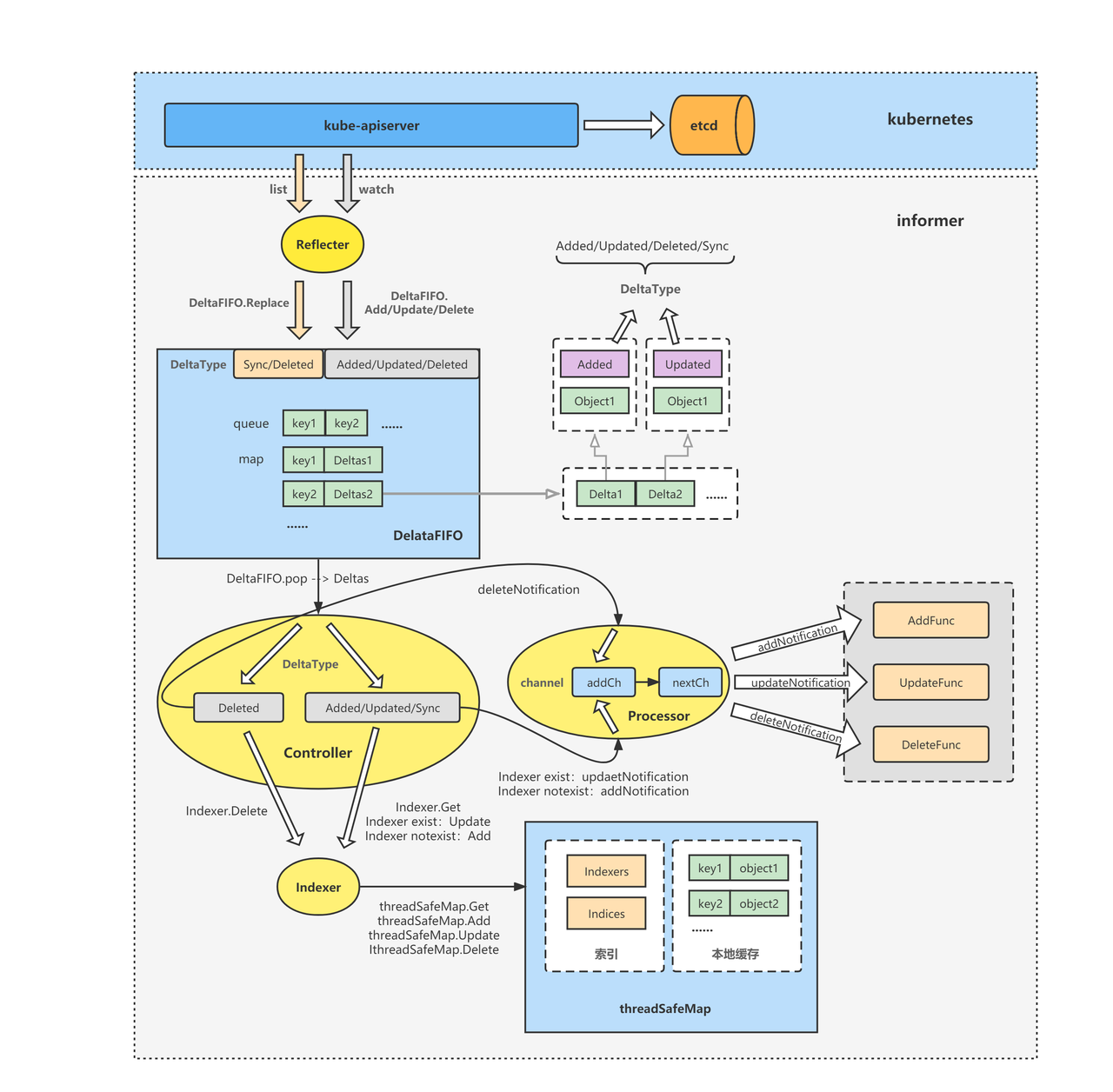

由上图可知,Informer由如下组件组成:

由上图可知,Informer由如下组件组成:

- Reflector:一方面通过List和Watch API来监听资源对象的变化,另一方面将这些变更信息放入DeltaFIFO中。

- DeltaFIFO:用来存储Reflector监听到的对象变更信息。

- Controller:这里的Controller并不是用户的Controller,而是Informer中的一个对象;其首先不断的从DeltaFIFO中Pop Deltas(理解为是一个资源的Event即可),然后将Event的变化一方面同步到Indexer中(也就是cache),另一方面来触发用户在informer.AddEventHandler 注册的Handler。

- Indexer:Informer中维护的资源缓存,当我们通过Lister的list和get接口来获取资源信息时,是从cache中获取的,并不会从APIServer中获取;

- Processor:用来维护和响应用户注册的Handler

运行原理图:

5.1 informer启动

informer启动有以下步骤:

- 注册及启动processLoop和reflector

- reflector开始LIST和WATCH,watch到的数据进行对比处理,存入到queue中

- processLoop开始循环pop队列数据

factory := informers.NewSharedInformerFactory(clientset, 0)podInformer := factory.Core().V1().Pods().Informer()podInformer.AddEventHandler(cache.ResourceEventHandlerFuncs{AddFunc: func(obj interface{}) {mObj := obj.(v1.Object)log.Printf("New pod added: %s", mObj.GetName())},UpdateFunc: func(oldObj, newObj interface{}) {oObj := oldObj.(v1.Object)nObj := newObj.(v1.Object)log.Printf("%s pod updated to %s", oObj.GetName(), nObj.GetName())},DeleteFunc: func(obj interface{}) {mObj := obj.(v1.Object)log.Printf("pod deleted from store: %s", mObj.GetName())},})//启动informerpodInformer.Run(stopCh)func (s *sharedIndexInformer) Run(stopCh <-chan struct{}) {......fifo := NewDeltaFIFOWithOptions(DeltaFIFOOptions{//FIFO持有indexer引用KnownObjects: s.indexer,EmitDeltaTypeReplaced: true,})cfg := &Config{Queue: fifo,ListerWatcher: s.listerWatcher,ObjectType: s.objectType,FullResyncPeriod: s.resyncCheckPeriod,RetryOnError: false,ShouldResync: s.processor.shouldResync,//注册回调函数HandleDeltas,后面从queue弹出数据的时候要用到Process: s.HandleDeltas,WatchErrorHandler: s.watchErrorHandler,}//根据config创建controllerfunc() {s.startedLock.Lock()defer s.startedLock.Unlock()s.controller = New(cfg)s.controller.(*controller).clock = s.clocks.started = true}()......s.controller.Run(stopCh)

}

func (c *controller) Run(stopCh <-chan struct{}) {defer utilruntime.HandleCrash()go func() {<-stopChc.config.Queue.Close()}()r := NewReflector(c.config.ListerWatcher,c.config.ObjectType,c.config.Queue,c.config.FullResyncPeriod,)// 省略代码......var wg wait.Group//启动reflectorwg.StartWithChannel(stopCh, r.Run)//启动processLoopwait.Until(c.processLoop, time.Second, stopCh)wg.Wait()

}

5.2 ListAndWatch

reflector启动之后,开始ListAndWatch,watch与API Server建立长连接,使用HTTP协议的分块传输编码(ChunkedTransfer Encoding)实现。

func (r *Reflector) Run(stopCh <-chan struct{}) {klog.V(3).Infof("Starting reflector %s (%s) from %s", r.expectedTypeName, r.resyncPeriod, r.name)wait.BackoffUntil(func() {// reflector进行list和watchif err := r.ListAndWatch(stopCh); err != nil {r.watchErrorHandler(r, err)}}, r.backoffManager, true, stopCh)klog.V(3).Infof("Stopping reflector %s (%s) from %s", r.expectedTypeName, r.resyncPeriod, r.name)

}

switch event.Type {//watch到add事件case watch.Added:err := r.store.Add(event.Object)if err != nil {utilruntime.HandleError(fmt.Errorf("%s: unable to add watch event object (%#v) to store: %v", r.name, event.Object, err))}//watch到modified事件case watch.Modified:err := r.store.Update(event.Object)if err != nil {utilruntime.HandleError(fmt.Errorf("%s: unable to update watch event object (%#v) to store: %v", r.name, event.Object, err))}//watch到delete事件case watch.Deleted:// TODO: Will any consumers need access to the "last known// state", which is passed in event.Object? If so, may need// to change this.err := r.store.Delete(event.Object)if err != nil {utilruntime.HandleError(fmt.Errorf("%s: unable to delete watch event object (%#v) from store: %v", r.name, event.Object, err))}case watch.Bookmark:// A `Bookmark` means watch has synced here, just update the resourceVersiondefault:utilruntime.HandleError(fmt.Errorf("%s: unable to understand watch event %#v", r.name, event))}

watch到的对象加入到DelataFIFO中,以update事件为例:

func (f *DeltaFIFO) Update(obj interface{}) error {f.lock.Lock()defer f.lock.Unlock()f.populated = truereturn f.queueActionLocked(Updated, obj)

}

func (f *DeltaFIFO) queueActionLocked(actionType DeltaType, obj interface{}) error {id, err := f.KeyOf(obj)if err != nil {return KeyError{obj, err}}oldDeltas := f.items[id]newDeltas := append(oldDeltas, Delta{actionType, obj})newDeltas = dedupDeltas(newDeltas)if len(newDeltas) > 0 {if _, exists := f.items[id]; !exists {//将key放入到queuef.queue = append(f.queue, id)}//将new Deltas放入到items中f.items[id] = newDeltas//事件到达广播,用于唤醒阻塞在cond上的协程f.cond.Broadcast()} else {// This never happens, because dedupDeltas never returns an empty list// when given a non-empty list (as it is here).// If somehow it happens anyway, deal with it but complain.if oldDeltas == nil {klog.Errorf("Impossible dedupDeltas for id=%q: oldDeltas=%#+v, obj=%#+v; ignoring", id, oldDeltas, obj)return nil}klog.Errorf("Impossible dedupDeltas for id=%q: oldDeltas=%#+v, obj=%#+v; breaking invariant by storing empty Deltas", id, oldDeltas, obj)f.items[id] = newDeltasreturn fmt.Errorf("Impossible dedupDeltas for id=%q: oldDeltas=%#+v, obj=%#+v; broke DeltaFIFO invariant by storing empty Deltas", id, oldDeltas, obj)}return nil

}

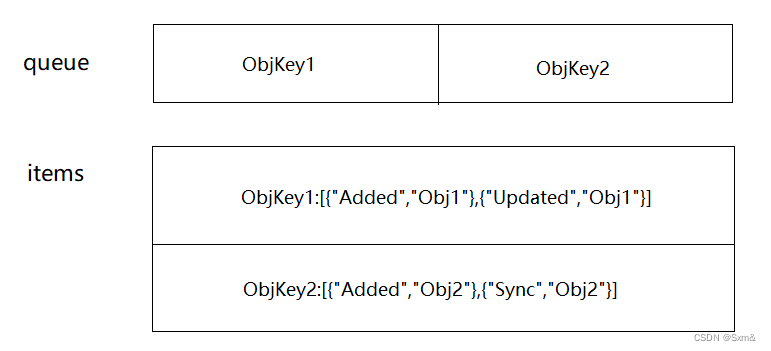

DeltaFIFO的数据结构如下:

type DeltaFIFO struct {// lock/cond protects access to 'items' and 'queue'.lock sync.RWMutexcond sync.Cond// `items` maps a key to a Deltas.// Each such Deltas has at least one Delta.items map[string]Deltas// `queue` maintains FIFO order of keys for consumption in Pop().// There are no duplicates in `queue`.// A key is in `queue` if and only if it is in `items`.queue []string// populated is true if the first batch of items inserted by Replace() has been populated// or Delete/Add/Update/AddIfNotPresent was called first.populated bool// initialPopulationCount is the number of items inserted by the first call of Replace()initialPopulationCount int// keyFunc is used to make the key used for queued item// insertion and retrieval, and should be deterministic.keyFunc KeyFunc// knownObjects list keys that are "known" --- affecting Delete(),// Replace(), and Resync()knownObjects KeyListerGetter// Used to indicate a queue is closed so a control loop can exit when a queue is empty.// Currently, not used to gate any of CRUD operations.closed bool// emitDeltaTypeReplaced is whether to emit the Replaced or Sync// DeltaType when Replace() is called (to preserve backwards compat).emitDeltaTypeReplaced bool

}

到这里,已经将最新的数据推送到了DeltaFIFO的queue中,接下来看下怎么处理queue中的数据。

5.3 handleDelatas

DeltaFIFO的queue出队,回到之前注册并启动起来的processLoop:

func (c *controller) processLoop() {for {//从queue弹出数据,交由process处理,也就是之前注册的handleDeltasobj, err := c.config.Queue.Pop(PopProcessFunc(c.config.Process))if err != nil {if err == ErrFIFOClosed {return}if c.config.RetryOnError {// This is the safe way to re-enqueue.// 重新入队queuec.config.Queue.AddIfNotPresent(obj)}}}

}

func (f *DeltaFIFO) Pop(process PopProcessFunc) (interface{}, error) {f.lock.Lock()defer f.lock.Unlock()for {for len(f.queue) == 0 {// When the queue is empty, invocation of Pop() is blocked until new item is enqueued.// When Close() is called, the f.closed is set and the condition is broadcasted.// Which causes this loop to continue and return from the Pop().if f.closed {return nil, ErrFIFOClosed}//如果queue中没有数据,阻塞等待,接收到广播后,协程会唤醒f.cond.Wait()}id := f.queue[0]f.queue = f.queue[1:]depth := len(f.queue)if f.initialPopulationCount > 0 {f.initialPopulationCount--}item, ok := f.items[id]if !ok {// This should never happenklog.Errorf("Inconceivable! %q was in f.queue but not f.items; ignoring.", id)continue}//出队的时候会将items中对应key的数据删掉delete(f.items, id)// Only log traces if the queue depth is greater than 10 and it takes more than// 100 milliseconds to process one item from the queue.// Queue depth never goes high because processing an item is locking the queue,// and new items can't be added until processing finish.// https://github.com/kubernetes/kubernetes/issues/103789if depth > 10 {trace := utiltrace.New("DeltaFIFO Pop Process",utiltrace.Field{Key: "ID", Value: id},utiltrace.Field{Key: "Depth", Value: depth},utiltrace.Field{Key: "Reason", Value: "slow event handlers blocking the queue"})defer trace.LogIfLong(100 * time.Millisecond)}//处理数据,重点看下这个方法,进入HandleDeltaserr := process(item)if e, ok := err.(ErrRequeue); ok {f.addIfNotPresent(id, item)err = e.Err}// Don't need to copyDeltas here, because we're transferring// ownership to the caller.return item, err}

}func (s *sharedIndexInformer) HandleDeltas(obj interface{}) error {s.blockDeltas.Lock()defer s.blockDeltas.Unlock()// from oldest to newestfor _, d := range obj.(Deltas) {switch d.Type {//在初始化Delta_fifo时使用。Informer会通过list得到某资源下全部的对象,//而Replace方法就可以把这些资源对象一次性装载至队列,并同步至Indexer。case Sync, Replaced, Added, Updated:s.cacheMutationDetector.AddObject(d.Object)//从本地缓存indexer中查询数据是否存在if old, exists, err := s.indexer.Get(d.Object); err == nil && exists {//如果存在,则更新indexer中该数据if err := s.indexer.Update(d.Object); err != nil {return err}isSync := falseswitch {case d.Type == Sync:// Sync events are only propagated to listeners that requested resyncisSync = truecase d.Type == Replaced:if accessor, err := meta.Accessor(d.Object); err == nil {if oldAccessor, err := meta.Accessor(old); err == nil {// Replaced events that didn't change resourceVersion are treated as resync events// and only propagated to listeners that requested resyncisSync = accessor.GetResourceVersion() == oldAccessor.GetResourceVersion()}}}//分发监听者,通知监听updates.processor.distribute(updateNotification{oldObj: old, newObj: d.Object}, isSync)} else {//如果不存在,则在indexer中添加该数据if err := s.indexer.Add(d.Object); err != nil {return err}//分发监听者,通知监听adds.processor.distribute(addNotification{newObj: d.Object}, false)}case Deleted:if err := s.indexer.Delete(d.Object); err != nil {return err}//分发监听者,通知监听deletes.processor.distribute(deleteNotification{oldObj: d.Object}, false)}}return nil

}

func (p *sharedProcessor) distribute(obj interface{}, sync bool) {p.listenersLock.RLock()defer p.listenersLock.RUnlock()if sync {for _, listener := range p.syncingListeners {listener.add(obj)}} else {for _, listener := range p.listeners {//监听者添加通知listener.add(obj)}}

}

func (p *processorListener) add(notification interface{}) {//通知发送到addChp.addCh <- notification

}

数据发送到了监听者的addCh中,那么监听者是什么时候注册的,又是怎么工作的?

其实在informer注册eventHandler的时候就注册了监听者.

podInformer.AddEventHandler(cache.ResourceEventHandlerFuncs{AddFunc: func(obj interface{}) {mObj := obj.(v1.Object)log.Printf("New pod added: %s", mObj.GetName())},UpdateFunc: func(oldObj, newObj interface{}) {oObj := oldObj.(v1.Object)nObj := newObj.(v1.Object)log.Printf("%s pod updated to %s", oObj.GetName(), nObj.GetName())},DeleteFunc: func(obj interface{}) {mObj := obj.(v1.Object)log.Printf("pod deleted from store: %s", mObj.GetName())},})

func (s *sharedIndexInformer) AddEventHandler(handler ResourceEventHandler) {s.AddEventHandlerWithResyncPeriod(handler, s.defaultEventHandlerResyncPeriod)

}

func (s *sharedIndexInformer) AddEventHandlerWithResyncPeriod(handler ResourceEventHandler, resyncPeriod time.Duration) {//省略代码//......//创建监听者listener := newProcessListener(handler, resyncPeriod, determineResyncPeriod(resyncPeriod, s.resyncCheckPeriod), s.clock.Now(), initialBufferSize)if !s.started {s.processor.addListener(listener)return}// in order to safely join, we have to// 1. stop sending add/update/delete notifications// 2. do a list against the store// 3. send synthetic "Add" events to the new handler// 4. unblocks.blockDeltas.Lock()defer s.blockDeltas.Unlock()//添加监听者s.processor.addListener(listener)for _, item := range s.indexer.List() {listener.add(addNotification{newObj: item})}

}

func newProcessListener(handler ResourceEventHandler, requestedResyncPeriod, resyncPeriod time.Duration, now time.Time, bufferSize int) *processorListener {ret := &processorListener{nextCh: make(chan interface{}),addCh: make(chan interface{}),handler: handler,pendingNotifications: *buffer.NewRingGrowing(bufferSize),requestedResyncPeriod: requestedResyncPeriod,resyncPeriod: resyncPeriod,}ret.determineNextResync(now)return ret

}

func (p *sharedProcessor) addListener(listener *processorListener) {p.listenersLock.Lock()defer p.listenersLock.Unlock()p.addListenerLocked(listener)if p.listenersStarted {//在两个不同的协程使监听者运行起来//pop负责从channel中拿通知//run负责处理通知p.wg.Start(listener.run)p.wg.Start(listener.pop)}

}

func (p *processorListener) pop() {defer utilruntime.HandleCrash()defer close(p.nextCh) // Tell .run() to stopvar nextCh chan<- interface{}var notification interface{}for {select {case nextCh <- notification:// Notification dispatchedvar ok boolnotification, ok = p.pendingNotifications.ReadOne()if !ok { // Nothing to popnextCh = nil // Disable this select case}//联系前面distribute分发监听者的时候将notification发送到addChcase notificationToAdd, ok := <-p.addCh:if !ok {return}if notification == nil { // No notification to pop (and pendingNotifications is empty)// Optimize the case - skip adding to pendingNotificationsnotification = notificationToAddnextCh = p.nextCh} else { // There is already a notification waiting to be dispatchedp.pendingNotifications.WriteOne(notificationToAdd)}}}

}

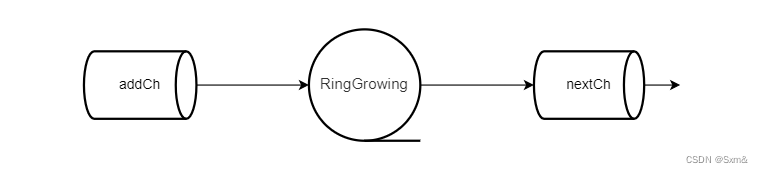

p.pendingNotifications是一个环形buffer的数据结构,addCh将notification写入到环形buffer,再从环形buffer取出notification发送到nextCh

func (p *processorListener) run() {// this call blocks until the channel is closed. When a panic happens during the notification// we will catch it, **the offending item will be skipped!**, and after a short delay (one second)// the next notification will be attempted. This is usually better than the alternative of never// delivering again.stopCh := make(chan struct{})wait.Until(func() {for next := range p.nextCh {//这里调用到用户定义的handler方法switch notification := next.(type) {case updateNotification:p.handler.OnUpdate(notification.oldObj, notification.newObj)case addNotification:p.handler.OnAdd(notification.newObj)case deleteNotification:p.handler.OnDelete(notification.oldObj)default:utilruntime.HandleError(fmt.Errorf("unrecognized notification: %T", next))}}// the only way to get here is if the p.nextCh is empty and closedclose(stopCh)}, 1*time.Second, stopCh)

}

5.4 三级缓存

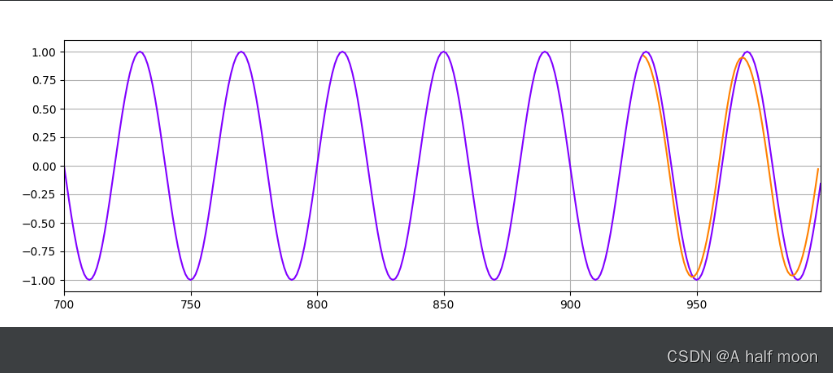

List-watch 是一个典型的生产者-消费者模型,这种模型常见的问题就是,消费者处理事件的速度跟不上生产者生成事件的速度,所以我们需要缓存来存储生产者的事件,然后让消费者慢慢处理。

5.4.1 DeltaFIFO

DeltaFIFO并没有做具体地对资源对象做更新删除等操作,它更多是充当一个缓冲和转存的作用。

5.4.2 Indexer

资源对象的最新本地缓存是在Indexer中的,Indexer与etcd中存储的对象是保持状态一致的。Indexer的存储是由ThreadSafeMap实现的,ThreadSafeMap是一个并发安全的存储,具有存储相关的增、删、改、查等操作。Indexer在封装ThreadSafeMap的基础上,实现了索引的相关功能。所以Index可以理解为一个带索引的并发安全的本地存储。

DeltaFIFO中的元素被弹出来后被同步到了 Indexer 存储中,参照handlerDelatas。

5.4.2.1 Resync机制

Resync机制会将Indexer本地存储中的资源同步到DeltaFIFO中,并将这些资源对象设置为Sync的操作类型。Resync在Reflector中定时执行,定时调用UpdateFunc。

func (f *DeltaFIFO) Resync() error {f.lock.Lock()defer f.lock.Unlock()if f.knownObjects == nil {return nil}//遍历本地存储的所有keykeys := f.knownObjects.ListKeys()for _, k := range keys {//同步keyif err := f.syncKeyLocked(k); err != nil {return err}}return nil

}

func (f *DeltaFIFO) syncKeyLocked(key string) error {obj, exists, err := f.knownObjects.GetByKey(key)if err != nil {klog.Errorf("Unexpected error %v during lookup of key %v, unable to queue object for sync", err, key)return nil} else if !exists {klog.Infof("Key %v does not exist in known objects store, unable to queue object for sync", key)return nil}id, err := f.KeyOf(obj)if err != nil {return KeyError{obj, err}}//如果FIFO中有相同key的Event进来,说明该资源对象有了新的Event,故不作syncif len(f.items[id]) > 0 {return nil}//重新放入FIFO队列中if err := f.queueActionLocked(Sync, obj); err != nil {return fmt.Errorf("couldn't queue object: %v", err)}return nil

}

5.4.3 RingGrowing

DeltaFIFO中的元素被弹出来后,一方面是被同步到Indexer中,另一方面是去通知事件回调。但是k8s并没有直接去做事件回调,而是多做了一层缓冲RingGrowing,RingGrowing 是一个环形数据结构。