Computing image similarity with the CLIP model.

1. Image similarity with CLIP

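Computing the similarity between two images with CLIP is straightforward and takes only two steps: first extract the features of both images, then compute their cosine similarity.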

First, make sure the required packages are installed. Setting up and using a virtual environment is recommended:

# Start by setting up a virtual environment
virtualenv venv-similarity
source venv-similarity/bin/activate

# Install required packages
pip install transformers Pillow torch

Next, compute the image similarity:

#!/usr/bin/env python
# -*- coding: utf-8 -*-
import os

import torch
import torch.nn as nn
from PIL import Image
from transformers import AutoProcessor, CLIPModel

# Optional: route model downloads through a local proxy.
# Remove these two lines if you do not need a proxy.
os.environ["http_proxy"] = "http://127.0.0.1:21882"
os.environ["https_proxy"] = "http://127.0.0.1:21882"

device = torch.device('cuda' if torch.cuda.is_available() else "cpu")

# Either loading method works.
# Method 1: download from the Hugging Face Hub and cache under ./CLIPModel
processor = AutoProcessor.from_pretrained("openai/clip-vit-base-patch32", cache_dir="./CLIPModel")
model = CLIPModel.from_pretrained("openai/clip-vit-base-patch32", cache_dir="./CLIPModel").to(device)
# Method 2: load from a local directory
# processor = AutoProcessor.from_pretrained("CLIP-Model")
# model = CLIPModel.from_pretrained("CLIP-Model").to(device)

# Extract features from image1
image1 = Image.open('img1.jpg')
with torch.no_grad():
    inputs1 = processor(images=image1, return_tensors="pt").to(device)
    image_features1 = model.get_image_features(**inputs1)

# Extract features from image2
image2 = Image.open('img2.jpg')
with torch.no_grad():
    inputs2 = processor(images=image2, return_tensors="pt").to(device)
    image_features2 = model.get_image_features(**inputs2)

# Compute their cosine similarity and convert it into a score between 0 and 1
cos = nn.CosineSimilarity(dim=0)
sim = cos(image_features1[0], image_features2[0]).item()
sim = (sim + 1) / 2
print('Similarity:', sim)

Two similar images

For an example pair of two similar images, the resulting similarity score is an impressive 95.5%.
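To make the scoring step concrete, here is a minimal sketch in plain Python of the two operations the script applies to the feature vectors: cosine similarity, followed by the linear rescaling `(sim + 1) / 2`. The helper names `cosine_similarity` and `to_unit_score` and the toy 3-dimensional vectors are illustrative assumptions, not part of the original script, which uses `torch.nn.CosineSimilarity` on CLIP embeddings.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def to_unit_score(cos_sim):
    """Map a cosine in [-1, 1] linearly onto [0, 1], as in sim = (sim + 1) / 2."""
    return (cos_sim + 1) / 2

# Toy vectors standing in for CLIP image embeddings (hypothetical values)
same = to_unit_score(cosine_similarity([1.0, 2.0, 3.0], [2.0, 4.0, 6.0]))
orthogonal = to_unit_score(cosine_similarity([1.0, 0.0, 0.0], [0.0, 1.0, 0.0]))
opposite = to_unit_score(cosine_similarity([1.0, 0.0, 0.0], [-1.0, 0.0, 0.0]))
print(same, orthogonal, opposite)
```

Vectors pointing in the same direction score close to 1, orthogonal vectors score 0.5, and opposite vectors score 0. Because of the rescaling, a reported score of 95.5% corresponds to a raw cosine similarity of 0.91.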

2. Image similarity retrieval

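Before evaluating performance in more depth, let's look at the results CLIP produces on images from the validation set of the COCO dataset. The procedure is as follows: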

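- Iterate over the dataset and extract the features of every image.

- Store the embeddings in a FAISS index.

- Extract the features of the input image.

- Retrieve the three most similar images.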
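The four steps above can be illustrated on toy data before bringing in CLIP and FAISS. The sketch below is a brute-force stand-in, assuming tiny hand-written 2-D vectors in place of real embeddings and a plain Python list in place of a FAISS index. The names `l2_normalize`, `build_index`, and `search` are hypothetical helpers for illustration only.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def build_index(embeddings):
    """Steps 1-2: normalize every embedding and keep them in a list
    (a toy stand-in for a FAISS index)."""
    return [l2_normalize(e) for e in embeddings]

def search(index, query, k=3):
    """Steps 3-4: normalize the query, then return the k closest entries
    as (squared L2 distance, position) pairs, nearest first."""
    q = l2_normalize(query)
    scored = []
    for pos, vec in enumerate(index):
        dist = sum((a - b) ** 2 for a, b in zip(q, vec))
        scored.append((dist, pos))
    return sorted(scored)[:k]

# Hypothetical 2-D "embeddings"; real CLIP features have far more dimensions
toy_embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [-1.0, 0.0]]
index = build_index(toy_embeddings)
hits = search(index, [1.0, 0.05], k=3)
print([pos for _, pos in hits])
```

The returned positions refer back to the order in which the embeddings were added, which is why the real script must keep its list of filenames in the same order as the index.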

#!/usr/bin/env python
# -*- coding: utf-8 -*-
# Additionally requires FAISS, e.g.: pip install faiss-cpu
import os

import faiss
import numpy as np
import torch
from PIL import Image
from transformers import AutoProcessor, CLIPModel

device = torch.device('cuda' if torch.cuda.is_available() else "cpu")

# Load CLIP model and processor from a local directory
processor_clip = AutoProcessor.from_pretrained("CLIP-Model")
model_clip = CLIPModel.from_pretrained("CLIP-Model").to(device)

# Input (query) image
source = 'laptop.jpg'
image = Image.open(source).convert('RGB')

# Retrieve all filenames
images = []
for root, dirs, files in os.walk('./test_data/'):
    for file in files:
        if file.endswith('jpg'):
            images.append(os.path.join(root, file))

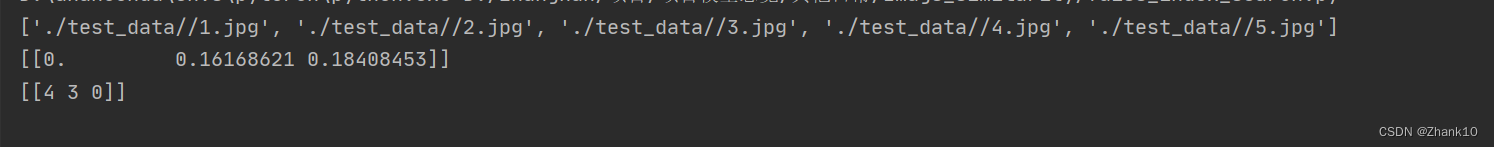

print(images)

# Define a function that normalizes embeddings and adds them to the index
def add_vector_to_index(embedding, index):
    vector = embedding.detach().cpu().numpy()
    vector = np.float32(vector)
    faiss.normalize_L2(vector)
    index.add(vector)

def extract_features_clip(image):
    with torch.no_grad():
        inputs = processor_clip(images=image, return_tensors="pt").to(device)
        image_features = model_clip.get_image_features(**inputs)
    return image_features

# Create the index. 512 is the embedding size of clip-vit-base-patch32;
# this assumes the local "CLIP-Model" directory holds that checkpoint.
index_clip = faiss.IndexFlatL2(512)

# Extract the features of every image and add them to the index
for image_path in images:
    img = Image.open(image_path).convert('RGB')
    clip_features = extract_features_clip(img)
    add_vector_to_index(clip_features, index_clip)

# Save the index to disk
faiss.write_index(index_clip, "clip.index")

# Extract the features of the input image
with torch.no_grad():
    inputs_clip = processor_clip(images=image, return_tensors="pt").to(device)
    image_features_clip = model_clip.get_image_features(**inputs_clip)

def normalizeL2(embeddings):
    vector = embeddings.detach().cpu().numpy()
    vector = np.float32(vector)
    faiss.normalize_L2(vector)
    return vector

image_features_clip = normalizeL2(image_features_clip)

# Reload the index and retrieve the three nearest images
index_clip = faiss.read_index("clip.index")
d_clip, i_clip = index_clip.search(image_features_clip, 3)
print(d_clip)  # squared L2 distances to the query
print(i_clip)  # positions of the matches in `images`
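Before looking at the output, it may help to know how to read `d_clip`. `IndexFlatL2` reports squared Euclidean distances, and because every vector was L2-normalized before indexing, each distance relates to cosine similarity through ‖a − b‖² = ‖a‖² + ‖b‖² − 2a·b = 2 − 2·cos(a, b). The short sketch below applies that identity. The function name `squared_l2_to_cosine` is a hypothetical helper, and the conversion only holds under the unit-length assumption.

```python
def squared_l2_to_cosine(distance):
    """For unit-length vectors a and b, ||a - b||^2 = 2 - 2 * cos(a, b),
    so cos(a, b) = 1 - distance / 2."""
    return 1.0 - distance / 2.0

# Illustrative distances, not actual output of the script
for d in (0.0, 0.5, 2.0):
    print(d, "->", squared_l2_to_cosine(d))
```

A distance of 0 therefore means an identical direction (cosine 1), and smaller distances always mean higher cosine similarity, so ranking by L2 distance on normalized vectors gives the same order as ranking by cosine similarity.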

The results are as follows: