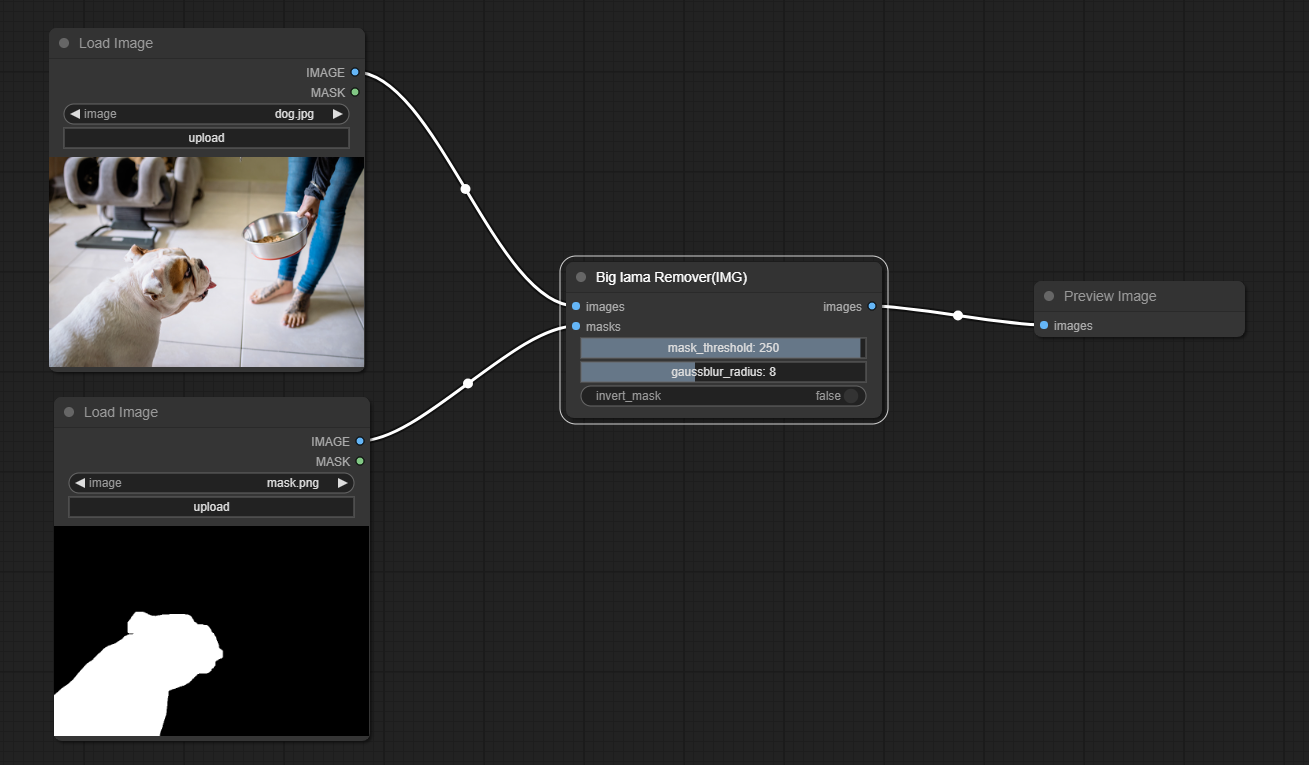

Reference: Stable Diffusion ComfyUI Basics Tutorial (1): ComfyUI Installation and Common Plugins (Zhihu), https://zhuanlan.zhihu.com/p/680844052

Installing a ComfyUI plugin is simple: for the most part you just place it in the `custom_nodes` directory. As a simple hands-on example, take GitHub - Layer-norm/comfyui-lama-remover: a simple lama remover, which implements an algorithm for erasing objects from images.

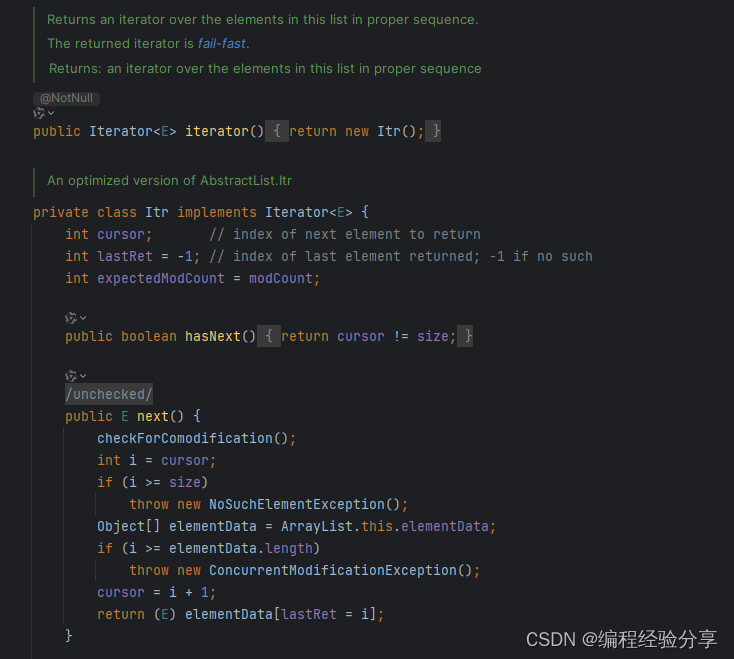
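To make the `custom_nodes` convention concrete, here is a minimal sketch of how a plugin package could be picked up. It is not ComfyUI's actual loader: `load_plugins`, the `UppercaseText` node and the `my_example_plugin` folder are hypothetical names invented for this illustration, and the only assumption taken from the plugins discussed here is that a package exposes `NODE_CLASS_MAPPINGS` from its `__init__.py`.

```python
import importlib.util
import sys
import tempfile
from pathlib import Path

# Hypothetical plugin source: a node that upper-cases a string.
PLUGIN_INIT = '''
class UppercaseText:
    @classmethod
    def INPUT_TYPES(s):
        return {"required": {"text": ("STRING", {"default": "hello"})}}

    RETURN_TYPES = ("STRING",)
    FUNCTION = "run"
    CATEGORY = "examples"

    def run(self, text):
        # A node returns a tuple that matches RETURN_TYPES.
        return (text.upper(),)


NODE_CLASS_MAPPINGS = {"UppercaseText": UppercaseText}
NODE_DISPLAY_NAME_MAPPINGS = {"UppercaseText": "Uppercase Text"}
'''


def load_plugins(custom_nodes_dir):
    """Import every package in custom_nodes_dir and merge its node mappings.

    Simplified stand-in for a plugin loader, written for this example only.
    """
    registry = {}
    for pkg_dir in sorted(Path(custom_nodes_dir).iterdir()):
        init_file = pkg_dir / "__init__.py"
        if not init_file.is_file():
            continue
        spec = importlib.util.spec_from_file_location(
            pkg_dir.name, init_file, submodule_search_locations=[str(pkg_dir)]
        )
        module = importlib.util.module_from_spec(spec)
        sys.modules[pkg_dir.name] = module  # lets the package use relative imports
        spec.loader.exec_module(module)
        registry.update(getattr(module, "NODE_CLASS_MAPPINGS", {}))
    return registry


with tempfile.TemporaryDirectory() as tmp:
    plugin_dir = Path(tmp) / "custom_nodes" / "my_example_plugin"
    plugin_dir.mkdir(parents=True)
    (plugin_dir / "__init__.py").write_text(PLUGIN_INIT)

    nodes = load_plugins(plugin_dir.parent)
    node_cls = nodes["UppercaseText"]
    # Call the method named by FUNCTION, the way a node executor would.
    result = getattr(node_cls(), node_cls.FUNCTION)(text="comfy")
    print(sorted(nodes), result)
```

The sketch shows why the two mapping dictionaries matter: they are the only thing the host needs from a package in order to find the node class and the method to call.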

In `__init__.py`:

    from .nodes.remover import NODE_CLASS_MAPPINGS, NODE_DISPLAY_NAME_MAPPINGS

    __all__ = ['NODE_CLASS_MAPPINGS', 'NODE_DISPLAY_NAME_MAPPINGS']

In `nodes/remover.py`:

    # Excerpt only: the module's imports and helper functions are not shown.
    class LamaRemover:
        @classmethod
        def INPUT_TYPES(s):
            return {
                "required": {
                    "images": ("IMAGE",),
                    "masks": ("MASK",),
                    "mask_threshold": ("INT", {"default": 250, "min": 0, "max": 255, "step": 1, "display": "slider"}),
                    "gaussblur_radius": ("INT", {"default": 8, "min": 0, "max": 20, "step": 1, "display": "slider"}),
                    "invert_mask": ("BOOLEAN", {"default": False}),
                },
            }

        CATEGORY = "LamaRemover"
        RETURN_NAMES = ("images",)
        RETURN_TYPES = ("IMAGE",)
        FUNCTION = "lama_remover"

        def lama_remover(self, images, masks, mask_threshold, gaussblur_radius, invert_mask):
            mylama = model.BigLama()
            ten2pil = transforms.ToPILImage()
            results = []

            for image, mask in zip(images, masks):
                ori_image = tensor2pil(image)
                print(f"input image size :{ori_image.size}")
                w, h = ori_image.size
                p_image = padimage(ori_image)
                pt_image = pil2tensor(p_image)

                mask = mask.unsqueeze(0)
                ori_mask = ten2pil(mask)
                ori_mask = ori_mask.convert('L')
                print(f"input mask size :{ori_mask.size}")
                p_mask = padmask(ori_mask)

                if p_mask.size != p_image.size:
                    print("resize mask")
                    p_mask = p_mask.resize(p_image.size)

                # invert mask
                if not invert_mask:
                    p_mask = ImageOps.invert(p_mask)

                # Gaussian blur of the mask (the white area is what gets blurred)
                p_mask = p_mask.filter(ImageFilter.GaussianBlur(radius=gaussblur_radius))

                # mask threshold: the larger the value, the stronger the effect
                gray = p_mask.point(lambda x: 0 if x > mask_threshold else 255)
                pt_mask = pil2tensor(gray)

                # run the LaMa model
                result = mylama(pt_image, pt_mask)
                img_result = ten2pil(result)

                # crop back to the original input size
                x, y = img_result.size
                if x > w or y > h:
                    img_result = cropimage(img_result, w, h)

                # convert to the ComfyUI tensor format (i, h, w, c)
                i = pil2comfy(img_result)
                results.append(i)

            return (torch.cat(results, dim=0),)

In `lama/model.py`:

    # reference from https://github.com/enesmsahin/simple-lama-inpainting
    import os
    import torch
    import numpy as np
    from PIL import Image, ImageOps, ImageFilter
    from ..utils import get_models_path
    from comfy.model_management import get_torch_device

    DEVICE = get_torch_device()


    class BigLama:
        def __init__(self):
            self.device = DEVICE
            model_path = get_models_path(filename="big-lama.pt")
            print(f"{model_path}")
            try:
                self.model = torch.jit.load(model_path, map_location=self.device)
            except:
                print(f"can't use comfy device")
                self.device = "cuda" if torch.cuda.is_available() else "cpu"
                self.model = torch.jit.load(model_path, map_location=self.device)

            self.model.eval()
            self.model.to(self.device)

        def __call__(self, image, mask):
            with torch.inference_mode():
                result = self.model(image, mask)
                return result[0]
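The mask handling in `lama_remover` (invert, blur, threshold) is easier to follow on a tiny example. The sketch below reproduces those three steps on a single row of 8-bit pixel values in plain Python. It assumes white (255) marks the pixels to erase, and it swaps the Gaussian blur for a simple box blur, so the exact numbers differ from what Pillow would produce; the function names are made up for this illustration.

```python
def invert(row):
    """Same idea as ImageOps.invert on an 8-bit grayscale row."""
    return [255 - v for v in row]


def box_blur(row, radius):
    """1-D box blur with edge clamping; a simple stand-in for GaussianBlur."""
    if radius == 0:
        return list(row)
    n = len(row)
    out = []
    for i in range(n):
        window = [row[min(max(j, 0), n - 1)] for j in range(i - radius, i + radius + 1)]
        out.append(sum(window) // len(window))
    return out


def threshold(row, mask_threshold):
    """Mirrors `0 if x > mask_threshold else 255` from the node."""
    return [0 if v > mask_threshold else 255 for v in row]


def preprocess_mask(row, mask_threshold=250, blur_radius=1, invert_mask=False):
    # Same order of operations as in lama_remover: invert, blur, threshold.
    if not invert_mask:
        row = invert(row)
    row = box_blur(row, blur_radius)
    return threshold(row, mask_threshold)


# Assumption: white (255) marks the pixels to erase.
mask_row = [0, 0, 0, 255, 255, 0, 0, 0]
processed = preprocess_mask(mask_row)
unblurred = preprocess_mask(mask_row, blur_radius=0)
print(processed)  # [0, 0, 255, 255, 255, 255, 0, 0]
print(unblurred)  # [0, 0, 0, 255, 255, 0, 0, 0]
```

With no blur the mask passes through unchanged, because the inversion and the thresholding cancel out. With blur, every pixel whose blurred value drops to `mask_threshold` or below is pushed back to white, so the erased region grows by roughly the blur radius on each side.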

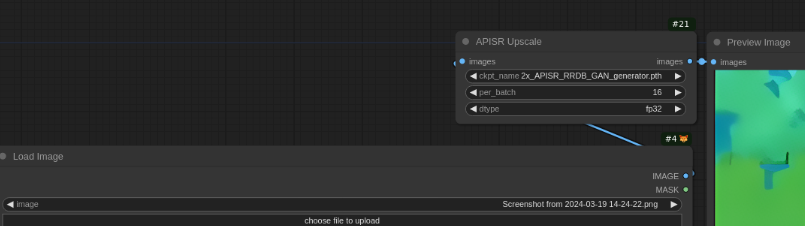

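Another very simple example is GitHub - kijai/ComfyUI-APISR: Node to use APISR upscale models in ComfyUI.

In terms of code structure, everything under `architecture` is the original authors' code, the core logic lives in `nodes.py`, and `requirements.txt` lists the libraries that need to be installed.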

`__init__.py`:

    from .nodes import NODE_CLASS_MAPPINGS, NODE_DISPLAY_NAME_MAPPINGS

    __all__ = ["NODE_CLASS_MAPPINGS", "NODE_DISPLAY_NAME_MAPPINGS"]

`nodes.py`:

    import folder_paths
    import os
    import torch
    import torch.nn.functional as F
    from .architecture.rrdb import RRDBNet
    from .architecture.grl import GRL
    import comfy.model_management as mm
    import comfy.utils
    from contextlib import nullcontext


    # Excerpt only: convert_dtype, load_rrdb and load_grl are not shown here.
    class APISR_upscale:
        @classmethod
        def INPUT_TYPES(s):
            return {
                "required": {
                    "ckpt_name": (folder_paths.get_filename_list("upscale_models"),),
                    "images": ("IMAGE",),
                    "per_batch": ("INT", {"default": 16, "min": 1, "max": 4096, "step": 1}),
                    "dtype": (['fp32', 'fp16'], {"default": 'fp32'}),
                },
            }

        RETURN_TYPES = ("IMAGE",)
        RETURN_NAMES = ("images",)
        FUNCTION = "upscale"
        CATEGORY = "ASPIR"

        def upscale(self, ckpt_name, dtype, images, per_batch):
            device = mm.get_torch_device()
            model_path = folder_paths.get_full_path("upscale_models", ckpt_name)
            custom_config = {
                'dtype': dtype,
                'ckpt_name': ckpt_name,
            }
            dtype = (convert_dtype(dtype))

            # Reload the model only when it is missing or the settings changed.
            if not hasattr(self, 'model') or self.model == None or custom_config != self.current_config:
                self.model = None
                self.current_config = custom_config
                if "RRDB" in ckpt_name:
                    self.model = load_rrdb(model_path, scale=2)
                elif "GRL" in ckpt_name:
                    self.model = load_grl(model_path, scale=4)
                self.model = self.model.to(dtype).to(device)

            # ComfyUI images are (B, H, W, C); the model expects (B, C, H, W).
            images = images.permute(0, 3, 1, 2)
            B, C, H, W = images.shape
            # Round height and width down to a multiple of 8.
            H = (H // 8) * 8
            W = (W // 8) * 8
            if images.shape[2] != H or images.shape[3] != W:
                images = F.interpolate(images, size=(H, W), mode="bicubic")
            images = images.to(device=device, dtype=dtype)

            self.model.to(device)
            pbar = comfy.utils.ProgressBar(B)
            t = []
            autocast_condition = not comfy.model_management.is_device_mps(device)
            with torch.autocast(comfy.model_management.get_autocast_device(device), dtype=dtype) if autocast_condition else nullcontext():
                for start_idx in range(0, B, per_batch):
                    sub_images = self.model(images[start_idx:start_idx + per_batch])
                    t.append(sub_images.cpu())
                    # Calculate the number of images processed in this batch
                    batch_count = sub_images.shape[0]
                    # Update the progress bar by the number of images processed in this batch
                    pbar.update(batch_count)
            self.model.cpu()
            t = torch.cat(t, dim=0).permute(0, 2, 3, 1).cpu().to(torch.float32)

            return (t,)


    NODE_CLASS_MAPPINGS = {
        "APISR_upscale": APISR_upscale,
    }

    NODE_DISPLAY_NAME_MAPPINGS = {
        "APISR_upscale": "APISR Upscale",
    }
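Two small pieces of arithmetic in `upscale` are worth isolating: rounding the image size down to a multiple of 8, and walking through the batch in chunks of `per_batch`. The following is a plain-Python sketch of both; the helper names `round_down_to_multiple` and `batch_slices` are hypothetical and do not appear in the plugin, and the sample sizes are arbitrary.

```python
def round_down_to_multiple(value, multiple=8):
    """Same arithmetic as `H = (H // 8) * 8` in the node."""
    return (value // multiple) * multiple


def batch_slices(total, per_batch):
    """Yield (start, stop) index pairs covering range(total) in chunks."""
    for start in range(0, total, per_batch):
        yield start, min(start + per_batch, total)


# A 1280x723 frame is resized to 1280x720 before it reaches the model.
height, width = round_down_to_multiple(723), round_down_to_multiple(1280)

# 40 images with per_batch=16 are processed as 16 + 16 + 8.
slices = list(batch_slices(total=40, per_batch=16))
print(height, width, slices)  # 720 1280 [(0, 16), (16, 32), (32, 40)]
```

The last chunk is simply shorter than the others, which is why the node reads the processed count from `sub_images.shape[0]` rather than assuming `per_batch` when it updates the progress bar.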