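Compressor fault identification in Python using machine learning (multilayer perceptron, decision tree, random forest, Gaussian process, AdaBoost, naive Bayes). The faults covered include outlet valve leakage, non-return valve leakage, bearing damage, flywheel damage, piston damage, and belt damage.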

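Air compressors are classic power equipment, often called the "lifeblood air source" of industrial production, and are widely used in pharmaceutical manufacturing, blasting in coal mining, mine ventilation, pneumatic testing, and many other fields. An air compressor uses the mechanical energy of a rotating motor to compress gas so that the gas stores a large amount of energy. The compressed gas is then used for tasks such as blasting and ventilation.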

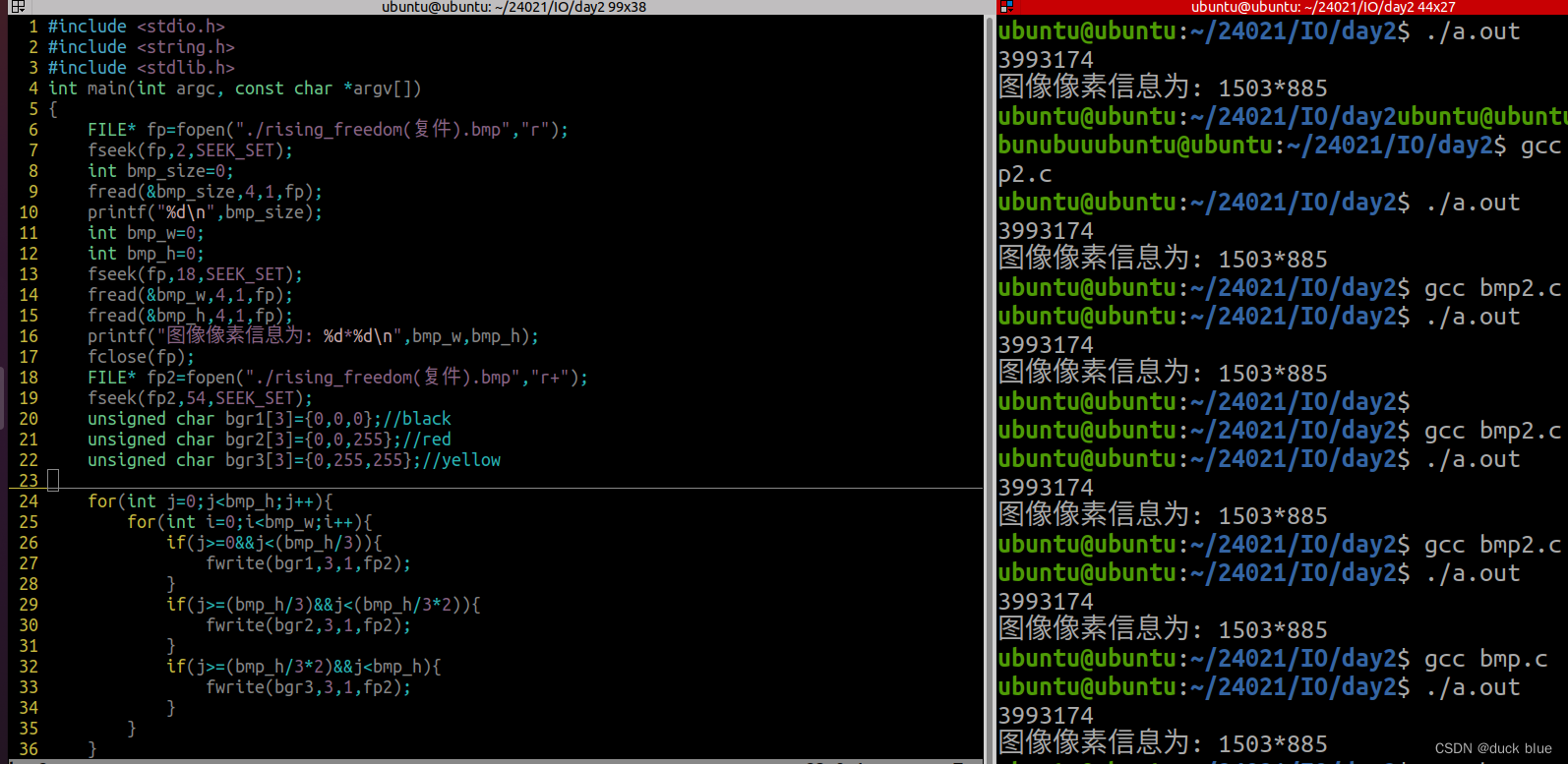

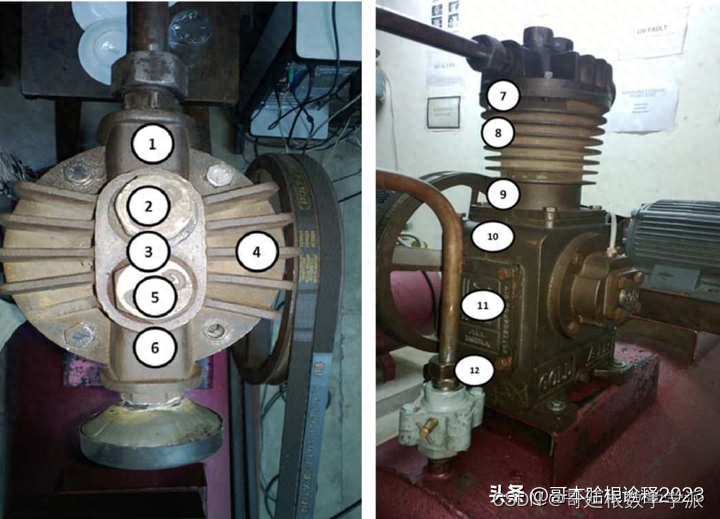

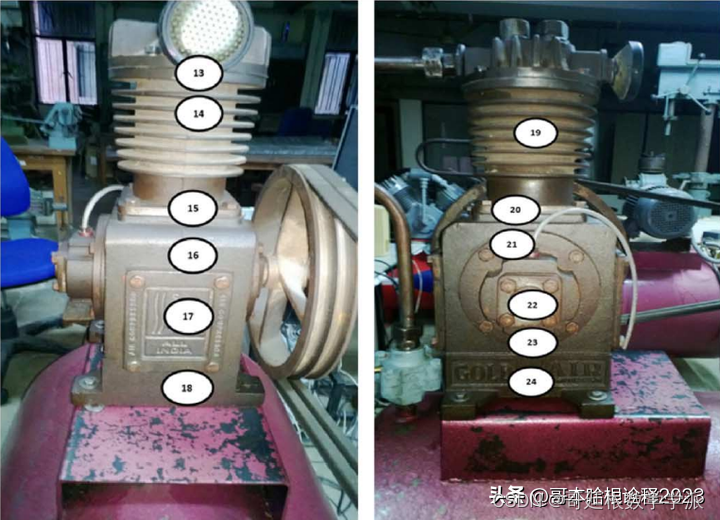

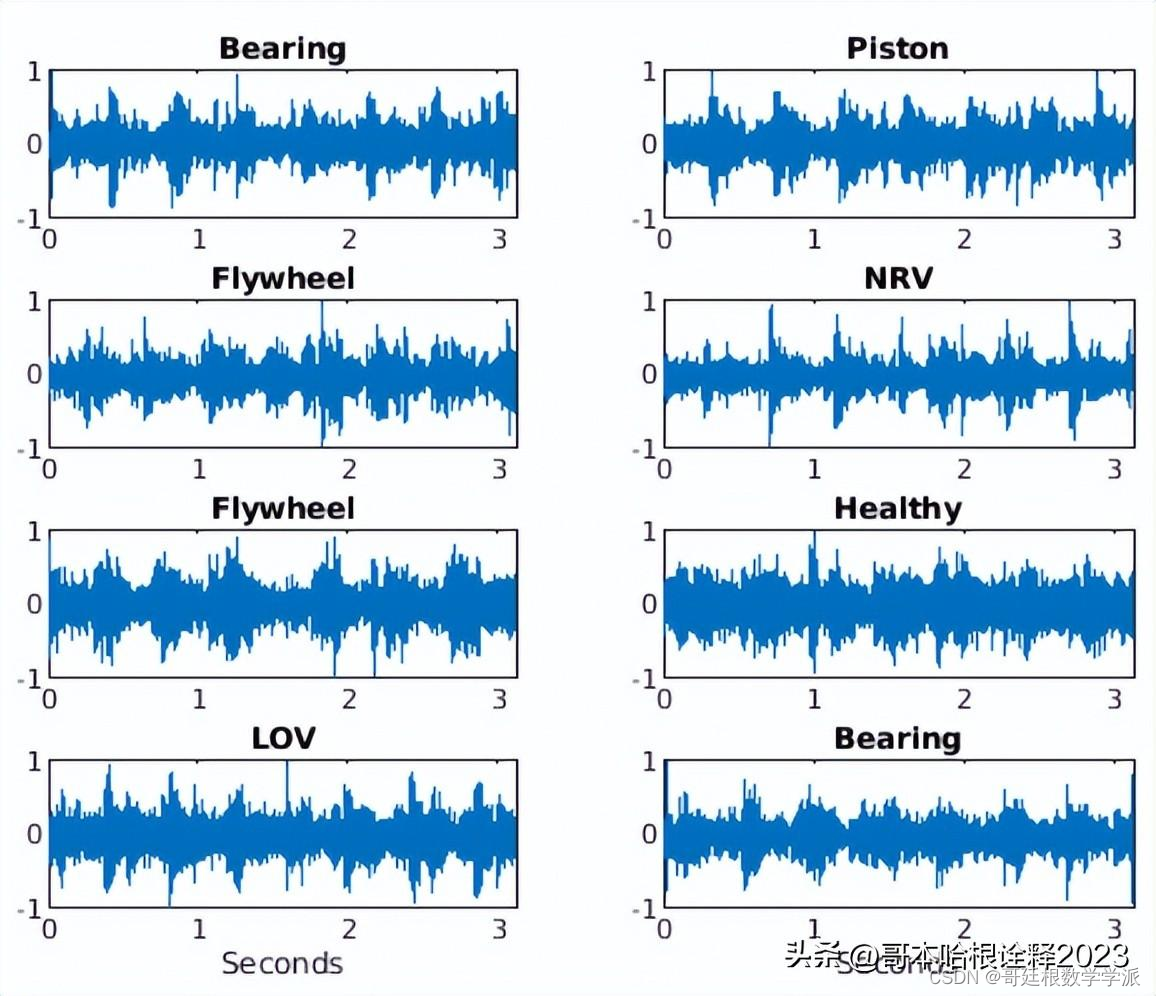

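This project uses acoustic signals to detect seven air-compressor faults: Leakage Outlet Valve (LOV), Leakage Inlet Valve (LIV), Non-Return Valve (NRV) leakage, Bearing damage, Flywheel damage, Piston damage, and Riderbelt damage, plus the Healthy state. After feature extraction, various machine learning algorithms classify the compressor faults. The test rig is shown below: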

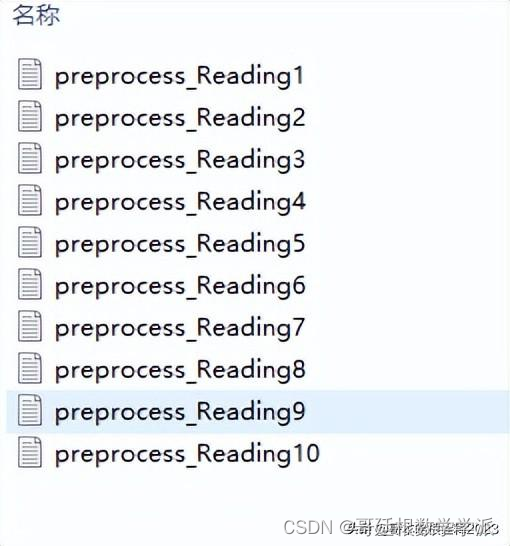

The measured acoustic signal, taking Flywheel as an example:

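Now to the main topic. The code consists of many modules, so to keep this post short only the main script is listed. Dimensionality reduction of the extracted features is optional, and so is parameter optimization of the classifiers (particle swarm optimization, pip install pyswarm). The code carries the necessary comments and should be easy to follow.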

import time

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

MaxExpNo=10

counter=-1

# Labels

labels=['Bearing','Flywheel','Healthy','LIV','LOV','NRV','Piston','Riderbelt']

import PrimaryStatFeatures  # feature-extraction module

import FFT_Module  # FFT-based features

# Features to extract

data_columns_PrimaryStatFeatures=['Mean','Min','Max','StdDv','RMS','Skewness','Kurtosis','CrestFactor','ShapeFactor']

data_columns_Target=['Fault']

Faults={labels[0]:int(0),labels[1]:int(1),labels[2]:int(2),labels[3]:int(3),labels[4]:int(4),labels[5]:int(5),labels[6]:int(6),labels[7]:int(7)}

frames=[]  # one single-row DataFrame per recording
for label in labels:
    for ExpNo in range(1,MaxExpNo+1):
        counter+=1
        file='Data\\'+label+'\\preprocess_Reading'+str(ExpNo)+'.txt'
        X=np.loadtxt(file,delimiter=',')
        if (counter%10==0): print('Loading files: ',str(counter/(len(labels)*MaxExpNo)*100),'% completed')
        StatFeatures=PrimaryStatFeatures.PrimaryFeatureExtractor(X)
        FFT_Features,data_columns_FFT_Features=FFT_Module.FFT_BasedFeatures(X)
        data_columns=data_columns_PrimaryStatFeatures+data_columns_FFT_Features+data_columns_Target
        # Append the FFT features and the integer fault label to the statistical features
        StatFeatures[0].extend(FFT_Features)
        StatFeatures[0].extend([Faults[label]])
        df_temp=pd.DataFrame(StatFeatures,index=[counter],columns=data_columns)
        frames.append(df_temp)
# DataFrame.append was removed in pandas 2.0, so the rows are concatenated once here
data=pd.concat(frames)
input_data=data.drop(columns=['Fault'])
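The `PrimaryStatFeatures` module itself is not listed in this post. As a rough illustration only, the nine time-domain features named in `data_columns_PrimaryStatFeatures` could be computed as in the sketch below. The function name `primary_stat_features` and the exact definitions (population standard deviation, non-excess kurtosis, crest factor as peak over RMS, shape factor as RMS over mean absolute value) are assumptions and may differ from the author's module.

```python
import numpy as np

def primary_stat_features(x):
    """Hypothetical stand-in for the unlisted feature extractor."""
    x = np.asarray(x, dtype=float)
    mean = x.mean()
    std = x.std()                      # population standard deviation
    rms = np.sqrt(np.mean(x ** 2))
    centered = x - mean
    return {
        'Mean': mean,
        'Min': x.min(),
        'Max': x.max(),
        'StdDv': std,
        'RMS': rms,
        'Skewness': np.mean(centered ** 3) / std ** 3,
        'Kurtosis': np.mean(centered ** 4) / std ** 4,   # non-excess kurtosis
        'CrestFactor': np.max(np.abs(x)) / rms,
        'ShapeFactor': rms / np.mean(np.abs(x)),
    }

# Example on a synthetic 5 Hz sine wave (not the compressor recordings)
t = np.linspace(0.0, 1.0, 1000, endpoint=False)
features = primary_stat_features(np.sin(2 * np.pi * 5 * t))
for name, value in features.items():
    print(name, value)
```

Returning a dictionary keyed by the column names keeps the feature order explicit. The main script above instead expects a list of lists, so an adapter would be needed to plug this sketch in.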

# Feature scaling

# Reference: http://benalexkeen.com/feature-scaling-with-scikit-learn/

from sklearn import preprocessing

normalization_status='RobustScaler'

''' Choices:
1. Normalization
2. StandardScaler
3. MinMaxScaler
4. RobustScaler
5. Normalizer
6. WithoutNormalization '''

input_data_columns=data_columns_PrimaryStatFeatures+data_columns_FFT_Features

if (normalization_status=='Normalization'):
    data_array=preprocessing.normalize(input_data,norm='l2',axis=0)
    input_data=pd.DataFrame(data_array,columns=input_data_columns)
elif (normalization_status=='StandardScaler'):
    scaler = preprocessing.StandardScaler()
    scaled_df = scaler.fit_transform(input_data)
    input_data = pd.DataFrame(scaled_df, columns=input_data_columns)
elif (normalization_status=='MinMaxScaler'):
    scaler = preprocessing.MinMaxScaler()
    scaled_df = scaler.fit_transform(input_data)
    input_data = pd.DataFrame(scaled_df, columns=input_data_columns)
elif (normalization_status=='RobustScaler'):
    scaler = preprocessing.RobustScaler()
    scaled_df = scaler.fit_transform(input_data)
    input_data = pd.DataFrame(scaled_df, columns=input_data_columns)
elif (normalization_status=='Normalizer'):
    scaler = preprocessing.Normalizer()
    scaled_df = scaler.fit_transform(input_data)
    input_data = pd.DataFrame(scaled_df, columns=input_data_columns)
elif (normalization_status=='WithoutNormalization'):
    print ('No normalization is required')

target_data=pd.DataFrame(data['Fault'],columns=['Fault'],dtype=int)

DimReductionStatus=False
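The script selects `RobustScaler` by default. The sketch below illustrates one possible reason for such a choice: `RobustScaler` centers on the median and scales by the interquartile range, so a single extreme value distorts the remaining samples less than with `StandardScaler`. The five-value feature column is made up for illustration and is not taken from the compressor data.

```python
import numpy as np
from sklearn.preprocessing import RobustScaler, StandardScaler

# Hypothetical single feature column with one outlier (last value)
feature = np.array([[1.0], [2.0], [3.0], [4.0], [100.0]])

robust = RobustScaler().fit_transform(feature).ravel()
standard = StandardScaler().fit_transform(feature).ravel()

# Spread of the four ordinary samples after each scaling
robust_spread = robust[:4].max() - robust[:4].min()
standard_spread = standard[:4].max() - standard[:4].min()

print('RobustScaler  :', robust)
print('StandardScaler:', standard)
print('Inlier spread (robust, standard):', robust_spread, standard_spread)
```

With the mean-and-variance scaler, the outlier inflates the standard deviation and squeezes the ordinary samples into a narrow band. The median-and-IQR scaler keeps them spread out.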

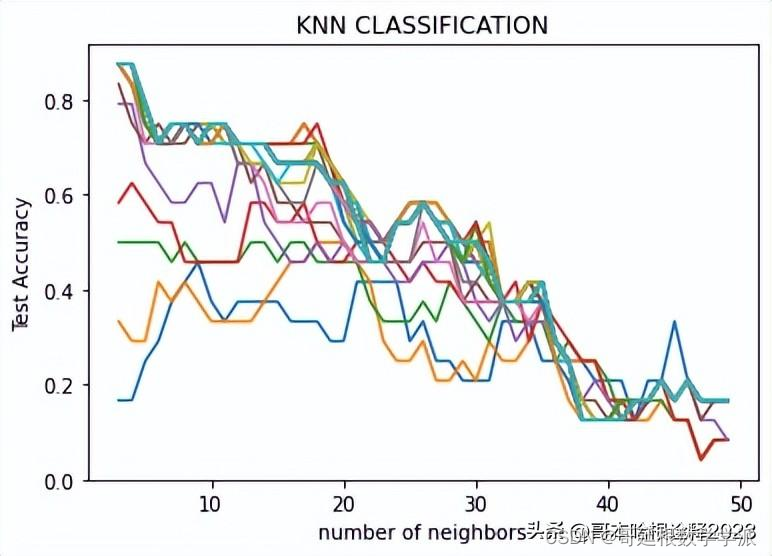

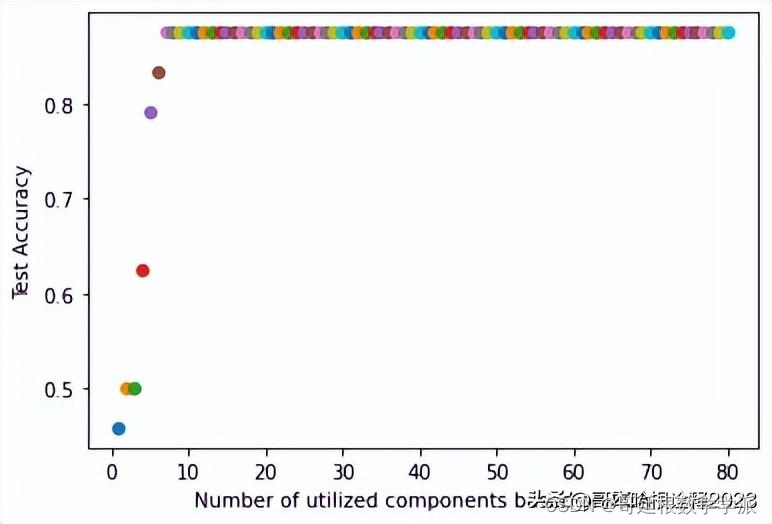

if (DimReductionStatus==True):
    # PCA cannot use more components than min(n_samples, n_features), so the upper bound is capped
    for nComponents in range(1,min(110,min(input_data.shape)+1)):
        # Dimensionality reduction with principal component analysis
        from sklearn import decomposition
        pca = decomposition.PCA(n_components=nComponents)
        pca.fit(input_data)
        input_data_reduced = pca.transform(input_data)
        # Split into training and test sets
        from sklearn.model_selection import train_test_split
        x_train,x_test,y_train,y_test=train_test_split(input_data_reduced,target_data,test_size=0.3,random_state=42,stratify=target_data)
        # Train with KNN (k-nearest neighbors)
        import KNN_Classifier
        test_accuracy_max=KNN_Classifier.KNNClassifier(x_train,x_test,y_train,y_test)
        plt.figure(10)
        plt.scatter(nComponents,test_accuracy_max)
        plt.xlabel('Number of utilized components based on PCA')
        plt.ylabel('Test Accuracy')
        # Train with SVC (support vector classifier)
        import SVC_Classifier
        test_accuracy_max=SVC_Classifier.SVCClassifier(x_train,x_test,y_train,y_test)
        plt.figure(11)
        plt.scatter(nComponents,test_accuracy_max)
else:
    from sklearn.model_selection import train_test_split
    x_train,x_test,y_train,y_test=train_test_split(input_data,target_data,test_size=0.3,random_state=42,stratify=target_data)
    # Train with a decision tree
    import DT_Classifier
    DT_Classifier.DTClassifier(x_train,x_test,y_train,y_test)

from sklearn.neural_network import MLPClassifier
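With 8 classes and `MaxExpNo=10` recordings per class, the feature table has 80 rows, so `test_size=0.3` with `stratify` yields 56 training and 24 test samples. The sketch below reproduces just that split on placeholder data to show the per-class counts. The random feature matrix is an assumption standing in for the real features.

```python
import numpy as np
from sklearn.model_selection import train_test_split

n_classes, n_per_class = 8, 10
y = np.repeat(np.arange(n_classes), n_per_class)          # 80 integer labels
X = np.random.default_rng(0).normal(size=(y.size, 5))     # placeholder features

x_train, x_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42, stratify=y)

train_counts = np.bincount(y_train)   # samples per class in the training set
test_counts = np.bincount(y_test)     # samples per class in the test set
print('train:', len(y_train), train_counts)
print('test :', len(y_test), test_counts)
```

A test set of 24 samples also explains the granularity of the accuracies reported later: each misclassified recording changes the test accuracy by 1/24, so 0.875 corresponds to 21 of 24 correct.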

from sklearn.neighbors import KNeighborsClassifier

from sklearn.svm import SVC

from sklearn.gaussian_process import GaussianProcessClassifier

from sklearn.gaussian_process.kernels import RBF

from sklearn.tree import DecisionTreeClassifier

from sklearn.ensemble import RandomForestClassifier, AdaBoostClassifier

from sklearn.naive_bayes import GaussianNB

from sklearn.discriminant_analysis import QuadraticDiscriminantAnalysis

# Write the best classifier parameters to a file

file1 = open('Optimized Parameters for Classifiers.txt','w')

print('\nThis file contains the output optimized parameters for different classifiers\n\n\n',file=file1)

# Classifier parameter optimization

#*************************************

SVMOptStatus=True

KNNOptStatus=True

MLPOptStatus=True

DTOptStatus=True

import CLFOptimizer

#pip install pyswarm

# Optimize the SVC parameters

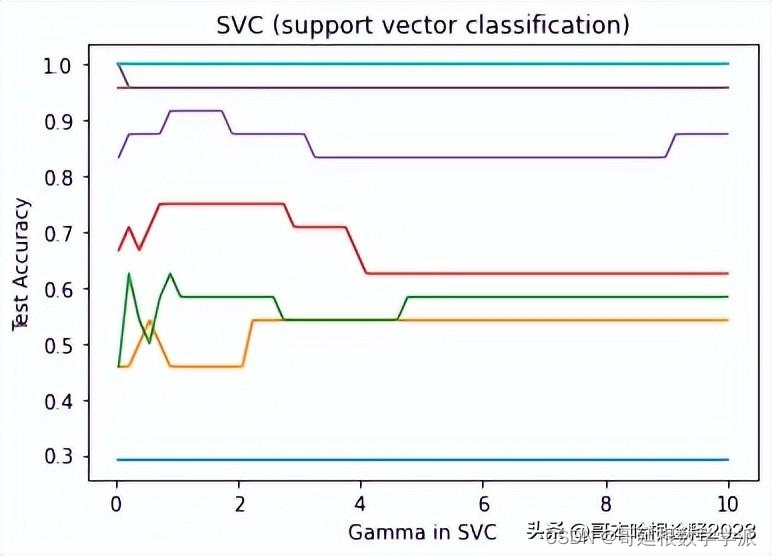

if (SVMOptStatus==True):
    SVM_kernels = ['linear','poly','rbf','sigmoid']
    for KernelType in SVM_kernels:
        print('\n\nstage: Optimizing SVC:',KernelType)
        SVCParams_opt,SVCAccuracy_opt=CLFOptimizer.SVCOPT(KernelType,x_train,x_test,y_train,y_test)
        print('\nClassifier: SVC-',KernelType,', Gamma=',SVCParams_opt[0],', PenaltyPrameter=',SVCParams_opt[1],', Test Acuuracy=',SVCAccuracy_opt,file=file1)

# Optimize the KNN parameters
if (KNNOptStatus==True):
    print('\n\nstage: Optimizing KNN')
    KNNParams_opt,KNNAccuracy_opt=CLFOptimizer.KNNOPT(x_train,x_test,y_train,y_test)
    print('\nClassifier: KNN, n_neighbors=',KNNParams_opt,', Test Acuuracy=',KNNAccuracy_opt,file=file1)

# Optimize the MLP classifier
if (MLPOptStatus==True):
    print('\n\nstage: Optimizing MLP')
    MLPParams_opt,MLPAccuracy_opt=CLFOptimizer.MLPOPT(x_train,x_test,y_train,y_test)
    print('\nClassifier: MLP, hidden_layer_sizes=(',MLPParams_opt[0],',',MLPParams_opt[1],',',MLPParams_opt[2],'), Test Acuuracy=',MLPAccuracy_opt,file=file1)

# Optimize the decision tree classifier
if (DTOptStatus==True):
    print('\n\nstage: Optimizing Decision Tree')
    DTParams_opt,DTAccuracy_opt=CLFOptimizer.DTOPT(x_train,x_test,y_train,y_test)
    print('\nClassifier: Decision Tree, max_depth=',DTParams_opt[0],', min_samples_split=',DTParams_opt[1],', min_samples_leaf=',DTParams_opt[2],', Test Acuuracy=',DTAccuracy_opt,file=file1)

file1.close()

# Build the classifier names and their configurations
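The `CLFOptimizer` module is not listed, and the post only states that it relies on particle swarm optimization through pyswarm. To show the idea without that dependency, the sketch below is a hand-written, minimal particle swarm optimizer in NumPy applied to a toy two-parameter error surface. The function `pso_minimize`, the inertia and acceleration constants, and the quadratic `toy_error` are all assumptions for illustration. In the real script the objective would presumably be something like one minus the classifier accuracy for a given (gamma, C) pair.

```python
import numpy as np

def pso_minimize(objective, lower, upper, n_particles=20, n_iter=100,
                 w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimal global-best PSO over a box-constrained search space."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    dim = lower.size
    pos = rng.uniform(lower, upper, size=(n_particles, dim))
    vel = np.zeros_like(pos)
    pbest_pos = pos.copy()                                  # personal bests
    pbest_val = np.array([objective(p) for p in pos])
    g = np.argmin(pbest_val)
    gbest_pos, gbest_val = pbest_pos[g].copy(), pbest_val[g]   # swarm best
    for _ in range(n_iter):
        r1 = rng.random((n_particles, dim))
        r2 = rng.random((n_particles, dim))
        # Inertia + pull toward personal best + pull toward swarm best
        vel = w * vel + c1 * r1 * (pbest_pos - pos) + c2 * r2 * (gbest_pos - pos)
        pos = np.clip(pos + vel, lower, upper)              # stay inside the bounds
        vals = np.array([objective(p) for p in pos])
        improved = vals < pbest_val
        pbest_pos[improved] = pos[improved]
        pbest_val[improved] = vals[improved]
        g = np.argmin(pbest_val)
        if pbest_val[g] < gbest_val:
            gbest_pos, gbest_val = pbest_pos[g].copy(), pbest_val[g]
    return gbest_pos, gbest_val

def toy_error(params):
    # Made-up error surface with its minimum at gamma=1.5, C=3.0
    gamma, c = params
    return (gamma - 1.5) ** 2 + (c - 3.0) ** 2

best_params, best_error = pso_minimize(toy_error, lower=[0.001, 0.1], upper=[10.0, 10.0])
print('best (gamma, C):', best_params, 'error:', best_error)
```

Swapping `toy_error` for a function that trains a classifier and returns its error turns this into a hyperparameter search. Scoring candidates on a validation split or with cross-validation, rather than on the final test set, keeps the reported test accuracy unbiased.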

classifiers=[]

CLFnames=[]

CLFnames= CLFnames + ["SVC-linear","K-Nearest Neighbors","Multi-Layer Perceptron","Decision Tree", "Random Forest", "Gaussian Process", "AdaBoost","Naive Bayes", "QDA"]

#classifiers=classifiers+ [

# SVC(kernel='linear',gamma=1.785,C=3.463),

# KNeighborsClassifier(n_neighbors=3),

# MLPClassifier(hidden_layer_sizes=(28,34,80,),alpha=1),

# DecisionTreeClassifier(),

# RandomForestClassifier(),

# GaussianProcessClassifier(1.0 * RBF(1.0)),

# AdaBoostClassifier(),

# GaussianNB(),

# QuadraticDiscriminantAnalysis()]

classifiers=classifiers+ [SVC(),KNeighborsClassifier(),MLPClassifier(),DecisionTreeClassifier(),RandomForestClassifier(),GaussianProcessClassifier(),AdaBoostClassifier(DecisionTreeClassifier(),n_estimators=1000,learning_rate=1),GaussianNB(),QuadraticDiscriminantAnalysis()]

# Write the classification results to a file

f = open('ClassificationResults.txt','w')

print('\nThis file contains an overall comparison of different classifiers performance\n\n\n',file=f)

import ClassificationModule

ClassificationModule.Classifiers(CLFnames,classifiers,x_train,x_test,y_train,y_test)
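`ClassificationModule` is likewise not listed. Judging only from its call signature and from the output format shown in the results, it fits each classifier and reports training and prediction accuracy. A minimal sketch of such a loop follows. The function name `compare_classifiers`, the synthetic dataset from `make_classification`, and the reduced set of three classifiers are assumptions chosen to keep the example small.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

def compare_classifiers(names, classifiers, x_train, x_test, y_train, y_test):
    """Fit each classifier and collect (training accuracy, test accuracy)."""
    results = {}
    for name, clf in zip(names, classifiers):
        clf.fit(x_train, y_train)
        train_acc = clf.score(x_train, y_train)
        test_acc = clf.score(x_test, y_test)
        print('Training accuracy for', name, 'is:', train_acc,
              'and Prediction accuracy is:', test_acc)
        results[name] = (train_acc, test_acc)
    return results

# Synthetic 3-class data standing in for the compressor features
X, y = make_classification(n_samples=200, n_features=10, n_informative=5,
                           n_classes=3, random_state=0)
x_train, x_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42, stratify=y)

names = ['K-Nearest Neighbors', 'Decision Tree', 'Naive Bayes']
models = [KNeighborsClassifier(), DecisionTreeClassifier(random_state=0), GaussianNB()]
results = compare_classifiers(names, models, x_train, x_test, y_train, y_test)
```

Reporting training accuracy next to test accuracy makes overfitting visible: a model that scores 1.0 on the training set but much lower on the test set has memorized the training recordings.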

Optimized classifier parameters

This file contains the output optimized parameters for different classifiers

Classifier: SVC- linear , Gamma= 1.61614372336136 , PenaltyPrameter= 6.455613795161285 , Test Acuuracy= 1.0

Classifier: SVC- poly , Gamma= 0.02472261261696812 , PenaltyPrameter= 8.084311259878596 , Test Acuuracy= 0.875

Classifier: SVC- rbf , Gamma= 0.003211151769260453 , PenaltyPrameter= 3.2794598503610994 , Test Acuuracy= 0.9583333333333334

Classifier: SVC- sigmoid , Gamma= 0.001 , PenaltyPrameter= 9.44412249946835 , Test Acuuracy= 1.0

Classifier: KNN, n_neighbors= 3 , Test Acuuracy= 0.875

Classifier: MLP, hidden_layer_sizes=( 19.0 , 97.0 , 14.0 ), Test Acuuracy= 1.0

Classifier: Decision Tree, max_depth= 2121.0 , min_samples_split= 6.0 , min_samples_leaf= 4.0 , Test Acuuracy= 1.0

Classification results

Training accuracy for SVC-linear is: 0.9642857142857143 and Prediction accuracy is: 0.875

Training accuracy for K-Nearest Neighbors is: 0.8928571428571429 and Prediction accuracy is: 0.7916666666666666

Training accuracy for Multi-Layer Perceptron is: 1.0 and Prediction accuracy is: 0.9583333333333334

Training accuracy for Decision Tree is: 1.0 and Prediction accuracy is: 0.7916666666666666

Training accuracy for Random Forest is: 1.0 and Prediction accuracy is: 0.9583333333333334

Training accuracy for Gaussian Process is: 1.0 and Prediction accuracy is: 0.8333333333333334

Training accuracy for AdaBoost is: 1.0 and Prediction accuracy is: 0.9583333333333334

Training accuracy for Naive Bayes is: 1.0 and Prediction accuracy is: 0.9166666666666666

Training accuracy for QDA is: 1.0 and Prediction accuracy is: 0.3333333333333333

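The complete code is available through Zhihu academic consulting:

Compressor fault identification in Python using machine learning

The author holds a doctorate in engineering and serves as a reviewer for Mechanical System and Signal Processing, as an outstanding reviewer for Proceedings of the CSEE, and as a reviewer for EI-indexed journals including Control and Decision, Systems Engineering and Electronics, Power System Protection and Control, and Journal of Astronautics.

Areas of expertise: modern signal processing, machine learning, deep learning, digital twins, time series analysis, equipment defect detection, equipment anomaly detection, and intelligent equipment fault diagnosis and prognostics and health management (PHM).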