PyTorch Tutorial

- 1.Installation

- 2.Tensor Basics

- 3.Gradient Calculation With Autograd

- 4.Back propagation

- 5.6 Gradient Descent with Autograd and Back propagation & Train Pipeline Model, Loss, and Optimizer

- Step 1: Everything by hand

- Step 2: Gradient computation with Autograd

- Step 3: Loss and optimizer from PyTorch

- Step 4: Model built with PyTorch

- 7.Linear Regression

- 8.Logistic Regression

- 9.Dataset and DataLoader

- 10.Dataset Transforms

- 11.Softmax and Cross Entropy

- Softmax

- Cross-Entropy

- Binary Classification

- Multiclass Classification

- 12.Activation Functions

- 13.Feed-Forward Neural Network

- 14.Convolutional Neural Network(CNN)

- 15.Transfer Learning

- 16.How To Use The TensorBoard

- 17.Saving and Loading Models

- 18.Create & Deploy A Deep Learning App - PyTorch Model Deployment With Flask & Heroku

1.Installation

PyTorch official site: Installation

- On Linux or Windows, GPU support requires a GPU, and CUDA must be installed first.

conda create -n pytorch # create a conda environment named pytorch

conda activate pytorch # activate the conda environment named pytorch

conda install pytorch::pytorch torchvision torchaudio -c pytorch

Test:

python

--------------

>>> import torch

>>> x = torch.rand(3)

>>> print(x)

>>> torch.cuda.is_available() # check whether CUDA is available

2.Tensor Basics

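- If a tensor lives on the CPU, the tensor and the np.array converted from it share the same memory, so modifying one also modifies the other.

# if a GPU is available
if torch.cuda.is_available():
    device = torch.device('cuda')
    x = torch.ones(5, device=device)
    y = torch.ones(5)
    y = y.to(device)
    z = x + y
    # z.numpy()  # raises an error: NumPy only handles CPU tensors, so a GPU tensor cannot be converted directly
    z = z.to("cpu")  # so the tensor must first be moved to the CPU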
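The memory sharing between a CPU tensor and its NumPy array mentioned above can be checked directly. This is a minimal sketch; the variable names are chosen for illustration, and `clone()` is used here as one way to get an independent copy.

```python
import torch

a = torch.ones(5)        # CPU tensor
b = a.numpy()            # NumPy array backed by the same memory
a.add_(1)                # in-place update of the tensor
print(b)                 # the array reflects the change

c = a.clone().numpy()    # clone() first to get an independent copy
a.add_(1)
print(c)                 # not affected by the second update
```

If the array must stay untouched by later in-place tensor operations, copying first (as with `clone()` here) avoids the aliasing.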

3.Gradient Calculation With Autograd

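- If z is not a scalar, z.backward() needs a gradient argument: a vector with the same shape as z.

x = torch.randn(3, requires_grad=True) # once we perform an operation on this tensor, PyTorch builds a computation graph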

print(x)

y = x+2

print(y)

z = y*y*2

print(z)

v = torch.tensor([0.1, 1.0, 0.001], dtype=torch.float32)

z.backward(v) # dz/dx; z is not a scalar, so a gradient vector must be passed in, see https://blog.csdn.net/deephub/article/details/115438881
# in many cases the final result is a scalar, so no argument is needed

print(x.grad)
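What `z.backward(v)` computes is a vector-Jacobian product. The sketch below repeats the computation above and compares `x.grad` with the hand-derived result; because `z` is element-wise in `x`, the Jacobian is diagonal with entries `4*(x+2)`. The name `expected` is introduced only for this check.

```python
import torch

x = torch.randn(3, requires_grad=True)
z = 2 * (x + 2) ** 2                 # same function as above: z = y*y*2 with y = x+2
v = torch.tensor([0.1, 1.0, 0.001], dtype=torch.float32)

z.backward(v)                        # computes v^T J, where J = dz/dx

# z is element-wise, so J is diagonal and v^T J = v * 4*(x+2)
expected = v * 4 * (x.detach() + 2)
print(torch.allclose(x.grad, expected))
```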

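- How to stop PyTorch from tracking a tensor for gradient computation (that is, from attaching a grad_fn). For example, when we update the weights inside the training loop, no gradient computation is needed.

# Option 1:
x = torch.randn(3, requires_grad=True)
print(x)
x.requires_grad_(False)
print(x)

# Option 2:
x = torch.randn(3, requires_grad=True)
print(x)
y = x.detach() # creates a new tensor that does not require gradients
print(y)

# Option 3:
x = torch.randn(3, requires_grad=True)
print(x)
with torch.no_grad():
    y = x + 2
    print(y)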

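- Every call to backward() accumulates the gradients into the tensor's .grad attribute, so be careful.

weights = torch.ones(4, requires_grad=True)

for epoch in range(3):
    model_output = (weights*3).sum()
    model_output.backward()
    print(weights.grad)
    weights.grad.zero_() # the gradients must be reset to zero, otherwise they accumulate

'''
In practice, with an optimizer, the gradients are zeroed like this
(the optimizer expects an iterable of parameters, hence [weights]):

weights = torch.ones(4, requires_grad=True)
optimizer = torch.optim.SGD([weights], lr=0.01)
optimizer.step()
optimizer.zero_grad()
'''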
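To see the accumulation itself, the loop above can be run without the `zero_()` call. This is a small sketch with an illustrative `history` list that records `.grad` after each backward pass.

```python
import torch

weights = torch.ones(4, requires_grad=True)
history = []
for step in range(3):
    out = (weights * 3).sum()
    out.backward()                        # adds 3 to every entry of weights.grad
    history.append(weights.grad.clone())  # no zero_() here, on purpose
print(history)
```

Each backward pass contributes a gradient of 3 per entry, so the recorded values grow from step to step instead of staying at 3.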

4.Back propagation

x = torch.tensor(1.0)

y = torch.tensor(2.0)

w = torch.tensor(1.0, requires_grad=True)

# forward pass and compute the loss

y_hat = w * x

loss = (y_hat - y)**2

print(loss)

# backward pass

loss.backward()

print(w.grad)
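The value that `loss.backward()` stores in `w.grad` can be reproduced by applying the chain rule by hand. The sketch below uses plain Python floats and the same numbers as above; the intermediate names (`s`, `dloss_ds`, and so on) are introduced only for illustration.

```python
x, y, w = 1.0, 2.0, 1.0

# forward pass
y_hat = w * x            # prediction
s = y_hat - y            # residual
loss = s ** 2            # squared error

# backward pass: local gradients multiplied along the chain
dloss_ds = 2 * s         # d(s^2)/ds
ds_dyhat = 1.0           # d(y_hat - y)/d(y_hat)
dyhat_dw = x             # d(w*x)/dw
dloss_dw = dloss_ds * ds_dyhat * dyhat_dw
print(loss, dloss_dw)
```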

5.6 Gradient Descent with Autograd and Back propagation & Train Pipeline Model, Loss, and Optimizer

The general training pipeline in PyTorch:

- Design the model (input size, output size, forward pass)

- Construct the loss and the optimizer

- Run the training loop

3.1 forward pass: compute prediction

3.2 backward pass: gradients

3.3 update weights

Step 1: Everything by hand

import numpy as np

# f = w * x
# f = 2 * x
X = np.array([1, 2, 3, 4], dtype=np.float32)
Y = np.array([2, 4, 6, 8], dtype=np.float32)

w = 0.0

# model prediction
def forward(x):
    return w * x

# loss = MSE
def loss(y, y_predicted):
    return ((y_predicted-y)**2).mean()

# gradient
# MSE = 1/N * sum((w*x - y)**2)
# dJ/dw = 1/N * sum(2*x * (w*x - y))
def gradient(x, y, y_predicted):
    # element-wise product, then the mean over the N samples
    # (np.dot(...).mean() would sum without dividing by N)
    return (2*x * (y_predicted-y)).mean()

print(f'Prediction before training: f(5) = {forward(5):.3f}')

# Training
learning_rate = 0.01
n_iters = 20

for epoch in range(n_iters):
    # prediction = forward pass
    y_pred = forward(X)
    # loss
    l = loss(Y, y_pred)
    # gradients
    dw = gradient(X, Y, y_pred)
    # update weights
    w -= learning_rate * dw
    if epoch % 2 == 0:
        print(f'epoch {epoch+1}: w = {w:.3f}, loss = {l:.8f}')

print(f'Prediction after training: f(5) = {forward(5):.3f}')

Step 2: Gradient computation with Autograd

import torch

# f = w * x
# f = 2 * x
X = torch.tensor([1, 2, 3, 4], dtype=torch.float32)
Y = torch.tensor([2, 4, 6, 8], dtype=torch.float32)

w = torch.tensor(0.0, dtype=torch.float32, requires_grad=True)

# model prediction
def forward(x):
    return w * x

# loss = MSE
def loss(y, y_predicted):
    return ((y_predicted-y)**2).mean()

print(f'Prediction before training: f(5) = {forward(5):.3f}')

# Training
learning_rate = 0.01
n_iters = 100

for epoch in range(n_iters):
    # prediction = forward pass
    y_pred = forward(X)
    # loss
    l = loss(Y, y_pred)
    # gradients = backward pass
    l.backward() # dl/dw: autograd computes the exact analytic gradient (up to floating-point error)
    # update weights
    with torch.no_grad():
        w -= learning_rate * w.grad
    # zero gradients
    w.grad.zero_()
    if epoch % 10 == 0:
        print(f'epoch {epoch+1}: w = {w:.3f}, loss = {l:.8f}')

print(f'Prediction after training: f(5) = {forward(5):.3f}')
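As a sanity check on the backward pass, the gradient from autograd can be compared with the analytic MSE gradient dJ/dw = 1/N * sum(2*x*(w*x - y)). The sketch below does this once at the initial weight w = 0; the name `analytic` is introduced only for the comparison.

```python
import torch

X = torch.tensor([1, 2, 3, 4], dtype=torch.float32)
Y = torch.tensor([2, 4, 6, 8], dtype=torch.float32)
w = torch.tensor(0.0, requires_grad=True)

loss = ((w * X - Y) ** 2).mean()
loss.backward()                       # autograd fills w.grad

# analytic gradient of the MSE with respect to w
analytic = (2 * X * (w.detach() * X - Y)).mean()
print(w.grad.item(), analytic.item())
```

At w = 0 each term is 2*x*(-2x) = -4x², so both values equal the mean of (-4, -16, -36, -64), which is -30.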

Step 3: Loss and optimizer from PyTorch

import torch
import torch.nn as nn

# f = w * x
# f = 2 * x
X = torch.tensor([1, 2, 3, 4], dtype=torch.float32)
Y = torch.tensor([2, 4, 6, 8], dtype=torch.float32)

w = torch.tensor(0.0, dtype=torch.float32, requires_grad=True)

# model prediction
def forward(x):
    return w * x

print(f'Prediction before training: f(5) = {forward(5):.3f}')

# Training
learning_rate = 0.01
n_iters = 100

loss = nn.MSELoss()
optimizer = torch.optim.SGD([w], lr=learning_rate)

for epoch in range(n_iters):
    # prediction = forward pass
    y_pred = forward(X)
    # loss
    l = loss(Y, y_pred)
    # gradients = backward pass
    l.backward() # dl/dw
    # update weights
    optimizer.step()
    # zero gradients
    optimizer.zero_grad()
    if epoch % 10 == 0:
        print(f'epoch {epoch+1}: w = {w:.3f}, loss = {l:.8f}')

print(f'Prediction after training: f(5) = {forward(5):.3f}')

Step 4: Model built with PyTorch

import torch
import torch.nn as nn

# f = w * x
# f = 2 * x
X = torch.tensor([[1], [2], [3], [4]], dtype=torch.float32) # must be a 2-D array: (n_samples, n_features)
Y = torch.tensor([[2], [4], [6], [8]], dtype=torch.float32)

X_test = torch.tensor([5], dtype=torch.float32)

n_samples, n_features = X.shape
print(n_samples, n_features)

input_size = n_features
output_size = n_features

# model = nn.Linear(input_size, output_size) # the simplest linear model, so no custom class is needed; it can also be wrapped in a class as below
class LinearRegression(nn.Module):
    def __init__(self, input_dim, output_dim):
        super(LinearRegression, self).__init__()
        # define layers
        self.lin = nn.Linear(input_dim, output_dim)

    def forward(self, x):
        return self.lin(x)

model = LinearRegression(input_size, output_size)

print(f'Prediction before training: f(5) = {model(X_test).item():.3f}')

# Training
learning_rate = 0.01
n_iters = 100

loss = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

for epoch in range(n_iters):
    # prediction = forward pass
    y_pred = model(X)
    # loss
    l = loss(Y, y_pred)
    # gradients = backward pass
    l.backward() # dl/dw
    # update weights
    optimizer.step()
    # zero gradients
    optimizer.zero_grad()
    if epoch % 10 == 0:
        [w, b] = model.parameters() # w is a 2-D tensor of shape (1, 1)
        print(f'epoch {epoch+1}: w = {w[0][0].item():.3f}, loss = {l:.8f}') # the result depends on the parameter initialization and the optimizer

print(f'Prediction after training: f(5) = {model(X_test).item():.3f}')

7.Linear Regression

import torch

import torch.nn as nn

import numpy as np # for some data conversions

from sklearn import datasets # to generate a regression dataset

import matplotlib.pyplot as plt # plotting

# 0) prepare data
X_numpy, y_numpy = datasets.make_regression(n_samples=100, n_features=1, noise=20, random_state=1) # these arrays are double precision (float64)

X = torch.from_numpy(X_numpy.astype(np.float32))
y = torch.from_numpy(y_numpy.astype(np.float32))
y = y.view(y.shape[0], 1) # reshape the row vector into a column vector

n_samples, n_features = X.shape

# 1) model
input_size = n_features
output_size = 1
model = nn.Linear(input_size, output_size)

# 2) loss and optimizer
learning_rate = 0.01
criterion = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

# 3) training loop
num_epochs = 100
for epoch in range(num_epochs):
    # forward pass and loss
    y_predicted = model(X)
    loss = criterion(y_predicted, y)
    # backward pass
    loss.backward()
    # update
    optimizer.step()
    optimizer.zero_grad()
    if (epoch+1) % 10 == 0:
        print(f'epoch: {epoch+1}, loss={loss.item():.4f}')

# plot

predicted = model(X).detach().numpy()

plt.plot(X_numpy, y_numpy, 'ro') # red dots

plt.plot(X_numpy, predicted, 'b')

plt.show()

8.Logistic Regression

import torch

import torch.nn as nn

import numpy as np

from sklearn import datasets # to load a binary classification dataset

from sklearn.preprocessing import StandardScaler # to scale the features

from sklearn.model_selection import train_test_split # to split training and test data

# 0) prepare data
bc = datasets.load_breast_cancer() # binary classification problem
X, y = bc.data, bc.target

n_samples, n_features = X.shape

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=1234)

# scale
sc = StandardScaler()
X_train = sc.fit_transform(X_train)
X_test = sc.transform(X_test) # reuse the statistics fitted on the training set; do not refit on the test set

X_train = torch.from_numpy(X_train.astype(np.float32))
X_test = torch.from_numpy(X_test.astype(np.float32))
y_train = torch.from_numpy(y_train.astype(np.float32))
y_test = torch.from_numpy(y_test.astype(np.float32))

y_train = y_train.view(y_train.shape[0], 1) # turn the row vector into a column vector
y_test = y_test.view(y_test.shape[0], 1)

# 1) model
# f = wx + b, sigmoid at the end
class LogisticRegression(nn.Module):
    def __init__(self, n_input_features):
        super(LogisticRegression, self).__init__()
        self.linear = nn.Linear(n_input_features, 1)

    def forward(self, x):
        y_predicted = torch.sigmoid(self.linear(x))
        return y_predicted

model = LogisticRegression(n_features)

# 2) loss and optimizer
learning_rate = 0.01
criterion = nn.BCELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

# 3) training loop
num_epochs = 100
for epoch in range(num_epochs):
    # forward pass and loss
    y_predicted = model(X_train)
    loss = criterion(y_predicted, y_train)
    # backward pass
    loss.backward()
    # updates
    optimizer.step()
    # zero gradients
    optimizer.zero_grad()
    if (epoch+1) % 10 == 0:
        print(f'epoch: {epoch+1}, loss = {loss.item():.4f}')

with torch.no_grad():
    y_predicted = model(X_test)
    y_predicted_cls = y_predicted.round()
    acc = y_predicted_cls.eq(y_test).sum() / float(y_test.shape[0])
    print(f'accuracy = {acc:.4f}')

9.Dataset and DataLoader

import torch

import torchvision

from torch.utils.data import Dataset, DataLoader

import numpy as np

import math

'''
epoch = 1 forward and backward pass of ALL training samples
batch_size = number of training samples in one forward & backward pass
number of iterations = number of passes, each pass using [batch_size] number of samples
e.g. 100 samples, batch_size=20 --> 100/20 = 5 iterations for 1 epoch
'''

class WineDataset(Dataset):
    def __init__(self):
        # data loading
        xy = np.loadtxt('./wine.csv', delimiter=',', dtype=np.float32, skiprows=1)
        self.x = torch.from_numpy(xy[:, 1:])
        self.y = torch.from_numpy(xy[:, [0]])
        self.n_samples = xy.shape[0]

    def __getitem__(self, index):
        return self.x[index], self.y[index]

    def __len__(self):
        return self.n_samples

dataset = WineDataset()
dataloader = DataLoader(dataset=dataset, batch_size=4, shuffle=True, num_workers=0)

# dataiter = iter(dataloader) # turn the loader into an iterator
# data = next(dataiter) # the call differs between versions (older code used dataiter.next())
# features, labels = data
# print(features, labels)

# training loop
num_epochs = 2
total_samples = len(dataset)
n_iterations = math.ceil(total_samples/4) # round up; len(dataloader) gives the same number
print(total_samples, n_iterations)

for epoch in range(num_epochs):
    for i, (inputs, labels) in enumerate(dataloader):
        # forward, backward, update
        if (i+1) % 5 == 0:
            print(f'epoch {epoch+1}/{num_epochs}, step {i+1}/{n_iterations}, inputs {inputs.shape}')

# PyTorch also ships built-in datasets
# torchvision.datasets.MNIST()
# fashion-mnist, cifar, coco
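The relation between samples, batch size and iterations per epoch described in the docstring above can be written as a small helper. This is a sketch assuming the last, smaller batch is kept (the `DataLoader` default, `drop_last=False`); the function name `iterations_per_epoch` is made up for illustration.

```python
import math

def iterations_per_epoch(n_samples: int, batch_size: int) -> int:
    """Number of batches per epoch when the last, possibly smaller, batch is kept."""
    return math.ceil(n_samples / batch_size)

print(iterations_per_epoch(100, 20))   # the example from the notes
print(iterations_per_epoch(101, 20))   # one extra, partial batch
```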

10.Dataset Transforms

import torch

import torchvision

from torch.utils.data import Dataset, DataLoader

import numpy as np

import math

# dataset = torchvision.datasets.MNIST(
#     root='./data', transform=torchvision.transforms.ToTensor()) # the ToTensor transform converts images and NumPy arrays to tensors
# Reference: https://pytorch.org/vision/stable/transforms.html

class WineDataset(Dataset):
    def __init__(self, transform=None):
        # data loading
        xy = np.loadtxt('./wine.csv', delimiter=',', dtype=np.float32, skiprows=1)
        self.n_samples = xy.shape[0]
        # note that we do not convert to tensor here
        self.x = xy[:, 1:]
        self.y = xy[:, [0]]
        self.transform = transform

    def __getitem__(self, index):
        sample = self.x[index], self.y[index]
        if self.transform:
            sample = self.transform(sample)
        return sample

    def __len__(self):
        return self.n_samples

class ToTensor: # a custom transform class
    def __call__(self, sample): # makes instances callable
        inputs, targets = sample
        return torch.from_numpy(inputs), torch.from_numpy(targets)

class MulTransform:
    def __init__(self, factor):
        self.factor = factor

    def __call__(self, sample):
        inputs, targets = sample
        inputs = inputs * self.factor # out-of-place, so the dataset's own array is not modified
        return inputs, targets

dataset = WineDataset(transform=None)

# dataset = WineDataset(transform=ToTensor()) # alternative: convert each sample to tensors

first_data = dataset[0]

features, labels = first_data

print(features)

print(type(features),type(labels))
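Several transforms are usually chained, for example with `torchvision.transforms.Compose`. The sketch below shows the idea with a hand-written `Compose` stand-in and plain Python lists instead of NumPy arrays, so it runs without any dependencies; both classes here are simplified illustrations, not the library implementation.

```python
class Compose:
    """Apply a list of callables to a sample, one after another."""
    def __init__(self, transforms):
        self.transforms = transforms

    def __call__(self, sample):
        for t in self.transforms:
            sample = t(sample)
        return sample

class MulTransform:
    """Multiply the inputs by a constant factor; targets pass through unchanged."""
    def __init__(self, factor):
        self.factor = factor

    def __call__(self, sample):
        inputs, targets = sample
        return [v * self.factor for v in inputs], targets

composed = Compose([MulTransform(2), MulTransform(5)])
features, labels = composed(([1.0, 2.0, 3.0], [1.0]))
print(features, labels)
```

Because every transform takes a sample and returns a sample, the order in the list is the order of application.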

11.Softmax and Cross Entropy

Softmax

import torch

import torch.nn as nn

import numpy as np

def softmax(x):
    return np.exp(x) / np.sum(np.exp(x), axis=0)

x = np.array([2.0, 1.0, 0.1])
outputs = softmax(x)
print('softmax numpy:', outputs)

x = torch.tensor([2.0, 1.0, 0.1])

outputs = torch.softmax(x, dim=0)

print(outputs)
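The NumPy version above exponentiates the raw inputs, which can overflow for large values. A common remedy is to subtract the maximum first, which leaves the result unchanged. The following is a dependency-free sketch of that variant; it is an illustration and makes no claim about how `torch.softmax` is implemented internally.

```python
import math

def softmax(xs):
    m = max(xs)                              # subtracting the max does not change the result
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])
print([round(p, 4) for p in probs], sum(probs))

big = softmax([1000.0, 999.0, 998.1])        # a naive math.exp(1000.0) would overflow
print([round(p, 4) for p in big])
```

Both calls give the same probabilities, since the two inputs differ only by a constant shift.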

Cross-Entropy

Cross-entropy measures how well a classification model performs.

# manual implementation of cross-entropy
def cross_entropy(actual, predicted):
    loss = -np.sum(actual * np.log(predicted))
    return loss # / float(predicted.shape[0])

# y must be one hot encoded
# if class 0: [1 0 0]
# if class 1: [0 1 0]
# if class 2: [0 0 1]
Y = np.array([1, 0, 0])

# y_pred has probabilities
Y_pred_good = np.array([0.7, 0.2, 0.1])
Y_pred_bad = np.array([0.1, 0.3, 0.6])
l1 = cross_entropy(Y, Y_pred_good)
l2 = cross_entropy(Y, Y_pred_bad)
print(f'Loss numpy: {l1:.4f}')
print(f'Loss numpy: {l2:.4f}')

# using nn.CrossEntropyLoss()

# 1 sample
loss = nn.CrossEntropyLoss() # nn.CrossEntropyLoss already applies LogSoftmax and NLLLoss
Y = torch.tensor([0]) # Y is a class label, not a one-hot vector
# nsamples x nclasses = 1x3
Y_pred_good = torch.tensor([[2, 1.0, 0.1]]) # raw scores (logits), not probabilities
Y_pred_bad = torch.tensor([[0.5, 2.0, 0.3]])

l1 = loss(Y_pred_good, Y)
l2 = loss(Y_pred_bad, Y)

print(l1.item())
print(l2.item())

_, predictions1 = torch.max(Y_pred_good, 1)
_, predictions2 = torch.max(Y_pred_bad, 1)
print(predictions1)
print(predictions2)

# 3 samples
Y = torch.tensor([2, 0, 1])
# nsamples x nclasses = 3x3
Y_pred_good = torch.tensor([[0.1, 1.0, 2.1], [2.0, 1.0, 0.1], [0.1, 3.0, 0.1]])
Y_pred_bad = torch.tensor([[2.1, 1.0, 0.1], [0.1, 1.0, 2.1], [0.1, 3.0, 0.1]])

l1 = loss(Y_pred_good, Y)
l2 = loss(Y_pred_bad, Y)

print(l1.item())
print(l2.item())

_, predictions1 = torch.max(Y_pred_good, 1)
_, predictions2 = torch.max(Y_pred_bad, 1)
print(predictions1)
print(predictions2)
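Since `nn.CrossEntropyLoss` combines LogSoftmax and NLLLoss, its value for a single sample is `-log(softmax(logits)[target])`. The sketch below recomputes that quantity in plain Python for the one-sample logits used above; the helper name `cross_entropy_from_logits` is made up for illustration.

```python
import math

def cross_entropy_from_logits(logits, target):
    """-log(softmax(logits)[target]), computed via log-sum-exp for stability."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

good = cross_entropy_from_logits([2.0, 1.0, 0.1], 0)
bad = cross_entropy_from_logits([0.5, 2.0, 0.3], 0)
print(f'{good:.4f} {bad:.4f}')
```

The lower value belongs to the logits whose largest score sits on the correct class, which is why no softmax layer should be added before this loss.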

Binary Classification

# Binary classification

class NeuralNet1(nn.Module):
    def __init__(self, input_size, hidden_size):
        super(NeuralNet1, self).__init__()
        self.linear1 = nn.Linear(input_size, hidden_size)
        self.relu = nn.ReLU()
        self.linear2 = nn.Linear(hidden_size, 1)

    def forward(self, x):
        out = self.linear1(x)
        out = self.relu(out)
        out = self.linear2(out)
        # sigmoid at the end
        y_pred = torch.sigmoid(out)
        return y_pred

model = NeuralNet1(input_size=28*28, hidden_size=5)

criterion = nn.BCELoss()

Multiclass Classification

# Multiclass problem

class NeuralNet2(nn.Module):
    def __init__(self, input_size, hidden_size, num_classes):
        super(NeuralNet2, self).__init__()
        self.linear1 = nn.Linear(input_size, hidden_size)
        self.relu = nn.ReLU()
        self.linear2 = nn.Linear(hidden_size, num_classes)

    def forward(self, x):
        out = self.linear1(x)
        out = self.relu(out)
        out = self.linear2(out)
        # no softmax at the end
        return out

model = NeuralNet2(input_size=28*28, hidden_size=5, num_classes=3)

criterion = nn.CrossEntropyLoss() # (applies Softmax)

12.Activation Functions

Ways to apply activation functions: nn.xxx, torch.xxx, F.xxx

import torch
import torch.nn as nn
import torch.nn.functional as F

# option 1 (create nn modules)
class NeuralNet(nn.Module):
    def __init__(self, input_size, hidden_size):
        super(NeuralNet, self).__init__()
        self.linear1 = nn.Linear(input_size, hidden_size)
        self.relu = nn.ReLU() # nn.LeakyReLU and others are also available
        self.linear2 = nn.Linear(hidden_size, 1)
        self.sigmoid = nn.Sigmoid()

    def forward(self, x):
        out = self.linear1(x)
        out = self.relu(out)
        out = self.linear2(out)
        out = self.sigmoid(out)
        return out

# option 2 (use activation functions directly in forward pass)
class NeuralNet(nn.Module):
    def __init__(self, input_size, hidden_size):
        super(NeuralNet, self).__init__()
        self.linear1 = nn.Linear(input_size, hidden_size)
        self.linear2 = nn.Linear(hidden_size, 1)

    def forward(self, x):
        out = torch.relu(self.linear1(x)) # some functions are missing from the torch API but exist in the F API, e.g. F.leaky_relu
        out = torch.sigmoid(self.linear2(out))
        return out

13.Feed-Forward Neural Network

MNIST

DataLoader, Transformation

Multilayer Neural Net, activation function

Loss and Optimizer

Training Loop (batch training)

Model evaluation

GPU support

import torch

import torch.nn as nn

import torchvision

import torchvision.transforms as transforms

import matplotlib.pyplot as plt

# device config
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# hyper parameters
input_size = 784 # 28x28
hidden_size = 100
num_classes = 10
num_epochs = 2
batch_size = 100
learning_rate = 0.001

# MNIST
train_dataset = torchvision.datasets.MNIST(root='./data', train=True,
    transform=transforms.ToTensor(), download=True)
test_dataset = torchvision.datasets.MNIST(root='./data', train=False,
    transform=transforms.ToTensor())
print(len(train_dataset))

train_loader = torch.utils.data.DataLoader(dataset=train_dataset, batch_size=batch_size, shuffle=True)
test_loader = torch.utils.data.DataLoader(dataset=test_dataset, batch_size=batch_size, shuffle=False)

examples = iter(train_loader)
samples, labels = next(examples)
print(samples.shape, labels.shape)

for i in range(6):
    plt.subplot(2, 3, i+1)
    plt.imshow(samples[i][0], cmap='gray')
plt.show()

class NeuralNet(nn.Module):
    def __init__(self, input_size, hidden_size, num_classes):
        super(NeuralNet, self).__init__()
        self.l1 = nn.Linear(input_size, hidden_size)
        self.relu = nn.ReLU()
        self.l2 = nn.Linear(hidden_size, num_classes)

    def forward(self, x):
        out = self.l1(x)
        out = self.relu(out)
        out = self.l2(out)
        return out

model = NeuralNet(input_size, hidden_size, num_classes).to(device) # the model must be on the same device as the data

# loss and optimizer
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)

# training loop
n_total_steps = len(train_loader)
for epoch in range(num_epochs):
    for i, (images, labels) in enumerate(train_loader):
        # 100, 1, 28, 28
        # 100, 784
        images = images.reshape(-1, 28*28).to(device)
        labels = labels.to(device)

        # forward
        outputs = model(images)
        loss = criterion(outputs, labels)

        # backward
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        if (i+1) % 100 == 0:
            print(f'epoch {epoch+1} / {num_epochs}, step {i+1}/{n_total_steps}, loss = {loss.item():.4f}')

# test
with torch.no_grad():
    n_correct = 0
    n_samples = 0
    for images, labels in test_loader:
        images = images.reshape(-1, 28*28).to(device)
        labels = labels.to(device)
        outputs = model(images)

        # value, index
        _, predictions = torch.max(outputs, 1) # we are interested in the index
        n_samples += labels.shape[0]
        n_correct += (predictions == labels).sum().item() # accumulate over all batches

    acc = 100.0 * n_correct / n_samples
    print(f'accuracy = {acc}')

14.Convolutional Neural Network(CNN)

import torch

import torch.nn as nn

import torch.nn.functional as F

import torchvision

import torchvision.transforms as transforms

import matplotlib.pyplot as plt

import numpy as np

# Device configuration
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Hyper-parameters
num_epochs = 4
batch_size = 4
learning_rate = 0.001

# dataset has PILImage images of range [0, 1]
# we transform them to Tensors of normalized range [-1, 1]
transform = transforms.Compose(
    [transforms.ToTensor(), # converts the image layout from H*W*C to C*H*W
     transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))] # normalizes each channel of the image
)

train_dataset = torchvision.datasets.CIFAR10(root='./data', train=True,
    download=True, transform=transform)
test_dataset = torchvision.datasets.CIFAR10(root='./data', train=False,
    download=True, transform=transform)

train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=batch_size, shuffle=True)
test_loader = torch.utils.data.DataLoader(test_dataset, batch_size=batch_size, shuffle=False) # evaluate on the test set, not the training set

classes = ('plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

# implement conv net
class ConvNet(nn.Module):
    def __init__(self):
        super(ConvNet, self).__init__()
        self.conv1 = nn.Conv2d(3, 6, 5) # in_channels=3, out_channels=6, kernel_size=5
        self.pool = nn.MaxPool2d(2, 2)
        self.conv2 = nn.Conv2d(6, 16, 5)
        self.fc1 = nn.Linear(16*5*5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))
        x = self.pool(F.relu(self.conv2(x)))
        x = x.view(-1, 16*5*5)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x

model = ConvNet().to(device)

criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

n_total_steps = len(train_loader)
for epoch in range(num_epochs):
    for i, (images, labels) in enumerate(train_loader):
        # origin shape: [4, 3, 32, 32] = 4, 3, 1024
        # input_layer: 3 input channels, 6 output channels, 5 kernel size
        images = images.to(device)
        labels = labels.to(device)

        # Forward pass
        outputs = model(images)
        loss = criterion(outputs, labels)

        # Backward and optimize
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        if (i+1) % 2000 == 0:
            print(f'Epoch [{epoch+1}/{num_epochs}], Step [{i+1}/{n_total_steps}], Loss: {loss.item():.4f}')

print('Finished Training')

with torch.no_grad():
    n_correct = 0
    n_samples = 0
    n_class_correct = [0 for i in range(10)]
    n_class_samples = [0 for i in range(10)]
    for images, labels in test_loader:
        images = images.to(device)
        labels = labels.to(device)
        outputs = model(images)
        # max returns (value, index)
        _, predicted = torch.max(outputs, 1)
        n_samples += labels.size(0)
        n_correct += (predicted == labels).sum().item()

        for i in range(labels.size(0)): # actual batch size, in case the last batch is smaller
            label = labels[i]
            pred = predicted[i]
            if (label == pred):
                n_class_correct[label] += 1
            n_class_samples[label] += 1

    acc = 100.0 * n_correct / n_samples
    print(f'Accuracy of the network: {acc} %')

    for i in range(10):
        acc = 100.0 * n_class_correct[i] / n_class_samples[i]
        print(f'Accuracy of {classes[i]}: {acc} %')
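The `16*5*5` input size of `fc1` follows from how each layer shrinks the 32x32 image. The sketch below traces the spatial size through the layers with the standard output-size formula for convolution and pooling (square input, no dilation); the helper name `conv_out` is made up for illustration.

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a convolution or pooling layer (square input, no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1

size = 32                                  # CIFAR-10 images are 32x32
size = conv_out(size, kernel=5)            # conv1 -> 28
size = conv_out(size, kernel=2, stride=2)  # pool  -> 14
size = conv_out(size, kernel=5)            # conv2 -> 10
size = conv_out(size, kernel=2, stride=2)  # pool  -> 5
flat_features = 16 * size * size           # conv2 has 16 output channels
print(size, flat_features)
```

If the kernel sizes or the input resolution change, the same trace gives the new value to use in `nn.Linear` and in `x.view`.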

15.Transfer Learning

This section covers ImageFolder, Scheduler and Transfer Learning.

We use a pretrained ResNet-18 CNN, which is 18 layers deep (the depth of a CNN usually counts the layers that have weights/parameters) and classifies images into 1000 classes.

# ImageFolder
# Scheduler
# Transfer Learning

import torch
import torch.nn as nn
import torch.optim as optim
from torch.optim import lr_scheduler
import numpy as np
import torchvision
from torchvision import datasets, models, transforms
import matplotlib.pyplot as plt
import time
import os
import copy

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

mean = np.array([0.485, 0.456, 0.406])
std = np.array([0.229, 0.224, 0.225])

data_transforms = {
    'train': transforms.Compose([
        transforms.RandomResizedCrop(224),
        transforms.RandomHorizontalFlip(),
        transforms.ToTensor(),
        transforms.Normalize(mean, std)
    ]),
    'val': transforms.Compose([
        transforms.Resize(256),
        transforms.CenterCrop(224),
        transforms.ToTensor(),
        transforms.Normalize(mean, std)
    ]),
}

# import data
data_dir = 'data/hymenoptera_data'
sets = ['train', 'val']
image_datasets = {x: datasets.ImageFolder(os.path.join(data_dir, x),
                                          data_transforms[x])
                  for x in ['train', 'val']}
dataloaders = {x: torch.utils.data.DataLoader(image_datasets[x], batch_size=4,
                                              shuffle=True, num_workers=0)
               for x in ['train', 'val']}

dataset_sizes = {x: len(image_datasets[x]) for x in ['train', 'val']}
class_names = image_datasets['train'].classes
print(class_names)

def train_model(model, criterion, optimizer, scheduler, num_epochs=25):
    since = time.time()

    best_model_wts = copy.deepcopy(model.state_dict())
    best_acc = 0.0

    for epoch in range(num_epochs):
        print(f'Epoch {epoch}/{num_epochs-1}')
        print('-' * 10)

        # Each epoch has a training and validation phase
        for phase in ['train', 'val']:
            if phase == 'train':
                model.train() # Set model to training mode
            else:
                model.eval() # Set model to evaluate mode

            running_loss = 0.0
            running_corrects = 0

            # Iterate over data.
            for inputs, labels in dataloaders[phase]:
                inputs = inputs.to(device)
                labels = labels.to(device)

                # forward
                # track history only in train
                with torch.set_grad_enabled(phase == 'train'): # gradient computation is enabled only when the argument is True
                    outputs = model(inputs)
                    _, preds = torch.max(outputs, 1)
                    loss = criterion(outputs, labels)

                    # backward + optimize only if in training phase
                    if phase == 'train':
                        optimizer.zero_grad()
                        loss.backward()
                        optimizer.step()

                # statistics
                running_loss += loss.item() * inputs.size(0)
                running_corrects += torch.sum(preds == labels.data)

            if phase == 'train':
                scheduler.step()

            epoch_loss = running_loss / dataset_sizes[phase] # average loss over the whole dataset
            epoch_acc = running_corrects.double() / dataset_sizes[phase] # accuracy over the whole dataset

            print(f'{phase} Loss: {epoch_loss:.4f} Acc: {epoch_acc:.4f}')

            # deep copy the model
            if phase == 'val' and epoch_acc > best_acc:
                best_acc = epoch_acc
                best_model_wts = copy.deepcopy(model.state_dict())

        print()

    time_elapsed = time.time() - since
    print(f'Training complete in {time_elapsed//60:.0f}m {time_elapsed%60:.0f}s')
    print(f'Best val Acc: {best_acc:.4f}')

    # load best model weights
    model.load_state_dict(best_model_wts)
    return model

##### Fine-tune all parameters
model = models.resnet18(weights=models.ResNet18_Weights.IMAGENET1K_V1)
num_ftrs = model.fc.in_features

model.fc = nn.Linear(num_ftrs, 2)
model.to(device)

criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.001)

# scheduler
step_lr_scheduler = lr_scheduler.StepLR(optimizer, step_size=7, gamma=0.1)

model = train_model(model, criterion, optimizer, step_lr_scheduler, num_epochs=2)

##### Train only the final fully connected layer
model = models.resnet18(weights=models.ResNet18_Weights.IMAGENET1K_V1)
for param in model.parameters(): # freeze the pretrained parameters
    param.requires_grad = False

num_ftrs = model.fc.in_features

model.fc = nn.Linear(num_ftrs, 2) # parameters of a newly created layer are not frozen by default
model.to(device)

criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.001)

# scheduler
step_lr_scheduler = lr_scheduler.StepLR(optimizer, step_size=7, gamma=0.1)

model = train_model(model, criterion, optimizer, step_lr_scheduler, num_epochs=2)

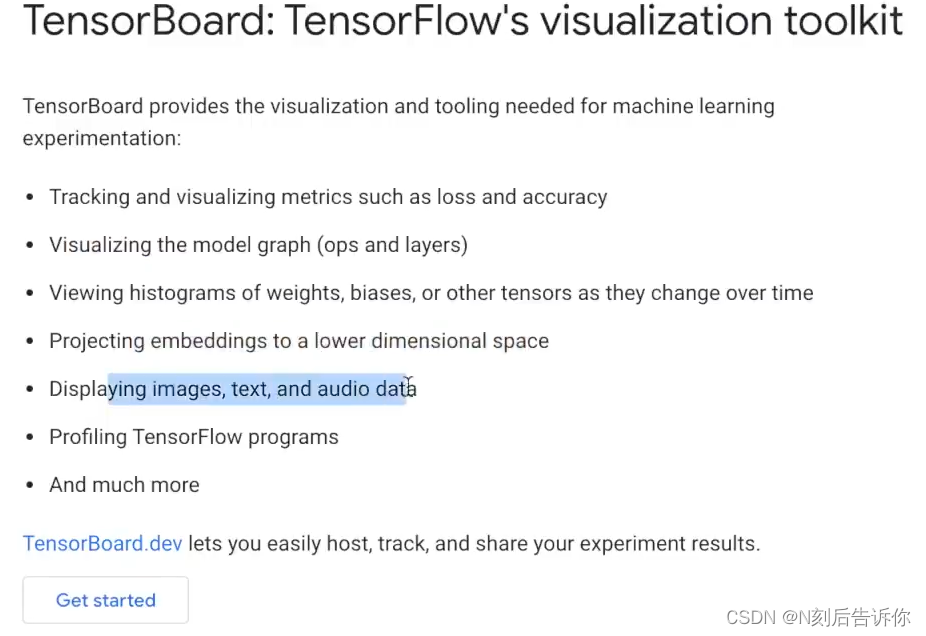
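The `StepLR(optimizer, step_size=7, gamma=0.1)` scheduler used in this section multiplies the learning rate by `gamma` once every `step_size` epochs. The sketch below writes that step-decay rule as a plain function to show which learning rate is in effect at a given epoch; `step_lr` is an illustrative helper, not part of PyTorch.

```python
def step_lr(base_lr: float, epoch: int, step_size: int, gamma: float) -> float:
    """Learning rate after `epoch` scheduler steps under a step-decay schedule."""
    return base_lr * gamma ** (epoch // step_size)

for epoch in (0, 6, 7, 13, 14):
    print(epoch, step_lr(0.001, epoch, step_size=7, gamma=0.1))
```

With `num_epochs=2` as in the runs above, the scheduler never reaches its first decay, so the learning rate stays at 0.001 throughout.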

16.How To Use The TensorBoard

Reference links:

https://pytorch.org/docs/stable/tensorboard.html

https://blog.csdn.net/weixin_45100742/article/details/133919886

# install tensorboard first

pip install tensorboard

import torch

import torch.nn as nn

import torchvision

import torchvision.transforms as transforms

import matplotlib.pyplot as plt

import sys

import torch.nn.functional as F

from torch.utils.tensorboard import SummaryWriter # API for creating event files in a given directory and adding summaries and events to them

writer = SummaryWriter("runs/mnist") # creates the directory "./runs/mnist"

# device config
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Hyper-parameters
input_size = 784 # 28x28
hidden_size = 500
num_classes = 10
num_epochs = 1
batch_size = 64
learning_rate = 0.001

# MNIST
train_dataset = torchvision.datasets.MNIST(
    root="./data", train=True, transform=transforms.ToTensor(), download=True
)
test_dataset = torchvision.datasets.MNIST(
    root="./data", train=False, transform=transforms.ToTensor()
)
train_loader = torch.utils.data.DataLoader(
    dataset=train_dataset, batch_size=batch_size, shuffle=True
)
test_loader = torch.utils.data.DataLoader(
    dataset=test_dataset, batch_size=batch_size, shuffle=False
)

examples = iter(test_loader)
example_data, example_targets = next(examples)

for i in range(6):
    plt.subplot(2, 3, i + 1)
    plt.imshow(example_data[i][0], cmap="gray")
# plt.show()

# add the images to TensorBoard
img_grid = torchvision.utils.make_grid(example_data) # arrange the images in a grid first
writer.add_image("mnist_images", img_grid) # add an image to the summary (title, image data)
# writer.close()
# sys.exit() # exit in the middle of the program

# Fully connected neural network with one hidden layer
class NeuralNet(nn.Module):
    def __init__(self, input_size, hidden_size, num_classes):
        super(NeuralNet, self).__init__()
        self.l1 = nn.Linear(input_size, hidden_size)
        self.relu = nn.ReLU()
        self.l2 = nn.Linear(hidden_size, num_classes)

    def forward(self, x):
        out = self.l1(x)
        out = self.relu(out)
        out = self.l2(out)
        return out

model = NeuralNet(input_size, hidden_size, num_classes).to(device)

# loss and optimizer
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)

# writer.add_graph(model, example_data.reshape(-1, 28 * 28))
# writer.close()

# training loop
n_total_steps = len(train_loader)
running_loss = 0.0
running_correct = 0
for epoch in range(num_epochs):
    for i, (images, labels) in enumerate(train_loader):
        # 64, 1, 28, 28
        # 64, 784
        images = images.reshape(-1, 28 * 28).to(device)
        labels = labels.to(device)

        # Forward pass
        outputs = model(images)
        loss = criterion(outputs, labels)

        # Backward and optimize
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        running_loss += loss.item()
        _, predicted = torch.max(outputs.data, 1)
        running_correct += (predicted == labels).sum().item()

        if (i + 1) % 100 == 0:
            print(f"epoch {epoch+1} / {num_epochs}, step {i+1}/{n_total_steps}, loss = {loss.item():.4f}")
            writer.add_scalar( # add a scalar to the summary, mainly for plotting (title, y value, x value)
                "training loss", running_loss / 100, epoch * n_total_steps + i
            )
            # fraction of correct predictions over the last 100 batches
            writer.add_scalar("accuracy", running_correct / (100 * batch_size), epoch * n_total_steps + i)
            running_loss = 0.0
            running_correct = 0
# sys.exit()

# Test the model
# In test phase, we don't need to compute gradients (for memory efficiency)
labels = []
preds = []
with torch.no_grad():
    n_correct = 0
    n_samples = 0
    for images, labels1 in test_loader:
        images = images.reshape(-1, 28 * 28).to(device)
        labels1 = labels1.to(device)
        outputs = model(images) # 64*10
        # for output in outputs:
        #     print(output)
        #     print(F.softmax(outputs, dim=0))
        #     break
        # break

        # max returns (value, index)
        _, predicted = torch.max(outputs, 1) # maximum along dimension 1, i.e. over the classes of each row
        n_samples += labels1.size(0) # number of samples in this batch
        n_correct += (predicted == labels1).sum().item()

        class_predictions = [F.softmax(output, dim=0) for output in outputs] # softmax per row gives one tensor per sample, collected in a list
        preds.append(class_predictions) # class_predictions is a list of 64 tensors of length 10
        # labels.append(predicted) # predicted is a tensor of length 64
        labels.append(labels1)

    preds = torch.cat([torch.stack(batch) for batch in preds])
    # torch.stack(batch) stacks 64 tensors of shape (10,) into one 64*10 tensor
    # the result here is a 10000*10 tensor
    labels = torch.cat(labels) # concatenated into a 1-D tensor of length 10000

    acc = 100.0 * n_correct / n_samples
    print(f"Accuracy of the network on the test images: {acc} %")

    classes = range(10)
    for i in classes:
        labels_i = labels == i
        preds_i = preds[:, i]
        writer.add_pr_curve(str(i), labels_i, preds_i, global_step=0)
    writer.close()

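# visualize the event files with TensorBoard

tensorboard --logdir=runs # path of the directory that holds the log files

PS:

1.torch.stack() stacks tensors of the same size into a tensor with one more dimension; the position of the new dimension is set by dim.

2.torch.cat() concatenates tensors along an existing dimension.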
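The difference between the two functions shows up in the resulting shapes. The sketch below uses zero tensors with the same shapes as in the test loop above; the second batch size of 16 is an arbitrary choice standing in for a smaller final batch.

```python
import torch

rows = [torch.zeros(10) for _ in range(64)]   # 64 tensors of shape (10,)
stacked = torch.stack(rows)                   # adds a new leading dimension
joined = torch.cat(rows)                      # keeps one dimension, makes it longer
print(stacked.shape, joined.shape)

batches = [torch.zeros(64, 10), torch.zeros(16, 10)]
all_rows = torch.cat(batches)                 # batches may differ in size along dim 0
print(all_rows.shape)
```

This is why the test loop stacks the per-sample tensors inside each batch and then concatenates the batches: `torch.stack` needs equally sized inputs, while `torch.cat` tolerates a smaller final batch.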

17.Saving and Loading Models

import torch

import torch.nn as nn

class Model(nn.Module):
    def __init__(self, n_input_features):
        super(Model, self).__init__()
        self.linear = nn.Linear(n_input_features, 1)

    def forward(self, x):
        y_pred = torch.sigmoid(self.linear(x))
        return y_pred

model = Model(n_input_features=6)

# train your model...

print(model.state_dict())

Two ways to save a model:

1.Save the whole model

FILE = 'model.pth'
torch.save(model, FILE) # save the model

# model class must be defined somewhere
# on PyTorch 2.6 and later, loading a whole pickled model needs torch.load(FILE, weights_only=False)
model = torch.load(FILE) # load the model
model.eval()

for param in model.parameters():
    print(param)

2.Save only the parameters (recommended)

torch.save(model.state_dict(), FILE) # save the parameters

# model must be created again with parameters
loaded_model = Model(n_input_features=6)
loaded_model.load_state_dict(torch.load(FILE)) # load_state_dict takes the loaded state dict, not a path
loaded_model.eval()

for param in loaded_model.parameters():
    print(param)

3.How to save and load a checkpoint

model = Model(n_input_features=6)

# train your model...

learning_rate = 0.01

optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

print(optimizer.state_dict())

checkpoint = {
    "epoch": 90,
    "model_state": model.state_dict(),
    "optim_state": optimizer.state_dict()
}
torch.save(checkpoint, "checkpoint.pth")

loaded_checkpoint = torch.load("checkpoint.pth")
epoch = loaded_checkpoint["epoch"]

model = Model(n_input_features=6)
optimizer = torch.optim.SGD(model.parameters(), lr=0)

# restore from the checkpoint that was read back from disk
model.load_state_dict(loaded_checkpoint["model_state"])
optimizer.load_state_dict(loaded_checkpoint["optim_state"])

print(optimizer.state_dict())

4.Saving and loading across GPU/CPU

# Save on GPU, Load on CPU
device = torch.device("cuda")
model.to(device)
torch.save(model.state_dict(), PATH)

device = torch.device('cpu')
model = Model(*args, **kwargs)
model.load_state_dict(torch.load(PATH, map_location=device)) # map_location must be set to the CPU device

# Save on GPU, Load on GPU
device = torch.device("cuda")
model.to(device)
torch.save(model.state_dict(), PATH)

model = Model(*args, **kwargs)
model.load_state_dict(torch.load(PATH))
model.to(device)

# Save on CPU, Load on GPU
torch.save(model.state_dict(), PATH)

device = torch.device("cuda")
model = Model(*args, **kwargs)
model.load_state_dict(torch.load(PATH, map_location="cuda:0")) # Choose whatever GPU

model.to(device)
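The recommended state_dict workflow can be tried without touching the disk. The sketch below uses an in-memory `io.BytesIO` buffer as a stand-in for the file and a bare `nn.Linear(6, 1)` in place of the `Model` class; both substitutions are made only to keep the example short.

```python
import io
import torch
import torch.nn as nn

model = nn.Linear(6, 1)

buffer = io.BytesIO()                 # in-memory stand-in for a file on disk
torch.save(model.state_dict(), buffer)
buffer.seek(0)

restored = nn.Linear(6, 1)            # same architecture, fresh random weights
restored.load_state_dict(torch.load(buffer))
restored.eval()

same = all(torch.equal(a, b) for a, b in zip(model.state_dict().values(),
                                              restored.state_dict().values()))
print(same)
```

The restored module must have the same architecture as the saved one, because a state dict holds only named tensors and no code.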

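18.Create & Deploy A Deep Learning App - PyTorch Model Deployment With Flask & Heroku

This section discusses how to deploy a PyTorch model.

We create a simple Flask app with a REST API that returns JSON data,

then deploy it to Heroku.

The PyTorch model performs digit classification.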

mkdir pytorch-flask-tutorial

cd pytorch-flask-tutorial

python3 -m venv venv

. venv/bin/activate

pip install Flask

pip install torch torchvision

mkdir app

cd app

export FLASK_APP=main.py

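export FLASK_ENV=development # turn on debug mode; in debug mode, flask run uses the interactive debugger and the reloader by default (FLASK_ENV was removed in Flask 2.3; on newer versions use "flask --debug run" instead)

# see https://cloud.tencent.com/developer/article/2088748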

flask run
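The commands above expect an `app/main.py` that defines the Flask application. A minimal sketch of such a file might look as follows; the `/predict` route, the `file` form field and the `predict_digit` placeholder are all assumptions made for illustration, and a real app would load the trained PyTorch model and preprocess the uploaded image inside `predict_digit`.

```python
from flask import Flask, jsonify, request

app = Flask(__name__)

def predict_digit(image_bytes: bytes) -> int:
    """Hypothetical placeholder: a real app would run the PyTorch model here."""
    return 0

@app.route('/predict', methods=['POST'])
def predict():
    # expects a multipart/form-data upload under the (assumed) field name 'file'
    file = request.files.get('file')
    if file is None or file.filename == '':
        return jsonify({'error': 'no file'}), 400
    prediction = predict_digit(file.read())
    return jsonify({'prediction': prediction})
```

With `FLASK_APP=main.py` set as above, `flask run` serves this app locally, and a POST request with an image file to `/predict` returns the prediction as JSON.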

References:

Python 3 notes: venv virtual environments

Flask official documentation

Python Flask video tutorial