Preface

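The previous post covered data analysis and visualization for the Abalone Age Prediction competition and produced baselines for four different models. This post builds on that work and optimizes them.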

[Kaggle] Playground Competition: Abalone Age Prediction (Part 1)

Loading libraries

(Omitted; see Part 1.)

Loading the data

```python
# Load all data
train = pd.read_csv(os.path.join(FILE_PATH, "train.csv"))
test = pd.read_csv(os.path.join(FILE_PATH, "test.csv"))
# Also load the submission template; the original dataset is not loaded
sample = pd.read_csv(os.path.join(FILE_PATH, "sample_submission.csv"))

# From Part 1 we know Sex is the only categorical feature, so encode it directly
dd = {"M": 0, "F": 1, "I": 2}
train['Sex'] = train['Sex'].map(dd)
test['Sex'] = test['Sex'].map(dd)
```

Exploring the data

```python
X = train.drop(["Rings"], axis=1)
y = train["Rings"]
```

Part 1 showed that y is quite heavily skewed. We first plot it and then deal with the skew.

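```python
import scipy.stats as st

# Overlay three fitted distributions on the target's histogram/KDE
# (sns.distplot has been deprecated since seaborn 0.11)
plt.figure(1); plt.title('Johnson SU')
sns.distplot(y, kde=True, fit=st.johnsonsu)

plt.figure(2); plt.title('Normal')
sns.distplot(y, kde=True, fit=st.norm)

plt.figure(3); plt.title('Log Normal')
sns.distplot(y, kde=True, fit=st.lognorm)
```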

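The density plots above show that the fits are noticeably off, so y is a good candidate for a log transform.

There are many ways to do this. I use log1p() here, but log() would also work.

Note that log1p(x) computes log(1 + x) and avoids the loss of precision when x is close to 0.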
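The following uses Python's standard `math` module to illustrate the precision point. NumPy's `np.log1p` and `np.expm1` behave the same way. The snippet also shows `expm1` (exp(v) - 1), the inverse that is used later to turn predictions back into Rings.

```python
import math

x = 1e-16
# In float64, 1 + 1e-16 rounds to exactly 1.0, so the naive form loses x completely
naive = math.log(1 + x)
# log1p evaluates log(1 + x) without forming 1 + x first, so x survives
accurate = math.log1p(x)
print(naive, accurate)

# expm1 inverts log1p; it maps log1p-scale predictions back to Rings
rings = 9.0
print(math.expm1(math.log1p(rings)))
```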

```python
import scipy.stats as st

y = np.log1p(train["Rings"])  # log-transform the target

plt.figure(1); plt.title('Johnson SU')
sns.distplot(y, kde=True, fit=st.johnsonsu)

plt.figure(2); plt.title('Normal')
sns.distplot(y, kde=True, fit=st.norm)

plt.figure(3); plt.title('Log Normal')
sns.distplot(y, kde=True, fit=st.lognorm)
```

After the transform, the fit is clearly better than before, so this y is used as the target.
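You can put a number on "clearly better" by comparing the sample skewness before and after log1p. The sketch below uses a synthetic, right-skewed integer stand-in for Rings, not the competition data. The helper `skewness` is hypothetical; it implements the biased m3 / m2^1.5 formula that `scipy.stats.skew` uses by default. On the real data you would pass `train["Rings"]` instead.

```python
import numpy as np

def skewness(a):
    """Biased sample skewness m3 / m2**1.5 (the default of scipy.stats.skew)."""
    a = np.asarray(a, dtype=float)
    d = a - a.mean()
    return np.mean(d ** 3) / np.mean(d ** 2) ** 1.5

rng = np.random.default_rng(0)
# Synthetic right-skewed integer stand-in for Rings; NOT the competition data
rings = np.round(rng.lognormal(mean=2.2, sigma=0.35, size=10_000))

skew_before = skewness(rings)
skew_after = skewness(np.log1p(rings))
print(f"skew before: {skew_before:.3f}, after log1p: {skew_after:.3f}")
```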

Splitting the dataset

```python
X_train, X_valid, y_train, y_valid = train_test_split(X, y, test_size=0.3, random_state=100)
```

Model optimization

```python
from sklearn.metrics import mean_absolute_error, mean_squared_log_error
import optuna
```

Below, Optuna is used to tune the random forest, XGB, LGBM and CatBoost models one at a time. I won't go into other details here.

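Note that the 5-fold cross-validation uses neg_mean_squared_error as its scoring function. For a classification model we could choose accuracy instead. Some readers may ask why neg_mean_squared_log_error isn't used. There are two reasons:

- If a negative value shows up during training, the metric raises an error and the program may terminate;

- The target has already been log-transformed, so a logarithmic metric on top of it is unnecessary: MSE on log1p(Rings) is exactly MSLE on Rings.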
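Here is a small numerical check of the second point. It assumes sklearn's definition of MSLE, which is the mean squared difference of log1p values. Under that definition, MSE on log1p-transformed targets is identical to MSLE on the raw targets. The helpers `msle` and `mse` are written here for illustration, and the Rings values are made up.

```python
import numpy as np

def msle(y_true, y_pred):
    """Mean squared log error, same definition as sklearn's mean_squared_log_error."""
    return np.mean((np.log1p(y_true) - np.log1p(y_pred)) ** 2)

def mse(a, b):
    return np.mean((a - b) ** 2)

# Made-up Rings values, only to check the identity
rings_true = np.array([7.0, 9.0, 10.0, 15.0, 4.0])
rings_pred = np.array([8.0, 9.5, 11.0, 12.0, 5.0])

# MSE on log1p-transformed targets is the MSLE on the raw targets
print(mse(np.log1p(rings_true), np.log1p(rings_pred)), msle(rings_true, rings_pred))

# sklearn scorers follow "greater is better", so MSE is reported negated;
# that is why the Optuna studies in this post use direction="maximize"
neg_mse = -mse(np.log1p(rings_true), np.log1p(rings_pred))
```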

Random forest

```python
from sklearn.model_selection import cross_validate

# RF
def rfr_objective(trial):
    print('Training the model with', X.shape[1], 'features')
    # Random forest search space
    params = {
        'n_estimators': trial.suggest_int("n_estimators", 10, 200, log=True),
        'max_depth': trial.suggest_int("max_depth", 2, 32),
        'min_samples_split': trial.suggest_int("min_samples_split", 2, 10),
        'min_samples_leaf': trial.suggest_int("min_samples_leaf", 1, 10),
    }
    clf = RandomForestRegressor(**params)
    # 5-fold CV on the log1p target; scores are negative MSE, so higher is better
    cv_results = cross_validate(clf, X, y, cv=5, scoring='neg_mean_squared_error')
    validation_score = np.mean(cv_results['test_score'])
    print(validation_score)
    return validation_score

rfr_study = optuna.create_study(direction="maximize")
rfr_study.optimize(rfr_objective, n_trials=100)

trial_rfr = rfr_study.best_trial
best_rfr_params = trial_rfr.params
best_rfr_params
```
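For reference, here is roughly what `cross_validate(clf, X, y, cv=5, scoring='neg_mean_squared_error')` computes for a regressor. The data is split into 5 consecutive, unshuffled folds. Each fold serves once as the validation set, and the negated MSEs are averaged. This NumPy-only sketch uses a hypothetical least-squares stand-in (`fit_ls`/`predict_ls`) instead of the random forest. The helper names and the synthetic data are made up for illustration.

```python
import numpy as np

def kfold_neg_mse(X, y, fit, predict, n_splits=5):
    """Mean negative MSE over contiguous, unshuffled folds."""
    idx = np.arange(len(y))
    scores = []
    for val_idx in np.array_split(idx, n_splits):
        train_idx = np.setdiff1d(idx, val_idx)
        model = fit(X[train_idx], y[train_idx])
        pred = predict(model, X[val_idx])
        scores.append(-np.mean((y[val_idx] - pred) ** 2))  # negate: greater is better
    return float(np.mean(scores))

# Stand-in model: ordinary least squares with an intercept (not one of the tuned models)
def fit_ls(X, y):
    A = np.column_stack([X, np.ones(len(X))])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef

def predict_ls(coef, X):
    return np.column_stack([X, np.ones(len(X))]) @ coef

rng = np.random.default_rng(0)
true_w = np.array([0.5, -0.2, 0.1])
X_demo = rng.normal(size=(200, 3))
y_demo = X_demo @ true_w + 2.0 + rng.normal(scale=0.1, size=200)

score = kfold_neg_mse(X_demo, y_demo, fit_ls, predict_ls)
print(score)
```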

The tuning above gave the following result (heads-up: it takes a long time):

```python
best_rfr_params = {'n_estimators': 164, 'max_depth': 13, 'min_samples_split': 8, 'min_samples_leaf': 10}
```

Validate the tuned parameters on the hold-out split:

```python
rfr = RandomForestRegressor(**best_rfr_params)
rfr.fit(X_train, y_train)
pred_rfr = rfr.predict(X_valid)

# MAE on the log1p scale
mae = mean_absolute_error(y_valid, pred_rfr)
# RMSLE on the original Rings scale: undo log1p with expm1 first
rmsle = np.sqrt(mean_squared_log_error(np.expm1(y_valid), np.expm1(pred_rfr)))
mae, rmsle

# Result: (0.10865562442363748, 0.14914098508756424)
```

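The result of 0.14914 is a clear improvement over the 0.15546 from the previous post. Note that the values passed to the metric also have to be inverse-transformed first (exponentiate, then subtract 1, i.e. np.expm1).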

XGB

```python
from sklearn.model_selection import cross_validate

def xgb_objective(trial):
    max_depth = trial.suggest_int('max_depth', 4, 100)
    n_estimators = trial.suggest_int('n_estimators', 50, 2000)
    gamma = trial.suggest_float('gamma', 0, 1)
    reg_alpha = trial.suggest_float('reg_alpha', 0, 1)
    reg_lambda = trial.suggest_float('reg_lambda', 0, 1)
    min_child_weight = trial.suggest_int('min_child_weight', 0, 10)
    subsample = trial.suggest_float('subsample', 0, 1)
    colsample_bytree = trial.suggest_float('colsample_bytree', 0, 1)
    learning_rate = trial.suggest_float('learning_rate', 0, 0.4)
    print('Training the model with', X.shape[1], 'features')
    # XGBoost
    params = {
        'n_estimators': n_estimators,
        'learning_rate': learning_rate,
        'gamma': gamma,
        'reg_alpha': reg_alpha,
        'reg_lambda': reg_lambda,
        'max_depth': max_depth,
        'min_child_weight': min_child_weight,
        'subsample': subsample,
        'colsample_bytree': colsample_bytree,
        # 'mlogloss' is a multiclass metric; 'rmse' fits this regression task
        # (the metric is only used to evaluate eval sets, not for training)
        'eval_metric': 'rmse',
    }
    clf = XGBRegressor(**params)
    cv_results = cross_validate(clf, X, y, cv=5, scoring='neg_mean_squared_error')
    validation_score = np.mean(cv_results['test_score'])
    print(validation_score)
    return validation_score

xgb_study = optuna.create_study(direction="maximize")
xgb_study.optimize(xgb_objective, n_trials=100)

xgb_trial = xgb_study.best_trial
best_xgb_params = xgb_trial.params
best_xgb_params
```

The tuned parameters:

```python
best_xgb_params = {'max_depth': 7, 'n_estimators': 727, 'gamma': 0.019463347824409248,
                   'reg_alpha': 0.9036492945299859, 'reg_lambda': 0.7959468494917995,
                   'min_child_weight': 8, 'subsample': 0.872156161442901,
                   'colsample_bytree': 0.7472562751393625, 'learning_rate': 0.0228883656098995}
```

Validation

```python
xgb = XGBRegressor(**best_xgb_params)
xgb.fit(X_train, y_train)
pred_xgb = xgb.predict(X_valid)

mae = mean_absolute_error(y_valid, pred_xgb)  # log1p scale
rmsle = np.sqrt(mean_squared_log_error(np.expm1(y_valid), np.expm1(pred_xgb)))
mae, rmsle

# Result: (0.10741827677460465, 0.14759020292535532)
```

The result of 0.14759 is a clear improvement over the baseline's 0.1523.

LGB

```python
from sklearn.model_selection import cross_validate

def lgb_objective(trial):
    max_depth = trial.suggest_int('max_depth', 4, 100)
    n_estimators = trial.suggest_int('n_estimators', 50, 2000)
    gamma = trial.suggest_float('gamma', 0, 1)  # sampled, but not passed to LightGBM below
    reg_alpha = trial.suggest_float('reg_alpha', 0, 1)
    reg_lambda = trial.suggest_float('reg_lambda', 0, 1)
    min_child_weight = trial.suggest_int('min_child_weight', 0, 10)
    subsample = trial.suggest_float('subsample', 0, 1)
    colsample_bytree = trial.suggest_float('colsample_bytree', 0, 1)
    learning_rate = trial.suggest_float('learning_rate', 0, 1)
    # num_leaves gets its own parameter name; LightGBM requires num_leaves > 1
    num_leaves = trial.suggest_int('num_leaves', 2, 100)
    print('Training the model with', X.shape[1], 'features')
    # LightGBM
    params = {
        'learning_rate': learning_rate,
        'n_estimators': n_estimators,
        'max_depth': max_depth,
        'lambda_l1': reg_alpha,
        'lambda_l2': reg_lambda,
        'colsample_bytree': colsample_bytree,
        'subsample': subsample,  # bagging only takes effect when subsample_freq > 0
        'min_child_samples': min_child_weight,
        'num_leaves': num_leaves,
        # 'device': 'gpu',  # enable this if you train on a GPU
        'class_weight': 'balanced',
    }
    clf = LGBMRegressor(**params, verbose=-1, verbosity=-1)
    cv_results = cross_validate(clf, X, y, cv=5, scoring='neg_mean_squared_error')
    validation_score = np.mean(cv_results['test_score'])
    print(validation_score)
    return validation_score

lgb_study = optuna.create_study(direction="maximize")
lgb_study.optimize(lgb_objective, n_trials=100)

ltrial = lgb_study.best_trial
best_lgb_params = ltrial.params
best_lgb_params
```

Tuning results:

```python
best_lgb_params = {'max_depth': 94, 'n_estimators': 1823, 'gamma': 0.8014536436723432,
                   'reg_alpha': 0.27690360375348405, 'reg_lambda': 0.21155600911723815,
                   'min_child_weight': 5, 'subsample': 0.4185852334032123,
                   'colsample_bytree': 0.8138627851400476, 'learning_rate': 0.12882994034325884}
```

Validation

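```python
# Note: best_lgb_params holds the raw trial names, so this is not exactly the tuned
# configuration (e.g. min_child_weight here vs min_child_samples in lgb_objective)
lgb = LGBMRegressor(**best_lgb_params)
lgb.fit(X_train, y_train)
pred_lgb = lgb.predict(X_valid)

mae = mean_absolute_error(y_valid, pred_lgb)  # log1p scale
rmsle = np.sqrt(mean_squared_log_error(np.expm1(y_valid), np.expm1(pred_lgb)))
mae, rmsle

# Result: (0.11032919202489437, 0.15094090223267007)
```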

The result of 0.15094 is slightly better than the baseline's 0.15156.
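In the validation step, `LGBMRegressor(**best_lgb_params)` receives the raw trial names. That is not quite how `lgb_objective` configured LightGBM. In the objective, `min_child_weight` was passed as `min_child_samples`, `class_weight='balanced'` was set, and `gamma` was sampled but never passed. A hypothetical helper, `to_lgb_params`, could rebuild the arguments the objective actually used. The `num_leaves` fallback is an assumption about trials produced by the original objective, which re-suggested `'max_depth'` for `num_leaves`.

```python
def to_lgb_params(p):
    """Hypothetical helper: rebuild the LightGBM arguments that lgb_objective passed,
    starting from trial.params (e.g. best_lgb_params)."""
    return {
        'learning_rate': p['learning_rate'],
        'n_estimators': p['n_estimators'],
        'max_depth': p['max_depth'],
        'lambda_l1': p['reg_alpha'],
        'lambda_l2': p['reg_lambda'],
        'colsample_bytree': p['colsample_bytree'],
        'subsample': p['subsample'],
        # tuned as min_child_samples, which is a different setting from min_child_weight
        'min_child_samples': p['min_child_weight'],
        # assumption: if no 'num_leaves' key exists, reuse the max_depth value
        'num_leaves': p.get('num_leaves', p['max_depth']),
        'class_weight': 'balanced',
        # 'gamma' is intentionally left out: the objective sampled it but never used it
    }

# Tuned values copied from the results above
best_lgb_params = {'max_depth': 94, 'n_estimators': 1823, 'gamma': 0.8014536436723432,
                   'reg_alpha': 0.27690360375348405, 'reg_lambda': 0.21155600911723815,
                   'min_child_weight': 5, 'subsample': 0.4185852334032123,
                   'colsample_bytree': 0.8138627851400476, 'learning_rate': 0.12882994034325884}
print(to_lgb_params(best_lgb_params))
```

You would then build the model as `LGBMRegressor(**to_lgb_params(best_lgb_params), verbose=-1)`.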

CAT

```python
def cat_objective(trial):
    param = {
        'n_estimators': trial.suggest_int('n_estimators', 100, 1000),
        # "loss_function": trial.suggest_categorical("loss_function", ["RMSE", "MAE"]),
        # suggest_float(..., log=True) replaces the deprecated suggest_loguniform
        "learning_rate": trial.suggest_float("learning_rate", 0.01, 0.4, log=True),
        "l2_leaf_reg": trial.suggest_float("l2_leaf_reg", 1e-2, 1e0, log=True),
        "colsample_bylevel": trial.suggest_float("colsample_bylevel", 0.01, 0.1),
        "depth": trial.suggest_int("depth", 1, 10),
        "boosting_type": trial.suggest_categorical("boosting_type", ["Ordered", "Plain"]),
        "bootstrap_type": trial.suggest_categorical("bootstrap_type", ["Bayesian", "Bernoulli"]),
        "min_data_in_leaf": trial.suggest_int("min_data_in_leaf", 2, 20),
        "one_hot_max_size": trial.suggest_int("one_hot_max_size", 2, 20),
        # "task_type": "GPU",  # uncomment to train on GPU
    }
    # Parameters that only apply to the chosen bootstrap type
    if param["bootstrap_type"] == "Bayesian":
        param["bagging_temperature"] = trial.suggest_float("bagging_temperature", 0, 10)
    elif param["bootstrap_type"] == "Bernoulli":
        param["subsample"] = trial.suggest_float("subsample", 0.1, 1)

    reg = CatBoostRegressor(**param, logging_level='Silent')
    cv_results = cross_validate(reg, X, y, cv=5, scoring='neg_mean_squared_error')
    validation_score = np.mean(cv_results['test_score'])
    print(validation_score)
    return validation_score

cat_study = optuna.create_study(direction="maximize")
cat_study.optimize(cat_objective, n_trials=100)

cat_trial = cat_study.best_trial
best_cat_params = cat_trial.params
best_cat_params
```

Tuning results

```python
best_cat_params = {'n_estimators': 993, 'learning_rate': 0.3753731971809465,
                   'l2_leaf_reg': 0.04331770751471458, 'colsample_bylevel': 0.0996524145934415,
                   'depth': 4, 'boosting_type': 'Plain', 'bootstrap_type': 'Bernoulli',
                   'min_data_in_leaf': 6, 'one_hot_max_size': 9, 'subsample': 0.9598259850542418}
```

```python
cat = CatBoostRegressor(**best_cat_params, logging_level='Silent')
cat.fit(X_train, y_train)
pred_cat = cat.predict(X_valid)

mae = mean_absolute_error(y_valid, pred_cat)  # log1p scale
rmsle = np.sqrt(mean_squared_log_error(np.expm1(y_valid), np.expm1(pred_cat)))
mae, rmsle

# Result: (0.10957684315860691, 0.14979804834365504)
```

The result of 0.14979 beats the baseline's 0.15061.

Weight optimization

Two methods are used.

minimize from the SciPy library

This method is fairly simple: start from equal weights.

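```python
from scipy.optimize import minimize

def objective_st(x):
    # Normalize the raw parameters so the weights sum to 1
    w = x / sum(x)
    final_preds = w[0]*pred_rfr + w[1]*pred_xgb + w[2]*pred_lgb + w[3]*pred_cat
    # Score on the original Rings scale (MSLE, no square root)
    return mean_squared_log_error(np.expm1(y_valid), np.expm1(final_preds))

x0 = [1, 1, 1, 1]  # equal starting weights
result = minimize(objective_st, x0, method='Nelder-Mead')
result
```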

```
       message: Optimization terminated successfully.
       success: True
        status: 0
           fun: 0.021673206133503535
             x: [ 3.019e-01 2.229e+00 8.386e-01 2.399e-01]
           nit: 84
          nfev: 159
 final_simplex: (array([[ 3.019e-01, 2.229e+00, 8.386e-01, 2.399e-01],
                        [ 3.020e-01, 2.229e+00, 8.387e-01, 2.399e-01],
                        …,
                        [ 3.019e-01, 2.229e+00, 8.387e-01, 2.398e-01],
                        [ 3.020e-01, 2.229e+00, 8.387e-01, 2.399e-01]]), array([ 2.167e-02, 2.167e-02, 2.167e-02, 2.167e-02,
```
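Here is the same idea as a self-contained sketch on synthetic data. Four made-up "model" predictions with different noise levels are blended in log1p space, and Nelder-Mead searches over raw parameters that are then normalized into weights. The objective only depends on x / sum(x), so scaling x does not change the score. Note also that Nelder-Mead is unconstrained, so nothing here forces the weights to stay non-negative. The data and the helper `blend_mse` are assumptions made for illustration.

```python
import numpy as np
from scipy.optimize import minimize

rng = np.random.default_rng(42)
# Synthetic log1p-scale targets and four synthetic "model" predictions with different noise
y_log = np.log1p(rng.integers(3, 20, size=500).astype(float))
preds = np.stack([y_log + rng.normal(scale=s, size=y_log.size) for s in (0.20, 0.12, 0.15, 0.25)])

def blend_mse(x):
    w = x / np.sum(x)      # normalize so the weights sum to 1
    blended = w @ preds    # weighted average in log1p space
    # For non-negative blends this equals mean_squared_log_error(expm1(y_log), expm1(blended))
    return np.mean((y_log - blended) ** 2)

x0 = np.ones(4)            # equal starting weights
result = minimize(blend_mse, x0, method='Nelder-Mead')
w = result.x / result.x.sum()
print(result.fun, w)
```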

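For the real models, fun in the minimize result is the validation MSLE, because objective_st returns mean_squared_log_error without a square root. Its square root, about 0.1472, is the RMSLE. With the weights in hand, compute the blended test predictions, write the submission file and submit.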

```python
w = result.x / sum(result.x)
# array([0.0836496 , 0.6175261 , 0.23235088, 0.06647342])

# Test-set predictions from each model (log1p scale)
s0 = rfr.predict(test)
s1 = xgb.predict(test)
s2 = lgb.predict(test)
s3 = cat.predict(test)

# Blend in log1p space, then map back to Rings with expm1
final_s = w[0]*s0 + w[1]*s1 + w[2]*s2 + w[3]*s3
sample['Rings'] = np.expm1(final_s)
sample.to_csv("submit_log1p.csv", index=False)
```
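As a quick arithmetic check of the normalization step, divide the raw Nelder-Mead parameters printed earlier by their sum. This reproduces the weights in the code comment above, up to the 4-digit rounding of the printout.

```python
import numpy as np

# Raw Nelder-Mead parameters as printed above (4 significant digits)
x_raw = np.array([3.019e-01, 2.229e+00, 8.386e-01, 2.399e-01])
w = x_raw / x_raw.sum()
print(np.round(w, 4))
```

Multiplying x_raw by any positive constant gives the same w, which is why the raw values returned by minimize are not meaningful on their own.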

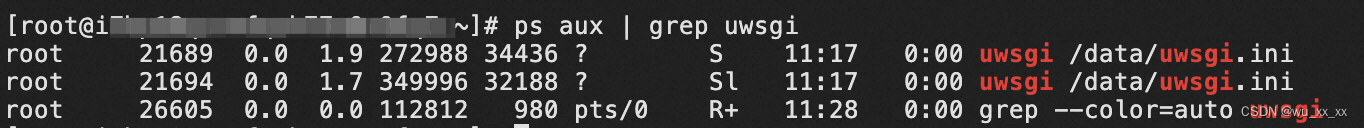

The submission scored 0.14653, which ranked 70th at the time.

The screenshot above shows the result.

Optimizing with Optuna

As with the earlier tuning, this takes a long time.

```python
from sklearn.model_selection import KFold

def objective_w(trial):
    # Define the trial parameters (weights)
    w_rfr = trial.suggest_float('w_rfr', 0.0, 1.0)
    w_xgb = trial.suggest_float('w_xgb', 0.0, 1.0)
    w_lgb = trial.suggest_float('w_lgb', 0.0, 1.0)
    w_cat = trial.suggest_float('w_cat', 0.0, 1.0)
    weights = np.array([w_rfr, w_xgb, w_lgb, w_cat])
    weights /= weights.sum()

    # RMSLE score of each fold
    rmsle_scores = []

    # k-fold cross-validation
    k = 5  # number of folds
    kf = KFold(n_splits=k, shuffle=True, random_state=42)

    # Note: every trial refits all four models on all 5 folds, which is why this is slow
    for train_idx, val_idx in kf.split(X):
        X_tr, X_val = X.iloc[train_idx], X.iloc[val_idx]
        y_tr, y_val = y.iloc[train_idx], y.iloc[val_idx]

        # Random forest
        model_rfr = RandomForestRegressor(**best_rfr_params)
        model_rfr.fit(X_tr, y_tr)
        y_pred_rfr = model_rfr.predict(X_val)

        # XGBoost
        model_xgb = XGBRegressor(**best_xgb_params)
        model_xgb.fit(X_tr, y_tr)
        y_pred_xgb = model_xgb.predict(X_val)

        # LightGBM
        model_lgb = LGBMRegressor(**best_lgb_params, verbose=-1, verbosity=-1)
        model_lgb.fit(X_tr, y_tr)
        y_pred_lgb = model_lgb.predict(X_val)

        # CatBoost
        model_cat = CatBoostRegressor(**best_cat_params, logging_level='Silent')
        model_cat.fit(X_tr, y_tr, verbose=False)
        y_pred_cat = model_cat.predict(X_val)

        # Weighted average of the four predictions (log1p scale)
        y_pred_avg = (weights[0]*y_pred_rfr + weights[1]*y_pred_xgb
                      + weights[2]*y_pred_lgb + weights[3]*y_pred_cat)

        # RMSLE of this fold on the original Rings scale
        rmsle_fold = np.sqrt(mean_squared_log_error(np.expm1(y_val), np.expm1(y_pred_avg)))
        rmsle_scores.append(rmsle_fold)

    # Average RMSLE across all folds
    return np.mean(rmsle_scores)

# Define the Optuna study
study_w = optuna.create_study(direction='minimize')
study_w.optimize(objective_w, n_trials=50)

# Get the best weights from the study
best_weights_ensemble = study_w.best_params
weights = np.array([best_weights_ensemble['w_rfr'], best_weights_ensemble['w_xgb'],
                    best_weights_ensemble['w_lgb'], best_weights_ensemble['w_cat']])
weights /= weights.sum()

# s0..s3 are the test-set predictions computed earlier
ffs = weights[0]*s0 + weights[1]*s1 + weights[2]*s2 + weights[3]*s3
sample['Rings'] = np.expm1(ffs)
sample
```

Submitting this result did not improve the score; it was about the same as the previous submission.
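The objective above refits all four models on every fold in every trial. That is 50 trials × 5 folds × 4 models = 1,000 fits, which explains the long runtime. A common alternative is to compute each model's out-of-fold predictions once and let the weight search only re-blend them. This is not what this post ran; it is a sketch under stated assumptions. The data are synthetic, `weighted_rmsle` is a hypothetical helper, and plain random search stands in for Optuna so the sketch has no extra dependencies.

```python
import numpy as np

def weighted_rmsle(weights, oof_preds_log, y_log):
    """RMSLE of a normalized weighted blend of log1p-scale predictions.

    oof_preds_log: array of shape (n_models, n_samples); y_log: log1p(Rings).
    """
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    blended = w @ oof_preds_log
    return float(np.sqrt(np.mean((y_log - blended) ** 2)))

rng = np.random.default_rng(0)
# Synthetic stand-ins for out-of-fold predictions that would be computed ONCE per model
y_log = np.log1p(rng.integers(3, 20, size=1000).astype(float))
oof = np.stack([y_log + rng.normal(scale=s, size=y_log.size) for s in (0.20, 0.12, 0.15, 0.25)])

# Plain random search over weights in [0, 1], standing in for Optuna's sampler
best_w, best_score = None, np.inf
for _ in range(500):
    cand = rng.uniform(0.0, 1.0, size=4)
    score = weighted_rmsle(cand, oof, y_log)
    if score < best_score:
        best_w, best_score = cand / cand.sum(), score
print(best_w, best_score)
```

Each evaluation is just a matrix-vector product, so thousands of trials cost far less than a single model fit.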

Summary

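- Building on Part 1, this post log-transforms the target Rings so that it is closer to a normal distribution;

- Tuning the hyperparameters with Optuna improved every model, to varying degrees;

- For the ensemble, this post takes a weighted average of the four models' predictions (the regression counterpart of weighted voting) and determines the weights in two ways. Soft voting, which averages predicted class probabilities, is another option for classification models;

- Once each model has its tuned parameters, the final models can also be trained with 5-fold cross-validation to improve generalization. I'll leave that code to you.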
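One way to do the 5-fold retraining from the last point is sketched below under stated assumptions; it is not the author's code. A model is trained on each fold, and the fold models' test predictions are averaged in log1p space before expm1 is applied. A least-squares stand-in replaces the four tuned models, the data are synthetic, and `kfold_average_predict` is a hypothetical helper.

```python
import numpy as np

def kfold_average_predict(X, y, X_test, fit, predict, n_splits=5, seed=42):
    """Fit one model per fold and average the test predictions of all fold models."""
    idx = np.random.default_rng(seed).permutation(len(y))   # shuffle once, then split
    fold_preds = []
    for val_idx in np.array_split(idx, n_splits):
        train_idx = np.setdiff1d(idx, val_idx)   # train on everything except this fold
        model = fit(X[train_idx], y[train_idx])
        fold_preds.append(predict(model, X_test))
    return np.mean(fold_preds, axis=0)

# Stand-in model: least squares with an intercept (rfr/xgb/lgb/cat would go here)
def fit_ls(X, y):
    A = np.column_stack([X, np.ones(len(X))])
    return np.linalg.lstsq(A, y, rcond=None)[0]

def predict_ls(coef, X):
    return np.column_stack([X, np.ones(len(X))]) @ coef

rng = np.random.default_rng(0)
true_w = np.array([0.5, -0.2, 0.1])
X_demo = rng.normal(size=(200, 3))
y_demo = X_demo @ true_w + 2.0 + rng.normal(scale=0.1, size=200)   # plays the log1p target
X_test = rng.normal(size=(50, 3))

pred_test = kfold_average_predict(X_demo, y_demo, X_test, fit_ls, predict_ls)
rings_pred = np.expm1(pred_test)   # back to the Rings scale, as for the submission
```

The held-out fold is unused here apart from being excluded from training. In practice you could also score each fold model on it to collect out-of-fold predictions.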

Finally, I hope this post helps you get an even better score on your own submissions.

Addendum

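After retraining with 5-fold cross-validation and resubmitting, the score did improve, to 0.14647. However, more people had submitted by then, so my rank actually dropped.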