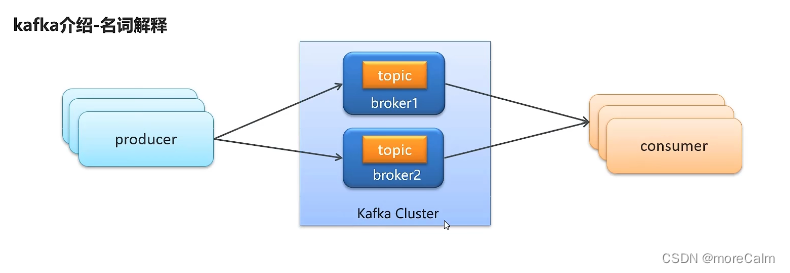

producer:发布消息的对象,称为消息产生者 (Kafka topic producer)

topic:Kafka将消息分门别类,每一个消息称为一个主题(topic)

consumer:订阅消息并处理发布消息的对象称为消费者(consumer)

broker:已发布的消息保存在一组服务器中,称为kafka集群,集群中的每一个服务器都是一个代理(broker),消费者(consumer)可以订阅一个或者多个主题(topic),并从broker中拉取数据,从而消费这些已发布的信息。

1、Kafka对zookeeper是一个强依赖,保存Kafka相关的节点数据,所以安装kafka之前要先安装zookeeper

下载镜像

docker pull zookeeper:3.4.14创建容器

docker run -d --name zookeeper -p 2181:2181 zookeeper:3.4.14下载镜像

docker pull wurstmeister/kafka:2.12-2.3.1创建容器

docker run -d --name kafka \

--env KAFKA_ADVERTISED_HOST_NAME=192.168.200.130 \

--env KAFKA_ZOOKEEPER_CONNECT=192.168.200.130:2181 \

--env KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://192.168.200.130:9092 \

--env KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092 \

--env KAFKA_HEAP_OPTS="-Xmx256M -Xms256M" \

--net=host wurstmeister/kafka:2.12-2.3.12、入门案例

①创建kafka-demo工程并引入依赖

<!--kafka--><dependency><groupId>org.apache.kafka</groupId><artifactId>kafka-clients</artifactId></dependency>②创建ProducerQuickStart生产者类并实现

package com.heima.kafkademo.sample;import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.ProducerConfig;

import org.apache.kafka.clients.producer.ProducerRecord;import java.util.Properties;/*生产者*/

public class ProducerQuickStart {public static void main(String[] args) {/*1、kafka配置信息*/Properties properties = new Properties();//kafka连接地址properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,"192.168.200.130:9092");//发送失败,失败的重试次数properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,5);//key和value的序列化器properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");/*2、生产对象*/KafkaProducer<String,String> producer = new KafkaProducer<String, String>(properties);//封装发送消息的对象ProducerRecord<String,String> record = new ProducerRecord<String, String>("itheima-topic","100001","hello kafka");/*3、发送消息*/producer.send(record);/*4、关闭通道,负责消息发送不成功*/producer.close();}

}

③创建ConsumerQuickStart消费者类并实现

package com.heima.kafkademo.sample;import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.apache.kafka.clients.consumer.ConsumerRecords;

import org.apache.kafka.clients.consumer.KafkaConsumer;

import org.apache.kafka.clients.producer.ProducerConfig;import java.time.Duration;

import java.util.Collections;

import java.util.Properties;/*消费者*/

public class ConsumerQuickStart {public static void main(String[] args) {/*1、kafka配置信息*/Properties properties = new Properties();//kafka连接地址properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,"192.168.200.130:9092");//发送失败,失败的重试次数properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,5);//key和value的序列化器properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");/*2、消费者对象*/KafkaConsumer<String, String> consumer = new KafkaConsumer<String, String>(properties);/*3、订阅主题*/consumer.subscribe(Collections.singletonList("itheima-topic"));//当前线程处于一直监听状态while (true){//4、获取消息ConsumerRecords<String,String> consumerRecords =consumer.poll(Duration.ofMillis(1000));for (ConsumerRecord<String, String> record : consumerRecords) {System.out.println(record.key());System.out.println(record.value());}}}

}

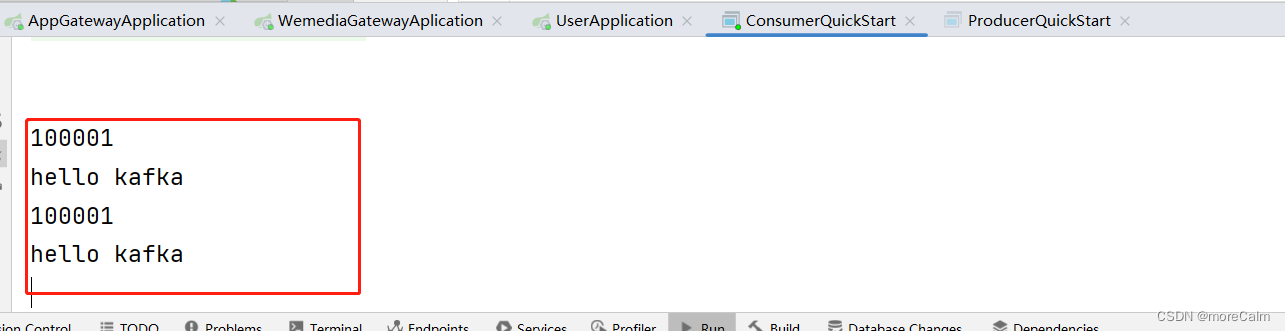

④运行测试

成功接收到消息

总结

-

生产者发送消息,多个消费者订阅同一个主题,只能有一个消费者收到消息(一对一)

-

生产者发送消息,多个消费者订阅同一个主题,所有消费者都能收到消息(一对多)

下一篇: springboot集成kafka收发消息