Contents

- io_uring asynchronous I/O
- Using io_uring
- Comparison with epoll
- Where does io_uring's advantage come from?

io_uring asynchronous I/O

Requires Linux kernel 5.10 or later.

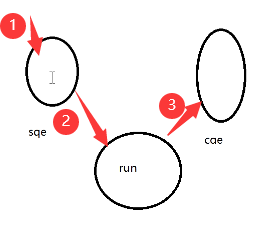

The four parts of any asynchronous interface: 1. init (create), 2. commit, 3. callback, 4. destroy.
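The four steps can be illustrated with a toy request queue in plain C. This is only a sketch of the pattern, not liburing: `async_ctx`, `async_init`, `async_commit`, `async_run_callbacks`, `async_destroy` and `sum_cb` are hypothetical names, and the "I/O" is simulated by doubling the input. In the liburing server later in these notes, the same roles are played roughly by `io_uring_queue_init_params` (init), `io_uring_submit` (commit), the loop over completion entries (callback) and `io_uring_queue_exit` (destroy).

```c
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical names throughout: this is not the liburing API. */
typedef void (*async_cb)(int result, void *arg);

struct async_req {
    int input;      /* request payload */
    async_cb cb;    /* invoked when the request completes */
    void *arg;      /* opaque pointer handed back to the callback */
};

struct async_ctx {
    struct async_req *pending;  /* committed but not yet completed */
    size_t capacity;
    size_t count;
};

/* 1. init (create): allocate the context and its request queue. */
struct async_ctx *async_init(size_t capacity) {
    struct async_ctx *ctx = malloc(sizeof(*ctx));
    if (!ctx) return NULL;
    ctx->pending = calloc(capacity, sizeof(*ctx->pending));
    if (!ctx->pending) { free(ctx); return NULL; }
    ctx->capacity = capacity;
    ctx->count = 0;
    return ctx;
}

/* 2. commit: queue a request and return at once, without doing the work. */
int async_commit(struct async_ctx *ctx, int input, async_cb cb, void *arg) {
    if (ctx->count == ctx->capacity) return -1;  /* queue full */
    ctx->pending[ctx->count++] = (struct async_req){ input, cb, arg };
    return 0;
}

/* 3. callback: complete every pending request and report each result. */
size_t async_run_callbacks(struct async_ctx *ctx) {
    size_t done = ctx->count;
    for (size_t i = 0; i < done; i++) {
        struct async_req *r = &ctx->pending[i];
        r->cb(r->input * 2, r->arg);  /* stand-in for real I/O */
    }
    ctx->count = 0;
    return done;
}

/* 4. destroy: release everything init allocated. */
void async_destroy(struct async_ctx *ctx) {
    free(ctx->pending);
    free(ctx);
}

/* Example callback: add each result to an int accumulator. */
void sum_cb(int result, void *arg) {
    *(int *)arg += result;
}
```

The point of the split is that `async_commit` never blocks: the caller learns the result only when the callback step runs.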

fio: measures IOPS, the number of disk read/write operations per second.

| Method | Disk IOPS |
|---|---|
| io_uring | 11.6k |
| libaio | 10.7k |
| psync | 5k |
| spdk (memory operations; keeps as much as possible in user space) | 157k |

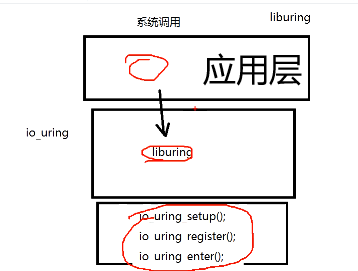

Using io_uring

io_uring kernel API:

io_uring_setup();

io_uring_register();

io_uring_enter();

liburing interface:

- io_uring_queue_init_params
- io_uring_prep_accept
- io_uring_prep_recv
- io_uring_prep_send
- io_uring_submit
- io_uring_wait_cqe
- io_uring_peek_batch_cqe
- io_uring_cq_advance // marks processed completion events as consumed

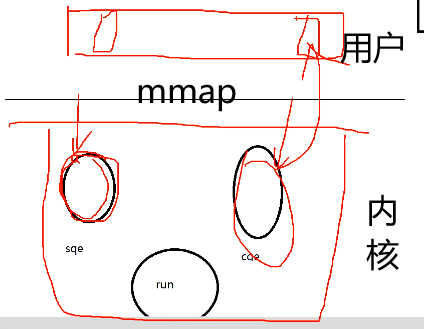

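A guess at how it works:

io_uring_setup();

does two things:

1) It sets up two queues: the submission queue (SQ) and the completion queue (CQ).

2) The kernel's queues are mapped into user space with mmap, so both sides work on the same memory.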
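The effect of a shared mapping can be shown without io_uring. The sketch below is only an analogy: a parent and child process stand in for user space and the kernel, and the anonymous `MAP_SHARED` page stands in for the ring memory. `shared_roundtrip` is a hypothetical name. With a real ring, the mapping is made on the file descriptor returned by `io_uring_setup`.

```c
#define _DEFAULT_SOURCE
#include <sys/mman.h>
#include <sys/wait.h>
#include <unistd.h>

/* The child writes `value` into a shared page; the parent reads it back.
 * Returns the value the parent sees, or -1 on error. */
int shared_roundtrip(int value) {
    int *shared = mmap(NULL, sizeof(int), PROT_READ | PROT_WRITE,
                       MAP_SHARED | MAP_ANONYMOUS, -1, 0);
    if (shared == MAP_FAILED) return -1;
    *shared = 0;

    pid_t pid = fork();
    if (pid < 0) {
        munmap(shared, sizeof(int));
        return -1;
    }
    if (pid == 0) {
        *shared = value;  /* a plain store, no write() or pipe involved */
        _exit(0);
    }

    waitpid(pid, NULL, 0);  /* wait for the child so its store is complete */
    int seen = *shared;
    munmap(shared, sizeof(int));
    return seen;
}
```

Data moves between the two sides with an ordinary memory store and load, which is why no copy between kernel and user buffers is needed for the queue entries themselves.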

echo_websever:

    // io_uring, tcp server
    // multithread, select/poll, epoll, coroutine, io_uring
    // reactor
    // io

    #include <liburing.h>
    #include <stdio.h>
    #include <string.h>
    #include <sys/socket.h>
    #include <netinet/in.h>
    #include <unistd.h>

    #define ENTRIES_LENGTH 4096

    enum {
        READ,
        WRITE,
        ACCEPT,
    };

    // Copied into the 64-bit sqe->user_data field, so it must not exceed 8 bytes.
    struct conninfo {
        int connfd;
        int type;
    };

    void set_read_event(struct io_uring *ring, int fd, void *buf, size_t len, int flags) {
        struct io_uring_sqe *sqe = io_uring_get_sqe(ring);
        io_uring_prep_recv(sqe, fd, buf, len, flags);

        struct conninfo ci = {.connfd = fd, .type = READ};
        memcpy(&sqe->user_data, &ci, sizeof(struct conninfo));
    }

    void set_write_event(struct io_uring *ring, int fd, const void *buf, size_t len, int flags) {
        struct io_uring_sqe *sqe = io_uring_get_sqe(ring);
        io_uring_prep_send(sqe, fd, buf, len, flags);

        struct conninfo ci = {.connfd = fd, .type = WRITE};
        memcpy(&sqe->user_data, &ci, sizeof(struct conninfo));
    }

    void set_accept_event(struct io_uring *ring, int fd,
                          struct sockaddr *cliaddr, socklen_t *clilen, unsigned flags) {
        struct io_uring_sqe *sqe = io_uring_get_sqe(ring);
        io_uring_prep_accept(sqe, fd, cliaddr, clilen, flags);

        struct conninfo ci = {.connfd = fd, .type = ACCEPT};
        memcpy(&sqe->user_data, &ci, sizeof(struct conninfo));
    }

    int main() {
        int listenfd = socket(AF_INET, SOCK_STREAM, 0);
        if (listenfd == -1) return -1;

        // listenfd
        struct sockaddr_in servaddr, clientaddr;
        memset(&servaddr, 0, sizeof(servaddr));
        servaddr.sin_family = AF_INET;
        servaddr.sin_addr.s_addr = htonl(INADDR_ANY);
        servaddr.sin_port = htons(9999);

        if (-1 == bind(listenfd, (struct sockaddr*)&servaddr, sizeof(servaddr))) {
            return -2;
        }
        listen(listenfd, 10);

        struct io_uring_params params;
        memset(&params, 0, sizeof(params));

        // The ring takes the place of the epoll instance.
        struct io_uring ring;
        if (io_uring_queue_init_params(ENTRIES_LENGTH, &ring, &params) < 0) {
            return -3;
        }

        socklen_t clilen = sizeof(clientaddr);
        set_accept_event(&ring, listenfd, (struct sockaddr*)&clientaddr, &clilen, 0);

        // One buffer shared by every connection: enough for a demo, not for concurrent clients.
        char buffer[1024] = {0};

        while (1) {
            // Will point at a completion entry; its user_data was copied from the matching sqe.
            struct io_uring_cqe *cqe;

            // Submit: the queued accept, recv and send requests are handed to the kernel here.
            io_uring_submit(&ring);

            // Callback stage: block until at least one completion is available.
            int ret = io_uring_wait_cqe(&ring, &cqe);
            if (ret < 0) continue;

            struct io_uring_cqe *cqes[10];
            // At most 10 completion entries are returned per call.
            int cqecount = io_uring_peek_batch_cqe(&ring, cqes, 10);

            int i = 0;
            unsigned count = 0;
            for (i = 0; i < cqecount; i++) {
                cqe = cqes[i];
                count++;

                struct conninfo ci;
                memcpy(&ci, &cqe->user_data, sizeof(ci));

                if (ci.type == ACCEPT) {
                    int connfd = cqe->res;
                    if (connfd >= 0) {
                        set_read_event(&ring, connfd, buffer, 1024, 0);
                    }
                    // Re-arm the accept request; otherwise only the first client is ever accepted.
                    clilen = sizeof(clientaddr);
                    set_accept_event(&ring, ci.connfd, (struct sockaddr*)&clientaddr, &clilen, 0);
                } else if (ci.type == READ) {
                    int bytes_read = cqe->res;
                    if (bytes_read <= 0) {
                        // 0: the peer closed the connection; < 0: recv failed.
                        close(ci.connfd);
                    } else {
                        // The buffer is not NUL-terminated, so print exactly bytes_read bytes.
                        printf("buffer : %.*s\n", bytes_read, buffer);
                        set_write_event(&ring, ci.connfd, buffer, bytes_read, 0);
                    }
                } else if (ci.type == WRITE) {
                    set_read_event(&ring, ci.connfd, buffer, 1024, 0);
                }
            }

            // Mark the processed entries as consumed so the kernel can reuse their slots.
            io_uring_cq_advance(&ring, count);
        }
    }

Comparison with epoll

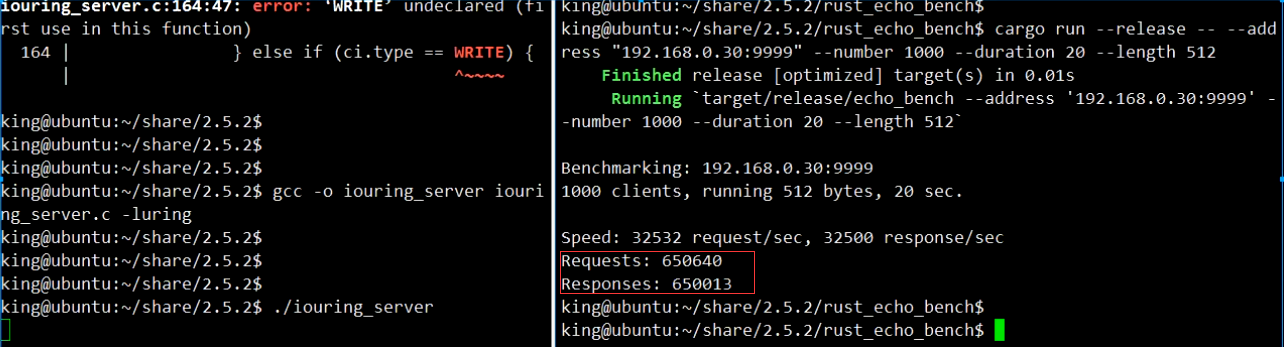

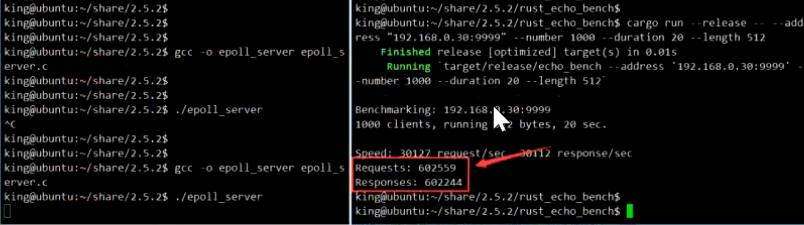

Both tests use echo_websever:

epoll: 600k

io_uring: 650k

Where does io_uring's advantage come from?

epoll puts the I/O (the monitored file descriptors) into a red-black tree inside the kernel.
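For contrast, here is a minimal sketch of one echo round trip with epoll. `epoll_echo_once` is a hypothetical helper written for this comparison, not code from the benchmark. Each numbered comment marks a separate system call: registering the descriptor, waiting for readiness, then doing the read and the write.

```c
#include <sys/epoll.h>
#include <sys/socket.h>
#include <unistd.h>

/* Echo one message on `fd` using epoll.
 * Returns the number of bytes echoed, or -1 on error or timeout. */
ssize_t epoll_echo_once(int fd) {
    int epfd = epoll_create1(0);
    if (epfd < 0) return -1;

    struct epoll_event ev = { .events = EPOLLIN, .data.fd = fd };
    /* syscall 1: add fd to the kernel's interest set */
    if (epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &ev) < 0) {
        close(epfd);
        return -1;
    }

    struct epoll_event ready;
    ssize_t echoed = -1;
    /* syscall 2: wait up to one second for fd to become readable */
    if (epoll_wait(epfd, &ready, 1, 1000) == 1 && (ready.events & EPOLLIN)) {
        char buf[1024];
        /* syscall 3: epoll only reports readiness, the data still has to be read */
        ssize_t n = recv(ready.data.fd, buf, sizeof(buf), 0);
        if (n > 0) {
            /* syscall 4: write the data back */
            echoed = send(ready.data.fd, buf, (size_t)n, 0);
        }
    }

    close(epfd);
    return echoed;
}
```

In the io_uring server, the recv and send requests are instead queued as entries and handed over together by one `io_uring_submit` call.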

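io_uring uses shared memory: the queue storage is shared between the kernel and user space, and submitting or completing a request only modifies variables (the ring indices) in that memory.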
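The "only modifies variables" idea can be sketched as a single-producer, single-consumer ring in plain C. This is a simplified model under stated assumptions, not the kernel's actual layout: `toy_ring`, `toy_ring_push` and `toy_ring_pop` are hypothetical names, and the ring lives in ordinary process memory rather than in a mapping shared with the kernel.

```c
#include <stdatomic.h>
#include <stdint.h>

#define RING_ENTRIES 8u  /* power of two, so masking replaces modulo */

struct toy_ring {
    _Atomic uint32_t head;           /* advanced only by the consumer */
    _Atomic uint32_t tail;           /* advanced only by the producer */
    uint64_t entries[RING_ENTRIES];  /* the shared storage */
};

/* Producer: store the entry, then publish it by bumping tail. */
int toy_ring_push(struct toy_ring *r, uint64_t value) {
    uint32_t tail = atomic_load_explicit(&r->tail, memory_order_relaxed);
    uint32_t head = atomic_load_explicit(&r->head, memory_order_acquire);
    if (tail - head == RING_ENTRIES) return -1;  /* ring is full */
    r->entries[tail & (RING_ENTRIES - 1)] = value;
    atomic_store_explicit(&r->tail, tail + 1, memory_order_release);
    return 0;
}

/* Consumer: read the oldest entry, then release its slot by bumping head. */
int toy_ring_pop(struct toy_ring *r, uint64_t *out) {
    uint32_t head = atomic_load_explicit(&r->head, memory_order_relaxed);
    uint32_t tail = atomic_load_explicit(&r->tail, memory_order_acquire);
    if (head == tail) return -1;  /* ring is empty */
    *out = r->entries[head & (RING_ENTRIES - 1)];
    atomic_store_explicit(&r->head, head + 1, memory_order_release);
    return 0;
}
```

Each side writes only its own index, so a request changes hands through two memory stores instead of a data copy. In the server above, `io_uring_cq_advance` plays the role of the head update in `toy_ring_pop`.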