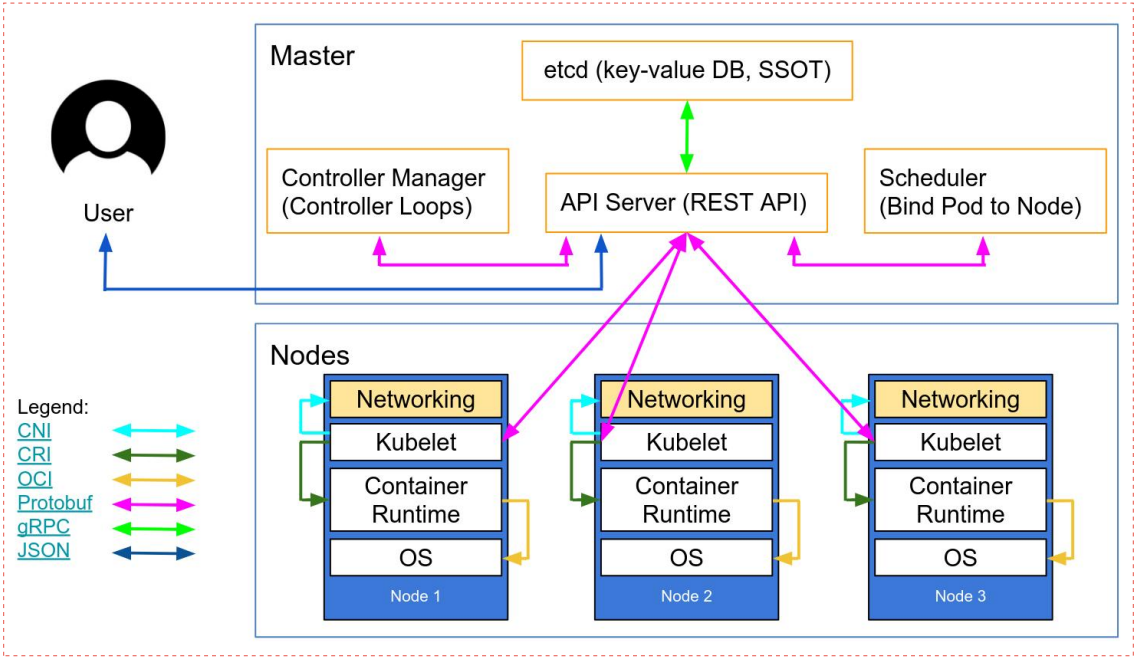

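An overview of the core components on Kubernetes master and worker nodes

Main components on the master node

The master node is the control node of a k8s cluster. Three components are indispensable on it: apiserver, scheduler, and controllermanager. etcd is the cluster's database and a critical component in its own right. It usually runs as a separate cluster and stores the configuration and state information of every k8s component.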

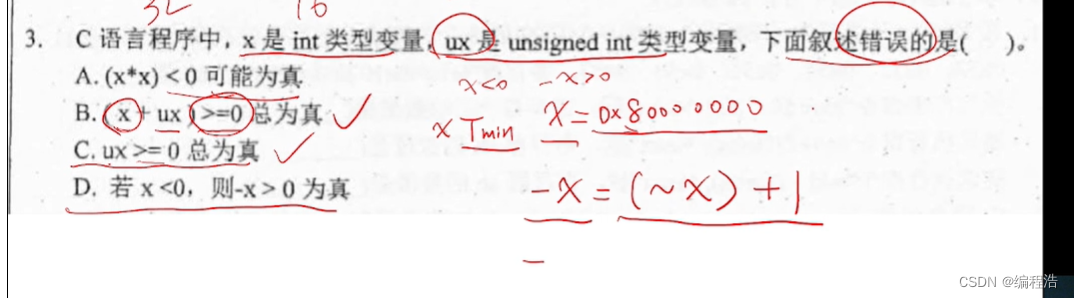

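apiserver: the apiserver accepts incoming requests, authenticates the caller, and applies admission control to submitted resource requests. It is the single entry point for managing a k8s cluster and the only component that reads and writes etcd directly. The other components keep long-lived TCP sessions open to the apiserver and watch the resources they care about. When a relevant resource changes, the component fetches the corresponding configuration from etcd through the apiserver, acts on it, and then writes the resulting state back to etcd through the apiserver.

Authentication and admission work as follows. A user sends a cluster management request to the apiserver over HTTPS. The apiserver first verifies the user's identity, mainly from the client certificate the user presents: if the certificate was issued by a CA the cluster trusts, the apiserver treats the user as legitimate and then checks that the user is authorized to perform the action. Next comes admission control, which checks the submitted resource against the cluster's admission rules; the request is also validated against the format and syntax defined for the corresponding API, and a request that fails these checks is rejected. Only a request that passes authentication and admission is stored in etcd, or served from etcd, through the apiserver. The other components then discover the related events through the watch mechanism and carry out the user's request.

In short, the apiserver accepts requests (mainly the administrator's management requests and the connections from the other components), authenticates the caller, and applies admission control. No other component may read or write etcd directly; the apiserver proxies their requests to etcd.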

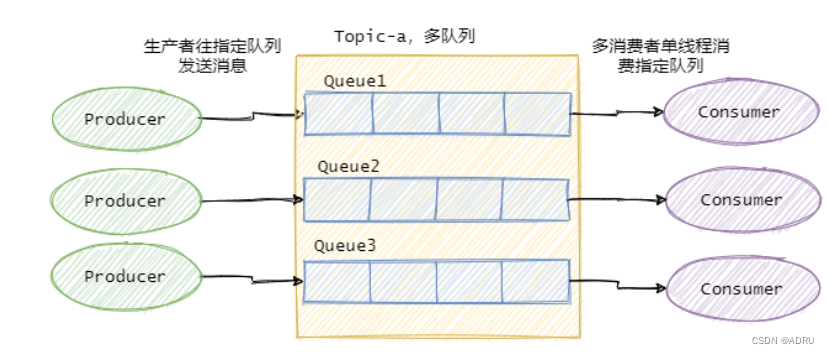

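scheduler: for each Pod in the list of Pods waiting to be scheduled, the scheduler picks the most suitable Node from the list of available Nodes and records the decision in etcd through the apiserver. The kubelet on the node sees the Pod binding produced by the Kubernetes scheduler by watching the apiserver, then fetches the Pod manifest, pulls the image, and starts the containers.

Scheduling goes through two phases. The first is filtering (predicates). It checks whether the volumes the Pod needs conflict with volumes already on the node, whether the candidate node has enough resources for the Pod, whether the node carries the labels named in the Pod's node selector, and whether node affinity, taints, and tolerations allow the Pod to run there. Nodes that cannot run the Pod are dropped, and the rest move on to the second phase, scoring (priorities). Scoring picks the single best node among those that survived filtering. Typical policies are: prefer the node with the lowest resource consumption (the score is computed from CPU and memory, so the less a node consumes, the higher its score, and the Pod goes to the highest-scoring node); prefer nodes that carry a specified label; prefer the node whose resource usage is most balanced; and match the Pod's tolerationList against the node's taints.

In short, the scheduler finds a suitable node for each Pod through the filtering and scoring phases, and then writes the scheduling result to etcd.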
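The two phases can be pictured with a toy model. The sketch below is not the real kube-scheduler: the node table, its column layout, and the `pick_node` function are all hypothetical, and the score is a simplified "least requested" formula that averages the CPU and memory left free after placement.

```shell
# Toy model of the scheduler's two phases; not the real kube-scheduler code.
# Columns (all hypothetical): name, free CPU (millicores), free memory (MiB),
# total CPU, total memory, tainted flag (1 = has a taint the Pod does not tolerate).
nodes='node01 1500 2048 4000 8192 0
node02 3000 6144 4000 8192 0
node03 3500 7168 4000 8192 1
node04 200 512 4000 8192 0'

pick_node() {
  # $1 = requested CPU (millicores), $2 = requested memory (MiB)
  printf '%s\n' "$nodes" | awk -v cpu="$1" -v mem="$2" '
    # Filtering: drop nodes that are tainted or lack free resources.
    $6 == 1 || $2 < cpu || $3 < mem { next }
    # Scoring: the more capacity left after placement, the higher the score.
    {
      score = (($2 - cpu) / $4 + ($3 - mem) / $5) / 2 * 100
      if (best == "" || score > bestscore) { best = $1; bestscore = score }
    }
    # Print the winner, or fail when no node survived filtering.
    END { if (best != "") print best; else exit 1 }'
}

pick_node 500 1024   # prints node02
```

Here node03 is filtered out by its taint and node04 by its lack of free CPU; node02 then outscores node01 because more of its capacity stays free.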

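controllermanager: the controller manager runs the cluster's controllers, such as the replication controller, node controller, namespace controller, and service account controller. As the management and control center inside the cluster, it is responsible for Nodes, Pod replicas, service endpoints (Endpoint), namespaces (Namespace), service accounts (ServiceAccount), and resource quotas (ResourceQuota). When a Node goes down unexpectedly, the controller manager notices and runs an automated repair flow so that the Pod replicas in the cluster stay in the expected state.

The controller manager checks node status every 5 seconds. If it receives no heartbeat from a node for 40 seconds, it marks the node as unreachable. If the node has still not recovered 5 minutes after being marked unreachable, the controller manager deletes all Pods on that node and recreates them on other available nodes.

In short, the controller manager keeps the cluster's Pod replicas in the state the user expects. When a Pod drifts from that state, the controller manager calls the relevant controller, which restarts or recreates the Pod until it matches the expected state again.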
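The timing can be worked through with a little arithmetic. This is only a sketch of the timeline described above, using the default values quoted in the text; the variable names and the `node_phase` function are hypothetical, and a real cluster may be tuned differently.

```shell
# Defaults quoted in the text, in seconds.
GRACE_PERIOD=40       # silence tolerated before the node is marked unreachable
EVICTION_TIMEOUT=300  # further wait before Pods are deleted and rebuilt elsewhere

node_phase() {
  # $1 = seconds since the node's last heartbeat
  if [ "$1" -lt "$GRACE_PERIOD" ]; then
    echo "ready"
  elif [ "$1" -lt $((GRACE_PERIOD + EVICTION_TIMEOUT)) ]; then
    echo "unreachable"
  else
    echo "evict-pods"
  fi
}

for t in 10 60 340; do
  echo "${t}s since last heartbeat: $(node_phase "$t")"
done
```

With these defaults, a failed node's Pods start being rebuilt elsewhere roughly 340 seconds after its last heartbeat.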

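Main components on the node

kube-proxy: kube-proxy is the k8s network proxy and runs on every node. It reflects the Services defined in the Kubernetes API on that node and can do simple TCP, UDP, and SCTP stream forwarding, or round-robin TCP, UDP, and SCTP forwarding, across a set of backends. A Service has to be created through the apiserver API before the proxy has anything to configure. Put simply, kube-proxy implements access to Kubernetes Services by maintaining network rules on the host and performing connection forwarding: it watches the apiserver for changes to Service objects and manages iptables or IPVS rules to forward the traffic.

IPVS is generally more efficient than iptables. IPVS mode requires the ipvsadm and ipset packages and the ip_vs kernel module on every node that runs kube-proxy. When kube-proxy starts in IPVS mode, it checks whether the IPVS modules are available on the node and falls back to iptables mode if they are not. In IPVS mode, kube-proxy watches Kubernetes Service and Endpoints objects, calls the host kernel's Netlink interface to create the matching IPVS rules, and periodically syncs those rules with the Service and Endpoints objects so that the IPVS state matches the desired state. When a Service is accessed, traffic is redirected to one of the backend Pods. IPVS uses hash tables as its underlying data structure and works in kernel space, so it redirects traffic faster and performs better when syncing proxy rules. It also offers more load-balancing algorithms, for example rr (round robin), lc (least connections), dh (destination hashing), sh (source hashing), sed (shortest expected delay), and nq (never queue). IPVS mode has been generally available since Kubernetes v1.11, but iptables remains the default proxy mode on Linux, so IPVS has to be enabled explicitly; its default scheduling algorithm is rr.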
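The fallback rule described above can be sketched in a few lines. This is an illustration, not kube-proxy's own logic: `choose_proxy_mode` is a hypothetical helper, and looking for `ip_vs` in `/proc/modules` is a simplification (a module compiled into the kernel would not be listed there).

```shell
# choose_proxy_mode REQUESTED_MODE [MODULES_FILE]
# Prints the mode that would effectively be used under the fallback rule.
choose_proxy_mode() {
  requested="$1"
  modules_file="${2:-/proc/modules}"   # overridable so the check can be tried on a sample file
  if [ "$requested" = "ipvs" ] && ! grep -q '^ip_vs ' "$modules_file" 2>/dev/null; then
    echo "iptables"                    # IPVS requested but the module is missing: fall back
  else
    echo "$requested"
  fi
}

choose_proxy_mode ipvs
```

On a node where `modprobe ip_vs` has not been run, the call prints `iptables`; once the module is loaded it prints `ipvs`.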

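kubelet: the kubelet is the agent that runs on every worker node and watches the Pods assigned to that node. Its main jobs are to report the node's status to the master, to accept instructions and create the Pod's containers (docker containers in the original wording; containerd is the runtime in the deployment below), to prepare the data volumes a Pod needs, to report the Pod's running status, and to run container health checks on the node.

Client tools

1. The kubectl command-line tool: kubectl is a client that manages a k8s cluster from the command line. By default it looks for a file named config in the .kube directory under the user's home directory; this file holds the credentials used to connect to the k8s cluster. You can also point the tool at a different kubeconfig file by setting the KUBECONFIG environment variable or passing the --kubeconfig option.

2. Dashboard: a web-based k8s client. The web page shows an overview of the applications in the cluster and can create or modify k8s resources, scale a deployment, start a rolling upgrade, delete pods, or create a new application with a wizard.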
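The kubectl lookup order mentioned in item 1 can be written out as a small function. It is only a sketch of the precedence (flag first, then environment variable, then the default path); `resolve_kubeconfig` is a hypothetical helper, and the real tool can additionally merge several files when KUBECONFIG holds a list of paths.

```shell
resolve_kubeconfig() {
  # $1 = value that would be given to --kubeconfig, if any
  if [ -n "${1:-}" ]; then
    echo "$1"                       # the command-line option wins
  elif [ -n "${KUBECONFIG:-}" ]; then
    echo "$KUBECONFIG"              # otherwise the environment variable
  else
    echo "$HOME/.kube/config"       # otherwise the default location
  fi
}

resolve_kubeconfig
```

Calling it with no argument shows which file kubectl would pick up in the current shell.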

Deploying K8S on Ubuntu 22.04 with containerd as the container engine

1. System tuning

Add the kernel parameters
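The original parameter list is not reproduced here, so the following is only a minimal sketch. It covers the two bridge settings named in this section plus IP forwarding, which is an assumption on my part; `WORKDIR` and the file name are hypothetical, and the file is staged in a scratch directory rather than written to the system.

```shell
# Stage the settings in a scratch directory first.
WORKDIR="${WORKDIR:-$(mktemp -d)}"
cat > "$WORKDIR/k8s-sysctl.conf" <<'EOF'
# Let iptables/ip6tables see bridged traffic (needs the br_netfilter module)
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
# Allow the node to forward packets between interfaces
net.ipv4.ip_forward = 1
EOF
cat "$WORKDIR/k8s-sysctl.conf"

# On the real host (as root) the same lines would be appended and applied with:
#   cat k8s-sysctl.conf >> /etc/sysctl.conf && sysctl -p
```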

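Apply the configuration so the kernel parameters take effect

Note: sysctl reports that the files for bridge-nf-call-ip6tables and bridge-nf-call-iptables cannot be found. The cause is that the br_netfilter kernel module is not loaded.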

Load the br_netfilter kernel module
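One way to tell whether the module is loaded is to look for the sysctl keys it creates. The check below is a sketch: `bridge_keys_present` is a hypothetical helper, and its optional argument exists only so the check can be pointed at a directory other than `/proc/sys`.

```shell
# Returns 0 when both bridge sysctl keys exist, i.e. br_netfilter is loaded.
bridge_keys_present() {
  proc_root="${1:-/proc/sys}"
  [ -e "$proc_root/net/bridge/bridge-nf-call-iptables" ] &&
    [ -e "$proc_root/net/bridge/bridge-nf-call-ip6tables" ]
}

if bridge_keys_present; then
  echo "br_netfilter is loaded"
else
  echo "br_netfilter is not loaded; as root, run: modprobe br_netfilter"
fi
```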

Run sysctl -p again and check whether bridge-nf-call-ip6tables and bridge-nf-call-iptables still report a missing file

Load the kernel modules at boot

Note: the module list takes effect after a reboot, or the modules can be loaded one by one with modprobe, for example: tail -23 /etc/modules-load.d/modules.conf |xargs -L1 modprobe
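The same idea can be sketched without touching the system. The module list below is an assumption (the original file is not reproduced here), `MODULES_FILE` stands in for /etc/modules-load.d/modules.conf, and `modprobe_commands` is a hypothetical helper that only prints the commands instead of running them.

```shell
WORKDIR="${WORKDIR:-$(mktemp -d)}"
MODULES_FILE="$WORKDIR/modules.conf"   # stand-in for /etc/modules-load.d/modules.conf
cat > "$MODULES_FILE" <<'EOF'
# modules for kube-proxy IPVS mode and container networking (assumed list)
ip_vs
ip_vs_rr
ip_vs_wrr
ip_vs_sh
nf_conntrack
br_netfilter
overlay
EOF

modprobe_commands() {
  # Print one "modprobe <name>" per non-empty, non-comment line of file $1.
  grep -Ev '^[[:space:]]*(#|$)' "$1" | while read -r mod; do
    echo "modprobe $mod"
  done
}

modprobe_commands "$MODULES_FILE"
# To load the modules for real (as root): modprobe_commands "$MODULES_FILE" | sh
```

Skipping comment lines makes this a little more forgiving than counting lines with tail, since the file can be edited without recalculating the count.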

Install the ipvs management tools and some dependency packages

Configure resource limits

Note: the resource limits take effect after the server is rebooted.

Disable the swap device
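Disabling swap usually has two parts: turning it off now and keeping it off after a reboot. The sketch below shows the second part on a scratch copy of an fstab file; `comment_out_swap` is a hypothetical helper and the two sample entries are made up for the demonstration.

```shell
comment_out_swap() {
  # $1 = path to an fstab-style file; a backup is kept as "$1.bak".
  # Prefix every active line whose fields include "swap" with "#".
  sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# Demonstration on a scratch file, not on the real /etc/fstab.
demo_fstab="$(mktemp)"
printf '%s\n' 'UUID=abc / ext4 defaults 0 1' '/swap.img none swap sw 0 0' > "$demo_fstab"
comment_out_swap "$demo_fstab"
cat "$demo_fstab"

# On the real host (as root): swapoff -a && comment_out_swap /etc/fstab
```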

2. Install containerd with an automation script

Note: the script uses several functions to describe the steps for installing docker, docker-compose, and containerd on CentOS and Ubuntu. To use it, place the required binary packages and configuration files in the same directory as the script.

Run the script to install containerd

Verify that containerd installed successfully and that nerdctl works

Run an nginx container to check that containers start normally

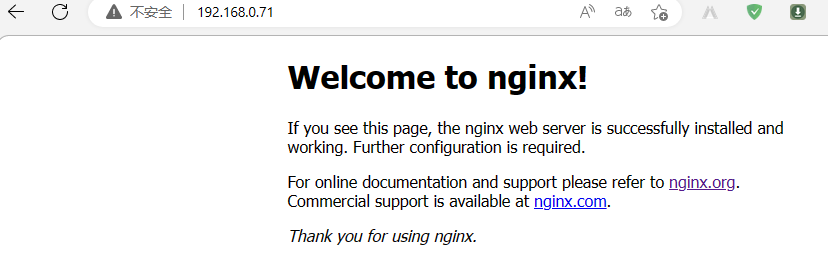

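Open port 80 on master01's IP address in a browser and check that nginx responds

Note: the nginx container we just started is reachable, which shows that the containerd environment deployed by the automation script is ready.

Delete the test container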

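3. Configure the apt source and install kubeadm, kubelet, and kubectl

Install kubeadm, kubelet, and kubectl

Note: after adding the software source, you must run apt update to refresh the package index. You can then run apt-cache madison kubeadm to list every kubeadm version in the repository. To install a specific version, pin that version in the install command; without a version, the latest one in the repository is installed.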
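Pinning a version means appending `=<revision>` to each package name. The sketch below only prints the resulting command so it can be reviewed first; `pinned_install_cmd` is a hypothetical helper, and `1.26.3-00` is an assumed revision string that should be replaced with whatever apt-cache madison kubeadm actually lists.

```shell
pinned_install_cmd() {
  # $1 = package revision exactly as printed by: apt-cache madison kubeadm
  printf 'apt-get install -y kubeadm=%s kubelet=%s kubectl=%s\n' "$1" "$1" "$1"
}

pinned_install_cmd "1.26.3-00"

# Holding the packages afterwards keeps routine upgrades from moving the cluster version:
#   apt-mark hold kubeadm kubelet kubectl
```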

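Check the kubeadm version

List the images needed to deploy k8s

Note: these are the images required to deploy k8s v1.26.3. From mainland China they can only be downloaded through a proxy. There are two workarounds: use the copies in Alibaba Cloud's image registry, or use a proxy.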

Method one: use the images in the Alibaba Cloud registry

Note: the --image-repository option specifies the registry address. Use the same option both when downloading the images and when initializing the master node.
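Switching the repository changes only the prefix of each image name. The sketch below illustrates that renaming; the mirror address in `MIRROR` is an assumption (use whichever Alibaba Cloud repository you actually pull from), and `to_mirror` is a hypothetical helper that simply keeps the final `name:tag` part.

```shell
MIRROR="${MIRROR:-registry.aliyuncs.com/google_containers}"   # assumed mirror address

to_mirror() {
  # Keep only the final "name:tag" component and prefix it with the mirror.
  echo "$MIRROR/${1##*/}"
}

to_mirror registry.k8s.io/etcd:3.5.6-0

# With kubeadm installed, the equivalent listing would be:
#   kubeadm config images list --kubernetes-version v1.26.3 --image-repository "$MIRROR"
```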

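Method two: in the CRT terminal, use export to put the proxy settings into the current shell's environment variables

Note: fill in the IP address and port of your own proxy server.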

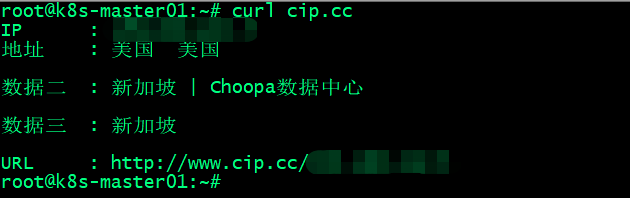

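Verify that the current terminal's outbound IP address is the proxy server's

Test: use the nerdctl command-line tool to pull the registry.k8s.io/etcd:3.5.6-0 image and check that it downloads

Note: pulling images directly from Google's registry now works. This route is somewhat slow, so a domestic mirror registry is recommended.

Download the images needed to deploy k8s, pulling from the Alibaba Cloud registry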

4. Initialize the k8s cluster

Initialize the master node
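The original command and its output are not reproduced here. As a hedged sketch, an init command for this setup might be assembled as follows; every address and CIDR is an assumption for illustration, and the snippet only prints the command so it can be reviewed before being run as root on the master.

```shell
# Every value below is an assumption; substitute your own.
API_ADDR="${API_ADDR:-192.0.2.10}"       # master01's address (documentation-range IP)
POD_CIDR="${POD_CIDR:-10.244.0.0/16}"    # must match the calico pool configured later
SVC_CIDR="${SVC_CIDR:-10.96.0.0/12}"     # Service address range
IMAGE_REPO="${IMAGE_REPO:-registry.aliyuncs.com/google_containers}"

init_cmd="kubeadm init --kubernetes-version=v1.26.3 \
--apiserver-advertise-address=$API_ADDR \
--pod-network-cidr=$POD_CIDR \
--service-cidr=$SVC_CIDR \
--image-repository=$IMAGE_REPO"

echo "$init_cmd"
```

Keeping the Pod CIDR in a variable makes it easier to reuse the same value when the network plugin is configured.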

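Note: if kubeadm init finishes by reporting that initialization succeeded, the k8s master node has been initialized.

Create the .kube directory in the user's home directory and copy the configuration file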
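This step can be sketched as a small function. `install_kubeconfig` is a hypothetical helper; on a kubeadm-built master the source file is normally /etc/kubernetes/admin.conf, and tightening the permissions with chmod is my own addition rather than something taken from the original steps.

```shell
install_kubeconfig() {
  # $1 = admin kubeconfig written by kubeadm, $2 = home directory of the user
  mkdir -p "$2/.kube"
  cp "$1" "$2/.kube/config"
  chmod 600 "$2/.kube/config"   # the file holds cluster-admin credentials
}

# Typical call on the master (root is needed to read admin.conf):
#   install_kubeconfig /etc/kubernetes/admin.conf "$HOME"
```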

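Verify that kubectl works

Note: if kubectl returns cluster information without errors, the tool is working.

Join the worker nodes

Note: if kubeadm join reports that the node has joined the cluster, the join succeeded.

Verify: on the master node, run kubectl get nodes and check that every node has joined the cluster

Note: two nodes have now joined the cluster, but their status is NotReady because no network plugin has been deployed yet.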

5. Deploy the calico network component

Download the calico deployment manifest

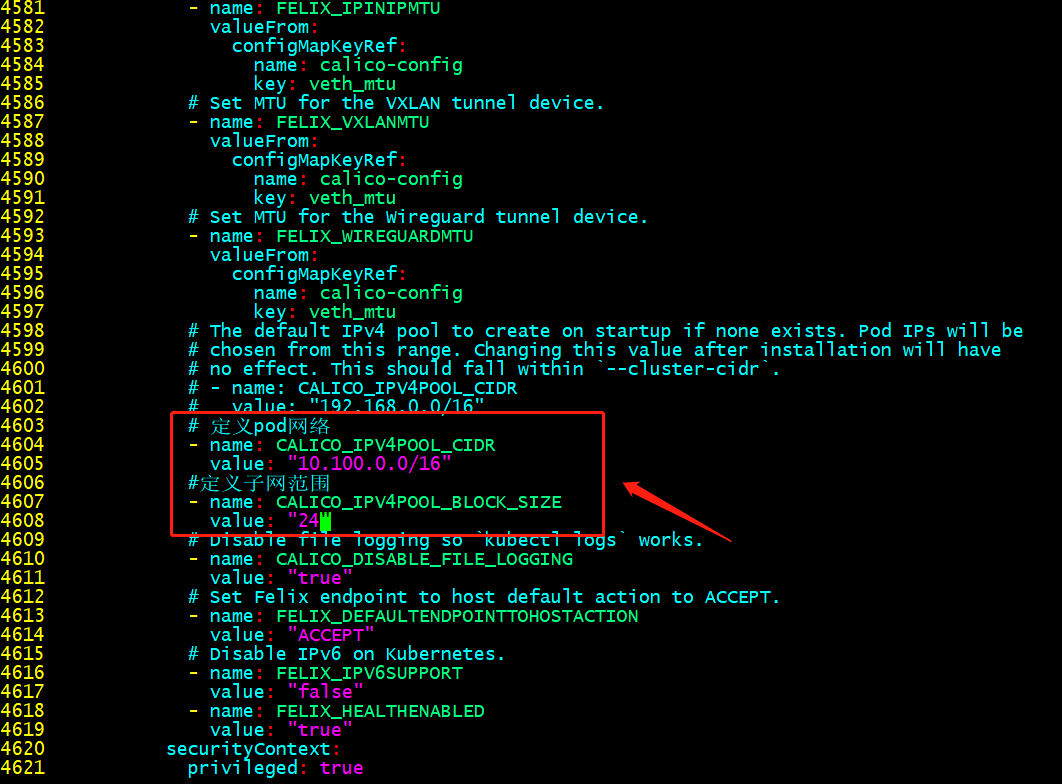

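Edit calico.yaml

Note: this setting must be identical to the pod network specified when the cluster was initialized.

Specify the network interface in calico.yaml

Note: set this to the interface name used by the servers in your own environment; mine are all ens33. Be careful with indentation whenever you edit a yaml file.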
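The pod network edit can be scripted. The sketch below assumes the stock manifest ships the `CALICO_IPV4POOL_CIDR` entry commented out in exactly the form shown in the sample lines, which you should confirm against your own copy; `set_pool_cidr` is a hypothetical helper. The interface is commonly pinned with calico-node's `IP_AUTODETECTION_METHOD` variable (for example `interface=ens33`); that edit adds new lines and is left out of this sketch.

```shell
set_pool_cidr() {
  # $1 = manifest path, $2 = Pod CIDR used at kubeadm init
  # Uncomment the two lines and replace the default pool with $2,
  # keeping the surrounding indentation intact.
  sed -i.bak -E \
    -e 's|^( *)# (- name: CALICO_IPV4POOL_CIDR)|\1\2|' \
    -e "s|^( *)#   value: \"192.168.0.0/16\"|\1  value: \"$2\"|" "$1"
}

# Demonstration on a three-line sample, not on the real manifest.
sample="$(mktemp)"
printf '%s\n' \
  '            - name: FELIX_HEALTHENABLED' \
  '            # - name: CALICO_IPV4POOL_CIDR' \
  '            #   value: "192.168.0.0/16"' > "$sample"
set_pool_cidr "$sample" 10.244.0.0/16
cat "$sample"
```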

List the images calico needs

Pull the images in advance

Apply the calico manifest on the k8s master

Verify: are the pods running normally, and are all nodes Ready?

Note: when the nodes and pods are all in the Ready state, the network plugin has been deployed. One thing to watch: if calico-kube-controllers is scheduled onto a non-master node, copy the .kube/config file from the master user's home directory to ~/.kube/config on that node. calico-kube-controllers has to connect to the k8s cluster during initialization, and without this file authentication fails and initialization does not complete.

Copy the .kube directory from the master's home directory to the node, so that calico-kube-controllers can still initialize if it is scheduled there

Note: do the same on node3.

6. Deploy the official dashboard

Download the official dashboard deployment manifest

List the images the manifest needs

Pull the required images in advance

Apply the manifest

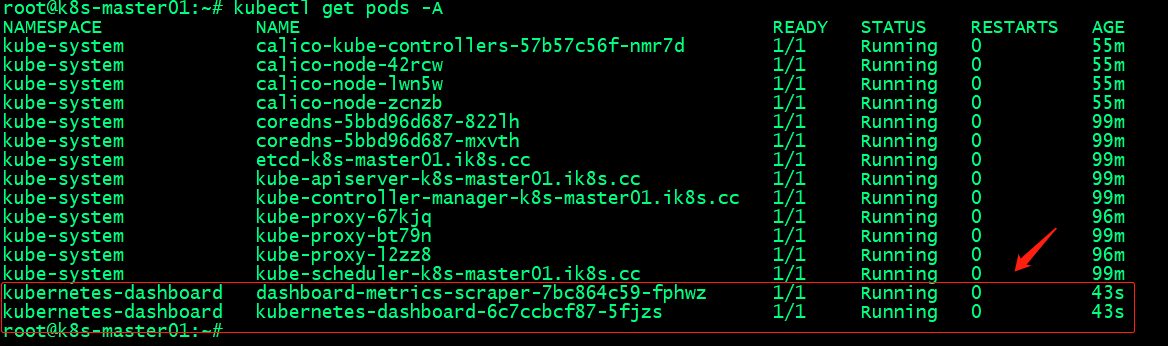

Verify that the pods are all running

Note: both pods are running normally, which means the dashboard was deployed successfully.

Create the user and secret
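The original manifest is not reproduced here. A minimal sketch that creates an `admin-user` ServiceAccount, binds it to the built-in cluster-admin role, and requests a long-lived token Secret could look like this; the `kubernetes-dashboard` namespace and the file name are assumptions, and the manifest is only written to a scratch directory.

```shell
WORKDIR="${WORKDIR:-$(mktemp -d)}"
MANIFEST="$WORKDIR/admin-user.yaml"   # hypothetical file name
cat > "$MANIFEST" <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
type: kubernetes.io/service-account-token
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
  annotations:
    kubernetes.io/service-account.name: "admin-user"
EOF
echo "manifest written to $MANIFEST"
# Apply it on the master with: kubectl apply -f "$MANIFEST"
```

Binding to cluster-admin gives the token full control of the cluster, which is convenient for a lab but too broad for shared environments.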

Apply the manifest

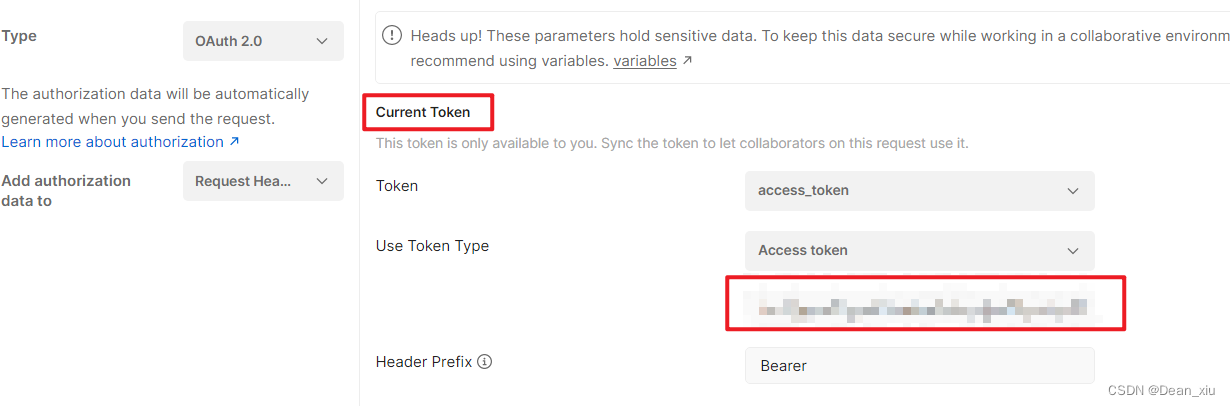

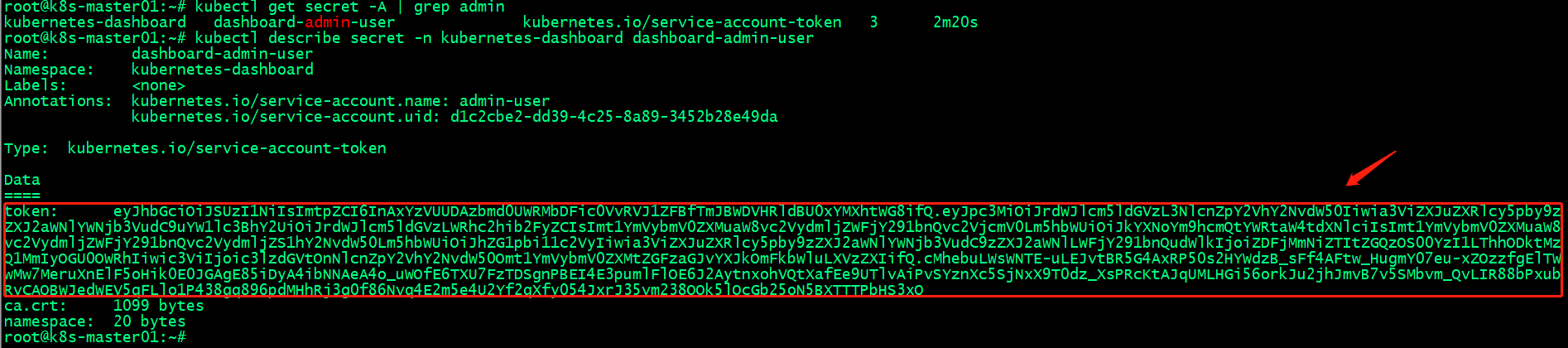

Verify: view the token of admin-user

Note: if the user and its token can be queried from the cluster, the user and the secret were created successfully.

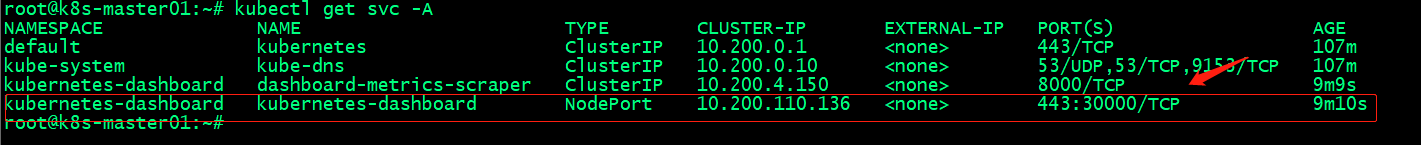

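View the dashboard service

Note: the figure above shows that the dashboard service uses NodePort to map port 30000 on the host to the dashboard's port 443, so the dashboard can be reached on port 30000 of any node in the cluster.

Verify: open port 30000 on any node in the cluster and check that the dashboard loads

Log in to the dashboard with the token

Note: being able to log in with the token and see the cluster information shows that the dashboard deployment works, and so does the k8s cluster deployed with containerd and kubeadm.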