conv1d: start with the official documentation

Now a simple example:

import torch

import numpy as np

import torch.nn as nn

data = np.arange(1, 13).reshape([1, 4, 3])

data = torch.tensor(data, dtype=torch.float)

print("[data]:\n", data)

conv = nn.Conv1d(in_channels=4, out_channels=1, kernel_size=3, stride=1, padding=0, bias=False)

print("[weight]:\n", conv.weight)

print("[element-wise multiply]:", (data*conv.weight).sum().item())

output = conv(data)

print("[output size]:", output.size())

print("[output]:", output)

"""

[data]:
tensor([[[ 1.,  2.,  3.],
         [ 4.,  5.,  6.],
         [ 7.,  8.,  9.],
         [10., 11., 12.]]])

[weight]:
Parameter containing:
tensor([[[ 0.2599, -0.1309, -0.2319],
         [-0.1974, -0.0371, -0.1319],
         [-0.2190, -0.1151,  0.0644],
         [-0.0862, -0.2313,  0.1159]]], requires_grad=True)

[element-wise multiply]: -6.354348182678223

[output size]: torch.Size([1, 1, 1])

[output]: tensor([[[-6.3543]]], grad_fn=<SqueezeBackward1>)

"""

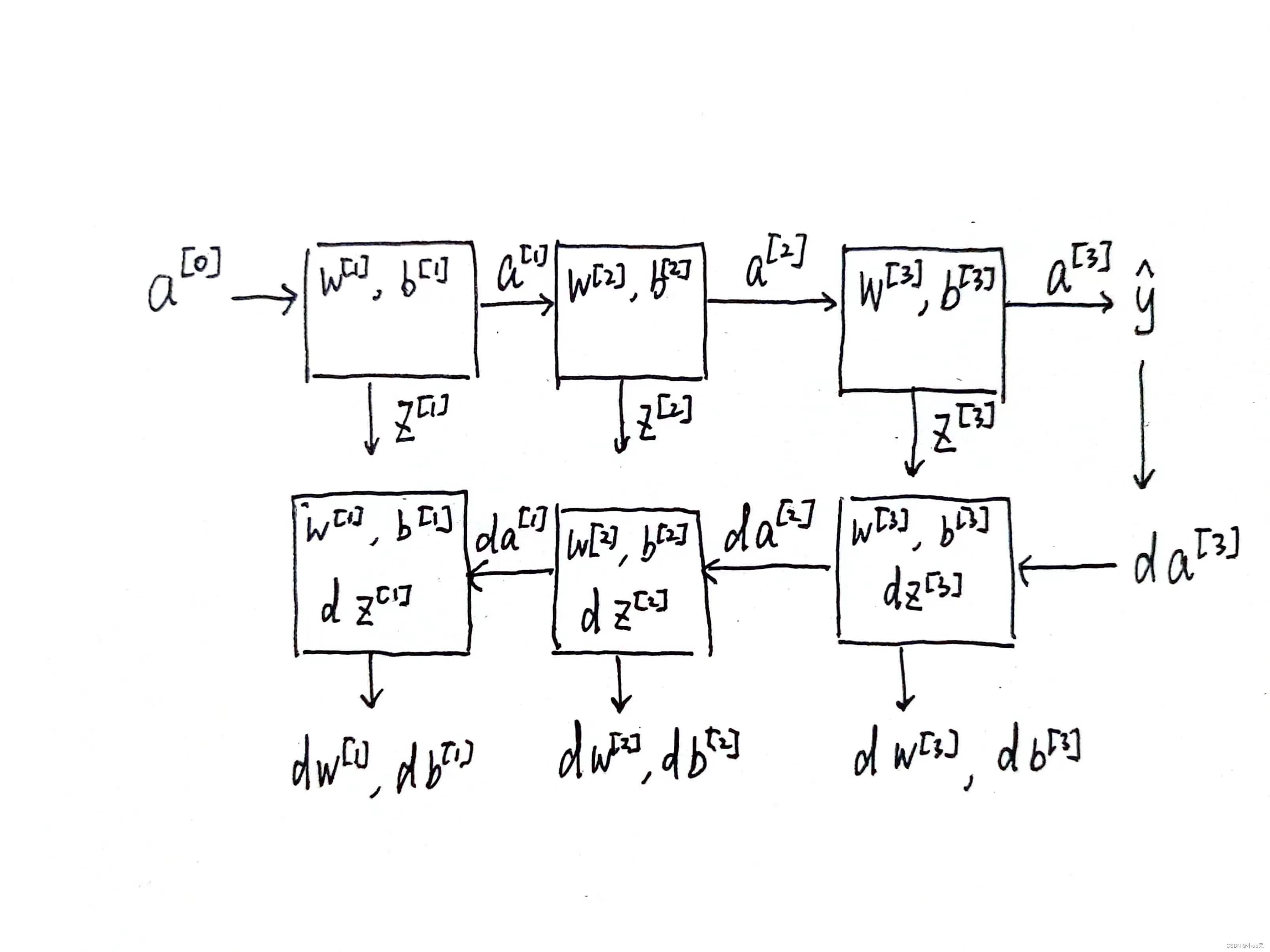

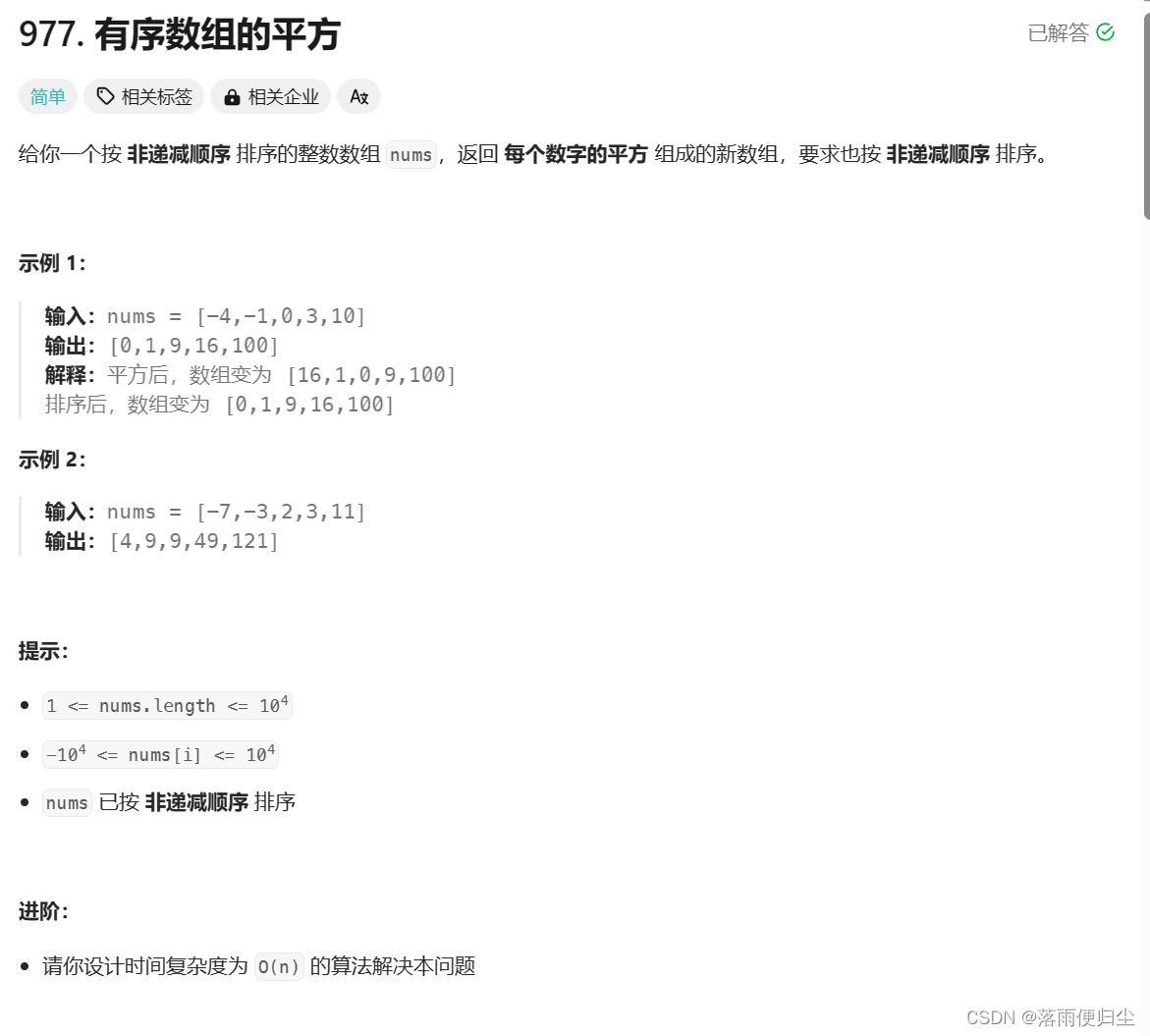
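The first example can be checked programmatically. The following is a minimal sketch, assuming a fixed random seed (the seed and the `manual` variable name are my own additions, not part of the original post). Because the kernel has the same shape as the input, there is only one output position, and it should equal the element-wise product summed over all entries.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # assumption: fixed seed so the random weights are reproducible

# Same input as in the example: 1 sample, 4 channels, length 3
data = torch.arange(1, 13, dtype=torch.float).reshape(1, 4, 3)
conv = nn.Conv1d(in_channels=4, out_channels=1, kernel_size=3, bias=False)

# The kernel has shape (out_channels, in_channels, kernel_size) = (1, 4, 3),
# so it covers the whole input and produces exactly one output value.
manual = (data * conv.weight).sum()
output = conv(data)

print(conv.weight.shape)  # torch.Size([1, 4, 3])
print(output.shape)       # torch.Size([1, 1, 1])
print(torch.allclose(output.flatten(), manual.reshape(1), atol=1e-5))
```

The comparison uses `torch.allclose` rather than `==` because the two paths may sum the products in a different order, which can change the last few floating-point digits.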

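A 2D convolution slides n 3D tensors (the kernels) over a two-dimensional plane, which is why it is called 2D. In a standard 2D convolution, the kernel's channel count $C$ must equal the input feature map's channel count $C_{in}$.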
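The shape relationship can be inspected directly on `nn.Conv2d`. This is only an illustrative sketch: the sizes (`c_in = 3`, five kernels, an 8x8 input) are hypothetical values chosen for the demonstration.

```python
import torch
import torch.nn as nn

# Hypothetical sizes, chosen only for illustration
c_in, n_kernels = 3, 5
x = torch.randn(1, c_in, 8, 8)  # (batch, C_in, H, W)
conv2d = nn.Conv2d(in_channels=c_in, out_channels=n_kernels, kernel_size=3)

# weight: (n kernels, C, kH, kW); each kernel is a 3D tensor with C == C_in
print(conv2d.weight.shape)  # torch.Size([5, 3, 3, 3])

# The kernels slide over H and W only, so the output keeps two spatial dims
print(conv2d(x).shape)      # torch.Size([1, 5, 6, 6])
```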

A 2D conv demonstration that ignores C. It looks like a pyramid, ha ha.

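Similarly, a 1D convolution slides n 2D tensors along a single axis. In a standard 1D convolution, the kernel's h must equal the input's h (in `nn.Conv1d` terms, h is `in_channels`). Do not subconsciously assume that h can only be 1, as in the figure below, where h = 1.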
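To make the role of h concrete, here is a small sketch with h = 4. The sizes and the `mismatch_rejected` flag are hypothetical names and values introduced for illustration. It shows that each kernel is a 2D tensor of height h, and that an input with a different h is rejected.

```python
import torch
import torch.nn as nn

h = 4                      # input "height", i.e. the number of channels
x = torch.randn(1, h, 10)  # (batch, h, length)
conv1d = nn.Conv1d(in_channels=h, out_channels=2, kernel_size=3)

# weight: (n kernels, h, kernel_size); each kernel is a 2D tensor of height h
print(conv1d.weight.shape)  # torch.Size([2, 4, 3])

# The kernels slide along the length axis only
print(conv1d(x).shape)      # torch.Size([1, 2, 8])

# An input whose h differs from the kernel's h raises a RuntimeError
try:
    conv1d(torch.randn(1, h + 1, 10))
    mismatch_rejected = False
except RuntimeError:
    mismatch_rejected = True
print(mismatch_rejected)    # True
```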

Now a slightly more complex example, this time with two output channels:

import torch

import numpy as np

import torch.nn as nn

data = np.arange(1, 13).reshape([1, 4, 3])

data = torch.tensor(data, dtype=torch.float)

print("[data]:\n", data)

conv = nn.Conv1d(in_channels=4, out_channels=2, kernel_size=3, stride=1, padding=0, bias=False)

print("[weight]:\n", conv.weight)

print("[element-wise multiply]:", (data*conv.weight[0]).sum().item())

print("[element-wise multiply]:", (data*conv.weight[1]).sum().item())

output = conv(data)

print("[output size]:", output.size())

print("[output]:", output)

"""

[data]:
tensor([[[ 1.,  2.,  3.],
         [ 4.,  5.,  6.],
         [ 7.,  8.,  9.],
         [10., 11., 12.]]])

[weight]:
Parameter containing:
tensor([[[-0.1565,  0.2598, -0.2152],
         [-0.0130, -0.1495, -0.0799],
         [-0.2842, -0.1508, -0.1939],
         [-0.1133, -0.2627,  0.1949]],

        [[ 0.0576,  0.1712, -0.1465],
         [-0.2060,  0.1648,  0.2039],
         [ 0.2221, -0.1940,  0.1126],
         [-0.2098, -0.0749,  0.1407]]], requires_grad=True)

[element-wise multiply]: -8.187724113464355

[element-wise multiply]: 0.9679145812988281

[output size]: torch.Size([1, 2, 1])

[output]: tensor([[[-8.1877],
         [ 0.9679]]], grad_fn=<SqueezeBackward1>)

"""

Next, a visualization helps show how a 1D convolution slides.

Example:

m = nn.Conv1d(4, 2, 3, stride=2)

input = torch.randn(1, 4, 9)

print(input)

output = m(input)

print(output)

print(output.size())

Output:

tensor([[[-0.2105, -1.0958,  0.7299,  1.1003,  2.3175,  0.8186, -1.7510, -0.1925,  0.8591],
         [ 1.0991, -0.3016,  1.5633,  0.6162,  0.3150,  1.0413,  1.0571, -0.7014,  0.2239],
         [-0.0658,  0.4755, -0.6653, -0.0696,  0.3483, -0.0360, -0.4665,  1.2606,  1.3365],
         [-0.0186, -1.1802, -0.8835, -1.1813, -0.5145, -0.0534, -1.2568,  0.3211, -2.4793]]])

tensor([[[-0.8012,  0.0589,  0.1576, -0.8222],
         [-0.8231, -0.4233,  0.7178, -0.6621]]], grad_fn=<SqueezeBackward1>)

torch.Size([1, 2, 4])
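The output length of 4 follows from the output-size formula in the PyTorch `nn.Conv1d` documentation. The helper below, `conv1d_out_len`, is a hypothetical name of my own and not a PyTorch function. It is a sketch that evaluates the formula and compares the result with what the layer actually returns.

```python
import torch
import torch.nn as nn

def conv1d_out_len(l_in, kernel_size, stride=1, padding=0, dilation=1):
    """Output length of nn.Conv1d (hypothetical helper, formula from the PyTorch docs)."""
    return (l_in + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1

m = nn.Conv1d(4, 2, 3, stride=2)
x = torch.randn(1, 4, 9)

# (9 - 1 * (3 - 1) - 1) // 2 + 1 = 6 // 2 + 1 = 4
print(conv1d_out_len(9, kernel_size=3, stride=2))  # 4
print(m(x).shape)                                  # torch.Size([1, 2, 4])
```

With stride 2 the kernel therefore starts at positions 0, 2, 4 and 6 of the length-9 input.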

The first kernel

The second kernel
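The per-kernel sliding can also be reproduced by hand. The sketch below assumes a fixed seed and uses `Tensor.unfold` to cut the input into windows. The variable names (`windows`, `rows`, `manual`) are my own. Each kernel is multiplied with every window and summed, then the layer's bias is added, since `nn.Conv1d` uses `bias=True` by default.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)              # assumption: fixed seed for reproducibility
m = nn.Conv1d(4, 2, 3, stride=2)  # bias=True by default
x = torch.randn(1, 4, 9)

# Cut the input into windows of width 3 taken every 2 steps:
# shape (batch, channels, n_windows, kernel_size) = (1, 4, 4, 3)
windows = x.unfold(2, 3, 2)

rows = []
for k in range(m.out_channels):
    kernel = m.weight[k]  # one kernel, shape (4, 3)
    # Multiply every window by the kernel, then sum over channels and width
    row = (windows * kernel.unsqueeze(1)).sum(dim=(1, 3)) + m.bias[k]
    rows.append(row)      # shape (1, 4): one value per window position
manual = torch.stack(rows, dim=1)  # shape (1, 2, 4)

print(windows.shape)  # torch.Size([1, 4, 4, 3])
print(torch.allclose(manual, m(x), atol=1e-5))  # True
```

Each pass through the loop corresponds to one kernel, so the two rows of the output come from two independent sets of weights sliding over the same input.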