介绍:我有两台数据库,分别称为200和203,200和203的服务器性能配置相当,203的配置甚至还要好一点。都是安装的centos7.7,oracle 19C,均已开日志归档,这两台服务器在同一个机房,同一个网段。当我在本地使用JDBC去往这两个数据库分别插入10w条记录,每插入一条提交一次,200耗时约为220多秒,203耗时约为340多秒。耗时相差较大,请问是什么原因导致的,我该如何排查这个问题。

本人排查过程:

JAVA代码

该java代码比较简单,就是一张表有十个字段,然后插入10w条记录,每次插入自动提交(jdbc的特性)

public class JdbcBatchTest {public static int exec(int totalCnt) throws SQLException, ClassNotFoundException {String user = "xxxx";String password = "xxxx"; String driver;String url;driver = "oracle.jdbc.OracleDriver";url = "jdbc:oracle:thin:@xxx:1521/xxx";long l1 = System.currentTimeMillis();Class.forName(driver);Connection connection = DriverManager.getConnection(url, user, password);String sql = "insert into test411 values (?, ?, ?, ?, ?, ?, ?, ?, ?, ?)";PreparedStatement preparedStatement = connection.prepareStatement(sql);for (int i = 1; i <= totalCnt; i++) {preparedStatement.setInt(1, i);preparedStatement.setString(2, "222fda2e41e0488a983483b7e6b9cc82" + i);preparedStatement.setString(3, "33744f367c0549b798b841e707bcf539" + i);preparedStatement.setString(4, "44ae0195ec864ba78f29d62dc387c03b" + i);preparedStatement.setString(5, "552aeb154f684df892b700a0a561f418" + i);preparedStatement.setString(6, "662fda2e41e0488a983483b7e6b9cc82" + i);preparedStatement.setString(7, "77744f367c0549b798b841e707bcf539" + i);preparedStatement.setString(8, "88ae0195ec864ba78f29d62dc387c03b" + i);preparedStatement.setString(9, "992aeb154f684df892b700a0a561f418" + i);preparedStatement.setString(10, "102aeb154f684df892b700a0a561f418" + i);preparedStatement.executeUpdate();}connection.close();long l2 = System.currentTimeMillis();System.out.println("总条数:" + totalCnt + ",一共耗时:"+ (l2-l1) + " 毫秒");return 100;}public static void main(String[] args) throws SQLException, ClassNotFoundException {exec(100000);}

}

200 总条数:100000,一共耗时:225353 毫秒

203 总条数:100000,一共耗时:348713 毫秒

服务器配置

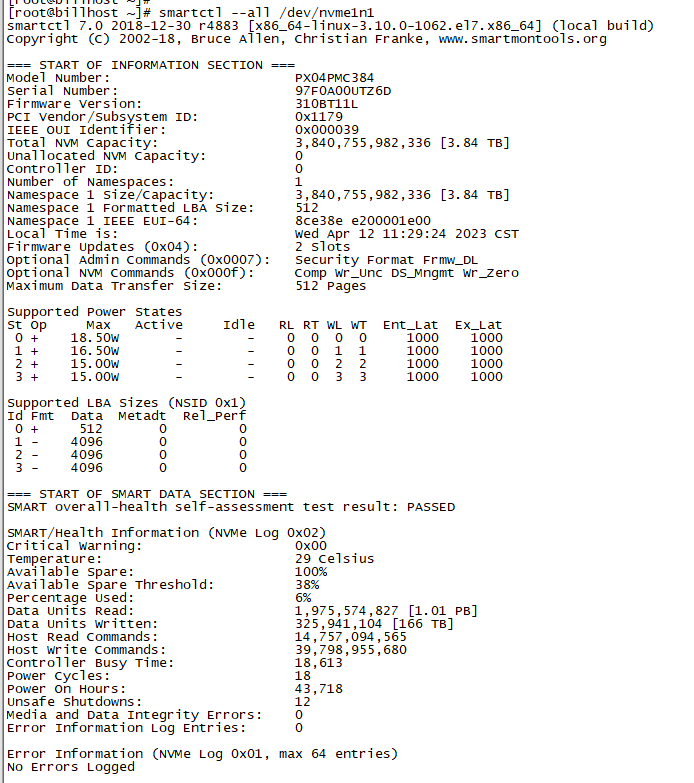

200的cpu较203要差一点,且nvme卡的配置也要差一点

200的配置:

Intel(R) Xeon(R) Gold 6150 CPU @ 2.70GHz * 2

NVME卡

[root@billhost ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 3.5T 0 disk

├─sda1 8:1 0 500M 0 part /boot/efi

├─sda2 8:2 0 32G 0 part [SWAP]

└─sda3 8:3 0 2T 0 part /

nvme0n1 259:1 0 3.5T 0 disk

└─nvme0n1p1 259:3 0 3.5T 0 part └─md0 9:0 0 3.5T 0 raid1 /data

nvme1n1 259:0 0 3.5T 0 disk

└─nvme1n1p1 259:2 0 3.5T 0 part └─md0 9:0 0 3.5T 0 raid1 /data

[oracle@billhost ~]$ cat /etc/sysctl.conf

kernel.shmmax = 82463371264

kernel.shmall = 20132659

kernel.shmmni = 4096

kernel.sem = 250 32000 100 128

fs.file-max = 6815744

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048576

fs.aio-max-nr = 1048576

vm.swappiness = 10

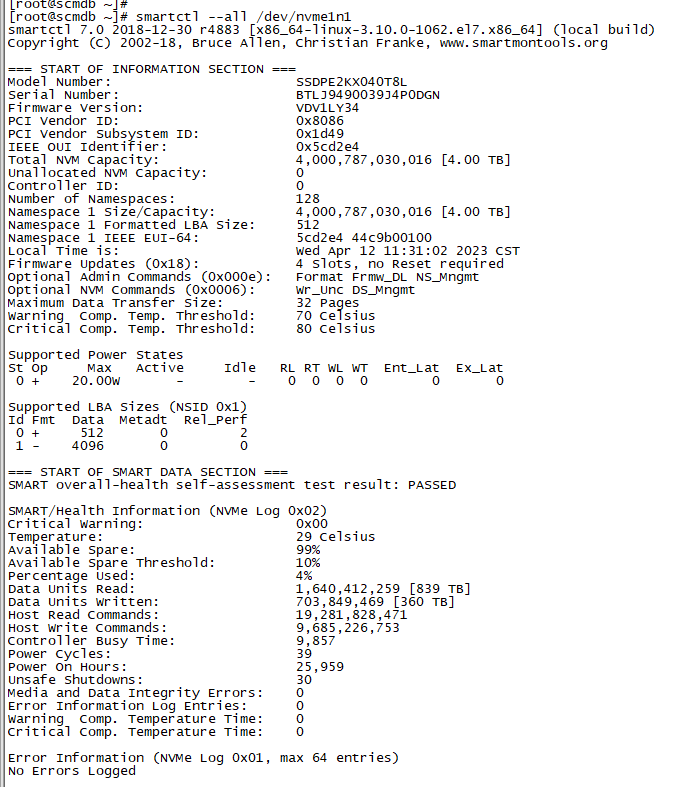

203的配置:

CPU Intel(R) Xeon(R) Gold 6248 CPU @ 2.50GHz * 2

NVME卡

[root@scmdb ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 5.2T 0 disk

├─sda1 8:1 0 500M 0 part /boot/efi

├─sda2 8:2 0 500M 0 part /boot

├─sda3 8:3 0 16G 0 part [SWAP]

└─sda4 8:4 0 5.2T 0 part /

sdb 8:16 0 14T 0 disk

└─sdb1 8:17 0 14T 0 part /data2

sdc 8:32 0 3.7T 0 disk

└─sdc1 8:33 0 3.7T 0 part /rmanbak

nvme0n1 259:1 0 3.7T 0 disk

└─nvme0n1p1 259:3 0 3.7T 0 part └─md0 9:0 0 3.7T 0 raid1 /data

nvme1n1 259:0 0 3.7T 0 disk

└─nvme1n1p1 259:2 0 3.7T 0 part └─md0 9:0 0 3.7T 0 raid1 /data

[root@scmdb ~]# cat /etc/sysctl.conf

kernel.shmmax = 77309411328

kernel.core_uses_pid = 1

kernel.shmall = 60293120

kernel.shmmni = 4096

kernel.sem = 250 32000 100 128

fs.file-max = 6815744

net.ipv4.ip_local_port_range = 9000 65535

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048576

vm.swappiness=10

vm.vfs_cache_pressure=200

vm.min_free_kbytes=409600

fs.aio-max-nr=3145728

数据库参数

200的数据库参数:

*._use_adaptive_log_file_sync='FALSE'

*._use_single_log_writer='TRUE'

*.audit_file_dest='/u01/app/oracle/admin/cdb200c/adump'

*.audit_trail='NONE'

*.compatible='19.0.0'

*.control_file_record_keep_time=31

*.control_files='/data/oradata/CDB200C/control01.ctl','/data/oradata/CDB200C/control02.ctl'

*.db_block_size=8192

*.db_name='cdb200c'

*.deferred_segment_creation=FALSE

*.diagnostic_dest='/u01/app/oracle'

*.dispatchers='(PROTOCOL=TCP) (SERVICE=cdb200cXDB)'

*.enable_pluggable_database=true

*.log_archive_dest_1='location=/data/archivelog/cdb200c/'

*.nls_language='AMERICAN'

*.nls_territory='AMERICA'

*.open_cursors=2000

*.open_links_per_instance=50

*.open_links=50

*.pga_aggregate_target=12g

*.processes=1000

*.remote_login_passwordfile='EXCLUSIVE'

*.session_cached_cursors=100

*.sga_target=24g

*.undo_tablespace='UNDOTBS1'

203的数据库参数:

*._use_adaptive_log_file_sync='FALSE'

*._use_single_log_writer='TRUE'

*.audit_file_dest='/u01/app/oracle/admin/scmdb/adump'

*.audit_trail='NONE'

*.compatible='19.0.0'

*.control_file_record_keep_time=31

*.control_files='/u02/oradata/SCMDB/control01.ctl','/u02/oradata/SCMDB/control02.ctl'

*.db_32k_cache_size=21474836480

*.db_block_size=8192

*.db_cache_size=21474836480

*.db_files=1000

*.db_name='scmdb'

*.db_unique_name='SCMDB'

*.diagnostic_dest='/u01/app/oracle'

*.dispatchers='(PROTOCOL=TCP) (SERVICE=scmdbXDB)'

*.enable_pluggable_database=TRUE

*.fal_server='scmdbdg'

*.log_archive_config='DG_CONFIG=(scmdb,scmdbdg)'

*.log_archive_dest_1='LOCATION=/data2/archivelogs VALID_FOR=(ALL_LOGFILES,ALL_ROLES) DB_UNIQUE_NAME=scmdb'

*.log_archive_dest_2='SERVICE=scmdbdg ASYNC NOAFFIRM REOPEN=60 VALID_FOR=(ONLINE_LOGFILES,PRIMARY_ROLE) DB_UNIQUE_NAME=scmdbdg'

*.log_archive_dest_state_1='ENABLE'

*.log_archive_dest_state_2='ENABLE'

*.nls_language='AMERICAN'

*.nls_territory='AMERICA'

*.open_cursors=2000

*.open_links=50

*.open_links_per_instance=50

*.optimizer_mode='ALL_ROWS'

*.parallel_execution_message_size=8192

*.pga_aggregate_target=12884901888

*.processes=6000

*.remote_login_passwordfile='EXCLUSIVE'

*.session_cached_cursors=500

*.sga_max_size=64424509440

*.sga_target=64424509440

*.shared_pool_size=9663676416

*.standby_file_management='AUTO'

*.undo_tablespace='UNDOTBS1'

onlinelog

200的onlinelog,5组,每个大小2G

/data/oradata/CDB200C/redo04.log

/data/oradata/CDB200C/redo05.log

/data/oradata/CDB200C/redo06.log

/data/oradata/CDB200C/redo07.log

/data/oradata/CDB200C/redo08.log

203的onlinelog,8组,每个大小4G

/data/SCMDB/redo16a.log

/data/SCMDB/redo17a.log

/data/SCMDB/redo18a.log

/data/SCMDB/redo19a.log

/data/SCMDB/redo20a.log

/data/SCMDB/redo21a.log

/data/SCMDB/redo22a.log

/data/SCMDB/redo23a.log

fio测试

我测试了/data这个挂载点连续写,每次4k,总大小5G

显示200的写入性能的确不如203

200上的fio测试

[root@billhost data]# fio -filename=/data/test200 -direct=1 -iodepth 1 -thread -rw=write -ioengine=psync -bs=4k -size=5G -numjobs=1 -group_reporting -name=test200

test200: (g=0): rw=write, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=1

fio-3.7

Starting 1 thread

test200: Laying out IO file (1 file / 5120MiB)

Jobs: 1 (f=1): [W(1)][100.0%][r=0KiB/s,w=156MiB/s][r=0,w=40.0k IOPS][eta 00m:00s]

test200: (groupid=0, jobs=1): err= 0: pid=209451: Tue Apr 11 19:47:22 2023write: IOPS=38.3k, BW=150MiB/s (157MB/s)(5120MiB/34217msec)clat (usec): min=15, max=13616, avg=25.31, stdev=14.33lat (usec): min=15, max=13617, avg=25.43, stdev=14.35clat percentiles (nsec):| 1.00th=[15936], 5.00th=[16192], 10.00th=[16320], 20.00th=[16512],| 30.00th=[19584], 40.00th=[20096], 50.00th=[29056], 60.00th=[30080],| 70.00th=[30336], 80.00th=[30592], 90.00th=[31360], 95.00th=[36608],| 99.00th=[51968], 99.50th=[55552], 99.90th=[63232], 99.95th=[67072],| 99.99th=[74240]bw ( KiB/s): min=113872, max=165784, per=99.95%, avg=153144.62, stdev=16359.26, samples=68iops : min=28468, max=41446, avg=38286.12, stdev=4089.80, samples=68lat (usec) : 20=39.16%, 50=59.33%, 100=1.51%, 250=0.01%lat (msec) : 20=0.01%cpu : usr=6.89%, sys=45.62%, ctx=1310753, majf=0, minf=84IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%issued rwts: total=0,1310720,0,0 short=0,0,0,0 dropped=0,0,0,0latency : target=0, window=0, percentile=100.00%, depth=1Run status group 0 (all jobs):WRITE: bw=150MiB/s (157MB/s), 150MiB/s-150MiB/s (157MB/s-157MB/s), io=5120MiB (5369MB), run=34217-34217msecDisk stats (read/write):md0: ios=0/1307776, merge=0/0, ticks=0/0, in_queue=0, util=0.00%, aggrios=0/1310833, aggrmerge=0/0, aggrticks=0/15948, aggrin_queue=15819, aggrutil=50.40%nvme0n1: ios=0/1310833, merge=0/0, ticks=0/17416, in_queue=17274, util=50.40%nvme1n1: ios=0/1310833, merge=0/0, ticks=0/14480, in_queue=14365, util=41.91%

203上的fio测试

[root@scmdb data]# fio -filename=/data/test203 -direct=1 -iodepth 1 -thread -rw=write -ioengine=psync -bs=4k -size=5G -numjobs=1 -group_reporting -name=test203

test203: (g=0): rw=write, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=1

fio-3.7

Starting 1 thread

test203: Laying out IO file (1 file / 5120MiB)

Jobs: 1 (f=1): [W(1)][100.0%][r=0KiB/s,w=236MiB/s][r=0,w=60.3k IOPS][eta 00m:00s]

test203: (groupid=0, jobs=1): err= 0: pid=157623: Tue Apr 11 19:29:27 2023write: IOPS=47.7k, BW=186MiB/s (195MB/s)(5120MiB/27478msec)clat (usec): min=12, max=9134, avg=20.40, stdev=18.50lat (usec): min=12, max=9135, avg=20.48, stdev=18.53clat percentiles (usec):| 1.00th=[ 13], 5.00th=[ 14], 10.00th=[ 14], 20.00th=[ 14],| 30.00th=[ 14], 40.00th=[ 14], 50.00th=[ 14], 60.00th=[ 14],| 70.00th=[ 15], 80.00th=[ 36], 90.00th=[ 37], 95.00th=[ 45],| 99.00th=[ 83], 99.50th=[ 93], 99.90th=[ 116], 99.95th=[ 130],| 99.99th=[ 237]bw ( KiB/s): min=85520, max=244888, per=99.53%, avg=189898.30, stdev=66553.76, samples=54iops : min=21380, max=61222, avg=47474.59, stdev=16638.47, samples=54lat (usec) : 20=76.86%, 50=19.80%, 100=3.05%, 250=0.28%, 500=0.01%lat (usec) : 750=0.01%, 1000=0.01%lat (msec) : 2=0.01%, 4=0.01%, 10=0.01%cpu : usr=4.85%, sys=42.00%, ctx=1310725, majf=0, minf=84IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%issued rwts: total=0,1310720,0,0 short=0,0,0,0 dropped=0,0,0,0latency : target=0, window=0, percentile=100.00%, depth=1Run status group 0 (all jobs):WRITE: bw=186MiB/s (195MB/s), 186MiB/s-186MiB/s (195MB/s-195MB/s), io=5120MiB (5369MB), run=27478-27478msecDisk stats (read/write):md0: ios=8/1305950, merge=0/0, ticks=0/0, in_queue=0, util=0.00%, aggrios=4/1310859, aggrmerge=0/1, aggrticks=0/14510, aggrin_queue=14438, aggrutil=54.42%nvme0n1: ios=8/1310859, merge=0/1, ticks=1/13946, in_queue=13882, util=50.38%nvme1n1: ios=0/1310859, merge=0/1, ticks=0/15074, in_queue=14994, util=54.42%

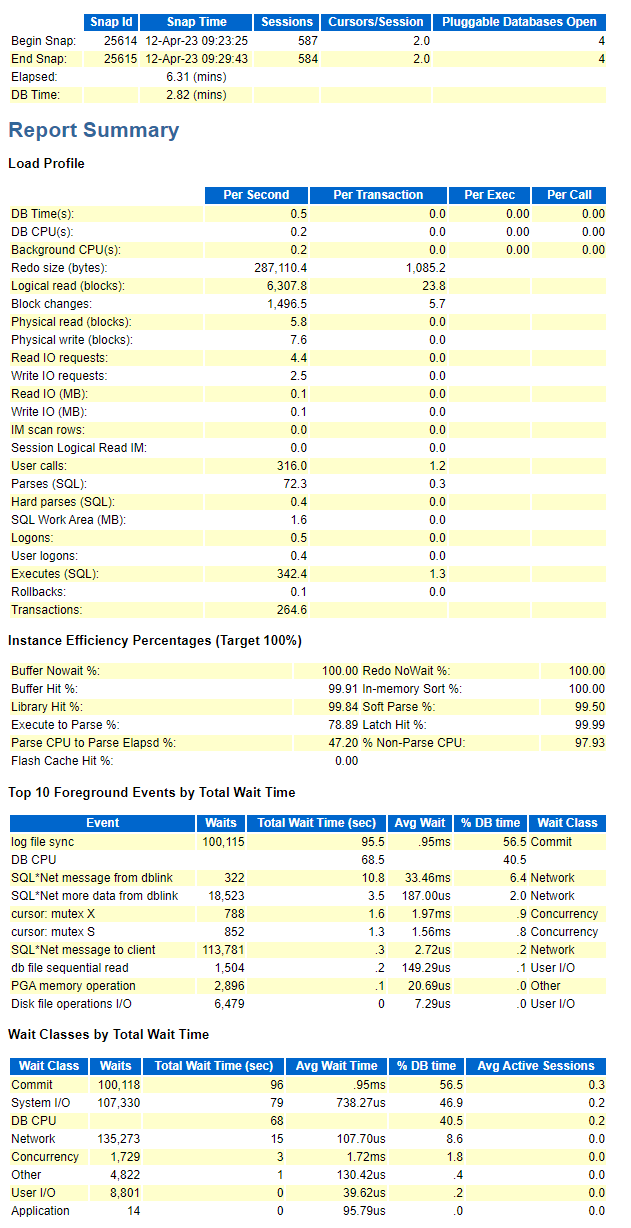

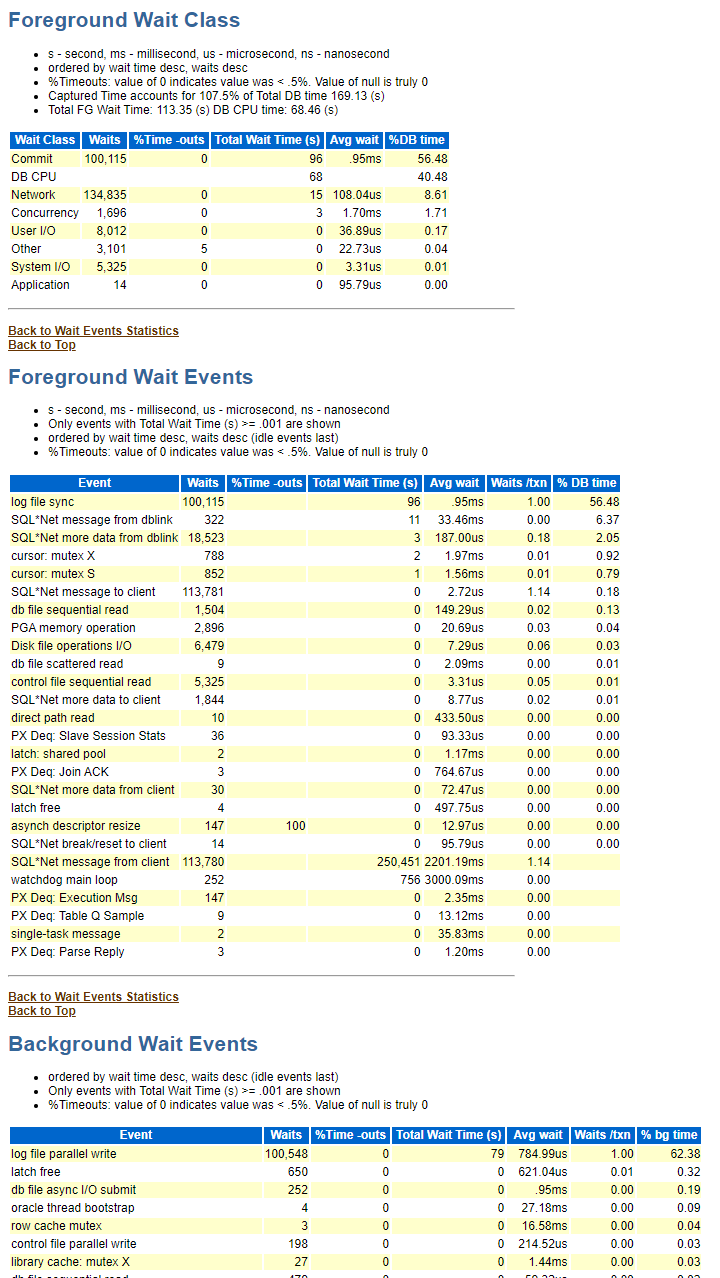

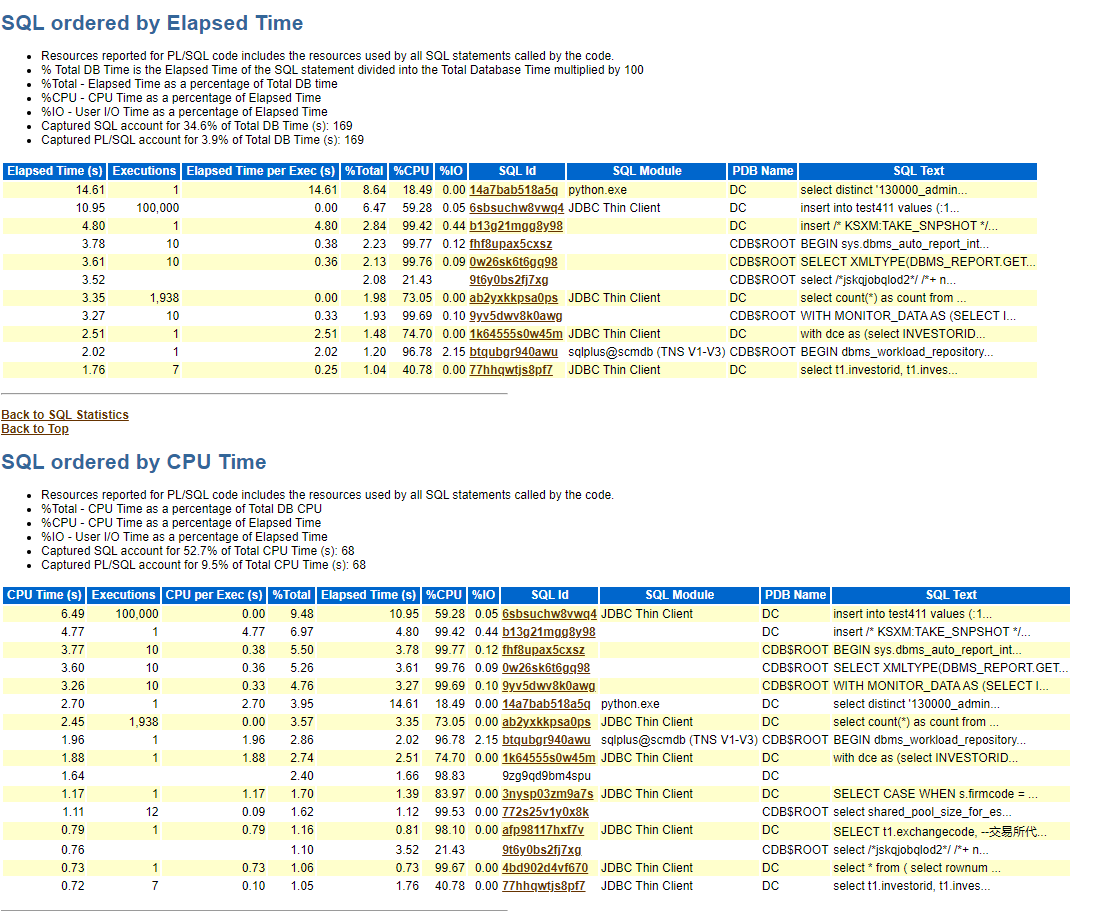

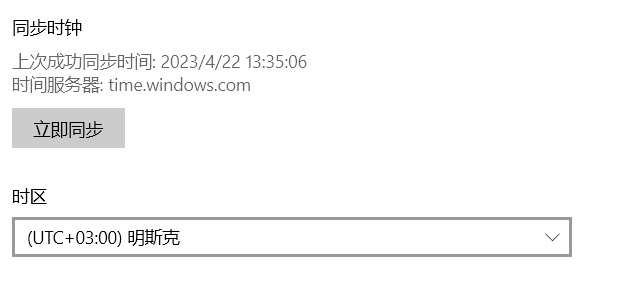

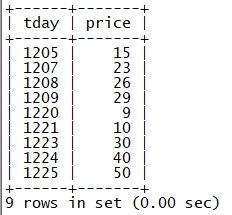

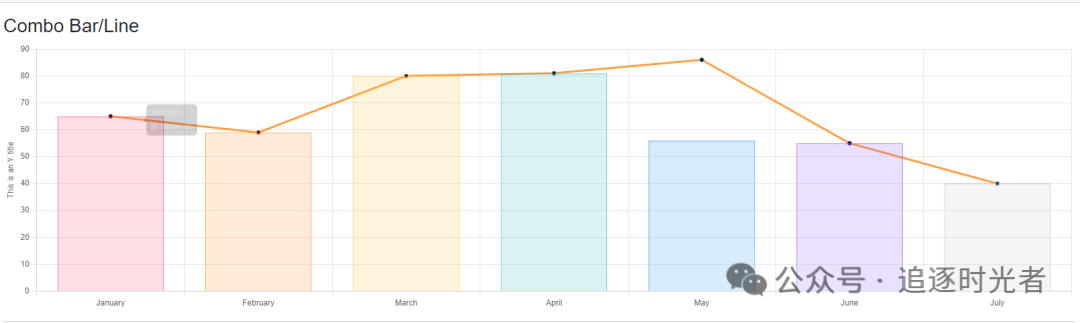

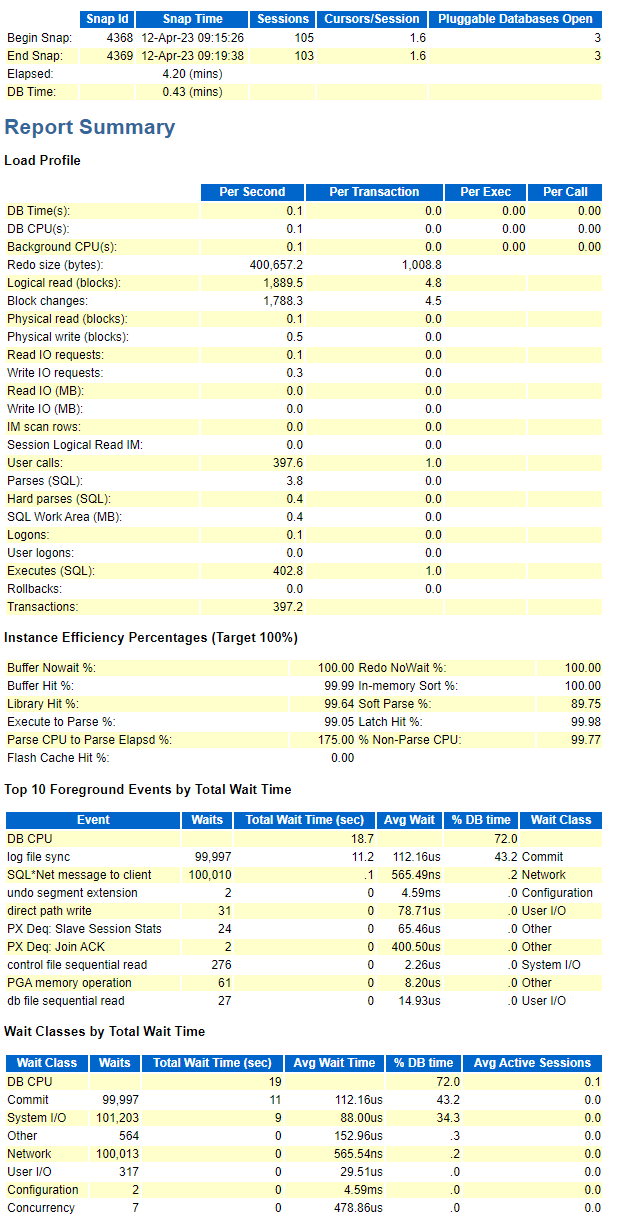

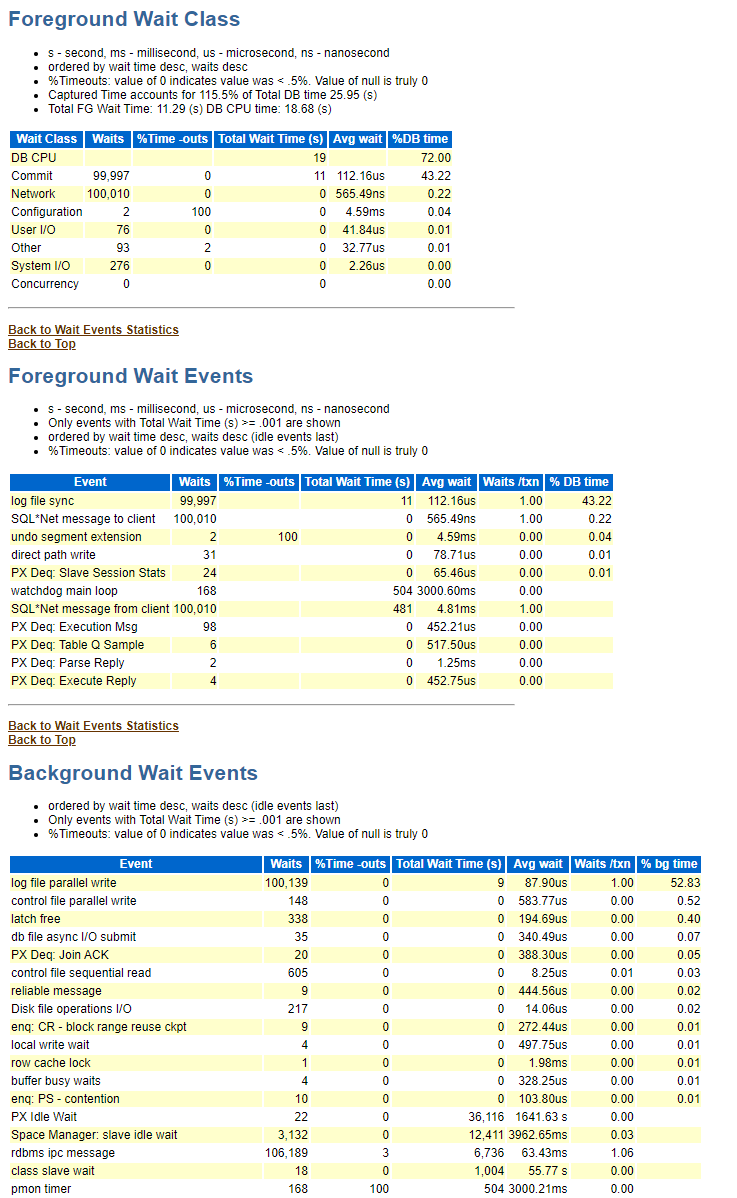

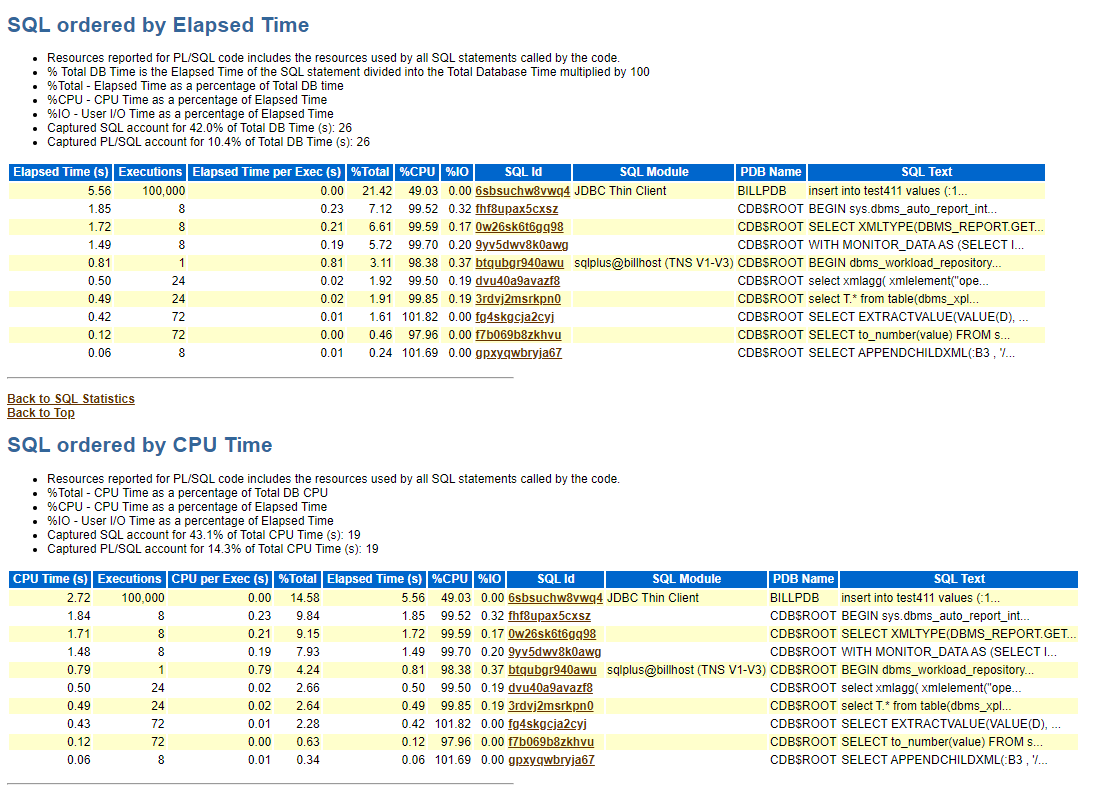

awr截图

从awr截图上面可以看到203的log file sync和log file parallel wirte的avg wait都要远远高于200,导致203插入10万条记录比200插入10万条记录的时间长

200的截图

203的截图