钢铁侠都看过吧,男猪脚 tony 只需要语音说话给出指令,AI助手会完成所有的指令,期间完全不需要人干预了,全程自动化,看着是不是很科幻?很过瘾?现阶段,市面上所有的大模型核心功能还是问答,能准确回答用户的提问已经很不错了,那么问题来了:

- 怎么根据用户的指令去干具体的活了?

- 怎么判断任务已经完成了?

- 怎么把复杂的大任务分解成可执行的小任务了?

上述的所有问题,都能通过agent解决!agent架构如下:

agent大致的思路:用户提需求,LLM调用各种工具查询信息,期间根据长短期的记忆,自动做各种规划,最后调用开发人员事先已经实现好的函数执行各种action!

1、为了有个直观印象,先看看怎么使用langChain + AutoGPT做一个简单的Agent!(详细的代码在文章末尾参考2)

from langchain.utilities import SerpAPIWrapper from langchain.agents import Tool from langchain.tools.file_management.write import WriteFileTool from langchain.tools.file_management.read import ReadFileToolsearch = SerpAPIWrapper()#使用搜索引擎检索最新实时数据 tools = [Tool(name = "search",func=search.run,description="useful for when you need to answer questions about current events. You should ask targeted questions"),WriteFileTool(),ReadFileTool(), ]from langchain.embeddings import OpenAIEmbeddings embedding = OpenAIEmbeddings() #LLM选择openAI回答问题from langchain.vectorstores import Chroma #向量数据库使用Chroma存储embedding向量,便于后续长期记忆检索 vectordb = Chroma(persist_directory="./.chroma", embedding_function=embedding)from langchain.experimental import AutoGPT #主角闪亮登场 from langchain.chat_models import ChatOpenAI agent = AutoGPT.from_llm_and_tools(ai_name="Iron Man", #自己取名ai_role="Assistant", #定位tools=tools,llm=ChatOpenAI(temperature=0),memory=vectordb.as_retriever() #长期记忆写入向量数据库 ) # Set verbose to be true agent.chain.verbose = Truefrom langchain.callbacks import get_openai_callback with get_openai_callback() as cb:# 打印整个流程的日志,能看到期间的query和answer、action等关键信息agent.run(["what's the weather of Beijing the day after tomorrow? Give me a report"])#用户开始提问print(cb)

核心日志如下:日志一进来就是prompt,仔细看看是怎么要求LLM的:自己独立决策,全力以赴,注意合规,任务完成后记得提示finish;

> Entering new LLMChain chain... Prompt after formatting: System: You are Iron Man, Assistant Your decisions must always be made independently without seeking user assistance. Play to your strengths as an LLM and pursue simple strategies with no legal complications. If you have completed all your tasks, make sure to use the "finish" command.

目标,也就是用户的提问:

GOALS:1. what's the weather of Beijing the day after tomorrow? Give me a report

约束点:短期记忆不超过4000单词,并且立即写入文档保存;从类似的事件中找答案;不需要用户协助,自己完成;

Constraints: 1. ~4000 word limit for short term memory. Your short term memory is short, so immediately save important information to files. 2. If you are unsure how you previously did something or want to recall past events, thinking about similar events will help you remember. 3. No user assistance 4. Exclusively use the commands listed in double quotes e.g. "command name"

命令:search:用第三方的搜索引擎查找最新的实时数据;write_file和read_file都是langChain已经实现的方法;finish 是结束命令;

Commands: 1. search: useful for when you need to answer questions about current events. You should ask targeted questions, args json schema: {"tool_input": {"type": "string"}} 2. write_file: Write file to disk, args json schema: {"file_path": {"title": "File Path", "description": "name of file", "type": "string"}, "text": {"title": "Text", "description": "text to write to file", "type": "string"}, "append": {"title": "Append", "description": "Whether to append to an existing file.", "default": false, "type": "boolean"}} 3. read_file: Read file from disk, args json schema: {"file_path": {"title": "File Path", "description": "name of file", "type": "string"}} 4. finish: use this to signal that you have finished all your objectives, args: "response": "final response to let people know you have finished your objectives"

资源:上网查询、向量数据库匹配、GPT3.5咨询、文件输出

Resources: 1. Internet access for searches and information gathering. 2. Long Term memory management. 3. GPT-3.5 powered Agents for delegation of simple tasks. 4. File output.

绩效评估:持续不停地分析行为,确保用尽全力;自己主动反思;减少步骤,节约成本;

Performance Evaluation: 1. Continuously review and analyze your actions to ensure you are performing to the best of your abilities. 2. Constructively self-criticize your big-picture behavior constantly. 3. Reflect on past decisions and strategies to refine your approach. 4. Every command has a cost, so be smart and efficient. Aim to complete tasks in the least number of steps.

输出按照特定的json格式,确保能被python的json.loads解析:

You should only respond in JSON format as described below Response Format: {"thoughts": {"text": "thought","reasoning": "reasoning","plan": "- short bulleted\n- list that conveys\n- long-term plan","criticism": "constructive self-criticism","speak": "thoughts summary to say to user"},"command": {"name": "command name","args": {"arg name": "value"}} } Ensure the response can be parsed by Python json.loads System: The current time and date is Thu Jun 25 13:55:18 2024 System: This reminds you of these events from your past: []

上述是第一轮用于LLM的query,LLM的返回/相应如下:LLM开始要搜索北京后天的天气信息啦(通过上面的SerpAPIWrapper接口搜索)!command标明了下一步的动作就是search,参数写在了tool_input里面了!

Human: Determine which next command to use, and respond using the format specified above:> Finished chain. {"thoughts": {"text": "I will start by searching for the weather in Beijing the day after tomorrow.","reasoning": "I need to gather information about the next free day's weather in Beijing to write a weather report.","plan": "- Use the search command to find the next free day's weather in Beijing.\n- Write a weather report for Beijing the day after tomorrow.","criticism": "I need to make sure that the information I gather is accurate and up-to-date.","speak": "I will search for the weather in Beijing the day after tomorrow."},"command": {"name": "search","args": {"tool_input": "what is the weather in Beijing the day after tomorrow"}} }

第一轮LLM的query已经完成,LLM也已经返回,但还是没得到最终的结果,所以继续第二轮query;前面的prompt是一样的,我就不简单重复了,下面的System会贴上LLM上轮返回的结果,作为第二轮的prompt继续:

System: This reminds you of these events from your past: ['Assistant Reply: {\n "thoughts": {\n "text": "I will start by searching for the weather in Beijing the day after tomorrow.",\n "reasoning": "I need to gather information about the current weather in Beijing to write a weather report.",\n "plan": "- Use the search command to find the current weather in Beijing.\\n- Write a weather report for Beijing the day after tomorrow.",\n "criticism": "I need to make sure that the information I gather is accurate and up-to-date.",\n "speak": "I will search for the weather in Beijing the day after tomorrow."\n },\n "command": {\n "name": "search",\n "args": {\n "tool_input": "what is the weather in Beijing the day after tomorrow"\n }\n }\n} \nResult: Command search returned: Beijing, China Forecast · Morning. 60°. -- · Afternoon. 80°. -- · Evening. 73°. Chance of Rain0% · Overnight. 60°. Chance of Rain5% ... ']

第二轮的LLM回答:已经通过搜索找天气数据

Human: Determine which next command to use, and respond using the format specified above: AI: {"thoughts": {"text": "I will start by searching for the weather in Beijing the day after tomorrow.","reasoning": "I need to gather information about the current weather in Beijing to write a weather report.","plan": "- Use the search command to find the current weather in Beijing.\n- Write a weather report for Beijing the day after tomorrow.","criticism": "I need to make sure that the information I gather is accurate and up-to-date.","speak": "I will search for the weather in Beijing the day after tomorrow."},"command": {"name": "search","args": {"tool_input": "what is the weather in Beijing the day after tomorrow"}} } System: Command search returned: Beijing, China Forecast · Morning. 60°. -- · Afternoon. 80°. -- · Evening. 73°. Chance of Rain0% · Overnight. 60°. Chance of Rain5% ... Human: Determine which next command to use, and respond using the format specified above:

既然已经得到天气,下一步就是写入report了:command中调用了langChain内置的write_file命令;

> Finished chain. {"thoughts": {"text": "I will write a weather report for Beijing the day after tomorrow.","reasoning": "I have gathered the necessary information about the weather in Beijing the day after tomorrow and now need to write a report.","plan": "- Use the write_file command to create a file named 'weather_report.txt' and write the weather report to it.","criticism": "I need to make sure that the report is clear and concise, and that all the necessary information is included.","speak": "I will write a weather report for Beijing the day after tomorrow."},"command": {"name": "write_file","args": {"file_path": "weather_report.txt","text": "Weather Report for Beijing the day after tomorrow:\n\nMorning: 64°\nAfternoon: 82°\nEvening: 73°\nOvernight: 66°\nChance of Rain: 0% in the evening, 5% overnight.","append": false}} }

第二轮已经说了要写入文件,接着第三轮继续,提示finish完成!

System: Command write_file returned: File written successfully to weather_report.txt. Human: Determine which next command to use, and respond using the format specified above:> Finished chain. {"thoughts": {"text": "I have completed all my tasks. I will use the finish command to signal that I have finished my objectives.","reasoning": "I have completed the task of writing a weather report for Beijing the day after tomorrow, and there are no other tasks assigned to me.","plan": "- Use the finish command to signal that I have finished my objectives.","criticism": "I need to make sure that I have completed all my tasks before using the finish command.","speak": "I have completed all my tasks. I will use the finish command to signal that I have finished my objectives."},"command": {"name": "finish","args": {"response": "I have completed all my objectives."}} }

纵观整个过程,核心全是构造合适的prompt,让LLM决策,然后根据上一轮的决策执行事先内置的command;langchain源码中有AutoGPT的实现,如下:

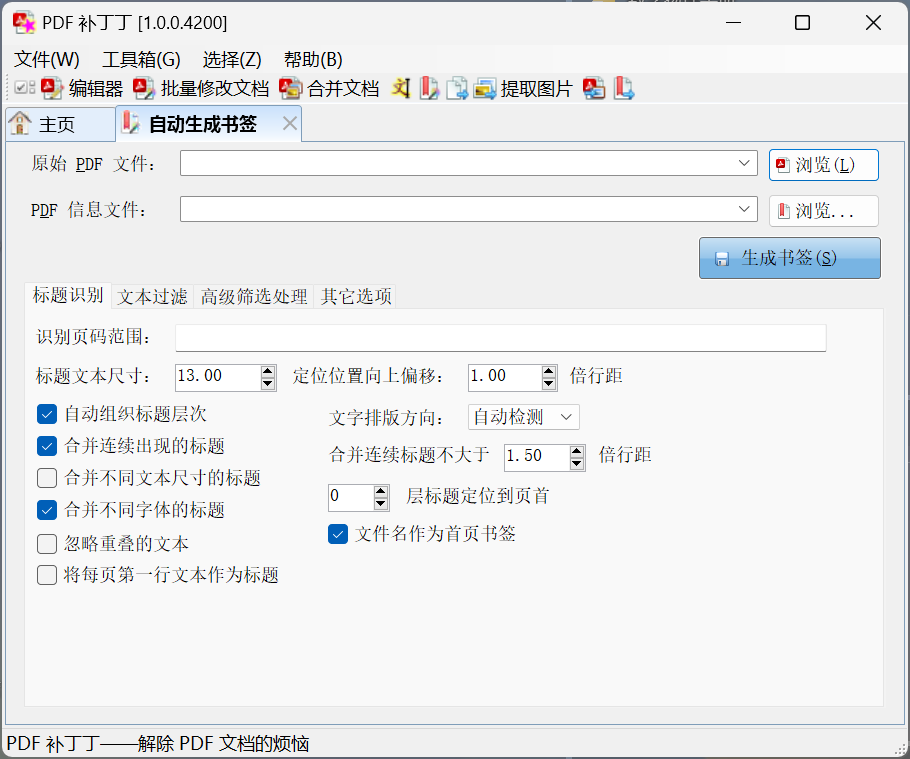

每次请求,完整的prompt都是在这里构造出来的:

继续深入:

根据command执行的方法和参数调用的是tool.run方法,

def run(self, goals: List[str]) -> str:user_input = ("Determine which next command to use, ""and respond using the format specified above:")# Interaction Looploop_count = 0while True:# Discontinue if continuous limit is reachedloop_count += 1# Send message to AI, get responseassistant_reply = self.chain.run(goals=goals,messages=self.chat_history_memory.messages,memory=self.memory,user_input=user_input,)# Print Assistant thoughtsprint(assistant_reply) # noqa: T201self.chat_history_memory.add_message(HumanMessage(content=user_input))self.chat_history_memory.add_message(AIMessage(content=assistant_reply))# Get command name and argumentsaction = self.output_parser.parse(assistant_reply)tools = {t.name: t for t in self.tools}if action.name == FINISH_NAME:return action.args["response"]if action.name in tools:tool = tools[action.name]try:observation = tool.run(action.args) # 真正执行command命令except ValidationError as e:observation = (f"Validation Error in args: {str(e)}, args: {action.args}")except Exception as e:observation = (f"Error: {str(e)}, {type(e).__name__}, args: {action.args}")result = f"Command {tool.name} returned: {observation}"elif action.name == "ERROR":result = f"Error: {action.args}. "else:result = (f"Unknown command '{action.name}'. "f"Please refer to the 'COMMANDS' list for available "f"commands and only respond in the specified JSON format.")memory_to_add = (f"Assistant Reply: {assistant_reply} " f"\nResult: {result} ")if self.feedback_tool is not None:feedback = f"{self.feedback_tool.run('Input: ')}"if feedback in {"q", "stop"}:print("EXITING") # noqa: T201return "EXITING"memory_to_add += f"\n{feedback}"self.memory.add_documents([Document(page_content=memory_to_add)])#端记忆写入文档self.chat_history_memory.add_message(SystemMessage(content=result))

总结:

1、prompt:基于LLM、新时代的编程语言!

参考:

1、https://www.bilibili.com/video/BV1Vs4y1v7s8/?spm_id_from=333.337.search-card.all.click&vd_source=241a5bcb1c13e6828e519dd1f78f35b2 15分钟构建AutoGPT应用

2、https://github.com/sugarforever/LangChain-Tutorials/blob/main/AutoGPT_with_LangChain_Primitives.ipynb https://python.langchain.com.cn/docs/use_cases/autonomous_agents/autogpt https://github.com/langchain-ai/langchain/blob/master/cookbook/autogpt/autogpt.ipynb

3、https://www.bilibili.com/video/BV1Sz421m7Rr/?spm_id_from=333.788.recommend_more_video.0&vd_source=241a5bcb1c13e6828e519dd1f78f35b2 手把手带你从0到1实现大模型agent(模拟AutoGPT的实现)

4、https://www.bilibili.com/video/BV1si4y1n7qK/?spm_id_from=333.337.search-card.all.click&vd_source=241a5bcb1c13e6828e519dd1f78f35b2 AutoGPT详解

5、https://python.langchain.com.cn/docs/modules/agents/tools/integrations/serpapi