Cilium Ingress Features (Reposted)

I. Environment

| Host | IP |
|---|---|
| ubuntu | 10.0.0.234 |

| Software | Version |
|---|---|
| docker | 26.1.4 |
| helm | v3.15.0-rc.2 |
| kind | 0.18.0 |
| kubernetes | 1.23.4 |
| ubuntu os | Ubuntu 22.04.6 LTS |
| kernel | 5.15.0-106 |
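Before building the cluster it can help to confirm that the tools from the table are actually on the PATH. The following is a minimal sketch; the helper name `missing_tools` is hypothetical and not part of the original post, and it only checks presence, not versions.

```shell
#!/usr/bin/env bash
# Print the name of every tool passed as an argument that is not found on PATH.
# `missing_tools` is an illustrative helper, not something the original post defines.
missing_tools() {
  local tool
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Tools this walkthrough relies on.
missing=$(missing_tools docker kind helm kubectl)
if [ -n "$missing" ]; then
  echo "missing tools:" $missing
else
  echo "all required tools found"
fi
```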

II. Cilium Ingress Flow Diagram

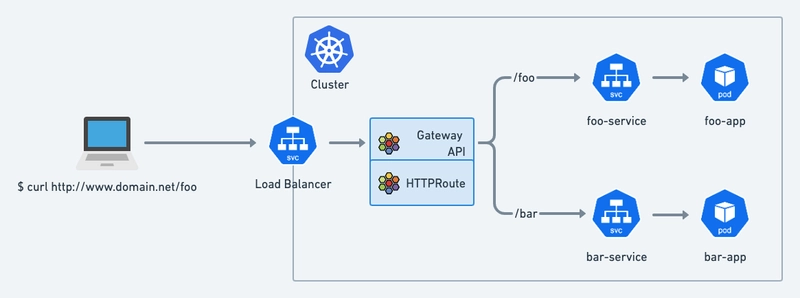

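Cilium now provides an out-of-the-box Kubernetes Ingress implementation. Cilium Ingress supports path-based routing and TLS termination, and it can let multiple services share a single load balancer IP. Under the hood, the Cilium Ingress Controller uses Envoy and eBPF. It can manage north-south traffic entering the Kubernetes cluster, east-west traffic inside the cluster, and cross-cluster traffic, while providing rich L7 load balancing and L7 traffic management.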

See the official Cilium documentation for reference.

III. Setting Up the Cilium Ingress Environment

kind configuration and install script

root@KinD:~# cat install.sh#!/bin/bash

date

set -v# 1.prep noCNI env

cat <<EOF | kind create cluster --name=cilium-ingress-http --image=kindest/node:v1.23.4 --config=-

kind: Cluster

apiVersion: kind.x-k8s.io/v1alpha4

networking:# kind 默认使用 rancher cni,cni 我们需要自己创建disableDefaultCNI: true# 此处使用 cilium 代替 kube-proxy 功能kubeProxyMode: "none"

nodes:- role: control-plane- role: worker- role: workercontainerdConfigPatches:

- |-[plugins."io.containerd.grpc.v1.cri".registry.mirrors."harbor.evescn.com"]endpoint = ["https://harbor.evescn.com"]

EOF# 2.remove taints

controller_node_ip=`kubectl get node -o wide --no-headers | grep -E "control-plane|bpf1" | awk -F " " '{print $6}'`

# kubectl taint nodes $(kubectl get nodes -o name | grep control-plane) node-role.kubernetes.io/master:NoSchedule-

kubectl get nodes -o wide# 3.install cni

helm repo add cilium https://helm.cilium.io > /dev/null 2>&1

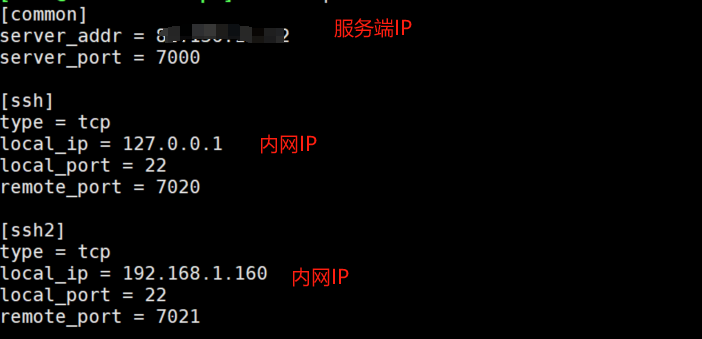

helm repo update > /dev/null 2>&1helm install cilium cilium/cilium \--set k8sServiceHost=$controller_node_ip \--set k8sServicePort=6443 \--version 1.13.0-rc5 \--namespace kube-system \--set debug.enabled=true \--set debug.verbose=datapath \--set monitorAggregation=none \--set ipam.mode=cluster-pool \--set cluster.name=cilium-ingress-http \--set kubeProxyReplacement=strict \--set tunnel=disabled \--set autoDirectNodeRoutes=true \--set ipv4NativeRoutingCIDR=10.0.0.0/8 \--set bpf.masquerade=true \--set installNoConntrackIptablesRules=true \--set ingressController.enabled=true # 4.install necessary tools

for i in $(docker ps -a --format "table {{.Names}}" | grep cilium)

doecho $idocker cp /usr/bin/ping $i:/usr/bin/pingdocker exec -it $i bash -c "sed -i -e 's/jp.archive.ubuntu.com\|archive.ubuntu.com\|security.ubuntu.com/old-releases.ubuntu.com/g' /etc/apt/sources.list"docker exec -it $i bash -c "apt-get -y update >/dev/null && apt-get -y install net-tools tcpdump lrzsz bridge-utils >/dev/null 2>&1"

done

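Explanation of the --set parameters

- --set kubeProxyReplacement=strict
  - Meaning: enable the kube-proxy replacement and run it in strict mode.
  - Purpose: Cilium fully replaces kube-proxy for Service load balancing, which gives more efficient traffic forwarding and network policy handling.
- --set tunnel=disabled
  - Meaning: disable tunnel mode.
  - Purpose: with tunneling disabled, Cilium does not use VXLAN encapsulation and routes packets directly between hosts, i.e. direct-routing mode.
- --set autoDirectNodeRoutes=true
  - Meaning: enable automatic direct node routes.
  - Purpose: Cilium installs the routes to the other nodes' Pod CIDRs automatically.
- --set ipv4NativeRoutingCIDR="10.0.0.0/8"
  - Meaning: the CIDR range used for IPv4 native routing, here 10.0.0.0/8.
  - Purpose: tells Cilium which destination addresses are reachable through native routing and therefore must not be SNATed. Traffic to destinations outside this range is masqueraded by default.
- --set bpf.masquerade=true
  - Meaning: enable eBPF-based masquerading.
  - Purpose: address translation (masquerading) is implemented in eBPF, which is more efficient and flexible than the iptables-based implementation.
- --set installNoConntrackIptablesRules=true
  - Install iptables rules that skip connection tracking, which reduces the conntrack load caused by the iptables rule set.
- --set socketLB.enabled=true
  - Enable the socket-level load balancer (socket LB), which optimizes load balancing between services.
- --set ingressController.enabled=true
  - Enable the Ingress controller, so that Cilium manages Ingress resources.

Requirements for Cilium Ingress:

- Cilium must be configured with kubeProxyReplacement set to partial or strict. See the kube-proxy replacement documentation for details.
- The L7 proxy must be enabled with the --enable-l7-proxy flag (enabled by default).
- The minimum Kubernetes version supported by Cilium Ingress is 1.19.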
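To make the effect of ipv4NativeRoutingCIDR more concrete, the sketch below checks which of the addresses that appear later in this walkthrough fall inside 10.0.0.0/8. It only illustrates CIDR membership in plain bash; the helper names `ip_to_int` and `in_cidr` are hypothetical, and the script does not query Cilium in any way.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed (exit 0) if address $1 lies inside CIDR $2, e.g. 10.0.0.0/8.
in_cidr() {
  local ip net prefix mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  prefix=${2#*/}
  mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( (ip & mask) == (net & mask) ))
}

# Two Pod IPs and one LoadBalancer IP taken from this walkthrough.
for addr in 10.0.1.162 10.0.2.118 172.18.0.201; do
  if in_cidr "$addr" 10.0.0.0/8; then
    echo "$addr is inside 10.0.0.0/8"
  else
    echo "$addr is outside 10.0.0.0/8"
  fi
done
```

The Pod addresses fall inside the native-routing range, while the MetalLB address on the kind Docker network does not.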

Install the k8s cluster and Cilium

root@KinD:~# ./install.sh
Creating cluster "cilium-ingress-http" ...
 ✓ Ensuring node image (kindest/node:v1.23.4) 🖼
 ✓ Preparing nodes 📦 📦 📦
 ✓ Writing configuration 📜
 ✓ Starting control-plane 🕹️
 ✓ Installing StorageClass 💾
 ✓ Joining worker nodes 🚜
Set kubectl context to "kind-cilium-ingress-http"
You can now use your cluster with:

kubectl cluster-info --context kind-cilium-ingress-http

Have a question, bug, or feature request? Let us know! https://kind.sigs.k8s.io/#community 🙂

- Check the installed services

root@KinD:~# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system cilium-5gmxj 1/1 Running 0 5m52s

kube-system cilium-operator-dd757785c-bnczh 1/1 Running 0 5m52s

kube-system cilium-operator-dd757785c-t6xpb 1/1 Running 0 5m52s

kube-system cilium-vmjqt 1/1 Running 0 5m52s

kube-system cilium-wjvh2 1/1 Running 0 5m52s

kube-system coredns-64897985d-8jvrv 1/1 Running 0 6m15s

kube-system coredns-64897985d-lmmwh 1/1 Running 0 6m15s

kube-system etcd-cilium-ingress-http-control-plane 1/1 Running 0 6m30s

kube-system kube-apiserver-cilium-ingress-http-control-plane 1/1 Running 0 6m32s

kube-system kube-controller-manager-cilium-ingress-http-control-plane 1/1 Running 0 6m29s

kube-system kube-scheduler-cilium-ingress-http-control-plane 1/1 Running 0 6m29s

local-path-storage local-path-provisioner-5ddd94ff66-wb4tp 1/1 Running 0 6m15s

Install MetalLB to provide LoadBalancer functionality

- Install MetalLB

root@KinD:~# kubectl apply -f https://gh.api.99988866.xyz/https://raw.githubusercontent.com/metallb/metallb/v0.13.10/config/manifests/metallb-native.yaml
namespace/metallb-system created

customresourcedefinition.apiextensions.k8s.io/addresspools.metallb.io created

customresourcedefinition.apiextensions.k8s.io/bfdprofiles.metallb.io created

customresourcedefinition.apiextensions.k8s.io/bgpadvertisements.metallb.io created

customresourcedefinition.apiextensions.k8s.io/bgppeers.metallb.io created

customresourcedefinition.apiextensions.k8s.io/communities.metallb.io created

customresourcedefinition.apiextensions.k8s.io/ipaddresspools.metallb.io created

customresourcedefinition.apiextensions.k8s.io/l2advertisements.metallb.io created

serviceaccount/controller created

serviceaccount/speaker created

role.rbac.authorization.k8s.io/controller created

role.rbac.authorization.k8s.io/pod-lister created

clusterrole.rbac.authorization.k8s.io/metallb-system:controller created

clusterrole.rbac.authorization.k8s.io/metallb-system:speaker created

rolebinding.rbac.authorization.k8s.io/controller created

rolebinding.rbac.authorization.k8s.io/pod-lister created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker created

configmap/metallb-excludel2 created

secret/webhook-server-cert created

service/webhook-service created

deployment.apps/controller created

daemonset.apps/speaker created

validatingwebhookconfiguration.admissionregistration.k8s.io/metallb-webhook-configuration created

- Create the MetalLB Layer 2 IPAddressPool

root@KinD:~# cat metallb-l2-ip-config.yaml
---
# Address range that MetalLB assigns to LoadBalancer services
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: ippool
  namespace: metallb-system
spec:
  addresses:
  - 172.18.0.200-172.18.0.210
---
# L2Advertisement that announces the addresses of the IPAddressPool
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: ippool
  namespace: metallb-system
spec:
  ipAddressPools:
  - ippool
root@KinD:~# kubectl apply -f metallb-l2-ip-config.yaml
ipaddresspool.metallb.io/ippool unchanged
l2advertisement.metallb.io/ippool created
root@KinD:~# kubectl get ipaddresspool -n metallb-system

NAME AUTO ASSIGN AVOID BUGGY IPS ADDRESSES

ippool true false ["172.18.0.200-172.18.0.210"]

root@KinD:~# kubectl get l2advertisement -n metallb-system

NAME IPADDRESSPOOLS IPADDRESSPOOL SELECTORS INTERFACES

ippool ["ippool"]
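The pool above is a small inclusive range, and in this walkthrough every Ingress ends up with its own LoadBalancer address, so it is worth knowing how many addresses the range holds. The following sketch computes that in plain bash; `ip_to_int` and `pool_size` are hypothetical helper names, and the script does not talk to MetalLB.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print the number of addresses in an inclusive range written as "first-last".
pool_size() {
  local first=${1%-*} last=${1#*-}
  echo $(( $(ip_to_int "$last") - $(ip_to_int "$first") + 1 ))
}

pool_size 172.18.0.200-172.18.0.210   # prints 11
```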

Testing the Ingress HTTP Feature

Deploy Pods in the k8s cluster to test the Ingress feature

root@KinD:~# cat cni.yaml
---
apiVersion: apps/v1
kind: DaemonSet
#kind: Deployment
metadata:
  labels:
    app: cni
  name: cni
spec:
  #replicas: 1
  selector:
    matchLabels:
      app: cni
  template:
    metadata:
      labels:
        app: cni
    spec:
      containers:
      - image: harbor.dayuan1997.com/devops/nettool:0.9
        name: nettoolbox
        securityContext:
          privileged: true
---
apiVersion: v1
kind: Service
metadata:
  name: cni
spec:
  ports:
  - port: 80
    targetPort: 80
    protocol: TCP
  type: ClusterIP
  selector:
    app: cni

root@KinD:~# kubectl apply -f cni.yaml

daemonset.apps/cni created

service/serversvc created

- Check the deployed Pods and Services

root@KinD:~# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

cni-2qqzv 1/1 Running 0 2m54s 10.0.1.162 cilium-ingress-http-worker <none> <none>

cni-jnt6p 1/1 Running 0 2m54s 10.0.2.118 cilium-ingress-http-worker2 <none> <none>
root@KinD:~# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

cni ClusterIP 10.96.86.241 <none> 80/TCP 13s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 5m10s

Configure the HTTP Ingress

root@KinD:~# cat ingress.yaml
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: basic-ingress
  namespace: default
spec:
  ingressClassName: cilium
  rules:
  - http:
      paths:
      - backend:
          service:
            name: cni
            port:
              number: 80
        path: /
        pathType: Prefix
root@KinD:~# kubectl apply -f ingress.yaml
ingress.networking.k8s.io/basic-ingress created
root@KinD:~# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

cilium-ingress-basic-ingress LoadBalancer 10.96.95.86 172.18.0.201 80:31690/TCP,443:30103/TCP 2m43s

cni ClusterIP 10.96.86.241 <none> 80/TCP 3m3s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 8m
root@KinD:~# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

basic-ingress cilium * 172.18.0.201 80 3m17s

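After the Ingress is created, basic-ingress automatically obtains an IP address from the defined address pool, and a LoadBalancer Service named cilium-ingress-basic-ingress is created automatically. The EXTERNAL-IP of that Service is the address that basic-ingress obtained from the pool.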
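The address is assigned asynchronously, so a script that creates the Ingress and immediately curls it may run before the IP exists. The sketch below polls for it; the function name `wait_for_ingress_ip` and the `POLL_INTERVAL` variable are hypothetical, and the jsonpath expression is the same one used in the test step of this walkthrough.

```shell
#!/usr/bin/env bash
# Poll until the named Ingress reports a load balancer IP, then print it.
# Gives up (exit status 1) after the given number of attempts (default 30).
# POLL_INTERVAL (seconds between attempts, default 2) is an illustrative knob.
wait_for_ingress_ip() {
  local name=$1 tries=${2:-30} ip i
  for (( i = 0; i < tries; i++ )); do
    ip=$(kubectl get ingress "$name" \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null || true)
    if [ -n "$ip" ]; then
      echo "$ip"
      return 0
    fi
    sleep "${POLL_INTERVAL:-2}"
  done
  return 1
}

# Usage against a running cluster:
#   HTTP_INGRESS=$(wait_for_ingress_ip basic-ingress) && curl -s "http://$HTTP_INGRESS/"
```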

Test

root@KinD:~# HTTP_INGRESS=$(kubectl get ingress basic-ingress -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

root@KinD:~# curl -v --fail -s http://"$HTTP_INGRESS"/

* Trying 172.18.0.201:80...

* Connected to 172.18.0.201 (172.18.0.201) port 80 (#0)

> GET / HTTP/1.1

> Host: 172.18.0.201

> User-Agent: curl/7.81.0

> Accept: */*

>

* Mark bundle as not supporting multiuse

< HTTP/1.1 200 OK

< server: envoy

< date: Sat, 13 Jul 2024 08:53:48 GMT

< content-type: text/html

< content-length: 47

< last-modified: Sat, 13 Jul 2024 08:48:55 GMT

< etag: "66923f77-2f"

< accept-ranges: bytes

< x-envoy-upstream-service-time: 0

<

PodName: cni-jnt6p | PodIP: eth0 10.0.2.118/32

* Connection #0 to host 172.18.0.201 left intact

From the host machine (172.18.0.1), the service is reachable as expected at IP address 172.18.0.201.
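The backend is a DaemonSet with one Pod per worker, and each response names the Pod that served it, so repeating the request shows how traffic is spread across backends. The following sketch tallies responses per Pod; `count_backends` is a hypothetical helper, and it assumes the response body keeps the `PodName: ... | PodIP: ...` format shown above.

```shell
#!/usr/bin/env bash
# Read response bodies on stdin and print "<count> <pod name>" for each Pod seen.
count_backends() {
  awk -F'[:|]' '/^PodName:/ { gsub(/ /, "", $2); n[$2]++ }
                END { for (p in n) print n[p], p }' | sort -k2
}

# Demo with the two response bodies captured in this walkthrough.
printf '%s\n' \
  'PodName: cni-jnt6p | PodIP: eth0 10.0.2.118/32' \
  'PodName: cni-2qqzv | PodIP: eth0 10.0.1.162/32' | count_backends

# Usage against a running cluster:
#   for i in $(seq 10); do curl -s "http://$HTTP_INGRESS/"; done | count_backends
```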

Testing the Ingress HTTPS Feature

Create the TLS secret. A test certificate is used here.

root@KinD:~# kubectl create secret tls demo-cert --cert=tls.crt --key=tls.key

secret/demo-cert created
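The post does not show how tls.crt and tls.key were produced. If no certificate is at hand, a self-signed one is enough for a test like this. The sketch below is one way to create it, assuming OpenSSL 1.1.1 or newer (for `-addext`); the helper name `make_test_cert` and the 30-day lifetime are illustrative choices, not the original author's procedure. The original test later verifies against a separate CA file; with a self-signed certificate, curl's `--cacert` would point at tls.crt instead.

```shell
#!/usr/bin/env bash
# Create a self-signed certificate and key for the given host name.
# Writes tls.crt and tls.key into the target directory (default: current directory).
make_test_cert() {
  local host=$1 dir=${2:-.}
  openssl req -x509 -newkey rsa:2048 -nodes \
    -keyout "$dir/tls.key" -out "$dir/tls.crt" \
    -days 30 -subj "/CN=$host" \
    -addext "subjectAltName=DNS:$host" 2>/dev/null
}

workdir=$(mktemp -d)
make_test_cert cni.evescn.com "$workdir"
ls "$workdir"

# With a cluster available, the secret can then be created from these files:
#   kubectl create secret tls demo-cert --cert="$workdir/tls.crt" --key="$workdir/tls.key"
```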

Configure the HTTPS Ingress

root@KinD:~# cat ingress.yaml
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: tls-ingress
  namespace: default
spec:
  ingressClassName: cilium
  rules:
  - host: cni.evescn.com
    http:
      paths:
      - backend:
          service:
            name: cni
            port:
              number: 80
        path: /
        pathType: Prefix
  tls:
  - hosts:
    - cni.evescn.com
    secretName: demo-cert
root@KinD:~# kubectl apply -f ingress.yaml
ingress.networking.k8s.io/tls-ingress created
root@KinD:~# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

cilium-ingress-basic-ingress LoadBalancer 10.96.95.86 172.18.0.201 80:31690/TCP,443:30103/TCP 60m

cilium-ingress-tls-ingress LoadBalancer 10.96.226.30 172.18.0.202 80:30331/TCP,443:32394/TCP 7s

cni ClusterIP 10.96.86.241 <none> 80/TCP 60m

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 65m
root@KinD:~# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

basic-ingress cilium * 172.18.0.201 80 60m

tls-ingress cilium cni.evescn.com 172.18.0.202 80, 443 12s

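After the Ingress is created, tls-ingress automatically obtains an IP address from the defined address pool, and a LoadBalancer Service named cilium-ingress-tls-ingress is created automatically. The EXTERNAL-IP of that Service is the address that tls-ingress obtained from the pool.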

Test

- Add a name resolution entry to /etc/hosts

root@KinD:~# cat /etc/hosts

......

172.18.0.202 cni.evescn.com

- Test the HTTPS Ingress

root@KinD:~# HTTP_INGRESS=$(kubectl get ingress tls-ingress -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

root@KinD:~# curl -v --fail -s http://"$HTTP_INGRESS"/

root@KinD:~/wcni-kind/cilium/cilium_1.13.0-rc5/cilium-ingress/2-https# curl --cacert /root/evescn.com.pem -v https://cni.evescn.com/

* Trying 172.18.0.202:443...

* Connected to cni.evescn.com (172.18.0.202) port 443 (#0)

> GET / HTTP/1.1

> Host: cni.evescn.com

> User-Agent: curl/7.81.0

> Accept: */*

>

* TLSv1.2 (IN), TLS header, Supplemental data (23):

* TLSv1.3 (IN), TLS handshake, Newsession Ticket (4):

* TLSv1.3 (IN), TLS handshake, Newsession Ticket (4):

* old SSL session ID is stale, removing

* TLSv1.2 (IN), TLS header, Supplemental data (23):

* Mark bundle as not supporting multiuse

< HTTP/1.1 200 OK

< server: envoy

< date: Sat, 13 Jul 2024 09:52:20 GMT

< content-type: text/html

< content-length: 47

< last-modified: Sat, 13 Jul 2024 08:48:55 GMT

< etag: "66923f77-2f"

< accept-ranges: bytes

< x-envoy-upstream-service-time: 0

<

PodName: cni-2qqzv | PodIP: eth0 10.0.1.162/32

* Connection #0 to host cni.evescn.com left intact

From the host machine (172.18.0.1), the service is reachable as expected over HTTPS at cni.evescn.com, which resolves to 172.18.0.202.
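Editing /etc/hosts is not the only way to point the host name at the Ingress address. curl's `--resolve HOST:PORT:ADDRESS` option pins the name for a single request, which avoids touching system files. The sketch below only builds and prints the command, so it runs without a cluster; `resolve_entry` is a hypothetical helper, and the CA file path is the one used in the test above.

```shell
#!/usr/bin/env bash
# Build the value for curl's --resolve option: HOST:PORT:ADDRESS.
resolve_entry() {
  printf '%s:%s:%s\n' "$1" "$2" "$3"
}

entry=$(resolve_entry cni.evescn.com 443 172.18.0.202)

# Print the command instead of running it, since it needs the live Ingress.
echo "curl --resolve $entry --cacert /root/evescn.com.pem -v https://cni.evescn.com/"
```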

VI. Original Post

https://bbs.huaweicloud.com/blogs/418684#H13
