Table of Contents

- Preface

- Environment

- Modules used

- Code

- Closing

Preface

Hello everyone, this is 魔王 ❤!

Environment:

- Python 3.8 interpreter

- PyCharm editor

Modules used:

- requests: HTTP request module (third-party), install with pip install requests

- csv: built-in module for writing CSV files

第三方模块安装:

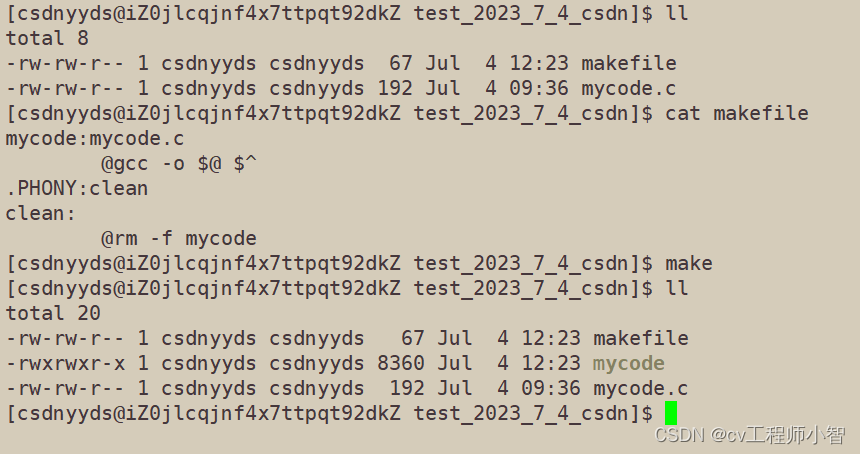

win + R 输入cmd 输入安装命令 pip install 模块名 (如果你觉得安装速度比较慢, 你可以切换国内镜像源)


Code

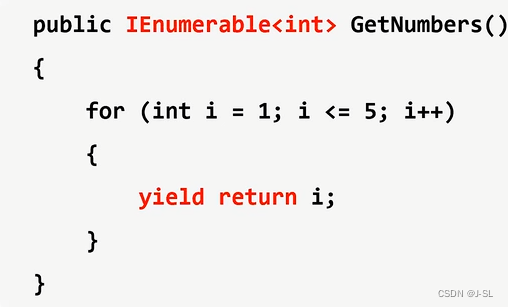

# Import the HTTP request module
import requests
# Import the pretty-print module
from pprint import pprint
# Import the csv module
import csv
# Import pandas (not used in the code shown here)
import pandas as pd

# Open the output file and write the header row
f = open('data.csv', mode='w', encoding='utf-8', newline='')
csv_writer = csv.DictWriter(f, fieldnames=[
    '股票名称', '发行量(万)', '发行价', '发行后每股净资产', '首日开盘价',
    '首日涨跌幅', '首日换手率', '上市后涨跌幅', '市盈率(TTM)', '市净率',
    '当前价', '今日涨跌幅', '每签获利',
])
csv_writer.writeheader()

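"""
1. Send the request: imitate a browser and send a request to the URL.
   - Request URL: when it is long, split it in two. The part before ? is the URL, the part after ? is the request parameters.
   - Batch replace: press Ctrl + R, tick .* (regex mode), then replace (.*?): (.*) with '$1': '$2',
   - Imitate a browser: without browser headers the request fails, because the site identifies it as a crawler.
"""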
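The batch-replace tip in the note above can also be reproduced in Python with re.sub. The following is only a minimal sketch: the header text is made up for illustration (it is not taken from the site), and headers_to_dict is a hypothetical helper name.

```python
import re

# Hypothetical header text, in the "Name: value" form that browser
# developer tools show. These values are illustrative only.
raw_headers = """Accept: application/json
Referer: https://example.com/page
User-Agent: Mozilla/5.0"""

# Same pattern as the editor tip: find (.*?): (.*) and replace with '$1': '$2',
# Python's re.sub writes group references as \1 and \2 instead of $1 and $2.
converted = re.sub(r"(.*?): (.*)", r"'\1': '\2',", raw_headers)
print(converted)


def headers_to_dict(text):
    """Alternative: build the dict directly, splitting each line at the first ': '."""
    return dict(line.split(": ", 1) for line in text.splitlines() if line.strip())


headers = headers_to_dict(raw_headers)
print(headers)
```

The first form gives text you can paste between braces in the source file; the second skips the copy-and-paste step when the header text is already available as a string.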

# Collect multiple pages
for page in range(1, 11):
    # Request URL
    url = 'https://****/v5/stock/preipo/cn/query.json'
    # Request parameters (dict)
    data = {
        'page': page,
        'size': '30',
        'order': 'desc',
        'order_by': 'list_date',
        'type': 'quote',
    }
    # Imitate a browser
    headers = {
        # Cookie: user information
        'Cookie': 'device_id=2fd33de446466b8053638f07d480b33f; s=d613bsv82e; xq_a_token=57b2a0b86ca3e943ee1ffc69509952639be342b9; xq_r_token=59c1392434fb1959820e4323bb26fa31dd012ea4; xq_id_token=eyJ0eXAiOiJKV1QiLCJhbGciOiJSUzI1NiJ9.eyJ1aWQiOi0xLCJpc3MiOiJ1YyIsImV4cCI6MTY5MDMzMTY5OCwiY3RtIjoxNjg3OTQ5MzMzNzM2LCJjaWQiOiJkOWQwbjRBWnVwIn0.c5xOaPy5EzJVIttd0qCKFctugs7l_86SB1DaHR5Qagnhc3eyUHFMnE_ZLaQ3oJC2CCirhdTkYjxEUQfC_FZ2igeSw_frxediYuhf8Bd8BWVU6oPRhSvJTADXtsYH96XXD5X0c8AslHhaw55O4FkXuaTgqJFSWRb-eVetp5Q64Chjk7pL24XrJtNpv6epPLf4JVvWSRNuoRKM43SRSZp4a0SItsuAs-0nC9jPHbPa-oz46Ay-LEfyyAnv9DIyiUmHna25bS7yAcKtt6J_BudRJjuT_iFpn1JRV85pqDKYXtbI60cFtYsRvdHAO-KYexB6nWK9uMCfPEHZE_q_R1BbWQ; u=531687949381081; Hm_lvt_1db88642e346389874251b5a1eded6e3=1687949381; Hm_lpvt_1db88642e346389874251b5a1eded6e3=1687949381',
        # User-Agent: browser identity
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36',
    }
    # Send the request; <Response [403]> means the request failed
    response = requests.get(url, params=data, headers=headers)
    # 2. Get the data: response.json() returns the response JSON as a dict
    # 3. Parse the data: pull out the fields we want by key
    info = []
    # Loop over every item on this page
    for index in response.json()['data']['items']:
        dit = {
            '股票名称': index['name'],
            '发行量(万)': index['actissqty'],
            '发行价': index['iss_price'],
            '发行后每股净资产': index['napsaft'],
            '首日开盘价': index['first_open_price'],
            '首日涨跌幅': index['first_percent'],
            '首日换手率': index['first_turnrate'],
            '上市后涨跌幅': index['listed_percent'],
            '市盈率(TTM)': index['pe_ttm'],
            '市净率': index['pb'],
            '当前价': index['current'],
            '今日涨跌幅': index['percent'],
            '每签获利': index['stock_income'],
        }
        info.append(dit)
        csv_writer.writerow(dit)
        print(dit)

# Close the file once all pages are written
f.close()
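The parsing step above can be tried without sending any request. The sketch below assumes a small made-up list shaped like response.json()['data']['items']. The sample values, FIELD_MAP, and item_to_row are illustrative names that do not appear in the original code, and only three of the article's columns are used.

```python
import csv
import io

# Hypothetical sample shaped like response.json()['data']['items'].
# The values are made up for illustration only.
sample_items = [
    {'name': 'Stock A', 'iss_price': 10.5, 'current': 12.0},
    {'name': 'Stock B', 'iss_price': 8.0},  # 'current' is missing here
]

# CSV column header -> key in the API item (a subset of the article's columns)
FIELD_MAP = {
    '股票名称': 'name',
    '发行价': 'iss_price',
    '当前价': 'current',
}


def item_to_row(item):
    """Build one CSV row; .get() yields None instead of raising KeyError."""
    return {column: item.get(key) for column, key in FIELD_MAP.items()}


buffer = io.StringIO()  # stands in for the opened data.csv file
writer = csv.DictWriter(buffer, fieldnames=list(FIELD_MAP))
writer.writeheader()
for item in sample_items:
    writer.writerow(item_to_row(item))

print(buffer.getvalue())
```

Keeping the column-to-key mapping in one dict means the header row and the row contents cannot drift apart, and a missing key produces an empty cell rather than stopping the whole run.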

# Second script: minute-by-minute chart data for one stock
import requests
import datetime
import csv

f = open('data1.csv', mode='w', encoding='utf-8', newline='')
# The field names must match the keys of the row dict built below
csv_writer = csv.DictWriter(f, fieldnames=['时间', '最新', '涨跌幅', '涨跌额', '均价', '最高', '最低', '成交额'])
csv_writer.writeheader()

url = 'https://******/v5/stock/chart/minute.json?symbol=SZ301378&period=1d'
headers = {
    'Cookie': 'device_id=2fd33de446466b8053638f07d480b33f; s=d613bsv82e; xq_a_token=57b2a0b86ca3e943ee1ffc69509952639be342b9; xq_r_token=59c1392434fb1959820e4323bb26fa31dd012ea4; xq_id_token=eyJ0eXAiOiJKV1QiLCJhbGciOiJSUzI1NiJ9.eyJ1aWQiOi0xLCJpc3MiOiJ1YyIsImV4cCI6MTY5MDMzMTY5OCwiY3RtIjoxNjg3OTQ5MzMzNzM2LCJjaWQiOiJkOWQwbjRBWnVwIn0.c5xOaPy5EzJVIttd0qCKFctugs7l_86SB1DaHR5Qagnhc3eyUHFMnE_ZLaQ3oJC2CCirhdTkYjxEUQfC_FZ2igeSw_frxediYuhf8Bd8BWVU6oPRhSvJTADXtsYH96XXD5X0c8AslHhaw55O4FkXuaTgqJFSWRb-eVetp5Q64Chjk7pL24XrJtNpv6epPLf4JVvWSRNuoRKM43SRSZp4a0SItsuAs-0nC9jPHbPa-oz46Ay-LEfyyAnv9DIyiUmHna25bS7yAcKtt6J_BudRJjuT_iFpn1JRV85pqDKYXtbI60cFtYsRvdHAO-KYexB6nWK9uMCfPEHZE_q_R1BbWQ; u=531687949381081; Hm_lvt_1db88642e346389874251b5a1eded6e3=1687949381; is_overseas=0; Hm_lpvt_1db88642e346389874251b5a1eded6e3=1687956046',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36',
}
response = requests.get(url, headers=headers)

for index in response.json()['data']['items']:
    # The timestamp is in milliseconds; drop the last three digits to get seconds
    timestamp = int(str(index['timestamp'])[:-3])
    dt = datetime.datetime.fromtimestamp(timestamp)
    print(dt)
    dit = {
        '时间': dt,
        '最新': index['current'],
        '涨跌幅': index['percent'],
        '涨跌额': index['chg'],
        '均价': index['avg_price'],
        '最高': index['high'],
        '最低': index['low'],
        # Taken from the volume field with its last two digits dropped, as in the original
        '成交额': str(index['volume'])[:-2],
    }
    # Write the row so that data1.csv is not left with only a header
    csv_writer.writerow(dit)
    print(dit)

f.close()
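The timestamp handling above trims the last three digits of a millisecond value by string slicing. A numeric version is sketched below; the sample timestamp is made up for illustration, ms_to_datetime is a hypothetical helper name, and an explicit UTC time zone is used so the result does not depend on the machine's local settings.

```python
from datetime import datetime, timezone


def ms_to_datetime(timestamp_ms):
    """Convert a millisecond Unix timestamp to a timezone-aware UTC datetime."""
    return datetime.fromtimestamp(timestamp_ms / 1000, tz=timezone.utc)


# Hypothetical millisecond timestamp, chosen only for illustration
sample_ms = 1687915800000

# String slicing (as in the script) and integer division give the same seconds value
seconds_by_slicing = int(str(sample_ms)[:-3])
seconds_by_division = sample_ms // 1000

dt_utc = ms_to_datetime(sample_ms)
print(seconds_by_slicing, seconds_by_division, dt_utc.isoformat())
```

Calling datetime.fromtimestamp without a tz argument, as the script does, returns local time, so the printed values depend on where the script runs.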

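Closing

Thanks for reading! This flight ends here 🛬

I hope this article was helpful 🎉 and that you learned something from it.

Even the hidden stars 🍥 are working hard to shine, so keep going (let's work hard together).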