The code for train.py is as follows:

    import torch
    import torch.nn as nn
    import torch.optim as optim

    model_save_path = "my_model.pth"


    # Define a simple linear neural network model
    class MyModel(nn.Module):
        def __init__(self):
            super(MyModel, self).__init__()
            self.output = nn.Linear(2, 4)  # 2 input features, 4 output classes

        def forward(self, x):
            x = self.output(x)
            return x


    def main():
        # Data points
        x = torch.tensor([[0, 0], [0, 10], [10, 0], [10, 10]], dtype=torch.float32)
        y = torch.tensor([0, 1, 2, 3], dtype=torch.long)

        # Initialize the model
        model = MyModel()

        # Define the loss function and the optimizer
        criterion = nn.CrossEntropyLoss()
        optimizer = optim.Adam(model.parameters(), lr=0.01)

        # Train the model
        num_iterations = 10000  # number of iterations
        for i in range(num_iterations):
            model.train()

            # Forward pass: compute the predicted output
            y_pred = model(x)

            # Compute the loss
            loss = criterion(y_pred, y)

            # Print the loss every 1000 iterations
            if i % 1000 == 0:
                print(f"Iteration {i}, loss: {loss.item():.4f}")

            # Backward pass and parameter update
            optimizer.zero_grad()  # clear the gradients
            loss.backward()  # compute the gradients
            optimizer.step()  # update the parameters

        # Print the optimized weights and biases
        print("\nOptimized weights and biases:")
        for name, param in model.named_parameters():
            if param.requires_grad:
                print(f"{name} = {param.data.numpy()}")

        # Save the model
        torch.save(model.state_dict(), model_save_path)
        print(f"Model saved to {model_save_path}")


    if __name__ == "__main__":
        main()

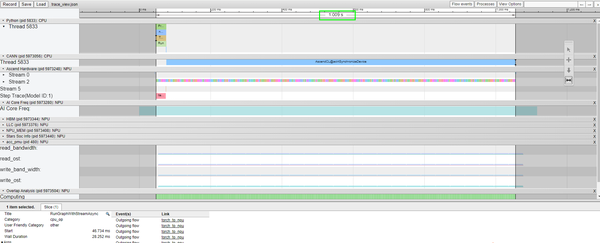

Run output
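The model in train.py returns raw logits, and `nn.CrossEntropyLoss` applies the softmax internally, which is why `forward` contains no softmax layer. As a rough illustration, the loss for a batch can be sketched in plain Python as the mean of `-log(softmax(logits)[target])`. The names `log_softmax` and `cross_entropy` below are hypothetical helpers written for this sketch, not PyTorch calls, and the logits are made-up values rather than outputs of the trained model.

```python
import math


def log_softmax(logits):
    """Return log(softmax(logits)) for one row of raw scores."""
    m = max(logits)  # subtract the max so exp() cannot overflow
    log_sum = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_sum for v in logits]


def cross_entropy(batch_logits, targets):
    """Mean negative log-likelihood of the target classes."""
    losses = [-log_softmax(row)[t] for row, t in zip(batch_logits, targets)]
    return sum(losses) / len(losses)


# Illustrative logits only: all-zero scores mean a uniform guess over 4 classes.
uniform_logits = [[0.0, 0.0, 0.0, 0.0]] * 4
targets = [0, 1, 2, 3]
uniform_loss = cross_entropy(uniform_logits, targets)
print(f"{uniform_loss:.4f}")  # ln(4), about 1.3863
```

A model that guesses uniformly over four classes has a loss of ln(4), so loss values printed during training can be read against that baseline.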

The code for test.py is as follows:

    import numpy as np
    import torch
    from torch import nn

    from train import MyModel, model_save_path

    # Load the model
    loaded_model = MyModel()
    loaded_model.load_state_dict(torch.load(model_save_path))
    loaded_model.eval()  # switch to evaluation mode

    # Define the input to predict on
    input_data = [0, 9]

    # Predict with the loaded model
    x_new = torch.tensor([input_data], dtype=torch.float32)  # new data point
    y_new_pred = loaded_model(x_new)  # compute the prediction (raw logits)

    # Use softmax to compute the probability of each class
    softmax = nn.Softmax(dim=1)
    y_new_pred_probs = softmax(y_new_pred)

    # Find the predicted class
    predicted_class = torch.argmax(y_new_pred_probs, dim=1)

    # Round the probability distribution to three decimal places
    y_new_pred_probs_rounded = np.round(y_new_pred_probs.detach().numpy(), 3)

    print(f"\nPredicted class for x = {input_data}: {predicted_class.item()}")
    print(f"Class probability distribution: {y_new_pred_probs_rounded}")

    # Print the weights and bias
    weights = loaded_model.output.weight  # output layer weights
    bias = loaded_model.output.bias  # output layer bias

    print(f"\nModel weights:\n{weights}")
    print(f"\nModel bias:\n{bias}")

    # Compute input_data * weights + bias
    with torch.no_grad():
        linear_output = x_new @ weights.t() + bias

    print(f"\ninput_data * weights + bias = {linear_output.numpy()}")

    # Manually compute the softmax probability distribution
    linear_output_np = linear_output.numpy()
    exp_output = np.exp(linear_output_np)
    softmax_output_manual = exp_output / np.sum(exp_output)

    print(f"\nManually computed softmax distribution: {softmax_output_manual}")
    print(f"Manually computed predicted class: {np.argmax(softmax_output_manual)}")

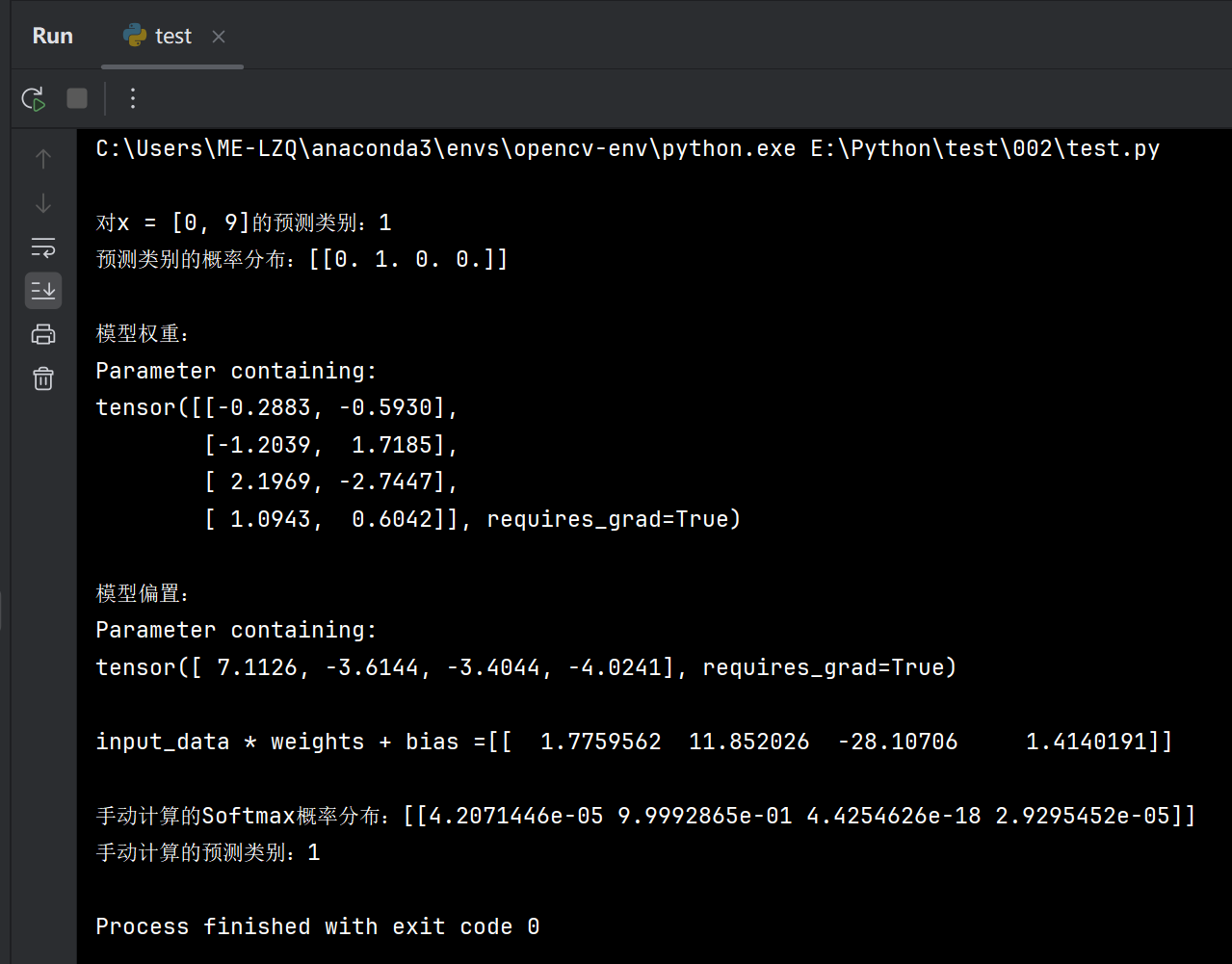

Run output
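The manual softmax in test.py exponentiates the logits directly. That works for the values here, but it can overflow when the logits are large. A common variant subtracts the row maximum before exponentiating, which leaves the probabilities unchanged. The following is a minimal sketch in plain Python; `stable_softmax` is a hypothetical helper, and the logits are made-up values rather than the trained model's output.

```python
import math


def stable_softmax(logits):
    """Softmax over one row of scores, shifted by the max for stability."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]  # largest exponent is exp(0) = 1
    total = sum(exps)
    return [e / total for e in exps]


# Illustrative logits only.
logits = [2.0, 1.0, 0.0, -1.0]
probs = stable_softmax(logits)
predicted = max(range(len(probs)), key=probs.__getitem__)  # argmax
print([round(p, 3) for p in probs], predicted)

# Shifting every logit by the same constant gives the same distribution.
# math.exp(1000.0) on its own would raise OverflowError.
big = stable_softmax([1000.0, 999.0, 998.0, 997.0])
print([round(p, 3) for p in big])
```

Because softmax depends only on the differences between logits, both calls print the same distribution.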