Centoschina

项目要求

爬取centoschina_cn的所有问题,包括文章标题和内容

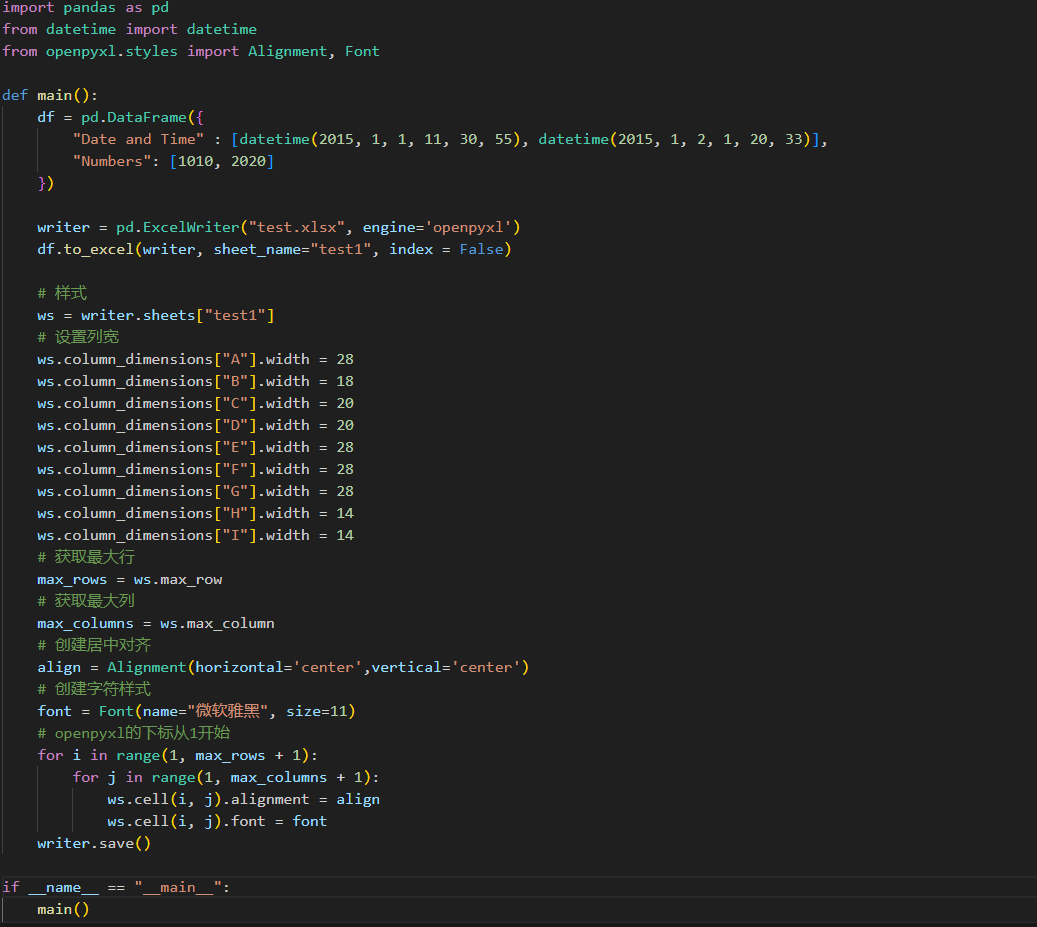

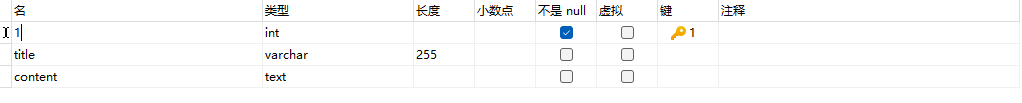

数据库表设计

库表设计:

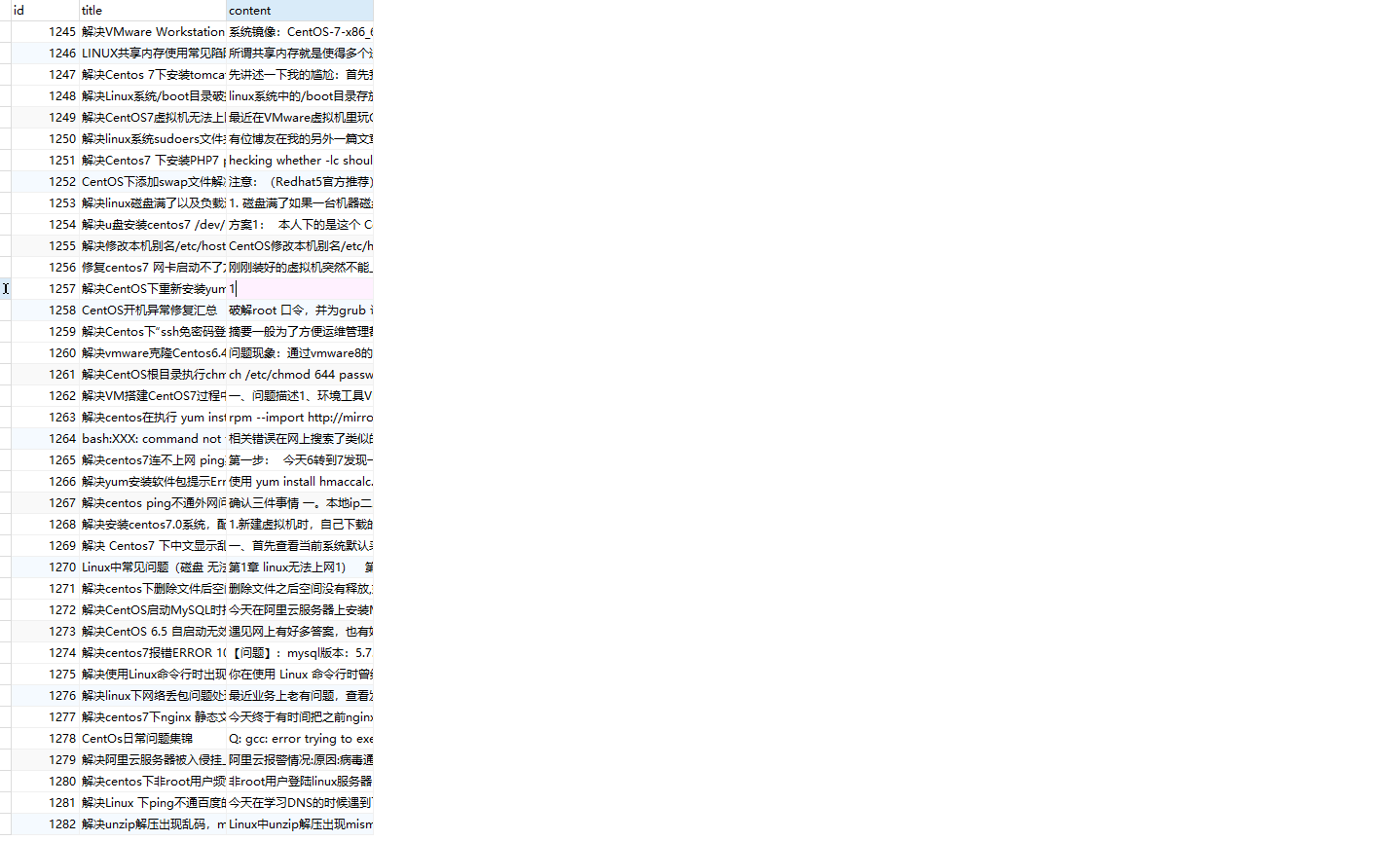

数据展示:

项目亮点

-

低耦合,高内聚。

爬虫专有settings

custom_settings = custom_settings_for_centoschina_cncustom_settings_for_centoschina_cn = {'MYSQL_USER': 'root','MYSQL_PWD': '123456','MYSQL_DB': 'questions', } -

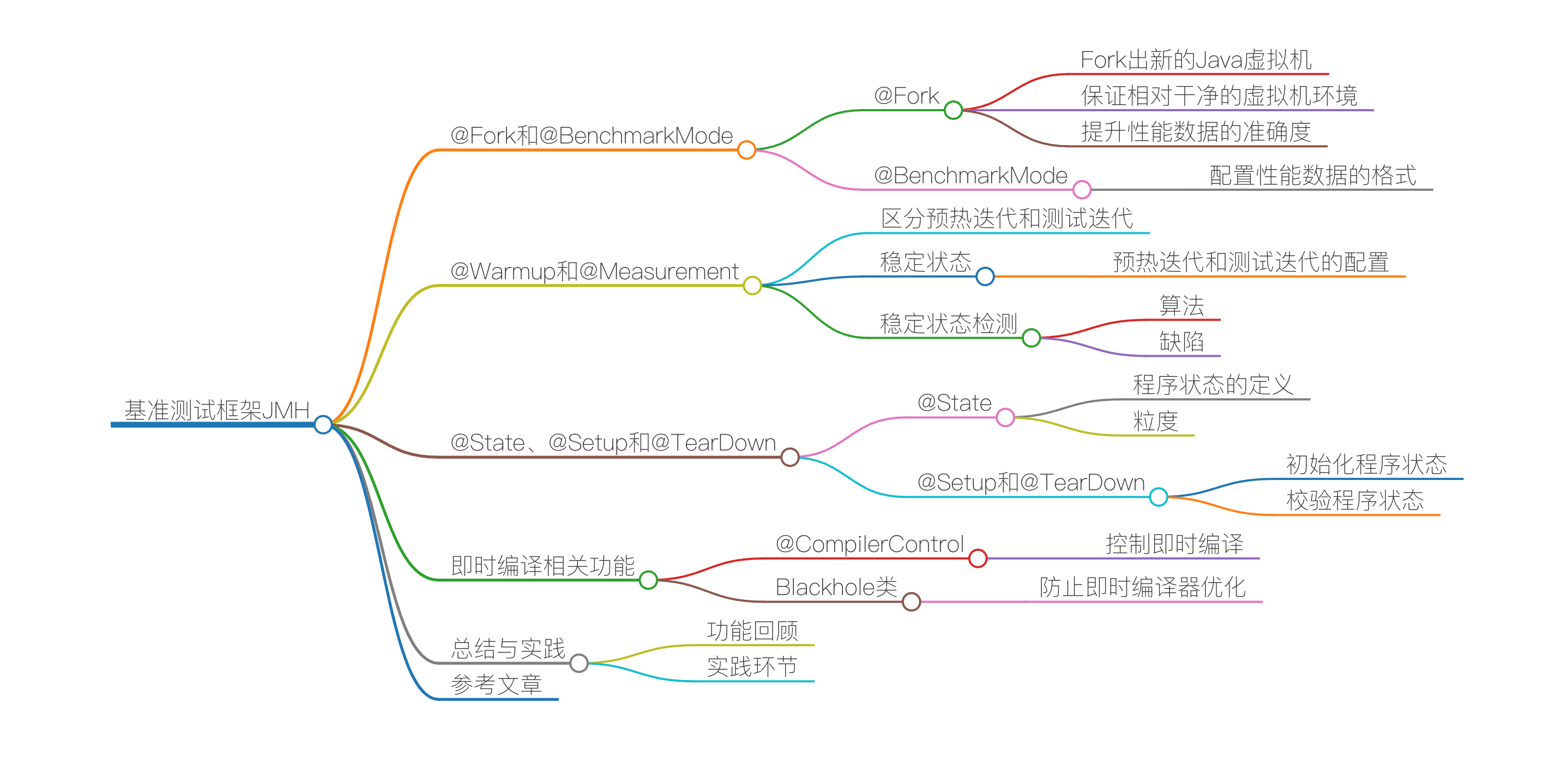

DownloaderMiddleware使用

class CentoschinaDownloaderMiddleware:# Not all methods need to be defined. If a method is not defined,# scrapy acts as if the downloader middleware does not modify the# passed objects.@classmethoddef from_crawler(cls, crawler):# This method is used by Scrapy to create your spiders.s = cls()crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)return s# 处理请求def process_request(self, request, spider):# Called for each request that goes through the downloader# middleware.# Must either:# - return None: continue processing this request 继续执行下一步操作,不处理默认返回None# - or return a Response object 直接返回响应, 如scrapy和pyppeteer不需要用下载器中间件访问外网,直接返回响应, pyppeteer有插件,一般和scrapy还能配合,selenium不行,没有插件# - or return a Request object 将请求返回到schdular的调度队列中供以后重新访问# - or raise IgnoreRequest: process_exception() methods of# installed downloader middleware will be calledreturn None# 处理响应def process_response(self, request, response, spider):# Called with the response returned from the downloader.# Must either;# - return a Response object 返回响应结果# - return a Request object 结果不对(判断结果对不对一般判断状态码和内容大小)一般返回request,也是将请求返回到schdular的调度队列中供以后重新访问# - or raise IgnoreRequestreturn response# 处理异常:如超时错误等def process_exception(self, request, exception, spider):# Called when a download handler or a process_request()# (from other downloader middleware) raises an exception.# Must either:# - return None: continue processing this exception 继续执行下一步,没有异常# - return a Response object: stops process_exception() chain 如果其返回一个 Response 对象,则已安装的中间件链的 process_response() 方法被调用。Scrapy将不会调用任何其他中间件的 process_exception() 方法。# - return a Request object: stops process_exception() chain 将请求返回到schdular的调度队列中供以后重新访问passdef spider_opened(self, spider):spider.logger.info("Spider opened: %s" % spider.name) -

DownloaderMiddleware中抛弃请求写法

- 适用场景:请求异常,换代理或者换cookie等操作

# from scrapy.exceptions import IgnoreRequest # raise IgnoreRequest(f'Failed to retrieve {request.url} after {max_retries} retries')例子:处理下载异常并重试请求

import logging from scrapy.exceptions import IgnoreRequestclass RetryExceptionMiddleware:def __init__(self):self.logger = logging.getLogger(__name__)def process_exception(self, request, exception, spider):# 记录异常信息self.logger.warning(f'Exception {exception} occurred while processing {request.url}')# 检查是否达到重试次数限制max_retries = 3retries = request.meta.get('retry_times', 0) + 1if retries <= max_retries:self.logger.info(f'Retrying {request.url} (retry {retries}/{max_retries})')# 增加重试次数request.meta['retry_times'] = retriesreturn requestelse:self.logger.error(f'Failed to retrieve {request.url} after {max_retries} retries')raise IgnoreRequest(f'Failed to retrieve {request.url} after {max_retries} retries')例子:切换代理

import randomclass SwitchProxyMiddleware:def __init__(self, proxy_list):self.proxy_list = proxy_listself.logger = logging.getLogger(__name__)@classmethoddef from_crawler(cls, crawler):proxy_list = crawler.settings.get('PROXY_LIST')return cls(proxy_list)def process_exception(self, request, exception, spider):self.logger.warning(f'Exception {exception} occurred while processing {request.url}')# 切换代理proxy = random.choice(self.proxy_list)self.logger.info(f'Switching proxy to {proxy}')request.meta['proxy'] = proxy# 重试请求return request -

piplines中抛弃item写法

- 适用场景:数据清洗、去重、验证等操作

# from scrapy.exceptions import DropItem # raise DropItem("Duplicate item found: %s" % item) -

保存到文件(通过命令)

from scrapy.cmdline import execute execute(['scrapy', 'crawl', 'centoschina_cn', '-o', 'questions.csv'])

更多精致内容: