- 作业①

- 实验要求及结果

- 心得体会

- 作业②

- 实验要求及结果

- 心得体会

- 作业③

- 实验要求及结果

- 心得体会

- 码云连接

作业①

实验要求及结果

- 要求

要求:指定一个网站,爬取这个网站中的所有的所有图片,例如:中国气象网(http://www.weather.com.cn)。使用scrapy框架分别实现单线程和多线程的方式爬取。

–务必控制总页数(学号尾数2位)、总下载的图片数量(尾数后3位)等限制爬取的措施。

输出信息: 将下载的Url信息在控制台输出,并将下载的图片存储在images子文件中,并给出截图。

- 代码

这里我选择爬取当当网

spider.py(包含单线程和多线程爬虫)

# 单线程

import os

import requests

import scrapy

from dangdang_images.items import DangdangImagesItemclass DangdangSearchSpider(scrapy.Spider):name = 'dangdang_search'allowed_domains = ['search.dangdang.com','ddimg.cn']start_urls = ['https://search.dangdang.com/?key=%CA%E9%B0%FC&act=input']max_images = 135 # 最大图片下载数量max_pages = 35 # 最大页数image_count = 0 # 已下载图片数量计数page_count = 0 # 已访问页面计数def parse(self, response):# 检查是否达到爬取的页数限制if self.page_count >= self.max_pages or self.image_count >= self.max_images:return# 获取所有书籍封面图片的 URLimage_urls = response.css("img::attr(data-original)").getall()if not image_urls:# 有些图片URL属性可能是 `src`,尝试备用选择器image_urls = response.css("img::attr(src)").getall()for url in image_urls:if self.image_count < self.max_images:self.image_count += 1item = DangdangImagesItem()# 使用 response.urljoin 补全 URLitem['image_urls'] = [response.urljoin(url)]print("Image URL:", item['image_urls'])yield itemelse:return # 如果图片数量达到限制,停止爬取# 控制页面数量并爬取下一页self.page_count += 1next_page = response.css("li.next a::attr(href)").get()if next_page and self.page_count < self.max_pages:yield response.follow(next_page, self.parse)# 多线程# import scrapy

# from dangdang_images.items import DangdangImagesItem

# import concurrent.futures

#

# class DangdangSearchSpider(scrapy.Spider):

# name = 'dangdang_search'

# allowed_domains = ['search.dangdang.com', 'ddimg.cn']

# start_urls = ['https://search.dangdang.com/?key=%CA%E9%B0%FC&act=input']

# max_images = 135 # 最大图片下载数量

# max_pages = 35 # 最大页数

# image_count = 0 # 已下载图片数量计数

# page_count = 0 # 已访问页面计数

#

# def parse(self, response):

# # 检查是否达到爬取的页数限制

# if self.page_count >= self.max_pages or self.image_count >= self.max_images:

# return

#

# # 获取所有书籍封面图片的 URL

# image_urls = response.css("img::attr(data-original)").getall()

# if not image_urls:

# # 有些图片URL属性可能是 `src`,尝试备用选择器

# image_urls = response.css("img::attr(src)").getall()

#

# # 使用 ThreadPoolExecutor 下载图片

# with concurrent.futures.ThreadPoolExecutor(max_workers=4) as executor:

# futures = []

# for url in image_urls:

# if self.image_count < self.max_images:

# self.image_count += 1

# item = DangdangImagesItem()

# # 使用 response.urljoin 补全 URL

# item['image_urls'] = [response.urljoin(url)]

# print("Image URL:", item['image_urls'])

# futures.append(executor.submit(self.download_image, item))

# else:

# break # 如果图片数量达到限制,停止爬取

#

# # 控制页面数量并爬取下一页

# self.page_count += 1

# next_page = response.css("li.next a::attr(href)").get()

# if next_page and self.page_count < self.max_pages:

# yield response.follow(next_page, self.parse)

#

# def download_image(self, item):

# # 获取图片 URL

# image_url = item['image_urls'][0]

#

# # 确定保存路径

# image_name = image_url.split("/")[-1] # 从 URL 中提取图片文件名

# save_path = os.path.join('./images2', image_name)

#

# try:

# # 发送 GET 请求下载图片

# response = requests.get(image_url, stream=True)

# response.raise_for_status() # 检查请求是否成功

#

# # 创建目录(如果不存在)

# os.makedirs(os.path.dirname(save_path), exist_ok=True)

#

# # 保存图片到本地

# with open(save_path, 'wb') as f:

# for chunk in response.iter_content(chunk_size=8192):

# f.write(chunk)

#

# self.logger.info(f"Image downloaded and saved to {save_path}")

#

# except requests.exceptions.RequestException as e:

# self.logger.error(f"Failed to download image from {image_url}: {e}")settings.py

BOT_NAME = "dangdang_images"

SPIDER_MODULES = ["dangdang_images.spiders"]

NEWSPIDER_MODULE = "dangdang_images.spiders"

DEFAULT_REQUEST_HEADERS = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/130.0.0.0 Safari/537.36 Edg/130.0.0.0"

}

# Obey robots.txt rules

ROBOTSTXT_OBEY = False# 图片存储路径

IMAGES_STORE = './images'# 开启图片管道

ITEM_PIPELINES = {'scrapy.pipelines.images.ImagesPipeline': 1,

}# 并发请求数控制

CONCURRENT_REQUESTS = 4 # 可以根据需求调整

DOWNLOAD_DELAY = 2 # 设置下载延迟

LOG_LEVEL = 'DEBUG'RETRY_TIMES = 5 # 增加重试次数

RETRY_HTTP_CODES = [500, 502, 503, 504, 408]

items.py

import scrapyclass DangdangImagesItem(scrapy.Item):image_urls = scrapy.Field()images = scrapy.Field()

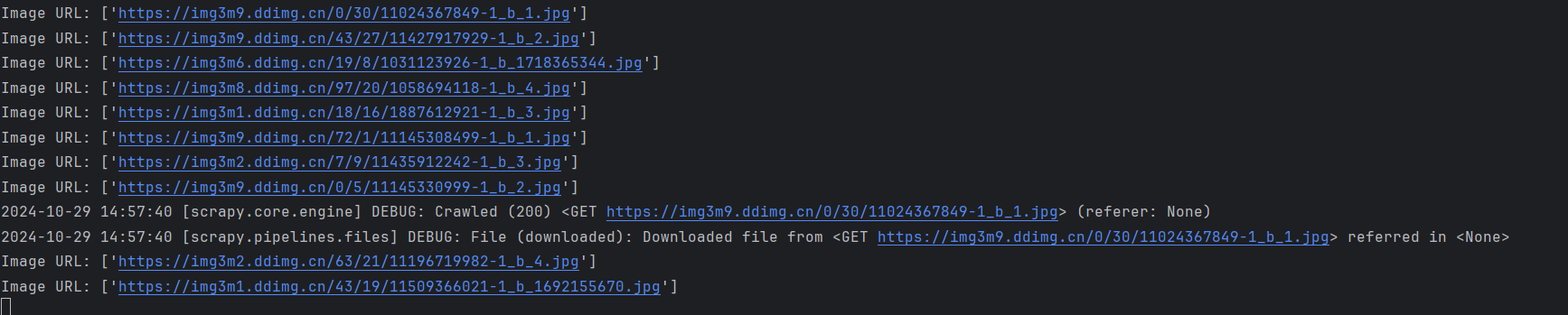

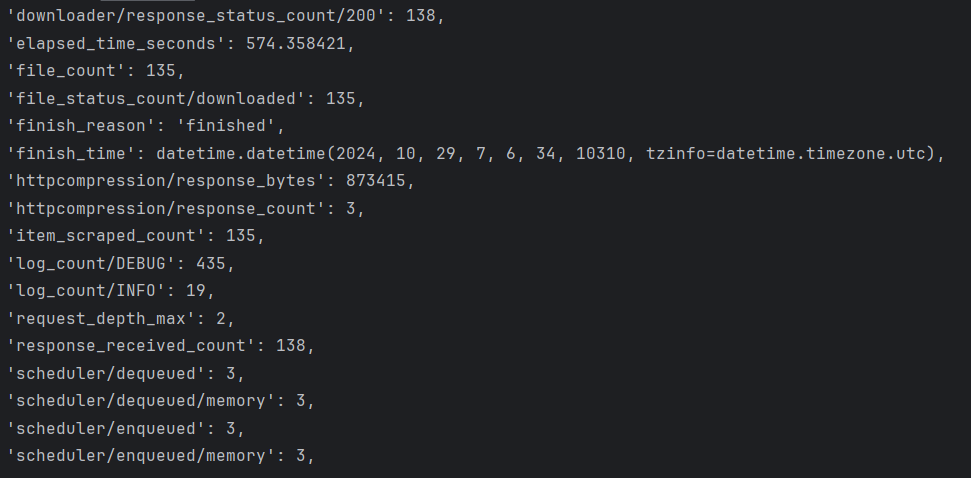

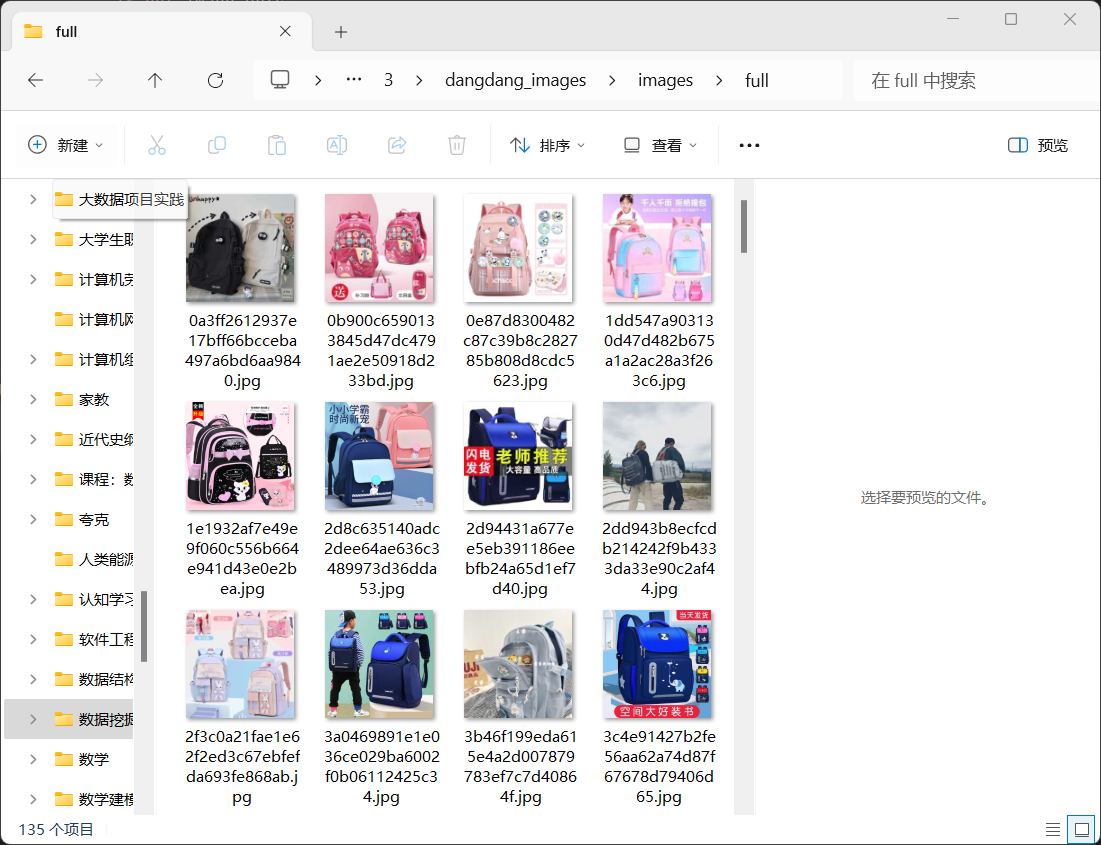

- 运行结果

单线程

多线程

心得体会

一开始爬取并不成功,后面发现违反了robots.txt规定的协议,所以我禁用了settings里面的设置,果然爬取成功。至于单线程和多线程爬虫,由于scrapy框架本身便是异步爬取,所以没有很能搞懂这里的区分,我认为在scrapy框架里,单线程和多线程的区别便是CONCURRENT_REQUESTS = 32 这句代码是否存在,也就是异步并发的次数。但我也写了使用threading库的多线程模式。

作业②

实验要求及结果

- 要求:

要求:熟练掌握 scrapy 中 Item、Pipeline 数据的序列化输出方法;使用scrapy框架+Xpath+MySQL数据库存储技术路线爬取股票相关信息。

候选网站:东方财富网:https://www.eastmoney.com/

输出信息:MySQL数据库存储和输出格式如下:

表头英文命名例如:序号id,股票代码:bStockNo……,由同学们自行定义设计

| 序号 | 股票代码 | 股票名称 | 最新报价 | 涨跌幅 | 涨跌额 | 成交量 | 振幅 | 最高 | 最低 | 今开 | 昨收 |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 688093 | N世华 | 28.47 | 10.92 | 26.13万 | 7.6亿 | 22.34 | 32.0 | 28.08 | 30.20 | 17.55 |

- 代码:

stock_spider.py

import reimport scrapy

import json

import pymysqlclass StocksSpider(scrapy.Spider):name = 'stocks'# 股票分类及接口参数cmd = {"沪深京A股": "f3&fs=m:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23,m:0+t:81+s:2048","上证A股": "f3&fs=m:1+t:2,m:1+t:23","深证A股": "f3&fs=m:0+t:6,m:0+t:80","北证A股": "f3&fs=m:0+t:81+s:2048",}start_urls = []def start_requests(self):for market_code in self.cmd.values():for page in range(1, 3): # 爬取前两页url = f"https://98.push2.eastmoney.com/api/qt/clist/get?cb=jQuery&pn={page}&pz=20&po=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&dect=1&wbp2u=|0|0|0|web&fid={market_code}&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152"yield scrapy.Request(url, callback=self.parse)def parse(self, response):# 提取JSON格式数据data = response.textleft_data = re.search(r'^.*?(?=\()', data)if left_data:left_data = left_data.group()data = re.sub(left_data + '\(', '', data)data = re.sub('\);', '', data)try:stock_data = json.loads(data)except json.JSONDecodeError as e:print(f"JSON Decode Error: {e}")return # 返回以避免后续操作print(f"Parsed JSON Data: {json.dumps(stock_data, indent=4, ensure_ascii=False)}") # 打印解析后的数据if 'data' in stock_data and 'diff' in stock_data['data']:for key, stock in stock_data['data']['diff'].items(): # 遍历 diff 字典# 在此添加调试信息,检查每个股票的数据print(f"Stock Data: {stock}")yield {'bStockNo': stock.get("f12", "N/A"),'bStockName': stock.get("f14", "N/A"),'fLatestPrice': stock.get("f2", "N/A"),'fChangeRate': stock.get("f3", "N/A"),'fChangeAmount': stock.get("f4", "N/A"),'fVolume': stock.get("f5", "N/A"),'fTurnover': stock.get("f6", "N/A"),'fAmplitude': stock.get("f7", "N/A"),'fHighest': stock.get("f15", "N/A"),'fLowest': stock.get("f16", "N/A"),'fOpeningPrice': stock.get("f17", "N/A"),'fPreviousClose': stock.get("f18", "N/A")}else:print("No 'data' or 'diff' found in stock_data.")else:print("Left data not found in response.")items.py

# Define here the models for your scraped items

#

# See documentation in:

# https://docs.scrapy.org/en/latest/topics/items.htmlimport scrapyclass StocksScraperItem(scrapy.Item):bStockNo = scrapy.Field()bStockName = scrapy.Field()fLatestPrice = scrapy.Field()fChangeRate = scrapy.Field()fChangeAmount = scrapy.Field()fVolume = scrapy.Field()fTurnover = scrapy.Field()fAmplitude = scrapy.Field()fHighest = scrapy.Field()fLowest = scrapy.Field()fOpeningPrice = scrapy.Field()fPreviousClose = scrapy.Field()pipelines.py

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html# useful for handling different item types with a single interface

from itemadapter import ItemAdapterimport pymysqlclass StocksScraperPipeline:def open_spider(self, spider):self.connection = pymysql.connect(host='127.0.0.1',port=33068,user='root', # 替换为你的MySQL用户名password='160127ss', # 替换为你的MySQL密码database='spydercourse', # 数据库名charset='utf8mb4',use_unicode=True,)self.cursor = self.connection.cursor()# 创建表格create_table_sql = """CREATE TABLE IF NOT EXISTS stocks (id INT AUTO_INCREMENT PRIMARY KEY,bStockNo VARCHAR(10),bStockName VARCHAR(50),fLatestPrice DECIMAL(10, 2),fChangeRate DECIMAL(5, 2),fChangeAmount DECIMAL(10, 2),fVolume BIGINT,fTurnover DECIMAL(10, 2),fAmplitude DECIMAL(5, 2),fHighest DECIMAL(10, 2),fLowest DECIMAL(10, 2),fOpeningPrice DECIMAL(10, 2),fPreviousClose DECIMAL(10, 2));"""self.cursor.execute(create_table_sql)def close_spider(self, spider):self.connection.close()def process_item(self, item, spider):print(f"Storing item: {item}") # 打印每个存储的项try:insert_sql = """INSERT INTO stocks (bStockNo, bStockName, fLatestPrice, fChangeRate, fChangeAmount, fVolume, fTurnover, fAmplitude, fHighest, fLowest, fOpeningPrice, fPreviousClose) VALUES (%s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s)"""self.cursor.execute(insert_sql, (item['bStockNo'],item['bStockName'],float(item['fLatestPrice']),float(item['fChangeRate']),float(item['fChangeAmount']),int(item['fVolume']),float(item['fTurnover']),float(item['fAmplitude']),float(item['fHighest']),float(item['fLowest']),float(item['fOpeningPrice']),float(item['fPreviousClose']),))self.connection.commit()except Exception as e:print(f"Error storing item: {e}")print(f"SQL: {insert_sql} | Values: {item}")settings.py

BOT_NAME = "stocks_scraper"SPIDER_MODULES = ["stocks_scraper.spiders"]

NEWSPIDER_MODULE = "stocks_scraper.spiders"ITEM_PIPELINES = {'stocks_scraper.pipelines.StocksScraperPipeline': 300,

}

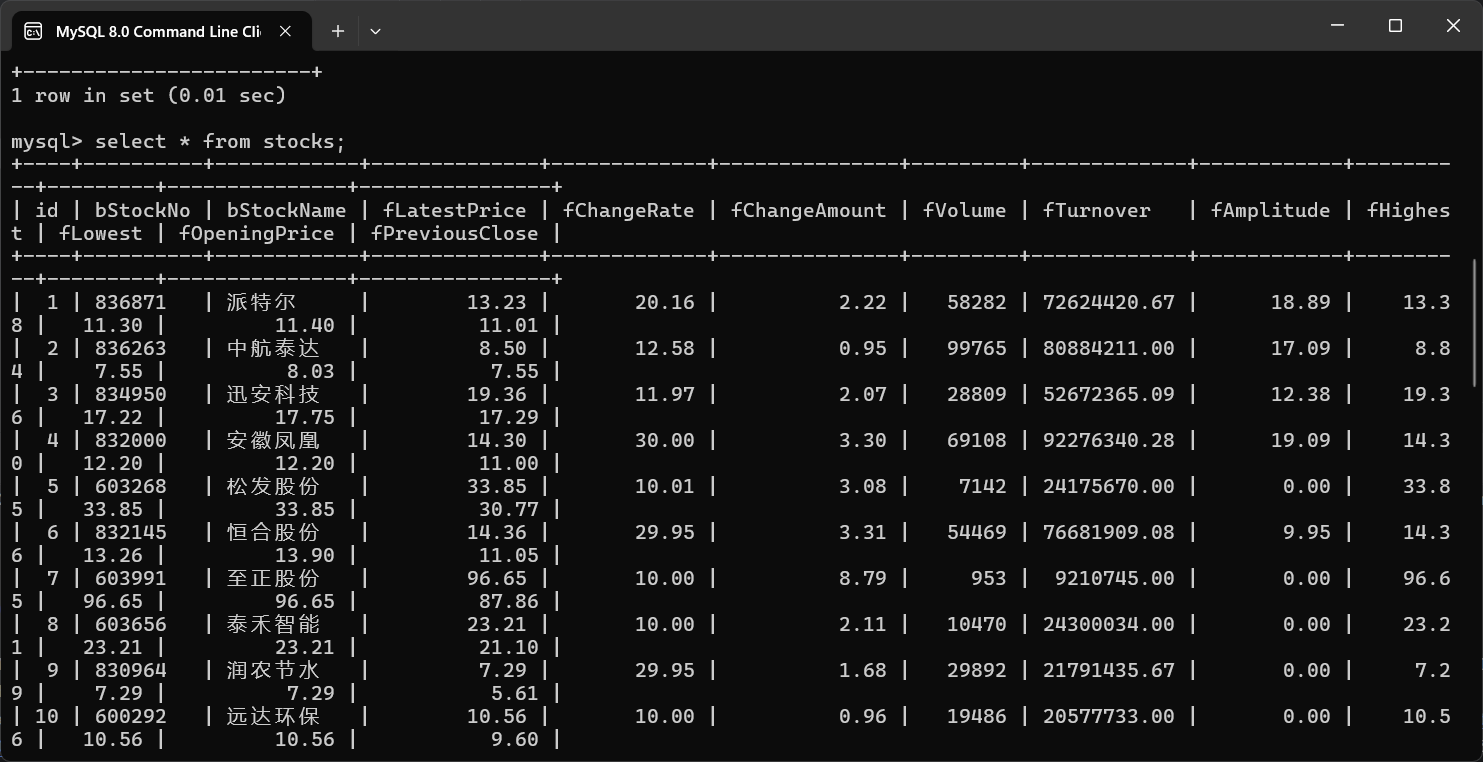

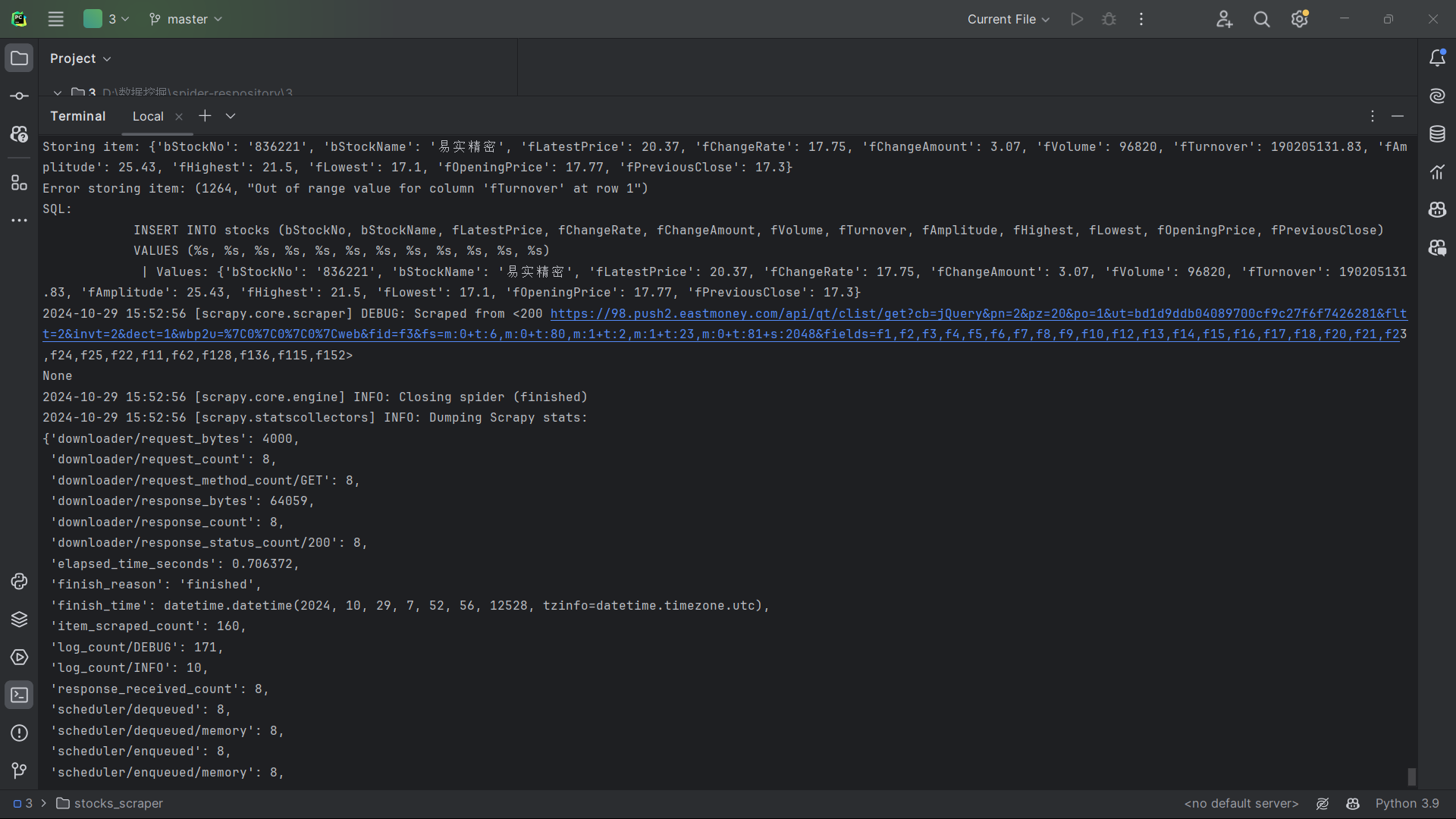

ROBOTSTXT_OBEY = False- 截图

心得体会

这道题花费的时间非常的长,先是在实践课上使用scrapy框架爬取动态网页一直不出结果,最后在老师的提示下延续上次的爬取东方财富网的实验使用抓包再转换成scrapy框架格式爬取静态网页后才得以成功。

感觉使用selenium驱动得到静态网页的源码,在使用scrapy框架来做也是可以的。

作业③

实验要求及结果

- 要求:要求:熟练掌握 scrapy 中 Item、Pipeline 数据的序列化输出方法;使用scrapy框架+Xpath+MySQL数据库存储技术路线爬取外汇网站数据。

候选网站:中国银行网:https://www.boc.cn/sourcedb/whpj/

输出信息:

| Currency | TBP | CBP | TSP | CSP | Time |

|---|---|---|---|---|---|

| 阿联酋迪拉姆 | 198.58 | 192.31 | 199.98 | 206.59 | 11:27:14 |

- 代码:

bank_spider.py

import scrapy

from bank.items import BankItem

class BankSpider(scrapy.Spider):name = 'bank'start_urls = ['https://www.boc.cn/sourcedb/whpj/index.html']def start_requests(self):num_pages = int(getattr(self, 'pages', 4))for page in range(1, num_pages + 1):if page == 1:start_url = f'https://www.boc.cn/sourcedb/whpj/index.html'else:start_url = f'https://www.boc.cn/sourcedb/whpj/index_{page - 1}.html'yield scrapy.Request(start_url, callback=self.parse)def parse(self, response):bank_list = response.xpath('//tr[position()>1]')for bank in bank_list:item = BankItem()item['Currency'] = bank.xpath('.//td[1]/text()').get()item['TBP'] = bank.xpath('.//td[2]/text()').get()item['CBP'] = bank.xpath('.//td[3]/text()').get()item['TSP'] = bank.xpath('.//td[4]/text()').get()item['CSP'] = bank.xpath('.//td[5]/text()').get()item['Time'] = bank.xpath('.//td[8]/text()').get()yield itemitems.py

import scrapyclass BankItem(scrapy.Item):Currency = scrapy.Field()TBP = scrapy.Field()CBP = scrapy.Field()TSP = scrapy.Field()CSP = scrapy.Field()Time = scrapy.Field()

pipelines.py

from itemadapter import ItemAdapter

import pymysqlclass BankPipeline:def open_spider(self, spider):# 连接到 MySQL 数据库self.connection = pymysql.connect(host='127.0.0.1',port=33068,user='root', # 替换为你的MySQL用户名password='160127ss', # 替换为你的MySQL密码database='spydercourse', # 数据库名charset='utf8mb4',use_unicode=True,)self.cursor = self.connection.cursor() # 修正为使用 self.connectionself.create_database()def create_database(self):# 创建表格self.cursor.execute('''CREATE TABLE IF NOT EXISTS banks (Currency VARCHAR(50),TBP DECIMAL(10, 2),CBP DECIMAL(10, 2),TSP DECIMAL(10, 2),CSP DECIMAL(10, 2),Time VARCHAR(20) # 改为 VARCHAR 类型以存储时间字符串)''')self.connection.commit()def process_item(self, item, spider):# 插入数据self.cursor.execute('''INSERT INTO banks (Currency,TBP,CBP,TSP,CSP,Time) VALUES (%s, %s, %s, %s, %s, %s)''',(item['Currency'], item['TBP'], item['CBP'], item['TSP'], item['CSP'], item['Time']))self.connection.commit()return itemdef close_spider(self, spider):# 关闭数据库连接self.cursor.close()self.connection.close() # 修正为使用 self.connectionsettings.py

BOT_NAME = "bank"SPIDER_MODULES = ["bank.spiders"]

NEWSPIDER_MODULE = "bank.spiders"

CONCURRENT_REQUESTS = 1

DOWNLOAD_DELAY = 1ITEM_PIPELINES = {'bank.pipelines.BankPipeline': 300,

}

ROBOTSTXT_OBEY = False

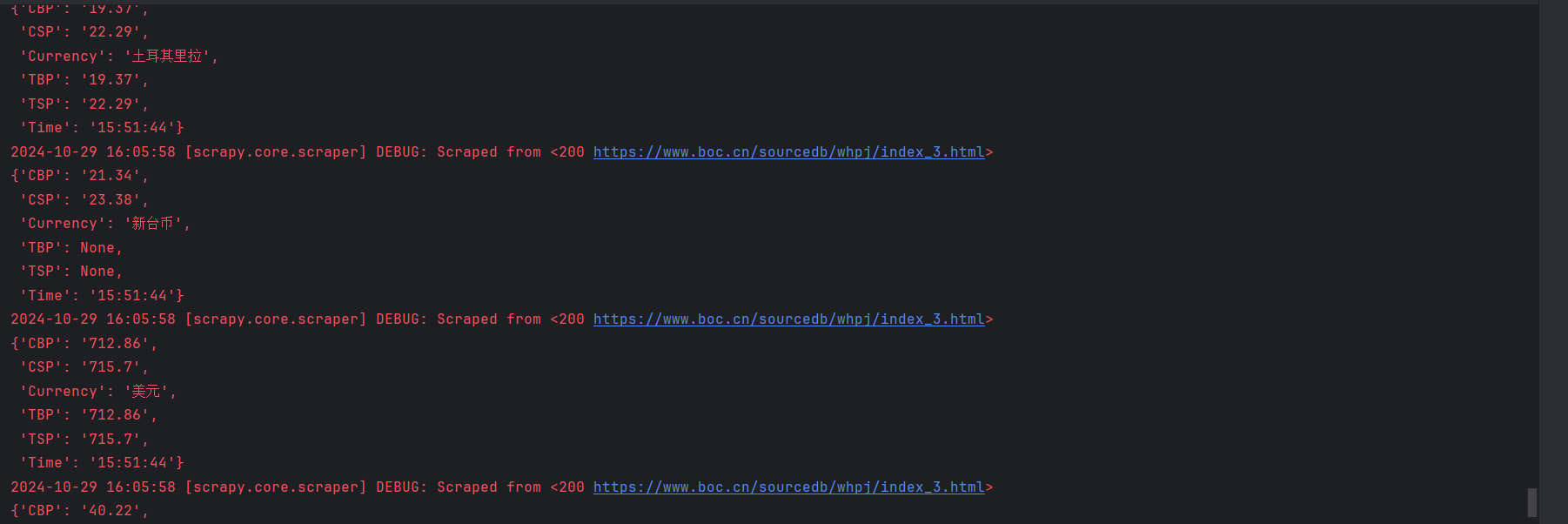

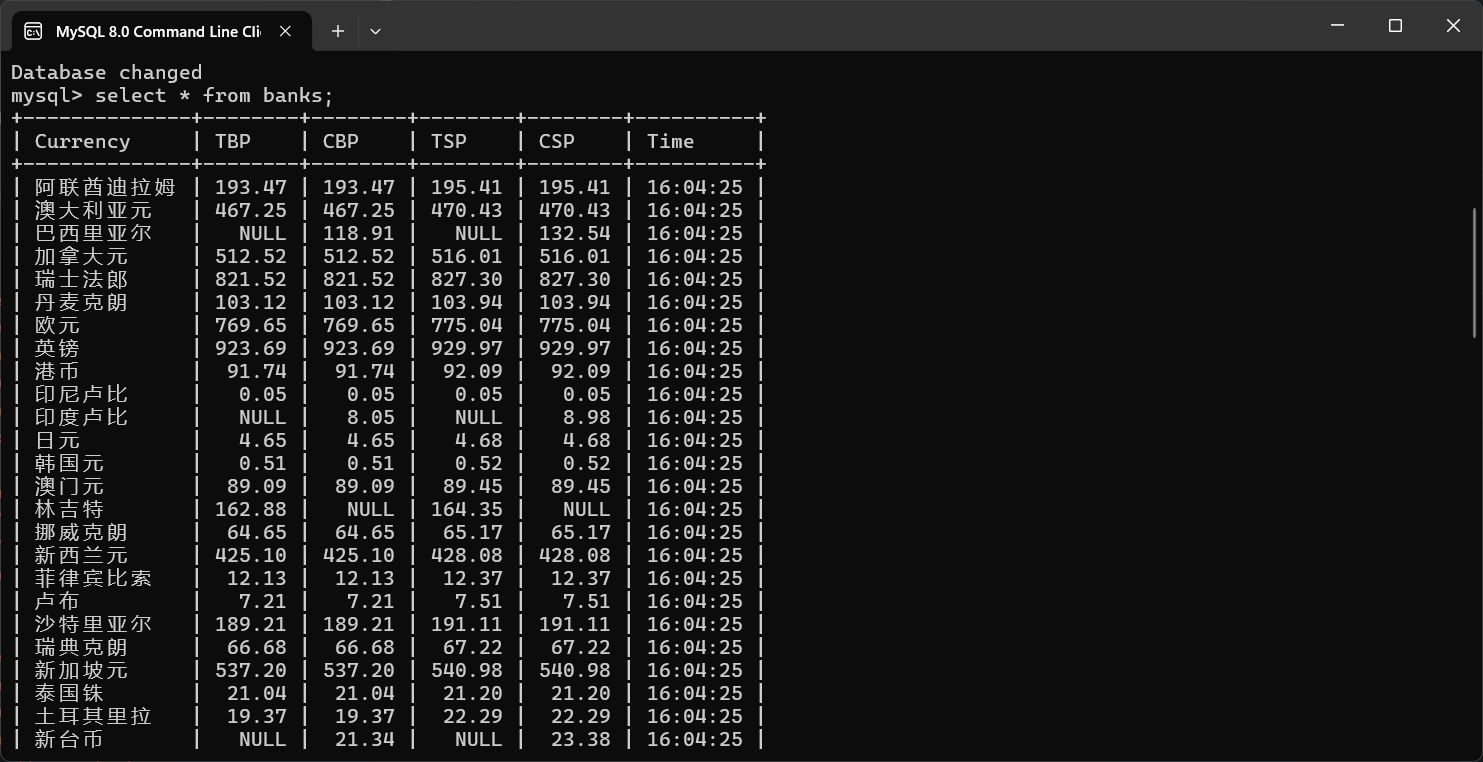

- 运行结果:

心得体会

这道题目主要考查了我对Scrapy框架和MySQL数据库操作的综合运用能力。在项目实现过程中,我通过Scrapy的Item和Pipeline模块完成了数据的提取、清洗和存储,同时还学习了如何将数据从爬虫传递到MySQL数据库中。这不仅加深了我对爬虫框架的理解,也锻炼了我解决实际问题的能力。

在实际操作中遇到的几个关键问题,比如字段数据为空值的处理、MySQL连接参数的配置错误、以及数据插入时的字段类型匹配问题,虽然一开始带来了困扰,但在调试和查阅文档后得到了圆满解决。这让我明白了在编程实践中,耐心分析和逐步解决问题的重要性。