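Recently a project at my company needed computer-vision object detection, so I turned to OpenCV. The CUDA-enabled version of OpenCV has to be compiled yourself from source, downloaded from the OpenCV releases. The version I downloaded is OpenCV 4.10: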

https://github.com/opencv/opencv/archive/refs/tags/4.10.0.zip

You also need to download opencv_contrib-4.10.0 and CMake.

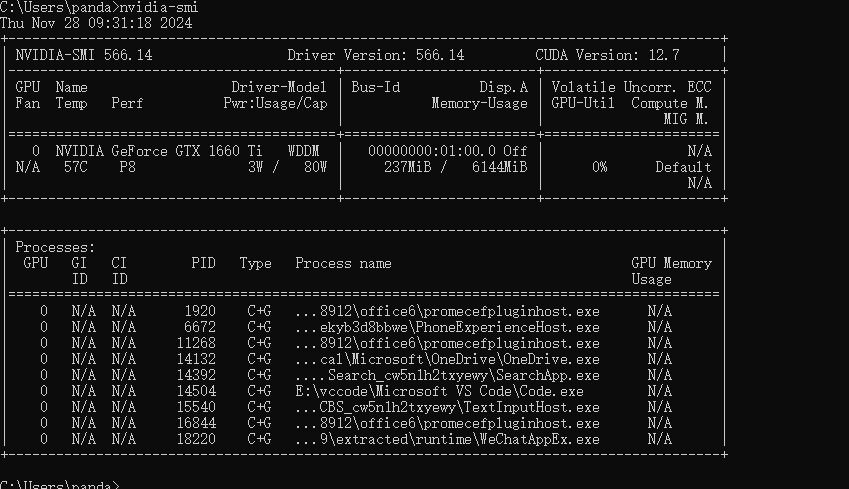

Before downloading, check your CUDA and cuDNN versions.

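In cmd, run nvidia-smi to see your CUDA version, then download and install the matching CUDA Toolkit from NVIDIA. Note that nvidia-smi shows the highest CUDA version your driver supports; nvcc --version shows the toolkit that is actually installed.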
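If you would rather check this from code than by eye, here is a minimal sketch that runs nvidia-smi and pulls the "CUDA Version: X.Y" field out of its header. It assumes nvidia-smi is on PATH and that the header contains the text "CUDA Version:" as current drivers print it; parse_cuda_version is a hypothetical helper name.

```rust
use std::process::Command;

/// Extracts (major, minor) from the "CUDA Version: X.Y" field of nvidia-smi output.
fn parse_cuda_version(output: &str) -> Option<(u32, u32)> {
    // Everything after the label, e.g. " 12.6     |\n..."
    let rest = output.split("CUDA Version:").nth(1)?;
    let token = rest.split_whitespace().next()?;
    let (major, minor) = token.split_once('.')?;
    Some((major.parse().ok()?, minor.parse().ok()?))
}

fn main() {
    match Command::new("nvidia-smi").output() {
        Ok(out) => {
            let text = String::from_utf8_lossy(&out.stdout);
            match parse_cuda_version(&text) {
                Some((major, minor)) => println!("Driver supports CUDA up to {major}.{minor}"),
                None => println!("Could not find 'CUDA Version' in nvidia-smi output"),
            }
        }
        Err(e) => println!("Failed to run nvidia-smi: {e}"),
    }
}
```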

I'm using the CUDA 12.6 I had installed earlier, so I didn't upgrade: https://developer.nvidia.com/cuda-downloads

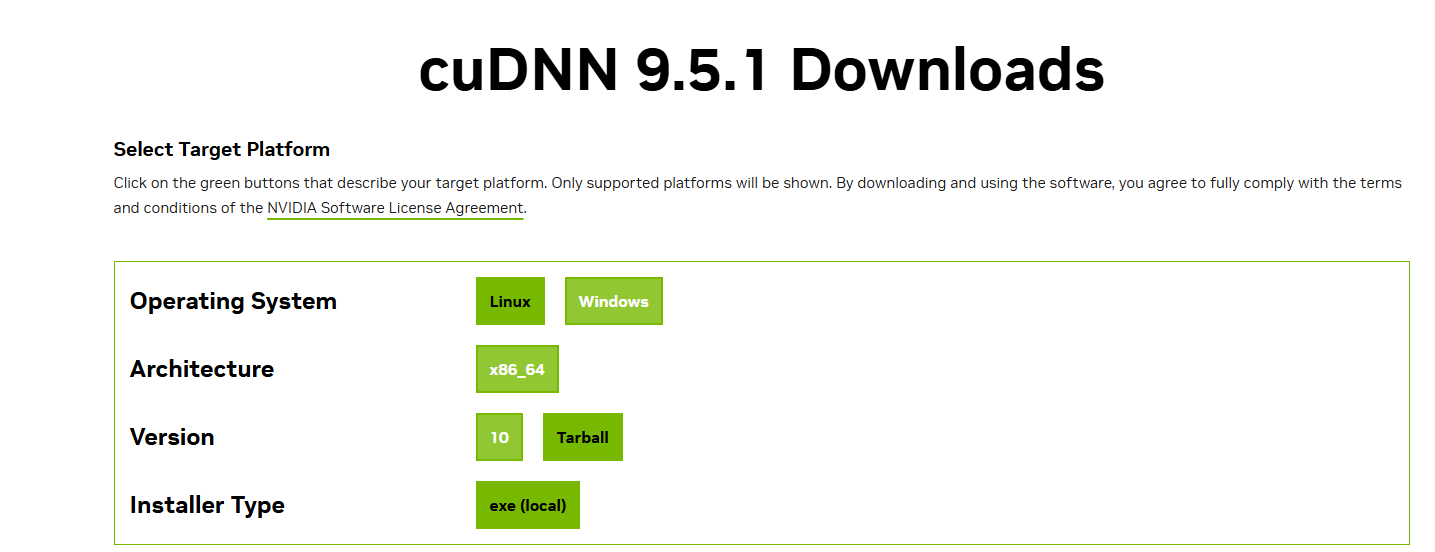

Next, download the cuDNN version that matches your CUDA version: https://developer.nvidia.com/cudnn-downloads

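Note where cuDNN was installed; mine is the default, C:\Program Files\NVIDIA\CUDNN\v9.5. Copy the files from its bin, include and lib folders (from the subfolder named after your CUDA version) into the bin, include and lib folders of the CUDA install, C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v12.6. With that, cuDNN is set up.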
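After copying, you can confirm that the headers landed in the CUDA include folder, and see which version they are, by reading the CUDNN_MAJOR, CUDNN_MINOR and CUDNN_PATCHLEVEL macros from cudnn_version.h (cuDNN 8 and later keep them in that header). A minimal std-only sketch; the path assumes the CUDA 12.6 default location above, and read_define / cudnn_version are hypothetical helper names.

```rust
use std::fs;

/// Returns the integer value of `#define NAME value` in a C header, if present.
fn read_define(header: &str, name: &str) -> Option<u32> {
    header.lines().find_map(|line| {
        let mut parts = line.split_whitespace();
        if parts.next()? == "#define" && parts.next()? == name {
            parts.next()?.parse().ok()
        } else {
            None
        }
    })
}

/// Parses (major, minor, patch) from the contents of cudnn_version.h.
fn cudnn_version(header: &str) -> Option<(u32, u32, u32)> {
    Some((
        read_define(header, "CUDNN_MAJOR")?,
        read_define(header, "CUDNN_MINOR")?,
        read_define(header, "CUDNN_PATCHLEVEL")?,
    ))
}

fn main() {
    // Assumed location: the CUDA include folder the cuDNN files were copied into
    let path = r"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v12.6\include\cudnn_version.h";
    match fs::read_to_string(path) {
        Ok(text) => match cudnn_version(&text) {
            Some((major, minor, patch)) => println!("cuDNN {major}.{minor}.{patch} found"),
            None => println!("cudnn_version.h found but the version macros are missing"),
        },
        Err(e) => println!("cannot read {path}: {e}"),
    }
}
```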

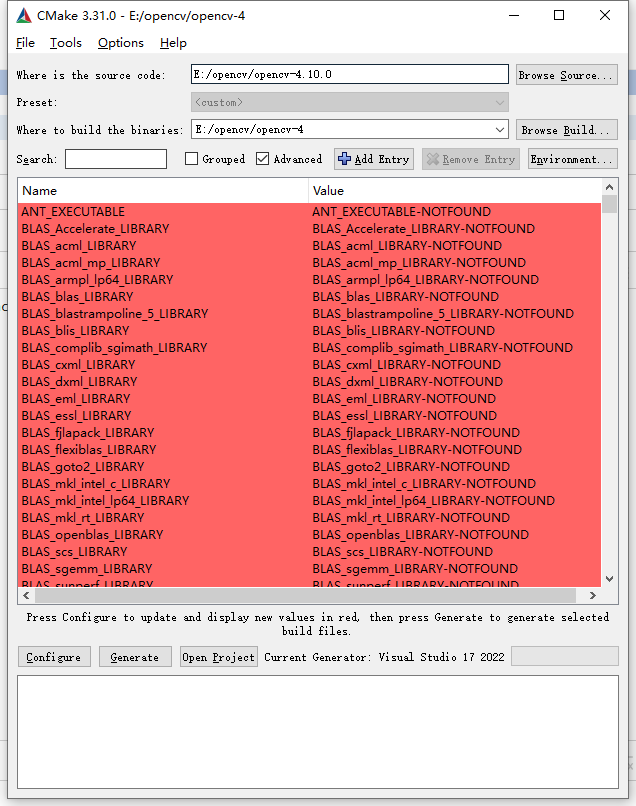

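Rust users on Windows have usually already installed the C++ build tools (MSVC), so I won't cover that here and go straight to compiling. In the CMake GUI, set the source directory to E:/opencv/opencv-4.10.0 (where I unpacked OpenCV) and the build directory to E:/opencv/opencv-4 (where the build output goes).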

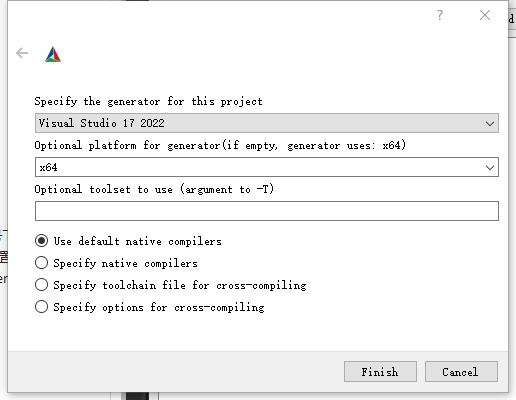

Click Configure and choose your Visual Studio version and the x64 platform.

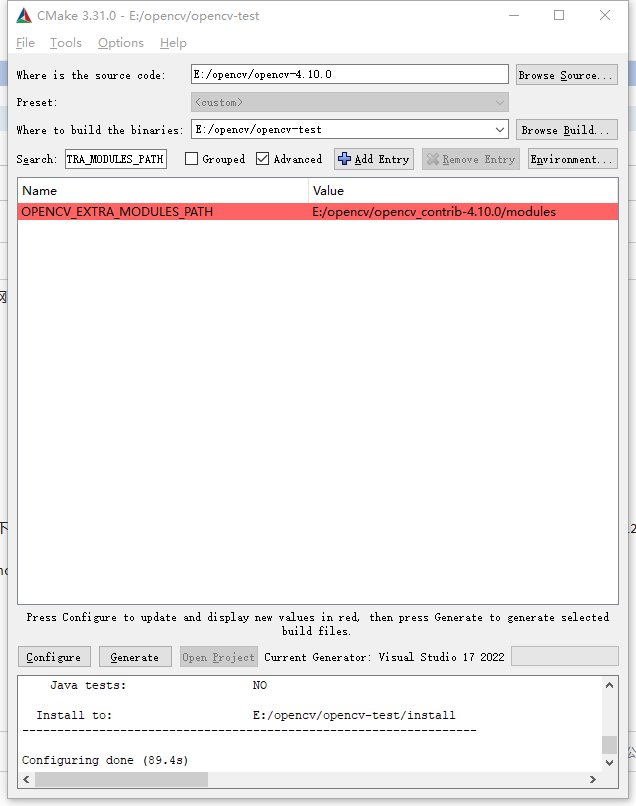

When it reports Configuring done, search for OPENCV_EXTRA_MODULES_PATH and set it to the modules folder of the unpacked opencv_contrib.

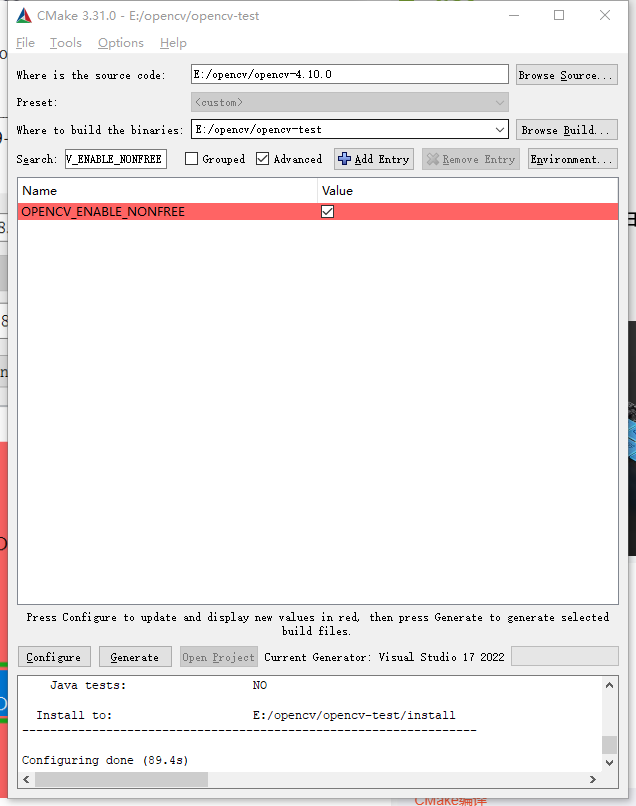

Next, search for OPENCV_ENABLE_NONFREE and check it.

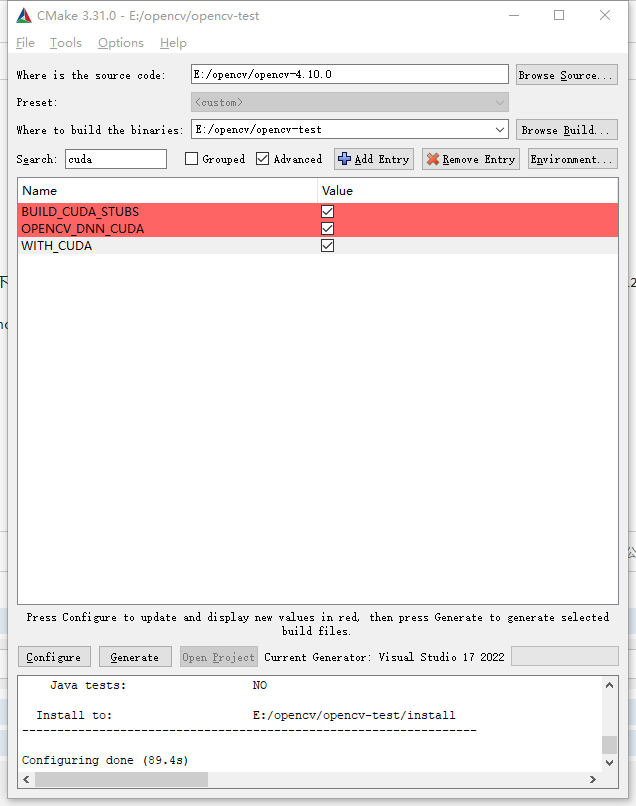

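Search for cuda and check all the matching options (in particular WITH_CUDA and OPENCV_DNN_CUDA, which the DNN module's CUDA backend needs).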

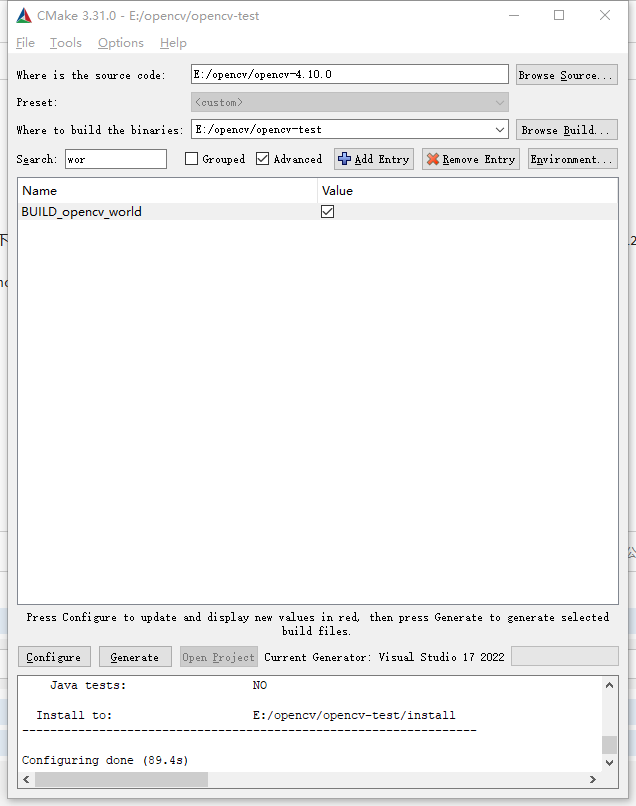

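Finally, search for "wor" and check BUILD_opencv_world, which builds everything into a single opencv_world library.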

Click Configure again. During this step, downloads from raw.githubusercontent.com may fail; if that happens, look up how to add GitHub entries to your hosts file.

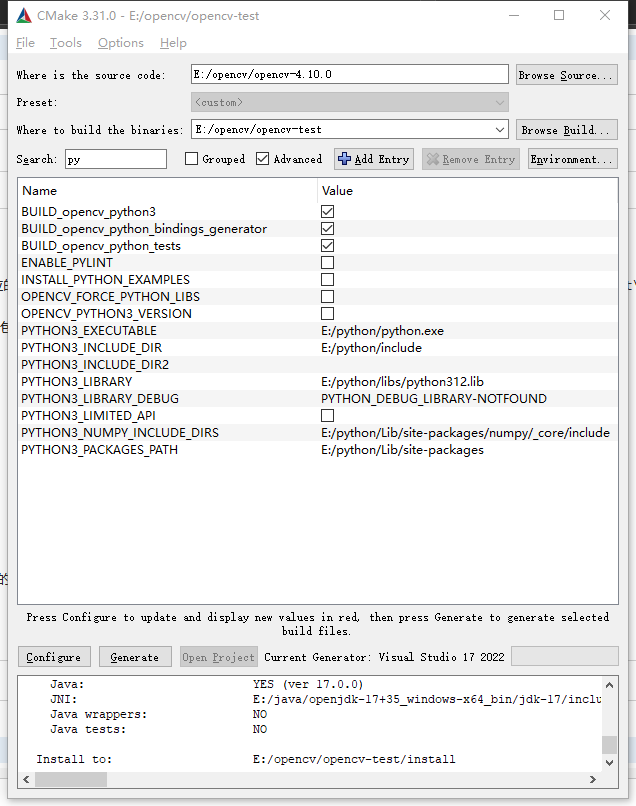

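The Rust opencv crate calls into the C++ library, so if you don't have Python, Java and similar environments installed, search for them and uncheck all of their options.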

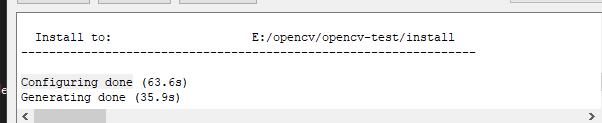
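The GUI choices above can also be expressed as cmake -D cache options, which is handy when repeating the build. The sketch below only assembles and prints the command line. It assumes the source and build paths used earlier, a contrib folder at E:/opencv/opencv_contrib-4.10.0 (hypothetical, adjust it), Visual Studio 2022, and CMake 3.13 or newer for -S/-B; it also assumes "check all cuda" means at least WITH_CUDA, WITH_CUDNN and OPENCV_DNN_CUDA. configure_args is a hypothetical helper name.

```rust
/// Builds the cmake configure arguments equivalent to the CMake GUI choices.
fn configure_args(src: &str, build: &str, contrib_modules: &str) -> Vec<String> {
    let mut args: Vec<String> = vec![
        "-S".into(), src.into(),
        "-B".into(), build.into(),
        "-G".into(), "Visual Studio 17 2022".into(),
        "-A".into(), "x64".into(),
    ];
    let options = [
        ("OPENCV_EXTRA_MODULES_PATH", contrib_modules),
        ("OPENCV_ENABLE_NONFREE", "ON"),
        ("WITH_CUDA", "ON"),
        ("WITH_CUDNN", "ON"),
        ("OPENCV_DNN_CUDA", "ON"),
        ("BUILD_opencv_world", "ON"),
        ("BUILD_opencv_python3", "OFF"),
        ("BUILD_JAVA", "OFF"),
    ];
    for (name, value) in options {
        args.push(format!("-D{name}={value}"));
    }
    args
}

fn main() {
    let args = configure_args(
        "E:/opencv/opencv-4.10.0",
        "E:/opencv/opencv-4",
        "E:/opencv/opencv_contrib-4.10.0/modules", // assumed contrib location
    );
    // Quote arguments containing spaces so the line can be pasted into cmd
    let shown: Vec<String> = args
        .iter()
        .map(|a| if a.contains(' ') { format!("\"{a}\"") } else { a.clone() })
        .collect();
    println!("cmake {}", shown.join(" "));
}
```

CUDA_ARCH_BIN is left at its default here; limiting it to your own GPU's compute capability can noticeably shorten the CUDA compile.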

Once it reports Configuring done, click Generate; when Generating done appears, click Open Project to open the solution in Visual Studio.

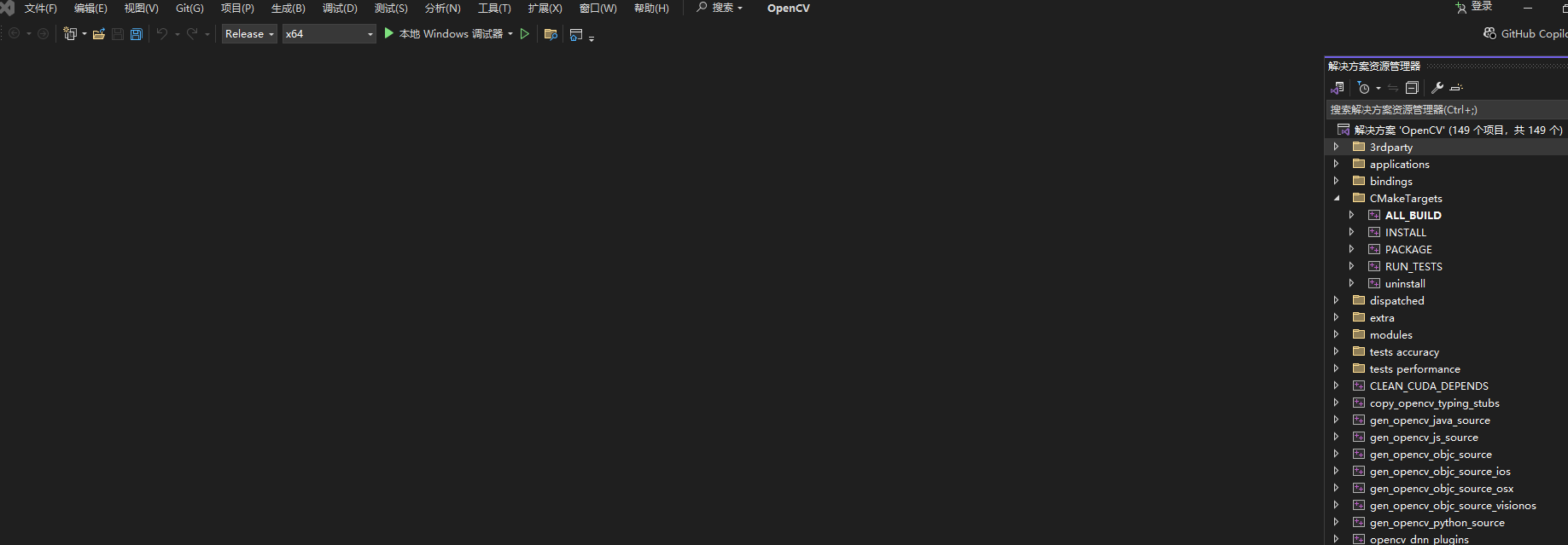

In Visual Studio, select Release and x64. Under CMakeTargets, build ALL_BUILD first, then build INSTALL to produce the installed package.
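Building ALL_BUILD and then INSTALL in Visual Studio corresponds roughly to running cmake --build twice from the command line. A sketch that runs those two steps with std::process::Command, assuming cmake is on PATH and the build directory E:/opencv/opencv-4 from above; --parallel needs CMake 3.12 or newer, and build_args is a hypothetical helper name.

```rust
use std::process::Command;

/// Arguments for `cmake --build` on one target in the Release configuration.
fn build_args(build_dir: &str, target: &str) -> Vec<String> {
    ["--build", build_dir, "--config", "Release", "--target", target, "--parallel"]
        .iter()
        .map(|s| s.to_string())
        .collect()
}

fn main() {
    let build_dir = "E:/opencv/opencv-4";
    // Same order as in Visual Studio: ALL_BUILD first, then INSTALL
    for target in ["ALL_BUILD", "INSTALL"] {
        match Command::new("cmake").args(build_args(build_dir, target)).status() {
            Ok(status) if status.success() => println!("{target}: ok"),
            Ok(status) => {
                eprintln!("{target} failed with {status}");
                return;
            }
            Err(e) => {
                eprintln!("could not run cmake: {e}");
                return;
            }
        }
    }
}
```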

For configuring the OpenCV environment, see https://www.cnblogs.com/-CO-/p/18075315
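The Rust opencv crate locates a self-built OpenCV through environment variables; its README documents OPENCV_LINK_LIBS, OPENCV_LINK_PATHS and OPENCV_INCLUDE_PATHS for this case, and its binding generator also needs libclang. Below is a small std-only sketch that reports which of the three variables are missing before you run cargo build. The example values in the comments (opencv_world4100 and the install/x64/vc17 layout under the build directory) are assumptions for a VS2022 build of 4.10.0 with the default install prefix, so check your own install folder. At run time the folder containing opencv_world4100.dll also has to be on PATH. missing_vars is a hypothetical helper name.

```rust
use std::env;

/// Variables the opencv crate reads to locate a manually built OpenCV.
const REQUIRED: [&str; 3] = ["OPENCV_LINK_LIBS", "OPENCV_LINK_PATHS", "OPENCV_INCLUDE_PATHS"];

/// Returns the names from REQUIRED that `lookup` reports as unset or blank.
fn missing_vars(lookup: impl Fn(&str) -> Option<String>) -> Vec<&'static str> {
    REQUIRED
        .iter()
        .copied()
        .filter(|name| lookup(*name).map_or(true, |v| v.trim().is_empty()))
        .collect()
}

fn main() {
    // Example values (assumptions for a VS2022 build with the default install prefix):
    //   OPENCV_LINK_LIBS=opencv_world4100
    //   OPENCV_LINK_PATHS=E:/opencv/opencv-4/install/x64/vc17/lib
    //   OPENCV_INCLUDE_PATHS=E:/opencv/opencv-4/install/include
    let missing = missing_vars(|name| env::var(name).ok());
    if missing.is_empty() {
        println!("all OpenCV build variables are set");
    } else {
        println!("missing: {}", missing.join(", "));
    }
}
```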

Development workflow

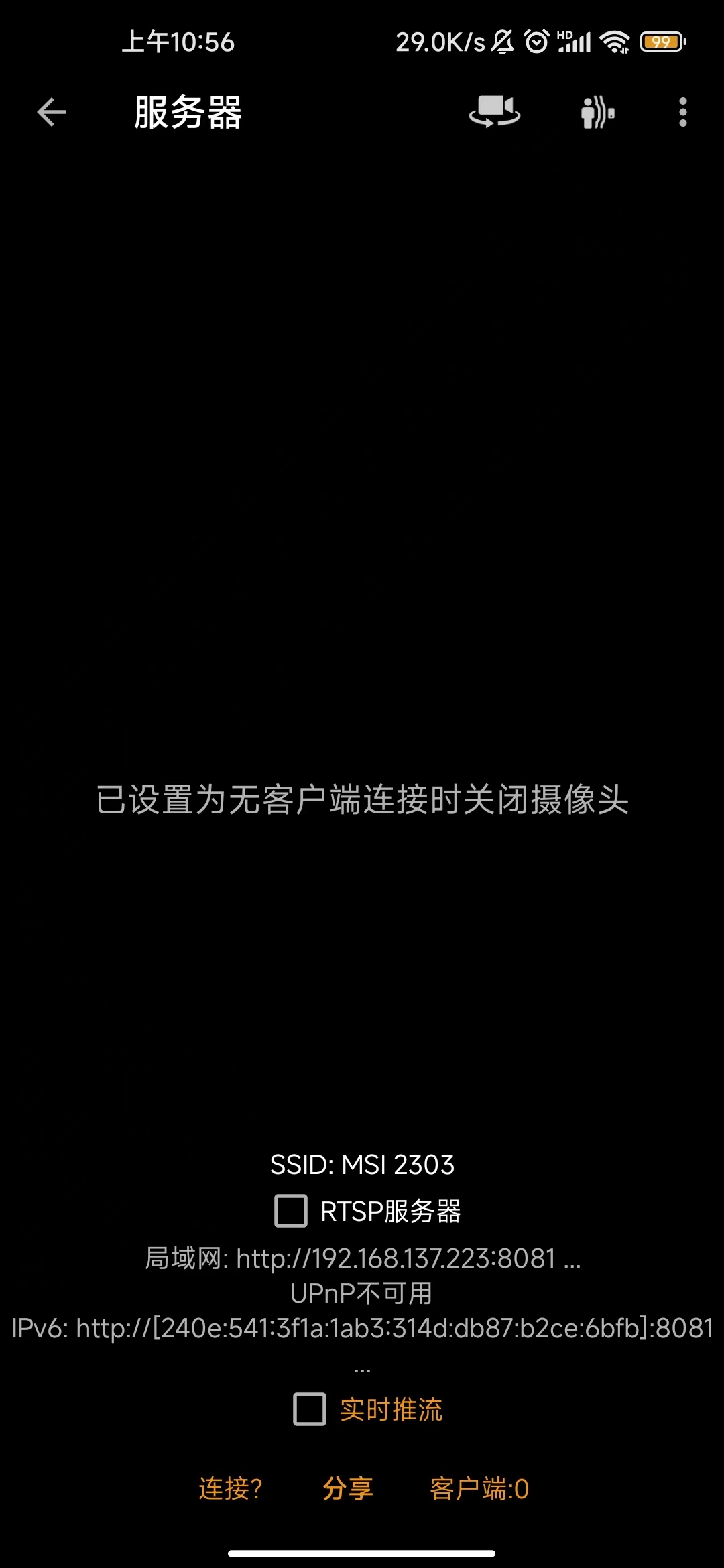

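I connect to the camera through an IP-camera app on my phone. The phone and PC need to be on the same LAN; if that doesn't work, connect the PC to the phone's hotspot.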
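Before handing the URL to OpenCV, it can save time to check that the phone is reachable from the PC at all. A std-only sketch: it extracts host:port from an http URL like the one used in the code (http://admin:admin@192.168.254.36:8081/) and tries a TCP connection with a 2-second timeout. host_port is a hypothetical helper that handles only the simple user:pass@host:port form and assumes port 80 when none is given.

```rust
use std::net::{TcpStream, ToSocketAddrs};
use std::time::Duration;

/// Extracts "host:port" from an http URL such as http://user:pass@host:port/path.
fn host_port(url: &str) -> Option<String> {
    let rest = url.strip_prefix("http://")?;
    let authority = rest.split('/').next()?;
    // Drop optional credentials before '@'
    let host = authority.rsplit('@').next()?;
    if host.is_empty() {
        return None;
    }
    Some(if host.contains(':') { host.to_string() } else { format!("{host}:80") })
}

fn main() {
    let url = "http://admin:admin@192.168.254.36:8081/";
    let Some(addr) = host_port(url) else {
        println!("unsupported URL: {url}");
        return;
    };
    // Resolve the address and try to connect with a timeout
    let reachable = addr
        .to_socket_addrs()
        .ok()
        .and_then(|mut it| it.next())
        .map(|sock| TcpStream::connect_timeout(&sock, Duration::from_secs(2)).is_ok())
        .unwrap_or(false);
    println!("{addr} reachable: {reachable}");
}
```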

Create a project and add the opencv crate: cargo add opencv

Import the modules used:

```rust
use opencv::{
    core::{self, Mat, Point, Rect, Size, Vector, CV_32F, CV_8UC3},
    dnn::{self, DNN_BACKEND_CUDA, DNN_TARGET_CUDA},
    highgui::{self, imshow},
    prelude::*,
    videoio::{self, VideoCapture},
};

const SOURCE_WINDOW: &str = "Video Stream";

#[derive(Debug)]
pub struct BoxDetection {
    pub xmin: i32,  // bounding box left-top x
    pub ymin: i32,  // bounding box left-top y
    pub xmax: i32,  // bounding box right-bottom x
    pub ymax: i32,  // bounding box right-bottom y
    pub class: i32, // class index
    pub conf: f32,  // confidence score
}

#[derive(Debug)]
pub struct Detections {
    pub detections: Vec<BoxDetection>,
}
```

```rust
fn main() -> opencv::Result<()> {
    // Load the YOLOv8 model exported to ONNX
    let mut net = dnn::read_net_from_onnx("mx/yolov8s.onnx")?;

    // Use the CUDA backend (needs OpenCV built with OPENCV_DNN_CUDA)
    net.set_preferable_backend(DNN_BACKEND_CUDA)?;
    net.set_preferable_target(DNN_TARGET_CUDA)?;

    // Create the display window
    highgui::named_window(SOURCE_WINDOW, highgui::WindowFlags::WINDOW_FREERATIO as i32)?;

    // Change this to the address of your phone's IP camera
    let url = "http://admin:admin@192.168.254.36:8081/";

    // Create the capture and open the network stream via FFmpeg
    let mut vc = VideoCapture::default()?;
    if !vc.open_file(url, videoio::CAP_FFMPEG)? {
        println!("Error: unable to open {url}");
        return Ok(());
    }

    // Class names, indexed by the model's class id
    let classes_labels: Vec<&str> = vec!["person", "bicycle", "car", "motorbike", "aeroplane", "bus", "train", "truck", "boat", "traffic light", "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow", "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee", "skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard", "tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple", "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "sofa", "pottedplant", "bed", "diningtable", "toilet", "tvmonitor", "laptop", "mouse", "remote", "keyboard", "cell phone", "microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors", "teddy bear", "hair drier", "toothbrush"];

    let mut frame = Mat::default();
    loop {
        // Read exactly one frame per iteration
        if !vc.read(&mut frame)? {
            println!("Error: Unable to read mat.");
            break;
        }

        // Pad the frame on the right/bottom into a black side x side square
        let (h, w) = (frame.rows(), frame.cols());
        let side = w.max(h);
        let mut image = Mat::default();
        core::copy_make_border(&frame, &mut image, 0, side - h, 0, side - w, core::BORDER_CONSTANT, core::Scalar::default())?;

        // Scale to [0, 1], resize to 640x640, swap BGR->RGB, no crop, float32
        let blob = dnn::blob_from_image(&image, 1.0 / 255.0, Size::new(640, 640), core::Scalar::default(), true, false, CV_32F)?;
        net.set_input(&blob, "", 1.0, core::Scalar::default())?;

        // Run inference
        let mut output_blobs: Vector<Mat> = Vector::default();
        let names = net.get_unconnected_out_layers_names()?;
        net.forward(&mut output_blobs, &names)?;

        // Output shape [1, 84, 8400]: 4 box values + 80 class scores per candidate
        let dets = output_blobs.get(0)?;
        let rows = dets.mat_size().get(2).unwrap(); // 8400 candidates
        let cols = dets.mat_size().get(1).unwrap(); // 84 values each

        // Boxes are in 640x640 space; map them back to the side x side image
        let scale = side as f32 / 640.0;
        let mut boxes: Vector<Rect> = Vector::default();
        let mut scores: Vector<f32> = Vector::default();
        let mut class_index_list: Vec<i32> = Vec::new();
        for row in 0..rows {
            // Best class score for this candidate (values 4.. are class scores)
            let mut max_score = 0f32;
            let mut max_index = 0;
            for col in 4..cols {
                let value = *dets.at_3d::<f32>(0, col, row)?;
                if value > max_score {
                    max_score = value;
                    max_index = col - 4;
                }
            }
            if max_score > 0.25 {
                let cx = *dets.at_3d::<f32>(0, 0, row)?;
                let cy = *dets.at_3d::<f32>(0, 1, row)?;
                let bw = *dets.at_3d::<f32>(0, 2, row)?;
                let bh = *dets.at_3d::<f32>(0, 3, row)?;
                boxes.push(Rect::new(
                    ((cx - bw / 2.0) * scale).round() as i32,
                    ((cy - bh / 2.0) * scale).round() as i32,
                    (bw * scale).round() as i32,
                    (bh * scale).round() as i32,
                ));
                scores.push(max_score);
                class_index_list.push(max_index);
            }
        }

        // Non-maximum suppression fills `indices` (score threshold 0.5, IoU threshold 0.5)
        let mut indices: Vector<i32> = Vector::default();
        dnn::nms_boxes(&boxes, &scores, 0.5, 0.5, &mut indices, 1.0, 0)?;

        let mut final_boxes: Vec<BoxDetection> = Vec::new();
        for i in &indices {
            let i = i as usize;
            let rect = boxes.get(i)?;
            final_boxes.push(BoxDetection {
                xmin: rect.x,
                ymin: rect.y,
                xmax: rect.x + rect.width,
                ymax: rect.y + rect.height,
                class: class_index_list[i],
                conf: scores.get(i)?,
            });
        }

        // Draw boxes, with the label just above each box's top-left corner
        let box_color = core::Scalar::new(0.0, 255.0, 0.0, 0.0);
        for bbox in &final_boxes {
            let rect = Rect::new(bbox.xmin, bbox.ymin, bbox.xmax - bbox.xmin, bbox.ymax - bbox.ymin);
            let label = classes_labels.get(bbox.class as usize).copied().unwrap_or("Unknown");
            opencv::imgproc::rectangle(&mut image, rect, box_color, 2, opencv::imgproc::LINE_8, 0)?;
            let text = format!("{label} {:.2}", bbox.conf);
            opencv::imgproc::put_text_def(&mut image, &text, Point::new(bbox.xmin, (bbox.ymin - 5).max(0)), opencv::imgproc::FONT_HERSHEY_PLAIN, 1.0, box_color)?;
        }

        // Show the frame; a key press exits
        imshow(SOURCE_WINDOW, &image)?;
        if highgui::wait_key(10)? > 0 {
            break;
        }
    }
    Ok(())
}
```
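The decoding step in the loop can be checked without a camera, a GPU or OpenCV. Below is a std-only sketch of the same logic, assuming the output tensor has been copied into a flat row-major f32 slice of shape [4 + classes][candidates] (cx, cy, w, h rows followed by one score row per class), and that the frame was padded into a side x side square before being resized to 640x640, so coordinates scale by side / 640. decode and Candidate are hypothetical names; suppression of overlapping boxes is left to dnn::nms_boxes.

```rust
/// One candidate box in padded-image pixels: (x, y, w, h), score, class.
#[derive(Debug, PartialEq)]
struct Candidate {
    rect: (i32, i32, i32, i32),
    score: f32,
    class: usize,
}

/// Decodes a YOLOv8-style output laid out as [4 + num_classes][num_anchors].
fn decode(data: &[f32], num_anchors: usize, side: i32, conf: f32) -> Vec<Candidate> {
    let num_rows = data.len() / num_anchors; // 84 for an 80-class model
    let scale = side as f32 / 640.0; // network input is 640x640
    let at = |r: usize, a: usize| data[r * num_anchors + a];
    let mut out = Vec::new();
    for a in 0..num_anchors {
        // Best class score among rows 4..num_rows
        let (class, score) = (4..num_rows)
            .map(|r| (r - 4, at(r, a)))
            .fold((0, f32::MIN), |best, cur| if cur.1 > best.1 { cur } else { best });
        if score > conf {
            let (cx, cy, w, h) = (at(0, a), at(1, a), at(2, a), at(3, a));
            out.push(Candidate {
                rect: (
                    ((cx - w / 2.0) * scale).round() as i32,
                    ((cy - h / 2.0) * scale).round() as i32,
                    (w * scale).round() as i32,
                    (h * scale).round() as i32,
                ),
                score,
                class,
            });
        }
    }
    out
}

fn main() {
    // Tiny fake output: 2 candidates, 2 classes (rows: cx, cy, w, h, class 0, class 1)
    let data: [f32; 12] = [
        320.0, 100.0, // cx
        320.0, 100.0, // cy
        64.0, 10.0, // w
        32.0, 10.0, // h
        0.1, 0.05, // class 0 scores
        0.8, 0.1, // class 1 scores
    ];
    // A 1280x1280 padded frame gives scale = 2.0
    for c in decode(&data, 2, 1280, 0.25) {
        println!("{c:?}");
    }
}
```

Here only the first candidate passes the 0.25 threshold (class 1, score 0.8), and its 640-space box is doubled to (576, 608, 128, 64).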

Inference is still slow, about 300 ms per frame, and I'm trying to rewrite it with multiple threads. If you have questions, email anmingle@163.com.
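For the multithreaded version, one common pattern (not necessarily the one the final version will use) is to read frames on a separate thread and have the inference loop always take the newest frame, dropping frames that queued up while the previous inference ran. This keeps the display from drifting further behind the camera, although it does not make a single inference faster. Two other things are worth checking first: if OpenCV was built without OPENCV_DNN_CUDA, the CUDA backend request may fall back to CPU, which alone could explain timings of this order; and the first forward call includes one-time initialization, so it is best excluded when timing. The sketch below uses only the standard library, with u64 frame ids standing in for Mat and sleeps standing in for vc.read and net.forward; latest is a hypothetical helper name.

```rust
use std::sync::mpsc::{self, Receiver};
use std::thread;
use std::time::Duration;

/// Blocks for at least one item, then drains the channel and returns the newest one.
/// Returns None once the sender is gone and nothing is left.
fn latest<T>(rx: &Receiver<T>) -> Option<T> {
    let mut newest = rx.recv().ok()?;
    while let Ok(next) = rx.try_recv() {
        newest = next; // older frames are dropped here
    }
    Some(newest)
}

fn main() {
    let (tx, rx) = mpsc::channel::<u64>();

    // Capture thread: stands in for a loop around vc.read(&mut frame)
    let producer = thread::spawn(move || {
        for id in 0..30u64 {
            if tx.send(id).is_err() {
                break;
            }
            thread::sleep(Duration::from_millis(10));
        }
    });

    // Inference loop: stands in for blob_from_image + net.forward + drawing
    while let Some(frame_id) = latest(&rx) {
        println!("processing frame {frame_id}");
        thread::sleep(Duration::from_millis(50));
    }
    producer.join().unwrap();
}
```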