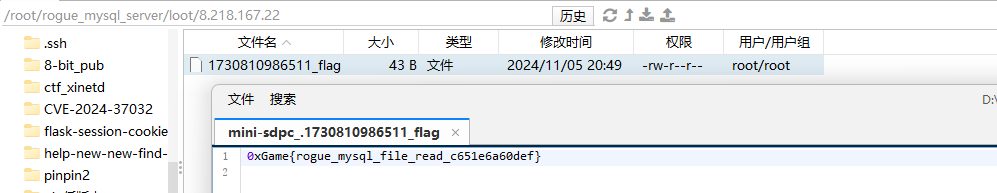

Building a streampark-2.1.5 installation package for DataSophon

Download and extract the StreamPark 2.1.5 package

Download from the official StreamPark site

wget -O /opt/datasophon/DDP/packages/apache-streampark_2.12-2.1.5-incubating-bin.tar.gz "https://www.apache.org/dyn/closer.lua/incubator/streampark/2.1.5/apache-streampark_2.12-2.1.5-incubating-bin.tar.gz?action=download"

cd /opt/datasophon/DDP/packages/

tar -xzvf apache-streampark_2.12-2.1.5-incubating-bin.tar.gz
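Before renaming anything, it can help to confirm which top-level directory the archive actually unpacks to. The following is a minimal sketch; the function name top_level_dir is hypothetical and not part of StreamPark or DataSophon, and it assumes the archive entries do not start with "./".

```shell
# Hypothetical helper: print the top-level directory stored in a .tar.gz archive.
top_level_dir() {
  local archive="$1"
  # List all entries, keep only the first path component, and de-duplicate.
  tar -tzf "$archive" | cut -d/ -f1 | sort -u | head -n 1
}
```

For example, top_level_dir /opt/datasophon/DDP/packages/apache-streampark_2.12-2.1.5-incubating-bin.tar.gz should print the directory name that the next step renames.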

Rename the package directory

The name must match decompressPackageName in service_ddl.json.

mv apache-streampark_2.12-2.1.5-incubating-bin streampark-2.1.5
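The consistency requirement above can be checked mechanically. This is a sketch only: decompress_name and check_package_dir are hypothetical helper names, and the sed expression assumes the key and its value sit on the same line of service_ddl.json (no jq is assumed to be installed).

```shell
# Hypothetical helper: print the value of "decompressPackageName" from a JSON file.
decompress_name() {
  sed -n 's/.*"decompressPackageName"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Hypothetical helper: succeed only if a directory with that name exists under packages_dir.
check_package_dir() {
  local json="$1" packages_dir="$2" name
  name="$(decompress_name "$json")"
  [ -n "$name" ] && [ -d "$packages_dir/$name" ]
}
```

A call such as check_package_dir /path/to/service_ddl.json /opt/datasophon/DDP/packages returns a non-zero status when the directory has not been renamed yet.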

Modify conf/config.yaml [optional]

- Modify the connection settings

Go into the conf directory and edit conf/config.yaml. Under the spring entry, find profiles.active and set the database to MySQL, as follows:

vi /opt/datasophon/DDP/packages/streampark-2.1.5/conf/config.yaml

spring:
  profiles.active: mysql #[h2,pgsql,mysql]
  application.name: StreamPark
  devtools.restart.enabled: false
  mvc.pathmatch.matching-strategy: ant_path_matcher
  servlet:
    multipart:
      enabled: true
      max-file-size: 500MB
      max-request-size: 500MB
  aop.proxy-target-class: true
  messages.encoding: utf-8
  jackson:
    date-format: yyyy-MM-dd HH:mm:ss
    time-zone: GMT+8
  main:
    allow-circular-references: true
    banner-mode: off

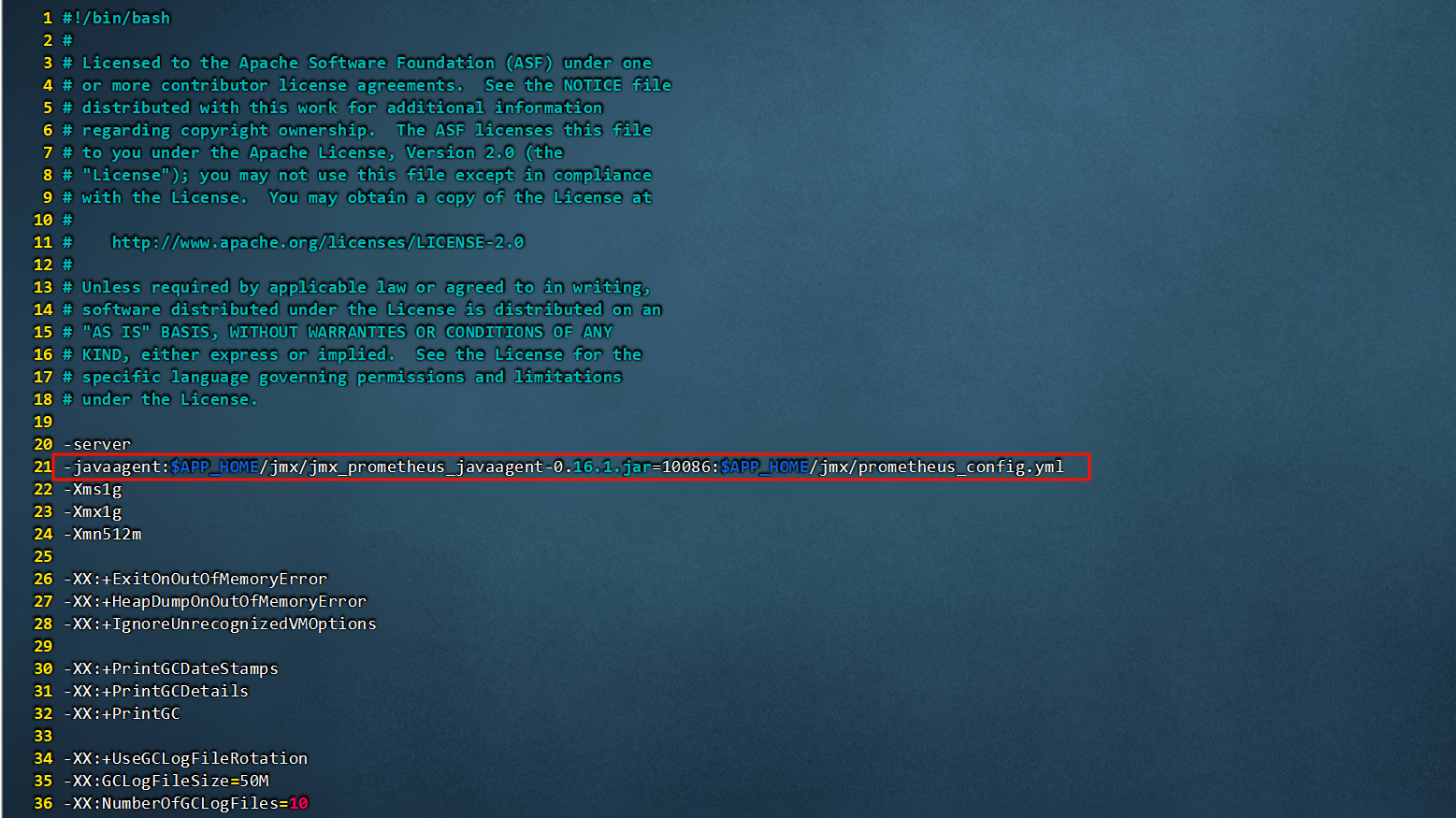
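If you prefer not to edit the file by hand, the profile switch can be scripted. A minimal sketch, assuming GNU sed (for -i and -E) and that profiles.active appears once on its own line; set_active_profile is a hypothetical name.

```shell
# Hypothetical helper: set the value of profiles.active, keeping indentation and any trailing comment.
set_active_profile() {
  local file="$1" profile="$2"
  # Only the value token after "profiles.active:" is replaced.
  sed -i -E "s/^([[:space:]]*profiles\.active:[[:space:]]*)[A-Za-z0-9]+/\1${profile}/" "$file"
}
```

Usage would look like set_active_profile /opt/datasophon/DDP/packages/streampark-2.1.5/conf/config.yaml mysql.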

Modify streampark-2.1.5/bin/jvm_opts.sh

Add the prometheus_javaagent configuration (around line 21), as follows:

vi /opt/datasophon/DDP/packages/streampark-2.1.5/bin/jvm_opts.sh

# Add the following line

-javaagent:$APP_HOME/jmx/jmx_prometheus_javaagent-0.16.1.jar=10086:$APP_HOME/jmx/prometheus_config.yml

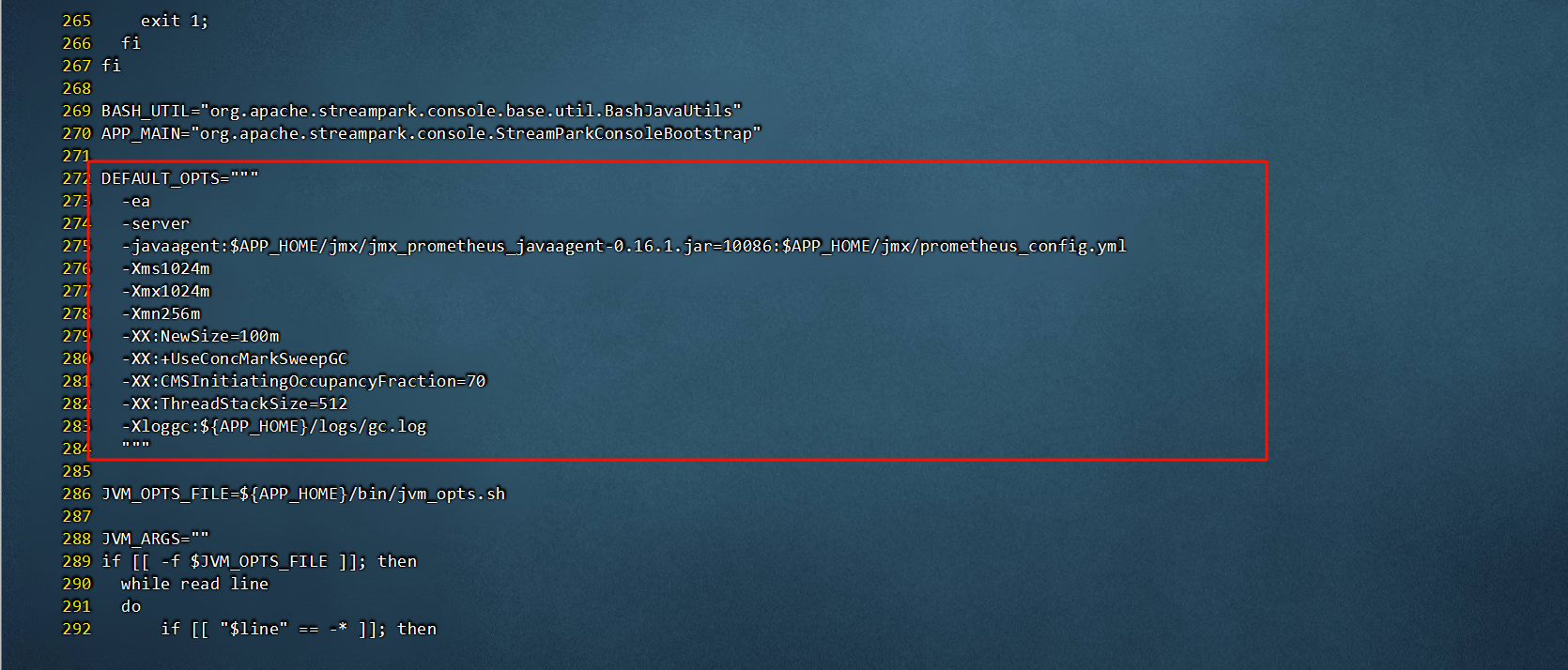
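The same edit can be applied non-interactively. The sketch below appends the javaagent line only when it is not already present, so running it twice is harmless; add_javaagent is a hypothetical name, and the function assumes the file already ends with a newline.

```shell
# Hypothetical helper: append the Prometheus javaagent option to a jvm_opts.sh file once.
add_javaagent() {
  local file="$1"
  # Single quotes keep $APP_HOME literal, exactly as it should appear in jvm_opts.sh.
  local line='-javaagent:$APP_HOME/jmx/jmx_prometheus_javaagent-0.16.1.jar=10086:$APP_HOME/jmx/prometheus_config.yml'
  # -x: whole-line match, -F: literal string, --: the pattern itself starts with a dash.
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

This appends at the end of the file rather than at line 21; the position is an assumption that should not matter as long as each option stays on its own line.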

For older versions such as StreamPark 2.1.1, the prometheus_javaagent option has to be added directly to bin/streampark.sh. In that case, add the content below.

- In streampark.sh, add the prometheus_javaagent configuration to DEFAULT_OPTS (around line 271), as follows:

vi /opt/datasophon/DDP/packages/streampark-2.1.5/bin/streampark.sh

DEFAULT_OPTS="""
-ea
-server
-javaagent:$APP_HOME/jmx/jmx_prometheus_javaagent-0.16.1.jar=10086:$APP_HOME/jmx/prometheus_config.yml
-Xms1024m
-Xmx1024m
-Xmn256m
-XX:NewSize=100m
-XX:+UseConcMarkSweepGC
-XX:CMSInitiatingOccupancyFraction=70
-XX:ThreadStackSize=512
-Xloggc:${APP_HOME}/logs/gc.log
"""

Note: why does jvm_opts.sh need only this one added line and none of the other options? According to feedback from an experienced member of the community chat group, the additional parameters were never actually used.

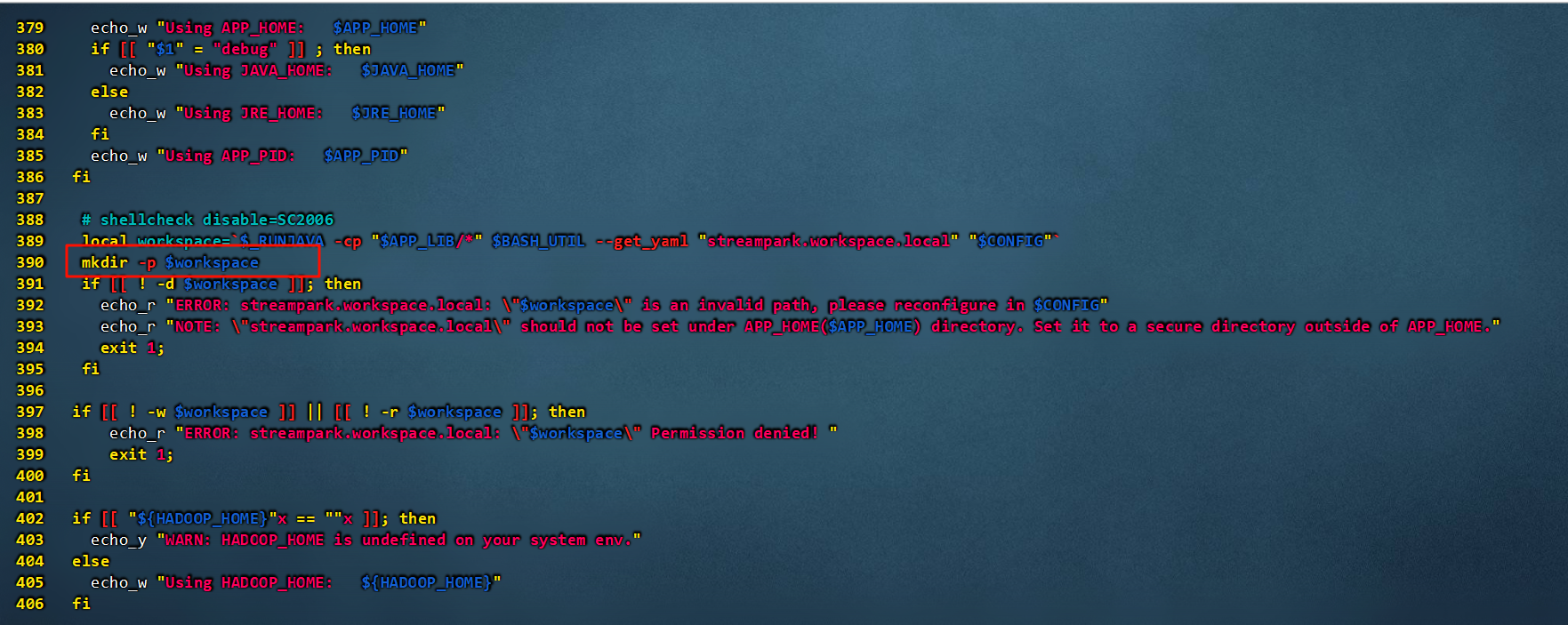
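For context, the streampark.sh listed later in this guide builds its JVM arguments by reading jvm_opts.sh line by line and keeping only lines that begin with a dash. The sketch below mirrors that loop in isolation; collect_jvm_args is a hypothetical name used only for illustration.

```shell
# Illustrative re-implementation of the jvm_opts.sh parsing loop; not the upstream function.
collect_jvm_args() {
  local file="$1" args="" line
  while read -r line; do
    case "$line" in
      # Only option lines (starting with "-") are kept; comments and other text are skipped.
      -*) args="${args} ${line}" ;;
    esac
  done < "$file"
  # Strip the single leading space before printing.
  printf '%s\n' "${args# }"
}
```

Because every option line is picked up this way, adding the single -javaagent line to jvm_opts.sh is enough for it to reach the java command line.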

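Modify streampark-2.1.5/bin/streampark.sh

vi /opt/datasophon/DDP/packages/streampark-2.1.5/bin/streampark.sh

Add prometheus_javaagent [skipped]: newer versions have moved the JVM configuration out into jvm_opts.sh

StreamPark 2.1.5 keeps its JVM configuration in jvm_opts.sh, so the jvm_opts.sh change described above (step 4) is all that is needed and this sub-step can be skipped.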

Modify the start function

- In the start function, on the line right after local workspace=... (around line 390), add mkdir -p $workspace, as follows:

local workspace=`$_RUNJAVA -cp "$APP_LIB/*" $BASH_UTIL --get_yaml "streampark.workspace.local" "$CONFIG"`
mkdir -p $workspace
if [[ ! -d $workspace ]]; then
  echo_r "ERROR: streampark.workspace.local: \"$workspace\" is an invalid path, please reconfigure in $CONFIG"
  echo_r "NOTE: \"streampark.workspace.local\" should not be set under APP_HOME($APP_HOME) directory. Set it to a secure directory outside of APP_HOME."
  exit 1;
fi
if [[ ! -w $workspace ]] || [[ ! -r $workspace ]]; then
  echo_r "ERROR: streampark.workspace.local: \"$workspace\" Permission denied! "
  exit 1;
fi
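The effect of the added mkdir -p is that a missing workspace directory is created on first start instead of aborting the start. A standalone sketch of the same check order, with ensure_workspace as a hypothetical name:

```shell
# Illustrative only: create the workspace if needed, then apply the same checks as start().
ensure_workspace() {
  local workspace="$1"
  # mkdir -p is a no-op when the directory already exists.
  mkdir -p "$workspace" 2>/dev/null
  if [ ! -d "$workspace" ]; then
    echo "ERROR: \"$workspace\" is an invalid path" >&2
    return 1
  fi
  if [ ! -w "$workspace" ] || [ ! -r "$workspace" ]; then
    echo "ERROR: \"$workspace\" Permission denied" >&2
    return 1
  fi
}
```

The directory check still fails for paths that cannot be created, for example a path nested under a regular file.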

Modify the status function

- In the status function (around line 564), add exit 1, as follows:

status() {
  # shellcheck disable=SC2155
  # shellcheck disable=SC2006
  local PID=$(get_pid)
  if [[ $PID -eq 0 ]]; then
    echo_r "StreamPark is not running"
    exit 1
  else
    echo_g "StreamPark is running pid is: $PID"
  fi
}
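The point of the added exit 1 is that the script's exit status now distinguishes "running" from "not running". Presumably DataSophon's statusRunner relies on that exit status; this is an assumption, not something stated in this guide. A minimal sketch of the pattern, using a PID file and the hypothetical name status_sketch:

```shell
# Illustrative only: report status through the return code, based on a PID file.
status_sketch() {
  local pid_file="$1" pid
  pid="$(cat "$pid_file" 2>/dev/null)"
  # kill -0 sends no signal; it only tests whether the process exists.
  if [ -n "$pid" ] && kill -0 "$pid" 2>/dev/null; then
    echo "running, pid: $pid"
    return 0
  fi
  echo "not running"
  return 1
}
```

A caller can then branch on the result, for example: status_sketch /path/to/.pid || echo "needs restart".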

-- The complete streampark.sh file, for reference:

#!/bin/bash

#

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing,

# software distributed under the License is distributed on an

# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

# KIND, either express or implied. See the License for the

# specific language governing permissions and limitations

# under the License.

#

# -----------------------------------------------------------------------------

# Control Script for the StreamPark Server

#

# Environment Variable Prerequisites

#

# APP_HOME May point at your StreamPark "build" directory.

#

# APP_BASE (Optional) Base directory for resolving dynamic portions

# of a StreamPark installation. If not present, resolves to

# the same directory that APP_HOME points to.

#

# APP_CONF (Optional) config path

#

# APP_PID (Optional) Path of the file which should contains the pid

# of the StreamPark startup java process, when start (fork) is

# used

# -----------------------------------------------------------------------------

# Bugzilla 37848: When no TTY is available, don't output to console
have_tty=0
# shellcheck disable=SC2006
if [[ "`tty`" != "not a tty" ]]; then
  have_tty=1
fi

# Bugzilla 37848: When no TTY is available, don't output to console
have_tty=0
# shellcheck disable=SC2006
if [[ "`tty`" != "not a tty" ]]; then
  have_tty=1
fi

# Only use colors if connected to a terminal
if [[ ${have_tty} -eq 1 ]]; then
  PRIMARY=$(printf '\033[38;5;082m')
  RED=$(printf '\033[31m')
  GREEN=$(printf '\033[32m')
  YELLOW=$(printf '\033[33m')
  BLUE=$(printf '\033[34m')
  BOLD=$(printf '\033[1m')
  RESET=$(printf '\033[0m')
else
  PRIMARY=""
  RED=""
  GREEN=""
  YELLOW=""
  BLUE=""
  BOLD=""
  RESET=""
fi

echo_r () {
  # Color red: Error, Failed
  [[ $# -ne 1 ]] && return 1
  # shellcheck disable=SC2059
  printf "[%sStreamPark%s] %s$1%s\n" $BLUE $RESET $RED $RESET
}

echo_g () {
  # Color green: Success
  [[ $# -ne 1 ]] && return 1
  # shellcheck disable=SC2059
  printf "[%sStreamPark%s] %s$1%s\n" $BLUE $RESET $GREEN $RESET
}

echo_y () {
  # Color yellow: Warning
  [[ $# -ne 1 ]] && return 1
  # shellcheck disable=SC2059
  printf "[%sStreamPark%s] %s$1%s\n" $BLUE $RESET $YELLOW $RESET
}

echo_w () {
  # Color yellow: White
  [[ $# -ne 1 ]] && return 1
  # shellcheck disable=SC2059
  printf "[%sStreamPark%s] %s$1%s\n" $BLUE $RESET $WHITE $RESET
}

# OS specific support. $var _must_ be set to either true or false.

cygwin=false

os400=false

# shellcheck disable=SC2006

case "`uname`" in

CYGWIN*) cygwin=true;;

OS400*) os400=true;;

esac

# resolve links - $0 may be a softlink
PRG="$0"

while [[ -h "$PRG" ]]; do
  # shellcheck disable=SC2006
  ls=`ls -ld "$PRG"`
  # shellcheck disable=SC2006
  link=`expr "$ls" : '.*-> \(.*\)$'`
  if expr "$link" : '/.*' > /dev/null; then
    PRG="$link"
  else
    # shellcheck disable=SC2006
    PRG=`dirname "$PRG"`/"$link"
  fi
done

# Get standard environment variables
# shellcheck disable=SC2006
PRG_DIR=`dirname "$PRG"`

# shellcheck disable=SC2006

# shellcheck disable=SC2164

APP_HOME=`cd "$PRG_DIR/.." >/dev/null; pwd`

APP_BASE="$APP_HOME"

APP_CONF="$APP_BASE"/conf

APP_LIB="$APP_BASE"/lib

APP_LOG="$APP_BASE"/logs

APP_PID="$APP_BASE"/.pid

APP_OUT="$APP_LOG"/streampark.out

# shellcheck disable=SC2034

APP_TMPDIR="$APP_BASE"/temp

# Ensure that any user defined CLASSPATH variables are not used on startup,
# but allow them to be specified in setenv.sh, in rare case when it is needed.
CLASSPATH=

if [[ -r "$APP_BASE/bin/setenv.sh" ]]; then
  # shellcheck disable=SC1090
  . "$APP_BASE/bin/setenv.sh"
elif [[ -r "$APP_HOME/bin/setenv.sh" ]]; then
  # shellcheck disable=SC1090
  . "$APP_HOME/bin/setenv.sh"
fi

# For Cygwin, ensure paths are in UNIX format before anything is touched
if ${cygwin}; then
  # shellcheck disable=SC2006
  [[ -n "$JAVA_HOME" ]] && JAVA_HOME=`cygpath --unix "$JAVA_HOME"`
  # shellcheck disable=SC2006
  [[ -n "$JRE_HOME" ]] && JRE_HOME=`cygpath --unix "$JRE_HOME"`
  # shellcheck disable=SC2006
  [[ -n "$APP_HOME" ]] && APP_HOME=`cygpath --unix "$APP_HOME"`
  # shellcheck disable=SC2006
  [[ -n "$APP_BASE" ]] && APP_BASE=`cygpath --unix "$APP_BASE"`
  # shellcheck disable=SC2006
  [[ -n "$CLASSPATH" ]] && CLASSPATH=`cygpath --path --unix "$CLASSPATH"`
fi

# Ensure that neither APP_HOME nor APP_BASE contains a colon
# as this is used as the separator in the classpath and Java provides no
# mechanism for escaping if the same character appears in the path.
case ${APP_HOME} in
  *:*) echo "Using APP_HOME: $APP_HOME";
       echo "Unable to start as APP_HOME contains a colon (:) character";
       exit 1;
esac
case ${APP_BASE} in
  *:*) echo "Using APP_BASE: $APP_BASE";
       echo "Unable to start as APP_BASE contains a colon (:) character";
       exit 1;
esac

# For OS400
if ${os400}; then
  # Set job priority to standard for interactive (interactive - 6) by using
  # the interactive priority - 6, the helper threads that respond to requests
  # will be running at the same priority as interactive jobs.
  COMMAND='chgjob job('${JOBNAME}') runpty(6)'
  system "${COMMAND}"
  # Enable multi threading
  export QIBM_MULTI_THREADED=Y
fi

# Get standard Java environment variables
if ${os400}; then
  # -r will Only work on the os400 if the files are:
  # 1. owned by the user
  # 2. owned by the PRIMARY group of the user
  # this will not work if the user belongs in secondary groups
  # shellcheck disable=SC1090
  . "$APP_HOME"/bin/setclasspath.sh
else
  if [[ -r "$APP_HOME"/bin/setclasspath.sh ]]; then
    # shellcheck disable=SC1090
    . "$APP_HOME"/bin/setclasspath.sh
  else
    echo "Cannot find $APP_HOME/bin/setclasspath.sh"
    echo "This file is needed to run this program"
    exit 1
  fi
fi

# Add on extra jar files to CLASSPATH
# shellcheck disable=SC2236
if [[ ! -z "$CLASSPATH" ]]; then
  CLASSPATH="$CLASSPATH":
fi
CLASSPATH="$CLASSPATH"

# For Cygwin, switch paths to Windows format before running java
if ${cygwin}; then
  # shellcheck disable=SC2006
  JAVA_HOME=`cygpath --absolute --windows "$JAVA_HOME"`
  # shellcheck disable=SC2006
  JRE_HOME=`cygpath --absolute --windows "$JRE_HOME"`
  # shellcheck disable=SC2006
  APP_HOME=`cygpath --absolute --windows "$APP_HOME"`
  # shellcheck disable=SC2006
  APP_BASE=`cygpath --absolute --windows "$APP_BASE"`
  # shellcheck disable=SC2006
  CLASSPATH=`cygpath --path --windows "$CLASSPATH"`
fi

# get jdk version, return version as an Integer.

JDK_VERSION=$("$_RUNJAVA" -version 2>&1 | grep -i 'version' | head -n 1 | cut -d '"' -f 2)

MAJOR_VER=$(echo "$JDK_VERSION" 2>&1 | cut -d '.' -f 1)

[[ $MAJOR_VER -eq 1 ]] && MAJOR_VER=$(echo "$JDK_VERSION" 2>&1 | cut -d '.' -f 2)

MIN_VERSION=8

if [[ $MAJOR_VER -lt $MIN_VERSION ]]; then
  echo "JDK Version: \"${JDK_VERSION}\", the version cannot be lower than 1.8"
  exit 1
fi

if [[ -z "$USE_NOHUP" ]]; then
  if $hpux; then
    USE_NOHUP="true"
  else
    USE_NOHUP="false"
  fi
fi
unset NOHUP
if [[ "$USE_NOHUP" = "true" ]]; then
  NOHUP="nohup"
fi

CONFIG="${APP_CONF}/application.yml"
# shellcheck disable=SC2006
if [[ -f "$CONFIG" ]] ; then
  echo_y """[WARN] in the \"conf\" directory, found the \"application.yml\" file. The \"application.yml\" file is deprecated.
For compatibility, this application.yml will be used preferentially. The latest configuration file is \"config.yaml\". It is recommended to use \"config.yaml\".
Note: \"application.yml\" will be completely deprecated in version 2.2.0. """
else
  CONFIG="${APP_CONF}/config.yaml"
  if [[ ! -f "$CONFIG" ]] ; then
    echo_r "can not found config.yaml in \"conf\" directory, please check."
    exit 1;
  fi
fi

BASH_UTIL="org.apache.streampark.console.base.util.BashJavaUtils"
APP_MAIN="org.apache.streampark.console.StreamParkConsoleBootstrap"
JVM_OPTS_FILE=${APP_HOME}/bin/jvm_opts.sh

JVM_ARGS=""
if [[ -f $JVM_OPTS_FILE ]]; then
  while read line
  do
    if [[ "$line" == -* ]]; then
      JVM_ARGS="${JVM_ARGS} $line"
    fi
  done < $JVM_OPTS_FILE
fi

JAVA_OPTS=${JAVA_OPTS:-"${JVM_ARGS}"}

JAVA_OPTS="$JAVA_OPTS -XX:HeapDumpPath=${APP_HOME}/logs/dump.hprof"

JAVA_OPTS="$JAVA_OPTS -Xloggc:${APP_HOME}/logs/gc.log"

[[ $MAJOR_VER -gt $MIN_VERSION ]] && JAVA_OPTS="$JAVA_OPTS --add-opens java.base/jdk.internal.loader=ALL-UNNAMED --add-opens jdk.zipfs/jdk.nio.zipfs=ALL-UNNAMED"

[[ $MAJOR_VER -ge 17 ]] && JAVA_OPTS="$JAVA_OPTS -Djava.security.manager=allow"

SERVER_PORT=$($_RUNJAVA -cp "$APP_LIB/*" $BASH_UTIL --get_yaml "server.port" "$CONFIG")

# ----- Execute The Requested Command -----------------------------------------

print_logo() {
  printf '\n'
  printf ' %s _____ __ __ %s\n' $PRIMARY $RESET
  printf ' %s / ___// /_________ ____ _____ ___ ____ ____ ______/ /__ %s\n' $PRIMARY $RESET
  printf ' %s \__ \/ __/ ___/ _ \/ __ `/ __ `__ \/ __ \ __ `/ ___/ //_/ %s\n' $PRIMARY $RESET
  printf ' %s ___/ / /_/ / / __/ /_/ / / / / / / /_/ / /_/ / / / ,< %s\n' $PRIMARY $RESET
  printf ' %s /____/\__/_/ \___/\__,_/_/ /_/ /_/ ____/\__,_/_/ /_/|_| %s\n' $PRIMARY $RESET
  printf ' %s /_/ %s\n\n' $PRIMARY $RESET
  printf ' %s Version: 2.1.5 %s\n' $BLUE $RESET
  printf ' %s WebSite: https://streampark.apache.org%s\n' $BLUE $RESET
  printf ' %s GitHub : http://github.com/apache/streampark%s\n\n' $BLUE $RESET
  printf ' %s ──────── Apache StreamPark, Make stream processing easier ô~ô!%s\n\n' $PRIMARY $RESET
  if [[ "$1"x == "start"x ]]; then
    printf ' %s http://localhost:%s %s\n\n' $PRIMARY $SERVER_PORT $RESET
  fi
}

# shellcheck disable=SC2120
get_pid() {
  if [[ -f "$APP_PID" ]]; then
    if [[ -s "$APP_PID" ]]; then
      # shellcheck disable=SC2155
      # shellcheck disable=SC2006
      local PID=`cat "$APP_PID"`
      kill -0 $PID >/dev/null 2>&1
      # shellcheck disable=SC2181
      if [[ $? -eq 0 ]]; then
        echo $PID
        exit 0
      fi
    else
      rm -f "$APP_PID" >/dev/null 2>&1
    fi
  fi

  # shellcheck disable=SC2006
  if [[ "${SERVER_PORT}"x == ""x ]]; then
    echo_r "server.port is required, please check $CONFIG"
    exit 1;
  else
    # shellcheck disable=SC2006
    # shellcheck disable=SC2155
    local used=`$_RUNJAVA -cp "$APP_LIB/*" $BASH_UTIL --check_port "$SERVER_PORT"`
    if [[ "${used}"x == "used"x ]]; then
      # shellcheck disable=SC2006
      local PID=`jps -l | grep "$APP_MAIN" | awk '{print $1}'`
      # shellcheck disable=SC2236
      if [[ ! -z $PID ]]; then
        echo "$PID"
      else
        echo 0
      fi
    else
      echo 0
    fi
  fi
}

# shellcheck disable=SC2120

start() {
  # shellcheck disable=SC2006
  local PID=$(get_pid)

  if [[ $PID -gt 0 ]]; then
    # shellcheck disable=SC2006
    echo_r "StreamPark is already running pid: $PID , start aborted!"
    exit 1
  fi

  # Bugzilla 37848: only output this if we have a TTY
  if [[ ${have_tty} -eq 1 ]]; then
    echo_w "Using APP_BASE: $APP_BASE"
    echo_w "Using APP_HOME: $APP_HOME"
    if [[ "$1" = "debug" ]] ; then
      echo_w "Using JAVA_HOME: $JAVA_HOME"
    else
      echo_w "Using JRE_HOME: $JRE_HOME"
    fi
    echo_w "Using APP_PID: $APP_PID"
  fi

  # shellcheck disable=SC2006
  local workspace=`$_RUNJAVA -cp "$APP_LIB/*" $BASH_UTIL --get_yaml "streampark.workspace.local" "$CONFIG"`
  mkdir -p $workspace
  if [[ ! -d $workspace ]]; then
    echo_r "ERROR: streampark.workspace.local: \"$workspace\" is an invalid path, please reconfigure in $CONFIG"
    echo_r "NOTE: \"streampark.workspace.local\" should not be set under APP_HOME($APP_HOME) directory. Set it to a secure directory outside of APP_HOME."
    exit 1;
  fi

  if [[ ! -w $workspace ]] || [[ ! -r $workspace ]]; then
    echo_r "ERROR: streampark.workspace.local: \"$workspace\" Permission denied! "
    exit 1;
  fi

  if [[ "${HADOOP_HOME}"x == ""x ]]; then
    echo_y "WARN: HADOOP_HOME is undefined on your system env."
  else
    echo_w "Using HADOOP_HOME: ${HADOOP_HOME}"
  fi

  #
  # classpath options:
  # 1): java env (lib and jre/lib)
  # 2): StreamPark
  # 3): hadoop conf
  # shellcheck disable=SC2091
  local APP_CLASSPATH=".:${JAVA_HOME}/lib:${JAVA_HOME}/jre/lib"
  # shellcheck disable=SC2206
  # shellcheck disable=SC2010
  local JARS=$(ls "$APP_LIB"/*.jar | grep -v "$APP_LIB/streampark-flink-shims_.*.jar$")
  # shellcheck disable=SC2128
  for jar in $JARS;do
    APP_CLASSPATH=$APP_CLASSPATH:$jar
  done

  if [[ -n "${HADOOP_CONF_DIR}" ]] && [[ -d "${HADOOP_CONF_DIR}" ]]; then
    echo_w "Using HADOOP_CONF_DIR: ${HADOOP_CONF_DIR}"
    APP_CLASSPATH+=":${HADOOP_CONF_DIR}"
  else
    APP_CLASSPATH+=":${HADOOP_HOME}/etc/hadoop"
  fi

  echo_g "JAVA_OPTS: ${JAVA_OPTS}"

  eval $NOHUP $_RUNJAVA $JAVA_OPTS \
    -classpath "$APP_CLASSPATH" \
    -Dapp.home="${APP_HOME}" \
    -Djava.io.tmpdir="$APP_TMPDIR" \
    -Dlogging.config="${APP_CONF}/logback-spring.xml" \
    $APP_MAIN "$@" >> "$APP_OUT" 2>&1 "&"

  local PID=$!
  local IS_NUMBER="^[0-9]+$"

  # Add to pid file if successful start
  if [[ ${PID} =~ ${IS_NUMBER} ]] && kill -0 $PID > /dev/null 2>&1 ; then
    echo $PID > "$APP_PID"
    # shellcheck disable=SC2006
    echo_g "StreamPark start successful. pid: $PID"
  else
    echo_r "StreamPark start failed."
    exit 1
  fi
}

# shellcheck disable=SC2120

start_docker() {
  # Bugzilla 37848: only output this if we have a TTY
  if [[ ${have_tty} -eq 1 ]]; then
    echo_w "Using APP_BASE: $APP_BASE"
    echo_w "Using APP_HOME: $APP_HOME"
    if [[ "$1" = "debug" ]] ; then
      echo_w "Using JAVA_HOME: $JAVA_HOME"
    else
      echo_w "Using JRE_HOME: $JRE_HOME"
    fi
    echo_w "Using APP_PID: $APP_PID"
  fi

  if [[ "${HADOOP_HOME}"x == ""x ]]; then
    echo_y "WARN: HADOOP_HOME is undefined on your system env,please check it."
  else
    echo_w "Using HADOOP_HOME: ${HADOOP_HOME}"
  fi

  # classpath options:
  # 1): java env (lib and jre/lib)
  # 2): StreamPark
  # 3): hadoop conf
  # shellcheck disable=SC2091
  local APP_CLASSPATH=".:${JAVA_HOME}/lib:${JAVA_HOME}/jre/lib"
  # shellcheck disable=SC2206
  # shellcheck disable=SC2010
  local JARS=$(ls "$APP_LIB"/*.jar | grep -v "$APP_LIB/streampark-flink-shims_.*.jar$")
  # shellcheck disable=SC2128
  for jar in $JARS;do
    APP_CLASSPATH=$APP_CLASSPATH:$jar
  done

  if [[ -n "${HADOOP_CONF_DIR}" ]] && [[ -d "${HADOOP_CONF_DIR}" ]]; then
    echo_w "Using HADOOP_CONF_DIR: ${HADOOP_CONF_DIR}"
    APP_CLASSPATH+=":${HADOOP_CONF_DIR}"
  else
    APP_CLASSPATH+=":${HADOOP_HOME}/etc/hadoop"
  fi

  JAVA_OPTS="$JAVA_OPTS -XX:-UseContainerSupport"

  echo_g "JAVA_OPTS: ${JAVA_OPTS}"

  $_RUNJAVA $JAVA_OPTS \
    -classpath "$APP_CLASSPATH" \
    -Dapp.home="${APP_HOME}" \
    -Djava.io.tmpdir="$APP_TMPDIR" \
    -Dlogging.config="${APP_CONF}/logback-spring.xml" \
    $APP_MAIN
}

# shellcheck disable=SC2120

stop() {
  # shellcheck disable=SC2155
  # shellcheck disable=SC2006
  local PID=$(get_pid)

  if [[ $PID -eq 0 ]]; then
    echo_r "StreamPark is not running. stop aborted."
    exit 1
  fi

  shift

  local SLEEP=3

  # shellcheck disable=SC2006
  echo_g "StreamPark stopping with the PID: $PID"

  kill -9 "$PID"

  while [ $SLEEP -ge 0 ]; do
    # shellcheck disable=SC2046
    # shellcheck disable=SC2006
    kill -0 "$PID" >/dev/null 2>&1
    # shellcheck disable=SC2181
    if [[ $? -gt 0 ]]; then
      rm -f "$APP_PID" >/dev/null 2>&1
      if [[ $? != 0 ]]; then
        if [[ -w "$APP_PID" ]]; then
          cat /dev/null > "$APP_PID"
        else
          echo_r "The PID file could not be removed."
        fi
      fi
      echo_g "StreamPark stopped."
      break
    fi

    if [[ $SLEEP -gt 0 ]]; then
      sleep 1
    fi
    # shellcheck disable=SC2006
    # shellcheck disable=SC2003
    SLEEP=`expr $SLEEP - 1 `
  done

  if [[ "$SLEEP" -lt 0 ]]; then
    echo_r "StreamPark has not been killed completely yet. The process might be waiting on some system call or might be UNINTERRUPTIBLE."
  fi
}

status() {
  # shellcheck disable=SC2155
  # shellcheck disable=SC2006
  local PID=$(get_pid)
  if [[ $PID -eq 0 ]]; then
    echo_r "StreamPark is not running"
    exit 1
  else
    echo_g "StreamPark is running pid is: $PID"
  fi
}

restart() {
  # shellcheck disable=SC2119
  stop
  # shellcheck disable=SC2119
  start
}

main() {
  case "$1" in
    "start")
      shift
      start "$@"
      [[ $? -eq 0 ]] && print_logo "start"
      ;;
    "start_docker")
      print_logo
      start_docker
      ;;
    "stop")
      print_logo
      stop
      ;;
    "status")
      print_logo
      status
      ;;
    "restart")
      restart
      [[ $? -eq 0 ]] && print_logo "start"
      ;;
    *)
      echo_r "Unknown command: $1"
      echo_w "Usage: streampark.sh ( commands ... )"
      echo_w "commands:"
      echo_w " start \$conf Start StreamPark with application config."
      echo_w " stop Stop StreamPark, wait up to 3 seconds and then use kill -KILL if still running"
      echo_w " start_docker start in docker or k8s mode"
      echo_w " status StreamPark status"
      echo_w " restart \$conf restart StreamPark with application config."
      exit 0
      ;;
  esac
}

main "$@"

Add the jmx folder

cp -r /opt/datasophon/hadoop-3.3.3/jmx /opt/datasophon/DDP/packages/streampark-2.1.5/

Download the MySQL 8 driver into the lib directory

(StreamPark stopped bundling the MySQL driver as of some release.)

wget -O /opt/datasophon/DDP/packages/streampark-2.1.5/lib/mysql-connector-java-8.0.29.jar https://repo1.maven.org/maven2/mysql/mysql-connector-java/8.0.29/mysql-connector-java-8.0.29.jar

Copy StreamPark's mysql-schema.sql and mysql-data.sql scripts out for later use

cp /opt/datasophon/DDP/packages/streampark-2.1.5/script/schema/mysql-schema.sql /opt/datasophon/DDP/packages/streampark_mysql-schema.sql

cp /opt/datasophon/DDP/packages/streampark-2.1.5/script/data/mysql-data.sql /opt/datasophon/DDP/packages/streampark_mysql-data.sql

Create the archive and generate its md5

tar -czf streampark-2.1.5.tar.gz streampark-2.1.5

md5sum streampark-2.1.5.tar.gz | awk '{print $1}' >streampark-2.1.5.tar.gz.md5

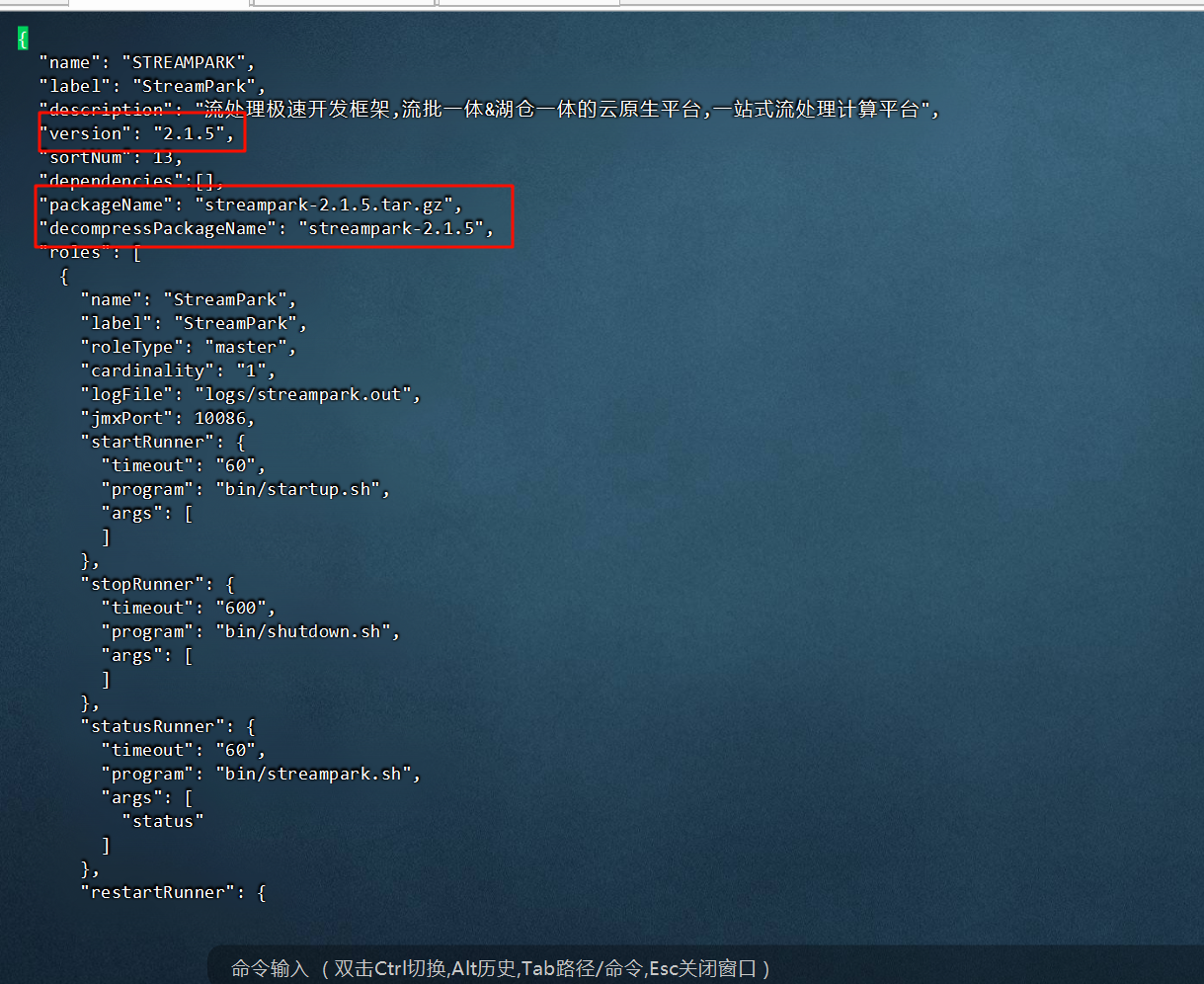
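The .md5 file produced above contains only the hash, so it cannot be fed to md5sum -c directly. A small sketch for re-checking the archive against it follows; verify_md5 is a hypothetical name, and the function assumes the .md5 file sits next to the archive.

```shell
# Hypothetical helper: compare an archive's current MD5 with the hash stored in <archive>.md5.
verify_md5() {
  local archive="$1" expected actual
  expected="$(cat "$archive.md5")"
  actual="$(md5sum "$archive" | awk '{print $1}')"
  [ "$expected" = "$actual" ]
}
```

For example: verify_md5 streampark-2.1.5.tar.gz && echo "md5 matches". Regenerate the .md5 file whenever the archive is rebuilt.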

Modify the service_ddl.json configuration file

# Change the StreamPark version number

vi /opt/datasophon/datasophon-manager-1.2.1/conf/meta/DDP-1.2.1/STREAMPARK/service_ddl.json

{
  "name": "STREAMPARK",
  "label": "StreamPark",
  "description": "A rapid development framework for stream processing; a cloud-native platform unifying stream/batch and lakehouse; a one-stop stream processing platform",
  "version": "2.1.5",
  "sortNum": 13,
  "dependencies": [],
  "packageName": "streampark-2.1.5.tar.gz",
  "decompressPackageName": "streampark-2.1.5",
  "roles": [
    {
      "name": "StreamPark",
      "label": "StreamPark",
      "roleType": "master",
      "cardinality": "1",
      "logFile": "logs/streampark.out",
      "jmxPort": 10086,
      "startRunner": {
        "timeout": "60",
        "program": "bin/startup.sh",
        "args": []
      },
      "stopRunner": {
        "timeout": "600",
        "program": "bin/shutdown.sh",
        "args": []
      },
      "statusRunner": {
        "timeout": "60",
        "program": "bin/streampark.sh",
        "args": ["status"]
      },
      "restartRunner": {
        "timeout": "60",
        "program": "bin/streampark.sh",
        "args": ["restart"]
      },
      "externalLink": {
        "name": "StreamPark Ui",
        "label": "StreamPark Ui",
        "url": "http://${host}:${serverPort}"
      }
    }
  ],
  "configWriter": {
    "generators": [
      {
        "filename": "config.yaml",
        "configFormat": "custom",
        "outputDirectory": "conf",
        "templateName": "streampark.ftl",
        "includeParams": [
          "databaseUrl",
          "username",
          "password",
          "serverPort",
          "hadoopUserName",
          "workspaceLocal",
          "workspaceRemote"
        ]
      }
    ]
  },
  "parameters": [
    {
      "name": "databaseUrl",
      "label": "StreamPark database URL",
      "description": "",
      "configType": "map",
      "required": true,
      "type": "input",
      "value": "",
      "configurableInWizard": true,
      "hidden": false,
      "defaultValue": "jdbc:mysql://${apiHost}:3306/streampark?useSSL=false&useUnicode=true&characterEncoding=UTF-8&useJDBCCompliantTimezoneShift=true&useLegacyDatetimeCode=false&serverTimezone=GMT%2B8"
    },
    {
      "name": "username",
      "label": "StreamPark database username",
      "description": "",
      "configType": "map",
      "required": true,
      "type": "input",
      "value": "",
      "configurableInWizard": true,
      "hidden": false,
      "defaultValue": "root"
    },
    {
      "name": "password",
      "label": "StreamPark database password",
      "description": "",
      "configType": "map",
      "required": true,
      "type": "input",
      "value": "",
      "configurableInWizard": true,
      "hidden": false,
      "defaultValue": "root"
    },
    {
      "name": "serverPort",
      "label": "StreamPark server port",
      "description": "",
      "configType": "map",
      "required": true,
      "type": "input",
      "value": "",
      "configurableInWizard": true,
      "hidden": false,
      "defaultValue": "10000"
    },
    {
      "name": "hadoopUserName",
      "label": "StreamPark Hadoop user",
      "description": "",
      "configType": "map",
      "required": true,
      "type": "input",
      "value": "",
      "configurableInWizard": true,
      "hidden": false,
      "defaultValue": "root"
    },
    {
      "name": "workspaceLocal",
      "label": "StreamPark local workspace directory",
      "description": "Create it yourself; it stores project sources, build output directories, and so on",
      "configType": "map",
      "required": true,
      "type": "input",
      "value": "",
      "configurableInWizard": true,
      "hidden": false,
      "defaultValue": "/data/streampark/workspace"
    },
    {
      "name": "workspaceRemote",
      "label": "StreamPark HDFS workspace directory",
      "description": "HDFS workspace directory",
      "configType": "map",
      "required": true,
      "type": "input",
      "value": "",
      "configurableInWizard": true,
      "hidden": false,
      "defaultValue": "hdfs://${dfs.nameservices}/user/yarn/nodeLabelsstreampark"
    }
  ]
}
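The jmxPort declared in service_ddl.json (10086) is the same port used in the -javaagent line added to jvm_opts.sh, so it is worth checking that the two stay in sync. A rough sketch with hypothetical helper names; it assumes the key/value pair sits on one line of the JSON and that the agent jar path ends in .jar=PORT:

```shell
# Hypothetical helper: print the first jmxPort value found in a service_ddl.json file.
jmx_port_from_json() {
  sed -n 's/.*"jmxPort"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p' "$1" | head -n 1
}

# Hypothetical helper: print the port configured on the -javaagent line of jvm_opts.sh.
jmx_port_from_jvm_opts() {
  sed -n 's/^-javaagent:.*\.jar=\([0-9][0-9]*\):.*/\1/p' "$1" | head -n 1
}

# Succeeds only when both files declare the same, non-empty port.
ports_match() {
  local a b
  a="$(jmx_port_from_json "$1")"
  b="$(jmx_port_from_jvm_opts "$2")"
  [ -n "$a" ] && [ "$a" = "$b" ]
}
```

Usage: ports_match service_ddl.json streampark-2.1.5/bin/jvm_opts.sh || echo "jmx port mismatch".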

Modify the streampark.ftl file on every node

vi /opt/datasophon/datasophon-worker/conf/templates/streampark.ftl

#

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

logging:
  level:
    root: info

server:
  port: ${serverPort}
  session:
    # The user's login session has a validity period. If it exceeds this time, the user will be automatically logout
    # unit: s|m|h|d, s: second, m:minute, h:hour, d: day
    ttl: 2h # unit[s|m|h|d], e.g: 24h, 2d....
  undertow: # see: https://github.com/undertow-io/undertow/blob/master/core/src/main/java/io/undertow/Undertow.java
    buffer-size: 1024
    direct-buffers: true
    threads:
      io: 16
      worker: 256

# system database, default h2, mysql|pgsql|h2
datasource:
  dialect: mysql #h2, mysql, pgsql
  h2-data-dir: ~/streampark/h2-data # if datasource.dialect is h2, you can configure the data dir
  # if datasource.dialect is mysql or pgsql, you need to configure the following connection information
  # mysql/postgresql/h2 connect user
  username: ${username}
  # mysql/postgresql/h2 connect password
  password: ${password}
  # mysql/postgresql connect jdbcURL
  # mysql example: datasource.url: jdbc:mysql://localhost:3306/streampark?useUnicode=true&characterEncoding=UTF-8&useJDBCCompliantTimezoneShift=true&useLegacyDatetimeCode=false&serverTimezone=GMT%2B8
  # postgresql example: jdbc:postgresql://localhost:5432/streampark?stringtype=unspecified
  url: ${databaseUrl}

streampark:
  workspace:
    # Local workspace, storage directory of clone projects and compiled projects,Do not set under $APP_HOME. Set it to a directory outside of $APP_HOME.
    local: ${workspaceLocal}
    # The root hdfs path of the jars, Same as yarn.provided.lib.dirs for flink on yarn-application and Same as --jars for spark on yarn
    remote: ${workspaceRemote}
  proxy:
    # lark proxy address, default https://open.feishu.cn
    lark-url:
    # hadoop yarn proxy path, e.g: knox process address https://streampark.com:8443/proxy/yarn
    yarn-url:
  yarn:
    # flink on yarn or spark on yarn, monitoring job status from yarn, it is necessary to set hadoop.http.authentication.type
    http-auth: 'simple' # default simple, or kerberos
  # flink on yarn or spark on yarn, HADOOP_USER_NAME
  hadoop-user-name: ${hadoopUserName}
  project:
    # Number of projects allowed to be running at the same time , If there is no limit, -1 can be configured
    max-build: 16
  #openapi white-list, You can define multiple openAPI, separated by spaces(" ") or comma(,).
  openapi.white-list:

# flink on yarn or spark on yarn, when the hadoop cluster enable kerberos authentication, it is necessary to set Kerberos authentication parameters.
security:
  kerberos:
    login:
      debug: false
      enable: false
      keytab:
      krb5:
      principal:
    ttl: 2h # unit [s|m|h|d]

# sign streampark with ldap.
ldap:
  base-dn: dc=streampark,dc=com # Login Account
  enable: false # ldap enabled'
  username: cn=Manager,dc=streampark,dc=com
  password: streampark
  urls: ldap://99.99.99.99:389 #AD server IP, default port 389
  user:
    email-attribute: mail
    identity-attribute: uid

Restart

Restart the worker on every node

sh /opt/datasophon/datasophon-worker/bin/datasophon-worker.sh restart worker

Restart the API on the master node

sh /opt/datasophon/datasophon-manager-1.2.1/bin/datasophon-api.sh restart api

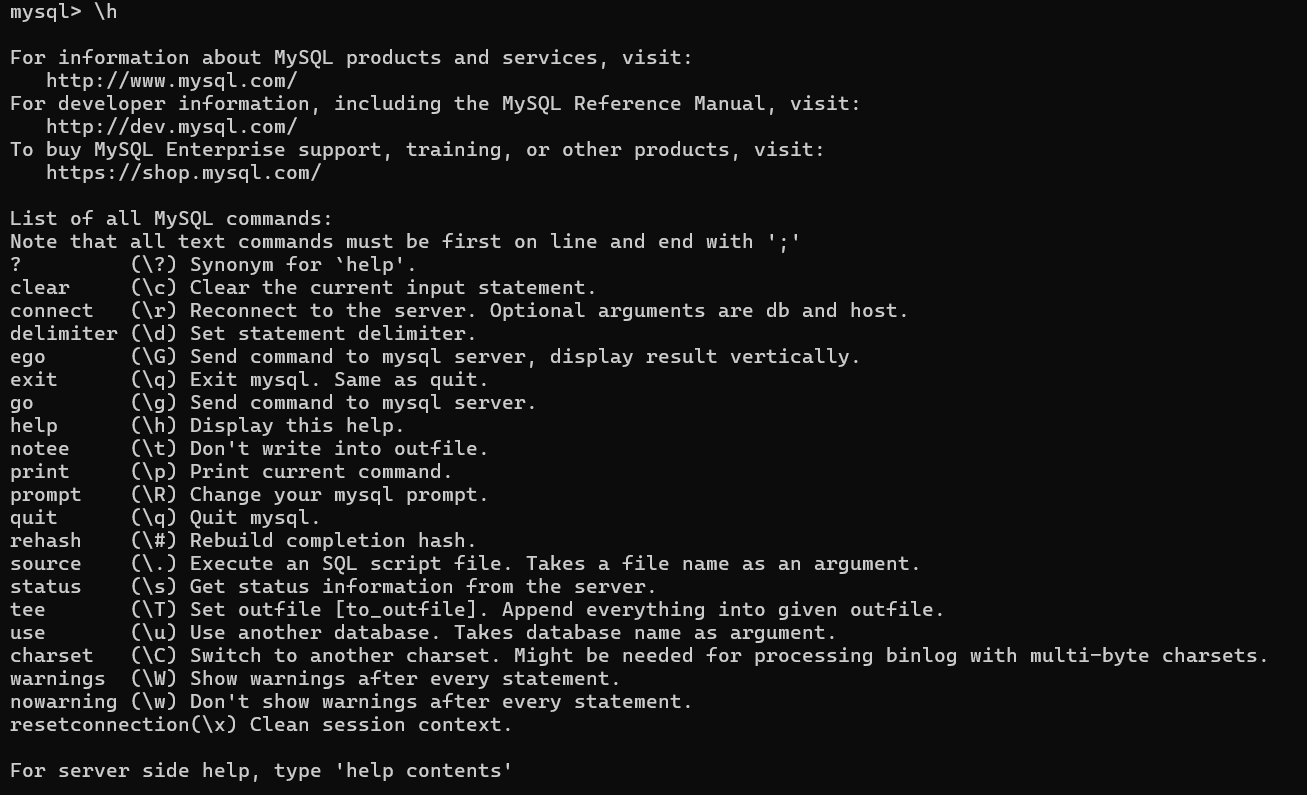

Manually create the database and run the initialization SQL

Initialize the StreamPark database.

mysql -u root -p -e "CREATE DATABASE streampark DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci"

Run streampark.sql under /opt/datasophon/DDP/packages to create the StreamPark tables.

use streampark;

source /opt/datasophon/DDP/packages/streampark.sql

source /opt/datasophon/DDP/packages/streampark_mysql-schema.sql

source /opt/datasophon/DDP/packages/streampark_mysql-data.sql

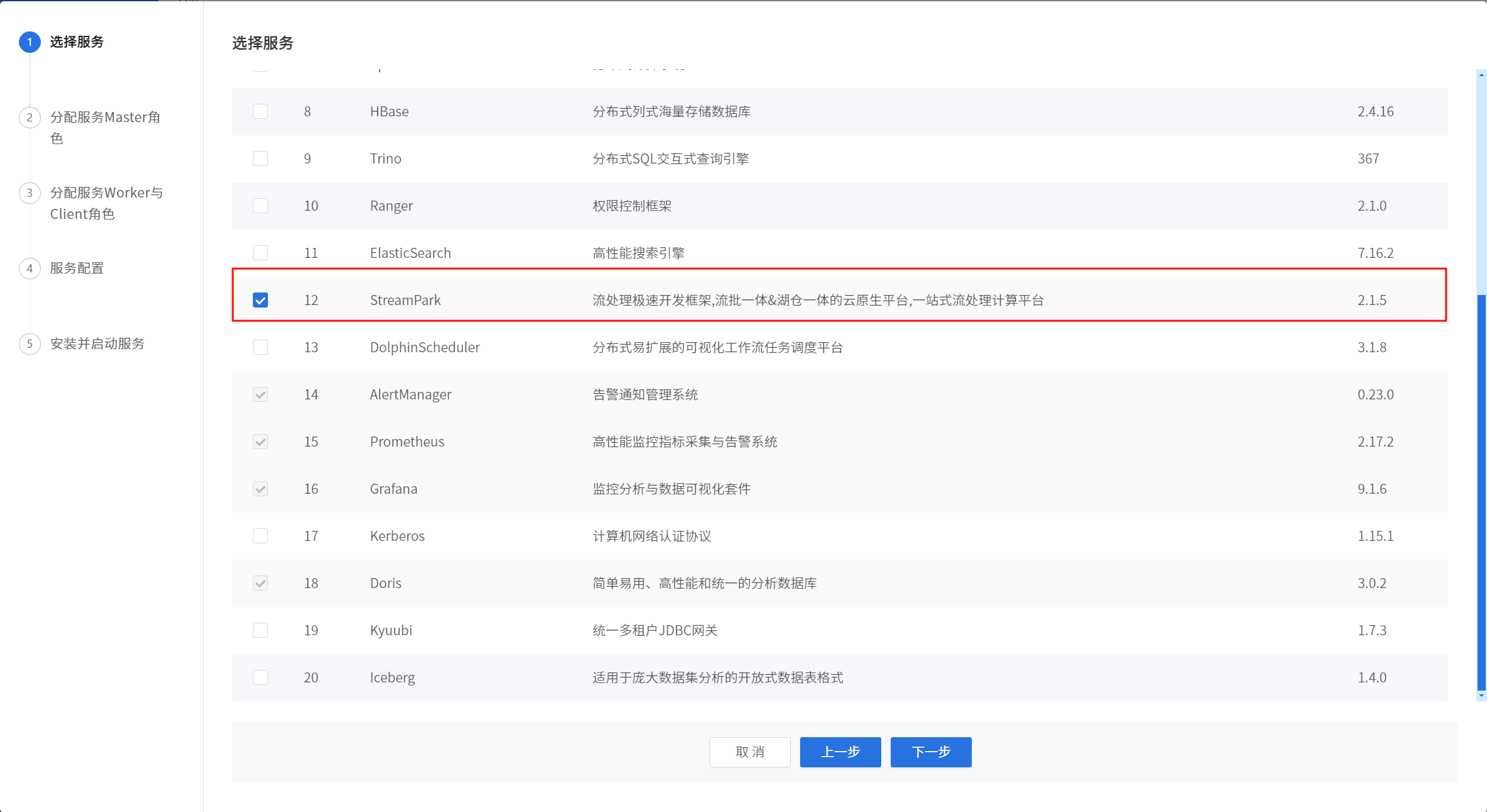

安装StreamPark

添加StreamPark。

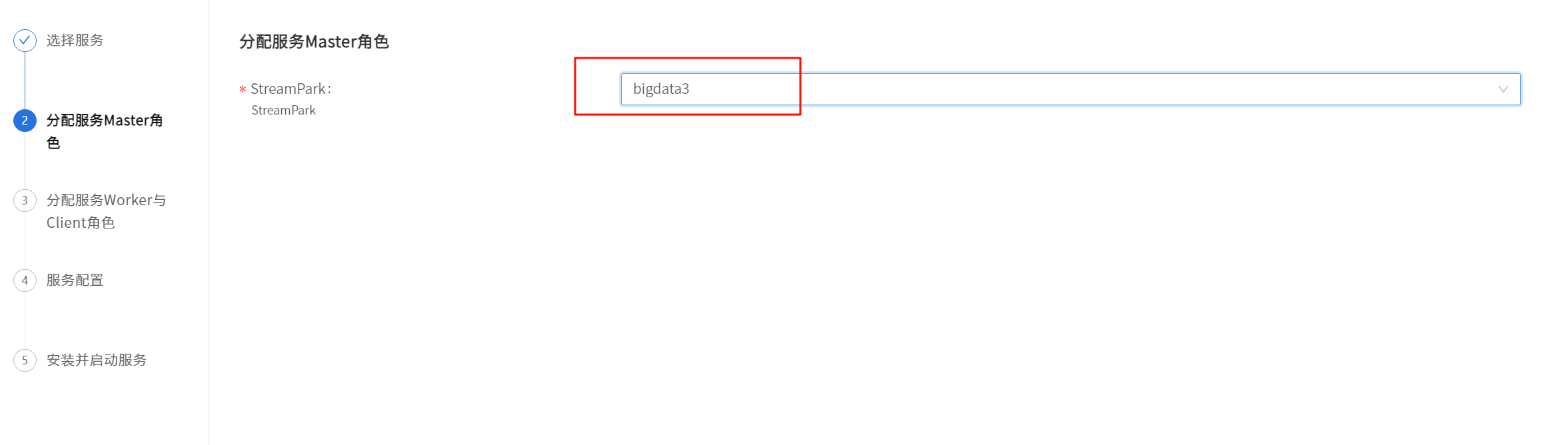

分配streampark角色,根据实际选择安装StreamPark在哪个节点机器

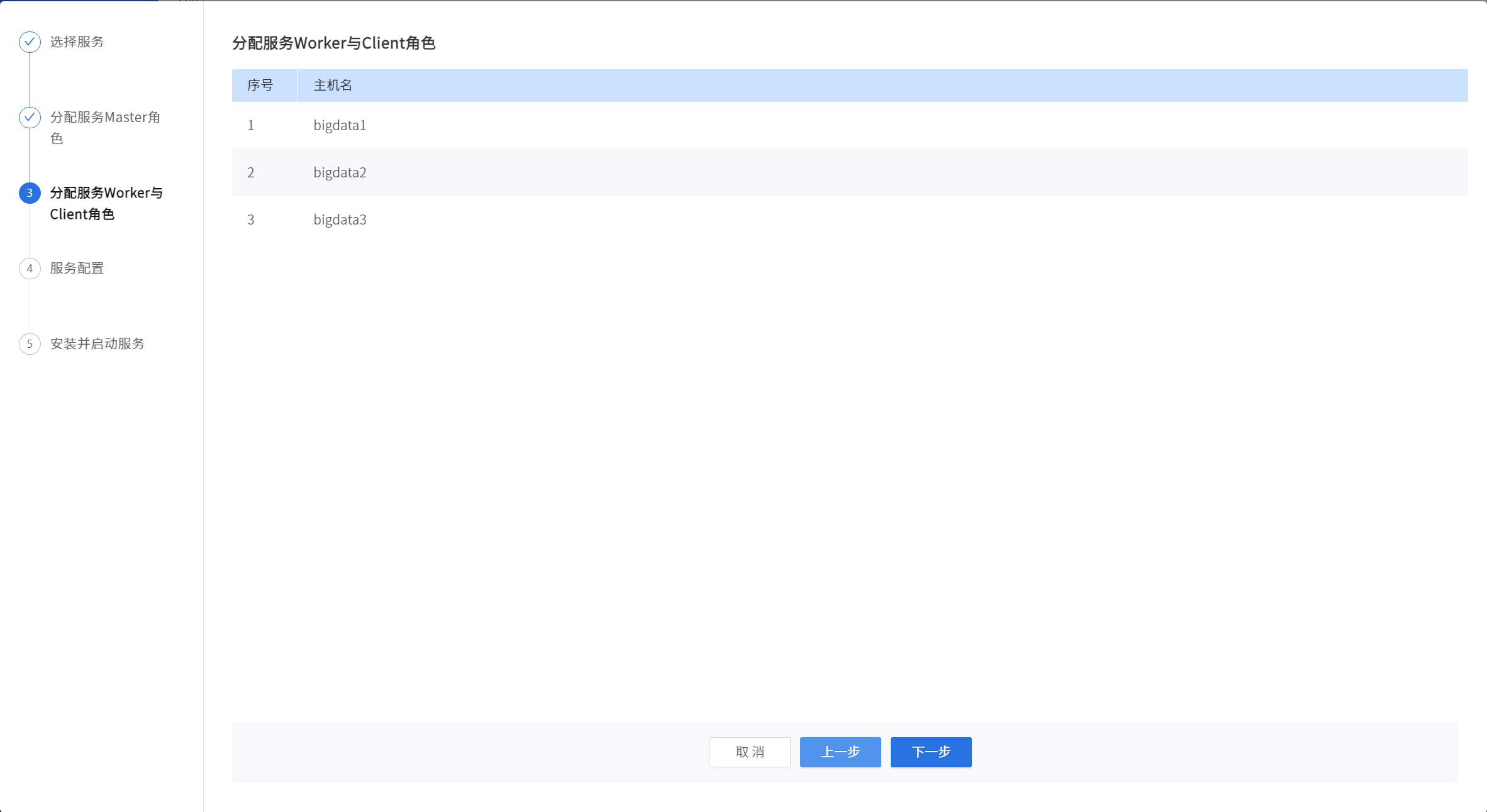

直接下一步

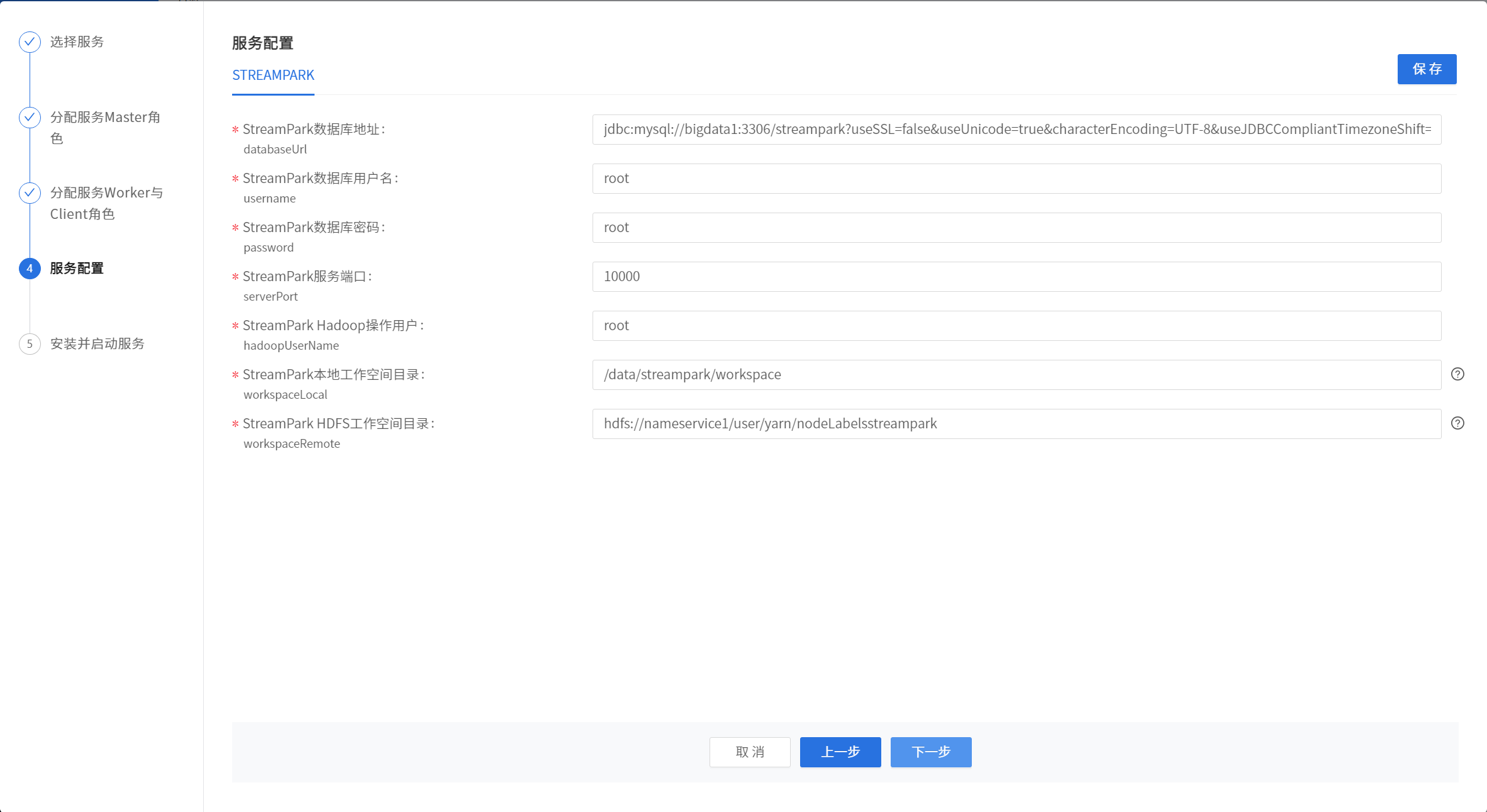

根据实际情况修改相关配置。

根据实际情况,修改streampark配置。

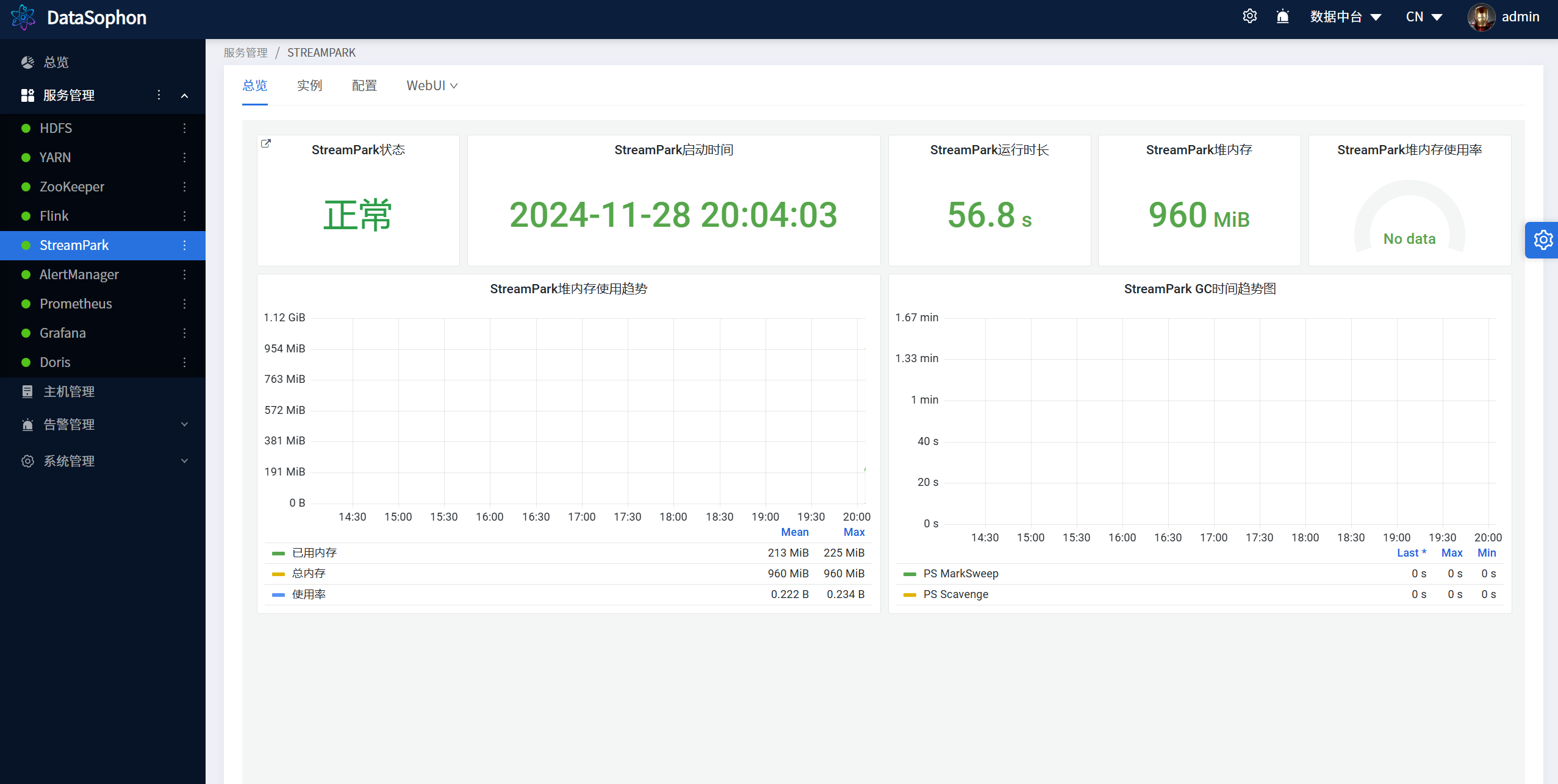

到此,DataSophon集成StreamPark2.1.5成功!